Storage system with OpenSolaris and Comstar

Storage Star

The advanced ZFS filesystem by Sun, and now Oracle, heralded a major change in the storage sector. ZFS's qualities make it an obvious choice for a storage system, but Sun failed to provide a viable network-attached storage system. Sun engineers knew that the next step was to implement a modern framework that served up block devices across the network. The resulting product was Comstar (Common Multi-Protocol SCSI Target, [1]), a subsystem that uses current and future media and protocols to provide storage in the form of block devices. In combination with OpenSolaris [2], ZFS, and the NFS and CIFS implementation, Comstar anchors a fully unified storage system.

ZFS isn't actually a requirement: Comstar is capable of providing files and partitions (known as slices in Solaris-speak) to arbitrary consumers in the form of logical units.

But ZFS adds value in the form of snapshots, clones, and thin provisioning, and it is thus very much worth using. The similarities with a NetApp storage system are hard to overlook [3].

You can take your first steps toward OpenSolaris and Comstar on a virtual machine, although a virtual configuration restricts the choice of transport protocols to iSCSI. This approach is comparable to the installation of the popular Openfiler system on Linux. Incidentally, Oracle has previously used Openfiler in the Oracle RAC and Oracle Grid HowTos. After acquiring Sun, Oracle might want to change this and replace Openfiler with OpenSolaris and Comstar.

The deployment of an OpenSolaris/Comstar system involves the following steps:

- Install OpenSolaris.

- Install the Comstar packages.

- Provide the block device.

- Advertise the block device in Comstar.

- Present the block device (including LUN masking).

The requirements for the virtual machine you use for your initial tests are quite modest: 1GB of memory, one virtual CPU, about 20GB of disk space, and a bridged network adapter with an Internet connection will do for now. Sun xVM VirtualBox is a good choice for a virtualization product, but VMware Workstation is fine, too. Both solutions include templates for OpenSolaris and Solaris 10 installations.

The OpenSolaris install shouldn't pose any problems. Afterward, you will probably want to install the vendor-specific tools (such as the VMware tools) to improve the manageability of the virtual guest.

iSCSI Target

The first step is to configure OpenSolaris with Comstar as an iSCSI target. You will need a static IP address. To avoid making the setup too complex, I assume you have a network connection with Internet access and simply need to assign an IP address to the virtual machine. If you operate a DHCP server, you can assign a static address for the machine via DHCP.

It is probably easier to assign a static IP address as root on the OpenSolaris guest in a terminal window:

$ pfexec su
# echo 192.168.1.200 > /etc/hostname.e1000g0

In this example, e1000g0 is the primary network interface. The following command lists the network interfaces:

# ifconfig -a

You have to append the device name of the network adapter you want to configure to the /etc/hostname. file name (as in /etc/hostname.e1000g0); to identify the right adapter, compare the MAC address with the setting in the virtualization software. The commands in Listing 1 complete the network configuration for the OpenSolaris system.

Listing 1: Network Configuration

# echo myiscsiserver > /etc/nodename (set the hostname)
# echo 192.168.1.0 255.255.255.0 >> /etc/netmasks
# echo 192.168.1.1 > /etc/defaultrouter (set the default gateway)
# vi /etc/resolv.conf (configure name resolution)
search zuhause.local
nameserver 192.168.1.1
# vi /etc/nsswitch.conf (configure name resolution)
...
hosts: files dns
ipnodes: files dns
...
# vi /etc/hosts
::1 localhost
127.0.0.1 localhost
192.168.1.200 myiscsiserver myiscsiserver.local loghost
# svcadm disable network/physical:nwam
# svcadm enable network/physical:default
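The network number written to /etc/netmasks is the bitwise AND of the interface address and the netmask. The following is a minimal sketch of that arithmetic in POSIX shell; network_of is a hypothetical helper name, not a Solaris command.

```shell
#!/bin/sh
# Compute the network number for an /etc/netmasks entry from an IPv4
# address and a dotted netmask (hypothetical helper, not a Solaris tool).
network_of() {
    # Split "a.b.c.d" into four fields each for the address and the mask.
    old_ifs=$IFS
    IFS=.
    set -- $1 $2
    IFS=$old_ifs
    # Bitwise AND each address octet with the matching mask octet.
    printf '%d.%d.%d.%d\n' \
        "$(( $1 & $5 ))" "$(( $2 & $6 ))" "$(( $3 & $7 ))" "$(( $4 & $8 ))"
}

network_of 192.168.1.200 255.255.255.0   # prints 192.168.1.0
network_of 10.20.30.1 255.255.255.0      # prints 10.20.30.0
```

The result goes in the first column of the /etc/netmasks line, followed by the netmask itself.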

Now reboot OpenSolaris; afterward, the system should be listening on the IP address 192.168.1.200. If all of the previous commands worked as intended, and DNS and the default gateway are correctly configured, the new server has Internet access, which you will need for the Comstar installation. Next, become the system administrator, install the required packages, and reboot:

$ pfexec su
# pkg install storage-server SUNWiscsit
# reboot

After rebooting the system, enable Comstar as an OpenSolaris service:

$ pfexec svcadm enable stmf

From now on, you have a full-fledged storage system that supports both iSCSI and Fibre Channel – assuming you have the right hardware.

To add another ZFS pool for the future block devices, you additionally need a virtual disk. To do this, you first need to shut down the OpenSolaris system as follows:

$ pfexec shutdown -y -g0 -i5

In the virtual machine settings, you can assign a new disk with sufficient space to the guest. Then restart the OpenSolaris guest and, after logging on, create the ZFS pool with the disk you added. To discover the device name, use the format command.

The command for creating the ZFS pool will be something like:

# zpool create mypool c8t1d0

Listing 2 recapitulates the whole procedure. From now on, the disk space is available to the Comstar framework. As mentioned previously, this implementation uses ZFS volumes. If you prefer to use a container file or a partition, you can check out the documentation to discover the required steps. The benefits of ZFS are quite significant: ZFS volumes support snapshots and clones.

Listing 2: New ZFS Pool

$ pfexec su
# format
AVAILABLE DISK SELECTIONS:
0. c8t0d0 <DEFAULT cyl 2607 alt 2 hd 255 sec 63>
/pci@0,0/pci15ad,1976@10/sd@0,0
1. c8t1d0 <VMware,-VMware Virtual S-1.0-10.00GB>
/pci@0,0/pci15ad,1976@10/sd@1,0
#
# zpool create mypool c8t1d0
# zpool status -v
pool: mypool
state: ONLINE
scrub: none requested
config:
NAME STATE READ WRITE CKSUM
mypool ONLINE 0 0 0
c8t1d0 ONLINE 0 0 0
errors: No known data errors

On top of this, you can expect performance gains thanks to the ZFS filesystem. In a production environment, you can improve this even more by introducing read and write cache devices on a solid-state disk (SSD).

The following command creates the first ZFS volume:

$ pfexec su
# zfs create -V 2G mypool/vol1

Now you have a 2GB volume in the mypool ZFS pool. The matching raw device is located in /dev/zvol/rdsk/mypool/vol1. Next, you need to introduce the raw device to the Comstar framework as a new logical unit:

$ pfexec su
# sbdadm create-lu /dev/zvol/rdsk/mypool/vol1
Created the following LU:
GUID ...
-------------------------------- ...
600144f05f71070000004bc981ec0001 ... /dev/zvol/rdsk/mypool/vol1

This command creates the logical unit, which is assigned a unique identifier, or GUID, by the Comstar framework. You can use the GUID to reference the logical unit uniquely in the following steps. To look up the GUID of a logical unit later, type sbdadm list-lu, which lists all the logical units.
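Because the GUID is needed in several later commands, it can help to look it up by device path instead of copying it by hand. The following sketch assumes the column layout shown in the create-lu output (GUID in the first column, source path in the last); guid_for is a hypothetical helper name, and the sample text stands in for real output so the snippet runs anywhere.

```shell
#!/bin/sh
# Print the GUID of the logical unit backed by a given device path.
# Reads `sbdadm list-lu` style output on stdin; guid_for is a hypothetical
# helper. Assumes the GUID is the first column and the source path the last.
guid_for() {
    awk -v src="$1" '$NF == src { print $1 }'
}

# Sample text in the assumed column layout. On the storage server you would
# instead run:  sbdadm list-lu | guid_for /dev/zvol/rdsk/mypool/vol1
sample='GUID                              DATA SIZE   SOURCE
--------------------------------  ----------  --------------------------
600144f05f71070000004bc981ec0001  2147418112  /dev/zvol/rdsk/mypool/vol1'

printf '%s\n' "$sample" | guid_for /dev/zvol/rdsk/mypool/vol1
```

If no logical unit matches the path, the helper prints nothing, which a calling script can treat as "not found."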

Be Seen

Now you need to publicize the logical unit on the SAN; otherwise, it will be invisible to the clients. For an initial test, you can do this:

# stmfadm add-view 600144f05f71070000004bc981ec0001

This is the GUID of the logical unit that was created earlier. From now on, you can use any protocol to address the block device.

You simply need to configure and enable the corresponding Comstar target device, which is iSCSI, initially. The Comstar iSCSI target implementation is fairly simple. Two steps are all it takes to make the logical unit accessible on the IP SAN:

# svcadm enable iscsi/target
# itadm create-target
Target iqn.1986-03.com.sun:02:8f4cd1fa-b81d-c42b-c008-a70649501262 successfully created

When the iSCSI target is created, an iSCSI Qualified Name (IQN) for the target is generated automatically; you need this name in the steps that follow. The iSCSI client (or initiator in iSCSI-speak) in our lab is Ubuntu 9.10. Listing 3 shows the steps to mount a Comstar block device with the Linux Open iSCSI initiator; the portal address passed to -p must be the IP address of your Comstar target.

Listing 3: Block Device and Initiator

$ sudo -s
# aptitude install open-iscsi
# vi /etc/iscsi/iscsid.conf
node.startup = automatic
# /etc/init.d/open-iscsi restart
# iscsiadm -m discovery -t st -p 192.168.209.200
192.168.209.200:3260,1 iqn.1986-03.com.sun:02:8f4cd1fa-b81d-c42b-c008-a70649501262
# iscsiadm -m node
# /etc/init.d/open-iscsi restart
# fdisk -l
Disk /dev/sdb: 2147 MB, 2147418112 bytes
67 heads, 62 sectors/track, 1009 cylinders
Units = cylinders of 4154 * 512 = 2126848 bytes
Disk identifier: 0x00000000
Disk /dev/sdb doesn't contain a valid partition table
# fdisk /dev/sdb
# mkfs.ext3 /dev/sdb1
# mkdir /vol1
# mount /dev/sdb1 /vol1

From now on, the block device published by Comstar is available as /vol1. To add more block devices, create a new ZFS volume, advertise it as described earlier, and mount the device on the client system. However, the options that ZFS gives you are more interesting. If you start to run out of space on a volume, you can use ZFS to increase the size (Listing 4).

Listing 4: Expanding the ZFS Volume

# zfs list
NAME USED AVAIL REFER MOUNTPOINT
mypool 2.00G 7.78G 19K /mypool
mypool/vol1 2G 9.72G 65.3M -
# zfs get volsize mypool/vol1
NAME PROPERTY VALUE SOURCE
mypool/vol1 volsize 2G -

The volume is currently 2GB. The following commands expand it to 3GB.

# zfs set volsize=3g mypool/vol1
# zfs get volsize mypool/vol1
NAME PROPERTY VALUE SOURCE
mypool/vol1 volsize 3G -
# sbdadm modify-lu -s 3g 600144f05f71070000004bc981ec0001

The GUID must match that of the logical unit in your installation; sbdadm list-lu gives an overview of the existing logical units. Reboot the Linux client and use Gparted, for example, to extend the ext3 partition. The results should look like this:

# mount /dev/sdb1 /vol1
# cd /vol1
# df -h .
Filesystem Size Used Avail Use% Mounted on
/dev/sdb1 3.0G 36M 2.8G 2% /vol1

The ZFS volume now provides the preset 3GB of space. On OpenSolaris, you can actually do this on the fly. The ability to enlarge ZFS volumes is just one of the many features of the ZFS filesystem. It can also, for example, create a snapshot of the volume in a couple of milliseconds:

# zfs snapshot mypool/vol1@2010-04-17-1

If the data are consistent at the moment the snapshot is taken, you can write the snapshot to tape as a backup. Systems such as Oracle databases provide options for creating consistent snapshots during operations, thus supporting backups without downtime.
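Snapshot names in this article follow the pattern volume@date-number. The sketch below builds such names so that regular snapshots stay sortable; snapshot_name is a hypothetical helper, and the naming scheme is simply the one used in the example above, not a ZFS requirement.

```shell
#!/bin/sh
# Build a snapshot name of the form <volume>@<YYYY-MM-DD>-<n>, matching the
# naming used in this article. snapshot_name is a hypothetical helper.
snapshot_name() {
    volume=$1
    seq=${2:-1}                       # running number for this day
    day=${3:-$(date +%Y-%m-%d)}       # defaults to today's date
    printf '%s@%s-%s\n' "$volume" "$day" "$seq"
}

snapshot_name mypool/vol1 1 2010-04-17   # prints mypool/vol1@2010-04-17-1

# On the storage server, the name could then be passed to zfs:
#   zfs snapshot "$(snapshot_name mypool/vol1)"
```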

Writable Snapshots

Another of ZFS's features is clones – that is, writable copies of a snapshot. Just like a ZFS volume, you can use Comstar to export the clone and then mount the clone on a test system. This gives you a complete copy of a production database and the ability to run tests without endangering your live data. This method is a typical approach for creating complete test systems. The following command:

# zfs clone mypool/vol1@2010-04-17-1 mypool/vol1-test

creates a clone from the snapshot captured previously, assigning the name of vol1-test.

Now you can publish the clone on the IP SAN, just as you did the previous volume vol1:

# sbdadm create-lu /dev/zvol/rdsk/mypool/vol1-test
Created the following LU:
GUID ...
-------------------------------- ...
600144f05f71070000004bc999020002 /dev/zvol/rdsk/mypool/vol1-test
# stmfadm add-view 600144f05f71070000004bc999020002

After a short wait, you should see another block device appear on the client system. Because the new volume is a clone of the original vol1, the partition table and filesystem are available. Rebooting the Ubuntu system and entering the following two commands

# mkdir /vol1-test
# mount /dev/sdd1 /vol1-test

will mount the clone. In a production environment, you would have a test system for this. The iSCSI demo shows how easy it is to create a professional storage system with OpenSolaris, ZFS, and Comstar; an equivalent storage system from a premium provider such as NetApp, EMC, or HP would require significantly more expense.

LUN Masking and iSCSI Portal

Thus far, this guide has focused on one target (server) and one initiator (client). In a production environment, multiple clients will need disk space. Logical Unit Number (LUN) masking prevents a client from accessing a block device not intended for it by publishing a logical unit to the intended client systems only. All other potential clients do not see the logical unit, even if they are on the same IP subnet and can connect to the server.

A logical unit is typically masked to just one initiator, but if you use a cluster-capable filesystem, you can assign multiple clients to the same logical unit. LUN masking is very simple: You explicitly assign the logical unit to the target through which you want to publish it and to the consuming initiator (host). Addressing uses typical iSCSI-style IQNs. In Comstar, you create a target group (TG) and a host group (HG) and add the required IQNs.

The final step is to publish the logical unit and point to the host group and target group you created above (Listing 5).

Listing 5: LUN Masking

# stmfadm create-hg myiscsiclient
# stmfadm add-hg-member -g myiscsiclient iqn.1993-08.org.debian:01:e8643eb578bf
# stmfadm create-tg myiscsiserver
# stmfadm add-tg-member -g myiscsiserver iqn.1986-03.com.sun:02:8f4cd1fa-b81d-c42b-c008-a70649501262
# stmfadm add-view -h myiscsiclient -t myiscsiserver -n 1 600144f05f71070000004bc999020002

On an Ubuntu 9.10 Linux system, the following command identifies the iSCSI initiator IQN

# cat /etc/iscsi/initiatorname.iscsi
InitiatorName=iqn.1993-08.org.debian:01:e8643eb578bf

and this next command discovers the IQN for the OpenSolaris Comstar iSCSI target:

# itadm list-target
TARGET NAME STATE SESSIONS
iqn.1986-03.com.sun:02:8f4cd1fa-b81d-c42b-c008-a70649501262 online 1

From now on, the logical unit is only reachable via the target with the IQN iqn.1986-03.com.sun:02:8f4cd1fa-b81d-c42b-c008-a70649501262, and it is only accessible to the initiator with the IQN iqn.1993-08.org.debian:01:e8643eb578bf.

The logical unit has a LUN of 1. This number is comparable to the typical SCSI ID on a SCSI bus system. Each additional block device you publish via this target must have a unique LUN.

Of course, you can add more systems to the host or target groups created above. On a cluster system, you would add multiple hosts to the host group to allow all the systems in the cluster to see the logical unit. Changes to the target group are unusual, however.
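For a cluster, the same masking steps repeat once per initiator. The following dry-run sketch only prints the stmfadm commands in the order used in Listing 5, so they can be reviewed before anything is executed. mask_lu is a hypothetical helper, the host group name mycluster and both initiator IQNs are made up for illustration, and the target group is assumed to exist already.

```shell
#!/bin/sh
# Dry run: print the stmfadm commands that would mask one logical unit to a
# host group containing several initiators (for example, cluster nodes).
# mask_lu is a hypothetical helper; it only echoes commands and runs nothing.
mask_lu() {
    hg=$1; tg=$2; lun=$3; guid=$4
    shift 4
    echo "stmfadm create-hg $hg"
    for iqn in "$@"; do
        echo "stmfadm add-hg-member -g $hg $iqn"
    done
    echo "stmfadm add-view -h $hg -t $tg -n $lun $guid"
}

# Both initiator IQNs below are invented sample values.
mask_lu mycluster myiscsiserver 1 600144f05f71070000004bc999020002 \
    iqn.1993-08.org.debian:01:aaaaaaaaaaaa \
    iqn.1993-08.org.debian:01:bbbbbbbbbbbb
```

Printing instead of executing keeps the sketch safe to run on any machine; on the storage server, the reviewed output could be pasted into a root shell.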

Portal Groups

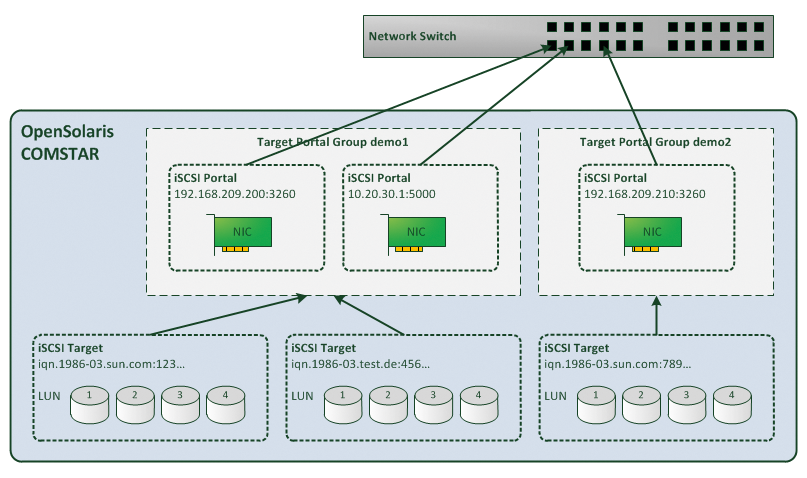

Comstar can manage multiple targets. You can assign any number of block devices or logical units (uniquely addressed by the LUN) to a target. In contrast, a target is always assigned to a Target Portal Group (TPG). By default, Comstar assigns a new target to the default TPG.

The itadm list-target -v command outputs the current target assignments. In the case here, you can see that the target is assigned to the default TPG (see Listing 6).

Listing 6: itadm list-target

# itadm list-target -v
TARGET NAME STATE SESSIONS
iqn.1986-03.com.sun:02:8f4cd1fa-b81d-c42b-c008-a70649501262 online 1
alias: -
auth: none (defaults)
targetchapuser: -
targetchapsecret: unset
tpg-tags: default

A TPG describes the IP connection data in the form of an IP address and a port (in iSCSI terms, an iSCSI portal) that provide access to the assigned targets. The default setting means that a target is reachable via any active IP interface and the default iSCSI port of 3260.

If the target system has two Ethernet interfaces with assigned IP addresses, you can thus access the published logical units via port 3260 on both interfaces. Figure 1 demonstrates the relationships in the target portal group.

These tools let you publish multiple targets over multiple IP networks (IP-SANs). This helps, for example, to group systems that belong together logically in a style similar to zoning on Fibre Channel SANs.

As an added benefit, you can also distribute the load over all of the installed Ethernet interfaces. Currently, iSCSI is typically restricted to 1Gb Ethernet; however, as 10Gb Ethernet continues to make inroads, iSCSI portals will have sufficient bandwidth.

If you would like to route the target via a second Ethernet interface, you just need to add another network adapter to the virtual machine. Then, go on to assign a static address to the network interface using the same approach as for the primary network card:

# ifconfig e1000g1 plumb up
# ifconfig e1000g1 10.20.30.1 netmask 255.255.255.0
# echo 10.20.30.1 > /etc/hostname.e1000g1
# echo 10.20.30.0 255.255.255.0 >> /etc/netmasks

Also, you need to add a corresponding network adapter to the Linux client system; it has to reside in the same LAN segment as the new network interface on the OpenSolaris system. Make sure you configure the interfaces so the two Ethernet interfaces communicate via IP.

Masked

The next step is to introduce a new iSCSI portal to the Comstar framework in the form of a target portal group, which you then assign to the required target. From now on, the logical units are only accessible via the IP network on which the iSCSI portal resides; this assumes that you set up LUN masking (host group and target group) previously to support access to the logical units. In addition to the IP addresses, you still need an IQN and LUN masking.

In the iSCSI environment, now execute the commands in Listing 7. After running the commands, the target with the IQN in line 2 is only accessible via the iSCSI portal at IP 10.20.30.1, port 5000. The logical unit set up previously is no longer accessible via the local network 192.168.1.0/24.

Listing 7: iSCSI Portal

01 # itadm create-tpg myportal 10.20.30.1:5000
02 # itadm modify-target -t myportal iqn.1986-03.com.sun:02:8f4cd1fa-b81d-c42b-c008-a70649501262
03 Target iqn.1986-03.com.sun:02:8f4cd1fa-b81d-c42b-c008-a70649501262 successfully modified

For smaller installations, it is fine to use just one iSCSI portal and thus one target portal group. LACP and IP multipathing (IPMP on (Open)Solaris) allow the system to use redundant Ethernet connections as fail-safes. Anything beyond this adds a huge amount of complexity and requires good documentation to keep track of the configuration.

Fibre Channel

Thus far, I have only looked into iSCSI and ZFS. I mentioned earlier that Comstar can use any protocol to provide storage in the form of block devices. One of the protocols currently supported is the popular Fibre Channel, typically used by large SAN installations. With a transmission rate of 8Gbps and a low protocol overhead, Fibre Channel is capable of impressive performance. To add Fibre Channel capabilities to OpenSolaris and Comstar, you need a Fibre Channel host bus adapter (HBA). Also, you need to switch the HBA to target mode, and this requires a matching adapter.

As of this writing, Comstar only supports QLogic adapters with 4 and 8Gbps ports and any host bus adapter from Emulex Enterprise. The Emulex driver implements target mode, which vastly simplifies the configuration. If you use a QLogic adapter, you will need to delve deeper into your bag of tricks.

The following setup is not possible with virtual machines and thus requires at least two physical computers with matching host bus adapters. Ideally, you will have a couple of fast disks in your OpenSolaris system and have them grouped to form a ZFS pool. It is a good idea to restrict initial experiments to a single server and a single client. Point-to-point wiring means you can do without a SAN switch, at least initially; however, if you will be adding multiple systems to your OpenSolaris storage, there is no alternative to investing in a SAN switch.

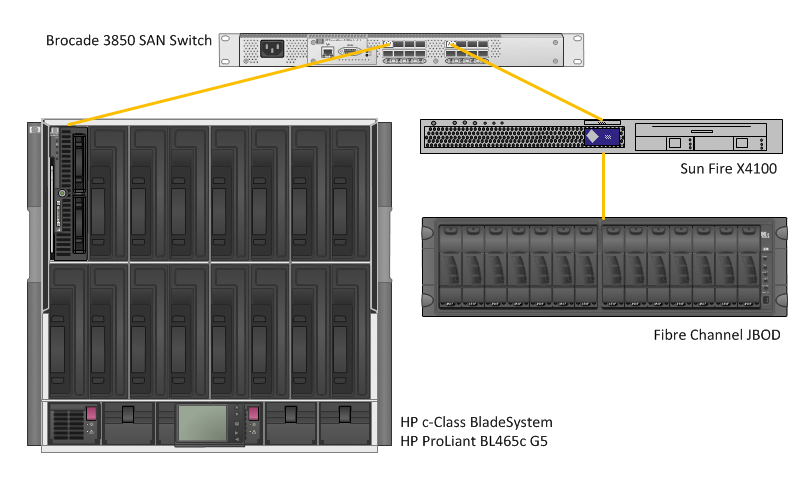

The following example uses a Sun Fire X4100 server with just a bunch of disks (JBOD) storage as an OpenSolaris target system. The Emulex LP9002L host bus adapter is connected to a Brocade 3850 SAN switch. The client is an HP ProLiant BL465c blade server running CentOS Linux 5.4 and also connected to the SAN switch. Zoning on the SAN switch is configured to allow the client and the server to communicate. Figure 2 shows the demo system.

The Comstar setup for Fibre Channel is fairly similar to the previous iSCSI example with virtual machines; addressing is the major difference.

Address Multiplicity

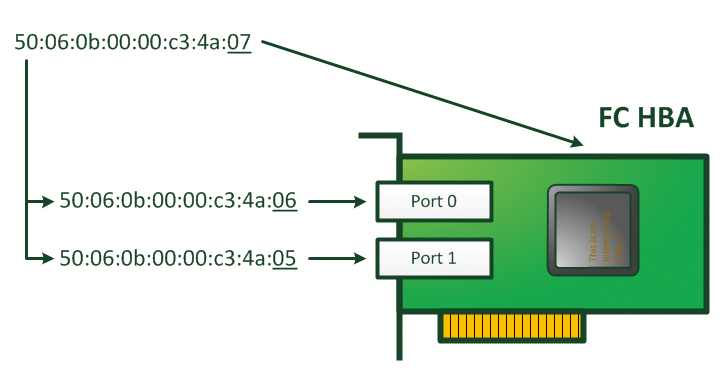

In Fibre Channel, devices are uniquely referenced by their World Wide Node Name (WWNN) and World Wide Port Name (WWPN). Each device will always have a WWNN. Because Fibre Channel adapters often have more than one connection port, a unique WWPN is additionally assigned to each port. Figure 3 shows this principle with a dual-port host bus adapter with a WWNN and its WWPNs. WWPNs are basically similar to MAC addresses on Ethernet interfaces.

For Comstar, you only need the WWPNs for the ports connected to each other. If you are using a SAN switch, you only need the WWPNs that belong to a zone on the switch. To switch an Emulex Enterprise adapter (e.g., the Emulex LP9002L) to target mode, modify the driver configuration as follows:

$ pfexec su
# vi /kernel/drv/emlxs.conf
target-mode=1;
emlxs0-target-mode=0;
emlxs1-target-mode=1;
# reboot

Make sure you set the target-mode parameter to 1. This is the only setting in which target mode is active. In this example, I use two host bus adapters. Setting the emlxs0-target-mode parameter to 0 disables the first of the installed Emulex HBAs as a target device, although a Fibre Channel JBOD with a disk array is attached to it.

The second Emulex adapter is configured as a target, which I will use to publish a ZFS volume on the SAN. To do so, I create a ZFS volume, as described in the previous iSCSI example, configure the volume as a Comstar block device, then publish the logical unit (Listing 8).

Listing 8: Setup for Fibre Channel

# zfs create -V 10g pool-0/vol1
# sbdadm create-lu /dev/zvol/rdsk/pool-0/vol1
Created the following LU:

GUID DATA SIZE SOURCE
-----------------------------------------------------------------------
600144f021ef4c0000004bcb3fb70001 10737352704 /dev/zvol/rdsk/pool-0/vol1
# svcadm disable stmf
# fcinfo hba-port
...
HBA Port WWN: 10000000c93805a6
Port Mode: Target
Port ID: 10200
OS Device Name: Not Applicable
Manufacturer: Emulex
Model: LP9002L
...
# stmfadm create-tg myfcserver
# stmfadm add-tg-member -g myfcserver wwn.10000000c93805a6
# stmfadm create-hg myfcclient
# stmfadm add-hg-member -g myfcclient wwn.50060b0000c26204
# svcadm enable stmf
# stmfadm add-view -t myfcserver -h myfcclient -n 1 600144f021ef4c0000004bcb3fb70001

This process provides a logical unit with a LUN of 1 for the client with a WWPN of 50:06:0b:00:00:c2:62:04. The fcinfo hba-port command discovers the OpenSolaris system's WWPN; it lists all the data for the installed Fibre Channel host bus adapters. With the output, you can identify the WWPN and then configure the target group to reflect it. Listing 9 shows how to discover the WWPN of the CentOS Linux client system. For other flavors of Linux, you might need other commands.

Listing 9: Fibre Channel WWPN on CentOS

# systool -c fc_host -v
Class = "fc_host"

Class Device = "host0"
Class Device path = "/sys/class/fc_host/host0"
fabric_name = "0x100000051e3447e3"
issue_lip = <store method only>
node_name = "0x50060b0000c26205"
port_id = "0x010901"
port_name = "0x50060b0000c26204"
port_state = "Online"
port_type = "NPort (fabric via point-to-point)"
speed = "4 Gbit"
supported_classes = "Class 3"
supported_speeds = "1 Gbit, 2 Gbit, 4 Gbit"
symbolic_name = "QMH2462 FW:v4.04.09 DVR:v8.03.00.10.05.04-k"
system_hostname = ""
tgtid_bind_type = "wwpn (World Wide Port Name)"
uevent = <store method only>
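The same WWPN appears in three notations in this article: 0x50060b0000c26204 in the systool output, wwn.50060b0000c26204 in the stmfadm commands, and 50:06:0b:00:00:c2:62:04 in the running text. The sketch below converts between them; the three function names are hypothetical helpers, not part of any of the tools mentioned.

```shell
#!/bin/sh
# Normalize a WWPN to plain lowercase hex digits, accepting the forms
# 0x50060b0000c26204, 50:06:0b:00:00:c2:62:04, or 50060b0000c26204.
# All three function names are hypothetical helpers.
wwpn_hex() {
    printf '%s\n' "$1" | tr 'A-F' 'a-f' | sed -e 's/^0x//' -e 's/://g'
}

# Notation used with stmfadm add-hg-member and add-tg-member in Listing 8.
wwpn_stmf() {
    printf 'wwn.%s\n' "$(wwpn_hex "$1")"
}

# Colon-separated notation, one byte per group.
wwpn_colon() {
    wwpn_hex "$1" | sed -e 's/../&:/g' -e 's/:$//'
}

wwpn_stmf 0x50060b0000c26204    # prints wwn.50060b0000c26204
wwpn_colon 0x50060b0000c26204   # prints 50:06:0b:00:00:c2:62:04
```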

If all of this works and you have the right WWPNs, you should see a new block device appear on your Linux system after a short while:

# fdisk -l
Disk /dev/sda: 10.7 GB, 10737352704 bytes
64 heads, 32 sectors/track, 10239 cylinders
Units = cylinders of 2048 * 512 = 1048576 bytes
Disk /dev/sda doesn't contain a valid partition table
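The byte counts that fdisk reports on the clients are slightly smaller than the nominal volume sizes: 2147418112 bytes for the 2GB iSCSI volume and 10737352704 bytes for the 10GB Fibre Channel volume. The following sketch works through the arithmetic. In both cases the difference is 65536 bytes (64KiB); this is an observation from the listings in this article only, so treat the offset as an assumption on other systems. Both function names are hypothetical.

```shell
#!/bin/sh
# Compare ZFS volume sizes with the LU sizes reported in the listings.
# Observation from this article's output only: each LU is 65536 bytes
# (64KiB) smaller than its volume. Treat that offset as an assumption.
gib_to_bytes() {
    echo $(( $1 * 1024 * 1024 * 1024 ))
}

lu_overhead() {
    # $1 = volume size in GiB, $2 = LU size in bytes as seen by the client
    echo $(( $(gib_to_bytes "$1") - $2 ))
}

lu_overhead 2 2147418112      # prints 65536 (iSCSI example)
lu_overhead 10 10737352704    # prints 65536 (Fibre Channel example)
```

A difference other than the expected one is a quick hint that the client is looking at a different logical unit than intended.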

Just as in the iSCSI example, you can create a filesystem on the block device and leverage the benefits of ZFS. In combination with a ZFS pool made up of high-performance disks, this gives you a powerful Fibre Channel storage system. With some TLC, the system should also be multipathing-capable, although you will not be able to configure a fail-safe storage cluster right now.

Conclusions

OpenSolaris, ZFS, and Comstar are powerful components for implementing storage systems, and the system is easy to set up, although it does not offer a GUI. Comstar's particular appeal is its modular structure, which lets you export virtually any kind of block device via any supported protocol. A logical unit can be accessible via iSCSI and Fibre Channel at the same time. The community is working on more protocols; for example, an InfiniBand implementation is available for high-performance apps. Together, the ZFS filesystem, cheap and large SATA disks, and solid-state disks as a fast cache create a powerful, low-budget storage system.