Backup tools to save you time and money

Safety Net

Computers are of little value if they are not doing what they're supposed to, whether they've stopped working or they're not configured correctly. Redundant systems or standby machines are common methods of quickly getting back to business, but they are of little help if the problem is caused by incorrect configuration and those configuration files get copied to the standby machines. Sometimes, the only solution is to restore from backup.

Backing up all of your data every day is not always the best approach. The amount of time and the amount of storage space required can be limiting factors. On workstations with only a handful of configuration files and few data files, it might be enough to store these files on external media (such as USB drives). Then, if the system should crash, it could be simpler to reinstall and restore the little data you have. Doing a full system restore often takes longer.

Because each system is different, no single solution is ideal. Even if you find a product that has all the features you could imagine, the time and effort needed to administer the software might be restrictive. Therefore, knowing the most significant aspects of backups and how the software addresses them is key when deciding which product to implement (see also the "Support" box).

How Strong Is Your Parachute?

A simple copy of your data to a local machine with rsync started from a cron job once a day is an effective way to back up data when everything comes from a single directory. This method is too simple for businesses that have much more and many different kinds of data – sometimes so much data that it is impractical to back it all up every day. In such cases, you need to make decisions about what to back up and when (see the "Backup Alternatives" box).

In terms of what to back up and how often, files increase in importance on the basis of how difficult they are to recreate. The most important files are your "data," such as database files, word-processing documents, spreadsheets, media files, email, and so forth. Such files would be very difficult to recreate from scratch, so they must be protected. Configuration information for system software, such as Apache or your email server, typically does not change frequently, but these files still need to be backed up.

Sometimes, reinstalling is not practical if you have to install a lot of patches, or it might be impossible if you have software that is licensed for a particular machine and you cannot simply reinstall the OS without obtaining a new license key.

How often you should back up your files is the next thing to determine. For example, database files could be backed up to remote machines every 15 minutes, even if the files are on cluster machines with a hardware RAID. Local machines could be backed up twice a day: The first time to copy the backup from the previous day to an external hard disk, and the second time to create a new full backup. Then, once a month, the most important data could be burned to DVD (see also the "Incremental vs. Differential" box).

What Color Is Your Parachute?

Individuals and many businesses are not concerned with storing various versions of files over long periods of time. However, in some cases, it might be necessary to store months' or even years' worth of backups, and the only effective means to store them is on removable media (e.g., tape). Further requirements to store backups off-site often compound the problem. Although archives can be kept on external hard disks, this becomes cumbersome when you get into terabytes of data.

In deciding which type of backup medium you need to use, you have to consider many things. For example, you need to consider not only the total amount of data but also how many machines you need to back up. Part of this involves the ability to distinguish quickly between backups from different machines. Moving an external hard disk between two machines might be easiest, but with 20 machines, you should definitely consider a centralized system.

Here, too, you must consider the speed at which you can make a backup and possibly recover your data. One company I worked for had so much data it took more than a day to back up all of the machines. Thus, full backups were done over the weekend, with incrementals in between. Also, in a business environment with dozens of machines, trying to figure out exactly where the specific version of the data resides increases the recovery time considerably.

Finally, you must also consider the cost. Although you might be tempted to get a larger single drive because it is less expensive than two drives that are only half as big, being able to switch between two drives (or more) adds an extra level of safety if one fails. Furthermore, you could potentially take one home every night. If you are writing to tape, an extra tape drive also increases safety; it can also speed up backups and recovery.

Which Tape?

Some companies remove all of the tapes after the backup is completed and store them in a fireproof safe or somewhere off-site. This means that when doing incremental backups, the most recent copy of a specific file might be on any one of a dozen tapes. Naturally, the question becomes, "Which tape?" (see also the "Whose Data" box). To solve this problem, the backup software must be able to keep track of which version of which file is stored where (i.e., which tape or disk).

Once a software product has reached this level, it will typically also be able to manage multiple versions of a given file. Sometimes you will need to make monthly or even yearly backups, which are then stored for longer periods of time. (This setup is common when you have sensitive data like credit card or bank information.) To prevent the software from overwriting tapes that it shouldn't, you should be able to define a "recycle time" that specifies the minimum amount of time before the media can be reused.
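The recycle-time check itself is simple. The following is only a minimal sketch of the idea, not the logic of any particular product; the function name and the 30-day value are assumptions chosen for illustration.

```python
from datetime import datetime, timedelta


def can_reuse(last_written: datetime, recycle_time: timedelta, now: datetime) -> bool:
    """Return True once the medium is at least as old as its recycle time."""
    return now - last_written >= recycle_time


# Hypothetical tape written on 1 March 2010 with a 30-day recycle time.
written = datetime(2010, 3, 1)
recycle = timedelta(days=30)

print(can_reuse(written, recycle, datetime(2010, 3, 15)))  # False: only 14 days old
print(can_reuse(written, recycle, datetime(2010, 4, 1)))   # True: 31 days old
```

A monthly or yearly tape would simply carry a much longer recycle time than the tapes in the daily rotation.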

Because not all backups are the same and not all companies are the same, you should consider the ability of the software to be configured to your needs. If you have enough time and space, software that can only do a full backup might be sufficient. On the other hand, you might want to be able to pick and choose just specific directories, even when doing a "full" backup.

Many of the products I looked at have the ability to define "profiles" (or use a similar term). For example, you define a Linux MySQL profile, assign it to a subset of your machines, and the backup software automatically knows which directories to include and which to ignore. The Apache profile, for example, has a different set of directories. This might also include a pre-command that is run immediately before the backup, then a post-command that is run immediately afterward.
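To make the idea of a profile more concrete, here is a rough sketch of the information such a profile might carry. The class, its field names, the directories, and the script paths are all assumptions for illustration; none of the products reviewed here necessarily stores its profiles this way.

```python
from dataclasses import dataclass, field
from fnmatch import fnmatch
from typing import List, Optional


@dataclass
class Profile:
    """Hypothetical backup profile: what to include, what to skip, what to run."""
    name: str
    include: List[str]
    exclude: List[str] = field(default_factory=list)
    pre_command: Optional[str] = None   # run immediately before the backup
    post_command: Optional[str] = None  # run immediately after the backup

    def wants(self, path: str) -> bool:
        """True if path lies under an included directory and matches no exclude pattern."""
        included = any(
            path == d or path.startswith(d.rstrip("/") + "/") for d in self.include
        )
        excluded = any(fnmatch(path, pattern) for pattern in self.exclude)
        return included and not excluded


# Example profile; the directories and script paths are made up.
mysql = Profile(
    name="linux-mysql",
    include=["/var/lib/mysql", "/etc/mysql"],
    exclude=["*.tmp", "*.pid"],
    pre_command="/usr/local/bin/db-pre-backup.sh",
    post_command="/usr/local/bin/db-post-backup.sh",
)

print(mysql.wants("/etc/mysql/my.cnf"))
print(mysql.wants("/var/lib/mysql/server.pid"))
```

An Apache profile would be a second instance with its own include and exclude lists, assigned to a different subset of machines.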

Storage

How is the backup information stored? Does the backup software have its own internal format, or does it use a database such as MySQL? The more systems you back up, the more you need a product that indexes which files are saved and where they are saved. Unless you are simply doing a complete backup every night to one destination for one machine (i.e., one tape or remote directory), finding the right location for a given file can be a nightmare. Even if you are dealing with just a few systems, administration of the backups can become a burden.

This leads into the question of how easy it is to recover your data. Can you easily find files from a specific date if there are multiple copies? How easy is it to restore individual files? What about all files changed on a specific date?
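The questions above are essentially queries against an index of saved files. As a rough illustration only, the following sketch uses Python's built-in sqlite3 module with a single made-up table; the schema, host names, paths, and tape labels are assumptions and do not reflect the catalog format of any product discussed here.

```python
import sqlite3

# In-memory catalog: one row per saved copy of a file.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE catalog (host TEXT, path TEXT, backed_up TEXT, volume TEXT)"
)
conn.executemany(
    "INSERT INTO catalog VALUES (?, ?, ?, ?)",
    [
        ("web1", "/etc/apache2/apache2.conf", "2010-06-05", "TAPE-01"),
        ("web1", "/etc/apache2/apache2.conf", "2010-06-08", "TAPE-04"),
        ("web1", "/etc/apache2/apache2.conf", "2010-06-11", "TAPE-07"),
        ("db1", "/etc/mysql/my.cnf", "2010-06-08", "TAPE-04"),
    ],
)


def locate(conn, host, path, as_of):
    """Return (backed_up, volume) of the newest copy saved on or before as_of, or None."""
    return conn.execute(
        "SELECT backed_up, volume FROM catalog "
        "WHERE host = ? AND path = ? AND backed_up <= ? "
        "ORDER BY backed_up DESC LIMIT 1",
        (host, path, as_of),
    ).fetchone()


def saved_on(conn, day):
    """Return (host, path, volume) for every file saved on the given day."""
    return conn.execute(
        "SELECT host, path, volume FROM catalog "
        "WHERE backed_up = ? ORDER BY host, path",
        (day,),
    ).fetchall()


# Which tape holds the Apache configuration as it was on 9 June?
print(locate(conn, "web1", "/etc/apache2/apache2.conf", "2010-06-09"))
print(saved_on(conn, "2010-06-08"))
```

Dates are stored as ISO strings here so that plain string comparison orders them correctly.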

Depending on your business, you might have legal obligations in terms of how long you are required to keep certain kinds of data. In some cases, it might be a matter of weeks; in other cases, it can be 10 years or longer. Can you recover data from that long ago? Even if it's not required by law, having long-term backups is a good idea. If you accidentally delete something and don't notice it has happened for a period longer than your backup cycle, you will probably never get your data back. How easy is it for your backup software to make full backups at the end of each month, for example, and to ensure that the media does not get overwritten?

Scheduling

If your situation prevents you from doing complete backups all the time, consider how easy it is to schedule them. Can you ensure that a complete backup is done every weekend, for example?
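A weekend-full schedule boils down to a rule keyed on the day of the week. The sketch below assumes, purely for illustration, that the full backup runs on Saturday and that every other day gets an incremental; a real tool would express this in its own configuration.

```python
from datetime import date, timedelta


def backup_kind(day: date) -> str:
    """Full backup on Saturday, incremental on every other day."""
    # date.weekday() counts Monday as 0, so Saturday is 5.
    return "full" if day.weekday() == 5 else "incremental"


# Print the plan for one week, starting on Tuesday, 1 June 2010.
start = date(2010, 6, 1)
for offset in range(7):
    day = start + timedelta(days=offset)
    print(day.isoformat(), day.strftime("%a"), backup_kind(day))
```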

Also, you need to consider the scheduling options for the respective tool. Can it start backups automatically? Is it dependent on some command? Is it simply a GUI for an existing tool, and all the operations need to be started manually? Just because a particular operating system has no client does not mean you are out of luck: You can mount filesystems using Samba or NFS and then back up the files.

rsync

Sometimes you do not need to look farther than your own backyard. Rsync is available for all Linux distributions, all major Unix versions, Mac OS X, and Windows. With a handful of machines, configuring rsync by hand might be a viable solution. If you prefer a graphical interface, several are available. In fact, many different applications rely on rsync to do the backup.

The rsync tool can be used to copy files either from a local machine to a remote machine or the other way around. A number of features also make rsync a useful tool for synchronizing directories (which is part of its name). For example, rsync can ignore files that have not been changed since the last backup, and it can delete files on the target system that no longer exist on the source. If you don't want existing files to be overwritten but still want all of the files to be copied, you can tell rsync to add a suffix to files that already exist on the target.

The ability to specify files and directories to include or exclude is very useful when doing backups. This can be done by full name or with wildcards, and rsync allows you to specify a file that it reads to determine what to include or exclude. When determining whether a file is a new version or not, rsync can look at the size and modification date, but it can also compare a checksum of the files.
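The features just described map onto a handful of rsync command-line options. The following sketch assembles such a command in Python; the helper function, the host name, and the paths are made up for illustration, whereas --archive, --delete, --checksum, --backup, --suffix, and --exclude-from are standard rsync options.

```python
import subprocess
from typing import List, Optional


def build_rsync_command(
    source: str,
    target: str,
    exclude_from: Optional[str] = None,
    delete: bool = False,
    checksum: bool = False,
    backup_suffix: Optional[str] = None,
) -> List[str]:
    """Assemble an rsync command line as a list of arguments."""
    cmd = ["rsync", "--archive"]  # recurse and preserve permissions, times, links
    if delete:
        cmd.append("--delete")  # remove target files that no longer exist on the source
    if checksum:
        cmd.append("--checksum")  # compare checksums instead of size and modification time
    if backup_suffix:
        # keep the old copy on the target under a name with this suffix
        cmd += ["--backup", f"--suffix={backup_suffix}"]
    if exclude_from:
        cmd.append(f"--exclude-from={exclude_from}")  # file listing patterns to skip
    cmd += [source, target]
    return cmd


def run_backup(cmd: List[str]) -> None:
    """Run the command and raise CalledProcessError if rsync reports a failure."""
    subprocess.run(cmd, check=True)


# Hypothetical host and paths; the command is only printed, not executed.
cmd = build_rsync_command(
    "/home/", "backuphost:/srv/backup/home/", delete=True, backup_suffix=".old"
)
print(" ".join(cmd))
```

A script like this could be started from cron once a day, calling run_backup() instead of printing the command.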

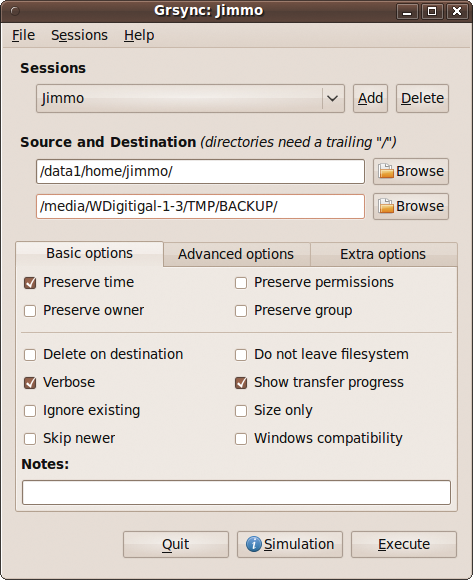

A "batch mode" can be used to update multiple destinations from a single source machine. For example, changes to the configuration files can be propagated to all of your machines without having to specify the changed files for each target. Rsync also has a GUI, Grsync (Figure 1).

luckyBackup

At first, I was hesitant to go into details about luckyBackup [1], because it is still a 0.X version and it has a somewhat "amateurish" appearance. However, my skepticism quickly faded as I began working with it. luckyBackup is very easy to use and provides a surprising number of options. Despite its simplicity, luckyBackup had the distinction of winning third place in the 2009 SourceForge Community Choice Awards as a "Best New Project."

The repository I used had version 0.33, so I downloaded and installed that (although v0.4.1 is current). The source code is available, but various Linux distributions have compiled packages.

Describing itself as a backup and synchronization tool, luckyBackup uses rsync, to which it passes various configuration options. It provides the ability to pass any option to rsync, if necessary. Although it's not a client-server application, all it needs is an rsync connection to back up data from a remote system.

When you define which files and directories to back up, you create a "profile" that is stored under the user's home directory. Profiles can be imported and exported, so it is possible to create backup templates that are copied to remote machines. (You still need the luckyBackup binary to run the commands.)

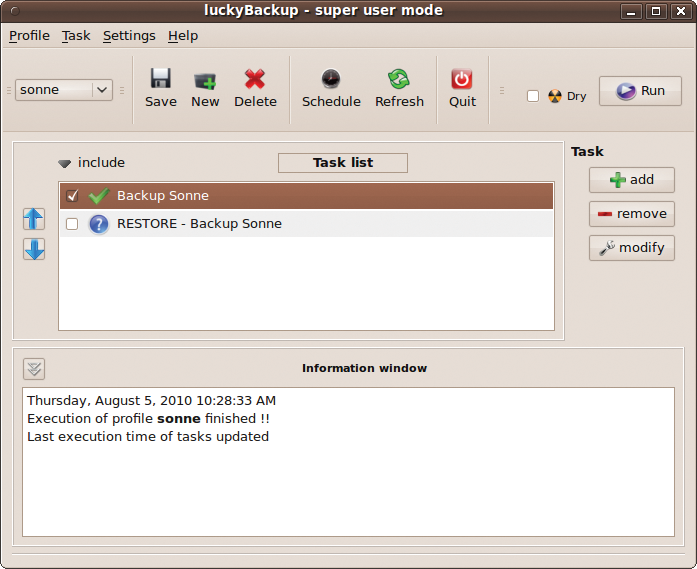

Each profile contains one or more tasks, each with a specific source and target directory, and includes the configuration options you select (Figure 2). Thus, it is possible to have different options for different directories (tasks), all for a single machine (profile).

Within a profile, the tool makes it easy to define a restore task on the basis of a given backup task. Essentially, this is the reverse of what you defined for the backup task, but it is very straightforward to change options for the restores, such as restoring to a different directory.

Scheduling of the backup profiles is done by cron, but the tool provides a simple interface. The cron parameters are selected in the GUI; you click a button, and the job is submitted to cron.

A console, or command-line mode, allows you to manage and configure your backups, even when a GUI is not available, such as when connecting via ssh. Because the profiles are stored in the user's home directory, it would be possible for users to create their own profile and make their own backups.

Although I would not recommend it for large companies (no insult intended), luckyBackup does provide a basic set of features that can satisfy home users and small companies.

Amanda

Initially developed internally at the University of Maryland, the Advanced Maryland Automatic Network Disk Archiver (Amanda) [2] is one of the most widely used open source backup tools. The software development is "sponsored" by the company Zmanda [3], which provides an "enterprise" version of Amanda that you can purchase from the Zmanda website. The server only runs on Linux and Solaris (including OpenSolaris), but Mac OS X and various Windows versions also have clients.

The documentation describes Amanda as having been designed to work in "moderately sized computer centers." This and other parts of the product description seem to indicate the free, community version might have problems with larger computer centers. Perhaps this is one reason for selling an "enterprise" version. The latest version is 3.1.1, which came out in June 2010, but it just provided bug fixes. Version 3.1.0 was released in May 2010.

Amanda stores the index of the files and their locations in a text file. This naturally has the potential to slow down searches when you need to recover specific files. However, the commercial version uses MySQL to store the information.

Backups from multiple machines can be configured to run in parallel, even if you only have one tape drive. Data are written to a "holding disk" and from there go onto tape. Data are written with the use of standard ("built-in") tools like tar, which means data can be recovered independently from Amanda. Proprietary tools typically have a proprietary format, which often means you cannot access your data if the server is down.

Scheduling is also done with a local tool: cron. Commands are simply started at the desired time with the respective configuration file as an argument.

Amanda supports the concept of "virtual tapes," which are stored on your disk. These can be of any size smaller than the physical hard disk. This approach is useful for splitting up your files into small enough chunks to be written to DVD, or even CD.

Backups are defined by "levels," with level 0 indicating a full backup; each subsequent level n backs up the changes made since the last backup of level n - 1 or lower. The wiki indicates that Amanda's scheduling mechanism uses these levels to implement a strategy of "optimization" in your backups. Although optimization can be useful in many situations, the explanation is somewhat vague about how this is accomplished – and vague descriptions of how a system makes decisions on its own always annoy me.
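The level rule is easier to see with an example. The sketch below follows the conventional dump-level reading of that rule: a backup at a given level is taken relative to the most recent earlier backup with a lower level. It says nothing about how Amanda's scheduler chooses levels, and the function name and the sample history are made up.

```python
from typing import List, Optional, Tuple

Backup = Tuple[str, int]  # (label, level)


def reference_backup(history: List[Backup], level: int) -> Optional[Backup]:
    """Return the most recent backup with a lower level, or None for a full backup.

    history is ordered oldest first.
    """
    for entry in reversed(history):
        if entry[1] < level:
            return entry
    return None  # level 0: nothing to compare against, so everything is saved


history = [("Sat", 0), ("Mon", 1), ("Tue", 2), ("Wed", 1)]

print(reference_backup(history, 2))  # ('Wed', 1)
print(reference_backup(history, 1))  # ('Sat', 0)
print(reference_backup(history, 0))  # None
```

In this history, a level 1 backup on Thursday would save everything changed since Saturday's full backup, whereas a level 2 backup would save only what changed since Wednesday.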

One important caveat is that Amanda was developed with a particular environment in mind, and it is possible (if not likely) that you will need to jump through hoops to get it to behave the way you want it to. The default should always be to trust the administrator, in my opinion. If the admin wants to configure it a certain way, the product shouldn't think it knows better.

For example, you should determine whether the scheduling mechanism is doing full backups at times other than when you expect or even want. In many cases, large data centers do full backups on the weekend when there is less traffic and not simply "every five days." If your installation has sudden spikes in data, Amanda might think it knows better and change the schedule.

Although such situations can be addressed by tweaking the system, I have a bad feeling when software has the potential for doing something unexpected. After all, as a sys admin, I was hired to think, not simply to push buttons. To make things easier in this regard, Zmanda recommends their commercial enterprise product.

Although Amanda has been around for years and is used by many organizations, I was left with a bad taste in my mouth. Much of the information on their website was outdated, and many links went to the commercial Zmanda website, where you could purchase their products. Additionally, a page with the wish list and planned features dates from 2004. Although a note states that the page is old, there is no mention of why the page is still online or any explanation of which items are still valid. Half of the pages on the administration table of contents (last updated in 2007) simply list the title with no link to another page.

Also, I must admit I was shocked when I read the "Zmanda Contributor License Agreement." Amanda is an open source tool, which is freely available to everyone. However, in the agreement "you assign and transfer the copyrights of your contribution to Zmanda." In return, you receive a broad license to use and distribute your contribution. Translated, this means you give up your copyright and not simply give Zmanda the right to use it, which also means Zmanda is free to add your changes to their commercial product and make money off of it – and all you get is a T-shirt!

Areca Backup

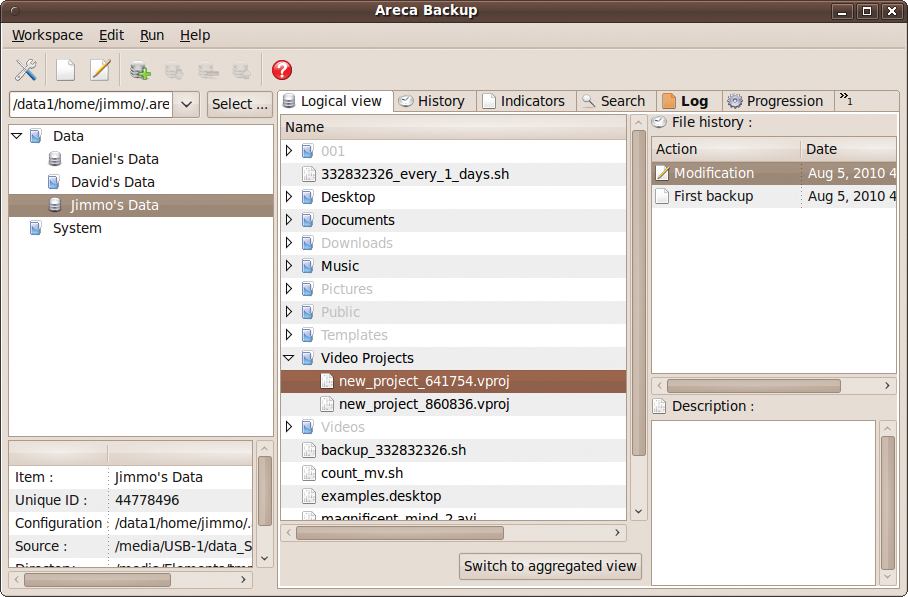

Sitting in the middle of the features spectrum and somewhat less well known is Areca Backup [4]. Running from either a GUI (Figure 3) or a command-line interface, Areca provides a simple design and a wide range of features. The documentation says it runs on all operating systems with Java 1.4.2 or later, but only Linux and Windows packages are available for download. Installing it on my Ubuntu systems was no problem, and I could not find any references to limitations with specific distributions or other operating systems.

Areca is not a client-server application, but rather a local filesystem backup. The Areca website explicitly states that it cannot do filesystem or disk images, nor can it write to CDs or DVDs. Backups can be stored on remote machines with FTP or FTPS, and you can back up from remotely mounted filesystems, but with no remote agent. Areca provides no scheduler, so it expects you to use some other "task-scheduling software" (e.g., cron) to start your backup automatically.

In my opinion, the interface is not as intuitive as others, and it uses terminology that is different from other backup tools, making for slower progress at the beginning. For example, the configuration directory is called a "workspace" and a collection of configurations (which can be started at once) is a "group," as opposed to a collection of machines.

Areca provides three "modes," which determine how the files are saved: standard, delta, and image. The standard mode is more or less an incremental backup, storing all new files and those modified since the last backup. The delta mode stores the modified parts of files. The image mode is explicitly not a disk image; basically, it is a snapshot that stores a unique archive of all your files with each backup. The standard backups (differential, incremental, or full) determine which files to include.

The GUI provides two views of your backups. The physical view lists the archives created by a given target. The logical view is a consolidated view of the files and directories in the archive.

Areca is able to trigger pre- and post-actions, like sending a backup report by email, launching shell scripts before or after your backup, and so forth. It also provides a number of variables, such as the archive and computer name, which you can pass to a script. Additionally, you can define multiple scripts and specify when they should run. For example, you can start one script when the backup is successful but start a different one if it returns errors.

Areca provides a number of interesting options when creating backups. It allows you to compress the individual files as well as create a single compressed file. To avoid problems with very large files, you can configure the backup to split the compressed archive into files of a given size. Also, you can encrypt the archives with either AES 128 or AES 256. One aspect I liked was the ability to drop directories from the file explorer directly into Areca.

The Areca forum has relatively low traffic, but posts are fairly current. However, I did see a number of recent posts remain unanswered for a month or longer. The wiki is pretty limited, so you should probably look through the user documentation, which I found to be very extensive and easy to understand.

Two "wizards" also ease the creation of backups. The Backup Shortcut wizard simplifies the process of creating the necessary Areca commands, which are then stored in a script that you can execute from the command line or with cron.

The Backup Strategy wizard generates a script containing a set of backup commands to implement a specific strategy for the given target. For example, you can create a backup every day for a week, a weekly backup for three weeks, and a monthly backup for six months.
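To show what such a strategy means in terms of which archives survive, here is a simplified retention sketch. It is not Areca's algorithm: the function is hypothetical, a "month" is approximated as a 30-day window, and the newest archive in each weekly or monthly window is the one kept.

```python
from datetime import date, timedelta
from typing import Iterable, Set


def archives_to_keep(archives: Iterable[date], today: date) -> Set[date]:
    """Keep dailies for a week, one weekly for three weeks, one monthly for six months."""
    keep = set()
    seen_windows = set()
    for day in sorted(archives, reverse=True):  # newest first
        age = (today - day).days
        if age < 0:
            continue  # ignore archives dated in the future
        if age < 7:
            keep.add(day)  # daily tier: keep everything
            continue
        if age < 28:
            window = ("week", age // 7)  # weekly tier: ages 7 to 27
        elif age < 28 + 6 * 30:
            window = ("month", (age - 28) // 30)  # monthly tier: six 30-day windows
        else:
            continue  # too old: not kept
        if window not in seen_windows:  # first hit is the newest in its window
            seen_windows.add(window)
            keep.add(day)
    return keep


# One archive per day for 300 days, ending on a made-up "today".
today = date(2010, 6, 30)
daily = [today - timedelta(days=n) for n in range(300)]
kept = archives_to_keep(daily, today)
print(len(kept))  # 16: 7 daily + 3 weekly + 6 monthly
```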

Bacula

The Backup Dracula "comes by night and sucks the vital essence from your computers." Despite this somewhat cheesy tag line, Bacula [5] is an amazing product. Although it's a newer product than Amanda, I definitely think it surpasses Amanda in both features and quality. To be honest, the setup is not the point-and-click type that you get with other products, but that is not really to be expected considering the range of features Bacula offers.

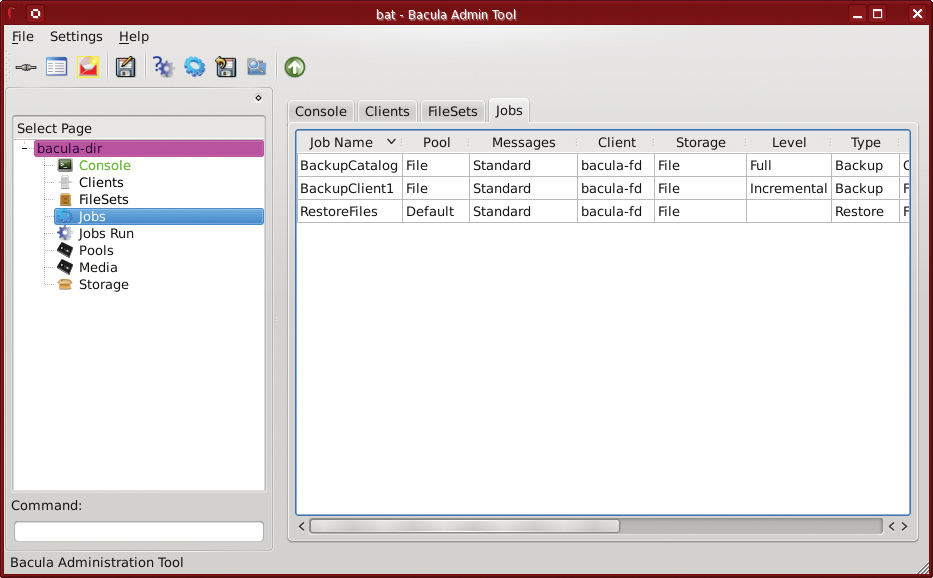

Although Bacula uses local tools to do the backup, it is a true client-server product with five major components that use authenticated communication: Director, Console, File, Storage, and Catalog. These elements are deployed individually on the basis of the function of the respective machine.

The Director supervises all backup, restore, and other operations, including scheduling backup jobs. Backup jobs can start simultaneously as well as on a priority basis. The Director also provides the centralized control and administration and is responsible for maintaining the file catalog. The Console is used for interaction with the Bacula director and is available as a GUI or command-line tool.

The File component is also referred to as the client program, which is the software that is installed on the machines to be backed up. As its name implies, the Storage component is responsible for the storage and recovery of data to and from the physical media. It receives instructions from the Director and then transfers data to or from a file daemon as appropriate. It then updates the catalog by sending file location information to the Director.

The Catalog is responsible for maintaining the file indexes and volume database, allowing the user to locate and restore files quickly. The Catalog maintains a record of not only the files but also the jobs run. Currently, Bacula supports MySQL, PostgreSQL, and SQLite. As of this writing, the Director and Storage daemons on Windows are not directly supported by Bacula, although they are reported to work.

One interesting aspect of Bacula is the built-in Python interpreter for scripting that can be used, for example, before starting a job, on errors, when the job ends, and so on. Additionally, you can create a rescue CD for a "bare metal" recovery, which avoids the necessity of reinstalling your system manually and then recovering your data. This process is supported by a "bootstrap file" that contains a compact form of Bacula commands, thus allowing you to restore your system without having access to the Catalog.

The basic unit is called a "job," which consists of one client and one set of files, the level of backup, what is being done (backing up, migrating, restoring), and so forth.

Bacula supports the concept of a "media pool," which is a set of volumes (i.e., disk, tape). With labeled volumes, it can easily match the external labels on the medium (e.g., tape) as well as prevent accidental overwriting of that medium. It also supports backing up to a single medium from multiple clients, even if they are on different operating systems.

The Bacula website is not as fancy as Amanda's, but I found it more useful because the details about how the program works are much more accessible, and the information is more up to date.

The Right Fit

Although I only skimmed the surface of these products, this article should give you a good idea of what is possible in a backup application. Naturally, each product has many more features than I looked at, so if any of these products piqued your interest, take a look at the website to see everything that product has to offer.