Container and hardware virtualization under one roof

Double Sure

The Proxmox Virtual Environment (Proxmox VE) is an open source project [1] that provides an easy-to-manage, web-based virtualization platform administrators can use to deploy and manage OpenVZ containers or fully virtualized KVM machines. In creating Proxmox, the developers have achieved their goal of letting administrators set up a virtualization infrastructure within a couple of minutes. The Proxmox bare-metal installer will convert virtually any PC into a powerful hypervisor for the two most popular open source virtualization technologies: KVM and OpenVZ.

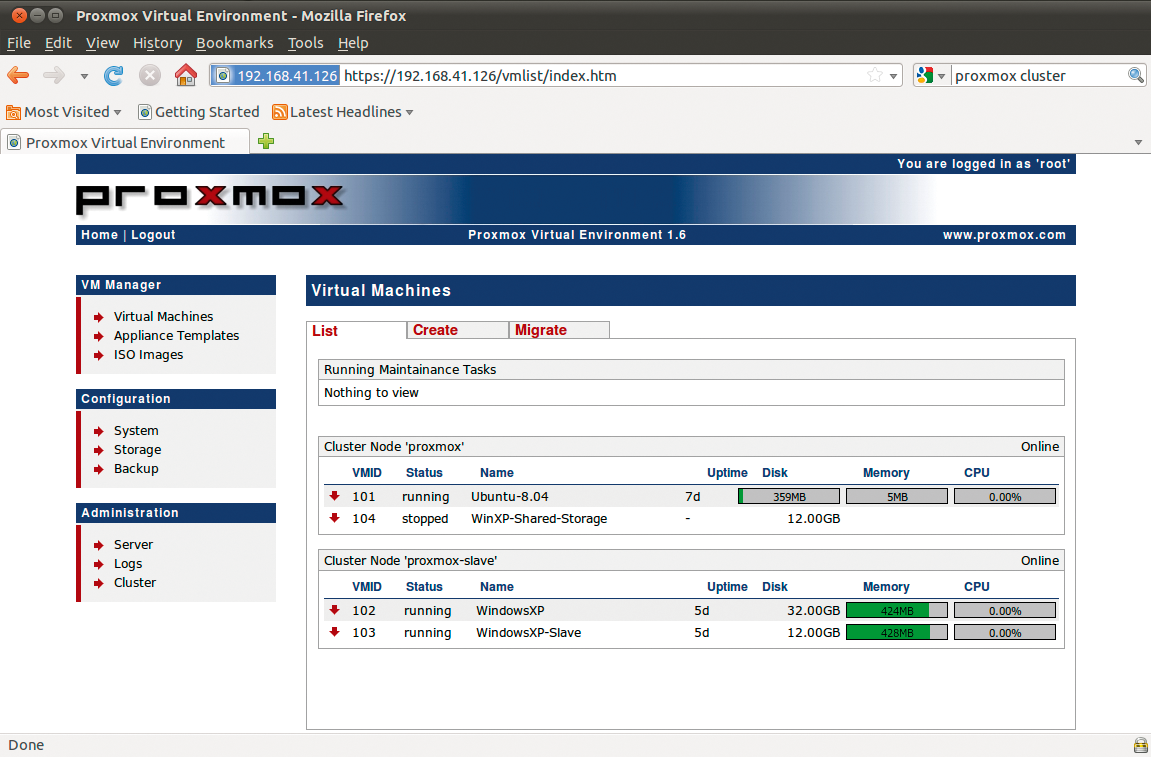

The system lets you set up clusters comprising at least two physical machines (see the "Cluster Setup" box). One server always acts as the cluster master, to which you can assign other servers as nodes. This is not genuine clustering with load balancing, but the model does support centralized management of all the nodes in the cluster, even though the individual virtual machines in a Proxmox cluster are always assigned to one physical server. The clear-cut web interface facilitates the process of creating and managing virtual KVM machines or OpenVZ containers and live migration of virtual machines between nodes (Figure 1).

Combining the two most popular free virtualization technologies on a single system in one mega-virtualization solution gives administrators the freedom to choose the best virtualization approach for the task at hand. OpenVZ [2] is a resource container, whereas KVM [3] provides genuine hardware virtualization on CPUs with the appropriate virtualization extensions. Both technologies give the guest system the best performance the current state of the art allows.

OpenVZ

The OpenVZ resource container is typically deployed by hosting service providers that want to offer their customers virtual Linux servers (vServers). The number of containers you can run in parallel is limited only by the resources of the OpenVZ host (node).

The setup doesn't emulate hardware for the guest system. Instead, each container provides a run-time environment that looks like a complete operating system from the applications' point of view: a filesystem with installed software, storage space for data, and a number of device access functions.

In the host context, containers are simply directories. All the containers share the kernel with the host system and can only be of the same type as the host operating system, which is Linux.

An abstraction layer in the host kernel isolates the containers from one another and manages the required resources, such as CPU time, memory requirements, and hard disk capacity. Each container only consumes as much CPU time and RAM as the applications running in it, and the abstraction layer overhead is negligible. Virtualization based on OpenVZ is unparalleled with respect to efficiency.

Apart from the applications running in it, the Linux installation in the guest only consumes disk space. OpenVZ is thus always the best possible virtualization solution if you have no need to load a different kernel or driver in the guest context to access hardware devices.
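OpenVZ exposes the per-container accounting of this abstraction layer, the so-called user beancounters, in /proc/user_beancounters on the host; a non-zero failcnt value means a container has run into one of its limits. The following shell function is a minimal sketch, assuming the usual column layout (uid, resource, held, maxheld, barrier, limit, failcnt). The name beancounter_failures is hypothetical and not part of OpenVZ.

```shell
# Minimal sketch (hypothetical helper): list OpenVZ resources whose failcnt,
# the last column of /proc/user_beancounters, is non-zero. Pass a different
# file as the first argument to test against a saved copy.
beancounter_failures() {
    awk '
        NF == 0 || /^Version:/ || $1 == "uid" { next }   # blank, version, header
        {
            # The first line of each container block starts with "CTID:".
            if ($1 ~ /:$/) { ctid = substr($1, 1, length($1) - 1); res = $2 }
            else           { res = $1 }
            # failcnt counts how often an allocation was refused at the limit.
            if ($NF > 0) print ctid, res, $NF
        }
    ' "${1:-/proc/user_beancounters}"
}
```

Run as root on the host, beancounter_failures prints one line per affected resource in the form CTID resource failcnt, which tells you which container to give more headroom.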

Hardware Virtualizers

Hardware virtualizers, on the other hand, offer more flexibility than resource containers with respect to the choice of guest system; however, their performance depends to a great extent on how directly they can access the host system's hardware. The most flexible but slowest solution is complete emulation of privileged processor instructions and I/O devices, as offered by emulators such as Qemu without KVM. Hardware virtualization without CPU support, as offered by VMware Server or Parallels, entails a performance hit of about 30 percent compared with hardware-supported virtualization.

Paravirtualizers like VMware ESX or Xen implement a special software layer between the host and guest systems that gives the guest system access to the resources on the host via a special interface. However, this approach requires modifying the guest system so that privileged CPU functions are rerouted to the hypervisor, which in turn means custom drivers (kernel patches) on the guest.

Hardware-supported virtualization, which Linux implements at kernel level with KVM, also emulates a complete PC hardware environment for the guest system, so that you can install a complete operating system with its own kernel. Full virtualization is transparent for the guest system, thus removing the need to modify the guest kernel or install special drivers on the guest system. However, these solutions do require a CPU with virtualization extensions (Intel VT/Vanderpool or AMD-V/Pacifica), which let the guest system believe that it has the hardware, and thus privileged access, all to itself, although it is in fact isolated from the real hardware. The host system or a hypervisor handles the task of allocating resources.

One major advantage compared with paravirtualization is that the hardware extensions allow you to run unmodified guest systems, and thus support commercial guest systems such as Windows. In contrast to other hardware virtualizers, like VMware ESXi or Citrix XenServer, KVM is free, and it became an official Linux kernel component in kernel version 2.6.20.

KVM Inside

As a kind of worst-case fallback, KVM can provide a slow but functional emulator for privileged functions, but it also offers paravirtualized I/O drivers (virtio) for most guest systems and benefits from the VT extensions of today's CPUs. The KVM driver CD [4] offers a large selection of virtio drivers for Windows, and most distributions ship virtio drivers for Linux.

The KVM framework on the host comprises the /dev/kvm device, the generic kvm.ko kernel module, and one of two processor-specific modules, kvm-intel.ko or kvm-amd.ko, for access to the Intel or AMD instruction set. KVM extends the user and kernel operating modes on VT-capable CPUs by adding a third, known as guest mode, in which KVM starts and manages the virtual machines, which in turn see a privileged CPU context. The kvm.ko module accesses the architecture-dependent modules kvm-intel and kvm-amd directly, thus removing the need for time-consuming MMU emulation.
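To see which of these modules are loaded on a host, you can run lsmod, which reads /proc/modules. The shell function below is a small sketch of the same check; the name kvm_modules is hypothetical, and it assumes the standard /proc/modules layout with the module name in the first column.

```shell
# Minimal sketch (hypothetical helper): report which KVM modules are loaded.
# /proc/modules (the file lsmod reads) lists module names with underscores,
# so kvm-intel.ko and kvm-amd.ko show up as kvm_intel and kvm_amd.
kvm_modules() {
    file=${1:-/proc/modules}
    # awk exits 0 only if a line whose first field is "kvm" was seen.
    if ! awk '$1 == "kvm" { found = 1 } END { exit !found }' "$file"; then
        echo "kvm not loaded"
        return 1
    fi
    vendor=$(awk '$1 == "kvm_intel" || $1 == "kvm_amd" { print $1 }' "$file")
    echo "kvm + ${vendor:-no vendor module}"
}
```

If the function reports kvm without a vendor module, modprobe kvm-intel or modprobe kvm-amd loads the module that matches the CPU.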

Proxmox, Hands-On

The current v1.5 of Proxmox VE from May 2010 [1] is based on the Debian 5.0 (Lenny) 64-bit version. The Proxmox VE kernel is a standard 64-bit kernel with Debian patches, OpenVZ patches for Debian, and KVM support. To be more precise, three different Proxmox kernels exist right now. The ISO image of Proxmox VE version 1.5 with a build number of 03.02.2010 uses a 2.6.18 kernel version.

For more efficient deployment of OpenVZ or KVM, you might try a more recent kernel release; for example, the official OpenVZ beta release is based on the 2.6.32 kernel. Proxmox therefore offers kernel updates to 2.6.24 and 2.6.32.2C (the latter with KVM 0.12.4 and gPXE) on its website; you can install them manually after installing Proxmox VE and performing a system upgrade:

apt-get update
apt-get upgrade
apt-get install proxmox-ve-2.6.32

A Proxmox cluster master can also manage mixed kernel setups, such as two nodes with 2.6.24, one with 2.6.32, and one with 2.6.18. If you mainly want to run OpenVZ containers, you can stick with the 2.6.18 kernel. The 2.6.32 kernel might give you the latest KVM version with KSM (Kernel Samepage Merging); however, it doesn't currently offer OpenVZ support. On the other hand, kernel 2.6.18, which is the official stable OpenVZ release, doesn't offer SCSI emulation for KVM guest systems, only IDE or VirtIO.
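The kernel trade-offs above can be condensed into a small lookup. This is a hypothetical helper (pve_kernel_notes) that only restates this article's notes on the Proxmox VE 1.5 kernel branches; it does not probe the running kernel for features. It assumes the release string looks like uname -r output, such as 2.6.24-11-pve.

```shell
# Hypothetical helper: map a Proxmox VE 1.5 kernel release string to the
# trade-offs described in the text. A summary lookup, not a feature probe.
pve_kernel_notes() {
    release=${1:-$(uname -r)}
    case $release in
        2.6.18*) echo "OpenVZ: yes; KVM guests: IDE or VirtIO only, no SCSI" ;;
        2.6.24*) echo "OpenVZ: yes; KVM: yes" ;;
        2.6.32*) echo "OpenVZ: no; KVM: latest, with KSM" ;;
        *)       echo "unknown kernel branch: $release"; return 1 ;;
    esac
}
```

Running pve_kernel_notes without an argument on each node gives a quick overview of what a mixed-kernel cluster can host where.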

The minimum hardware requirements for Proxmox are a 64-bit CPU with Intel (Vanderpool/VT) or AMD (Pacifica/AMD-V) virtualization support and 1GB of RAM. Hardware virtualization with KVM allows any guest complete access to the CPU (Ring 0) at maximum speed and with all available extensions (MMX, EM64T, 3DNow!). After the installation of Proxmox VE, you can check the running kernel and the installed KVM and OpenVZ package versions by typing

pveversion -v

at the Proxmox console. If you have the 2.6.24 kernel, the output should look similar to Listing 1.

Listing 1: Kernel 2.6.24 pveversion Output

# pveversion -v
pve-manager: 1.5-10 (pve-manager/1.5/4822)
running kernel: 2.6.24-11-pve
proxmox-ve-2.6.24: 1.5-23
pve-kernel-2.6.24-11-pve: 2.6.24-23
qemu-server: 1.1-16
pve-firmware: 1.0-5
libpve-storage-perl: 1.0-13
vncterm: 0.9-2
vzctl: 3.0.23-1pve11
vzdump: 1.2-5
vzprocps: 2.0.11-1dso2
vzquota: 3.0.11-1
pve-qemu-kvm: 0.12.4-1
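Because every line of pveversion -v output has the form name: version, a single value such as the running kernel is easy to extract in a script. A minimal sketch, assuming exactly that format; pve_component is a hypothetical name, not a Proxmox tool.

```shell
# Minimal sketch (hypothetical helper): read `pveversion -v` output on stdin
# and print the value for one component name, matched literally.
pve_component() {
    awk -v key="$1" '
        {
            i = index($0, ": ")          # first ": " separates name and value
            if (i > 0 && substr($0, 1, i - 1) == key) print substr($0, i + 2)
        }
    '
}
```

On the system from Listing 1, pveversion -v | pve_component "running kernel" would print 2.6.24-11-pve.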

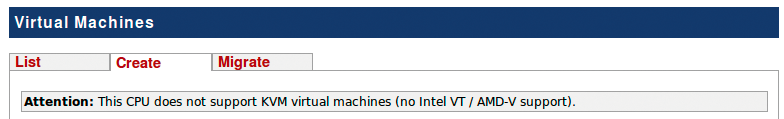

To verify that the CPU itself has VT extensions, experienced administrators can check the flags in /proc/cpuinfo for vmx (Intel) or svm (AMD). In the web interface, missing KVM support is revealed by the fact that VM Manager only lets you create OpenVZ containers under Virtual Machines. Additionally, the web interface outputs the message Attention: This CPU does not support KVM virtual machines (no Intel VT/AMD-V support) (Figure 2).
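The /proc/cpuinfo check can be wrapped in a small function. The usual one-liner is grep -E 'vmx|svm' /proc/cpuinfo; the sketch below (vt_flag is a hypothetical name) only looks at the flags lines and reports which of the two extensions it found.

```shell
# Minimal sketch (hypothetical helper): report the CPU's hardware
# virtualization flag. vmx = Intel VT, svm = AMD-V.
vt_flag() {
    file=${1:-/proc/cpuinfo}
    # Restrict the word match to the "flags" lines of cpuinfo.
    if grep -E '^flags[[:space:]]*:' "$file" | grep -qw vmx; then
        echo vmx
    elif grep -E '^flags[[:space:]]*:' "$file" | grep -qw svm; then
        echo svm
    else
        echo none
        return 1
    fi
}
```

A return value of 1 (output none) corresponds to the case in which the web interface only offers OpenVZ containers.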

Installing Proxmox

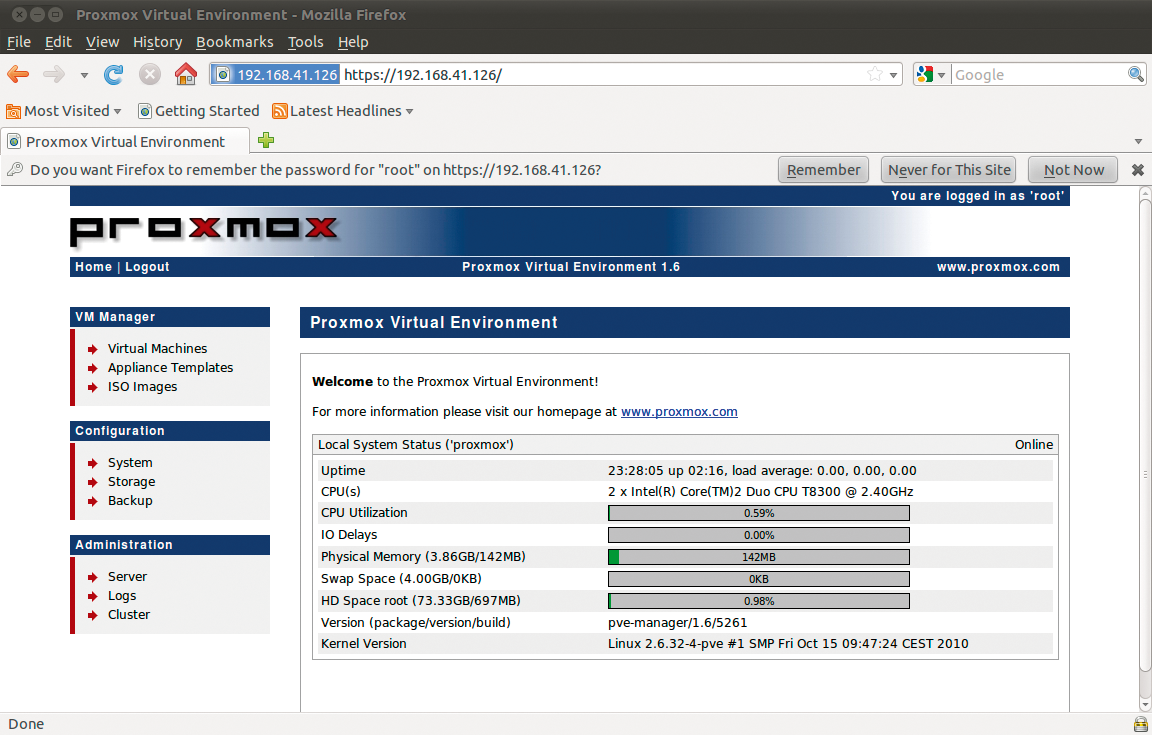

The graphical installer only needs a couple of mouse clicks to install the system and doesn't pose any problems. Note that all data on the hard disk shown by the installer will be lost. Additionally, you can't influence the partitioning; the Proxmox installer automatically sets up an LVM (Logical Volume Manager) scheme. After you choose the country, time zone, and keyboard layout, specify the administrative password, and manually set your IPv4 network parameters (Figure 3), the installer unpacks and deploys the basic system.

Finally, you just click on Reboot and the system comes back up ready for action. To manage it, you can surf to the IP address you entered during the installation. After installing the basic system, you will want to log in and update the system by typing:

apt-get update && apt-get upgrade

After the first login to the web interface, you can switch the GUI language, if needed, in the System | Options tab. Watch out for the tiny red save label, which is easy to overlook.

For hardware virtualization with KVM, Proxmox requires a 64-bit Intel or AMD CPU; however, the vendor unofficially offers a version based on a 32-bit Lenny, which only has OpenVZ support [5]. Alternatively, you can install Proxmox on an existing Lenny system [6]. The Proxmox hypervisor has very few open ports (443 for HTTPS, 5900 for VNC, and, optionally, 22 for SSH) and thus a minimal attack surface (Figure 4). To decide which services you want to provide, use Administration | Server in the web interface.
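To audit which TCP ports a host actually listens on, you can compare netstat -tln output against the ports you expect, such as HTTPS (443), VNC (5900), and SSH (22). A minimal sketch, assuming the net-tools netstat column layout (local address in column 4, state in column 6); unexpected_ports is a hypothetical helper, and the allowed list is passed in rather than hard-coded.

```shell
# Minimal sketch (hypothetical helper): read `netstat -tln` output on stdin
# and print each listening TCP port that is not in the space-separated
# allowed list given as the first argument.
unexpected_ports() {
    allowed=" $1 "
    # Skip the two header lines; the port follows the last ":" in column 4.
    awk 'NR > 2 && $6 == "LISTEN" { n = split($4, a, ":"); print a[n] }' |
        sort -un |
        while read -r port; do
            case $allowed in
                *" $port "*) ;;            # expected service, ignore
                *) echo "$port" ;;
            esac
        done
}
```

Usage: netstat -tln | unexpected_ports "443 5900 22" prints nothing if only the expected services are listening.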

Templates

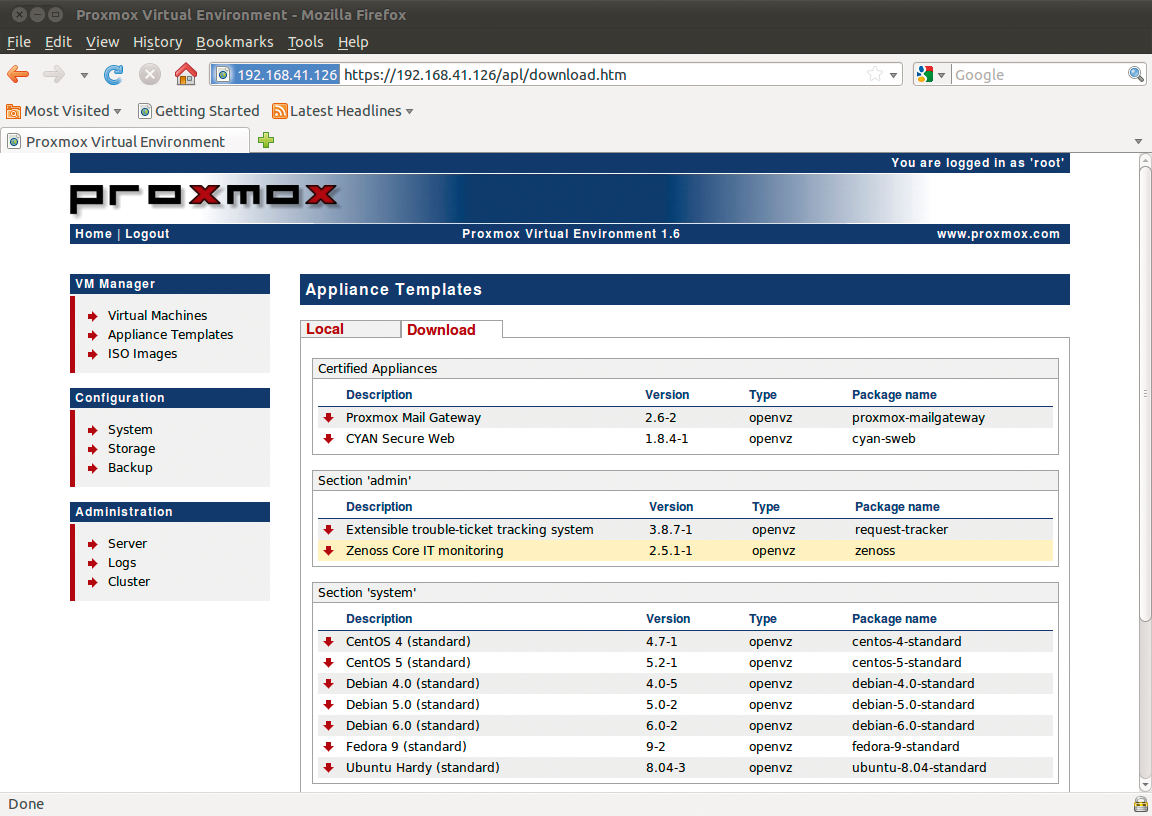

Cluster masters, nodes, OpenVZ containers, and KVM machines are easily created in the web GUI. To create a virtual machine, you need to access the VM Manager via the Virtual machines menu. However, pre-built templates (Virtual Appliances in Proxmox-speak) give you an easier option, and many of them exist for OpenVZ. You can download them through the web interface via VM Manager | Appliance Templates. The Download tab takes you to a useful collection of certified templates, such as a ready-to-run Joomla system in the www section. The Type entry shows that this is an OpenVZ template (Figure 5).

Clicking the template name takes you to an overview page with detailed information; to download the template, click start download. When you then create the virtual machine, you can select the Container Type (OpenVZ) and the template you just downloaded, debian-5.0-joomla_1.5.15-1, and then type a hostname, a password, and the required network parameters. Click create to deploy a new virtualized Joomla server in a matter of minutes. Instead of the OpenVZ default virtual network (venet), you might want to choose bridged Ethernet, because many web services rely on functional broadcasting.

To launch the virtual machine, just click the red arrow in the list of virtual machines in the first tab, List, in VM Manager. If you operate Proxmox in a cluster, you can decide – when you create a virtual machine – which cluster node should house the virtual machine. For more details on networking, read the "Onto the Network" box.

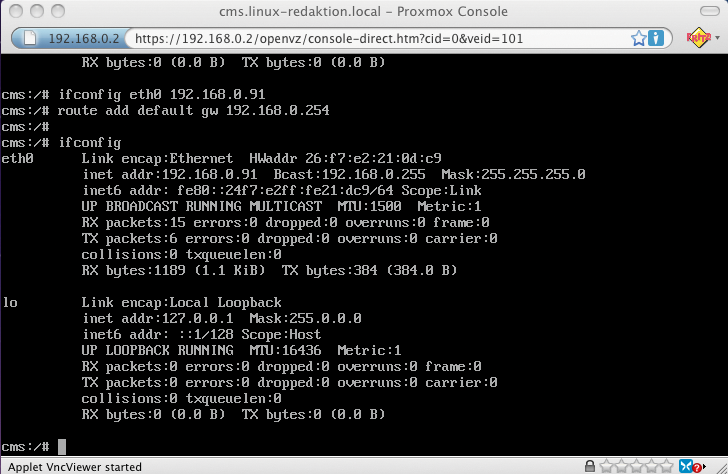

Clicking the red arrow opens a context menu for the selected virtual machine with a Start item. Additionally, you can click the Open VNC console link in the virtual machine's edit dialog to have Proxmox open a Java VNC client in a pop-up window in your browser. You can then use the VNC client to log in to the virtual machine quickly (Figure 7) and customize it to match your requirements. If you opted for a bridged Ethernet device when creating the virtual machine, you only need to assign the required IP address and possibly the default gateway to the virtual NIC eth0. From then on, the administrator can log in to the new virtual machine via SSH over the local network.
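In a Debian-based guest such as the Joomla template, a static address for eth0 is configured in /etc/network/interfaces. The function below is a sketch that prints such a stanza; iface_stanza is a hypothetical name, and the addresses in the usage example are placeholders.

```shell
# Minimal sketch (hypothetical helper): print a static Debian
# /etc/network/interfaces stanza. Arguments: interface, IPv4 address,
# netmask, and an optional default gateway.
iface_stanza() {
    ifname=$1 addr=$2 mask=$3 gw=$4
    printf 'auto %s\niface %s inet static\n' "$ifname" "$ifname"
    printf '    address %s\n    netmask %s\n' "$addr" "$mask"
    # Only the NIC that carries the default route needs a gateway line.
    if [ -n "$gw" ]; then
        printf '    gateway %s\n' "$gw"
    fi
}
```

Usage (placeholder addresses): iface_stanza eth0 192.168.1.50 255.255.255.0 192.168.1.1 >> /etc/network/interfaces, after removing any existing eth0 stanza, then ifup eth0 inside the guest.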

Conclusions

Proxmox offers administrators an easily manageable virtualization platform for OpenVZ and KVM virtual machines. Although you can manage virtual clusters, servers, and machines with the web tools offered by VMware (vSphere) or Citrix (XenServer), these tools entail a substantial up-front investment, whereas Proxmox is open source. Additionally, Proxmox lets you set up clusters, provides a backup tool, and supports a variety of storage technologies (LVM groups, iSCSI, or NFS shares). However, if your corporate planning envisions desktop virtualization or cloud computing, and you need convenient deployment of virtual desktops or ISOs, commercial products will be the better choice in the long term. Even so, Proxmox VE offers surprising capability and features for free.