Virtual switching with Open vSwitch

Switching Station

Many corporations are moving their infrastructure to virtual systems. This process involves virtualizing centralized components like SAP systems, Oracle database servers, email systems, and file servers, thus facilitating administration. Additionally, administrators no longer need to shut down systems for maintenance, because the workloads can be migrated on the fly to other virtual hosts.

One big disadvantage of a virtual environment has always been the simplistic network structure. Although physical network switches support VLANs, trunking, QoS, port aggregation, firewalling, and so on, virtual switches are very simple affairs. VMware and Cisco, however, provided a solution in the virtual Cisco Nexus 1000V switch. This switch integrates with the VMware environment and offers advanced functionality.

An open source product of this caliber has not been available previously, but Open vSwitch tackles the problem. Open vSwitch supports Xen, KVM, and VirtualBox, as well as XenServer. The next generation of Citrix products will also be moving to Open vSwitch.

Open vSwitch [1] is based on Stanford University's OpenFlow project [2], a new open standard designed to support the management of switches and routers with arbitrary software (see the "OpenFlow" box). Open vSwitch gives the administrator the following features on a Linux system:

- Fully functional Layer 2 switch

- NetFlow, sFlow, SPAN, and RSPAN support

- 802.1Q VLANs with trunking

- QoS

- Port aggregation

- GRE tunneling

- Compatibility with the Linux bridge code (brctl)

- Kernel and userspace switch implementation

But, before you can benefit from these features, you first need to install Open vSwitch. Prebuilt packages exist for Debian Sid (unstable). You can download packages for Fedora/Red Hat from my own website [4]. You can also install from the source code (see "Installation").

Although the packages provide start scripts for simple use, you will need to launch the services manually, or create your own start script, after a manual installation. Start with the configuration database server, which handles switch management (Listing 1). The next step is to launch the Open vSwitch service itself:

ovs-vswitchd unix:/usr/local/var/run/openvswitch/db.sock

Listing 1: Configuration

ovsdb-server /usr/local/etc/ovs-vswitchd.conf.db \
    --remote=punix:/usr/local/var/run/openvswitch/db.sock \
    --remote=db:Open_vSwitch,managers \
    --private-key=db:SSL,private_key \
    --certificate=db:SSL,certificate \
    --bootstrap-ca-cert=db:SSL,ca_cert

You can now run the ovs-vsctl command to create new switches or add and configure ports. Because most scripts for Xen and KVM rely on the bridge utilities, and on the brctl command to manage the bridge, you will need to start the bridge compatibility daemon. To do this, load the kernel module and then start the service:

insmod datapath/linux-2.6/brcompat_mod.ko
ovs-brcompatd --pidfile --detach -vANY:console:EMER unix:/usr/local/var/run/openvswitch/db.sock

You can now use the bridge utilities to manage your Open vSwitch:

brctl addbr extern0
brctl addif extern0 eth0

Distribution scripts for creating bridges will work in the normal way. You can also use ovs-vsctl to manage the bridge. In fact, you can use both commands at the same time (Listing 2). If the brctl show command can't find some files in the /sys/ directory, the bridge utilities may be too new (e.g., on RHEL 6), and you might want to downgrade them to the version from RHEL 5.

Listing 2: Bridge Management

[root@kvm1 ~]# brctl show
bridge name     bridge id               STP enabled     interfaces
extern0         0000.00304879668c       no              eth0
                                                        vnet0
[root@kvm1 ~]# ovs-vsctl list-ports extern0
eth0
vnet0

Until now, Open vSwitch has acted exactly like a bridge setup using the bridge utilities. Other configuration steps are needed to use the advanced features. All of the settings in the Open vSwitch configuration database can be handled by using the ovs-vsctl command.

NetFlow

Open vSwitch can export NetFlow records for the traffic that passes through the switch. To enable this, first create a new NetFlow probe; the command returns the UUID of the new record.

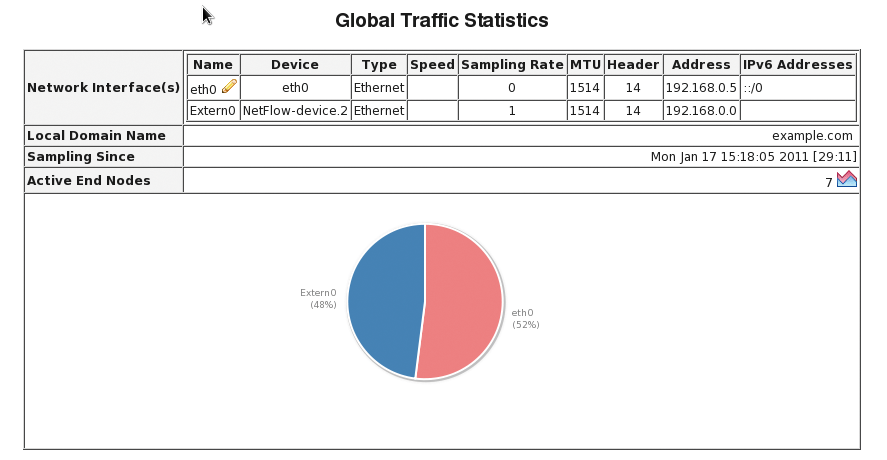

# ovs-vsctl create netflow target="192.168.0.5\:5000"
75545802-675f-45b2-814e-0875921e7ede

Then, link the probe with the extern0 bridge:

# ovs-vsctl add bridge extern0 netflow 75545802-675f-45b2-814e-0875921e7ede

If you previously launched a NetFlow collector (e.g., Ntop) on port 5000 of a machine with the address 192.168.0.5, you can now view the data (Figure 1). The configuration settings can be inspected with ovs-vsctl list bridge and ovs-vsctl list netflow and removed with ovs-vsctl destroy.
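If your version of ovs-vsctl supports the --id option, the create and add steps can be combined so that you never have to copy a UUID by hand. The following is a minimal sketch along the lines of the example in the ovs-vsctl man page, not a definitive recipe: the function names enable_netflow and disable_netflow and the OVS variable are made up for illustration, and the sketch spells the column name as targets, as the man page does.

```shell
#!/bin/sh
# Hypothetical helpers; OVS can be overridden (e.g., OVS="echo ovs-vsctl")
# to print the commands instead of running them.
OVS=${OVS:-ovs-vsctl}

enable_netflow() {
    bridge=$1       # bridge to monitor, e.g. extern0
    collector=$2    # collector as host:port, e.g. 192.168.0.5:5000
    # @nf is a temporary name for the new NetFlow record, so the bridge
    # can reference it within the same ovs-vsctl invocation.
    $OVS -- set Bridge "$bridge" netflow=@nf \
         -- --id=@nf create NetFlow targets="\"$collector\""
}

disable_netflow() {
    # Drop the bridge's reference to its NetFlow record.
    $OVS clear Bridge "$1" netflow
}
```

Calling enable_netflow extern0 192.168.0.5:5000 would then correspond to the two commands shown above, and disable_netflow extern0 switches the export off again.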

QoS

In many cases, administrators need to restrict the bandwidth of individual virtual guests, particularly when different customers use the same virtual environment. Different guests receive the performance they pay for based on Service Level Agreements.

Open vSwitch gives administrators a fairly simple option for restricting the maximum transmit performance of individual guests. To test this, you should first measure the normal throughput. The iperf tool is useful for doing so. You can launch iperf as a server on one system and as a client on a virtual guest (Listing 3).

Listing 3: Performance Measurement

## Server:
# iperf -s
## Client:
# iperf -c 192.168.0.5 -t 60
--------------------------------------------------------
Client connecting to 192.168.0.5, TCP port 5001
TCP window size: 16.0 KByte (default)
--------------------------------------------------------
[  3] local 192.168.0.210 port 60654 connected with 192.168.0.5 port 5001
[ ID] Interval       Transfer     Bandwidth
[  3]  0.0-60.0 sec  5.80 GBytes   830 Mbits/sec

You can now restrict the transmit rate. Note that the command expects you to enter the rate in kilobits per second (kbps). Besides the rate, you also need to specify the burst size in kilobits, which should be about a tenth of the rate. The vnet0 interface in this case is the switch port to which the virtual guest is connected.

# ovs-vsctl set Interface vnet0 ingress_policing_rate=1000
# ovs-vsctl set Interface vnet0 ingress_policing_burst=100

You can test the results with iperf (Figure 2).
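If you need to limit several guests, the rule of thumb of setting the burst to a tenth of the rate can be wrapped in a small helper. This is only a sketch: the function name limit_guest and the OVS variable are hypothetical and not part of Open vSwitch.

```shell
#!/bin/sh
# Hypothetical helper; OVS can be overridden (e.g., OVS="echo ovs-vsctl")
# to print the commands instead of running them.
OVS=${OVS:-ovs-vsctl}

limit_guest() {
    iface=$1                       # switch port of the guest, e.g. vnet0
    rate_kbps=$2                   # maximum rate in kilobits per second
    burst_kb=$((rate_kbps / 10))   # rule of thumb: a tenth of the rate

    $OVS set Interface "$iface" ingress_policing_rate="$rate_kbps"
    $OVS set Interface "$iface" ingress_policing_burst="$burst_kb"
}
```

Calling limit_guest vnet0 1000 issues the same two commands as above. Setting the rate back to 0 removes the limit.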

If you are familiar with the tc command and class-based QoS on Linux with various queuing disciplines, you can use this tool in combination with Open vSwitch. The man page provides various examples.

Mirroring

To run an Intrusion Detection System, you need a mirror port on the switch. Again, Open vSwitch gives you this option. To use it, you first need to create the mirror port and then add it to the correct switch. To create a mirror port that receives the traffic from all other ports and mirrors it on vnet0, use:

ovs-vsctl create mirror name=mirror select_all=1 output_port=e46e7d4a-2316-407f-ab11-4d248cd8fa94

The command

ovs-vsctl list port vnet0

shows the UUID of the vnet0 port, which is the value you need for output_port. The command

# ovs-vsctl add bridge extern0 mirrors 716462a4-8aac-4b9c-aa20-a0844d86f9ef

adds the mirror, identified by the UUID that the create command returned, to the bridge.
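With a version of ovs-vsctl that supports the --id option, the lookup, create, and add steps can be chained in one invocation, which avoids copying UUIDs between commands. The sketch below follows the pattern of the mirror example in the ovs-vsctl man page; the function name mirror_all_to and the OVS variable are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper; OVS can be overridden (e.g., OVS="echo ovs-vsctl")
# to print the command instead of running it.
OVS=${OVS:-ovs-vsctl}

mirror_all_to() {
    bridge=$1      # bridge to mirror, e.g. extern0
    out_port=$2    # port that receives the copies, e.g. vnet0
    # @out resolves to the UUID of the output port, @m to the new mirror.
    # Note: "set" replaces any mirrors already configured on the bridge.
    $OVS -- set Bridge "$bridge" mirrors=@m \
         -- --id=@out get Port "$out_port" \
         -- --id=@m create Mirror name=mirror select_all=true output_port=@out
}
```

For the setup described here, the call would be mirror_all_to extern0 vnet0. To remove all mirrors from the bridge again, ovs-vsctl clear Bridge extern0 mirrors should do the job.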

VLANs

You can also implement VLANs with Open vSwitch, and you have two options for doing so. Every Open vSwitch is VLAN-capable: If you add a port to the virtual switch, it is always a VLAN trunk port that provides tagged transport of all VLANs. To create an access port that transports a VLAN natively and without tags, enter:

ovs-vsctl add-port extern0 vnet1 tag=1

The brctl command doesn't let you create this kind of port directly. You'll need a fake bridge as a workaround. Open vSwitch supports fake bridges, which you can then assign to individual VLANs. Every port on a fake bridge is then an access port on the VLAN. To implement this, you first create a fake bridge as the child of a parent bridge:

# ovs-vsctl add-br VLAN1 extern0 1

The new fake bridge now answers to the name of VLAN1 and transports the VLAN with a tag of 1. You need to enable this and assign an IP address:

# ifconfig VLAN1 192.168.1.1 up

Each port you create on this bridge is an access port for VLAN 1, which means you can again use the brctl command.

Open vSwitch also can create a GRE tunnel between multiple systems and run VLAN trunking across it. Thus, you can move virtual machines to other hosts outside of the LAN. Communication takes place through the GRE tunnel.
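As a rough sketch of what such a tunnel looks like in practice, more recent Open vSwitch releases let you add a GRE port with ovs-vsctl; the exact syntax may differ on older versions. The function name add_gre_tunnel, the port name gre0, and the remote address used in the usage note are assumptions for illustration only.

```shell
#!/bin/sh
# Hypothetical helper; OVS can be overridden (e.g., OVS="echo ovs-vsctl")
# to print the command instead of running it.
OVS=${OVS:-ovs-vsctl}

add_gre_tunnel() {
    bridge=$1       # local bridge, e.g. extern0
    port=$2         # name for the tunnel port, e.g. gre0
    remote_ip=$3    # address of the host at the other end of the tunnel
    # Add the port and mark its interface as a GRE tunnel endpoint.
    $OVS add-port "$bridge" "$port" \
         -- set Interface "$port" type=gre options:remote_ip="$remote_ip"
}
```

You would run, for example, add_gre_tunnel extern0 gre0 192.168.1.10 on one host and the mirror-image command, pointing back at the first host, on the other.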

Also, Open vSwitch can aggregate ports. Linux kernel developers call this process bonding; Cisco refers to it as EtherChannel. It lets the administrator combine multiple physical ports as a single logical port, which can then be used for load balancing and high availability.
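Port aggregation of this kind is configured with the add-bond command of ovs-vsctl, which expects at least two interfaces. The wrapper below is a minimal sketch that checks this before calling the tool; the function name add_bond, the OVS variable, and the names bond0, eth1, and eth2 in the usage note are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper; OVS can be overridden (e.g., OVS="echo ovs-vsctl")
# to print the command instead of running it.
OVS=${OVS:-ovs-vsctl}

add_bond() {
    bridge=$1    # bridge that receives the logical port
    bond=$2      # name of the logical port, e.g. bond0
    shift 2      # the remaining arguments are the physical interfaces
    if [ "$#" -lt 2 ]; then
        echo "add_bond: need at least two interfaces" >&2
        return 1
    fi
    $OVS add-bond "$bridge" "$bond" "$@"
}
```

A call such as add_bond extern0 bond0 eth1 eth2 would combine two interfaces that are not yet attached to the bridge into a single logical port.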

Open vSwitch is an interesting project that is currently suffering from a lack of popularity and documentation. I hope the major distributions decide to incorporate the project and integrate it natively with their own tools, such as Libvirt. This functionality would remove the need to use the bridge compatibility daemon.