Fast system management from Puppet Labs

Orchestration

The Marionette Collective Framework (MCollective) [1] gives administrators the ability to launch job chains in parallel on a variety of systems. In contrast to similar tools, such as Func [2], Fabric [3], or Capistrano [4], MCollective relies on middleware based on the publish/subscribe method to launch jobs on various nodes. The middleware supported by the framework can be any kind of STOMP-based (Streaming Text Oriented Messaging Protocol) server implementation, for example, ActiveMQ [5] and RabbitMQ [6].

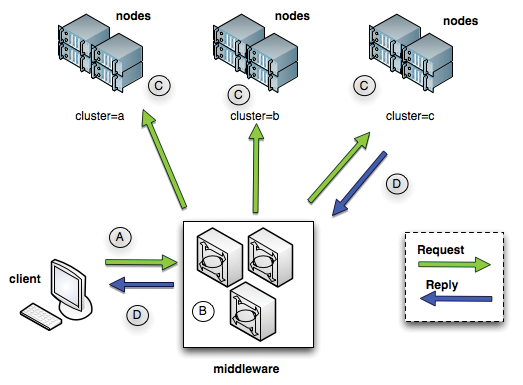

The administrator generates arbitrary job chains on a management system that are then sent to the middleware computer that – in turn – broadcasts the requests. Administrators can use filters to address subsets of the existing nodes. The low-level protocol between the systems is simple RPC, but it also handles authentication, authorization, and auditing of the individual requests (Figure 1). The middleware messaging protocol relies on this process.
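The broadcast-and-filter idea can be illustrated with a toy in-memory model. This is only a sketch: the class names, the topic name, and the request layout are hypothetical, and no real middleware or MCollective code is involved.

```ruby
# Toy model of publish/subscribe with filters; not MCollective code.
class ToyBroker
  def initialize
    @subscribers = Hash.new { |hash, topic| hash[topic] = [] }
  end

  # A node registers a callback for a topic.
  def subscribe(topic, &handler)
    @subscribers[topic] << handler
  end

  # Broadcast a message to every subscriber and collect the non-nil replies.
  def publish(topic, message)
    @subscribers[topic].map { |handler| handler.call(message) }.compact
  end
end

class ToyNode
  attr_reader :identity, :facts

  def initialize(identity, facts)
    @identity = identity
    @facts = facts
  end

  # Reply only if every filter key/value pair matches this node's facts.
  def handle(request)
    return nil unless request[:filter].all? { |key, value| facts[key] == value }

    { sender: identity, body: "ran #{request[:action]}" }
  end
end

broker = ToyBroker.new
nodes = [
  ToyNode.new("www1", { "type" => "web", "country" => "de" }),
  ToyNode.new("ldap1", { "type" => "ldap", "country" => "de" })
]
nodes.each do |node|
  broker.subscribe("mcollective.command") { |request| node.handle(request) }
end

request = { action: "ping", filter: { "type" => "web" } }
broker.publish("mcollective.command", request).each do |reply|
  puts "#{reply[:sender]}: #{reply[:body]}"
end
```

Every node sees the request, but only nodes whose facts satisfy the filter answer, so the sender never needs a list of hostnames.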

MCollective uses agents on the individual nodes to launch the actions. The framework offers a large number of prebuilt agents and, assuming you have some knowledge of Ruby, you can write your own agents with additional functionality. The MCollective website offers exhaustive examples [7] to help you do so. In combination with a configuration management tool such as Puppet [8], MCollective is an extremely powerful but easy-to-handle system management tool.

Thanks to its middleware approach, MCollective is capable of executing jobs at a very high speed. I have used the framework in an environment with more than 3,000 systems; running a job on all of the nodes rarely took more than 30 seconds.

YAML-based facts files are another boon: They give administrators the ability to assign specific properties to the systems (for example, assigning them to a datacenter or a country). These properties can be used as filter criteria when generating jobs, which removes the need to specify host or domain names. If you use Puppet for configuration management, you can also use Puppet classes as filter criteria.
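How a fact filter might be evaluated on a node can be sketched in a few lines of Ruby. The helper names parse_filters and facts_match? are hypothetical and the key=value filter syntax is an assumption modeled on the mco examples in this article; the real framework does this matching internally.

```ruby
require "yaml"

# Hypothetical helper: turn "key=value" strings into a hash of wanted facts.
def parse_filters(expressions)
  expressions.to_h { |expression| expression.split("=", 2) }
end

# A node matches when every wanted fact equals the node's own value.
def facts_match?(facts, filters)
  filters.all? { |name, wanted| facts[name].to_s == wanted }
end

# Facts as they might appear in a node's YAML facts file.
facts = YAML.safe_load(<<~FACTS)
  ---
  stage: prod
  country: de
  location: dus
  type: web
FACTS

filters = parse_filters(["country=de", "type=web"])
puts facts_match?(facts, filters)
```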

Installation and Configuration

The Puppet Labs website [9] offers various packages for RPM and Deb-based systems. To decide which package to install on which system, check out the following list of individual functionalities.

The examples are based on Red Hat Enterprise Linux 5, but are valid for any other supported system, though you may need to modify the package name slightly:

- Management-System (Workstation): The system acts as the client system for the administrator who creates job chains here. It depends on the mcollective-common and mcollective-client packages.
- Middleware: This is where the messaging system runs. Besides the middleware itself (ActiveMQ, for example), it also requires the mcollective-common package. To resolve all Ruby dependencies, the system should also be able to access the EPEL repository [10].
- Nodes: These are the systems that MCollective will manage. This requires the mcollective-common and mcollective packages.
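The role-to-package mapping from the list above can be captured in a small lookup table, for example as input for a provisioning script. The role keys and the install_command helper are hypothetical; the package names and the use of Yum come from this article.

```ruby
# Package names are taken from the list above; the role keys are made-up labels.
PACKAGES_BY_ROLE = {
  management: %w[mcollective-common mcollective-client],
  middleware: %w[activemq mcollective-common],
  node:       %w[mcollective-common mcollective]
}.freeze

# Build the Yum command line for one role; unknown roles raise an error.
def install_command(role)
  packages = PACKAGES_BY_ROLE.fetch(role) do
    raise ArgumentError, "unknown role: #{role}"
  end
  "yum install #{packages.join(' ')}"
end

puts install_command(:node)
```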

The first step is to install the middleware server. In this article, I will be using ActiveMQ as the middleware implementation. The easiest approach is to set up a Yum repository for the installation sources listed online [9] [10]. Then, you can install the messaging system by issuing yum install activemq. The Yum RPM front end resolves all the dependencies and installs the packages after a prompt.

Listing 1 shows an example of the ActiveMQ server configuration file; you need at least version 5.4. The user account which the MCollective client uses to access the server must be modified accordingly. ActiveMQ can also authenticate against an LDAP server, but to keep things simple I will just be using a local account in the server's configuration file for this. To avoid a single point of failure, the server also supports a high-availability configuration [11]. A final service activemq start wakes up the server.

Listing 1: /etc/activemq/activemq.xml

```xml
<beans
  xmlns="http://www.springframework.org/schema/beans"
  xmlns:amq="http://activemq.apache.org/schema/core"
  xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
  xsi:schemaLocation="http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans-2.0.xsd
  http://activemq.apache.org/schema/core http://activemq.apache.org/schema/core/activemq-core.xsd
  http://activemq.apache.org/camel/schema/spring http://activemq.apache.org/camel/schema/spring/camel-spring.xsd">

  <broker xmlns="http://activemq.apache.org/schema/core" brokerName="localhost" useJmx="true">
    <managementContext>
      <managementContext createConnector="false"/>
    </managementContext>

    <plugins>
      <statisticsBrokerPlugin/>
      <!-- Local accounts; the mcollective user is the one used in server.cfg -->
      <simpleAuthenticationPlugin>
        <users>
          <authenticationUser username="mcollective" password="marionette" groups="mcollective,everyone"/>
          <!-- Group "admins" must match the name used in the authorization entries below -->
          <authenticationUser username="admin" password="secret" groups="mcollective,admins,everyone"/>
        </users>
      </simpleAuthenticationPlugin>
      <authorizationPlugin>
        <map>
          <authorizationMap>
            <authorizationEntries>
              <authorizationEntry queue=">" write="admins" read="admins" admin="admins" />
              <authorizationEntry topic=">" write="admins" read="admins" admin="admins" />
              <authorizationEntry topic="mcollective.>" write="mcollective" read="mcollective" admin="mcollective" />
              <authorizationEntry topic="ActiveMQ.Advisory.>" read="everyone" write="everyone" admin="everyone"/>
            </authorizationEntries>
          </authorizationMap>
        </map>
      </authorizationPlugin>
    </plugins>

    <systemUsage>
      <systemUsage>
        <memoryUsage>
          <memoryUsage limit="20 mb"/>
        </memoryUsage>
        <storeUsage>
          <storeUsage limit="1 gb" name="foo"/>
        </storeUsage>
        <tempUsage>
          <tempUsage limit="100 mb"/>
        </tempUsage>
      </systemUsage>
    </systemUsage>

    <!-- MCollective connects to the STOMP connector on port 6163 -->
    <transportConnectors>
      <transportConnector name="openwire" uri="tcp://0.0.0.0:6166"/>
      <transportConnector name="stomp" uri="stomp://0.0.0.0:6163"/>
    </transportConnectors>
  </broker>
</beans>
```

You need to install the mcollective-client and mcollective-common packages on the management system. The former contains the /etc/mcollective/client.cfg configuration file (Listing 2) and is responsible for communicating with the middleware.

Listing 2: /etc/mcollective/client.cfg

```ini
# main config
topicprefix = /topic/mcollective
libdir = /usr/libexec/mcollective
logfile = /dev/null
loglevel = debug
identity = rawhide.tuxgeek.de

# connector plugin config
connector = stomp
plugin.stomp.host = activemq.tuxgeek.de
plugin.stomp.port = 6163
plugin.stomp.user = unset
plugin.stomp.password = unset

# security plugin config
securityprovider = psk
plugin.psk = foobar
```

The second package provides the /etc/mcollective/server.cfg configuration file (Listing 3), which gives MCollective access to the individual server facts (Listing 4), assuming they are defined. Security-conscious admins will remove sensitive information from the global client configuration file and instead create an additional ~/.mcollective configuration file on the management system. This file must contain all the settings from the global configuration. For the server configuration file, you'll want to modify the privileges correspondingly – unfortunately, this is not possible for the client configuration file.
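The key = value format of these files is easy to inspect with a few lines of Ruby. The following is a minimal sketch, not the framework's own parser: parse_settings and client_config_path are hypothetical helpers, and the rule that a readable per-user file takes the place of the global file is an assumption based on the description above.

```ruby
# Parse "key = value" settings, skipping blank lines and comments.
def parse_settings(text)
  text.each_line.with_object({}) do |line, settings|
    line = line.strip
    next if line.empty? || line.start_with?("#")

    key, value = line.split("=", 2)
    next if value.nil? # ignore lines without an equals sign

    settings[key.strip] = value.strip
  end
end

# Assumption: a readable per-user file replaces the global file entirely.
def client_config_path(user_file = File.expand_path("~/.mcollective"),
                       global_file = "/etc/mcollective/client.cfg")
  File.readable?(user_file) ? user_file : global_file
end

sample = <<~CFG
  # connector plugin config
  connector = stomp
  plugin.stomp.host = activemq.tuxgeek.de
  plugin.stomp.port = 6163
CFG

settings = parse_settings(sample)
puts settings["plugin.stomp.host"]
```

Because the per-user file is treated as a full replacement rather than an overlay, it has to repeat every setting, which matches the requirement stated above.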

Listing 3: /etc/mcollective/server.cfg

```ini
# main config
topicprefix = /topic/mcollective
libdir = /usr/libexec/mcollective
logfile = /var/log/mcollective.log
daemonize = 1
keeplogs = 1
max_log_size = 10240
loglevel = debug
identity = rawhide.tuxgeek.de
registerinterval = 300

# connector plugin config
connector = stomp
plugin.stomp.host = activemq.tuxgeek.de
plugin.stomp.port = 6163
plugin.stomp.user = mcollective
plugin.stomp.password = marionette

# facts
factsource = yaml
plugin.yaml = /etc/mcollective/facts.yaml

# security plugin config
securityprovider = psk
plugin.psk = foobar
```

Listing 4: /etc/mcollective/facts.yaml

```yaml
---
stage: prod
country: de
location: dus
type: web
```

The systems that you manage with MCollective need the mcollective-common and mcollective packages; the latter provides the server component of the framework, so to speak. The server configuration is identical to that of the management system, although you do need to modify the facts file correspondingly. To launch the server, issue the service mcollective start command. To distribute the configuration file and a modified facts file to the individual systems, you can use a tool like Puppet or Cfengine. You can also use a Red Hat Satellite or Spacewalk server for this.
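If the per-node facts are kept in a central inventory, the facts file for each system can be generated rather than edited by hand. A minimal sketch, assuming a hypothetical in-memory INVENTORY hash (the second host's facts are invented for illustration); in practice the data would come from your configuration management tool.

```ruby
require "yaml"

# Hypothetical inventory; real data would come from Puppet, a CMDB, or similar.
INVENTORY = {
  "www1.virt.tuxgeek.de"  => { "stage" => "prod", "country" => "de",
                               "location" => "dus", "type" => "web" },
  "ldap1.virt.tuxgeek.de" => { "stage" => "prod", "country" => "de",
                               "location" => "dus", "type" => "ldap" }
}.freeze

# Render the YAML document that would be written to the node's facts file.
def facts_yaml(hostname)
  INVENTORY.fetch(hostname).to_yaml
end

puts facts_yaml("www1.virt.tuxgeek.de")
```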

Initial Tests

After installing the first few systems with the MCollective server, you can do some initial tests to check whether communication with the messaging system is working properly (Listing 5). The tests are performed on the management system using the mco tool; this is the MCollective controller.

Listing 5: Communications Test

```
# mco ping
www1.virt.tuxgeek.de time=1195.07 ms
ldap1.virt.tuxgeek.de time=1195.62 ms
spacewalk.virt.tuxgeek.de time=1195.62 ms
dirsec.virt.tuxgeek.de time=1195.62 ms
ipa.virt.tuxgeek.de time=1195.62 ms
cs1.virt.tuxgeek.de time=1195.62 ms
cs2.virt.tuxgeek.de time=1195.62 ms
[...]

# mco find --with-fact type=web
www1.virt.tuxgeek.de
www2.virt.tuxgeek.de
[...]
```
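If you want to post-process the ping results, for example to spot slow responders, the output is easy to parse. The sketch below assumes the line format shown in Listing 5 (hostname, then time=... ms); parse_ping and slowest are hypothetical helpers, not part of mco.

```ruby
# Matches lines such as "www1.virt.tuxgeek.de time=1195.07 ms".
PING_LINE = /\A(?<host>\S+)\s+time=(?<ms>\d+(?:\.\d+)?) ms\z/

# Return [host, milliseconds] pairs; lines that do not match are skipped.
def parse_ping(output)
  output.each_line.filter_map do |line|
    match = PING_LINE.match(line.strip)
    [match[:host], match[:ms].to_f] if match
  end
end

# The pair with the highest response time, or nil for empty input.
def slowest(results)
  results.max_by { |_host, ms| ms }
end

output = <<~OUT
  www1.virt.tuxgeek.de time=1195.07 ms
  ldap1.virt.tuxgeek.de time=1195.62 ms
  [...]
OUT

host, ms = slowest(parse_ping(output))
puts "slowest responder: #{host} (#{ms} ms)"
```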

MCollective's modular approach gives administrators the ability to call various agents on the individual nodes. Depending on the task that you need to handle, a variety of agents can be used, most of which have their own client tools on the management system. MCollective comes with a number of agents, and you can download more from the community page [12].

An example of one of the goodies available from this page is the mc-service plug-in, which provides an agent written in Ruby by the name of services.rb; the agent must reside in the $libdir/mcollective/agent directory on the nodes. The $libdir is defined in the server.cfg file. The matching client application mc-service is located on the management system in the /usr/local/bin/ directory. Listing 6 shows a trial run with mc-service.

Listing 6: Plugin mc-service

```
# mc-facts location
Report for fact: location

dus found 100 times
txl found 594 times
muc found 10 times
ham found 26 times
fra found 154 times

Finished processing 884 / 884 hosts in 1656.94 ms
```

If you can't find a matching agent for the task on the MCollective community page, you can, of course, develop a new agent with matching clients. Some basic knowledge of Ruby is necessary for this part; the SimpleRPC framework, which encapsulates the basic MCollective client and server structures, will take care of all the rest. You can use the mc-call-agent tool to test your home-grown agents before you provide a client. This functionality can be very useful if you are developing complex agents, and the MCollective website provides a how-to [13] on this topic.
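To give a feel for what an agent does, the following toy class dispatches a request to a named action and wraps the result in a reply. It is deliberately not the SimpleRPC API: the class name, the actions, and the reply fields are hypothetical, and a real agent would follow the how-to [13] instead.

```ruby
# Toy stand-in for an agent; it only illustrates the request/reply idea.
class ToyEchoAgent
  ACTIONS = {
    "echo"   => ->(args) { { msg: args.fetch(:msg) } },
    "status" => ->(_args) { { status: "ok" } }
  }.freeze

  # Look up the requested action, run it, and return a reply hash.
  def handle(request)
    action = ACTIONS[request[:action]]
    unless action
      return { statuscode: 1, statusmsg: "unknown action #{request[:action]}" }
    end

    { statuscode: 0, statusmsg: "OK", data: action.call(request.fetch(:data, {})) }
  end
end

agent = ToyEchoAgent.new
reply = agent.handle({ action: "echo", data: { msg: "hello" } })
puts reply[:data][:msg]
```

Keeping each action a small, named unit is what makes it practical to call the same agent from a generic test tool first and from a dedicated client later.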

Reporting

Of course, MCollective also supports comprehensive reporting, which starts with simple node statistics and finishes with very detailed listings of specific properties of the individual systems. The mc-facts tool, for example, provides statistics based on system properties defined in the facts files (Listing 7), and the MCollective Controller mco also offers some interesting reporting functions.

Listing 7: Plugin mc-service

```
# mco ping
www1.virt.tuxgeek.de time=1195.07 ms
ldap1.virt.tuxgeek.de time=1195.62 ms
spacewalk.virt.tuxgeek.de time=1195.62 ms
dirsec.virt.tuxgeek.de time=1195.62 ms
ipa.virt.tuxgeek.de time=1195.62 ms
cs1.virt.tuxgeek.de time=1195.62 ms
cs2.virt.tuxgeek.de time=1195.62 ms
[...]

# mco find --with-fact type=web
www1.virt.tuxgeek.de
www2.virt.tuxgeek.de
[...]
```
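The kind of per-fact statistics that mc-facts reports boils down to counting how often each value occurs across the responding nodes. A minimal sketch of that aggregation, assuming the facts of each node are already available as a hash; fact_report and format_report are hypothetical names, and the sample data is invented.

```ruby
# Count how many nodes report each value of the given fact.
# Nodes that do not define the fact are ignored.
def fact_report(nodes, fact)
  nodes.map { |facts| facts[fact] }.compact.tally
end

# Render the counts as one line per value.
def format_report(counts)
  counts.map { |value, count| "#{value} found #{count} times" }
end

# Invented sample data: one facts hash per responding node.
nodes = [
  { "location" => "dus", "type" => "web" },
  { "location" => "txl", "type" => "web" },
  { "location" => "dus", "type" => "ldap" }
]

puts "Report for fact: location"
puts format_report(fact_report(nodes, "location"))
```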

Conclusions

MCollective is a very powerful tool for managing large-scale server landscapes, which are becoming increasingly common in virtual and cloud setups. If you only have a handful of systems to manage, you will probably find that the overhead for individual nodes is too high.

However, if you have to manage seriously large numbers of systems, you definitely will appreciate MCollective's modular concept and its benefits. Thanks to the ability to integrate your own agents with the framework and to deploy the complete environment with a configuration management tool of your own choice, the extent to which you can extend the functionality of the framework is virtually unlimited.