Workshop: Container virtualization with LXC on Ubuntu 10.04

In the Can

If full-blown virtualization solutions such as KVM, Xen, VMware, or VirtualBox seem like overkill, or if you only need to virtualize a single server (e.g., a print server or an intrusion detection system), we'll show you how to set up Linux Containers, a lightweight container virtualization system, on Ubuntu 10.04.

Because Linux Containers (LXC) is integrated into the kernel, you only need the userspace tools from the lxc package to take the software for a trial run. In addition, the cgroup filesystem must be mounted in /cgroup. Create the mountpoint with mkdir /cgroup and add the following line to /etc/fstab:

none /cgroup cgroup defaults 0 0

A subsequent mount /cgroup (or a reboot) then mounts the filesystem.
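The fstab step can also be scripted. The following is a minimal sketch, assuming root privileges for the real paths; the function name add_cgroup_fstab is hypothetical, and its two optional arguments (fstab path and mountpoint) exist only so you can try it on a scratch copy first.

```shell
#!/bin/sh
# Hypothetical helper: create the cgroup mountpoint and add the fstab
# entry from the article, but only if an identical line is not present yet.
add_cgroup_fstab() {
    local fstab="${1:-/etc/fstab}"      # file to edit (default: the real fstab)
    local mountpoint="${2:-/cgroup}"    # where the cgroup filesystem is mounted
    local entry="none $mountpoint cgroup defaults 0 0"

    # mount needs an existing directory as its mountpoint
    mkdir -p "$mountpoint" || return 1

    # -x matches whole lines, -F treats the entry as a fixed string;
    # appending only on a miss makes repeated runs harmless
    if ! grep -qxF "$entry" "$fstab" 2>/dev/null; then
        printf '%s\n' "$entry" >> "$fstab"
    fi
}
```

Run as root, add_cgroup_fstab && mount /cgroup should activate the entry without a reboot.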

The following command is all the root user needs to run a single command – such as a shell – in an application container:

lxc-execute -n foo -f /usr/share/doc/lxc/examples/lxc-macvlan.conf /bin/bash

This command defines the container according to the lxc-macvlan.conf configuration file and launches the shell in it. The prompt shows that the shell is running in a virtualized environment: It has a different hostname. The process list output by ps auxw is very short and contains no kernel threads at all. If you change to the /proc directory, you will also notice far fewer process entries than on the host system.
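To make this comparison concrete, you can count the process entries in /proc once on the host and once in the container's shell. The function name count_pids is an illustrative assumption, not part of the lxc tools; it simply treats every purely numeric directory in /proc as one process.

```shell
#!/bin/sh
# Count the per-process entries (purely numeric directory names) in /proc
# or in any directory passed as the first argument.
count_pids() {
    local procdir="${1:-/proc}" n=0 entry
    for entry in "$procdir"/[0-9]*; do
        [ -d "$entry" ] || continue          # skips plain files and an unmatched glob
        case "${entry##*/}" in
            *[!0-9]*) continue ;;            # name contains a non-digit
        esac
        n=$((n + 1))
    done
    echo "$n"
}
```

Running count_pids on the host and then inside the lxc-execute shell should show a much smaller number in the container.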

Disposable and Reusable Containers

Creating a system container is more complex because you need to install and prepare a complete system for it. You will also want to configure networking on the host. For this, install the additional packages debootstrap, bridge-utils, and libcap2-bin. As part of the network configuration, you need a bridge so that the container can be reached under its own IP address. Once you have added the content from Listing 1 to your /etc/network/interfaces file, issuing

/etc/init.d/networking restart

enables the settings.

Listing 1: Modifying /etc/network/interfaces

auto lo
iface lo inet loopback
# LXC-Config
# The primary network interface
#auto eth0
#iface eth0 inet dhcp
auto br0
iface br0 inet dhcp
    bridge_ports eth0
    bridge_stp off
    bridge_maxwait 5
    post-up /usr/sbin/brctl setfd br0 0
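Before restarting the network with the bridge configuration, it is worth checking that the additional packages are really installed (on Ubuntu: apt-get install debootstrap bridge-utils libcap2-bin). The sketch below assumes that checking for one command per package is good enough; missing_tools is a hypothetical name. Run it as root, because brctl and setcap live in sbin directories that an ordinary user's PATH may not include.

```shell
#!/bin/sh
# Report which of the given commands cannot be found in $PATH.
# Defaults to one command from each package named in the article:
# debootstrap (debootstrap), brctl (bridge-utils), setcap (libcap2-bin).
missing_tools() {
    [ "$#" -gt 0 ] || set -- debootstrap brctl setcap
    local tool missing=0
    for tool in "$@"; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "$tool"          # print each missing command on its own line
            missing=1
        fi
    done
    return "$missing"             # non-zero if anything is missing
}
```

An empty output and a zero exit status mean all three tools are available.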

Next, create a directory, for example, /lxc, in which to store the guest system's files. A subdirectory below it holds the root filesystem of the new container:

mkdir -p /lxc/rootfs.guest

You also need a file named /lxc/fstab.guest that defines the container's mountpoints in the same format as /etc/fstab (Listing 2).

Listing 2: /lxc/fstab.guest

none /lxc/rootfs.guest/dev/pts devpts defaults 0 0
none /lxc/rootfs.guest/var/run tmpfs defaults 0 0
none /lxc/rootfs.guest/dev/shm tmpfs defaults 0 0
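Both steps, creating the directory and writing Listing 2, fit into a small function. This is a sketch: the base directory /lxc and the name guest follow the article, while prepare_guest_dirs is a hypothetical name.

```shell
#!/bin/sh
# Create <base>/rootfs.<name> and write <base>/fstab.<name> with the
# mount entries from Listing 2. Defaults: base=/lxc, name=guest.
prepare_guest_dirs() {
    local base="${1:-/lxc}" name="${2:-guest}"
    local rootfs="$base/rootfs.$name"

    mkdir -p "$rootfs" || return 1

    # Unquoted EOF so that $rootfs is expanded inside the here-document
    cat > "$base/fstab.$name" <<EOF
none $rootfs/dev/pts devpts defaults 0 0
none $rootfs/var/run tmpfs defaults 0 0
none $rootfs/dev/shm tmpfs defaults 0 0
EOF
}
```

Called as root without arguments, it reproduces the directory and file used in this workshop.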

The next step is to prepare the guest system. Use debootstrap, Debian's tool for bootstrapping a base system, to install a minimal Ubuntu 10.04 ("lucid") system into the directory you created in the previous step (a 64-bit variant in this example; for the 32-bit version, simply replace amd64 with i386):

debootstrap --arch amd64 lucid /lxc/rootfs.guest/ http://archive.ubuntu.com/ubuntu
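Instead of editing amd64 by hand, you can derive the architecture from the host. This sketch assumes the guest should match the host's architecture; debootstrap_arch is a hypothetical helper that covers only the two cases the article mentions.

```shell
#!/bin/sh
# Translate a kernel machine name (as printed by uname -m) into the
# Debian/Ubuntu architecture name that debootstrap --arch expects.
# Only the two architectures mentioned in the article are handled.
debootstrap_arch() {
    local machine="${1:-$(uname -m)}"
    case "$machine" in
        x86_64)              echo amd64 ;;
        i386|i486|i586|i686) echo i386 ;;
        *) echo "unsupported machine type: $machine" >&2; return 1 ;;
    esac
}
```

A possible invocation that aborts cleanly on an unsupported host: arch=$(debootstrap_arch) && debootstrap --arch "$arch" lucid /lxc/rootfs.guest/ http://archive.ubuntu.com/ubuntu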

Next, you need to modify the new system: In the /lxc/rootfs.guest/lib/init/fstab file, comment out the lines that mount /proc, /dev, and /dev/pts. Then, assign a hostname by editing the /lxc/rootfs.guest/etc/hostname file; in the example, we have simply called the system guest. So that this name resolves, type the following line into a new file named /lxc/rootfs.guest/etc/hosts:

127.0.0.1 localhost guest
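These three edits to the guest's files can be scripted as well. A minimal sketch, assuming the lucid layout in which /lib/init/fstab lists the mountpoint in the second field; configure_guest is a hypothetical name, and it overwrites etc/hostname and etc/hosts.

```shell
#!/bin/sh
# Apply the guest modifications described above to a root filesystem:
# comment out the /proc, /dev and /dev/pts entries in lib/init/fstab,
# set the hostname, and create a minimal etc/hosts.
configure_guest() {
    local rootfs="$1" name="${2:-guest}"
    local fstab="$rootfs/lib/init/fstab"

    # Prefix "#" to uncommented lines whose mountpoint (field 2) matches
    awk '$1 !~ /^#/ && ($2 == "/proc" || $2 == "/dev" || $2 == "/dev/pts") {
             print "#" $0; next
         }
         { print }' "$fstab" > "$fstab.new" && mv "$fstab.new" "$fstab" || return 1

    echo "$name" > "$rootfs/etc/hostname"
    echo "127.0.0.1 localhost $name" > "$rootfs/etc/hosts"
}
```

For the example system, the call would be configure_guest /lxc/rootfs.guest guest.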

The next changes are made inside the guest system itself; run the chroot command to switch to the new environment temporarily:

chroot /lxc/rootfs.guest /bin/bash

To log in to the container later, use OpenSSH, which you can install in the form of the openssh-server package (apt-get install openssh-server inside the chroot). All you need now are user accounts and a sensible way to grant root privileges with the help of the sudo command. To start, create a user, create the admin group, and add the user to it:

u=linuxmagazine; g=admin
adduser $u; addgroup $g; adduser $u $g

Then, run the visudo command to edit the /etc/sudoers file. In the line

%sudo ALL=(ALL) ALL

replace sudo with admin and quit the chroot environment with exit.
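If you prefer a non-interactive edit to visudo, one possible approach is to rewrite a copy of the file with sed and let visudo check that copy before installing it. The helper name sudoers_use_admin is hypothetical, and it only touches lines that begin with %sudo followed by whitespace.

```shell
#!/bin/sh
# Copy a sudoers file, replacing the group in the "%sudo ..." rule with
# "%admin". The original file is left untouched.
sudoers_use_admin() {
    local src="$1" dst="$2"
    # \(...\) keeps the whitespace that follows the group name
    sed 's/^%sudo\([[:space:]]\)/%admin\1/' "$src" > "$dst"
}
```

For the guest from this article, you might run sudoers_use_admin /lxc/rootfs.guest/etc/sudoers /tmp/sudoers.new, check the result with visudo -c -f /tmp/sudoers.new, and install it with install -m 0440 /tmp/sudoers.new /lxc/rootfs.guest/etc/sudoers.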

Before you can test the container you prepared, you need to configure /lxc/conf.guest in LXC by adding the content from Listing 3 and modifying the IP address in line 8. The line

lxc-create -n guest -f /lxc/conf.guest

Listing 3: Container Configuration: conf.guest

01 lxc.utsname = guest

02 lxc.tty = 4

03 lxc.network.type = veth

04 lxc.network.flags = up

05 lxc.network.link = br0

06 lxc.network.hwaddr = 08:00:12:34:56:78

07 #lxc.network.ipv4 = 0.0.0.0

08 lxc.network.ipv4 = 192.168.1.69

09 lxc.network.name = eth0

10 lxc.mount = /lxc/fstab.guest

11 lxc.rootfs = /lxc/rootfs.guest

12 lxc.pts = 1024

13 #

14 lxc.cgroup.devices.deny = a

15 # /dev/null and zero

16 lxc.cgroup.devices.allow = c 1:3 rwm

17 lxc.cgroup.devices.allow = c 1:5 rwm

18 # consoles

19 lxc.cgroup.devices.allow = c 5:1 rwm

20 lxc.cgroup.devices.allow = c 5:0 rwm

21 lxc.cgroup.devices.allow = c 4:0 rwm

22 lxc.cgroup.devices.allow = c 4:1 rwm

23 # /dev/{,u}random

24 lxc.cgroup.devices.allow = c 1:9 rwm

25 lxc.cgroup.devices.allow = c 1:8 rwm

26 lxc.cgroup.devices.allow = c 136:* rwm

27 lxc.cgroup.devices.allow = c 5:2 rwm

28 # rtc

29 lxc.cgroup.devices.allow = c 254:0 rwm

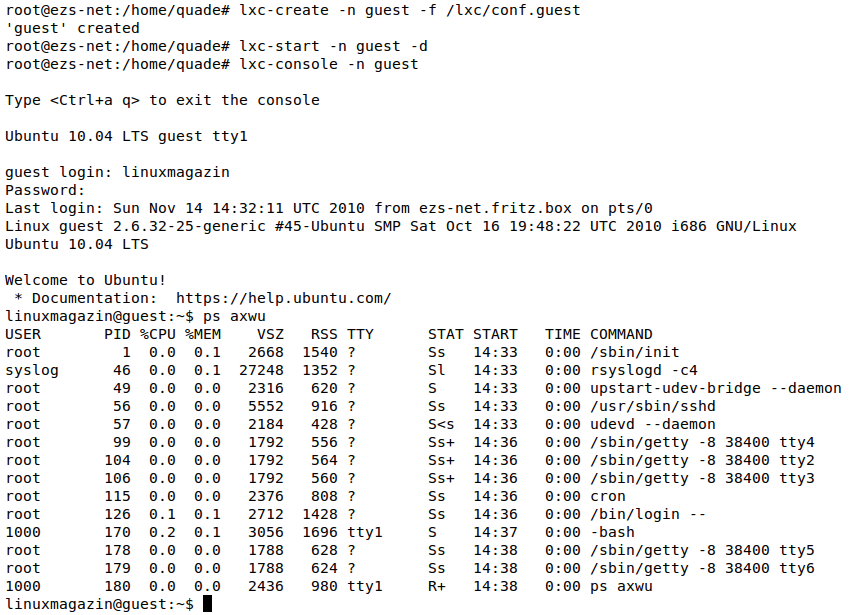

then tells LXC to prepare the configuration. This step is required before you can enable the system by typing:

lxc-start -n guest -d

The -d option runs the container in the background as a daemon. You now have two options for logging in to the virtualized system: Either type

lxc-console -n guest

or, if the network works right away:

ssh linuxmagazine@192.168.1.69

Some patience is needed if you use lxc-console: It can take a couple of minutes before the container has finished booting and lets you log in. Once it has, the container shows the information in Figure 1.

Recycling Containers

To stop the system again, run lxc-stop -n guest on the host. Whenever you change the /lxc/conf.guest configuration file, you need to delete the existing configuration by typing lxc-destroy -n guest and then create a new one with lxc-create.
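Because every change to /lxc/conf.guest means destroying and recreating the container, it can help to generate the file and run the cycle from a script. This sketch makes several assumptions: the function names are hypothetical, the generated file mirrors Listing 3 without its comment lines, the MAC address is the one from the listing (each container should get its own), and setting DRY_RUN=1 only prints the lxc commands instead of running them.

```shell
#!/bin/sh
# Write a container configuration modeled on Listing 3.
# Arguments: output file, IPv4 address, container name, base directory.
write_guest_conf() {
    local conf="$1" ip="$2" name="${3:-guest}" base="${4:-/lxc}"
    cat > "$conf" <<EOF
lxc.utsname = $name
lxc.tty = 4
lxc.network.type = veth
lxc.network.flags = up
lxc.network.link = br0
lxc.network.hwaddr = 08:00:12:34:56:78
lxc.network.ipv4 = $ip
lxc.network.name = eth0
lxc.mount = $base/fstab.$name
lxc.rootfs = $base/rootfs.$name
lxc.pts = 1024
lxc.cgroup.devices.deny = a
lxc.cgroup.devices.allow = c 1:3 rwm
lxc.cgroup.devices.allow = c 1:5 rwm
lxc.cgroup.devices.allow = c 5:1 rwm
lxc.cgroup.devices.allow = c 5:0 rwm
lxc.cgroup.devices.allow = c 4:0 rwm
lxc.cgroup.devices.allow = c 4:1 rwm
lxc.cgroup.devices.allow = c 1:9 rwm
lxc.cgroup.devices.allow = c 1:8 rwm
lxc.cgroup.devices.allow = c 136:* rwm
lxc.cgroup.devices.allow = c 5:2 rwm
lxc.cgroup.devices.allow = c 254:0 rwm
EOF
}

# Run a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Stop, destroy, recreate and restart a container after its config changed.
recreate_guest() {
    local name="${1:-guest}" conf="${2:-/lxc/conf.guest}"
    run lxc-stop -n "$name"
    run lxc-destroy -n "$name"
    run lxc-create -n "$name" -f "$conf"
    run lxc-start -n "$name" -d
}
```

For example, after writing a new address with write_guest_conf /lxc/conf.guest 192.168.1.70, running recreate_guest guest /lxc/conf.guest as root would apply it; with DRY_RUN=1 you can first review the four commands.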

The LXC how-to [1] provides an overview of important steps, and information is available for Ubuntu quirks [2]. Also, an IBM kernel developer has written a useful article about LXC tools [3]. Once all of the configuration snippets are in the right place, LXC is a quick and easy alternative for isolating smaller services.