vSphere 5 vs. XenServer 6

Major League Playoffs

According to market researchers [1], VMware and Citrix currently account for between 80 and 88 percent of the US and UK hypervisor market (Figure 1) and about 71 percent in Western Europe. vSphere 5 [2] by VMware started to move into the server racks in the summer of 2011, but while I was researching this article, Citrix suddenly burst onto the scene with the XenServer 6.0 release [3].

![Figure 1: Market share (%) of primary hypervisors in the US and UK, 2011Q2. (Source: V-index [1])](images/B01-MarketShare.png)

I would have liked to include the upcoming version 3 of the Red Hat Enterprise Virtualization [4] product as a long-shot challenger in this test, but after some internal discussion, Red Hat adopted an almost Apple-like stance and refused to open up the current beta test to the press.

Whereas VMware customers could run six or 12 cores per CPU socket in vSphere 4, depending on the license model, vSphere 5 introduces a new model that counts both CPU sockets and VRAM. VRAM refers to the RAM allocated to the virtual machines that are currently running. Originally, customers with an Enterprise license were to be allowed a maximum of 32GB of VRAM per CPU socket license (see Table 1, "Old" column); anyone exceeding the limit would have had to buy additional socket licenses. It seems that lobbying by important customers forced VMware to review these plans [5] and raise the VRAM limits (Table 1, "New" column).

Table 1: vSphere 5 VRAM Limits (GB per CPU Socket License)

| License | Old | New |
|---|---|---|
| Essentials | 24 | 32 |
| Essentials Plus | 24 | 32 |
| Standard | 24 | 32 |
| Enterprise | 32 | 64 |
| Enterprise Plus | 48 | 96 |
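The arithmetic behind Table 1 can be sketched as follows. This is a simplified model under stated assumptions: every CPU socket needs one license, each license contributes the per-edition VRAM entitlement from Table 1, and the entitlements of all licenses are pooled to cover the RAM configured for running VMs. The function name `licenses_needed` and the example sizes are hypothetical.

```python
import math

# VRAM entitlement per CPU socket license in GB, from Table 1
# ("old" = originally announced limits, "new" = revised limits).
VRAM_PER_LICENSE_GB = {
    "Essentials":      {"old": 24, "new": 32},
    "Essentials Plus": {"old": 24, "new": 32},
    "Standard":        {"old": 24, "new": 32},
    "Enterprise":      {"old": 32, "new": 64},
    "Enterprise Plus": {"old": 48, "new": 96},
}


def licenses_needed(edition: str, sockets: int, vram_gb: float,
                    limits: str = "new") -> int:
    """Return the number of socket licenses a setup would require.

    Simplified model (assumption): at least one license per physical CPU
    socket, and the VRAM entitlements of all licenses are pooled to cover
    the RAM configured for all running VMs.
    """
    per_license = VRAM_PER_LICENSE_GB[edition][limits]
    for_vram = math.ceil(vram_gb / per_license)  # licenses needed for VRAM alone
    return max(sockets, for_vram)


# Four sockets, 384GB of VRAM in running VMs, Enterprise edition:
print(licenses_needed("Enterprise", 4, 384, limits="old"))  # 12
print(licenses_needed("Enterprise", 4, 384, limits="new"))  # 6
```

In this model, two dual-socket hosts running VMs with a combined 384GB of VRAM would have needed 12 Enterprise licenses under the old limits but only six under the new ones; below the VRAM threshold, the socket count alone decides.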

Technology

XenServer 6.0, which is based on Xen 4.1, is capable of allocating 128GB of RAM and 16 virtual CPUs to a virtual machine [6]. According to VMware, vSphere 5 can assign a maximum of 32 virtual CPUs and 1TB of RAM [7] to a single instance – four times more than its predecessor.
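To make the per-VM maximums concrete, the following sketch checks which platform could host a VM of a given size. It uses only the figures quoted above (16 vCPUs/128GB for XenServer 6.0, 32 vCPUs/1TB for vSphere 5); the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VmMaximums:
    vcpus: int
    ram_gb: int


# Per-VM maximums as quoted in the text; 1TB is taken as 1024GB.
MAXIMUMS = {
    "XenServer 6.0": VmMaximums(vcpus=16, ram_gb=128),
    "vSphere 5": VmMaximums(vcpus=32, ram_gb=1024),
}


def platforms_for(vcpus: int, ram_gb: int) -> list[str]:
    """List the platforms whose per-VM limits can accommodate the requested VM."""
    return [name for name, limit in MAXIMUMS.items()
            if vcpus <= limit.vcpus and ram_gb <= limit.ram_gb]


print(platforms_for(8, 64))     # ['XenServer 6.0', 'vSphere 5']
print(platforms_for(24, 256))   # ['vSphere 5']
```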

If you are looking to migrate from version 4 to version 5, you don't need to update individual virtual machines, but you might want to anyway to benefit from the new features in version 8 of VMware's virtual machine hardware (VM Hardware) – such as UEFI and USB 3.0.

The process is similar to earlier migrations: Back up the VM, install the current VMware Tools, shut down the VM, right-click it and choose Upgrade Virtual Hardware, then power it on and test.

In vSphere 5, VMware can also run Apple server operating systems as virtual machines. Officially, VMware supports Mac OS X v10.6 servers; you need a virtual machine with EFI for this because Apple switched to EFI many moons ago.

Version 8 of VM Hardware is the first release to support 3D graphics acceleration; on virtual Windows systems, this enables Aero. Citrix goes one step further and officially supports GPU passthrough, that is, the direct allocation of a physical graphics adapter to a virtual machine. XenServer 6.0 also adds support for seven more guest operating systems.

With XenServer 6.0 and vSphere 5, both vendors have extended the partition sizes available to virtual machines; in VMware's case, from 2TB to 64TB. However, individual virtual hard disks are still limited to a maximum size of 2TB [8].

Another important change is that VMware has removed ESX Server from its portfolio. From now on, ESXi Server is the VMware gold standard. It offers a lean system that easily fits on a USB stick or an SD card. VMware promises simpler updates because it no longer has to maintain two products, ESX and ESXi. Citrix continues to offer its hypervisor in the form of the lean XenServer.

High Availability

VMware completely reengineered clustering services, such as VMware High Availability (HA), for vSphere 5. In the past, a network failure could isolate a host: The ESX server was no longer reachable and could not communicate with the other cluster members.

Depending on how the cluster was configured, this could cause unwanted virtual machine shutdowns. To prevent this, VMware now offers a function that also routes the cluster heartbeat through the storage layer. The precondition for this is VMFS datastores (the vSphere-specific filesystem on which the VMDK container files for the guest systems reside) [13].

If the network fails, the ESXi servers can still communicate over the separate iSCSI or Fibre Channel SAN infrastructure, so the cluster has two independent communication paths. vSphere 5 has also switched from a primary-secondary clustering concept to a master-slave model, in which one ESXi server acts as the master and all other cluster members are assigned the slave role [14].

The new version logs all HA activity in a single logfile: On each cluster member, the Fault Domain Manager (FDM) agent writes the entire communication to /var/log/fdm.log. VMware's high-availability solution also now uses IP addresses rather than DNS names. Previously, any DNS failure immediately brought down the HA cluster if the administrator had forgotten to add the cluster members' entries to the /etc/hosts files on all of the ESX(i) servers.

Citrix already adopted a quorum disk approach in an earlier version: XenServer creates a virtual disk, known as the shared quorum disk, which all of the physical XenServers use to share state information.

In contrast to ESXi Server, which uses the SAN path only as a backup, Citrix relies on both paths at once: All cluster members communicate simultaneously via LAN and SAN. In version 6.0, Citrix can also use an NFS server to handle heartbeat communication.
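Both products build on the same idea: A second, independent heartbeat path lets the cluster tell an isolated host from a failed one. The following is a simplified sketch of that decision, not VMware's or Citrix's actual logic; the function name, the timestamps, and the 15-second timeout are hypothetical. The evaluation order models VMware's fallback approach; in this simplified model, checking both paths continuously, as XenServer does, would lead to the same three outcomes.

```python
from enum import Enum


class HostState(Enum):
    ALIVE = "alive"        # network heartbeat is current
    ISOLATED = "isolated"  # network silent, but storage heartbeat still current
    FAILED = "failed"      # silent on both paths


def classify(now: float, last_network: float, last_storage: float,
             timeout: float = 15.0) -> HostState:
    """Classify a peer host from the age (in seconds) of its latest heartbeats.

    Hypothetical model: the network heartbeat is evaluated first; the storage
    heartbeat (VMFS datastore or shared quorum disk) only decides between
    'isolated' and 'failed' once the network path has gone silent.
    The 15-second timeout is an illustrative value, not a product default.
    """
    if now - last_network <= timeout:
        return HostState.ALIVE
    if now - last_storage <= timeout:
        return HostState.ISOLATED
    return HostState.FAILED


print(classify(100.0, last_network=95.0, last_storage=95.0))  # HostState.ALIVE
print(classify(100.0, last_network=50.0, last_storage=95.0))  # HostState.ISOLATED
print(classify(100.0, last_network=50.0, last_storage=50.0))  # HostState.FAILED
```

In this model, only FAILED justifies restarting the host's virtual machines elsewhere; what an isolated host does with its own VMs is a separate policy (in vSphere, the configurable isolation response).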

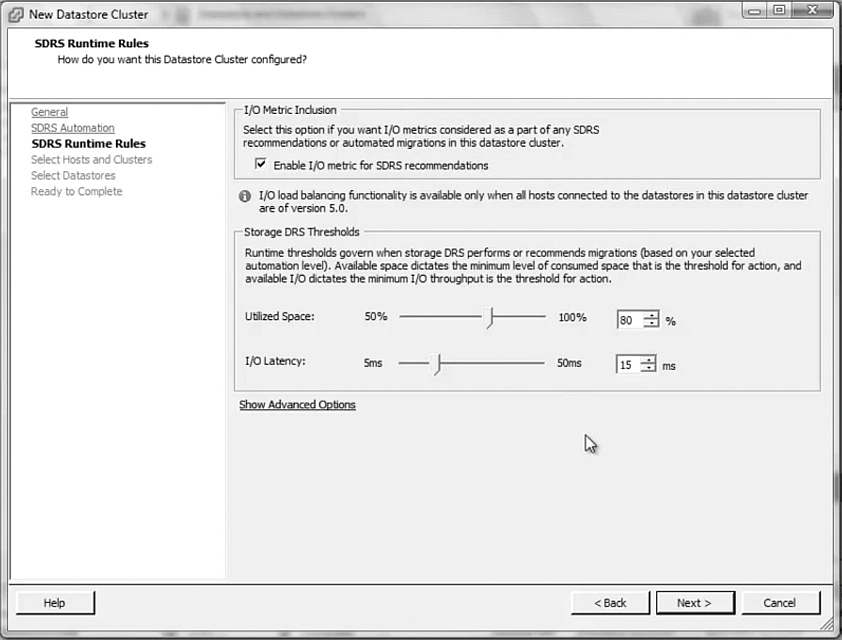

Storage DRS

New to VMware's cluster services portfolio, and included in the Enterprise Plus edition, is the Storage Distributed Resource Scheduler (SDRS), which automatically moves virtual systems at the SAN level.

If latency values are poor, SDRS migrates a virtual machine at the disk level to relieve an overloaded storage area. The function also ensures that the usage of a VMFS partition stays within a specified level (Figure 2).

Storage DRS is interesting for high-performance systems for which latency problems are a no-go. The more virtual systems use a disk area, the higher the latency values tend to climb. If the I/O latency of a LUN on SATA disks increases because of heavy traffic, vSphere moves the VMs from that LUN to a SAS or SSD LUN capable of handling the I/O load.
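The following sketch illustrates the kind of decision SDRS makes: find a datastore that exceeds its latency or usage threshold and move one virtual disk to a faster datastore with enough room. It is not VMware's algorithm; the `Datastore` class, the function name, and the thresholds (15ms latency, 80 percent usage) are assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Datastore:
    name: str
    capacity_gb: float
    used_gb: float
    latency_ms: float
    vms: dict[str, float] = field(default_factory=dict)  # VM name -> disk size (GB)

    @property
    def usage(self) -> float:
        return self.used_gb / self.capacity_gb


def recommend_move(stores: list[Datastore], max_latency_ms: float = 15.0,
                   max_usage: float = 0.8) -> Optional[tuple[str, str, str]]:
    """Return (vm, source, target) for one disk migration, or None if all is well."""
    for src in stores:
        if src.latency_ms <= max_latency_ms and src.usage <= max_usage:
            continue  # datastore is within both thresholds
        # Candidate targets: other datastores below the latency threshold, fastest first.
        targets = sorted((t for t in stores
                          if t is not src and t.latency_ms < max_latency_ms),
                         key=lambda t: t.latency_ms)
        # Try the smallest disks first, since they are the cheapest to move.
        for vm, size_gb in sorted(src.vms.items(), key=lambda item: item[1]):
            for tgt in targets:
                if (tgt.used_gb + size_gb) / tgt.capacity_gb <= max_usage:
                    return vm, src.name, tgt.name
    return None


sata = Datastore("sata-lun", 2000, 1200, latency_ms=40.0,
                 vms={"web01": 100, "db01": 500})
ssd = Datastore("ssd-lun", 800, 200, latency_ms=2.0)
print(recommend_move([sata, ssd]))  # ('web01', 'sata-lun', 'ssd-lun')
```

Moving the smallest disk first keeps each migration cheap; a real scheduler would also weigh each VM's share of the I/O load, which this sketch does not track.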

When to Redistribute

With the exception of HA, all vSphere 5 components rely on vCenter during operation. This means that DRS, which handles dynamic load balancing, only works if vCenter is available. If the service is unreachable, dynamic load balancing of the virtual machines stops, and with it the controlled distribution of the load across your ESXi servers.

XenServer 6.0 uses a different strategy: A Linux-based virtual appliance supplied by Citrix distributes the virtual systems dynamically within each pool. The advantage is that, if XenCenter happens to be unreachable, the virtual appliance remains in contact with all of the XenServers in the pool and thus continues to balance the load across the virtual systems. This approach, known as Citrix Workload Balancing, requires a dedicated appliance for each pool.
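The architectural point, one balancer per pool that is fed directly by the hosts rather than by the management console, can be sketched as follows. The `PoolBalancer` class, its CPU-share metrics, and the 0.2 tolerance are hypothetical; this is a generic greedy rebalancing heuristic, not Citrix Workload Balancing's actual algorithm.

```python
from typing import Optional


class PoolBalancer:
    """Hypothetical per-pool balancer: one instance per pool, fed directly
    with host metrics, so it keeps working if the console is unreachable."""

    def __init__(self, pool: str, max_gap: float = 0.2):
        self.pool = pool
        self.max_gap = max_gap  # tolerated CPU-load difference between hosts
        self.hosts: dict[str, dict[str, float]] = {}  # host -> {vm: CPU share}

    def report(self, host: str, vm_loads: dict[str, float]) -> None:
        """Record the current per-VM CPU load reported by one host."""
        self.hosts[host] = dict(vm_loads)

    def load(self, host: str) -> float:
        return sum(self.hosts[host].values())

    def recommend(self) -> Optional[tuple[str, str, str]]:
        """Suggest (vm, from_host, to_host) if the pool is out of balance."""
        if len(self.hosts) < 2:
            return None
        busiest = max(self.hosts, key=self.load)
        idlest = min(self.hosts, key=self.load)
        gap = self.load(busiest) - self.load(idlest)
        if gap <= self.max_gap:
            return None
        # Only VMs whose load is below the gap actually reduce the imbalance.
        candidates = [(vm, l) for vm, l in self.hosts[busiest].items() if l < gap]
        if not candidates:
            return None
        # Pick the VM that comes closest to halving the gap.
        vm, _ = min(candidates, key=lambda c: abs(c[1] - gap / 2))
        return vm, busiest, idlest


balancer = PoolBalancer("pool-a")
balancer.report("xs1", {"vm1": 0.5, "vm2": 0.3, "vm3": 0.1})
balancer.report("xs2", {"vm4": 0.2})
print(balancer.recommend())  # ('vm2', 'xs1', 'xs2')
```

Because each pool has its own instance, a failure in one balancer or in the console affects only that pool's recommendations, which mirrors the dedicated-appliance-per-pool design described above.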

Both VMware and Citrix have reworked their switch technology to improve data traffic control. VMware optimizes its distributed switch via a Switched Port Analyzer (SPAN, a term they obviously gleaned from Cisco) and the Link Layer Discovery Protocol (LLDP), which should be useful for both troubleshooting and monitoring. The Linux bridging that Citrix used up to version 5.6 has been replaced by Open vSwitch to improve NIC bonding (combining two network interface cards into one logical device with a shared MAC address).

Management Centers

All major virtualization landscapes require a centralized monitoring and management platform. Both vCenter and XenCenter offer the ability to manage multiple ESX or XenServer systems along with their virtual machines.

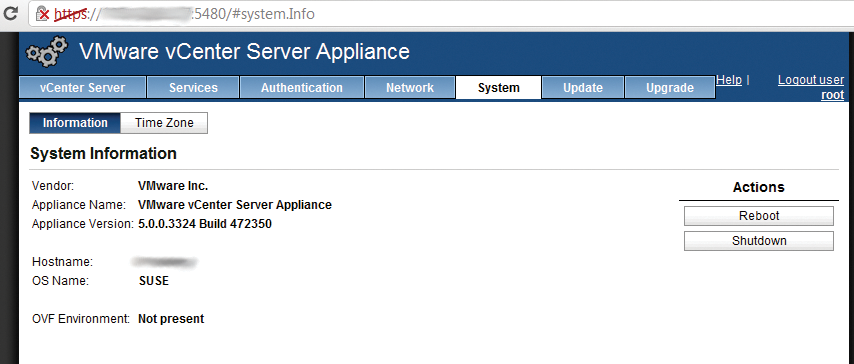

In all versions before vSphere 5, VMware required a Windows operating system for the management platform. vSphere 5 introduces an alternative: vCenter as a virtual appliance based on the 64-bit version of SLES 11 SP1 (Figure 3).

Just a few clicks are all it takes to add systems to a vSphere environment. Within minutes, the system is up and running, and the administrator can manage ESX(i) and the virtual machines. A number of indicators point toward VMware favoring the vCenter solution with a Linux appliance in the future, and the vendor might even discontinue the Windows variant. This is not the case with XenServer 6, which still needs a Windows operating system.

Administrators still need a Windows client to log in to either solution. VMware has apparently recognized this weakness and upgraded its web client accordingly. The new client can switch ESX(i) servers to maintenance mode and handle drag-and-drop migrations with vMotion. This makes the web client useful for administration in cloud environments, where customers manage their own virtual systems via the web.

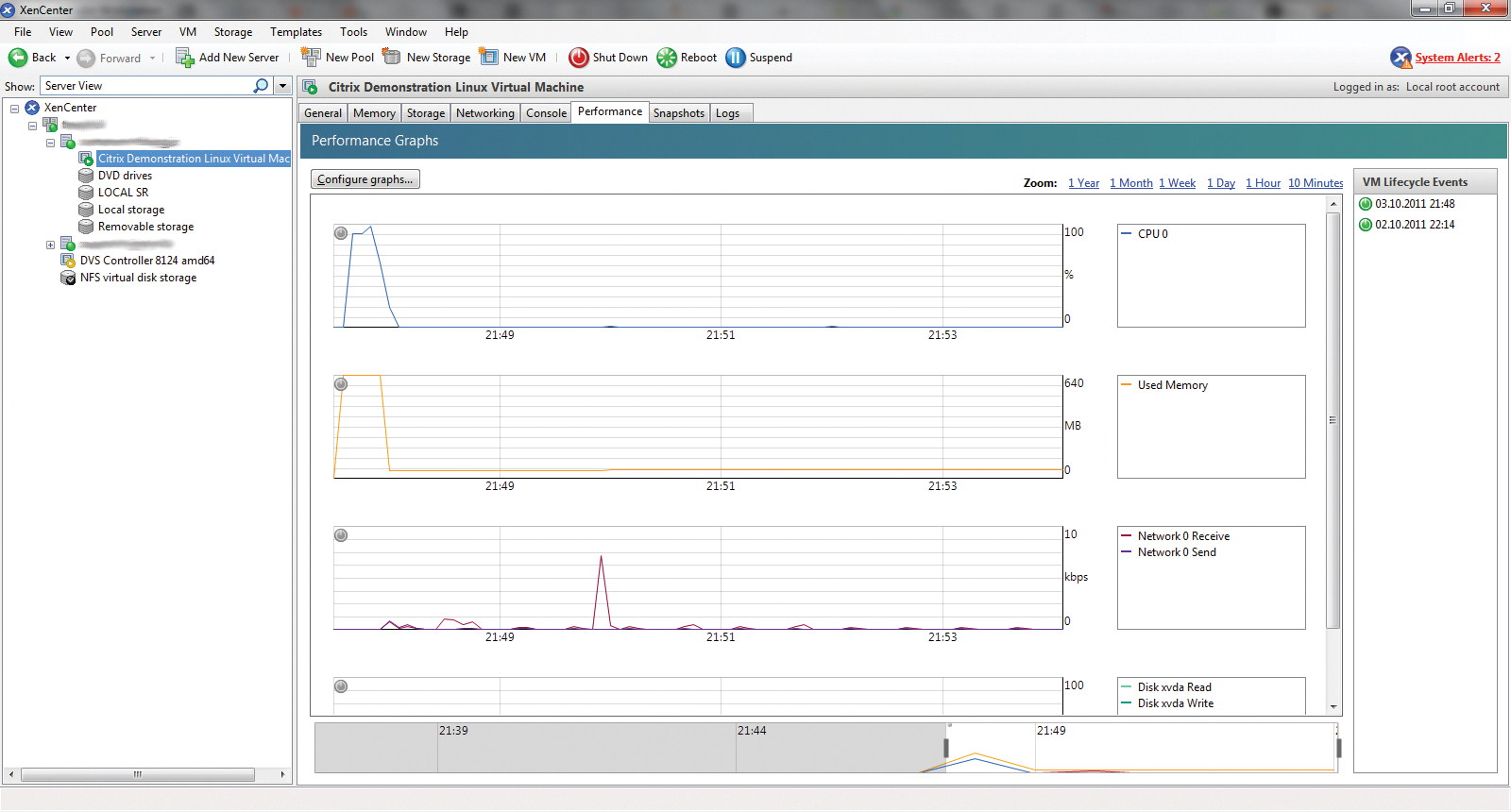

Monitoring

Both XenCenter and vCenter let the administrator monitor resource usage (e.g., overall CPU load, RAM, network, and hard disk). If you want to know what resources your systems have needed recently (for example, to plan for future growth and updates), the graphical performance display gives you a good idea (Figure 4). It shows resource usage in real time or over periods of days, weeks, months, or years.
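Longer periods are shown at a coarser resolution. A minimal sketch of how raw samples can be averaged into the buckets behind a day, week, month, or year view follows; the bucket widths are assumed to mirror vCenter's default statistics intervals, and the function name and sample data are hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Bucket width in seconds per display period (assumed to mirror vCenter's
# default statistics intervals: 5 min, 30 min, 2 h, 1 day).
ROLLUP_SECONDS = {"day": 300, "week": 1800, "month": 7200, "year": 86400}


def roll_up(samples: list[tuple[int, float]], period: str) -> list[tuple[int, float]]:
    """Average raw (timestamp, value) samples into fixed-width buckets."""
    width = ROLLUP_SECONDS[period]
    buckets = defaultdict(list)
    for ts, value in samples:
        buckets[ts - ts % width].append(value)  # align to bucket start
    return sorted((start, mean(values)) for start, values in buckets.items())


cpu = [(0, 10.0), (20, 20.0), (300, 30.0), (320, 50.0)]
print(roll_up(cpu, "day"))  # [(0, 15.0), (300, 40.0)]
```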

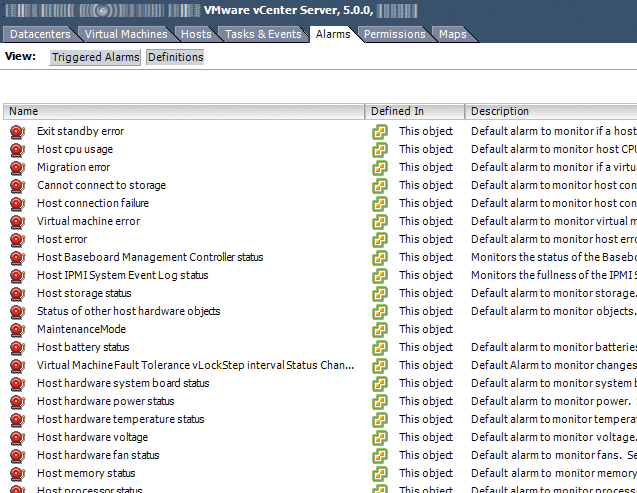

VMware vCenter has a number of predefined alarms (Figure 5). The system can alert the administrator via an SNMP trap – or by email in the case of ESX(i) Server failure – so the administrator can resolve the issue as quickly as possible. Additionally, vSphere logs events and manual tasks, which is extremely useful for system administrators faced with inexplicable system behavior.
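Such alarms roughly follow a common pattern: A monitored value is compared against a warning and an alert threshold, and a change in status triggers actions such as an SNMP trap or an email. A minimal sketch of that pattern follows; the `Alarm` class, the metric name, the thresholds, and the action strings are hypothetical, and no real SNMP trap or mail is sent.

```python
from dataclasses import dataclass
from enum import IntEnum


class Status(IntEnum):
    NORMAL = 0   # green
    WARNING = 1  # yellow
    ALERT = 2    # red


@dataclass(frozen=True)
class Alarm:
    metric: str
    warning: float  # threshold for WARNING
    alert: float    # threshold for ALERT

    def evaluate(self, value: float) -> Status:
        if value >= self.alert:
            return Status.ALERT
        if value >= self.warning:
            return Status.WARNING
        return Status.NORMAL


def actions_on_change(alarm: Alarm, previous: Status, value: float) -> list[str]:
    """Return placeholder notification actions, but only if the status got worse."""
    current = alarm.evaluate(value)
    if current <= previous:
        return []  # unchanged or improving: no notification
    return [f"snmp-trap:{alarm.metric}:{current.name}",
            f"email:{alarm.metric}:{current.name}"]


cpu_alarm = Alarm("host.cpu.usage", warning=75.0, alert=90.0)
print(actions_on_change(cpu_alarm, Status.NORMAL, 95.0))
```

Notifying only when the status escalates avoids a flood of repeated traps while a condition persists.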

One thing spoils the fun with VMware monitoring: Whereas the ESX platform allowed administrators to install RPM packages on the host system to set up the hardware vendors' monitoring agents, ESXi Server no longer allows this without vCenter.

Retrospectively modifying the ESXi firmware is now only possible with VMware Update Manager, which uses vCenter to distribute extensions to the ESXi hosts. The administrator then installs a virtual appliance that handles monitoring tasks, such as reading the hardware sensors.

If a hard disk in a RAID array fails, the server vendor's appliance can detect the failure and send an SNMP trap or an email to the admin. Custom alarms from the hardware vendor supplement the alerts predefined by VMware. Some server vendors offer a customized version of XenServer that lets the administrator install XenServer and the agents in one fell swoop before integrating them with the monitoring software.

Judging the Competition

VMware and Citrix have certainly added some interesting features to their products, and customers willing to pay the price will benefit from them. With vSphere 5, however, it is increasingly fair to ask whether a single back-end management server is still state of the art, given that more and more dependencies are accumulating around vCenter.

Citrix's Clustered Management Layer approach is more promising: It provides many functions, including workload balancing and the vSwitch controller, in the form of virtual appliances. vSphere 5 is starting to address its weak spot with the introduction of the vCenter Server Linux Virtual Appliance (vCSA) [15].

At the same time, both of these system management solutions are still fairly reticent in terms of their Linux strategy, with many functions only usable with the vSphere or XenCenter client for Windows. And only Red Hat knows what the imminent RHEV 3.0 will look like and whether a third king, or just a pawn, has entered the game.