Archiving email and documents for small businesses

Long-Term Storage

In many countries, business owners are required by law to archive email and electronic documents containing business content (see "Private Email Use in the Enterprise"). But even without legal constraints, orderly archiving of email and electronic documents can help reconstruct the facts of a business process without too much trouble, even years later.

File Format Requirements

Email often contains attachments created with various office applications, which can cause problems with long-term storage. The file formats these programs use are constantly changing.

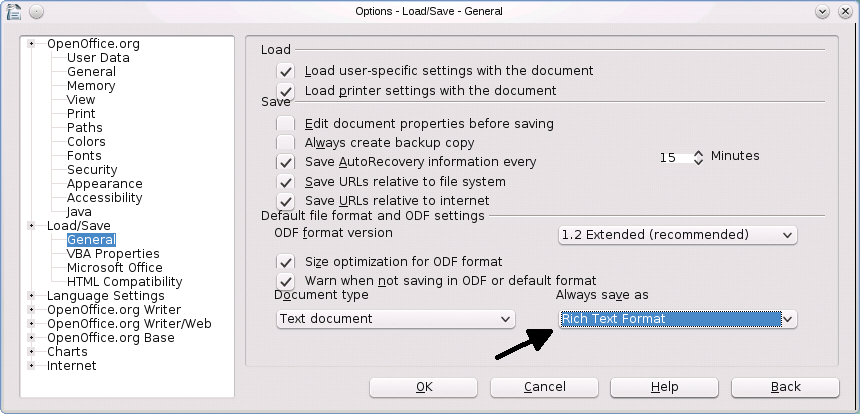

The information must be stored in a format that will remain readable for a long time. Plaintext offers the biggest benefits: Text files are easy to search, to store in databases, and to convert to a different character set. Using Rich Text Format (RTF) as the storage format for office documents preserves most of the formatting while retaining the benefits of a plaintext file. In OpenOffice/LibreOffice, you can go to Tools | Options | Load/Save | General and select Rich Text Format as the default format in the Always save as field (Figure 1).

PDF files can be searched with the use of standard tools such as pdfgrep or pdftotext, but these tools will not work for every document because users can choose to prevent searching when they create documents. Files created using the print function or the CUPS PDF printer also cannot be searched using these standard tools because what looks like text is actually graphical material.
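Whether a given PDF is searchable can be checked before it goes into the archive. The following is a minimal sketch, assuming pdftotext from poppler-utils is installed; the function names are made up for this example. It treats a PDF as searchable if pdftotext extracts at least one non-whitespace character.

```shell
#!/bin/sh
# Count the non-whitespace characters arriving on standard input.
text_char_count() {
    tr -d '[:space:]' | wc -c | tr -d ' '
}

# Succeed if the PDF yields any text at all.
# Assumption: pdftotext is available; "-" sends the extracted text to stdout.
# Image-only PDFs typically yield nothing but form feeds, which count as
# whitespace here.
pdf_has_text() {
    chars=$(pdftotext "$1" - 2>/dev/null | text_char_count)
    [ "${chars:-0}" -gt 0 ]
}
```

A call such as `pdf_has_text scan.pdf || echo "needs OCR or manual search keys"` could then flag documents that need further treatment before archiving.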

Capture Point for Email

Enterprise-grade email archiving software grabs incoming and outgoing mail directly at the company's internal mail server, where a copy of every message is created and archived. A small business typically lacks the infrastructure for this because its email accounts are hosted by an external provider.

The email client needs to use the Maildir storage format to ensure a single file for each piece of email. One of the following methods can be used for archiving email in small businesses for one or multiple users:

- The content of the inbox and outbox is regularly copied to the archive at the local email client. This also works for multiple users.

- The mail to be archived is forwarded by the local email client to an archive account. This method requires cooperation on the part of the users.

- Incoming and outgoing email that is no longer needed for active correspondence is manually copied to an archive directory.
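The first method, regularly copying the mail folders to the archive, could be sketched roughly as follows. The function name and both paths are assumptions for illustration. The sketch relies on the Maildir convention that the part of a file name after `:2,` holds only status flags, so it archives each message under the name without flags and does not copy it again when the flags change.

```shell
#!/bin/sh
# Copy every message from a Maildir's cur/ directory into the archive,
# skipping messages that were already copied on an earlier run.
# Both arguments are hypothetical paths chosen for this sketch.
copy_maildir() {
    src=$1
    dst=$2
    mkdir -p "$dst" || return 1
    for msg in "$src"/cur/*; do
        [ -f "$msg" ] || continue      # empty folder: the glob stays literal
        name=$(basename "$msg")
        base=${name%%:2,*}             # drop the Maildir flag suffix
        [ -e "$dst/$base" ] || cp -p "$msg" "$dst/$base"
    done
}
```

Run from cron for each user, for example as `copy_maildir "$HOME/Maildir" /srv/mailarchive/incoming`, this keeps the archive current without requiring any action from the users.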

Storing Email and Documents

The archive directories are located on the system data partition or disk, and the content of these directories is backed up regularly. Additional copies of the archive to external media will allow for evaluation of the data on a different system some time later.

Two methods of storing data include the following:

- Storing all email in an SQL database. Because MIME-encoded messages are plain text, they can be stored in text fields rather than BLOB fields, which improves the speed of the database. External backups typically require a dump, and you need to watch out for RDBMS version changes, which can cause extra work.

- Storing all email and documents in a directory that can be searched using suitable tools. With plain files, there is little risk of problems when performing a search even years later.

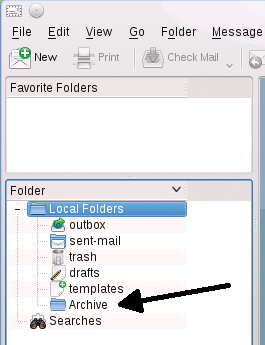

To store email and documents, you will need to create an Archive folder in the email client. This is a link to a directory to which multiple users have access (Figure 2).

Sample Script split.sh

To allow searching of email with attachments, the Base64-encoded attachments need to be decoded and stored as individual files. Integrating ripmime [1] into a shell script automates this process.

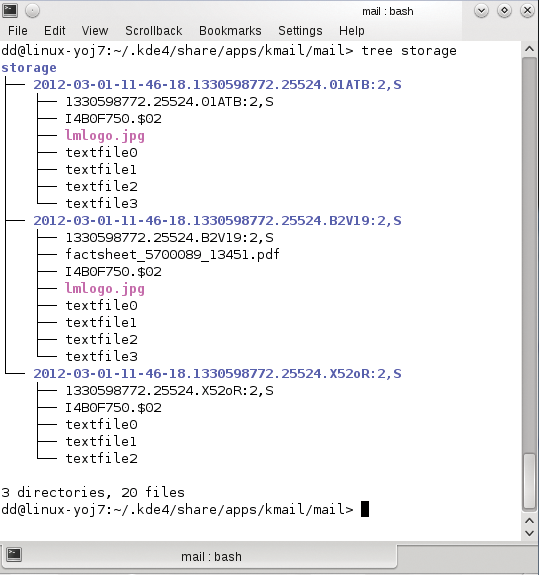

The split.sh script (Listing 1) moves the email messages out of the Archive directory into the storage directory, after which they are no longer accessible to the users. Each email is assigned a separate subdirectory for storage. This prevents accidental overwriting of attachments with the same name, and you can see which attachment belongs to which piece of mail.

Listing 1: split.sh

    #!/bin/sh
    for f in archive/cur/*
    do
      [ -f "$f" ] || continue
      i=$(basename "$f")

      # Define name component for the individual mail directory
      pref=$(date +%Y-%m-%d-%H-%M-%S)

      # Push mail to storage
      mv "archive/cur/$i" storage

      # Create storage directory for email
      mkdir "storage/$pref.$i"

      # Extract attachments from mail
      ripmime -i "storage/$i" -d "storage/$pref.$i"

      # Push original mail into storage directory
      mv "storage/$i" "storage/$pref.$i"
    done

The directory name also contains the date and time at which the directory was created (that is, when the message was archived), which is useful if you are viewing the data manually and need the messages archived within a certain period. The script only shows the basic principle and still needs to be refined.
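One possible refinement of Listing 1 is sketched below. It is not a finished tool: The function names and the RIPMIME variable are assumptions introduced here. The sketch creates the message directory first, moves the mail straight into it, and reports messages that could not be processed instead of silently carrying on.

```shell
#!/bin/sh
# Extractor command; defaults to ripmime as used in Listing 1.
# (The RIPMIME variable is an assumption that makes the tool replaceable.)
RIPMIME=${RIPMIME:-ripmime}

# Move one message into its own timestamped storage subdirectory and
# unpack its attachments next to it. Prints the new directory name.
archive_mail() {
    mail=$1
    storage=$2
    name=$(basename "$mail")
    dir="$storage/$(date +%Y-%m-%d-%H-%M-%S).$name"
    mkdir -p "$dir" || return 1
    mv "$mail" "$dir/" || return 1
    "$RIPMIME" -i "$dir/$name" -d "$dir" || return 1
    printf '%s\n' "$dir"
}

# Process every message in the archive folder. A glob copes with
# unusual file names better than parsing the output of ls.
split_archive() {
    for mail in "$1"/cur/*; do
        [ -f "$mail" ] || continue
        archive_mail "$mail" "$2" >/dev/null || echo "failed: $mail" >&2
    done
}
```

With the directory layout from Listing 1, the call would be `split_archive archive storage`.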

I applied the sample script in split.sh to three different email messages, one of which had an attachment. Figure 3 shows the directory structure in the storage directory after running the script.

Surface Mail in the Electronic Archive

Written correspondence and other documents can also be stored in the electronic archive. You need to scan the documents, store them in PDF format, and tag them with search keys. The search keys are entered manually. You can use automated optical character recognition (OCR), but this will require some manual revision. In a production environment, the search terms are added in dialogs with fixed terms (incoming, customer ID, etc.) and free text.

The Archive.sh script (Listing 2) shows the basic workflow. To begin, gscan2pdf [2] scans the document. The program also lets you integrate OCR, in this case, gocr [3]. You can edit the scan results with an editor and store the results in the clipboard. After saving and quitting gscan2pdf, a text editor is launched. You can then insert the clipboard content and add the search keys (customer ID, date, complaint, return number, etc.). The shell script uses pdflatex [4] to convert the content into a searchable PDF document, then pdftk [5] merges this with the scanned PDF document. After viewing the results, you push the PDF file into the archive transfer directory, where it is picked up and moved to the target directory.

Listing 2: Archive.sh

    #!/bin/sh

    cd /home/incominggoods/archive || exit 1

    # Scan
    gscan2pdf

    # Create placeholder text for editor
    echo "Overwrite this and enter search keys!" > searchkeys.tex

    # Edit search keys
    gedit searchkeys.tex

    # Create LaTeX document
    printf '%s\n' \
      '\documentclass[10pt,a4paper]{article}' \
      '\usepackage[utf8]{inputenc}' \
      '\usepackage{ngerman}' \
      '\usepackage[official,right]{eurosym}' \
      '\begin{document}' > att1.tex
    printf '%s\n' '\end{document}' > att3.tex

    # Merge LaTeX file components
    cat att1.tex searchkeys.tex att3.tex > attach.tex
    pdflatex attach.tex

    # Generate filename from date and process ID
    dn=$(date +%Y-%m-%d-%H-%M-%S.$$)

    # Merge PDF files (copy.pdf is the scan, assumed to be saved
    # under this name in gscan2pdf)
    pdftk A=copy.pdf B=attach.pdf cat A B output "archive$dn.pdf"

    # Check the results by displaying the PDF file
    evince "archive$dn.pdf"
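The final step of the workflow, picking up checked PDF files from the archive transfer directory and moving them to the target directory, is not part of Listing 2. A minimal sketch of such a pickup job might look like this; the function name and the directory arguments are assumptions.

```shell
#!/bin/sh
# Move finished PDFs from the transfer directory to the target directory.
# Files that already exist in the target are never overwritten.
collect_transfers() {
    transfer=$1
    target=$2
    mkdir -p "$target" || return 1
    for pdf in "$transfer"/*.pdf; do
        [ -f "$pdf" ] || continue      # nothing waiting: the glob stays literal
        dest="$target/$(basename "$pdf")"
        if [ -e "$dest" ]; then
            echo "skipped, already archived: $pdf" >&2
        else
            mv "$pdf" "$dest"
        fi
    done
}
```

Refusing to overwrite is a deliberate choice for an archive: A name collision is reported and left for a person to resolve.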

Accessing Archived Data

Access to the archived data is provided by a web server that users reach on the local network. The service must be protected against unauthorized access and reading. Potential approaches include encrypting communication and restricting the web server to localhost. In the latter case, users work on the archive machine itself, either at the console or through SSH, NoMachine, or RDP on the local network.

Namazu

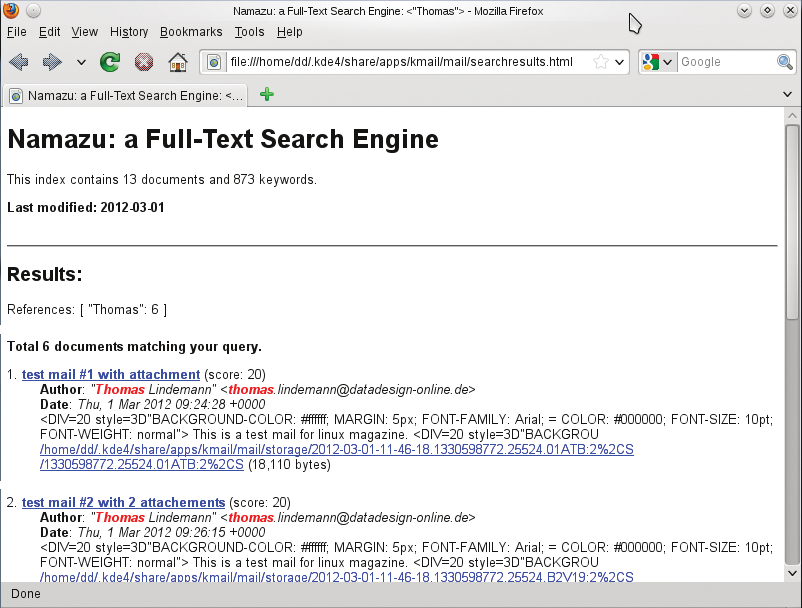

Namazu [6] is a full-text search engine that lets you search the archive. The program works together with web servers. The package includes mknmz, which creates the index, and namazu, which queries the index to find the desired documents.

On Debian, the configuration files are located under /etc/namazu:

- mknmzrc: Defines the maximum file size and the maximum amount of text up to which indexing is performed:

      $FILE_SIZE_MAX = 900000000;
      $TEXT_SIZE_MAX = 900000000;

  These values are based on experience. Other configuration options relate to weighting the results, supported and non-supported file types, directory locations, and display options.

- namazurc: Enter at least the Index, Template, and Lang details here.

The project website has an exhaustive and very readable guide. The index is built after issuing the mknmz command. The program can also handle MIME-encoded mail with the --decode-base64 option. The sample shell script indexbuilder.sh

    #!/bin/sh
    mknmz -a storage/* -O namazu/index

uses the -a option, which makes mknmz index all files regardless of type. The storage location for the index is specified by the -O option.

In this shell script, mknmz outputs a message for each document. At the end of the run, a statistic is shown. When new documents are added, information for the documents is added to the existing index. The index is not normally rebuilt.
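Because new documents are added to the existing index, the indexer only needs to run when something has changed. The following sketch wraps the mknmz call from indexbuilder.sh accordingly. The function name, the MKNMZ variable, and the `.last-indexed` stamp file are assumptions made for this example and are not part of Namazu.

```shell
#!/bin/sh
# Indexer command; defaults to mknmz as in indexbuilder.sh.
MKNMZ=${MKNMZ:-mknmz}

# Run the indexer only if storage holds files newer than the stamp file
# left behind by the previous run (.last-indexed is a name made up here).
update_index() {
    storage=$1
    index=$2
    stamp="$index/.last-indexed"
    mkdir -p "$index" || return 1
    if [ -e "$stamp" ] &&
       [ -z "$(find "$storage" -type f -newer "$stamp" | head -n 1)" ]; then
        echo "index is up to date"
        return 0
    fi
    # Create the new stamp before indexing so that files arriving during
    # the run are picked up next time.
    touch "$stamp.new"
    "$MKNMZ" -a -O "$index" "$storage" && mv "$stamp.new" "$stamp"
}
```

Called as `update_index storage namazu/index`, for example from cron, this avoids needless indexer runs on an archive that changes only occasionally.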

The search.sh script (Listing 3) shows a minimalist query function. The call to namazu points to the configuration file (-f). The output is limited to 500 matches (-n) and is returned in HTML format (-h). See Figure 4 for the results.

Listing 3: search.sh

    #!/bin/sh
    while true
    do
      clear

      # Search key
      printf 'Enter search key: '
      read -r sube

      # Query (the search key is passed in double quotes as a phrase)
      namazu -f /etc/namazu/namazurc -n 500 -h "\"$sube\"" > searchresults.html

      # Display
      iceweasel searchresults.html &

      # Stop or continue
      printf '<<<<<<<<<< Press Ctrl C to stop >>>>>>>>>>>>>>>>'
      read -r wn
    done

Alternatives

Instead of using Namazu, you can search text files (RTF format) with grep and PDF files with pdfgrep [7]. The pdftotext tool extracts text from PDF files, and you can then use the resulting text file in a database application.
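A search without Namazu could be sketched as follows. The function name is an assumption, and the sketch assumes that the installed pdfgrep supports the -r, -i, and -l options. Plain-text files are searched with grep; PDF files are searched with pdfgrep only if it is installed.

```shell
#!/bin/sh
# List archived files that contain the search term, ignoring case.
# Text formats (RTF, mail) go through grep; PDFs go through pdfgrep
# when it is installed.
search_archive() {
    term=$1
    dir=$2
    # -F treats the term as a fixed string rather than a regular expression.
    find "$dir" -type f ! -name '*.pdf' -exec grep -i -l -F -- "$term" {} +
    if command -v pdfgrep >/dev/null 2>&1; then
        pdfgrep -r -i -l -- "$term" "$dir"
    fi
    return 0
}
```

For example, `search_archive "return 4711" storage` would print the path of every archived file that mentions that return number.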