Getting started with I/O profiling

Profiles in Storage

Storage is one of the largest issues – if not the largest issue – in high-performance computing (HPC). All aspects of HPC storage are critical to the overall success or productivity of systems: high performance, high reliability, access protocols, scalability, ease of management, price, power, and so on. These aspects, and, perhaps more importantly, combinations of these aspects, are key drivers in HPC systems and performance.

With so many options and so many key aspects to HPC storage, a logical question you might ask is: Where should I start? Will a NAS (Network Attached Storage) solution work for my system? Do I need a high-performance parallel filesystem? Should I use a SAN (Storage Area Network) as a back end for my storage, or can I use less expensive DAS (Direct Attached Storage)? Should I use InfiniBand for the compute node storage traffic or will GigE or 10GigE be sufficient? Should I use 15,000rpm drives or 7,200rpm drives? Do I need SSDs (solid state drives)? Which filesystem should I use? Which I/O scheduler should I use within Linux? Should I tune my network interfaces? How can I take snapshots of the storage, and do I really need snapshots? How can I tell if my storage is performing well enough? How do I manage my storage? How do I monitor my storage? Do I need a backup or just a copy of the data? How can I monitor the state of my storage? Do I need quotas, and how do I enforce them? How can I scale my storage in terms of performance and capacity? Do I need a single namespace? How can I do a filesystem check, how long will it take, and do I really need one? Do I need cold spare drives or storage chassis? What RAID level do I need (file or object)? How many hot spares are appropriate? SATA versus SAS? And on and on.

When designing or selecting HPC storage, these are some of many questions to consider, but you might notice one item I left out of this laundry list: I did not discuss applications.

Designing HPC storage, just as with designing the compute nodes and network layout, starts with the applications. Although designing hardware and software for storage is very important, without considering the I/O needs of your application mix, the entire process is just an exercise with no real value. You are designing a solution without understanding the problem. So, the first thing you really should consider is the I/O pattern and I/O needs of your applications.

Some people might think that understanding application I/O patterns is a futile effort because, with thousands of applications, they think it's impossible to understand the I/O pattern of them all. However, it is possible to rank applications, beginning with the few that use the most compute time or seemingly use a great deal of I/O. Then, you can begin to examine their I/O patterns.

Tools to Help Determine I/O Usage

You have several options for determining the I/O patterns of your applications. One obvious way is to run the application and monitor storage while it is running. Measuring I/O usage on your entire cluster might not always be the easiest thing to do, but some basic tools can help you. For example, the sysstat utilities sar, iostat, and nfsiostat [1]; iotop [2]; and collectl [3] can be used to help you measure your I/O usage. (This list of possible tools is by no means exhaustive.)

iotop

With iotop, you can get quite a few I/O stats for a particular system. It runs on a single system, such as a storage server or a compute node, and measures the I/O on that system for all processes, but on a per-process basis, allowing you to watch particular processes (such as an HPC application).

One way to use this tool is to run it on all of the compute nodes that are running a particular application, perhaps as part of a job script. When the MPI job runs, you will get an output file for each node, but before collecting them together, be sure you use a unique name for each node. Once you have gathered all of the output files, you should sort the data to get only the information for the MPI application. Finally, you can take the data for each MPI process and create a time history of the I/O usage of the application and perhaps find which process is creating the most I/O.

Depending on the storage solution you are using, you might be able to use iotop to measure I/O usage on the data servers. For example, if you are using NFS on a single server, you could run iotop on that server and measure I/O usage for the nfsd processes.

However, using iotop to measure I/O patterns really only gives you an overall picture without a great deal of detail. For example, it is probably impossible to determine whether the application is doing I/O to a central storage server or to the local node. It is impossible to use iotop to determine how the application is doing the I/O. Moreover, you really only get an idea of the throughput and not the IOPS that the application is generating.

iostat

Iostat allows you to collect quite a few I/O statistics, as well, and even allows you to specify a particular device on the node, but it does not separate out I/O usage on a per-process basis (i.e., you get an aggregate view of all I/O usage on a particular compute node). However, iostat gives you a much larger array of statistics than iotop. In general, you get two types of reports with iostat. (You can use options to get one report or the other, but the default is both types of reports.) The first report has CPU usage, and the second report shows device utilization. The CPU report contains the following information:

- %user: Percentage of CPU utilization that occurred while executing at the user level (this is application usage).

- %nice: Percentage of CPU utilization that occurred while executing at the user level with nice priority.

- %system: Percentage of CPU utilization that occurred while executing at the system level (kernel).

- %iowait: Percentage of time the CPU or CPUs were idle, during which the system had an outstanding disk I/O request.

- %steal: Percentage of time spent in involuntary wait by the virtual CPU or CPUs while the hypervisor was servicing another virtual processor.

- %idle: Percentage of time the CPU or CPUs were idle and the systems did not have an outstanding disk I/O request.

The values are computed as system-wide averages for all processors when your system has more than one core (which is pretty much everything today).

The second report prints out all kinds of details about device utilization (physical device or a partition). If you don't use a device on the command line, then iostat will print out values for all devices (alternatively, you can use ALL as the device). Typically the report output includes the following:

- Device: Device name.

- rrqm/s: Number of read requests merged per second that were queued to the device.

- wrqm/s: Number of write requests merged per second that were queued to the device.

- r/s: Number of read requests issued to the device per second.

- w/s: Number of write requests issued to the device per second.

- rMB/s: Number of megabytes read from the device per second.

- wMB/s: Number of megabytes written to the device per second.

- avgrq-sz: Average size (in sectors) of the requests issued to the device.

- avgqu-sz: Average queue length of the requests issued to the device.

- await: Average time (milliseconds) for I/O requests issued to the device to be served. This includes the time spent by the requests in a queue and the time spent servicing them.

- svctm: Average service time (milliseconds) for I/O requests issued to the device.

- %util: Percentage of CPU time during which I/O requests were issued to the device (bandwidth utilization for the device). Device saturation occurs when this value is close to 100%.

These fields are specific to the options used, as well as to the device.
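
The request rates and the average request size in this report are related to throughput by simple arithmetic, which makes it easy to tell whether a device is busy with many small requests or a few large ones. The following is a minimal sketch of that relationship, assuming that iostat counts sectors in 512-byte units; the function names and the sample values are made up for illustration and do not come from a real run.

```python
SECTOR_BYTES = 512  # assumption: iostat reports sectors as 512-byte units


def avg_request_kb(avgrq_sz_sectors):
    """Convert an avgrq-sz value (in sectors) to kilobytes per request."""
    return avgrq_sz_sectors * SECTOR_BYTES / 1024.0


def throughput_mb_s(r_per_s, w_per_s, avgrq_sz_sectors):
    """Estimate combined read+write MB/s from r/s, w/s, and avgrq-sz."""
    iops = r_per_s + w_per_s
    return iops * avgrq_sz_sectors * SECTOR_BYTES / (1024.0 * 1024.0)


# Hypothetical sample: 100 reads/s, 400 writes/s, 16-sector average request
print(avg_request_kb(16.0))                  # 8.0 KB per request
print(throughput_mb_s(100.0, 400.0, 16.0))   # 3.90625 MB/s
```

In this made-up sample, 500 requests per second produce less than 4MB/s, which is the signature of an IOPS-bound rather than a throughput-bound workload.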

Relative to iotop, iostat gives more detail on the actual I/O, but it does so in an aggregate manner (i.e., not a per-process basis). Perhaps more importantly, iostat reports on a device basis, not a mountpoint basis. Because the HPC application is likely writing to a central storage server mounted on the compute node, it is not very likely that the application is writing to a device on each node (however, you can capture local I/O using iostat), so the most likely use of iostat is on the storage server or servers.

nfsiostat

A tool called nfsiostat, which is very similar to iostat, is part of the sysstat collection, but it lets you monitor NFS-mounted filesystems, so this tool can be used for MPI applications that are using NFS as a central filesystem. It produces the same information as iostat (see previous). In the case of HPC applications, you would run nfsiostat on each compute node, collect the information, and process it in much the way you did with iotop.

sar

Sar is one of the most common tools for gathering information about system performance. Many admins commonly use sar to gather information about CPU utilization and I/O and network information on systems. I won't go into detail about using sar because there are so many articles around the web about it, but you can use it to examine the I/O pattern of HPC applications at a higher level.

As with iotop and nfsiostat, you would run sar on each compute node and gather the statistical I/O information, or you could just let it gather all information and sort out the I/O statistics. Then, you could gather all of that information together and create a time history of overall application I/O usage.

collectl

Collectl is sort of an uber-tool for collecting performance statistics on a system. It is oriented more for HPC than sar, iotop, iostat, or nfsiostat, because it has hooks to monitor NFS and Lustre. However, it also requires that you run it on all nodes to get an understanding of what I/O load is imposed throughout the cluster.

As with sar, you run collectl on each compute node and gather the I/O information for that node. However, it allows you to gather information about processes and threads, so you can capture a bit more information than with sar. Then, you have to gather all of the information for each MPI process or compute node and create a time history of the I/O pattern of the application.

A similar tool called collectd [4] is run as a daemon to collect performance statistics much like collectl.

These tools can help you understand what is happening on individual systems, but you have to gather the information on each compute node or for each MPI process and create your own time history or statistical analysis of the I/O usage pattern. Moreover, they don't do a good job, if at all, of watching IOPS usage on systems, and IOPS can be a very important requirement for HPC systems. Other tools can help you understand more detailed I/O usage, but at a block level, allowing you to capture more information, such as IOPS.
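
Building your own time history from per-node data mostly comes down to aligning samples in time and summing across nodes. The sketch below shows one way to do that. It assumes you have already parsed each node's tool output into (timestamp, KB/s) pairs, that the node clocks are synchronized, and that each node contributes at most one sample per time bucket. The function name, the input layout, and the sample values are all hypothetical.

```python
from collections import defaultdict


def cluster_time_history(per_node_samples, bucket_seconds=1):
    """Sum per-node throughput samples into one cluster-wide time history.

    per_node_samples maps a node name to a list of (epoch_seconds, kb_per_s).
    Returns a list of (bucket_start, total_kb_per_s) sorted by time.
    """
    totals = defaultdict(float)
    for node, samples in per_node_samples.items():
        for ts, kb_per_s in samples:
            # Align each sample to the start of its time bucket
            bucket = int(ts // bucket_seconds) * bucket_seconds
            totals[bucket] += kb_per_s
    return sorted(totals.items())


# Hypothetical samples from two compute nodes
samples = {
    'node1': [(100.2, 500.0), (101.3, 0.0)],
    'node2': [(100.7, 250.0), (101.1, 125.0)],
}
print(cluster_time_history(samples))  # [(100, 750.0), (101, 125.0)]
```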

blktrace

Blktrace [5] can watch what is happening on specific block devices, so you can use this tool on storage servers to watch I/O usage. For example, if you are using a Linux NFS server, you could watch the block devices underlying the NFS filesystem, or if you are using Lustre [6], you could use blktrace to monitor block devices on the OSS nodes.

Blktrace can be a very useful tool because it also allows you to compute IOPS (I think it's only "Total IOPS"). Also, a tool called Seekwatcher [7] can be used to plot results from blktrace. An example on the blktrace website illustrates this.

Obviously, no single tool can give you all the information you need across a range of nodes. Perhaps a combination of iotop, iostat, nfsiostat, and collectl coupled with blktrace can give you a better picture of what your HPC storage is doing as a whole. Coordinating this data to generate a good picture of I/O patterns is not easy and will likely involve some coding. But, if you assume that you can create this picture of I/O usage, you have to correlate it with the job history from the job scheduler to help determine which applications are using more I/O than others.

However, these tools only tell you what is happening in aggregate, focusing primarily on throughput, although blktrace can give you some IOPS information. They can't really tell you what the application is doing in more detail, such as the order of reads and writes, the amount of data in each read or write function, and information on lseeks or other I/O functions. In essence, what is missing is the ability to look at I/O patterns from the application level. In the next section, I'll present a technique and application that can be used to help you understand the I/O pattern of your application.

Determining I/O Patterns

One of the keys to selecting HPC storage is to understand the I/O pattern of your application. This isn't an easy task to accomplish overall, and several attempts have been made over the years to understand I/O patterns. One method I employ is to use strace [8] (system trace) to profile the I/O pattern of an application.

Because virtually all I/O from an application will use system libraries, you can use strace to capture the I/O profile of an application. For example, the command

strace -T -ttt -o strace.out [application]

on an application doing I/O might output a line like this:

1269925389.847294 write( 17, " 37989 2136595 2136589 213" ..., 3850) = 3850 <0.000004>

This single line has several interesting parts. First, the amount of data written appears after the equals sign (in this case, 3,850 bytes). Second, the amount of time used to complete the operation is at the very end in the angle brackets (< >) (in this case, 0.000004 seconds). Third, the data sent to the function is listed inside the quotes and is abbreviated, so you don't see all of the data. This can be useful if you are examining an strace of an application that has sensitive data. The fourth piece of useful information is the first argument to the write() function, which contains the file descriptor (fd) to the specific file (in this case, it is fd 17). If you track the file associated with open() functions (and close() functions), you can tell on which file the I/O function is operating.
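
To make these pieces concrete, here is a minimal sketch of pulling the timestamp, function name, file descriptor, return value, and elapsed time out of one such line with a regular expression. It is not the parser used by strace_analyzer; the pattern and the `parse_line` name are assumptions for illustration, and the sketch handles only lines with a decimal return value and a trailing `<elapsed>` field.

```python
import re

# Matches lines written by `strace -T -ttt`, for example:
# 1269925389.847294 write( 17, "..." ..., 3850) = 3850 <0.000004>
LINE_RE = re.compile(
    r'^(?P<ts>\d+\.\d+)\s+'                    # epoch timestamp from -ttt
    r'(?P<call>\w+)\(\s*(?P<args>.*)\)\s+=\s+'  # function name and arguments
    r'(?P<ret>-?\d+)(?=\s)'                    # decimal return value
    r'.*?<(?P<elapsed>\d+\.\d+)>\s*$'          # elapsed time from -T
)


def parse_line(line):
    """Return a dict describing one strace line, or None if it doesn't match."""
    m = LINE_RE.match(line)
    if m is None:
        return None
    # The first argument is the file descriptor for read(), write(), lseek()
    first = m.group('args').split(',', 1)[0].strip()
    fd = int(first) if first.isdigit() else None
    return {
        'time': float(m.group('ts')),
        'call': m.group('call'),
        'fd': fd,
        'ret': int(m.group('ret')),
        'elapsed': float(m.group('elapsed')),
    }


sample = ('1269925389.847294 write( 17, " 37989 2136595 2136589 213" ..., '
          '3850) = 3850 <0.000004>')
record = parse_line(sample)
print(record['call'], record['fd'], record['ret'], record['elapsed'])
```

For the sample line, the record holds the function `write`, fd 17, and a return value of 3850 bytes.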

From this information, you can start to gather all kinds of statistics from the application. For example, you can count the number of read() or write() operations and how much data is in each I/O operation. This data can then be converted into throughput data in megabytes per second (or more). You can also count the number of I/O functions in a period of time to estimate the IOPS needed by the application. Additionally, you can do all of this on a per-file basis to find which files have more I/O operations than others. Then, you can perform a statistical analysis of this information, including histograms of the I/O functions. This information can be used as a good starting point for understanding the I/O pattern of your application.
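
As a rough illustration of these statistics, the following sketch computes total bytes, average throughput, peak IOPS, and per-file-descriptor byte counts from already-parsed records. The record layout, the `summarize` name, and the sample data are hypothetical, and counting operations in one-second wall-clock bins is only one plausible way to estimate peak IOPS, not necessarily the way strace_analyzer does it.

```python
from collections import Counter, defaultdict


def summarize(records):
    """records: iterable of (timestamp, call, fd, nbytes) tuples."""
    bytes_per_fd = defaultdict(int)
    ops_per_second = Counter()
    total_bytes = 0
    times = []
    for ts, call, fd, nbytes in records:
        # Only count successful read()/write() calls as data-moving I/O
        if call not in ('read', 'write') or nbytes < 0:
            continue
        bytes_per_fd[fd] += nbytes
        ops_per_second[int(ts)] += 1   # one-second bins for IOPS
        total_bytes += nbytes
        times.append(ts)
    if not times:
        return None
    span = max(times) - min(times)
    mb = total_bytes / (1024.0 * 1024.0)
    return {
        'total_bytes': total_bytes,
        'mb_per_s': mb / span if span > 0 else None,
        'peak_iops': max(ops_per_second.values()),
        'bytes_per_fd': dict(bytes_per_fd),
    }


# Hypothetical records, not taken from a real trace
records = [
    (100.10, 'write', 17, 4096),
    (100.20, 'write', 17, 4096),
    (100.90, 'read', 5, 1024),
    (101.50, 'write', 18, 8192),
    (102.10, 'lseek', 17, 0),
]
stats = summarize(records)
print(stats['total_bytes'], stats['peak_iops'])  # 17408 3
```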

However, if you run strace against your application, you are likely to end up with thousands, if not hundreds of thousands, of lines of output. To make things a bit easier, I have developed a simple program in Python that goes through the strace output and does this statistical analysis for you. The application, with the ingenious name "strace_analyzer" [9], scans the entire strace output and creates an HTML report of the I/O pattern of the application, including plots (using matplotlib [10]).

To give you an idea of what the HTML output from the strace_analyzer looks like, a snippet of the first part of the major output (without plots) is available online [11]. It is only the top portion of the report; the rest of the report contains plots – sometimes quite a few.

The HTML output from strace_analyzer contains plots, tables, and analyses of processes that give you all sorts of useful statistical information about the application running. One subtle thing to notice about using strace to analyze I/O patterns is that you are getting the strace output from a single application. Because I'm interested in HPC storage here, many of the applications will be MPI [12] applications, for which you get one strace output per MPI process.

Getting strace output for each MPI process isn't difficult and doesn't require that the application be changed. You can get a unique strace output file [13] for each MPI process, but again, you will get a great deal of output in each file. To help with this, strace_analyzer creates an output file (a "pickle" in Python-speak), and you can take the collection of these files for an entire MPI application and perform the same statistical analysis across the MPI processes. The tool, called "MPI strace Analyzer" [14], produces an HTML report across the MPI processes. A full report is available online [15] from an eight-core LS-DYNA [16] run that uses a simple central NFS storage system.
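
One possible way to get a unique output file per MPI process (not necessarily the method described in [13]) is to launch the application through a small wrapper that builds the strace output name from the hostname and process ID. The sketch below is an assumption-laden illustration: the script name, the output file naming scheme, and the helper name are all hypothetical, and only the strace options shown earlier (-T, -ttt, -o) are used.

```python
import os
import socket
import sys


def build_strace_command(app_argv, outdir='.', host=None, pid=None):
    """Build an strace command line with a per-process output file name."""
    host = host or socket.gethostname()
    pid = pid if pid is not None else os.getpid()
    # Hypothetical naming scheme: strace.<hostname>.<pid>.out
    outfile = os.path.join(outdir, 'strace.%s.%d.out' % (host, pid))
    return ['strace', '-T', '-ttt', '-o', outfile] + list(app_argv)


if __name__ == '__main__' and len(sys.argv) > 1:
    cmd = build_strace_command(sys.argv[1:])
    # Replace this wrapper process with strace, which then runs the application
    os.execvp(cmd[0], cmd)
```

If the wrapper were saved as strace_wrap.py (a made-up name), you would hand it to your MPI launcher in place of the application binary, with the application and its arguments following it. The exact launcher syntax depends on your MPI implementation.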

What Do I Do with This Information?

Once you have gathered all of this statistical information about your applications, what do you do with it? The answer is: You can do quite a bit. The first thing I always look for is how many processes in the MPI application actually do I/O, primarily write(). You might be surprised to find that many applications have only a single MPI process, typically the rank-0 process, doing all of the I/O for the entire application. In a second class of applications, a fixed number of MPI processes perform I/O, and this number is less than the total number of MPI processes. Finally, in a third class of applications, all, or virtually all, processes write to the same file at the same time (MPI-IO [17]). If you don't know whether your applications fall into one of these three classes, you can use mpi_strace_analyzer to help determine that.

Just knowing whether your application has a single process doing I/O or whether it uses MPI-IO is a huge step toward making an informed decision about HPC storage, because running MPI-IO codes on NFS storage is really not recommended, although it is possible. Instead, the general rule of thumb is to run MPI-IO codes on parallel distributed storage systems that have tuned MPI-IO implementations. (Please note that these are general rules and that it is possible to run MPI-IO codes on NFS storage and non-MPI-IO codes on parallel distributed storage.)

Other useful information obtained from examining the application I/O pattern, such as:

- Throughput requirements (read and write),

- IOPS requirements (write IOPS, read IOPS, total IOPS),

- Sizes of read() and write() function calls (i.e., the distribution of data sizes),

- Time spent doing I/O versus total run time, and

- lseek information

can be used to determine not only what kind of HPC storage you need, but also how much performance you need from the storage.

Is your application dominated by throughput performance or IOPS? What is the peak IOPS obtained from the strace output? How much time is spent doing I/O compared with total run time?

If you look at the specific example online, you can make the following observations from the HTML report:

- The MPI process associated with

file_18597spends the largest amount of time doing I/O. However, it is only 1.15% of the total run time. The best improvement on wall clock time I could ever make by adding more I/ O capability to the system is only 1.15%. - If you examine Table 1, which counts the number of times a specific I/O function is called (functions with all-zero output has been removed for space reasons), you can see that the MPI processes associated with

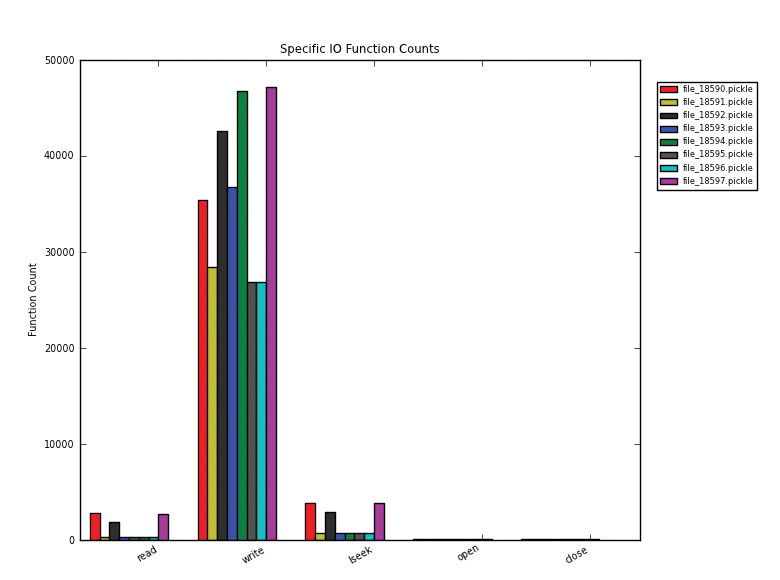

file_18597have the largest number oflseek(),write(),open(),fstat(), andstat()function calls of all of the processes. However, as can be seen in Figure 1, which plots the major I/O functions [read(),write(),lseek(),open(), andclose()], the MPI process associated withfile_18590(red) also does quite a bit of I/O.

Table 1: I/O Function Command Count

| Command | file_18590.pickle | file_18591.pickle | file_18592.pickle | file_18593.pickle | file_18594.pickle | file_18595.pickle | file_18596.pickle | file_18597.pickle |
|---|---|---|---|---|---|---|---|---|
|  | 3,848 | 766 | 2,946 | 762 | 739 | 738 | 757 | 3,883 |
|  | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 |
|  | 72 | 70 | 73 | 70 | 70 | 72 | 69 | 121 |
|  | 77 | 74 | 78 | 74 | 74 | 76 | 73 | 120 |
|  | 35,369 | 28,438 | 42,656 | 36,786 | 46,779 | 26,834 | 26,883 | 47,224 |
|  | 8 | 6 | 8 | 6 | 6 | 6 | 6 | 10 |
|  | 5 | 5 | 5 | 5 | 5 | 7 | 7 | 19 |
|  | 1,384 | 143 | 1,084 | 143 | 143 | 144 | 144 | 1,058 |
|  | 8 | 7 | 8 | 7 | 7 | 7 | 7 | 36 |
|  | 36 | 36 | 36 | 36 | 36 | 36 | 36 | 643 |
|  | 2,798 | 350 | 1,896 | 347 | 334 | 330 | 325 | 2,728 |
|  | 5 | 4 | 5 | 4 | 4 | 5 | 4 | 10 |
|  | 21 | 21 | 21 | 21 | 21 | 22 | 26 | 31 |
|  | 3,848 | 766 | 2,946 | 762 | 739 | 738 | 757 | 3,883 |

Table 2 from the MPI strace Analyzer report; functions with all-zero output have been removed for space reasons. The numbers in bold are maximum counts.

- To help resolve whether file_18590 or file_18597 (lavender) has the more dominant I/O process, examine Figure 2, which plots the total amount of data written by each function, with the average (± standard deviation) plotted as well. This figure shows that file_18597 did the majority of data writing for this application (1.75GB out of about 3GB, or almost 60 percent).

- In Table 2, you'll see that nearly all write() calls pass 8KB or less (all-zero rows >100MB have been removed), with the vast majority passing less than 1KB. This indicates that the application is doing a very large number of small writes (which could influence storage design).

Table 2: Write Function Calls for Each File as a Function of Size

| IO_Size_Range | file_18590.pickle | file_18591.pickle | file_18592.pickle | file_18593.pickle | file_18594.pickle | file_18595.pickle | file_18596.pickle | file_18597.pickle |
|---|---|---|---|---|---|---|---|---|
| 0KB < < 1KB | 30,070 | 23,099 | 37,496 | 31,502 | 42,044 | 22,318 | 22,440 | 40,013 |
| 1KB < < 8KB | 5,105 | 5,144 | 4,968 | 5,094 | 4,566 | 4,358 | 4,294 | 5,897 |
| 8KB < < 32KB | 14 | 14 | 14 | 15 | 14 | 14 | 14 | 49 |
| 32KB < < 128KB | 64 | 64 | 63 | 61 | 53 | 47 | 44 | 439 |
| 128KB < < 256KB | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 2 |
| 256KB < < 512KB | 2 | 2 | 2 | 3 | 2 | 2 | 2 | 3 |
| 512KB < < 1MB | 3 | 2 | 2 | 3 | 2 | 4 | 3 | 4 |
| 1MB < < 10MB | 87 | 87 | 87 | 84 | 74 | 67 | 62 | 787 |
| 10MB < < 100MB | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 |

Table 4 from the MPI strace Analyzer report; all-zero rows for sizes >100MB are not shown. The numbers in bold are maximum counts.
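
A size distribution like the one in Table 2 can be produced by dropping each write() size into a fixed set of ranges. The following sketch uses the same range edges as the table. How the real tool treats a size that falls exactly on a boundary is not stated here, so counting it in the upper range is an assumption, as are the function name, the labels, and the sample sizes.

```python
from bisect import bisect_right

KB = 1024
MB = 1024 * KB

# Upper edges of the size ranges used in Table 2, plus a catch-all bin
EDGES = [1 * KB, 8 * KB, 32 * KB, 128 * KB, 256 * KB, 512 * KB,
         1 * MB, 10 * MB, 100 * MB]
LABELS = ['0KB-1KB', '1KB-8KB', '8KB-32KB', '32KB-128KB', '128KB-256KB',
          '256KB-512KB', '512KB-1MB', '1MB-10MB', '10MB-100MB', '>100MB']


def size_histogram(sizes):
    """Count I/O sizes (in bytes) per range; boundary values go to the upper bin."""
    counts = [0] * len(LABELS)
    for nbytes in sizes:
        counts[bisect_right(EDGES, nbytes)] += 1
    return dict(zip(LABELS, counts))


# Hypothetical write sizes in bytes
hist = size_histogram([512, 1024, 3850, 3850, 2 * MB])
print(hist['0KB-1KB'], hist['1KB-8KB'], hist['1MB-10MB'])  # 1 3 1
```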

- The write() function statistics (see the "Write Statistics" box) report that the whole application only wrote a little more than 3GB of data, with an average data size of a little more than 10KB per write.

- The same thing can be done by analyzing the read() function output. The majority of data is read in the 1 to 10MB range. It turns out that a number of the read() function calls were the result of loading shared object (.so) files, which are read and loaded into memory.

- The last section of the report (see the "IOPS Statistics" box) shows that the Peak Write IOPS was about 2,444, which is fairly large considering that a typical 7,200rpm SAS or SATA drive can only handle about 100 IOPS. The Read IOPS number was low (153), and the Total IOPS number was high as well (2,444). You would think this application needs a fair amount of IOPS performance because of the very small writes being performed, and you might presume that you need about 25 7,200rpm SAS or SATA drives to meet the IOPS requirement of the application. However, don't forget that the best wall clock improvement possible for this application is 1.15 percent, so spending so much on drives will only improve the overall performance of the application by a small amount. It might be better to use that money to buy another compute node, if the application scales well, to improve performance.
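
The arithmetic behind the drive count and the limited payoff can be written out in a few lines. This sketch uses only the figures quoted above (a peak of 2,444 IOPS, roughly 100 IOPS per 7,200rpm drive, and 1.15 percent of run time spent in I/O); the function names are made up, and the speedup bound assumes the non-I/O part of the run is unaffected by faster storage.

```python
import math


def drives_needed(peak_iops, iops_per_drive):
    """Number of drives needed to sustain the peak IOPS."""
    return int(math.ceil(peak_iops / float(iops_per_drive)))


def max_speedup(io_fraction):
    """Upper bound on overall speedup if I/O time dropped to zero."""
    return 1.0 / (1.0 - io_fraction)


print(drives_needed(2444, 100))        # 25
print(round(max_speedup(0.0115), 4))   # 1.0116
```

Even with infinitely fast storage, the run would finish only about 1.2 percent sooner, which is why the drive purchase is hard to justify for this application.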

Summary

HPC storage is definitely a difficult problem for the industry right now. Designing systems to meet storage needs has become a headache that is difficult to cure. However, you can make this headache easier to manage if you understand the I/O patterns of your applications.

In this article, I talked about different ways to measure the performance of your current HPC storage system, your applications, or both, but this process requires a great deal of coordination to capture all of the relevant information and then piece it together. Some tools, such as iotop, iostat, nfsiostat, collectl, collectd, and blktrace, can be used to understand what is happening with your storage and your applications. However, they don't really give you the details of what is going on from the perspective of the application. Rather, these tools are all focused on what is happening on a particular server (compute node). For HPC, you would have to gather this information for all nodes and then coordinate it to understand what is happening at an application (MPI) level.

Using strace can give you more information from the perspective of the application, although it also requires you to gather all of this information on each node in the job run and coordinate it. To help with this process, two applications – strace_analyzer and mpi_strace_analyzer – have been written to sort through the mounds of strace data and produce some useful statistical information.