OpenStack workshop, part 1: Introduction to OpenStack

What OpenStack Really Wants

The end of September was marked by the popping of champagne corks down at the OpenStack project: Right on schedule, the project released a new version of its own cloud environment, OpenStack 2012.2 Folsom, adding many of the features that had been painstakingly developed and maintained over the preceding months. OpenStack is reliable when it comes to its self-imposed release cycle: Every six months – in October and April – a new version of the Python software sees the light of day.

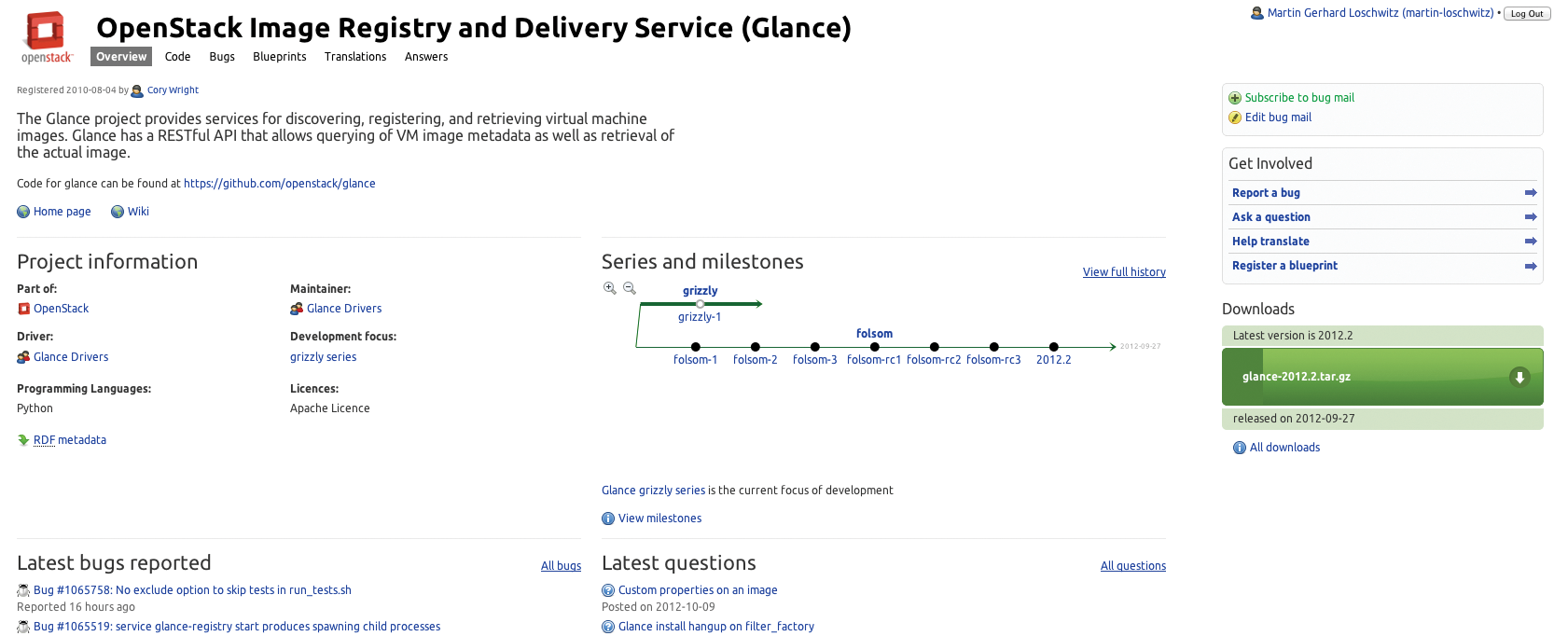

The fact that the release cycle and the release dates match those of Ubuntu is no coincidence: Mark Shuttleworth himself has stated that the two projects are deliberately and closely linked. Thus, it's also no accident that the entire OpenStack project relies on development tools by Canonical and develops almost exclusively in Launchpad (Figure 1). When Ubuntu made the whole thing more or less official back in May 2011 and dropped Eucalyptus in favor of OpenStack as its cloud solution, a murmur went through the developer community.

One, Two, Three …

Some observers found the whole thing rather strange at the time. Why would Canonical force a well-functioning solution to walk the plank and replace it with an environment that, at the time, was hardly "enterprise-ready"? Come to think of it, what was the OpenStack developers' motivation for entering a market that other projects, such as Eucalyptus and OpenNebula, had already divided up among themselves? Was there really room for another "big player"?

The answers to all these questions became clear in April 2012: Canonical positioned Ubuntu 12.04 as the first enterprise distribution with long-term support that includes a well-integrated cloud solution, and, because Canonical had been involved in OpenStack from the start, it was able to influence many of the project's seminal developments. Ubuntu has thus carved out its own role in the cloud industry: If you want a cloud with Linux, only Ubuntu currently gives you the entire system along with support from a single source.

A direct comparison of the Eucalyptus, OpenNebula, and OpenStack development models reveals the greatest differences between the projects. Whereas Eucalyptus and OpenNebula are backed by individual companies that have built communities around their products but otherwise tend to keep development under wraps, the OpenStack cloud was designed from the outset as a collaborative project open to all comers. Although some scoff that the three environments ultimately do the same thing, only OpenStack can claim to have won more than 180 official supporters in the industry in the past two years – including such prominent names as Dell and Deutsche Telekom. The initial project by Rackspace and NASA has thus turned into quite a successful beast.

What the Cloud Needs

Certainly, OpenStack is a permanent fixture in the cloud landscape. However, how well does OpenStack currently support the cloud? You can only answer this question meaningfully if it is clear what the cloud is supposed to do, and that is anything but clearly defined, precisely because the term has been bandied about in the IT industry for more than two years.

Most infrastructure providers want to sell two services to their customers by introducing cloud environments: computational power in the form of virtual machines and storage space for data on the web.

Virtualization is more important than storage offerings à la Dropbox. However, a virtualization solution is still not a cloud. What the environment additionally needs to offer, according to the ISPs, is automation – meaning that once services are set up by the service provider, customers can stop or start them independently, with no further intervention on the part of the provider. For this to happen, the environment needs a user interface that non-IT professionals can use. These "self-service portals" are probably the biggest difference between traditional virtualization environments, such as VMware, and cloud software, such as OpenStack, even though solutions such as oVirt by Red Hat illustrate that the borders here are starting to blur.

So, what solutions have the OpenStack developers designed to face the above challenges?

OpenStack Is Modular

In principle, the following applies: The OpenStack architecture is modular, and each task is assigned to a single component that provides the required functionality. In OpenStack-speak, a distinction is made between the actual core components of the software and the add-on tools. The add-ons handle features needed in individual use cases that are of no interest to the majority of users.

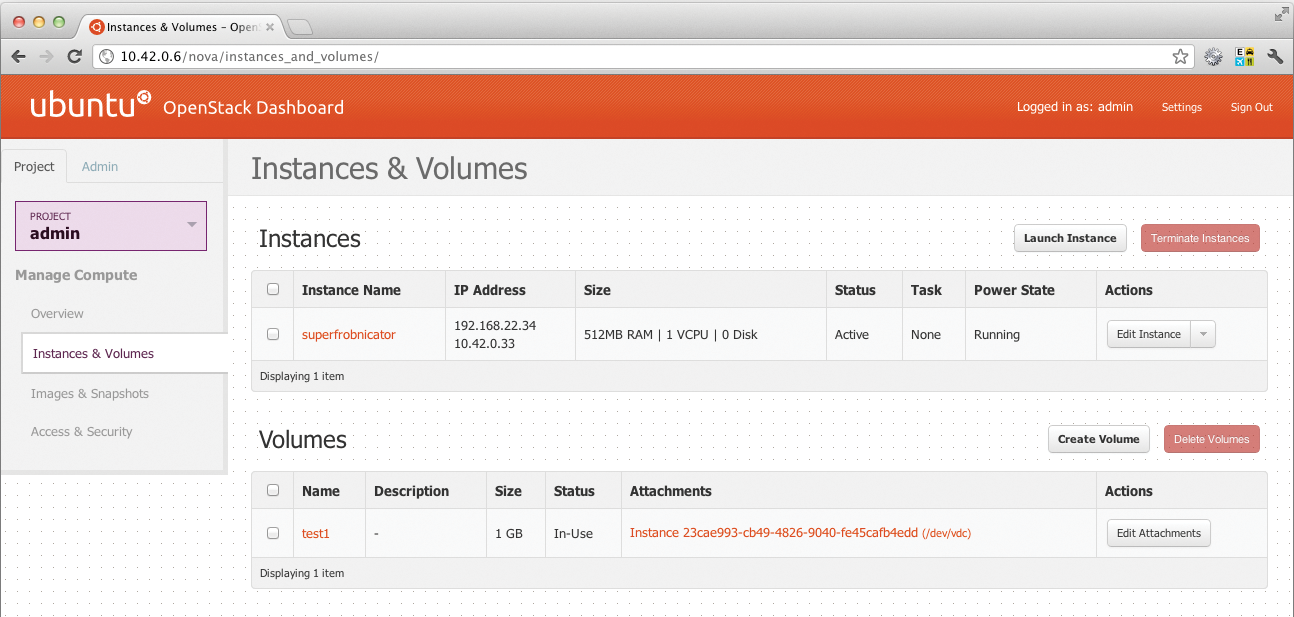

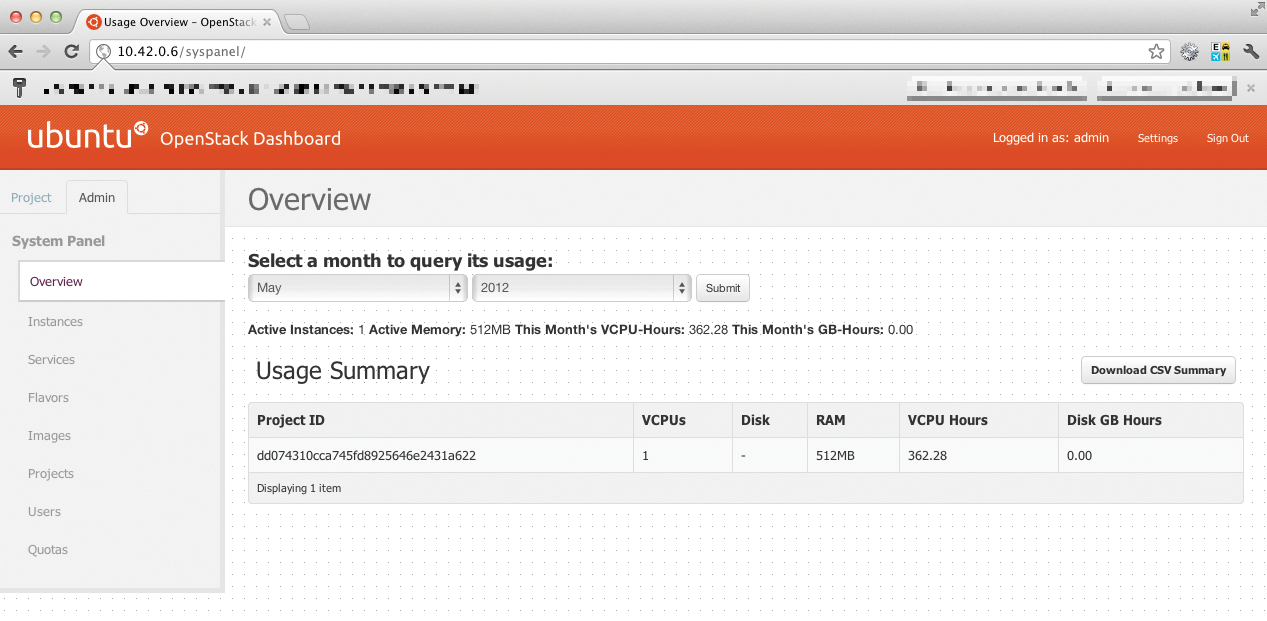

The "OpenStack" name is an umbrella for the core components. Additionally, the "incubated projects" category applies to software that will become part of the core in the foreseeable future, but whose development has not yet progressed to a stable stage. The number of core components has increased in Folsom. Besides Keystone (authentication), Glance (images), Nova (cloud controller), Dashboard (web interface; Figures 2 and 3), and Swift (storage), they now include Quantum, which takes care of the network in the cloud, and Cinder, which provides persistent storage to virtual machines in the form of block devices.

Keystone Who?

Keystone is the component that leads the dance. Within OpenStack, Keystone offers a standardized interface for authentication.

A cloud and its associated infrastructure need the most granular user management possible. On the one hand are the cloud provider's admins, who take care of daily maintenance and management in the cloud. On the other hand are the customers who want to manage their virtual systems and online storage autonomously.

Customers are assigned to two categories in OpenStack: The tenants are the companies that use the cloud services. The users belong to one or more tenants and can have different roles. For example, it is possible for a user to act as the administrator for a tenant and create new virtual systems for this tenant, whereas another user in the same tenant can only see the virtual systems that are already running.

Thinking of tenants and users as the counterparts of classic groups and users on Linux systems makes OpenStack's rights allocation system easier to understand.
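To make the tenant/user/role relationship concrete, here is a minimal Python sketch. The `User` class, the role names `admin` and `member`, and the permission table are hypothetical illustrations of the concept, not Keystone's actual data model or API.

```python
from dataclasses import dataclass, field

# Hypothetical roles and the actions each one permits.
ROLE_PERMISSIONS = {
    "admin": {"list_vms", "create_vm"},
    "member": {"list_vms"},
}


@dataclass
class User:
    name: str
    # Tenant name -> set of roles this user holds in that tenant.
    roles: dict = field(default_factory=dict)

    def grant(self, tenant: str, role: str) -> None:
        self.roles.setdefault(tenant, set()).add(role)


def is_allowed(user: User, tenant: str, action: str) -> bool:
    """True if any of the user's roles in this tenant permits the action."""
    return any(
        action in ROLE_PERMISSIONS.get(role, set())
        for role in user.roles.get(tenant, set())
    )


alice = User("alice")
alice.grant("acme", "admin")    # may create VMs for tenant acme
bob = User("bob")
bob.grant("acme", "member")     # may only look at acme's VMs

print(is_allowed(alice, "acme", "create_vm"))  # True
print(is_allowed(bob, "acme", "create_vm"))    # False
print(is_allowed(bob, "acme", "list_vms"))     # True
print(is_allowed(bob, "globex", "list_vms"))   # False: not in tenant globex
```

As with Linux groups, rights hang off the membership (user in tenant, with a role) rather than off the user alone.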

Keystone is the central point of contact for all other services when it comes to user authentication. To be able to talk to Keystone, however, the other OpenStack services themselves need to authenticate against Keystone using a fixed password. The admin token, which is defined directly in the Keystone configuration file (keystone.conf), is all-important – if you possess it, you can do what you please in the cloud.
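Assuming a Folsom-era `keystone.conf` that sets `admin_token` in its `[DEFAULT]` section, the following sketch reads the token with Python's standard `configparser` and shows how a client presents it in the `X-Auth-Token` HTTP header. The file contents, host name, and token value are made up; the request is only built, never sent.

```python
import configparser
import urllib.request

# Made-up keystone.conf excerpt; a real file contains many more options.
KEYSTONE_CONF = """
[DEFAULT]
admin_token = s3cr3t-admin-token
"""


def read_admin_token(conf_text: str) -> str:
    """Return the admin_token value from the [DEFAULT] section."""
    parser = configparser.ConfigParser()
    parser.read_string(conf_text)
    return parser["DEFAULT"]["admin_token"]


def build_admin_request(token: str, url: str) -> urllib.request.Request:
    """Build, but do not send, a request that authenticates with the token."""
    return urllib.request.Request(url, headers={"X-Auth-Token": token})


token = read_admin_token(KEYSTONE_CONF)
# Hypothetical host; 35357 is Keystone's usual admin port.
req = build_admin_request(token, "http://keystone.example.com:35357/v2.0/tenants")
print(req.get_header("X-auth-token"))  # urllib stores header names capitalized
```

Because anyone who can read this one value gains full control, file permissions on keystone.conf matter as much as the token itself.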

The Keystone developers have also thought about connecting to existing user management systems, and LDAP plays an important role here. If a company already has a complete LDAP authorization system, you can map it in Keystone. After doing so, you can assign user permissions by setting the appropriate LDAP flags.

Incidentally, in OpenStack, each core project has two names. One is the official project name, which describes the function of the component; in Keystone's case, this is Identity. The other is the code name, that is, Keystone. If you are searching the web for information about one of the OpenStack components, it makes much more sense to look for the code names because they are considerably more widely used than the official names.

Glance: Ubuntu Shaken, Not Stirred

If you have ever tried to set up a virtual machine manually on Linux, you know that this task is tedious and is basically the same as installing an OS on a physical disk. This obstacle conflicts with the requirement that less experienced users should also be able to set up new virtual systems in the cloud just by pointing and clicking in a web interface. Cloud providers remedy this situation by providing prebuilt images of operating systems for use in their environment. When users want to start a new VM via the web interface, they simply select a suitable operating system image and log in to the new system shortly thereafter.

In OpenStack, the Glance component handles the task of provisioning operating system images. Glance consists of two parts: glance-api and glance-registry. The API is the interface to the outside world, whereas the registry takes care of managing existing images and the related database. Glance offers a variety of options when it comes to storing images. In addition to storing locally on the system on which Glance is running, it also supports the OpenStack Swift and Ceph storage back ends, as well as anything that is compatible with Amazon's S3.
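The split between registry and API, plus the pluggable storage back ends, can be pictured with a small Python sketch: the registry holds image metadata including a location URI, and the API side picks a back end from the URI's scheme. The dictionaries and function are hypothetical; the schemes `file`, `swift`, `s3`, and `rbd` are meant to mirror the kinds of location URIs Glance works with, but this is not Glance's code.

```python
from urllib.parse import urlsplit

# Hypothetical registry: image ID -> metadata, including where the bits live.
REGISTRY = {
    "img-1": {"name": "ubuntu-12.04", "disk_format": "qcow2",
              "location": "file:///var/lib/glance/images/img-1"},
    "img-2": {"name": "cirros", "disk_format": "raw",
              "location": "swift://glance/images/img-2"},
}

# Hypothetical mapping of location URI schemes to storage back ends.
BACKENDS = {
    "file": "local filesystem",
    "swift": "OpenStack Swift",
    "s3": "Amazon S3 compatible",
    "rbd": "Ceph RBD",
}


def backend_for(image_id: str) -> str:
    """Look up an image in the registry and name the back end that stores it."""
    location = REGISTRY[image_id]["location"]
    scheme = urlsplit(location).scheme
    try:
        return BACKENDS[scheme]
    except KeyError:
        raise ValueError(f"unsupported store scheme: {scheme!r}") from None


print(backend_for("img-1"))  # local filesystem
print(backend_for("img-2"))  # OpenStack Swift
```

Keeping metadata separate from the storage location is what lets the same image catalog sit on top of very different back ends.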

Glance supports several image formats: Besides the classic QEMU/KVM QCOW2 format, Glance also supports VMware images out of the box. In principle, it can hold images of any operating system that runs on a real x86 box. The only prerequisite is that the image must be a "real" image of a hard disk drive, including the Master Boot Record. If you have built images for Amazon's AWS, you can also use them in Glance and leverage the ability to boot different disk images with different kernels – at least for Linux images.
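The "real hard disk image" requirement can be checked, at least roughly, by looking at the first sector: a QCOW2 (or older QCOW) file starts with the magic bytes `QFI\xfb`, and a raw disk image with a Master Boot Record carries the boot signature `0x55 0xAA` at byte offsets 510 and 511. The sketch below is a simplified heuristic for illustration, not Glance's validation logic.

```python
QCOW_MAGIC = b"QFI\xfb"      # first four bytes of QCOW and QCOW2 files
MBR_SIGNATURE = b"\x55\xaa"  # boot signature at offsets 510-511 of sector 0
SECTOR_SIZE = 512


def classify_image(first_sector: bytes) -> str:
    """Guess an image's format from its first 512 bytes (simplified heuristic)."""
    if first_sector[:4] == QCOW_MAGIC:
        return "qcow"
    if len(first_sector) >= SECTOR_SIZE and first_sector[510:512] == MBR_SIGNATURE:
        return "raw disk with MBR"
    return "unknown"


def classify_file(path: str) -> str:
    """Read the first sector of an image file and classify it."""
    with open(path, "rb") as f:
        return classify_image(f.read(SECTOR_SIZE))


# Fabricated sectors for demonstration.
raw = bytearray(SECTOR_SIZE)
raw[510:512] = MBR_SIGNATURE
print(classify_image(bytes(raw)))               # raw disk with MBR
print(classify_image(QCOW_MAGIC + bytes(508)))  # qcow
print(classify_image(bytes(SECTOR_SIZE)))       # unknown
```

An image of a single partition (without the MBR) would end up as "unknown" here, which is exactly the kind of image the text warns against.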

A Quantum of Networking

Quantum took over the job of network management in the Folsom release of OpenStack. Up to and including OpenStack 2012.1 (Essex), Quantum was an incubated project, but now the developers have brought it up to par for use in production environments. Quantum handles various network issues that ISPs need to solve in the context of server virtualization. For example, a new virtual machine will usually need an IP address, which can be either private or public. It is also desirable to isolate individual tenants on separate VLANs, which ensures that a company has access only to its own VMs and not to VMs set up by other companies on the same virtualization nodes.
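To illustrate the address-assignment part of this job, here is a minimal Python sketch that hands out addresses from each tenant's private subnet using the standard `ipaddress` module. The tenant names, subnets, and the "first address is the gateway" convention are hypothetical; Quantum's real IP allocation and its API differ in detail.

```python
import ipaddress


class SubnetAllocator:
    """Hand out host addresses from one subnet, reserving the first for a gateway."""

    def __init__(self, cidr: str):
        self.network = ipaddress.ip_network(cidr)
        self._hosts = iter(self.network.hosts())  # usable addresses, in order
        self.gateway = str(next(self._hosts))
        self.allocated = {}

    def allocate(self, vm_name: str) -> str:
        try:
            addr = str(next(self._hosts))
        except StopIteration:
            raise RuntimeError(f"subnet {self.network} is exhausted") from None
        self.allocated[vm_name] = addr
        return addr


# Each tenant gets its own private subnet (hypothetical values).
tenants = {
    "acme": SubnetAllocator("10.0.1.0/24"),
    "globex": SubnetAllocator("10.0.2.0/24"),
}
print(tenants["acme"].allocate("web1"))   # 10.0.1.2
print(tenants["acme"].allocate("web2"))   # 10.0.1.3
print(tenants["globex"].allocate("db1"))  # 10.0.2.2
```

Because every tenant draws from its own subnet, overlapping or colliding assignments between companies cannot happen at this level.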

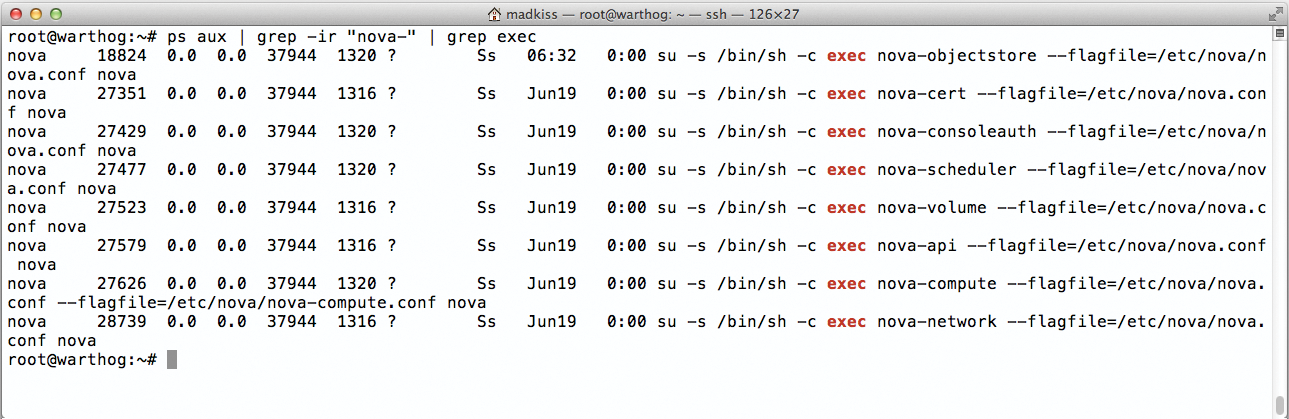

Quantum thus replaces the old nova-network implementation, which belonged to the Nova computing component (Figure 4; more on Nova later). The solution is technically sophisticated: Quantum leverages new technologies, such as Open vSwitch [1], to abstract the physical network configuration completely from the "virtual" network configuration. A corresponding Quantum plugin runs on every hypervisor node, communicating with OpenStack's Quantum server instance and receiving its configuration from there. Facing the virtualizer, each plugin behaves like a physical switch. VMs whose network interfaces are attached to the same virtual switch can thus be connected with one another arbitrarily, even if they run on different hypervisor nodes.

A virtual VLAN configuration is also possible if customers want to implement their own network topology. Whereas VLANs previously had to be isolated at the hardware level, Quantum now handles this entire configuration. Moreover, Open vSwitch is not the only back end that Quantum supports: The service already supports Cisco's UCS/Nexus implementation and can fully exploit its capabilities in combination with suitable hardware.
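The isolation idea can be sketched in a few lines of Python: each tenant network receives its own VLAN ID from a configured range, and two VM ports can exchange traffic only if they carry the same VLAN ID, no matter which hypervisor node they sit on. The range, names, and data structures are hypothetical; a real plugin programs the switches instead of consulting a dictionary.

```python
class VlanAllocator:
    """Assign each tenant network a unique VLAN ID from a fixed range."""

    def __init__(self, first: int, last: int):
        if not 1 <= first <= last <= 4094:
            raise ValueError("802.1Q VLAN IDs must lie in 1..4094")
        self._free = list(range(first, last + 1))
        self.by_network = {}

    def allocate(self, network: str) -> int:
        if network not in self.by_network:
            if not self._free:
                raise RuntimeError("VLAN range exhausted")
            self.by_network[network] = self._free.pop(0)
        return self.by_network[network]


# VM port -> (hypervisor node, VLAN ID); the node plays no role in reachability.
ports = {}


def plug(vm: str, node: str, network: str, vlans: VlanAllocator) -> None:
    ports[vm] = (node, vlans.allocate(network))


def can_talk(vm_a: str, vm_b: str) -> bool:
    """Ports reach each other only when they share a VLAN ID."""
    return ports[vm_a][1] == ports[vm_b][1]


vlans = VlanAllocator(1000, 1009)
plug("acme-web", "compute1", "acme-net", vlans)
plug("acme-db", "compute2", "acme-net", vlans)
plug("globex-app", "compute1", "globex-net", vlans)

print(can_talk("acme-web", "acme-db"))     # True: same network, different nodes
print(can_talk("acme-web", "globex-app"))  # False: same node, different tenants
```

The physical placement of a VM thus becomes irrelevant for isolation; only the logical network assignment counts.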

Cinder: Block Storage for VMs

Virtual machines within a cloud environment require persistent storage in the form of block devices that act as hard disks. However, the typical storage architecture is completely contrary to the idea behind the cloud. Whereas a cloud setup contains a large number of equivalent nodes, storage is typically centrally grouped at one location. Here is where Cinder enters the game: It serves up centrally managed storage, neatly portioned in hard disk-sized chunks, to the virtual machines in the cloud.

Cinder was also an integral part of the Nova computing component in Essex (as nova-volume) and only made its way into OpenStack as a core component in Folsom. Cinder supports several storage back ends. The default configuration uses block storage from an LVM volume group; in particular, this covers Fibre Channel-connected SANs or DRBD setups. Block storage is transported from the storage host to the computing nodes by iSCSI. Thanks to KVM's hot-plug functionality, it then becomes a part of the virtual machine. If you prefer Ceph instead, you can leverage its native RBD interface. Cinder is also one of the OpenStack projects whose feature set will grow considerably in the next few months to provide native support for additional storage back ends. For example, there is talk of a direct Fibre Channel connection, which avoids the need to detour via the block device layer.
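The default path described above (carve a volume out of a volume group, then export it over iSCSI) can be modeled in a short Python sketch that only tracks capacity and derives names. The target name follows the iSCSI Qualified Name format (`iqn.<year-month>.<reversed domain>:<unique part>`); the `iqn.2010-10.org.openstack:` prefix, the `cinder-volumes` group name, and the `volume-<uuid>` naming are assumptions modeled on OpenStack defaults of that era, and the class itself is hypothetical.

```python
import uuid

TARGET_PREFIX = "iqn.2010-10.org.openstack:"  # assumed default-style IQN prefix


class VolumeGroup:
    """Track free space in a volume group and carve volumes out of it."""

    def __init__(self, name: str, size_gb: int):
        self.name = name
        self.free_gb = size_gb
        self.volumes = {}

    def create_volume(self, size_gb: int) -> dict:
        if size_gb <= 0:
            raise ValueError("size must be positive")
        if size_gb > self.free_gb:
            raise RuntimeError(
                f"only {self.free_gb} GB free in {self.name}, {size_gb} GB requested")
        vol_name = f"volume-{uuid.uuid4()}"
        self.free_gb -= size_gb
        volume = {
            "name": vol_name,
            "size_gb": size_gb,
            # Device path of the logical volume on the storage host.
            "device": f"/dev/{self.name}/{vol_name}",
            # iSCSI target under which the storage host exports the volume.
            "target": TARGET_PREFIX + vol_name,
        }
        self.volumes[vol_name] = volume
        return volume


vg = VolumeGroup("cinder-volumes", size_gb=100)
vol = vg.create_volume(20)
print(vol["target"])
print(vg.free_gb)  # 80
```

The compute node only ever sees the iSCSI target; where the volume group physically lives (SAN, DRBD) stays hidden behind it.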

Nova: The Brain of the Cloud

OpenStack Compute, code-named Nova, is the central computing component. Because Cinder (formerly nova-volume) and Quantum (as a replacement for nova-network) are separate projects in the Folsom release, Nova can now focus on managing the virtual systems within an OpenStack environment. It interacts with all the other components and takes care of starting and stopping the virtual machines in the cloud when the user issues the appropriate commands at the command line or in the web interface. The service is modular; besides nova-api as a front end, Nova also includes the actual computing component nova-compute. The nova-compute component runs on each virtualization node and waits for instructions from the API component.

The scheduler, nova-scheduler, is also part of Nova; it knows which hypervisor nodes are up and running and which VMs are where in the cloud. If you want to add a new VM, it is precisely this scheduler that decides which host to run it on. Incidentally, in the background, nova-compute relies on the proven libvirt to manage the virtual systems. The OpenStack developers deserve kudos at this point for not re-inventing the wheel and instead relying on proven technologies.
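The scheduler's decision can be approximated as "filter, then weigh": discard hosts that are down or lack resources, then pick the best of the rest. The following Python sketch is a simplified stand-in loosely modeled on that idea; the host data, the two resource filters, and the "most free RAM wins" rule are assumptions, not Nova's actual configuration.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    up: bool
    free_ram_mb: int
    free_vcpus: int


@dataclass
class Flavor:
    ram_mb: int
    vcpus: int


def schedule(hosts: list, flavor: Flavor) -> Host:
    """Pick a host for a new VM: filter out unsuitable hosts, then weigh the rest."""
    candidates = [
        h for h in hosts
        if h.up                              # only hosts that are reporting in
        and h.free_ram_mb >= flavor.ram_mb   # enough memory
        and h.free_vcpus >= flavor.vcpus     # enough CPU cores
    ]
    if not candidates:
        raise RuntimeError("no valid host found")
    # Weigh: prefer the host with the most free RAM, which spreads the load.
    chosen = max(candidates, key=lambda h: h.free_ram_mb)
    chosen.free_ram_mb -= flavor.ram_mb
    chosen.free_vcpus -= flavor.vcpus
    return chosen


hosts = [
    Host("compute1", up=True, free_ram_mb=4096, free_vcpus=4),
    Host("compute2", up=False, free_ram_mb=16384, free_vcpus=16),
    Host("compute3", up=True, free_ram_mb=8192, free_vcpus=2),
]
print(schedule(hosts, Flavor(ram_mb=2048, vcpus=1)).name)  # compute3
```

compute2 has the most memory but is down, so the filter step removes it before weighing ever happens.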

Dashboard: The User Interface

The most attractive cloud environment is useless if non-nerds can't use it. The OpenStack Dashboard, code-named Horizon, takes care of this situation. It provides a self-service portal that, like all other OpenStack components, is written in Python; under the hood, it is a Django application. The Dashboard offers tenants in an OpenStack installation the ability to launch VMs at the press of a button within the web browser. The only prerequisite is a WSGI-capable web server – for example, Apache with mod_wsgi loaded. Combining the Dashboard with memcached is also useful to prevent performance bottlenecks.
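"WSGI-capable" means the web server calls the application through the Python WSGI interface (PEP 3333): a callable that receives the request environment and a `start_response` function. mod_wsgi looks for a callable named `application` by default, and a Django project such as Horizon provides one. The toy application below only illustrates that interface and has nothing to do with Horizon's code.

```python
from wsgiref.util import setup_testing_defaults


def application(environ, start_response):
    """Minimal WSGI app: the server calls this once per request."""
    path = environ.get("PATH_INFO", "/")
    body = f"Hello from {path}\n".encode("utf-8")
    start_response("200 OK", [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ])
    return [body]


# Call the app the way a WSGI server would, without running a server.
environ = {}
setup_testing_defaults(environ)
environ["PATH_INFO"] = "/dashboard"
captured = {}


def start_response(status, headers):
    captured["status"] = status
    captured["headers"] = dict(headers)


print(b"".join(application(environ, start_response)))  # b'Hello from /dashboard\n'
print(captured["status"])                                # 200 OK
```

For a quick local test, `wsgiref.simple_server.make_server("", 8000, application)` can serve the same callable; in production, Apache with mod_wsgi takes that role.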

From a user perspective, the Dashboard not only supports starting and stopping VMs, it also lets users create images of active VMs in Glance and manage hardware profiles for the virtual systems. It also offers a rudimentary statistics function; whether to extend this into a complete billing API is currently under discussion. The ultimate goal is for OpenStack to link seamlessly with existing billing systems, so that providers can bill users automatically for the services they consume.

Swift: The Other Side of the Cloud

The six core components described so far take care of the virtualization side. However, as I mentioned at the outset, storage is also of interest. Storage services like Dropbox or Amazon S3 are just as much part of the cloud as virtual machines, and Swift handles this functionality in OpenStack. Swift originated with Rackspace and is that company's contribution to the project.

Swift has been in production use at Rackspace for some time now, so it is regarded by many developers as the most sophisticated OpenStack component to date. Under the hood, Swift works much like Ceph: The program converts files stored in Swift to binary objects, which are then distributed across a large cluster of storage nodes and inherently replicated in the process. Because Swift has a compatibility interface to Amazon's S3 protocol, any tools developed for S3 will also work with Swift. In other words, if you want to provide online storage OpenStack-style, Swift is the right tool for the job.
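Swift's distribution and replication are driven by a "ring" that maps each object path to a partition and each partition to several storage devices. The sketch below shows the idea in simplified form: hash the object path with MD5, take the top `PART_POWER` bits as the partition number, and assign each partition to three distinct devices. The device names, the partition power, and the round-robin assignment are assumptions; Swift's real ring builder balances by device weight and zone and salts the hash with a cluster-specific value.

```python
import hashlib
import struct

PART_POWER = 8   # 2**8 = 256 partitions (real clusters use far more)
REPLICAS = 3
DEVICES = ["node1/sdb", "node2/sdb", "node3/sdb", "node4/sdb"]  # hypothetical


def partition_for(account: str, container: str, obj: str) -> int:
    """Map an object path to a partition via the top bits of its MD5 hash."""
    path = f"/{account}/{container}/{obj}".encode("utf-8")
    digest = hashlib.md5(path).digest()
    (top32,) = struct.unpack_from(">I", digest)  # first 4 bytes, big-endian
    return top32 >> (32 - PART_POWER)


def devices_for(partition: int) -> list:
    """Assign REPLICAS distinct devices to a partition (simple round robin)."""
    return [DEVICES[(partition + i) % len(DEVICES)] for i in range(REPLICAS)]


part = partition_for("AUTH_acme", "photos", "cat.jpg")
print(part, devices_for(part))
```

Because the mapping is deterministic, any proxy can locate all three replicas of an object without asking a central directory.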

RabbitMQ and MySQL

Each OpenStack installation includes two components that are not directly part of OpenStack: MySQL and RabbitMQ. All OpenStack components, with the exception of Swift, use MySQL to maintain their internal databases. Because all OpenStack components are written in Python, the developers rely on the Python SQLAlchemy module to implement database access from within their applications. Although SQLAlchemy also supports other databases, such as PostgreSQL, almost all current OpenStack installations use MySQL, as the official documentation also notes.
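Because database access goes through SQLAlchemy, the choice of database boils down to a connection URL in each service's configuration (for example, an option such as `sql_connection` in a Folsom-era `nova.conf`). The sketch below uses only the standard library to pull such URLs apart; the credentials and hosts are invented, and the point is simply that swapping `mysql://` for `postgresql://` selects a different SQLAlchemy dialect.

```python
from urllib.parse import urlsplit


def describe_db_url(url: str) -> dict:
    """Split a SQLAlchemy-style database URL into its components (password omitted)."""
    parts = urlsplit(url)
    return {
        "dialect": parts.scheme,             # e.g. mysql, postgresql
        "user": parts.username,
        "host": parts.hostname,
        "port": parts.port,                  # None means the driver's default port
        "database": parts.path.lstrip("/"),
    }


mysql = describe_db_url("mysql://nova:secret@192.168.0.10/nova")
pg = describe_db_url("postgresql://nova:secret@db.example.com:5432/nova")
print(mysql["dialect"], mysql["host"], mysql["database"])  # mysql 192.168.0.10 nova
print(pg["dialect"], pg["port"])                            # postgresql 5432
```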

RabbitMQ is the part of the setup that, in particular, helps Nova and Quantum implement communications across all the nodes of the virtualization setup. If you do not like Erlang or have had bad experiences with RabbitMQ, you can opt for a different implementation of the AMQP standard: OpenStack also works really well with Qpid.
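The messaging pattern can be pictured as topic-based queues: a sender publishes a message to a topic, and whichever worker consumes that topic picks it up. The sketch below simulates this in-process with `queue.Queue`, so no AMQP broker is involved; the topic naming (`compute.<host>` for one specific compute node), the `cast` helper, and the JSON message layout are assumptions modeled loosely on how Nova addresses its services, not the exact wire format.

```python
import json
import queue
from collections import defaultdict

# One in-memory queue per topic stands in for the broker (RabbitMQ or Qpid).
broker = defaultdict(queue.Queue)


def cast(topic: str, method: str, **args) -> None:
    """Fire-and-forget: publish a JSON message on a topic."""
    broker[topic].put(json.dumps({"method": method, "args": args}))


def consume(topic: str) -> dict:
    """A worker subscribed to the topic takes the next message."""
    return json.loads(broker[topic].get_nowait())


# The API service asks one specific compute node to start an instance.
cast("compute.node7", "run_instance", instance_id="vm-42")

msg = consume("compute.node7")
print(msg["method"], msg["args"]["instance_id"])  # run_instance vm-42
print(broker["compute.node8"].empty())            # True: other nodes see nothing
```

The sender never needs to know the receiver's network address, which is why any AMQP implementation can slot in underneath.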

The Touchy Topic of High Availability

OpenStack basically offers all the components you need to implement a modern cloud, but for a long time it had one major flaw: In terms of high availability (HA), the young cloud was anything but competitive. In fact, OpenStack almost completely lacked a usable HA solution at that time. During my tests, OpenStack was oblivious to the fact that a hypervisor had failed and taken down several VMs with it; it was equally indifferent to the fact that any of the core components – Nova, Glance, Keystone, etc. – had bitten the dust.

And yet, it's not as if no useful high-availability tools for Linux systems were around at the time. A complete HA toolbox exists in the guise of the Linux HA Cluster Stack, and it was just waiting to be used in the context of OpenStack.

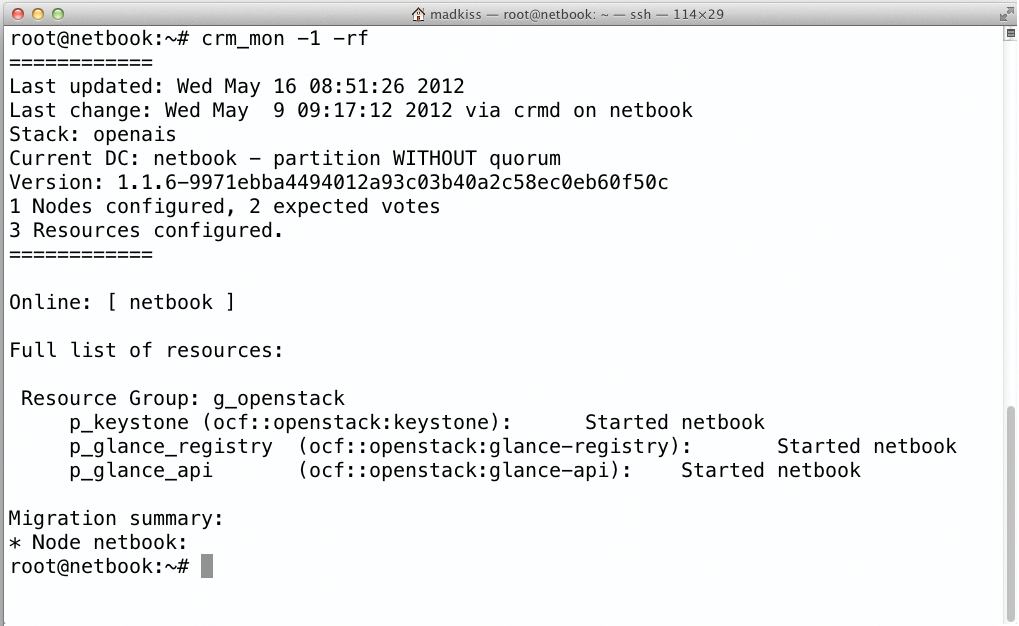

Now, nearly a year after the first test, the situation is not quite as bleak. Specific resource agents that make the individual OpenStack components usable in Pacemaker are now available in the form of the openstack-resource-agents repositories [2]. I personally designed the first agents for Keystone and two parts of Glance [3], and my call for developers to gradually develop agents for the other services was answered, in particular, by two developers from France: Emilien Macchi and Sebastien Han. Thus, it is no longer a problem to use Pacemaker to manage and monitor all of the OpenStack core services (Figure 5).
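Pacemaker drives a resource agent through a small set of actions (start, stop, monitor, and so on) and interprets the agent's exit code according to the OCF conventions, where 0 (`OCF_SUCCESS`) means running and 7 (`OCF_NOT_RUNNING`) means cleanly stopped. The openstack-resource-agents themselves are shell scripts; purely to illustrate the monitor logic, here is a Python sketch that reports a service as running if its TCP port accepts connections. The plain port check is a simplifying assumption, since a production agent would check more thoroughly.

```python
import socket

OCF_SUCCESS = 0       # resource is running
OCF_ERR_GENERIC = 1   # generic error
OCF_NOT_RUNNING = 7   # resource is cleanly stopped


def monitor(host: str, port: int, timeout: float = 2.0) -> int:
    """Return an OCF exit code depending on whether host:port accepts TCP connections."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return OCF_SUCCESS
    except ConnectionRefusedError:
        # Nothing listens on the port: treat the service as stopped.
        return OCF_NOT_RUNNING
    except OSError:
        # Timeouts, unreachable hosts, and similar: report a generic failure.
        return OCF_ERR_GENERIC
```

For example, `monitor("127.0.0.1", 5000)` would probe a local Keystone on its usual public port; a wrapper script would pass the returned value to `sys.exit()` so that Pacemaker sees it as the exit code.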

On top of this, the individual services also support distributed deployment. The core components do not need to reside on the same system as long as they are connected to each other over a network. In theory, it would even be conceivable to run instances of all core projects on every node in an OpenStack cloud, as long as they can all access the same highly available MySQL database and the same RabbitMQ.

However, things are not quite as rosy in terms of high availability of virtual machines. In this field, two opposing philosophies – the American and the European ideas of what is actually a good thing for the cloud – are still doing battle.

In the United States, the cloud is regarded in particular as a tool for creating massively scalable IT setups. A classic example is the famous mail-order company, which has to absorb a far greater load in the pre-Christmas period than during the rest of the year. To cope with this additional load, the company simply starts up new virtual machines and distributes the load to them.

The prerequisite is that the individual VMs do not contain variable data – instead, all virtual instances always come from the same image. The logic then is that, if all the VMs use the same image, it does not really matter if a single virtual machine fails. In almost no time, you can create a new VM on the fly to replace the old VM completely.
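This scale-out logic can be expressed as a simple reconciliation loop: keep a desired number of identical instances, all booted from the same image, and replace any that fail with fresh ones. The Python sketch below models only the bookkeeping; `launch` is a hypothetical stand-in for whatever actually boots a VM.

```python
import itertools

_ids = itertools.count(1)


def launch(image: str) -> dict:
    """Hypothetical stand-in for booting a VM from an image."""
    return {"id": f"vm-{next(_ids)}", "image": image, "healthy": True}


def reconcile(pool: list, image: str, desired: int) -> list:
    """Drop failed instances and launch replacements until at least `desired` are healthy."""
    survivors = [vm for vm in pool if vm["healthy"]]
    while len(survivors) < desired:
        survivors.append(launch(image))  # every replacement uses the same image
    return survivors


pool = reconcile([], "webshop-v1", desired=3)
pool[1]["healthy"] = False                       # a VM dies; nothing of value is lost
pool = reconcile(pool, "webshop-v1", desired=5)  # replace it and scale up for the rush
print([vm["id"] for vm in pool])  # ['vm-1', 'vm-3', 'vm-4', 'vm-5', 'vm-6']
```

The loop only works because no instance holds data of its own, which is exactly the assumption the European view questions.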

The European view is different. There, providers of IT infrastructure almost always link the introduction of a cloud with data center consolidation. This means replacing huge amounts of iron and running what used to be physical systems on virtual machines. The scale-out logic described previously no longer works here. Specific customer systems are not generic; they contain variable data and cannot be replaced easily with a new instance of a fresh image at a later stage. In this scenario, the failure of a VM, or of a hypervisor with many VMs, is far more painful.

VM-HA? Cinder to the Rescue

However, this difference of opinion does not necessarily lead to a standstill when it comes to the topic of high availability for the virtual machines. One major flaw in the implementation of a corresponding routine – the non-HA-capable implementation of the old nova-volume – is about to disappear. Cinder is ditching some of the conceptual difficulties of its predecessor to make it much easier to create highly available storage volumes.

Until now, highly available volumes have failed because nova-volume could not be launched flexibly on an arbitrary cluster node: The MySQL database belonging to Nova stored the hostname of the exporting server. If a volume was initially exported by server1, for example, nova-volume was unable to export the same volume from server2, even if the data was accessible locally on a SAN or DRBD drive.
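The core of the problem fits in a few lines: if the volume record names the physical host that exports it, a second node cannot take over the export even though it can reach the same data. The Python sketch below contrasts that with one conceivable workaround, a logical name shared by both nodes of the HA pair. The record layout, the `can_export` rule, and the shared name are hypothetical illustrations, not the schema of nova-volume or Cinder.

```python
def can_export(volume: dict, node_identity: str) -> bool:
    """A node may export a volume only if the database record names that node."""
    return volume["host"] == node_identity


# nova-volume style: the record holds the physical hostname of server1.
pinned = {"id": "vol-1", "host": "server1"}
print(can_export(pinned, "server2"))  # False: failover to server2 is refused

# Hypothetical alternative: both nodes of the HA pair present the same logical name.
CLUSTER_NAME = "volume-cluster"
identity_of = {"server1": CLUSTER_NAME, "server2": CLUSTER_NAME}
floating = {"id": "vol-1", "host": CLUSTER_NAME}
print(can_export(floating, identity_of["server2"]))  # True: server2 can take over
```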

Cinder will probably have solved this problem by the time the next OpenStack version, called "Grizzly," is released. If you rely on Ceph as a storage back end for Cinder instead of a block device-based method, this problem disappeared for you some months ago. Ultimately, "just" one piece is still missing in nova-compute: a mechanism that restarts the VMs of a failed hypervisor on another one. It can be assumed that the OpenStack developers will also address this problem in the next release cycle.

The Future of OpenStack

The OpenStack developers met in San Diego in October to announce the launch of the next release cycle. Right now, OpenStack certainly has the greatest momentum of all cloud environments in the FOSS world. The project is almost constantly present on the relevant forums and is increasingly a field of work in its own right, and the establishment of the OpenStack Foundation in September 2012 indicates that the project is on the upswing.

The foundation will give OpenStack a more formal framework than in the past and will lay the groundwork for a sponsorship program. The idea is to offer membership to large corporations and allow them a substantial say in development. The money collected in this way will go into developing and promoting OpenStack. In other words, if you are actively involved in the OpenStack scene, you can look forward to some exciting experiences in the near future.