OpenStack workshop, part 2: OpenStack cloud installation

A Step-by-Step Cloud Setup Guide

Because of the diversity of cloud systems on the market right now, choosing the right system for your needs can be rather difficult. Often, however, the suffering only really starts once you've left this step behind and chosen OpenStack. After all, OpenStack does not exactly enjoy the reputation of being the best-documented project on the open source scene. The documentation has become a whole lot better recently, not least because of the tenacity of Anne Gentle, the head of the documentation team; however, it is still lacking in some places. For example, it is still missing a document that explains the installation of an OpenStack cloud from installing the packages through setting up the first VM. In this article, I'll take a step-by-step look at setting up an OpenStack cloud.

Don't Panic!

OpenStack might look frightening, but you can use most of its components in the factory default configuration. In this article, I deal with the question of which OpenStack components are necessary for a basic OpenStack installation and how their configuration works. The goal is an OpenStack reference implementation consisting of three nodes: One node is used as the cloud controller, another acts as a node for the OpenStack network service, Quantum, and the third node is a classic hypervisor that houses the virtual machines in the environment.

Required Packages

In this article, I assume you are using Ubuntu 12.04. If you are looking to deploy OpenStack Folsom, however, the default package source in Ubuntu 12.04 is not very useful because it only gives you packages for the previous version, Essex. Fortunately, the Ubuntu Cloud Team for Precise Pangolin has its own repository with packages for Folsom, which you can install in the usual way. For the installation to work, you need the ubuntu-cloud-keyring package, which contains the Cloud Team's GPG key. Then, add the following entry to /etc/apt/sources.list.d/cloud.list:

deb http://ubuntu-cloud.archive.canonical.com/ubuntu precise-updates/folsom main

This step ensures that the required package lists are actually transferred to the system's package manager. The installation of individual packages then follows the usual steps, using tools such as apt-get or aptitude (see the "Prerequisites" box).
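The repository setup can be sketched as a small shell function. The function name add_cloud_archive and its optional target-path argument are assumptions made for illustration; the package name, the file path, and the deb line come from the text above.

```shell
# Sketch: write the Ubuntu Cloud Archive entry for Folsom.
# add_cloud_archive is a hypothetical helper; the default path and the
# deb line are the ones given in the article.
add_cloud_archive() {
    list_file="${1:-/etc/apt/sources.list.d/cloud.list}"
    echo "deb http://ubuntu-cloud.archive.canonical.com/ubuntu precise-updates/folsom main" > "$list_file"
}

# Typical use as root on each node (not executed here):
#   apt-get install ubuntu-cloud-keyring
#   add_cloud_archive
#   apt-get update
```

Passing a different path as the first argument lets you inspect the generated entry before touching the real APT configuration.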

The Cloud Network

OpenStack Folsom includes Quantum as the central component for the network. This component helps virtualize networks. For it to fulfill this role, you need some basic understanding of how Quantum works and what conditions must exist on the individual nodes to support the Quantum principle.

In general, the following definition applies: Quantum gives you a collection of networks that supports communication between the nodes themselves and also with the virtual machines. The Quantum developers distinguish between four different network types (Figure 1).

The management network is the network that the physical servers in the OpenStack installation use to communicate with one another. This network handles, for example, internal requests to the Keystone service, which is responsible for authentication within the setup. In this example, the management network is 192.168.122.0/24, and all three nodes have a direct connection to this network via their eth0 network interfaces. Here, "Alice" has the IP address 192.168.122.111, "Bob" has 192.168.122.112, and "Charlie" is 192.168.122.113. Additionally, this example assumes that the default route to the outside world for all three machines also resides on this network, and that the default gateway in all cases is 192.168.122.1 (Figure 2).

On top of this is the data network, which is used by the virtual machines on the compute host (Bob) to talk to the network services on Charlie. Here, the data network is 192.168.133.0/24; Bob has an IP address of 192.168.133.112 on this network and Charlie has 192.168.133.113 – both hosts use their eth1 interfaces to connect to the network. The interfaces also act as bridges for the virtual machines (which will use IP addresses on the private network 10.5.5.0/24 to talk to one another).

Next is the external network: The virtual machines will later draw their public IP addresses from this network. In the absence of genuine public IPs, this example uses 192.168.144.0/25. Because in Quantum, public IP addresses are not directly assigned to the individual VMs (instead, they use the network node and its iptables DNAT rules for access to it), the host for Quantum (i.e., Charlie) needs an interface on this network. Quantum automatically assigns an IP, so Charlie only needs to have an eth2 interface for this. Later in the configuration, Quantum will learn that this is the interface it should use for the public network.

Finally, you have the API network: This network is not mandatory, but it does make the APIs of the OpenStack services available to the outside world via a public interface. The network can reside in the same segment as the external network (e.g., you might have all of the 192.168.144.0/24 network available; at the Quantum level, the public network is then defined as 192.168.144.0/25, and the 192.168.144.128/25 network is available as the API network). If you need the OpenStack APIs to be accessible from the outside, Alice must have an interface with a matching IP on this network.
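Splitting a /24 into two /25 halves is easy to get wrong by one address, so a little shell arithmetic can serve as a sanity check. The following is only a sketch; the helper names ip_to_int, int_to_ip, and network_of are hypothetical and not part of any OpenStack tool.

```shell
# Sketch: compute the network address for an IPv4 address and prefix length.
# ip_to_int, int_to_ip, and network_of are hypothetical helper names.

ip_to_int() {
    # Split the dotted quad into its four octets.
    IFS=. read -r o1 o2 o3 o4 <<EOF
$1
EOF
    echo $(( ($o1 << 24) | ($o2 << 16) | ($o3 << 8) | $o4 ))
}

int_to_ip() {
    echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

network_of() {
    # $1 = IPv4 address, $2 = prefix length (e.g., 25)
    addr=$(ip_to_int "$1")
    mask=$(( (0xFFFFFFFF << (32 - $2)) & 0xFFFFFFFF ))
    int_to_ip $(( $addr & $mask ))
}

# network_of 192.168.144.100 25   prints 192.168.144.0   (lower half)
# network_of 192.168.144.129 25   prints 192.168.144.128 (upper half)
```

Any address from .128 upward therefore belongs to the second /25, which is the half left over for the API network in this example.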

Enabling Asymmetric Routing

A very annoying default setting in Ubuntu 12.04 sometimes causes problems, especially in setups with OpenStack Quantum. Out of the box, Ubuntu sets the value of the rp_filter sysctl variable to 1. This means a reply packet for a network request can only enter the system on exactly the interface on which the original request left the system. However, in Quantum setups, it is quite possible for packets to leave via a different interface than the one on which the response arrives. It is therefore advisable to allow asymmetric routing across the board on Ubuntu. The following two entries in /etc/sysctl.conf take care of this:

net.ipv4.conf.all.rp_filter = 0
net.ipv4.conf.default.rp_filter = 0

Of course, you also need to enable packet forwarding:

net.ipv4.ip_forward=1

Then, reboot to ensure that the new configuration is active.
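If you prefer to script these changes, the sketch below sets a key in a sysctl-style file without leaving duplicate entries behind. The function name set_sysctl is an assumption for illustration. Note that sysctl -p reloads /etc/sysctl.conf on a running system, which can serve as an alternative to a reboot when you only want to apply these values.

```shell
# Sketch: set "key = value" in a sysctl-style file, replacing older entries.
# set_sysctl is a hypothetical helper, not a standard tool.
set_sysctl() {
    conf_file="$1"; key="$2"; value="$3"
    touch "$conf_file"
    # Keep every line except earlier assignments of this key
    # (grep exits non-zero if nothing is left, which is fine here).
    grep -v "^[[:space:]]*$key[[:space:]]*=" "$conf_file" > "$conf_file.tmp" || true
    echo "$key = $value" >> "$conf_file.tmp"
    # Write back in place so ownership and mode of the file are preserved.
    cat "$conf_file.tmp" > "$conf_file"
    rm -f "$conf_file.tmp"
}

# Typical use as root (not executed here):
#   set_sysctl /etc/sysctl.conf net.ipv4.conf.all.rp_filter 0
#   set_sysctl /etc/sysctl.conf net.ipv4.conf.default.rp_filter 0
#   set_sysctl /etc/sysctl.conf net.ipv4.ip_forward 1
#   sysctl -p
```

Because the helper removes earlier assignments of the same key first, running it repeatedly leaves exactly one entry per key.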

iptables and Masquerading

Finally, you need to look at the firewall configuration on the host side. The iptables rules should never prevent traffic on the individual interfaces. If, as in the example, you have a gateway for the external network that is not a separately controlled router from the provider but a local computer instead, you need to configure rules for DNAT and SNAT on this machine to match your setup.

NTP, RabbitMQ, and MySQL

The good news here is that NTP and RabbitMQ require no changes after the installation on Alice; both services work immediately after the install using the default values.

However, the situation is a little different for MySQL: The OpenStack services need their own databases in MySQL, and you have to create them manually. Listing 1 gives you the necessary commands. The example assumes that no password is set for the root user in MySQL. If your local setup is different, you need to add the -p parameter to each MySQL call so that the MySQL client prompts for the database password each time. Also, MySQL must be configured to listen on all interfaces, not only on the localhost address 127.0.0.1. To do this, change the value of bind-address to 0.0.0.0 in /etc/mysql/my.cnf.

Listing 1: Creating Databases

mysql -u root <<EOF
CREATE DATABASE nova;
GRANT ALL PRIVILEGES ON nova.* TO 'novadbadmin'@'%'
  IDENTIFIED BY 'dieD9Mie';
EOF
mysql -u root <<EOF
CREATE DATABASE glance;
GRANT ALL PRIVILEGES ON glance.* TO 'glancedbadmin'@'%'
  IDENTIFIED BY 'ohC3teiv';
EOF
mysql -u root <<EOF
CREATE DATABASE keystone;
GRANT ALL PRIVILEGES ON keystone.* TO 'keystonedbadmin'@'%'
  IDENTIFIED BY 'Ue0Ud7ra';
EOF
mysql -u root <<EOF
CREATE DATABASE quantum;
GRANT ALL PRIVILEGES ON quantum.* TO 'quantumdbadmin'@'%'
  IDENTIFIED BY 'wozohB8g';
EOF
mysql -u root <<EOF
CREATE DATABASE cinder;
GRANT ALL PRIVILEGES ON cinder.* TO 'cinderdbadmin'@'%'
  IDENTIFIED BY 'ceeShi4O';
EOF

After you have created the databases and changed the MySQL bind address appropriately, you can start with the actual OpenStack components.

OpenStack Keystone

Keystone is the OpenStack authentication component. It is the only service that does not require any other services. Thus, it makes sense to begin with the Keystone installation on Alice. Directly after installing the Keystone packages, it is a good idea to edit the Keystone configuration in /etc/keystone/keystone.conf in your preferred editor.

It is important to define an appropriate value as the admin token in the admin_token = line. The admin token is the master key for OpenStack: Anyone who knows its value can make changes in Keystone. It is therefore recommended to set the permissions for keystone.conf so that only root can read the file. In this example, I will be using secret as the admin token.
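For anything beyond a test setup, you would probably want a random token rather than secret. The sketch below generates one from /dev/urandom; the function name gen_token is hypothetical, and the chmod call in the comment is just one way to restrict read access as recommended above.

```shell
# Sketch: generate a random admin token for keystone.conf.
# gen_token is a hypothetical helper name.
gen_token() {
    # Read 10 random bytes and print them as 20 hex characters.
    od -An -tx1 -N10 /dev/urandom | tr -d ' \n'
}

# Typical use (not executed here):
#   token=$(gen_token)
#   echo "admin_token = $token"     # paste this value into keystone.conf
#   chmod 600 /etc/keystone/keystone.conf
```

The remainder of this article keeps using secret so that the commands and scripts match the example.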

Keystone also needs to know where to find its own MySQL database. This is handled by the SQL connection string, which is defined in the [sql] block of keystone.conf. In the default configuration, the file points to an SQLite database. In this example, MySQL resides on Alice, so you need to create an entry that points to the previously created MySQL database as follows:

[sql]
connection = mysql://keystonedbadmin:Ue0Ud7ra@192.168.122.111/keystone
idle_timeout = 200

Keystone also needs to know how to save its service definitions, so your keystone.conf should also contain the following entries:

[identity]
driver = keystone.identity.backends.sql.Identity

[catalog]
driver = keystone.catalog.backends.sql.Catalog

This step completes keystone.conf. After saving and closing the file, the next step is to create the tables that Keystone needs in its database with the custom tool: keystone-manage db_sync. When you are done, type service keystone restart to restart the service, which is then ready for use.

After the configuration, it makes sense to create a set of tenants and users. In real life, you would not do this manually; instead you would use pre-built scripts. A custom script matching this article can be found online [1]. It uses the secret key previously added to keystone.conf to set up a tenant named admin and a matching user account that also has secret as its password. The script also creates a "service" tenant containing users for all services; again secret is the password for all of these accounts. Simply download the script and run it on Alice at the command line.

Endpoints in Keystone

Keystone manages what is known as the Endpoint database. An endpoint in Keystone is the address of an API belonging to one of the OpenStack services. If an OpenStack service wants to know how to communicate directly with the API of another service, it retrieves the information from this list in Keystone. For admins, this means you have to create the list initially; another script handles this task [2]. After installing the script on disk, you can call it as shown in Listing 2.

Listing 2: Endpoints

./endpoints.sh \
  -m 192.168.122.111 \
  -u keystonedbadmin \
  -D keystone \
  -p Ue0Ud7ra \
  -K 192.168.122.111 \
  -R RegionOne \
  -E "http://192.168.122.111:35357/v2.0" \
  -S 192.168.122.113 \
  -T secret

The individual parameters are far less cryptic than it might seem. The -m option specifies the address on which MySQL can be accessed, and -u, -D, and -p supply the access credentials for MySQL (the user is keystonedbadmin, the database keystone, and the password Ue0Ud7ra). The -K parameter stipulates the host on which Keystone listens, and -R defines the OpenStack region for which these details apply. -E tells the script where to log in to Keystone to make these changes in the first place. The -S parameter supplies the address for the OpenStack Object Storage solution, Swift; it is not part of this how-to but might mean some additions to the setup later on. -T designates the admin token as specified in keystone.conf. A word of caution: The script is designed for the data in this example; if you use different IPs, you will need to change it accordingly. Once the endpoints have been set up, Keystone is ready for deployment in OpenStack.

Storing Credentials

Once you have enabled Keystone, you need to authenticate any further interaction with the service. Fortunately, all OpenStack command-line tools read environment variables, which makes it much easier to log in to Keystone. After defining these variables, you don't need to worry about manual authentication. It makes sense to create a file named .openstack-credentials in your home folder. In this example, it would look like Listing 3.

Listing 3: Credentials

OS_AUTH_URL="http://192.168.122.111:5000/v2.0/"
OS_PASSWORD="secret"
OS_TENANT_NAME="admin"
OS_USERNAME="admin"
OS_NO_CACHE=1

export OS_AUTH_URL OS_PASSWORD
export OS_TENANT_NAME OS_USERNAME
export OS_NO_CACHE

You can then type . ~/.openstack-credentials (or source ~/.openstack-credentials) to load this file into the current shell environment. After this step, OpenStack commands should work at the command line without annoying you with prompts.
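Before calling any client, it can help to verify that the variables really are present in the current shell. The following check is a minimal sketch; check_os_env is a hypothetical helper name, and the list of variables is taken from Listing 3.

```shell
# Sketch: report which of the Keystone login variables are missing.
# check_os_env is a hypothetical helper; it returns 0 only if all are set.
check_os_env() {
    missing=0
    for var in OS_AUTH_URL OS_USERNAME OS_PASSWORD OS_TENANT_NAME; do
        # Indirectly read the variable whose name is stored in $var.
        eval "value=\${$var:-}"
        if [ -z "$value" ]; then
            echo "missing: $var" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Typical use:
#   . ~/.openstack-credentials
#   check_os_env && keystone tenant-list
```

If a variable is reported as missing, the most likely cause is that the file was executed as a script instead of being sourced into the current shell.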

Glance Image Automaton

Clouds can't exist without operating system images: To help users launch virtual machines quickly, and without needing specialist knowledge, administrators need to provide matching images; otherwise, you can forget the Cloud. Glance handles this task in OpenStack. The service comprises two individual components: the API (glance-api) and the registry (glance-registry). The API provides an interface for all other OpenStack services, whereas the registry takes care of managing the Glance database.

You can start by editing the glance-api configuration file, which resides in /etc/glance/glance-api.conf. Open the file in your preferred editor and look for the [keystone_authtoken] section near the end. OpenStack admins will see this frequently: Each service needs to log in to Keystone first, before it can leverage Keystone to talk to the users. This section is also the correct place in glance-api.conf to define the required credentials. One advantage is that the configuration looks very similar for nearly all OpenStack services and is identical in some cases.

In the remainder of this article, you can assume the following: auth_host will always be 192.168.122.111, admin_tenant_name will always be service, and admin_password will always be secret. admin_user is always the name of the OpenStack service logging in to Keystone (i.e., glance in the present case, nova for OpenStack Nova, quantum for OpenStack Quantum, and so on). Some services will ask you for an auth_url – in the scope of this article, this is always http://192.168.122.111:5000/v2.0/. If your local setup uses different IPs, you need to use them for auth_host and auth_url.
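Because only admin_user changes from service to service, the block can be generated rather than typed. The sketch below prints such a section for a given service name; authtoken_block is a hypothetical helper, and the auth_port and auth_protocol values are assumptions (35357 is the port this article uses for Quantum later on). The section header also differs between files, for example [filter:authtoken] in the api-paste.ini files.

```shell
# Sketch: print the Keystone credentials section for one service.
# authtoken_block is a hypothetical helper; auth_port and auth_protocol
# are assumed values, the rest follows the conventions of this article.
authtoken_block() {
    service="$1"
    cat <<EOF
[keystone_authtoken]
auth_host = 192.168.122.111
auth_port = 35357
auth_protocol = http
admin_tenant_name = service
admin_user = $service
admin_password = secret
EOF
}

# Typical use:
#   authtoken_block glance
#   authtoken_block nova
```

Compare the output with the section already present in the respective configuration file rather than appending it blindly, because a duplicate section header could lead to confusing results.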

As in keystone.conf previously, the database connection in glance-api.conf is defined in a line that starts with sql_connection. After the installation, Glance is configured to use an SQLite database; in the present example, the SQL connection is:

sql_connection = mysql://glancedbadmin:ohC3teiv@192.168.122.111/glance

Farther down in the file, you will find a section named [paste_deploy]. Glance needs to know which authentication method to use and where to find the setting details. For glance-api.conf, the correct answer for config_file = in this section is thus /etc/glance/glance-api-paste.ini, and a value of keystone will do the trick for flavor=. After you make these changes, the file is now ready for use.

You will need to edit /etc/glance/glance-registry.conf in the same way: The values for the various auth_ variables are the same as for glance-api, and the database connection and the text for flavor= are identical. You only need a different entry for config_file; the entry for glance-registry.conf is /etc/glance/glance-registry-paste.ini. This completes the configuration of the Glance components. Now, it's high time to create the tables in the Glance databases. glance-manage will help you do this:

glance-manage version_control 0
glance-manage db_sync

Next, restart both Glance services by issuing

service glance-api restart && service glance-registry restart

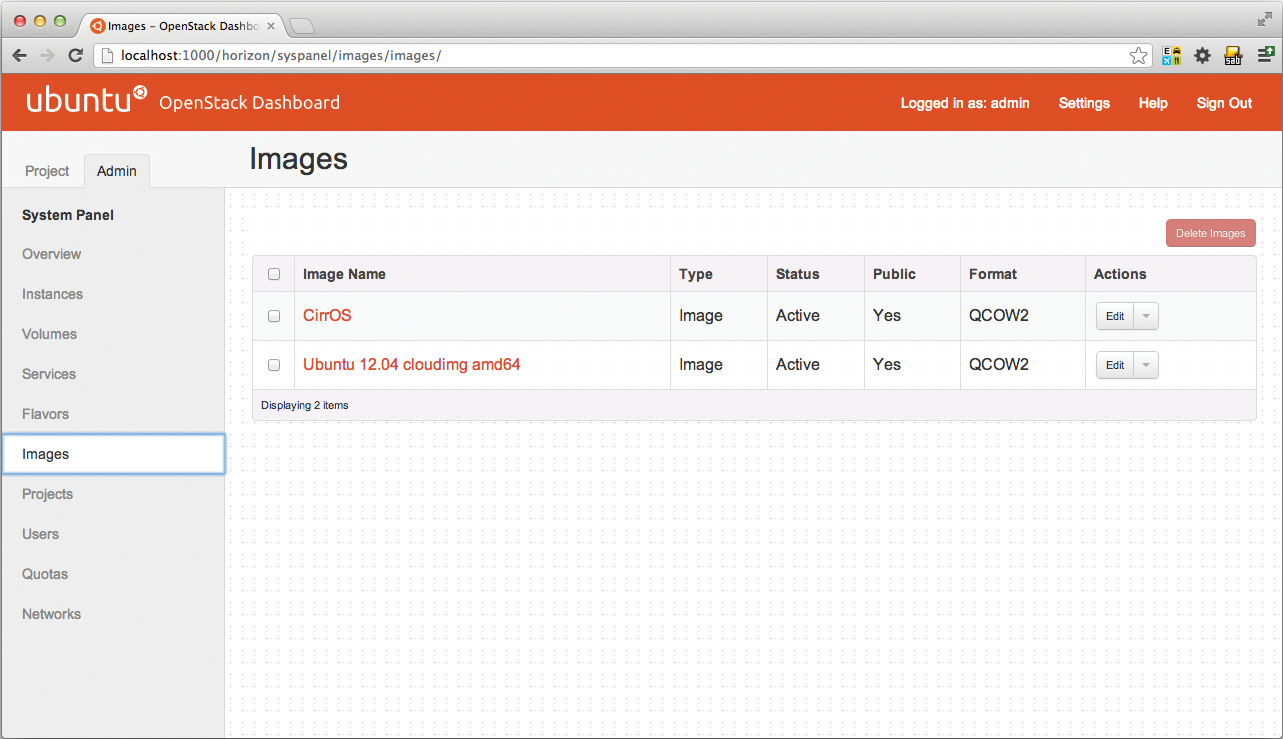

The image store is now ready for an initial test image. Fortunately, the Glance client lets you download images directly off the web. To add an Ubuntu 12.04 Cloud image to the image store, just follow the commands in Listing 4.

Listing 4: Integrating an Image

glance image-create \
  --copy-from http://uec-images.ubuntu.com/releases/12.04/release/ubuntu-12.04-server-cloudimg-amd64-disk1.img \
  --name="Ubuntu 12.04 cloudimg amd64" \
  --is-public true \
  --container-format ovf \
  --disk-format qcow2

After you've completed this step, glance image-list should show you the new image; once a value of ACTIVE appears in the Status field, the image is ready for use.
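Because the download runs in the background, you might want to poll instead of retyping the command. The following generic retry loop is a sketch; wait_until is a hypothetical helper, and the grep pattern in the usage comment assumes that the status is printed as the word "active" in the client output.

```shell
# Sketch: retry a command until it succeeds or the attempts run out.
# wait_until is a hypothetical helper: wait_until ATTEMPTS DELAY COMMAND...
wait_until() {
    attempts="$1"; delay="$2"; shift 2
    while [ "$attempts" -gt 0 ]; do
        if "$@"; then
            return 0
        fi
        attempts=$((attempts - 1))
        # Only sleep if another attempt follows.
        [ "$attempts" -gt 0 ] && sleep "$delay"
    done
    return 1
}

# Typical use (not executed here): check every 10 seconds, up to 30 times.
#   wait_until 30 10 sh -c 'glance image-list | grep -qi active'
```

The helper returns a non-zero exit status if the condition never becomes true, so it can also be used in scripts that should abort when the image import fails.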

Quantum: The Network Hydra

The Quantum network service confronts administrators with what is easily the most complex configuration task. For it to work, you need to set up services on all three hosts, starting with Alice, where you need to run the Quantum Server itself and a plugin. This example uses the OpenVSwitch plugin; thus, you need to configure the Quantum Server itself and the OpenVSwitch plugin (Figure 3).

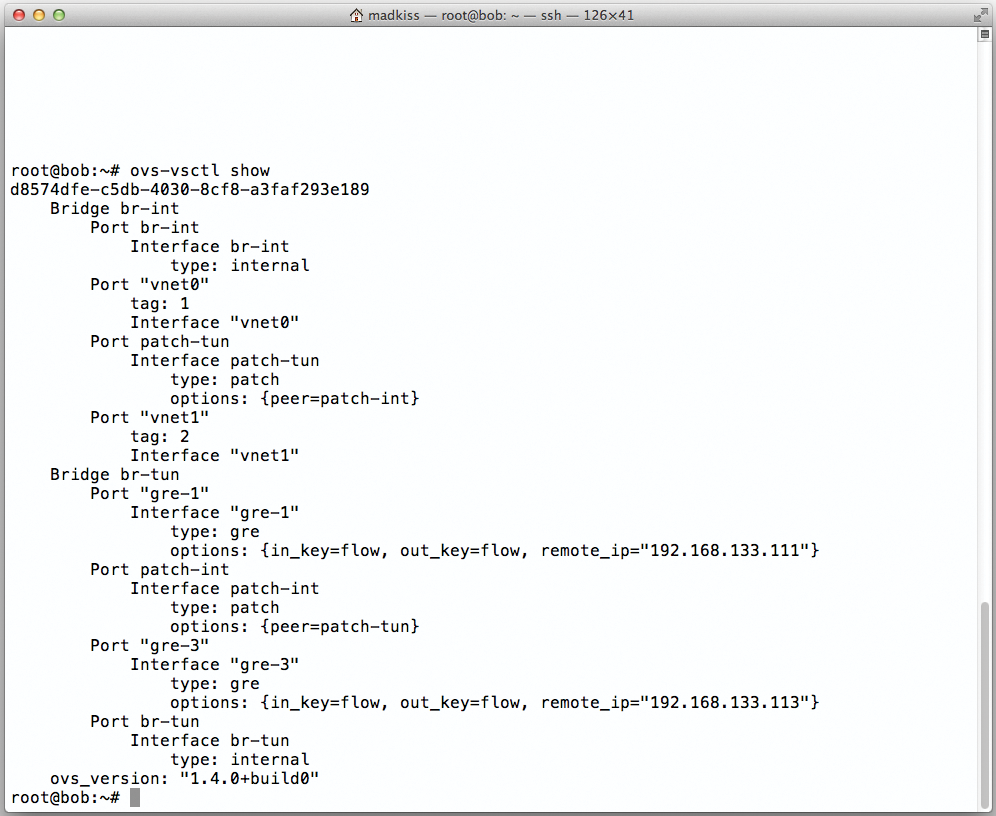

The ovs-vsctl show command shows you what changes Quantum makes to the OpenVSwitch configuration.

After the installation, start by looking at /etc/quantum/api-paste.ini. The [filter:authtoken] section contains entries that will be familiar from Glance; you need to replace them with values appropriate to your setup. The auth_port must be 35357 in this file.

This step is followed by the OVS plugin configuration: It uses a file named /etc/quantum/plugins/openvswitch/ovs_quantum_plugin.ini for its configuration. In the file, look for the line starting with sql_connection, which tells Quantum how to access its own MySQL database. The correct entry here is:

sql_connection = mysql://quantumdbadmin:wozohB8g@192.168.122.111/quantum

For simplicity's sake, I would save this file now and copy it to Bob, although it will need some changes for the hosts Bob and Charlie later on. You can also copy /etc/quantum/api-paste.ini as-is to the two nodes.

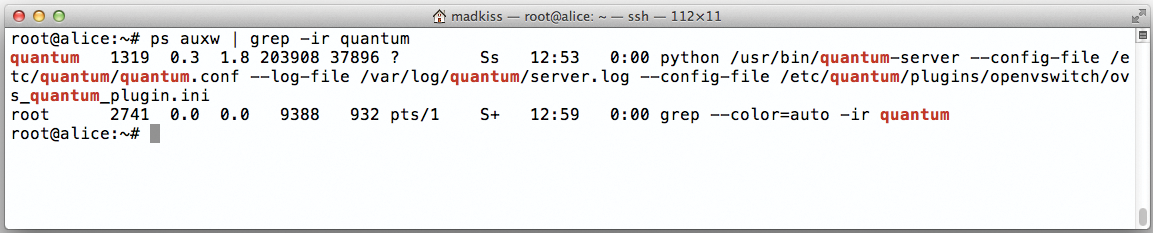

Next, you can launch the Quantum Server (Figure 4), including the OpenVSwitch plugin on Alice; service quantum-server start does the trick.

Bob is not running a Quantum server. However, because Bob is the compute node, it definitely needs the quantum-plugin-openvswitch-agent, which is the agent for the OpenVSwitch plugin. Bob will use the agent later to receive relevant network information from Alice (quantum-server) and Charlie (DHCP and L3 agents) to configure its own network correctly. The package for this agent should already be in place.

The next step is thus the agent configuration, which is in the familiar file /etc/quantum/plugins/openvswitch/ovs_quantum_plugin.ini. Look for the # Example: bridge_mappings = physnet1:br-eth1 line and add the following lines after it to make sure the agent works correctly on Bob:

tenant_network_type = gre
tunnel_id_ranges = 1:1000
integration_bridge = br-int
tunnel_bridge = br-tun
local_ip = 192.168.133.112
enable_tunneling = True

These lines tell the OpenVSwitch agent to open a tunnel automatically between Bob and the network node Charlie; the hosts can then use the tunnel to exchange information.

After completing the changes to ovs_quantum_plugin.ini, copy the file from Bob to Charlie; on Charlie, you will need to replace the IP address 192.168.133.112 with 192.168.133.113.
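The address swap for Charlie can be sketched with sed. The function name make_charlie_config is hypothetical; the sketch rewrites only the local_ip line and leaves the source file untouched, so you can generate Charlie's version on Bob and transfer it afterward.

```shell
# Sketch: derive Charlie's plugin configuration from Bob's.
# make_charlie_config is a hypothetical helper: SOURCE_FILE DEST_FILE
make_charlie_config() {
    src="$1"; dest="$2"
    # Replace the whole local_ip assignment with Charlie's data network address.
    sed 's/^local_ip[[:space:]]*=.*/local_ip = 192.168.133.113/' "$src" > "$dest"
}

# Typical use on Bob (not executed here):
#   make_charlie_config /etc/quantum/plugins/openvswitch/ovs_quantum_plugin.ini /tmp/ovs_charlie.ini
#   scp /tmp/ovs_charlie.ini charlie:/etc/quantum/plugins/openvswitch/ovs_quantum_plugin.ini
```

Matching on the key name rather than on the old address keeps the substitution from touching any other line that happens to contain the same IP.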

On both hosts – Bob and Charlie – additionally remove the hash sign from the # rabbit_host line in /etc/quantum/quantum.conf and add a value of 192.168.122.111. This is the only way of telling the agents on Bob and Charlie how to reach the RabbitMQ Server on Alice.

Finally, Charlie needs some specific changes because it is running the quantum-l3-agent and the quantum-dhcp-agent. These two services will later provide DHCP addresses to the virtual machines, while using iptables to allow access to the virtual machines via public IP addresses (e.g., 192.168.144.0/25). The good news is that the DHCP agent does not need any changes to its configuration; however, this is not true of the L3 agent. Its configuration file is /etc/quantum/l3_agent.ini.

Creating External Networks

First, you need to add the values for the auth_ variables to the configuration in the normal way. Farther down in the file, you will also find an entry for # metadata_ip =; remove the hash sign and, for this example, add a value of 192.168.122.111. (I will return to the metadata server later on.)

The configuration file needs some more modifications, which you can't actually make right now. To do so, you would need the IDs for the external router and the external network, and they will not exist until you have created the networks. This then takes you to the next step, which is creating the networks in Quantum. You will be working on Alice for this process.

Because creating the networks also involves a number of commands, I have again created a script [3]. It creates the networks required for the present example: a "private" network, which is used for communications between the virtual machines, and the pseudo-public network, 192.168.144.0/25, on which the virtual machines will have dynamic IP addresses ("floating IPs") later on.

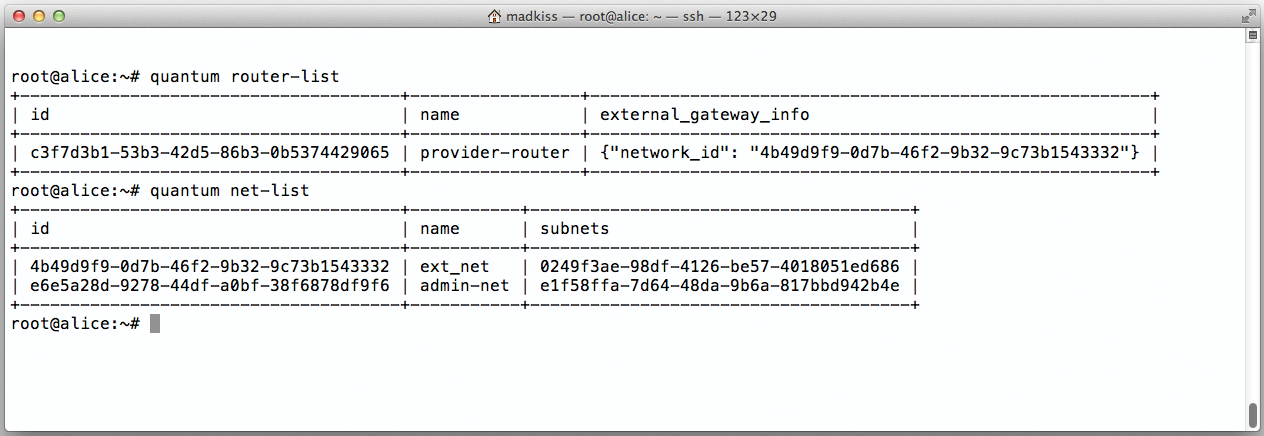

After downloading and running the script, you need to discover Quantum's internal router ID for the floating network and the ID of the floating network itself. To display the first of these values, you can type quantum router-list. The value for ID in the line with the provider-router is what you are looking for. You need to add this value to the /etc/quantum/l3_agent.ini file that you already edited on Charlie, following router_id = .

The floating network ID can be queried using quantum net-list; the value of interest is in the ID field of the line for the network named ext_net. Add this value to /etc/quantum/l3_agent.ini following gateway_external_network_id = . You also need to uncomment the two entries you are changing so that the Quantum agents actually see them.
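Copying IDs by hand is error prone, so you might want to extract them from the client output. The sketch below assumes that the quantum client prints an ASCII table whose first column is the ID and whose second column is the name; table_id_for is a hypothetical helper that reads such a table on standard input.

```shell
# Sketch: print the ID of the table row whose name column matches $1.
# table_id_for is a hypothetical helper; it assumes rows of the form
#   | <id> | <name> | ...
table_id_for() {
    name="$1"
    awk -F'|' -v name="$name" '
        {
            id = $2; n = $3
            # Strip the padding around both cells.
            gsub(/^[ \t]+|[ \t]+$/, "", id)
            gsub(/^[ \t]+|[ \t]+$/, "", n)
            if (n == name) { print id; exit }
        }'
}

# Typical use on Alice (not executed here):
#   quantum router-list | table_id_for provider-router
#   quantum net-list | table_id_for ext_net
```

If the column order in your client version differs, the field numbers in the awk program need to be adjusted accordingly.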

This completes the Quantum configuration files, and it's nearly time to start up Quantum. You just need the internal bridges on Bob and Charlie that OpenVSwitch will use to connect the Quantum interfaces to the local network configuration. Working on Bob and Charlie, type ovs-vsctl add-br br-int to create a bridge for internal communication between the virtual machines. Charlie additionally needs a bridge to the outside world: ovs-vsctl add-br br-ext and ovs-vsctl add-port br-ext eth2 take care of the required configuration.

At the end of this process, you need to restart all the agents on Bob and Charlie: restart quantum-plugin-openvswitch-agent is required on Bob and Charlie. Additionally, you need to run restart quantum-l3-agent and restart quantum-dhcp-agent on Charlie to tell the agents to reload their configurations.

Block Storage with Cinder

Compared with configuring Quantum, configuring Cinder is a piece of cake. This component was also around in the Essex version of OpenStack, where it was still called nova-volume and was part of the Computing component. It now has a life of its own. For Cinder to work, an LVM volume group by the name of cinder-volumes must exist on the host on which Cinder will be running. Cinder typically resides on the cloud controller, and in this example, again, the program runs on Alice. Cinder doesn't really mind which storage devices are part of the LVM volume group – the only important thing is that Cinder can create volumes in this group itself. Alice has a volume group named cinder-volumes in this example.

After installing the Cinder services, you come to the most important part: The program needs an sql_connection entry in /etc/cinder/cinder.conf pointing the way to the database. The entry needed for this example is:

sql_connection = mysql://cinderdbadmin:ceeShi4O@192.168.122.111:3306/cinder

This is followed by /etc/cinder/api-paste.ini – the required changes here follow the pattern for the changes in api-paste.ini in the other programs. The service_ entries use the same values as their auth_ counterparts. The admin_user is cinder.

Cinder also needs tables in its MySQL database, and the cinder-manage db sync command creates them. Next, you can restart the Cinder services:

for i in api scheduler volume; do restart cinder-"$i"; done

Finally, you need a workaround for a pesky bug in the tgt iSCSI target, which otherwise prevents Cinder from working properly. The workaround is to replace the existing entry in /etc/tgt/targets.conf with include /etc/tgt/conf.d/cinder_tgt.conf. After this step, Cinder is ready for use; the cinder list command should output an empty list because you have not configured any volumes yet.

Nova – The Computing Component

Thus far, you have prepared a colorful mix of OpenStack services for use, but the most important one is still missing: Nova. Nova is the computing component; that is, it starts and stops the virtual machines on the hosts in the cloud. To get Nova up to speed, you need to configure services on both Alice and Bob in this example. Charlie, which acts as the network node, does not need Nova (Figure 5).

The good news is that the Nova configuration, /etc/nova/nova.conf, can be identical on Alice and Bob; the same thing applies to the API paste file in Nova, which is called /etc/nova/api-paste.ini. As the compute node, Bob only needs a minor change to the Qemu configuration for Libvirt in order to start the virtual machines. I will return to that topic presently.

I'll start with Alice. The /etc/nova/api-paste.ini file contains the Keystone configuration for the service with a [filter:authtoken] entry. The values to enter here are equivalent to those in the api-paste.ini files for the other services; the value for admin_user is nova. Additionally, the file has various entries with volume in their names, such as [composite:osapi_volume]. Remove all the entries containing volume from the configuration because, otherwise, nova-api and cinder-api might trip over one another. After making these changes, you can copy api-paste.ini to the same location on Bob.

nova.conf for OpenStack Compute

Now you can move on to the compute component configuration in the /etc/nova/nova.conf file. I have published a generic example of the file to match this article [4]; explaining every single entry in the file is well beyond the scope of this article. For an overview of the possible parameters for nova.conf, visit the OpenStack website [5]. The sample configuration should work unchanged in virtually any OpenStack environment, although you will need to change the IP addresses, if your local setup differs from the setup in this article. Both Alice and Bob need the file in /etc/nova/nova.conf – once it is in place, you can proceed to create the Nova tables in MySQL on Alice with:

nova-manage db sync

This step completes the Nova configuration, but you still need to make some changes to the Qemu configuration for Libvirt on Bob, and in the Libvirt configuration itself. The Qemu configuration for Libvirt resides in /etc/libvirt/qemu.conf. Add the lines shown in Listing 5 to the end of the file.

Listing 5: Qemu Configuration

cgroup_device_acl = [
    "/dev/null", "/dev/full", "/dev/zero",
    "/dev/random", "/dev/urandom",
    "/dev/ptmx", "/dev/kvm", "/dev/kqemu",
    "/dev/rtc", "/dev/hpet", "/dev/net/tun",
]

The Libvirt configuration itself also needs a change; add the following lines at the end of /etc/libvirt/libvirtd.conf:

listen_tls = 0
listen_tcp = 1
auth_tcp = "none"

These entries make sure that Libvirt opens a TCP/IP socket to support functions such as live migration later on. For this setup to really work, you need to replace the libvirtd_opts="-d" in /etc/default/libvirt-bin with libvirtd_opts="-d -l".

Then, restart all the components involved in the changes; on Alice, you can type the following to do this:

for i in nova-api-metadata nova-api-os-compute nova-api-ec2 nova-objectstore nova-scheduler nova-novncproxy nova-consoleauth nova-cert; do restart "$i"; done

On Bob, the command is

for i in libvirt-bin nova-compute; do restart $i; done

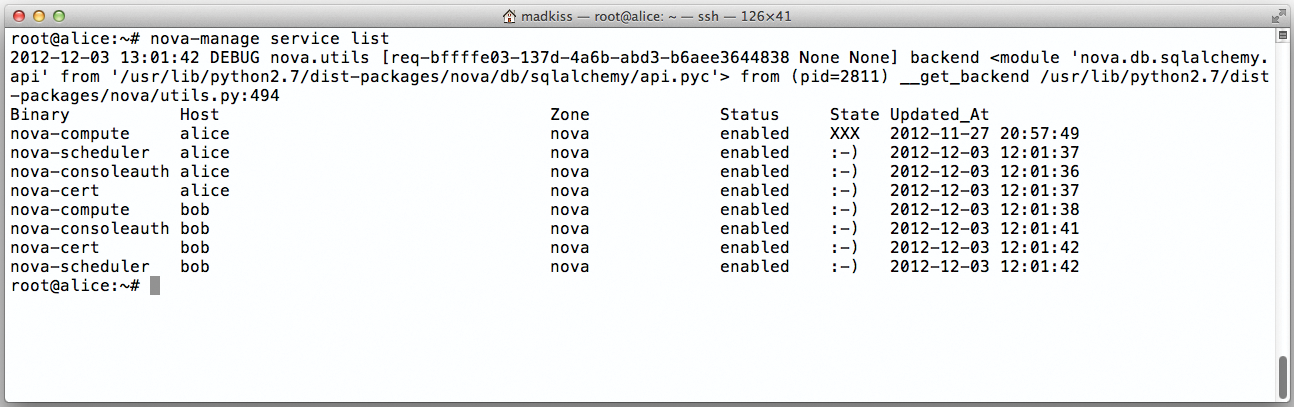

Next, typing nova-manage service list should list the Nova services on Alice and Bob. The status for each service should be :-).

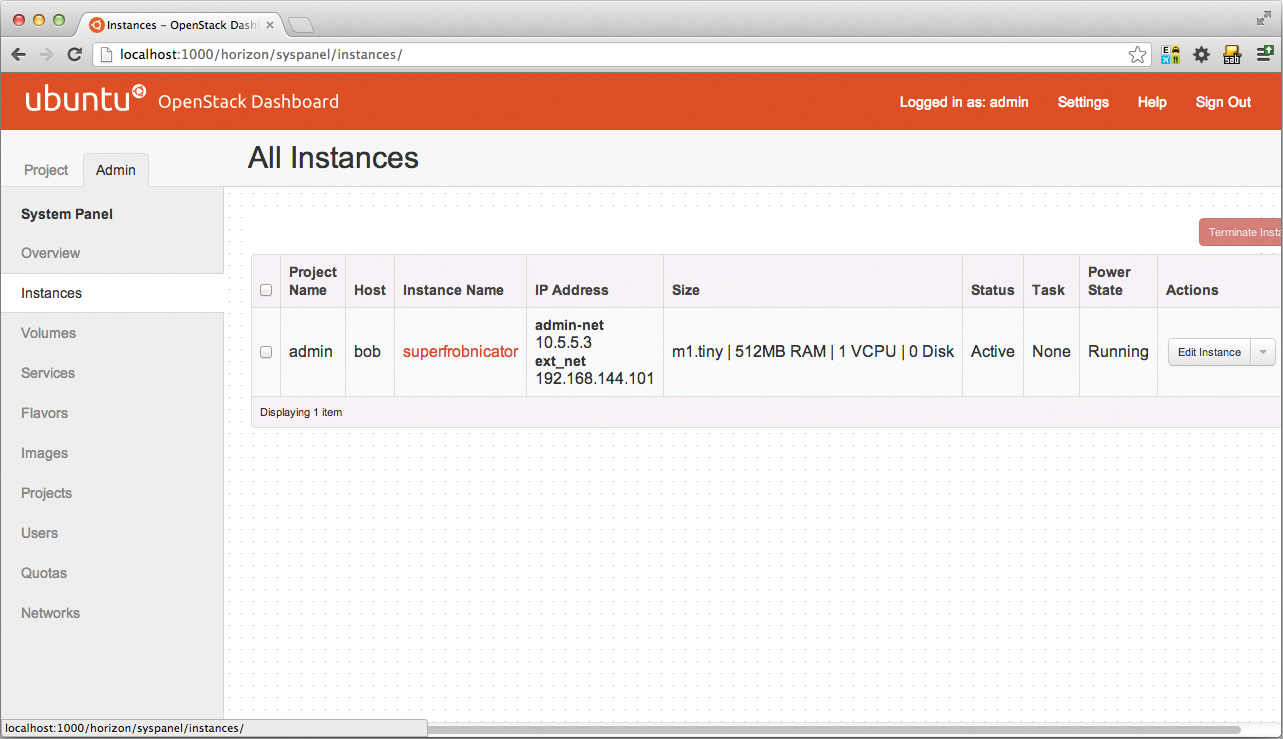

The OpenStack Dashboard

Your cloud is basically ready for service; you can start virtual machines at the console, but to round off the setup, you still need OpenStack Dashboard (Figure 6), which lets end users create virtual machines through a self-service portal. After installing the required packages on Alice, take a look at the /etc/openstack-dashboard/local_settings.py file. A couple of entries are needed at the end of this file for the dashboard to work correctly:

OPENSTACK_HOST = '192.168.122.111'
QUANTUM_ENABLED = True
SWIFT_ENABLED = True

Next, restart the Apache2 web server by typing restart apache2 so that the dashboard reloads its configuration. Directly after doing so, the web interface is available on http://192.168.122.111/horizon (Figure 7). You can log in as admin with a password of secret.

The Thing About Metadata Servers

Virtual machines that are created from special images (that is, from images officially prepared for cloud environments) all have one thing in common: During the boot process, they send HTTP requests to discover information about themselves from the cloud metadata server. This approach was first used in Amazon's EC2 environment; it ensures that the virtual machine knows its own hostname and has a couple of parameters configured when the system starts (e.g., the settings for the SSH server).

The Ubuntu UEC images are a good example of the use of this feature: A machine created from an Ubuntu image for UEC environments runs cloud-init when it starts up. The approach is always the same: An HTTP request for the URL http://169.254.169.254:80 queries the details from the cloud controller. For this to work, you need a matching iptables rule that uses DNAT to forward requests for this IP address to the correct cloud controller.

The correct controller in this example is nova-api-metadata, which listens on port 8775 on Alice. The good news is that the L3 agent, for which you set metadata_ip earlier, automatically configures the DNAT rule. The bad news is that you need to configure a route for the return channel from the cloud controller (that is, Alice) to the virtual machines. The route uses the private VM network as its destination (e.g., 10.5.5.0/24) and, as its gateway, the IP address that acts as the gateway for the virtual machines on the network host. This value will vary depending on your configuration.

To discover the address, type quantum router-list to discover the ID of the router used by the external network. Once you have this ID, typing:

quantum port-list -- --device_id ID --device_owner network:router_gateway

will give you the gateway – in this example, it is 192.168.144.100. To set the correct route on Alice, you would then need to type:

ip route add 10.5.5.0/24 via 192.168.144.100

After this, access to the metadata server will work. The setup basically routes the packets around the cloud to the cloud controller; you can assume that this fairly convoluted process will be replaced by a new procedure in a future version of OpenStack.

Future

The OpenStack installation I looked at in this article gives you a basic setup that builds a cloud from three nodes. The third part of this series will look at topics such as high availability for individual services and how to assign "official" IP addresses to the virtual machines. It will also explain how to extend the basic setup to provide more computing nodes. Additionally, the next article will look at some of the options that Quantum offers as a network service, which were beyond the scope of this part of the series.