Unicode migration with an Oracle database

Out of Babylon

Oracle offers several possibilities for migrating to Unicode. However, some of them are omitted (i.e., the "Oracle Database Migration Utility for Unicode," or DMU for short, which Oracle has offered since 2011) in a production scenario, because enterprise requirements do not envisage the necessary downtime or because the system requirements for the product (release and patch status) are not met. In contrast, the procedure presented here migrates very large databases in a very short time.

Why Unicode?

Every database is created with a certain code page. En route from the application to the database, the characters in the data are translated: The application code page is mapped to that of the database. However, this is only the case if you have character data types (CHAR, VARCHAR2, CLOB, LONG, NCHAR, NVARCHAR2, NCLOB), because you do not want to convert binary data, of course.

Conversion takes place automatically in transit (via Oracle Net); for example, a Euro sign typed on a Windows machine (0x80 in WIN-1252) is entered in the database as 0xA4 – if you create the database with the ISO-8859P15 character set. However, if the database was created with a Unicode character set, then the Euro sign is stored as a three-byte sequence (0xE282AC) and therefore requires three times as much space as before.

In the past, most databases in Europe were created with a one-byte character set (i.e., ISO-8859 or MSWIN-1252).

In the course of globalization, companies now increasingly need global databases. Oracle has thus recommended for some time that enterprises use Unicode as the default character set. Many modern applications (e.g., Java applications) also use Unicode by default. The same applies to many of today's operating systems, such as Microsoft Windows 7 or Linux. However, when you're converting an Oracle database to Unicode, several difficulties can occur. I will discuss some of these issues and their solutions in this article.

Column Overrun

When designing tables, the column width must be defined as a certain number of characters, not bytes. Fields suitable for this purpose can be created with the CHAR data type:

CREATE TABLE status ( statusid CHAR(1),description VARCHAR2(50));

However, does the 1 here mean a length of one byte or one character? The Oracle database uses the parameter NLS_LENGTH_SEMANTICS for this definition, and it has to be set appropriately to char or byte. Because these semantics only became available in Oracle 9i, and previously only byte could be used, the length semantics are still set to byte for many older applications (or for applications that have been migrated across several Oracle releases.) In a Unicode migration, this can lead to a situation in which a record cannot be inserted, for example, because it contains a non-standard character, with the result:

ORA-02374: conversion error loading table "DEMO"."STATUS" ORA-12899: value too large for column STATUSID (actual: 2, maximum: 1)

In this case, the status column contains "Ü" as the ID, but this is a two-byte character in Unicode.

In new applications, you would thus always explicitly state the length semantics. Old applications need to be modified in the course of the migration; you would make this change

CREATE TABLE status ( statusid CHAR(1 CHAR),description VARCHAR2(50 CHAR));

in the previous example.

Maximum Data Type Length

The Oracle documentation often states that the CHAR data type has a length of 2,000 characters and VARCHAR2 a length of 4,000 characters. But this is not true, because the maximum length is expected in bytes, that is, a VARCHAR2 field can be a maximum of 4,000 bytes long.

CREATE TABLE status ( statusid CHAR(1 CHAR),description VARCHAR2(4000 CHAR));

In this case, therefore, the length semantics are wrong, because the description field can actually only be 4,000 bytes. (Despite this, Oracle still allows what could be a misleading definition.)

If this field is filled up to the last character and contains non-standard characters, which are longer than one byte in Unicode, then the conversion will fail.

Instead, you need to convert the data type to LONG, or preferably CLOB. The table definition would then look like this:

CREATE TABLE status ( statusid CHAR(1 CHAR),description CLOB)LOB (description) STORE AS (ENABLE STORAGE IN ROW);

for the previous example.

Standard Types Instead of National Characters

If the database only occasionally needs to store special characters, such as Arabic or Kanji, you can also use what are known as national language data types (NCHAR, NVARCHAR2, or NCLOB).

Converting the database to Unicode removes this need and the "N" data types can be converted back to "normal" data types. To do this, you simply modify the CREATE command for the corresponding table.

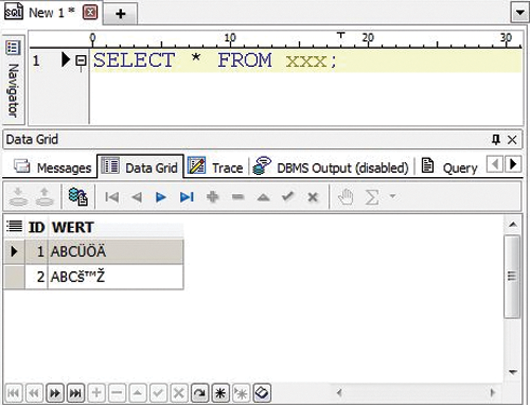

Corrupters

Corrupters can be a difficult point to master because even if the database did everything correctly, the data are still a mess. This can happen when two systems transfer data without converting. For example, say you create the database with the WE8MSWIN1252 character set, and a client sets the variable NLS_LANG=GERMAN_GERMANY.WE8MSWIN1252 (i.e., on the command line in DOS); the database then assumes that no transcoding is necessary because the two character sets are the same. The DOS client thinks that the characters in the query are okay, because no conversion occurs in the return path either. Now, when you use a Windows client for a query (e.g., Dell Toad for Oracle), you suddenly discover that you have just saved a bunch of hieroglyphics (Figure 1).

This error can only be fixed by unloading the data via a corresponding interface and reloading with the "right" character set. This makes the migration considerably more difficult. Fortunately, these errors are rather rare today because they are quickly identifiable in graphical tools.

Migration

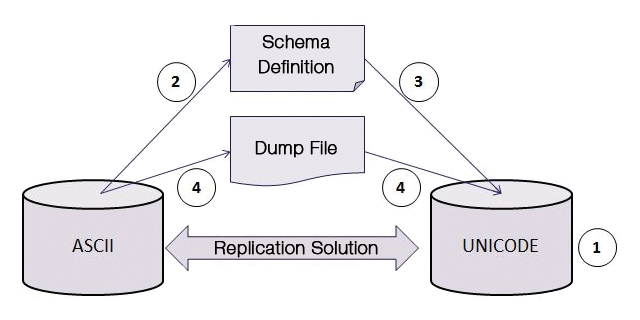

The actual migration takes place in the following four steps. Figure 2 represents the sequence schematically.

Create a new database. The new database is set up to reflect enterprise, and Oracle, specifications with a new character set (typically AL32UTF8). One pleasant side effect is that the database is completely reorganized, which mean that superfluous data (added to the database, for example, by installing sample schemas or older applications) can now be eliminated.

Export the schema definitions as an ASCII file. The next step is to export the schema definitions without the length semantics as an ASCII file (Figure 3). This gives you the option of cleaning up.

Oracle offers a package named dbms_metadata for this task. I have had very good experiences with Toad for Oracle. The tool uses a graphical interface to facilitate the process of creating the complete definition, and multiple schemas, as an ASCII file and copying it to the editor. You can then use find and replace, if needed, to make changes (for example converting NVARCHAR2 to VARCHAR2).

Import the schema definitions. Next, run the script you just created with the schema definition against the new database. Before doing so, set the NLS_LENGTH_SEMANTICS parameter for the entire session:

SQL> ALTER SESSION SET NLS_LENGTH_SEMANTICS=CHAR;

This means that all character columns (i.e., CHAR, VARCHAR2, etc.) that do not use explicit length semantics use CHAR as their length semantics. And, non-standard characters will once again fit in the fields.

Import records. The last step is to import the records – that is, to transfer the table content conveniently via Export/Import or Data Pump. When you import the data, be sure to ignore the commands that would create the tables, because they already exist. Also, you must turn off foreign key constraints because it is impossible to determine the data insertion sequence. Otherwise, you might discover that detailed records (e.g., the address of a person) cannot be inserted because the master data for the person (i.e., first name, last name, etc.) is not yet available. The only thing you are interested in is that all data are inserted at the end of the import process; the address details should then be related to the person again.

Required Downtime

Of course, you can't ignore the downtime required for a migration of this kind. In the case of critical databases, it is thus advisable to use replication software that logs the changes in the old database and replicates them to the new database after it has been set up.

In 2010, a major German automobile manufacturer used Shareplex for Oracle by Dell (formerly Quest) to migrate an approximately 10TB database with less than 2 hours of downtime. And in 2011, it migrated a smaller but highly critical database without any downtime at all from an ISO character set to Unicode. In both cases, the company would have been able to fail back to the older database for several weeks after the conversion.