OpenStack workshop, part 3: Gimmicks, extensions, and high availability

Wide Load

The second part of this workshop [1] was, admittedly, not much fun – before an OpenStack installation sees the light of day, the admin has about a thousand things to take care of. However, the reward for all this effort is a cloud platform that seamlessly scales horizontally and uses state-of-the-art technology, such as Open vSwitch, to circumvent many technical problems that legacy virtualization environments face.

Once the basic installation is in place, it's time to tweak: How can cloud providers add value to their software? How can you future-proof the installation? How can you best mitigate an infrastructure failure in OpenStack? This third episode of the OpenStack workshop delves into the depths of these questions, beginning with some repairs that might well see some cloud customers getting very excited.

Quantum, Horizon, and Floating IPs

An elementary function in OpenStack is floating IPs, which let you assign public IP addresses to virtual machines as needed so they're accessible from the Internet. Public IPs can be assigned dynamically to the existing instances. The origin of this function lies with the idea that not every VM will be accessible from the Internet: On one hand, you might not have enough IPv4 addresses in place; on the other hand, a database usually doesn't need a public IP. In contrast, web servers (or at least their upstream load balancers) really should be accessible on the web.

In the previous version of OpenStack, "Essex," the world was more or less still in order in this respect: Nova Network took care of the network itself as a component of the OpenStack computing environment, and Quantum was nowhere in sight. Assigning floating IPs was an internal affair in Nova, and the Horizon web interface, for example, is expressly designed for the job: If a customer wants to assign a floating IP in the dashboard of a VM, Horizon sends the command to Nova and relies on the correct actions taking place.

Problems with Quantum

In "Folsom," this arrangement suffered somewhat with Quantum. Now, not Nova, but Quantum, is responsible for floating IP addresses, as can be seen in several places: In the original version of Folsom (i.e., 2012.2), assigning floating IPs only worked at the command line and with a quantum command. As an example, Listing 1 shows the individual steps required.

Listing 1: Assigning Floating IPs with Quantum

root@alice:~# quantum net-list

+--------------------------------------+-----------+------------------

| id | name | subnets |

+--------------------------------------+-----------+------------------

| 42a99eb6-3de7-4ffb-b34e-6ce622dbdefc | admin-net|928598b5-43c0-... |

| da000609-a85f-4d46-8abd-ff649c4cb173 | ext_net |0f5c7b17-65c10-... |

+--------------------------------------+-----------+------------------

root@alice:~# quantum floatingip-create da000609-a85f-4d46-8abd-...

Created a new floatingip:

+---------------------+--------------------------------------+

| Field | Value |

+---------------------+--------------------------------------+

| fixed_ip_address | |

| floating_ip_address | 192.168.144.101 |

| floating_network_id | da000609-a85f-4d46-8abd-ff649c4cb173 |

| id | 52706c89-2906-420c-a719-514c267cfbd4 |

| port_id | |

| router_id | |

| tenant_id | dc61d2b9e6c44e6a9a6615fb5c045693 |

root@alice:~# quantum port-list

+--------------------------------------+------+-------------------+

| 0c478fa6-c12c-... | | fa:16:3e:29:33:18 |

+-----------------------------------------------------------------+

| fixed_ips |

+-----------------------------------------------------------------+

{"subnet_id": "0f5c7b17-65c1-", "ip_address": "192.168.144.101"} |

+-----------------------------------------------------------------+

...

root@alice:~# quantum floatingip-associate 52706c89-2906-420c-a719-514c267cfbd4 c1db7d5e-c552-4a80-82e4-3da94e83cbe8

Associated floatingip 52706c89-2906-420c-a719-514c267cfbd4

If this looks cryptic to you, that's because it is. To begin, you need to discover the ID of your external network (in this example, ext_net) before creating a floating IP on this network with quantum floatingip-create. Next, you need to know the port on the virtual Quantum switch to which the VM you are assigning the floating IP is connected. In this example, it is the VM with IP address 10.5.5.3. Finally, the VM is assigned its IP. Because Quantum uses UUIDs throughout, the whole process is genuinely intuitive.
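The steps from Listing 1 can be wrapped in a few shell helpers. The following is only a minimal sketch: the function names are hypothetical, and it assumes that the quantum client prints the ASCII tables shown in Listing 1, with the ID in the first column and the fixed IP as JSON in the port list.

```shell
#!/bin/sh
# Sketch only: helper names are hypothetical; the table layout is assumed
# to match the quantum output shown in Listing 1.

# Read `quantum net-list` output on stdin, print the ID of the named network.
net_id_by_name() {
    awk -F'|' -v name="$1" '
        { id = $2; n = $3; gsub(/ /, "", id); gsub(/ /, "", n) }
        n == name { print id }'
}

# Read `quantum floatingip-create` output on stdin, print the value of the "id" row.
floatingip_id() {
    awk -F'|' '
        { f = $2; v = $3; gsub(/ /, "", f); gsub(/ /, "", v) }
        f == "id" { print v }'
}

# Read `quantum port-list` output on stdin, print the ID of the port with the given fixed IP.
port_id_by_ip() {
    awk -F'|' -v ip="$1" '
        index($0, "\"ip_address\": \"" ip "\"") { id = $2; gsub(/ /, "", id); print id }'
}

# Tie the steps together. $1: name of the external network, $2: fixed IP of the VM.
assign_floating_ip() {
    ext_id=$(quantum net-list | net_id_by_name "$1")
    [ -n "$ext_id" ] || return 1
    fip_id=$(quantum floatingip-create "$ext_id" | floatingip_id)
    [ -n "$fip_id" ] || return 1
    port_id=$(quantum port-list | port_id_by_ip "$2")
    [ -n "$port_id" ] || return 1
    quantum floatingip-associate "$fip_id" "$port_id"
}
```

With these definitions loaded, a call such as assign_floating_ip ext_net 10.5.5.3 would run the same four quantum commands as Listing 1. The parsing is deliberately naive and would need adjusting if the client's output format differs.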

Joking aside, this process is virtually impossible for non-geeks to master, and cloud platforms precisely target non-geeks. In other words, a solution is needed. The dashboard that allows users to assign IPs easily is not yet ready for Quantum and stubbornly attempts to use Nova to handle IP assignments, even in a Quantum setup. The developers do not envisage adding Quantum compatibility until the "Grizzly" version of the dashboard is released. Still, don't despair, because with a bit of tinkering, you can easily retrofit the missing function.

The Nova component in the first Folsom maintenance release (2012.2.1) comes with a patch that forwards floating IP requests directly to Quantum, if Quantum is used. If you want floating IPs in Quantum, you should therefore first install this version (for Ubuntu 12.04, it is now available in the form of packages in the Ubuntu Cloud repository). That's half the battle – the other half relates to the patch.

The Touchy Topic of UUIDs

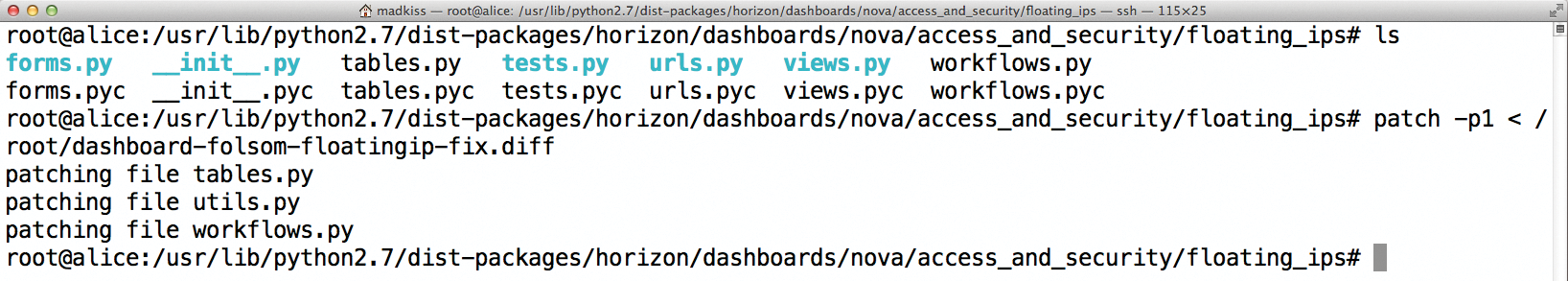

The dashboard passes floating IPs directly as addresses. Nova Network could work with this, but Quantum expects the UUID that corresponds to the IP instead. A patch backported from Grizzly [2] ensures that the dashboard can deal with IPs that are given in the form of UUIDs. The patch needs to be applied on the dashboard host in the folder /usr/lib/python2.7/dist-packages/horizon/dashboards/nova/access_and_security/floating_ips (Figure 1). You can apply it with:

patch -p1 < patch

Assigning floating IPs will then work. When the user clicks in the dashboard, the dashboard passes the command to Nova, which forwards it to Quantum. Quantum takes care of the rest, and the VM is assigned its floating IP (Figure 2).

Meaningful Storage Planning

A core idea of cloud computing environments is that they scale horizontally without interruption. When the platform grows, it must be possible to add hardware and thus increase the capacity of the installation. However, this approach collides with classic storage approaches because neither typical SAN storage nor substitute constructs using NBD or DRBD will scale to the same dimensions as Ceph.

What is really interesting is a pairing of a cloud platform such as OpenStack and an object storage solution. OpenStack itself offers one in the form of Swift, but Swift suffers from an inability to access the storage as a block device. This is where Ceph enters the game; Ceph allows block access. The two OpenStack components that have to do with storage – Glance and Cinder – provide a direct connection to Ceph, but how does the practical implementation work?

Glance and Ceph

In the case of Glance, the answer is: easy as pie. Glance, which is the component in OpenStack that stores operating system images for users, can talk natively to Ceph. If an object store is already in place, nothing is more natural than storing those images in it. For this to work, only a few preparations are necessary.

Given that a Ceph pool named images exists and the Ceph user client.glance has access to it, the rest of the configuration is easy. For more details about user authentication in Ceph, check out my CephX article published online [3].

Any host that needs to run Glance with a Ceph connection needs a working /etc/ceph/ceph.conf. Glance references this to retrieve the necessary information about the topology of the Ceph cluster. Additionally, the file needs to state where the keyring with the password belonging to the Glance user in Ceph can be found. A corresponding entry for ceph.conf looks like this:

[client.glance]

keyring = /etc/ceph/keyring.glance

The /etc/ceph/keyring.glance keyring must contain the user's key, which looks something like:

[client.glance]

key = AQA5XRZRUPvHABAABBkwuCgELlu...

Then, you just need to configure Glance itself by entering some new values in /etc/glance/glance-api.conf. The value for default_store= is rbd. If you use client.glance as the username for the Glance user in Ceph, and images as the pool, you can close the file now; these are the default settings.

If other names are used, you will need to modify rbd_store_user and rbd_store_pool accordingly lower down in the file. Finally, you can restart glance-api and glance-registry.
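Before restarting the services, it can help to check which store settings are actually active in the file. The following is a minimal sketch; glance_rbd_settings is a hypothetical helper name, and the option names are the ones I understand Glance to use for its RBD store (rbd_store_user and rbd_store_pool).

```shell
# Sketch only: glance_rbd_settings is a hypothetical helper name.
# Print the store-related settings that are active (not commented out)
# in a Glance API configuration file.
glance_rbd_settings() {
    grep -E '^[[:space:]]*(default_store|rbd_store_user|rbd_store_pool)[[:space:]]*=' "$1"
}

# Usage: glance_rbd_settings /etc/glance/glance-api.conf
```

If the output shows default_store set to rbd and no unexpected user or pool, the file should match the setup described here.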

Cinder

Cinder is the block storage solution in OpenStack; it supplies VMs, which are not themselves persistent, with non-volatile block storage. Cinder also has a native back end for Ceph. The configuration is a bit more extensive because Cinder handles storage allocation directly via libvirt. Libvirt itself is thus the client that logs in directly to Ceph.

If CephX authentication is used, the virtualization environment must therefore know how to introduce itself to Ceph. So far, so good. Libvirt 0.9.12 introduced a corresponding function.

The implementation for Cinder is somewhat more complicated. The following example assumes that the Ceph user in the context of Cinder is client.cinder and that a separate cinder pool exists. As in the Glance example, you need a keyring called /etc/ceph/keyring.cinder for the user, and this must be referenced accordingly in ceph.conf. Libvirt stores passwords under a UUID, which you can generate at the command line with uuidgen. The example uses 95aae3f5-b861-4a05-987e-7328f5c8851b. The next step is to create a matching secret file for libvirt – /etc/libvirt/secrets/ceph.xml in this example; its content is shown in Figure 3. Always replace the uuid field with the actual UUID, and if the local user is not client.cinder, but has a different name, also adjust the name accordingly. Now enable the password in libvirt:

virsh secret-define /etc/libvirt/secrets/ceph.xml
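The secret file has to exist before virsh secret-define is run. As a rough sketch of what it could contain, the following snippet writes a libvirt secret definition of usage type ceph. The UUID and the user name are the example values from the text; the scratch default for SECRET_FILE is an assumption made so that the snippet can be tried without root privileges.

```shell
# Sketch only: SECRET_FILE defaults to a scratch path; set it to
# /etc/libvirt/secrets/ceph.xml to follow the text.
SECRET_UUID="95aae3f5-b861-4a05-987e-7328f5c8851b"   # example UUID from the text
SECRET_FILE="${SECRET_FILE:-${TMPDIR:-/tmp}/ceph.xml}"

# Write the secret definition; the name should reflect the Ceph user.
cat > "$SECRET_FILE" <<EOF
<secret ephemeral='no' private='no'>
  <uuid>${SECRET_UUID}</uuid>
  <usage type='ceph'>
    <name>client.cinder secret</name>
  </usage>
</secret>
EOF
```

The file only declares that a secret with this UUID exists; the key itself is added in a separate step.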

Thus far, libvirt knows there is a password, but it is still missing the real key. The key is located in /etc/ceph/keyring.cinder; it is the string that follows key=. The following line tells libvirt that the key belongs to the password that was just set,

virsh secret-set-value <UUID> <key>

which here is:

virsh secret-set-value 95aae3f5-b861-4a05-987e-7328f5c8851b AQA5jhZRwGPhBBAAa3t78yY/0+1QB5Z/9iFK2Q==
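Copying the key by hand invites typos, so it can be read from the keyring instead. This is a minimal sketch; keyring_key is a hypothetical helper name, and it assumes the keyring uses the "key = ..." layout shown for the Glance keyring.

```shell
# Sketch only: keyring_key is a hypothetical helper name.
# Print the first key found in a Ceph keyring file (the string after "key =").
keyring_key() {
    sed -n 's/^[[:space:]]*key[[:space:]]*=[[:space:]]*//p' "$1" | head -n 1
}

# Usage (the UUID is the example value from the text):
# virsh secret-set-value 95aae3f5-b861-4a05-987e-7328f5c8851b "$(keyring_key /etc/ceph/keyring.cinder)"
```

The sed expression strips only the leading "key =" part, so the trailing equals signs that belong to the base64 key are preserved.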

This completes the libvirt part of the configuration; libvirt can now log in to Ceph as client.cinder and use the storage devices there. What's missing is the appropriate Cinder configuration to make sure the storage service actually uses Ceph.

The first step is to make sure Cinder knows which account to use to log in to Ceph so it can create storage devices for VM instances. To do this, modify /etc/init/cinder-volume.conf in your favorite editor so that the first line of the file reads

env CEPH_ARGS="--id cinder"

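(given a username of client.cinder). The second step is to add the following four lines to the /etc/cinder/cinder.conf file; rbd_pool must name the pool created for Cinder, and rbd_secret_uuid must match the UUID of the secret defined in libvirt (the values here follow the example above):

volume_driver=cinder.volume.driver.RBDDriver

rbd_pool=cinder

rbd_secret_uuid=95aae3f5-b861-4a05-987e-7328f5c8851b

rbd_user=cinder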

After restarting the cinder services – cinder-api, cinder-volume, and cinder-scheduler – the Cinder and Ceph team should work as desired.

High Availability

The best OpenStack installation is not worth a penny if the failure of a server takes it down. The setup I created in the previous article [1] still has two points of failure: the API node and the network node.

The good news is that Folsom is nothing like as clueless in terms of high availability (HA) as earlier OpenStack versions were. Efforts made by yours truly and others to push toward integrating OpenStack services with Pacemaker clusters now mean that the major part of the OpenStack installation can be designed for HA. Incidentally, this is another reason to rely on object storage solutions like Ceph: They come with HA built in, meaning that admins do not need to worry about redundancy.

The remaining problem concerns the reliability of the OpenStack infrastructure and of the VMs themselves. For the infrastructure component, Pacemaker is an obvious choice (Figure 4): It provides a comprehensive toolbox that lets admins configure services redundantly on multiple machines. Almost all OpenStack services can thus be upgraded to high availability, including Keystone, Glance, Nova, Quantum, and Cinder [4]. Anyone planning an OpenStack HA setup, however, should not forget two other components: the database, typically MySQL, and the message queue (i.e., RabbitMQ or Qpid).

HA for MySQL and RabbitMQ

Several HA options are available for MySQL. The classical method is for MySQL to store its data on shared storage, such as DRBD, with mysqld migrating between two computers. Depending on the size of the database journal, a failover in this kind of scenario can take some time; moreover, the solution does not scale horizontally. Natively integrated solutions such as MySQL Galera make more sense; they ensure that the database itself takes care of replication. A detailed article about Galera was published in a previous ADMIN issue [5].

The situation is similar with the RabbitMQ message queue: Again, you could easily implement a failover solution relying on shared storage, which in this case would be preferable to the HA solution that RabbitMQ offers out of the box. In the past, "mirrored queues" have repeatedly been found to be prone to error. If you are considering making RabbitMQ highly available in a Pacemaker setup, you would do better to choose a solution in which the rabbitmq-server migrates between the hosts and /var/lib/rabbitmq is located on shared storage. If you work with more than two nodes and Ceph, you can use CephFS to mount the RabbitMQ data on /var/lib/rabbitmq and resolve the problem of non-scaling storage at the same time.

The remaining overhead in an HA setup consists almost exclusively of integrating the existing OpenStack components with a classic Pacemaker setup. Explaining a Pacemaker configuration from scratch is beyond the scope of this article.

Worth particular notice is that resource agents in line with the OCF standard are now available for almost all OpenStack components. You can unpack them on a system into the /usr/lib/ocf/resource.d/openstack folder like this:

cd /usr/lib/ocf/resource.d

mkdir openstack

cd openstack

wget -O- https://github.com/madkiss/openstack-resource-agents/archive/master.tar.gz | tar -xzv --strip-components=2 openstack-resource-agents-master/ocf

chmod -R a+rx *

To reveal the help text for the nova-compute resource agent, then, you would type:

crm ra info ocf:openstack:nova-compute

The rest is plain sailing.

For each OpenStack service, you need to integrate a resource with the Pacemaker configuration so that services that belong together are grouped (Listing 2, lines 28-33). To make sure all the important services run on the same host, colocation and order constraints define how the resources relate to one another (lines 38-51). It is worth mentioning that the resource for the Quantum openvswitch plugin agent is a clone resource (line 34): This service should run on every OpenStack node on which virtual machines are to be started.

Listing 2: Pacemaker Configuration for OpenStack

01 node alice

02 node bob

03 node charlie

04 primitive p_IP ocf:heartbeat:IPaddr2 params cidr_netmask="24" ip="192.168.122.130" iflabel="vip" op monitor interval="120s" timeout="60s"

05 primitive p_cinder-api upstart:cinder-api op monitor interval="30s" timeout="30s"

06 primitive p_cinder-schedule upstart:cinder-scheduler op monitor interval="30s" timeout="30s"

07 primitive p_cinder-volume upstart:cinder-volume op monitor interval="30s" timeout="30s"

08 primitive p_glance-api ocf:openstack:glance-api params config="/etc/glance/glance-api.conf" os_password="hastexo" os_username="admin" os_tenant_name="admin" os_auth_url="http://192.168.122.130:5000/v2.0/" op monitor interval="30s" timeout="30s"

09 primitive p_glance-registry ocf:openstack:glance-registry params config="/etc/glance/glance-registry.conf" os_password="hastexo" os_username="admin" os_tenant_name="admin" keystone_get_token_url="http://192.168.122.130:5000/v2.0/tokens" op monitor interval="30s" timeout="20s"

10 primitive p_keystone ocf:openstack:keystone params config="/etc/keystone/keystone.conf" os_password="hastexo" os_username="admin" os_tenant_name="admin" os_auth_url="http://192.168.122.130:5000/v2.0/" op monitor interval="30s" timeout="30s"

11 primitive p_mysql ocf:heartbeat:mysql params binary="/usr/sbin/mysqld" additional_parameters="--bind-address=0.0.0.0" datadir="/var/lib/mysql" config="/etc/mysql/my.cnf" log="/var/log/mysql/mysqld.log" pid="/var/run/mysqld/mysqld.pid" socket="/var/run/mysqld/mysqld.sock" op monitor interval="120s" timeout="60s" op stop interval="0" timeout="240s" op start interval="0" timeout="240s"

12 primitive p_nova-api-ec2 upstart:nova-api-ec2 op monitor interval="30s" timeout="30s"

13 primitive p_nova-api-metadata upstart:nova-api-metadata op monitor interval="30s" timeout="30s"

14 primitive p_nova-api-os-compute upstart:nova-api-os-compute op monitor interval="30s" timeout="30s"

15 primitive p_nova-cert ocf:openstack:nova-cert op monitor interval="30s" timeout="30s"

16 primitive p_nova-compute-host1 ocf:openstack:nova-compute params additional_config="/etc/nova/nova-compute-host1.conf" op monitor interval="30s" timeout="30s"

17 primitive p_nova-compute-host2 ocf:openstack:nova-compute params additional_config="/etc/nova/nova-compute-host2.conf" op monitor interval="30s" timeout="30s"

18 primitive p_nova-compute-host3 ocf:openstack:nova-compute params additional_config="/etc/nova/nova-compute-host3.conf" op monitor interval="30s" timeout="30s"

19 primitive p_nova-consoleauth ocf:openstack:nova-consoleauth op monitor interval="30s" timeout="30s"

20 primitive p_nova-novnc upstart:nova-novncproxy op monitor interval="30s" timeout="30s"

21 primitive p_nova-objectstore upstart:nova-objectstore op monitor interval="30s" timeout="30s"

22 primitive p_nova-scheduler ocf:openstack:nova-scheduler op monitor interval="30s" timeout="30s"

23 primitive p_quantum-agent-dhcp ocf:openstack:quantum-agent-dhcp op monitor interval="30s" timeout="30s"

24 primitive p_quantum-agent-l3 ocf:openstack:quantum-agent-l3 op monitor interval="30s" timeout="30s"

25 primitive p_quantum-agent-plugin-openvswitch upstart:quantum-plugin-openvswitch-agent op monitor interval="30s" timeout="30s"

26 primitive p_quantum-server ocf:openstack:quantum-server params os_password="hastexo" os_username="admin" os_tenant_name="admin" keystone_get_token_url="http://192.168.122.130:5000/v2.0/tokens" op monitor interval="30s" timeout="30s"

27 primitive p_rabbitmq ocf:rabbitmq:rabbitmq-server params mnesia_base="/var/lib/rabbitmq" op monitor interval="20s" timeout="10s"

28 group g_basic_services p_mysql p_rabbitmq

29 group g_cinder p_cinder-volume p_cinder-schedule p_cinder-api

30 group g_glance p_glance-registry p_glance-api

31 group g_keystone p_keystone

32 group g_nova p_nova-api-ec2 p_nova-api-metadata p_nova-api-os-compute p_nova-consoleauth p_nova-novnc p_nova-objectstore p_nova-cert p_nova-scheduler

33 group g_quantum p_quantum-server p_quantum-agent-dhcp p_quantum-agent-l3

34 clone cl_quantum-agent-plugin-openvswitch p_quantum-agent-plugin-openvswitch

35 location lo_host1_prefer_alice p_nova-compute-host1 10000: alice

36 location lo_host2_prefer_bob p_nova-compute-host2 10000: bob

37 location lo_host3_prefer_charlie p_nova-compute-host3 10000: charlie

38 colocation co_g_basic_services_always_with_p_IP inf: g_basic_services p_IP

39 colocation co_g_cinder_always_with_g_keystone inf: g_cinder g_keystone

40 colocation co_g_glance_always_with_g_keystone inf: g_glance g_keystone

41 colocation co_g_keystone_always_with_p_IP inf: g_keystone p_IP

42 colocation co_g_nova_always_with_g_keystone inf: g_nova g_keystone

43 colocation co_g_quantum_always_with_g_keystone inf: g_quantum g_keystone

44 order o_cl_quantum-agent-plugin-openvswitch_after_g_keystone inf: g_keystone:start cl_quantum-agent-plugin-openvswitch:start

45 order o_g_basic_services_always_after_p_IP inf: p_IP:start g_basic_services:start

46 order o_g_cinder_always_after_g_keystone inf: g_keystone:start g_cinder:start

47 order o_g_glance_always_after_g_quantum inf: g_quantum:start g_glance:start

48 order o_g_keystone_always_after_g_basic_services inf: g_basic_services:start g_keystone:start

49 order o_g_keystone_always_after_p_IP inf: p_IP:start g_keystone:start

50 order o_g_nova_always_after_g_glance inf: g_glance:start g_nova:start

51 order o_g_quantum_always_after_g_keystone inf: g_keystone:start g_quantum:start

VM Failover with OpenStack

The three p_nova-compute instances (Listing 2, lines 16-18) need a special mention; they allow you to restart virtual machines on other hosts if the original host on which the VMs were running is no longer available. Previously this was a sensitive issue in OpenStack because the environment itself – at least in Folsom – did not initially notice the failure of a node. But the back door used in the example in this article lets you retrofit a similar function. Each instance of nova-compute allows you to specify additional configuration files. Their values overwrite existing values, and the last one wins. A host= entry tells a compute instance what its name is; if this value is not set, Nova usually assumes the hostname of the system. The trick is to remove the mapping between the hostname and the VMs running on it. Whether the Nova compute instance named host1 runs on node 1, node 2, or node 3 is initially irrelevant, and VMs that are running on host1 can be started on any server, as long as it has a Nova compute instance that thinks it is host1.
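The additional configuration files referenced in Listing 2 only need to override the host name. The following is a minimal sketch of how they could be generated; the [DEFAULT] section header is an assumption based on the usual nova.conf layout, and the scratch default for CONF_DIR is there so the snippet can be tried without touching /etc/nova.

```shell
# Sketch only: CONF_DIR defaults to a scratch directory; Listing 2
# expects the files in /etc/nova.
CONF_DIR="${CONF_DIR:-${TMPDIR:-/tmp}/nova-compute-conf}"
mkdir -p "$CONF_DIR"

for name in host1 host2 host3; do
    # Each file only overrides the host name; everything else comes from nova.conf.
    printf '[DEFAULT]\nhost=%s\n' "$name" > "$CONF_DIR/nova-compute-$name.conf"
done
```

Because the last value read wins, the host= entry in the additional file replaces whatever name Nova would otherwise derive from the system's hostname.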

The nova.conf used in the previous article [1] also includes the resume_guests_state_on_host_boot = true option; this means that VMs on a host are set to the state in which Nova last saw them after launching nova-compute. In plain talk: If server 1 is running a nova-compute with host=host1 set, Nova will remember which VMs run on this host1. If server 1 crashes, in this example, Pacemaker restarts the nova-compute host1 instance on another server, and Nova then boots the VMs that previously lived on server 1 on the other server.

For this approach to work, all nova-compute instances need access to the same Nova instances directory. The example here solves the problem by mounting /var/lib/nova/instances on a CephFS on all servers, thus providing the same files to all hosts (Figure 5).
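As a rough illustration of such a mount, the following sketch assembles a mount command for the CephFS kernel client and prints it for review instead of running it. The helper name cephfs_mount_cmd is hypothetical, and the monitor address, Ceph user, and secret file are placeholders that are not taken from this setup.

```shell
# Sketch only: cephfs_mount_cmd is a hypothetical helper; the monitor
# address, Ceph user, and secret file below are placeholders.
# Print (not run) a kernel-client mount command for review.
# $1: monitor host:port, $2: mount point, $3: Ceph user, $4: secret file
cephfs_mount_cmd() {
    printf 'mount -t ceph %s:/ %s -o name=%s,secretfile=%s\n' "$1" "$2" "$3" "$4"
}

cephfs_mount_cmd 192.168.122.111:6789 /var/lib/nova/instances admin /etc/ceph/admin.secret
```

The same command would have to be run, or turned into an /etc/fstab entry, on every host that can run a nova-compute instance.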

Figure 5: The /var/lib/nova/instances directory is a CephFS mount on all hosts; all the servers thus see the same VM data.

A universal caveat applies to OpenStack Pacemaker setups like any other Pacemaker installation: Keep the versions of the programs identical between the computers. Any config files required by services must also be in sync between the hosts. If these conditions are met, a high-availability OpenStack setup is no problem at all.

Looking Forward

The one very noticeable issue with the suggested VM HA mechanism is that it only allows a collective move of all VMs from the failed host to another host. It would make more sense for OpenStack to take care of this itself and use its own scheduler to distribute the VMs to multiple hosts with free resources. Folsom is unlikely ever to have this function, but Grizzly already implements a corresponding feature: The evacuate function will close precisely this gap. It is still too early to investigate its suitability for everyday use, but if you intend to take a closer look at OpenStack in the future, you have plenty to look forward to.