Review: Accelerator card by OCZ for ESX server

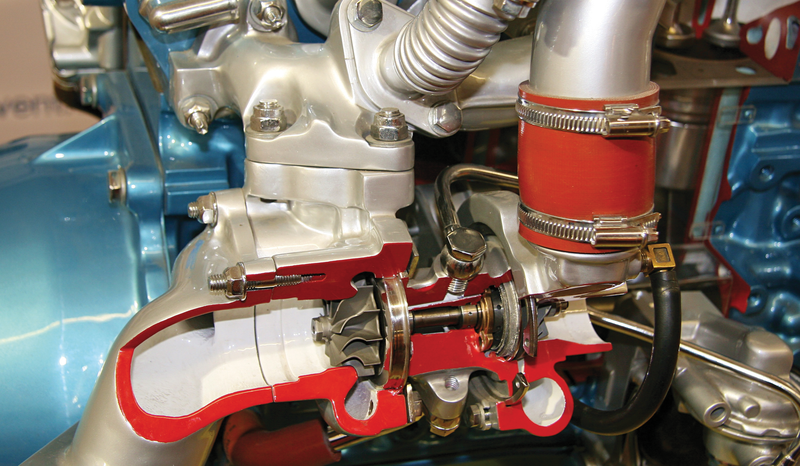

Turbocharger for VMs

Manufacturer OCZ advertises VXL, its storage acceleration software, with attention-grabbing claims: It works with applications on any operating system without requiring special agents, reduces traffic to and from the SAN by up to 90 percent, and allows up to 10 times as many virtual machines per ESX host as without a cache. The ADMIN test team decided to take a closer look.

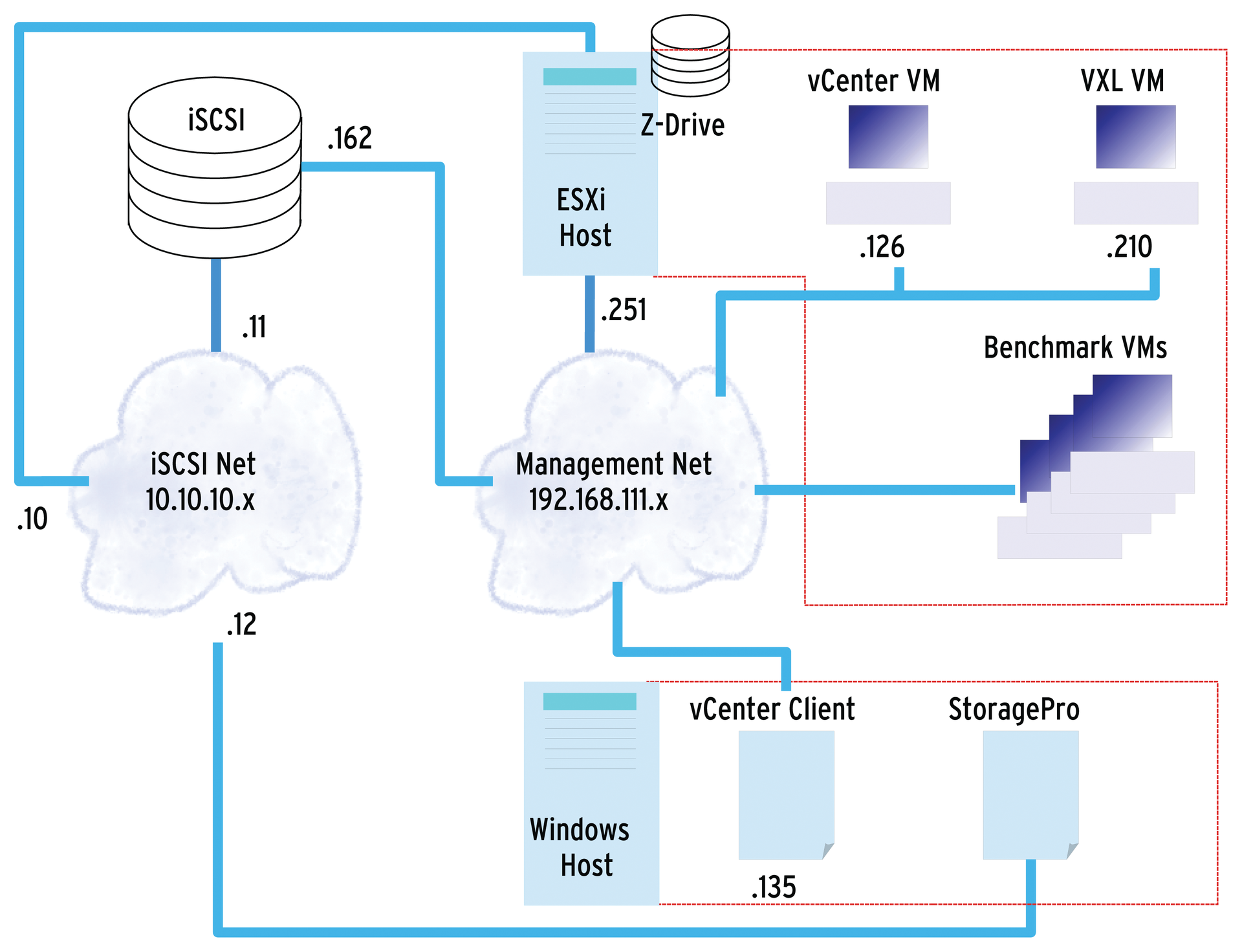

The product consists of an OCZ Z-Drive R4 solid-state storage card, which goes into a PCI Express slot in the virtualization host, and the associated software. The software takes the form of a virtual machine that runs on the ESX server you want to accelerate; this component handles the actual caching.

In addition, a Windows application handles the cache control settings. The virtual machine runs Linux and starts a software tool called VXL. The Windows application (which, incidentally, can also run in a virtual machine) and the VXL virtual machine need access to a separate iSCSI network that connects the virtualization host to the storage to be accelerated (Figure 1). The administrator communicates with the ESX server, its VMs, and the VXL components over a second, management network.

Knowing How

I'll start by saying that the software configuration is anything but trivial. The somewhat complex architecture offers many tweaks for calibrating the caching mechanism. With this complexity, it is very easy to end up with a solution that works but is not optimal and sacrifices a large chunk of the possible acceleration. Letting the vendor help you, at least during the initial setup, is certainly not a bad idea, especially considering that the "VXL and StoragePro Installation and Configuration Guide" contains no explanation of how the product works and no hints about the meaning, purpose, or whys and wherefores of the configuration steps. It does not explain how the components relate to one another, provides neither a roadmap nor a target state, and does not even document all the options. The entire guide consists of nothing more than a series of more or less sparsely annotated screenshots. In other words, if you run into a problem along the way, you have nothing to orient yourself by: You do not know what you are trying to achieve, how the components should cooperate, or which properties they should have.

Unsuspecting admins are likely to fall into this trap, if only because the descriptions are ambiguous, at least in places, and because some of the software components behave in unusual and unexpected ways. For example, when you set up the required VXL virtual machine, a configuration script does not apply the default values it suggests when you press Enter, as you would assume. At the same time, it silently filters the user input without giving you any feedback. Unless you hit upon the really wacky idea of actually typing in the values you see on screen, you will probably think that the script is hanging; after all, it does not respond to keystrokes. All of this reduces the usefulness of the documentation and the user-friendliness of the software, at least in the setup phase.

Just Rewards

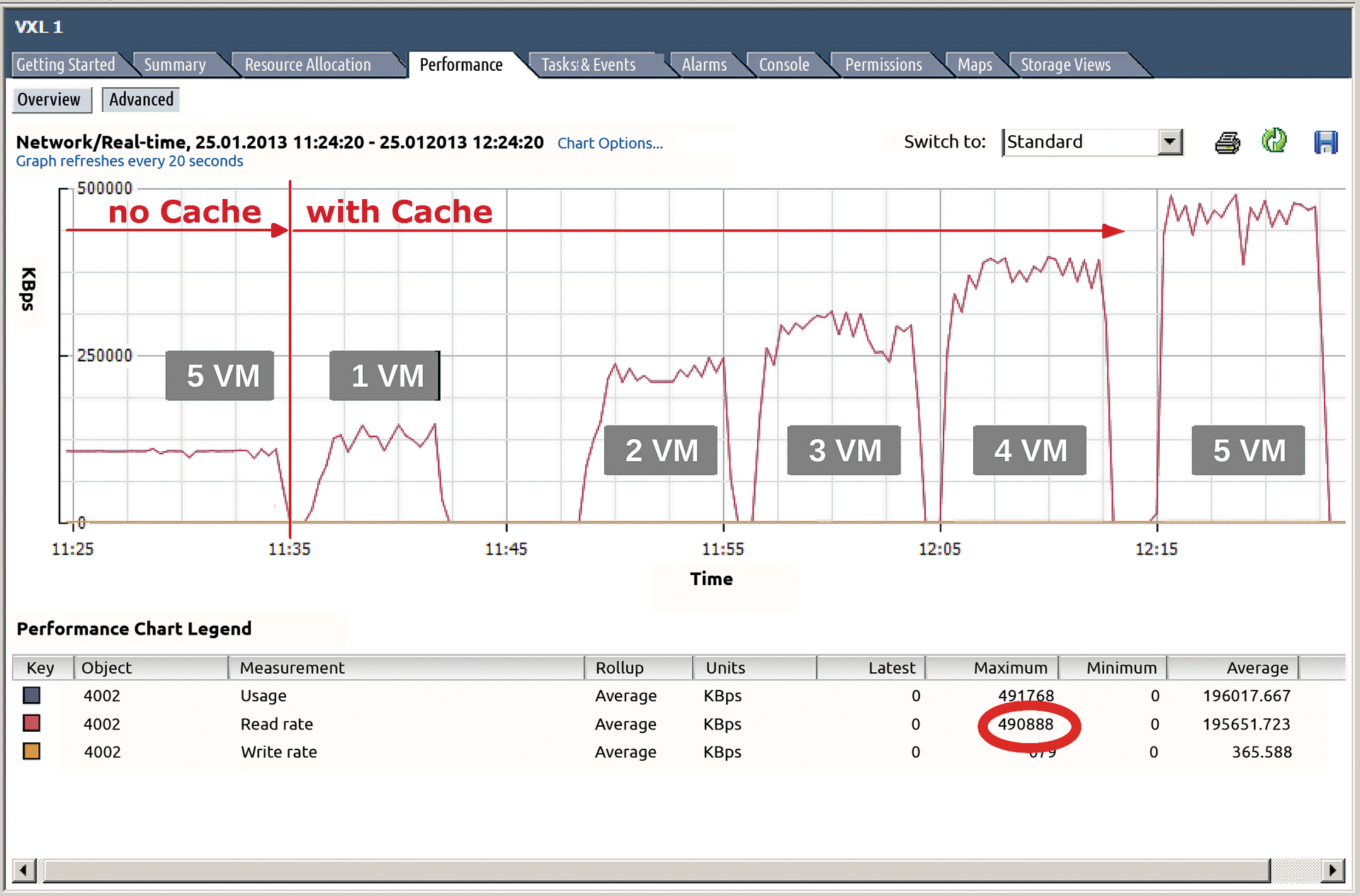

Once the software is properly configured and tuned, which takes considerable effort, and you put it to work on a suitable workload, the achievable data rates are unquestionably impressive. Thanks to the cache, at least five clients can each read around 100MBps from the iSCSI targets, for an aggregate read rate of 500MBps; without the accelerator card, no more than 100MBps crosses the iSCSI network in any situation (Figure 2).

At 500MBps, the going would start to get tough even for SATA 3.0 (older versions running at 150 and 300MBps would have given up long ago), but iSCSI, especially once you move from Gigabit Ethernet (GbE) to 10GbE, still has room to grow.
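To put these numbers side by side, the following Python sketch converts nominal line rates into approximate payload bandwidth and compares each with the 500MBps aggregate. It assumes 8b/10b line coding for SATA (10 line bits per data byte) and simply divides the Ethernet bit rate by 8, ignoring Ethernet, TCP/IP, and iSCSI overhead, so real iSCSI throughput will be somewhat lower.

```python
# Approximate payload bandwidth in decimal MB/s for the interfaces mentioned above.
# Assumptions: SATA uses 8b/10b line coding (10 line bits per data byte);
# Ethernet figures ignore Ethernet, TCP/IP, and iSCSI protocol overhead.
SATA_GBITS = {"SATA 1.0": 1.5, "SATA 2.0": 3.0, "SATA 3.0": 6.0}
ETHERNET_GBITS = {"GbE": 1.0, "10GbE": 10.0}
AGGREGATE_MBPS = 5 * 100  # five clients reading about 100MBps each


def sata_mbps(gbit_per_s: float) -> float:
    """Usable SATA bandwidth: 8b/10b coding spends 10 line bits per byte."""
    return gbit_per_s * 1000 / 10


def ethernet_mbps(gbit_per_s: float) -> float:
    """Raw Ethernet bandwidth: 8 bits per byte, no protocol overhead."""
    return gbit_per_s * 1000 / 8


def headroom(link_mbps: float, demand_mbps: float) -> float:
    """Capacity divided by demand; below 1.0 the link is the bottleneck."""
    return link_mbps / demand_mbps


if __name__ == "__main__":
    for name, gbits in SATA_GBITS.items():
        cap = sata_mbps(gbits)
        print(f"{name:9} {cap:7.0f} MB/s  headroom {headroom(cap, AGGREGATE_MBPS):.2f}")
    for name, gbits in ETHERNET_GBITS.items():
        cap = ethernet_mbps(gbits)
        print(f"{name:9} {cap:7.0f} MB/s  headroom {headroom(cap, AGGREGATE_MBPS):.2f}")
```

Under these assumptions, SATA 3.0 leaves only about 20 percent headroom over 500MBps, a single GbE link tops out at 125MBps, which fits the roughly 100MBps ceiling measured without the cache, and 10GbE offers about 2.5 times the aggregate.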

For an initial overview, I employed the well-known file copy utility dd,

dd if=./testfile1 of=/dev/null bs=64k iflag=direct

which sets a block size of 64KB and bypasses the filesystem buffer cache where possible (direct I/O). Next, I launched this command simultaneously on one, two, three, four, and five virtual machines, once with the VXL cache and once without. For each configuration, dd ran 10 times. From the results, I discarded the smallest and the largest value and computed the arithmetic mean of the remaining eight measurements.
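The evaluation step can be sketched in Python. This is an illustration, not the script actually used for the test: it assumes GNU coreutils dd, whose final status line on stderr reports the bytes copied and the elapsed seconds, computes each run's rate from those two numbers, and then applies the same trimming rule (drop the smallest and largest of ten values, average the remaining eight). The function names are hypothetical.

```python
import re
from statistics import mean

# GNU coreutils dd ends its stderr report with a line such as
# "2147483648 bytes (2.1 GB, 2.0 GiB) copied, 21.5 s, 99.9 MB/s".
# Assumption: GNU dd output format; other dd implementations differ.
DD_SUMMARY = re.compile(r"^(\d+) bytes .*copied, ([\d.]+) s")


def dd_rate_mbps(stderr_text: str) -> float:
    """Return one run's throughput in decimal MB/s from dd's stderr output."""
    for line in stderr_text.splitlines():
        match = DD_SUMMARY.match(line.strip())
        if match:
            nbytes = int(match.group(1))
            seconds = float(match.group(2))
            return nbytes / seconds / 1e6
    raise ValueError("no dd summary line found")


def trimmed_mean(rates: list[float]) -> float:
    """Discard the smallest and the largest value, then average the rest."""
    if len(rates) < 3:
        raise ValueError("need at least three runs to trim both ends")
    ordered = sorted(rates)
    return mean(ordered[1:-1])
```

For the procedure in the text, you would collect ten `dd_rate_mbps()` values per configuration (for example, by running dd through `subprocess.run` with `capture_output=True` and `text=True` and reading `result.stderr`) and pass them to `trimmed_mean()`, which averages the middle eight.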

At Speed

From the outset, with one VM running, the read rate of the unaccelerated volumes (46.6MBps) was less than half that of the accelerated ones (97.66MBps). As the number of VMs competing for I/O increased, the performance of the unaccelerated version dropped off much faster, and with five VMs working in parallel, it was less than a third of the rate achieved with the cache. The results are far clearer in a benchmark with IOzone:

iozone -i1 -I -c -e -w -x -s2g -r128k -l1 -u1 -t1 -F testfile

Here, too, I instructed IOzone to read a 2GB test file, running only the read test and using direct I/O. I set the record size to 128KB, with IOzone running in throughput mode using exactly one process. The mean values were computed as described above. Figure 3 shows the corresponding results.
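For reference, the IOzone options used above can be annotated as follows. The descriptions paraphrase IOzone's built-in help (`iozone -h`); the flag list and the hypothetical `iozone_argv()` helper are illustrative additions that simply rebuild the same command line as an argument vector suitable for Python's `subprocess.run()`.

```python
import shlex

# Flags from the IOzone invocation above, paired with their meaning
# as described in IOzone's built-in help (iozone -h).
IOZONE_FLAGS = [
    ("-i1", "run test 1 only: read/re-read"),
    ("-I", "use direct I/O (O_DIRECT) for all file operations"),
    ("-c", "include close() in the timing"),
    ("-e", "include flush (fsync/fflush) in the timing"),
    ("-w", "do not unlink the temporary test file afterwards"),
    ("-x", "turn off stonewalling"),
    ("-s2g", "file size: 2GB"),
    ("-r128k", "record size: 128KB"),
    ("-l1", "lower limit on the number of processes: 1"),
    ("-u1", "upper limit on the number of processes: 1"),
    ("-t1", "throughput mode with 1 process"),
]


def iozone_argv(test_file: str = "testfile") -> list[str]:
    """Build the argument vector for subprocess.run(), no shell required."""
    return ["iozone", *(flag for flag, _ in IOZONE_FLAGS), "-F", test_file]


if __name__ == "__main__":
    for flag, meaning in IOZONE_FLAGS:
        print(f"{flag:8} {meaning}")
    print(shlex.join(iozone_argv()))
```

Passing a list instead of a single string avoids shell quoting issues if the test file path contains spaces.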

Writing Is Poison

Thus far, I have only considered read benchmarks, and for good reason: Although VXL also caches writes, to ensure consistency it can only acknowledge them to the host once they have been completed on the physical media. This means that the cache offers virtually no speed benefit for writes; instead, the written data occupies cache space that parallel read operations can no longer use.

As a result, even a workload with 50 percent writes more or less wipes out the performance benefits of the cache-accelerated volume. Fortunately, the writes do not drag performance below the uncached level, but the speed gains are lost. For more information, see the "How We Tested" box.
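Why writes crowd out the read benefit can be illustrated with a toy model. The following Python sketch is a hypothetical write-through LRU block cache, not VXL's actual design: each write must land on the backing store (standing in for the SAN) before it returns, so it gains no speed, and the written block is then inserted into the cache, where it can evict blocks that reads would otherwise hit. The cyclic read pattern in `hit_rate()` is deliberately simple and exaggerates the effect.

```python
from collections import OrderedDict


class WriteThroughLRUCache:
    """Toy write-through block cache with LRU eviction (hypothetical model).

    Writes go to the backing store first and are only then cached, so they
    gain no speed but still take up cache slots that reads could have used.
    """

    def __init__(self, capacity: int, backing: dict[int, bytes]) -> None:
        self.capacity = capacity
        self.backing = backing          # stands in for the SAN volume
        self.blocks = OrderedDict()     # block number -> data, LRU entry first
        self.hits = 0
        self.misses = 0

    def _insert(self, block: int, data: bytes) -> None:
        self.blocks[block] = data
        self.blocks.move_to_end(block)  # mark as most recently used
        while len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)  # evict least recently used

    def read(self, block: int) -> bytes:
        if block in self.blocks:
            self.hits += 1
            self.blocks.move_to_end(block)
            return self.blocks[block]
        self.misses += 1
        data = self.backing[block]      # slow path: fetch from backing store
        self._insert(block, data)
        return data

    def write(self, block: int, data: bytes) -> None:
        self.backing[block] = data      # must be committed to the media first
        self._insert(block, data)       # then occupies a cache slot


def hit_rate(write_every: int, ops: int = 1000, hot_blocks: int = 8) -> float:
    """Cycle reads over a hot set that exactly fits the cache.

    If write_every > 0, every write_every-th operation writes a new block.
    """
    backing = {b: b"r" for b in range(hot_blocks)}
    cache = WriteThroughLRUCache(capacity=hot_blocks, backing=backing)
    reads = 0
    next_new_block = 10_000
    for i in range(ops):
        if write_every > 0 and i % write_every == write_every - 1:
            cache.write(next_new_block, b"w")
            next_new_block += 1
        else:
            cache.read(reads % hot_blocks)
            reads += 1
    return cache.hits / (cache.hits + cache.misses)


if __name__ == "__main__":
    print(f"no writes:         {hit_rate(0):.3f}")
    print(f"50 percent writes: {hit_rate(2):.3f}")
```

With no writes, `hit_rate(0)` returns 0.992 (only the first eight cold reads miss); with every second operation a write, `hit_rate(2)` drops to 0.0, because the written blocks push each hot block out before it is read again. In the model, every read still succeeds, only from the backing store, which loosely mirrors the observation that writes erase the gains rather than making the volume slower than uncached.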

Of course, there are many read-heavy workloads, especially in today's buzzword disciplines such as big data. Even in everyday situations, such as many VMs booting simultaneously, reads outweigh writes. This is where the VXL cache finds its niche: It can deliver significant performance gains and better utilization of the ESX server, whose storage can then cope with a much larger number of parallel VMs.

The VXL cache is also useful in environments where write operations can be concentrated on specific volumes. Newer versions of the software let admins partition the SSD so that only part of it is used as a cache, while the rest acts as a normal SSD. Write-intensive operations can then be routed to the SSD partition, while the cache on the same card mainly serves reads of parts of a database (e.g., some indices). With this approach, the vendor's benchmarks with long-running data warehouse queries on MS SQL Server 2012 show speed gains of up to 1,700 percent [1] thanks to the VXL software.

Conclusions

When read and write operations are fairly balanced, cannot be separated, and use the same medium, this caching solution does not make sense. However, when reads outweigh writes, or can at least be separated from them, and many VMs or processes are involved, the OCZ solution accelerates data transfer by a factor of five or more, reduces traffic to and from the SAN, and allows VMware's ESX Server to support more virtual machines in parallel, provided the host otherwise has sufficient resources. The cache can also serve multiple hosts or be mirrored to a second Z-Drive, and it supports migration of VMs between virtualization hosts.

Of course, these benefits come at a price. For the tested version, a VXL license cost EUR 2,560, including one year's support but no additional features (e.g., cache mirroring), plus EUR 4.5 per GB of flash on the cache card, which is available in sizes from 1.2 to 3.2TB.
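As a quick worked example of these prices, the following Python sketch totals the license and flash costs for the smallest and largest card. It assumes a single VXL license, decimal capacities (1.2TB = 1,200GB), and no charges beyond the two items quoted; the function names are illustrative.

```python
# Worked cost example based on the prices quoted for the tested version.
# Assumptions: one VXL license, decimal capacities (1.2TB = 1,200GB),
# and no further charges beyond license and flash.
LICENSE_EUR = 2560          # VXL license incl. one year's support
FLASH_EUR_PER_GB = 4.5      # price per GB of flash on the cache card


def total_cost_eur(card_gb: int) -> float:
    """License plus flash cost for a cache card of card_gb gigabytes."""
    return LICENSE_EUR + FLASH_EUR_PER_GB * card_gb


def cost_per_gb_eur(card_gb: int) -> float:
    """Effective price per GB once the fixed license fee is spread over the card."""
    return total_cost_eur(card_gb) / card_gb


for card_gb in (1200, 3200):  # smallest and largest card sizes mentioned
    print(f"{card_gb}GB card: EUR {total_cost_eur(card_gb):,.0f} total, "
          f"EUR {cost_per_gb_eur(card_gb):.2f}/GB effective")
```

Under these assumptions, the smallest configuration comes to EUR 7,960 and the largest to EUR 16,960, so the fixed license fee weighs more heavily on the smaller card (about EUR 6.63 versus EUR 5.30 per GB).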