All you ever wanted to know about hard drives but never dared ask

Heavy Rotation

Introduced by IBM in the 1950s, hard disk drives (HDDs) rapidly became the primary permanent storage technology of the computer industry and have retained this primacy for the past 50 years. Despite the mounting challenge posed by solid-state drives (SSDs), the world's hunger for storage space continues to grow unabated, and the relative cost and performance of HDDs and SSDs are not expected to change in the medium term. Understanding this storage technology therefore remains a key instrument of performance tuning.

The primary observation from which to start is that modern CPUs operate at speeds that make a spinning disk drive resemble a turtle racing a rocket: Whereas the CPU can access data in the L1 cache at effectively zero latency, RAM can be as much as 20 times slower than the L2 cache, which is itself five times as slow as its L1 counterpart [1]; this gap is the von Neumann bottleneck.
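Chaining the two ratios quoted above gives a rough sense of scale. The short Python sketch below normalizes everything to the L1 cache; the constants are simply the article's approximate figures, not measurements of any particular CPU.

```python
# Relative access latencies normalized to the L1 cache (L1 = 1),
# using only the approximate ratios quoted in the text.
L2_VS_L1 = 5     # L2 is roughly five times slower than L1
RAM_VS_L2 = 20   # RAM can be up to 20 times slower than L2


def relative_latencies() -> dict[str, int]:
    """Return each memory level's latency as a multiple of L1 latency."""
    l1 = 1
    l2 = l1 * L2_VS_L1
    ram = l2 * RAM_VS_L2
    return {"L1": l1, "L2": l2, "RAM": ram}


for level, factor in relative_latencies().items():
    print(f"{level:>3}: {factor:>4}x L1")
```

By these figures, a trip to RAM already costs about 100 L1 accesses, before any disk is involved.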

Spinning disk media come in at 13,700 times the latency of the L1 cache. Although hard disks remain impressive achievements of mechanical engineering, their moving parts simply cannot keep up with electrons, and the best performance decision is the one that avoids critical-path access to permanent storage altogether [2]. An unprintable infographic [3] makes this point vividly (be sure to zoom in at the very top of the picture): If permanent storage access is not architected properly, optimization becomes simply a race to wait on I/O sooner.

Disks store data in concentric tracks recorded using a zoned constant angular velocity scheme known as ZCAV [4]. Unlike optical media, which use constant linear velocity and must change rotational speed depending on where the head is reading, hard disks spin at a constant rate no matter which part of the platter they access.

This approach has interesting consequences: Because outer tracks are longer, this storage scheme divides them into more sectors, so comparatively more data is stored on the outer tracks of a drive. At a constant rotational speed, more sectors pass under the head on each revolution, yielding higher data transfer rates and making the initial partitions of a drive faster than those allocated closer to the hub of the disk (Figure 1).

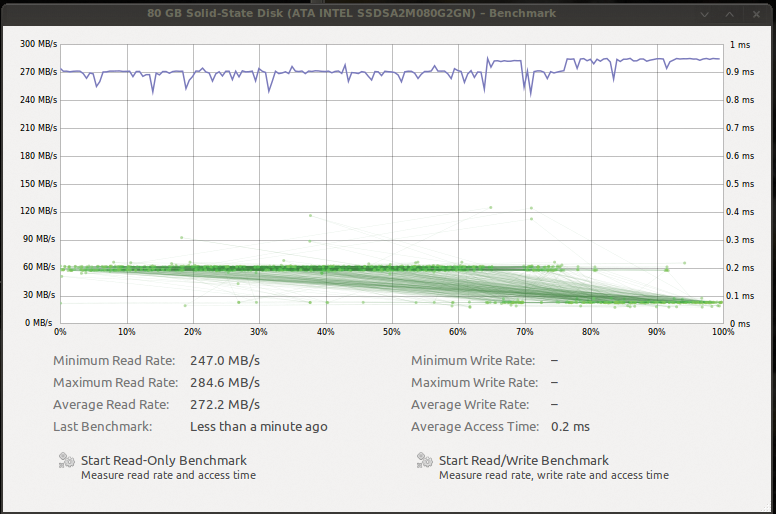

Because the outer tracks hold more sectors, data transfer rates drop considerably as the benchmark scans the drive from the outer edge inward. Figure 2 shows the same system benchmarking an SSD, which is not affected by physical geometry considerations.
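Why outer tracks are faster follows from a simple model: At a fixed spindle speed, sustained throughput is the number of bytes on a track multiplied by the revolutions per second. The sketch below assumes 512-byte sectors and uses made-up sectors-per-track counts for three hypothetical zones; real drives have many more zones with their own layouts.

```python
# Simplified ZCAV model: throughput = bytes per track x revolutions per second.
SECTOR_BYTES = 512  # assumption: 512-byte sectors


def track_throughput_mb_s(sectors_per_track: int, rpm: int) -> float:
    """Sustained transfer rate (MB/s, 10^6 bytes) while reading one track at a fixed RPM."""
    revolutions_per_second = rpm / 60
    return sectors_per_track * SECTOR_BYTES * revolutions_per_second / 1e6


# Hypothetical zone layout, outermost zone first (illustrative numbers only).
zones = {"outer": 2000, "middle": 1500, "inner": 1000}

for name, spt in zones.items():
    print(f"{name:>6}: {track_throughput_mb_s(spt, 7200):6.1f} MB/s")
```

Halving the sectors per track halves the transfer rate, which is the downward slope a sequential benchmark traces as it moves toward the hub.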

Common consumer 7,200rpm disk drives can achieve sustained data transfer rates of around 1Gbps and operate comfortably within the established SATA 3Gbps bus limit, but the performance of the latest SSDs requires the faster SATA 6Gbps interface: Check your top bus speed before needlessly retrofitting an older system with a top-notch SSD. External drive performance is similarly capped by bus speed (see a dramatic example in Figure 3).

The data center practice of using only the outermost tracks of a drive to increase performance is called "short stroking" [5]. Short stroking couples the higher throughput of a drive's outer tracks with a restricted seek range for the disk heads, at the price of using only a portion of the drive's full capacity. The benefits show up mostly in sustained read performance; other benchmarks see limited effect.
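A rough way to see why a restricted seek range helps: If requests land uniformly at random within the outermost fraction f of the head's stroke, the mean distance between two consecutive requests is f/3 of a full stroke. The Monte Carlo sketch below checks that figure. It assumes uniform random access and treats seek cost as proportional to distance, which overstates the gain, because real seek time grows less than linearly with distance and includes a fixed settling component.

```python
import random


def mean_seek_distance(stroke_fraction: float, samples: int = 100_000, seed: int = 1) -> float:
    """Monte Carlo estimate of mean head travel between two random requests
    when only the outermost `stroke_fraction` of the stroke is used (full stroke = 1.0)."""
    rng = random.Random(seed)  # fixed seed for repeatable estimates
    total = 0.0
    for _ in range(samples):
        a = rng.uniform(0, stroke_fraction)
        b = rng.uniform(0, stroke_fraction)
        total += abs(a - b)
    return total / samples


for f in (1.0, 0.5, 0.2):
    print(f"using {f:.0%} of the stroke: mean travel ~ {mean_seek_distance(f):.3f} "
          f"(analytic {f / 3:.3f})")
```

Using a fifth of the stroke cuts average head travel by a factor of five, but also limits the drive to the capacity held in that outer band.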

More expensive enterprise hard drives spin as fast as 15,000rpm, halving the roughly 4ms average rotational latency of a 7,200rpm unit to 2ms. By comparison, my laptop's older SSD delivers a 0.2ms average access time. The more significant differences of enterprise disks, however, lie in their firmware behavior: Whereas an enterprise disk will give up quickly and report an error when a sector has become unreliable, thereby avoiding protracted delays and timeouts, a consumer drive will make repeated, strenuous efforts to read a bad sector before remapping it [6].
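The rotational latency figures follow directly from spindle speed: On average, the target sector is half a revolution away from the head. A sketch of that arithmetic, which ignores seek time entirely:

```python
def avg_rotational_latency_ms(rpm: int) -> float:
    """Average rotational latency in ms: the target sector is, on average, half a revolution away."""
    ms_per_revolution = 60_000 / rpm
    return ms_per_revolution / 2


for rpm in (5_400, 7_200, 10_000, 15_000):
    print(f"{rpm:>6} rpm: {avg_rotational_latency_ms(rpm):.2f} ms")
```

At 7,200rpm this gives about 4.17ms and at 15,000rpm exactly 2ms, matching the figures quoted for consumer and enterprise drives.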

Both behaviors have clear advantages in different situations, but be aware of the difference if you plan to use consumer drives in a server environment: As a rule, avoid consumer drives whose error-recovery timeouts exceed 30 seconds in this application, because RAID does not look kindly on unresponsive drives.
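On Linux, the kernel's SCSI layer exposes a per-device command timeout, 30 seconds by default, in /sys/block/<dev>/device/timeout. If a consumer drive's internal retries outlast it, the kernel's error handling can reset the device, and a RAID layer may drop it from the array. The sketch below merely lists those timeouts; it assumes a Linux host, and it cannot tell how long a given drive's firmware will actually keep retrying.

```python
from pathlib import Path

KERNEL_DEFAULT_TIMEOUT_S = 30  # Linux SCSI disk command timeout default


def scsi_command_timeouts(sys_block: Path = Path("/sys/block")) -> dict[str, int]:
    """Map each block device name to its kernel command timeout in seconds.

    Assumes the Linux sysfs layout /sys/block/<dev>/device/timeout; devices
    without that attribute (such as loop devices) are skipped."""
    timeouts: dict[str, int] = {}
    if not sys_block.is_dir():  # not Linux, or sysfs not mounted
        return timeouts
    for dev in sorted(sys_block.iterdir()):
        attr = dev / "device" / "timeout"
        if attr.is_file():
            timeouts[dev.name] = int(attr.read_text().strip())
    return timeouts


if __name__ == "__main__":
    for name, seconds in scsi_command_timeouts().items():
        tag = "default" if seconds == KERNEL_DEFAULT_TIMEOUT_S else "non-default"
        print(f"{name}: {seconds}s ({tag})")
```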

An important trade-off found in modern controllers is issuing write-completion messages when data reaches the drive's cache rather than the platters themselves. As a result, fsync(2) can return successfully while data is still in flight, and that data can be lost in a power outage.
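From an application's point of view, requesting durability on a POSIX system means calling fsync(2) on the file and, for newly created files, on the directory that holds it. The Python sketch below (the helper name durable_write is hypothetical, and the directory fsync assumes Linux or a similar POSIX system) does both through os.fsync. None of this helps if the drive acknowledges writes from a volatile cache and then loses power.

```python
import os


def durable_write(path: str, data: bytes) -> None:
    """Write `data` to `path`, then ask the OS to push it to stable storage.

    os.fsync() is only as good as the drive's honesty about its write cache."""
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o644)
    try:
        view = memoryview(data)
        while view:  # os.write may write fewer bytes than requested
            written = os.write(fd, view)
            view = view[written:]
        os.fsync(fd)  # flush file data and metadata
    finally:
        os.close(fd)
    # Sync the containing directory so the new directory entry is durable too.
    dir_fd = os.open(os.path.dirname(os.path.abspath(path)), os.O_RDONLY)
    try:
        os.fsync(dir_fd)
    finally:
        os.close(dir_fd)
```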

Systems that are not battery-backed or otherwise protected can operate reliably by disabling the drive's write cache, but you should expect a 50 percent performance penalty in the bargain [7]. Running drives with 4,096-byte physical sectors in 512-byte emulation mode has a similar effect: Any write that does not cover a whole, aligned physical sector forces the drive to read that sector first (a read-modify-write cycle), degrading write performance by at least 50 percent.
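Whether a write triggers a read-modify-write cycle on a 512-byte-emulation drive depends only on alignment: The write must start and end on 4,096-byte boundaries. The sketch below (a hypothetical helper taking byte offsets) applies that test, including the classic comparison of a partition starting at legacy sector 63 with one starting at sector 2048.

```python
LOGICAL_SECTOR = 512     # sector size a 512e drive reports to the OS
PHYSICAL_SECTOR = 4_096  # sector size the drive actually writes to the platter


def needs_read_modify_write(offset: int, length: int) -> bool:
    """True if a write of `length` bytes at byte `offset` covers some physical
    sector only partially, forcing the drive to read it before rewriting it."""
    if length <= 0:
        return False
    start_misaligned = offset % PHYSICAL_SECTOR != 0
    end_misaligned = (offset + length) % PHYSICAL_SECTOR != 0
    return start_misaligned or end_misaligned


# A partition at legacy LBA 63 is misaligned; LBA 2048 (1MiB) is aligned.
for start_lba in (63, 2048):
    offset = start_lba * LOGICAL_SECTOR
    print(f"4KiB write at LBA {start_lba}: RMW needed = {needs_read_modify_write(offset, 4096)}")
```

Note that even a perfectly aligned single 512-byte write still needs a read-modify-write, because it covers only an eighth of a physical sector.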