What's left of TLS

Incomplete Security

The TLS protocol (formerly SSL) is the basis of secure communications on the Internet. Every website that is accessed via HTTPS uses TLS in the background. However, TLS is getting on in years. Many design decisions were found to be unfavorable after extensive analyses, and the security of the protocol has been questioned. The reactions to these findings have been mostly patchwork. Small changes to the protocol have prevented attacks so far, but the problem is fundamental.

A Brief History of SSL

SSL (Secure Sockets Layer) was originally developed by Netscape. In 1995, when the World Wide Web was still in its infancy, the maker of the then-dominant browser released version 2.0 of the SSL encryption protocol (SSLv2). Version 1 existed only internally at Netscape. Numerous security vulnerabilities were discovered in SSLv2 within a short time.

SSLv2 supported many encryption algorithms that were already deemed insecure at the time, including the Data Encryption Standard (DES) in its original form with a key length of only 56 bits. The 1990s, when SSLv2 was being developed, were the hot phase of the "Crypto Wars." The export of strong encryption technologies from the United States was heavily restricted. Many governments discussed allowing strong encryption only under state control, with a third key that would be deposited with the intelligence services.

After that, Netscape published SSLv3 to fix at least the worst of the security problems. Although SSLv2 is only of historical importance today and has been disabled by virtually all modern browsers, its successor is still in use and you can still find web servers that only support SSLv3.

It was not until later that SSL was standardized. This process also involved renaming it to TLS (Transport Layer Security), which caused much confusion. In 1999, the IETF standardization organization published the TLS protocol version 1.0 in RFC 2246 (TLSv1.0). The now 14-year-old protocol is still the basis of the majority of encrypted communications. It later became known that the use of CBC encryption mode in TLSv1.0 was error-prone, but the problems long remained theoretical.

TLSv1.1, which was designed to iron out the worst weaknesses of CBC, followed in 2006. Two years later, TLSv1.2 was released, and it broke with some TLS traditions. However, TLSv1.1 and TLSv1.2 still lead a niche existence: Support for the two protocols is virtually nonexistent many years later, and vulnerabilities that were once regarded as theoretical are now celebrating a comeback.

Block Ciphers, Stream Ciphers

Communicating over a TLS connection basically involves a hybrid of public key and symmetric encryption. Initially, the server and client negotiate a symmetric key via what is typically an RSA-secured connection. The key is then used for encrypted and authenticated communication.

Although the initial connection nearly always relies on an RSA key, the subsequent communication relies on a real zoo of algorithm combinations. Adding to the confusion is that naming does not follow a uniform pattern. One possible combination (a cipher suite) is, say, ECDHE-RSA-RC4-SHA. This means: an initial connection authenticated using RSA, then a key exchange using Diffie-Hellman with elliptic curves, then data encryption via RC4, and data authentication using SHA-1.
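To make the naming scheme more tangible, the following minimal Python sketch splits an OpenSSL-style name into its four roles. The `CipherSuite` type and the `parse_suite` helper are hypothetical names introduced for illustration; the sketch assumes names of the form key exchange, authentication, cipher, MAC and deliberately rejects shorter names, which exist as well and are part of the confusion described above.

```python
from typing import NamedTuple


class CipherSuite(NamedTuple):
    key_exchange: str    # e.g., ECDHE
    authentication: str  # e.g., RSA
    cipher: str          # e.g., RC4 or AES256-GCM
    mac: str             # e.g., SHA or SHA384


def parse_suite(name: str) -> CipherSuite:
    """Split a name such as ECDHE-RSA-RC4-SHA into its parts.

    Hypothetical helper: only handles names of the form
    KEYEXCHANGE-AUTH-CIPHER[-MODE]-MAC.
    """
    parts = name.split("-")
    if len(parts) < 4:
        raise ValueError(f"unsupported cipher suite name: {name!r}")
    # Everything between the authentication and the MAC is the cipher.
    return CipherSuite(parts[0], parts[1], "-".join(parts[2:-1]), parts[-1])


if __name__ == "__main__":
    print(parse_suite("ECDHE-RSA-RC4-SHA"))
    print(parse_suite("ECDHE-RSA-AES256-GCM-SHA384"))
```

A name such as RC4-SHA, in which the key exchange and authentication are left implicit, raises a `ValueError` in this sketch.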

A basic distinction is made in encryption between block ciphers and stream ciphers. A block cipher encrypts only a single block of a fixed length, for example, 128 bits in the case of AES. A stream cipher can be applied directly to a data stream as it occurs in the data connection.

Block ciphers are the significantly more common approach. As a rule, the Advanced Encryption Standard (AES) is used today. This algorithm was published by the U.S. standards organization NIST after a competition between cryptographers in 2001.

Because a block cipher always encrypts a block of fixed length, it cannot be applied directly to a data stream. There are various modes, and in the simplest case, you just encrypt one block at a time. This Electronic Codebook Mode (ECB) cannot be recommended for security reasons, because identical plaintext blocks always produce identical ciphertext blocks.

How CBC and HMAC Work

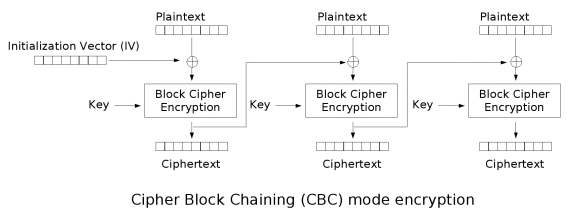

The most popular and most common mode today is Cipher Block Chaining mode (CBC). First, the initialization vector (IV) is generated as a random value and bit-wise XORed with the first data block. The result is encrypted. The next block of data is then XORed with the encrypted first block before it is encrypted in turn, and so on for each subsequent block (the schema is shown in Figure 1).
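The chaining step can be sketched in a few lines of Python. Because the standard library contains no AES, the block cipher below is a toy Feistel construction built on SHA-256; it is an assumption made purely for illustration and must not be used for real encryption. All function names are hypothetical. The sketch also encrypts the same data block by block (ECB) to show what chaining changes.

```python
import hashlib
import os

BLOCK = 16  # block size in bytes


def _xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def _round(key: bytes, i: int, half: bytes) -> bytes:
    # Round function of the toy cipher (NOT a real block cipher).
    return hashlib.sha256(key + bytes([i]) + half).digest()[: BLOCK // 2]


def toy_encrypt_block(key: bytes, block: bytes) -> bytes:
    left, right = block[:8], block[8:]
    for i in range(4):
        left, right = right, _xor(left, _round(key, i, right))
    return left + right


def toy_decrypt_block(key: bytes, block: bytes) -> bytes:
    left, right = block[:8], block[8:]
    for i in reversed(range(4)):
        left, right = _xor(right, _round(key, i, left)), left
    return left + right


def ecb_encrypt(key: bytes, plaintext: bytes) -> bytes:
    # Each block is encrypted independently of all others.
    return b"".join(toy_encrypt_block(key, plaintext[i:i + BLOCK])
                    for i in range(0, len(plaintext), BLOCK))


def cbc_encrypt(key: bytes, iv: bytes, plaintext: bytes) -> bytes:
    if len(plaintext) % BLOCK:
        raise ValueError("plaintext must be a multiple of the block size")
    prev, out = iv, []
    for i in range(0, len(plaintext), BLOCK):
        # XOR with the previous ciphertext block (or the IV), then encrypt.
        prev = toy_encrypt_block(key, _xor(plaintext[i:i + BLOCK], prev))
        out.append(prev)
    return b"".join(out)


def cbc_decrypt(key: bytes, iv: bytes, ciphertext: bytes) -> bytes:
    prev, out = iv, []
    for i in range(0, len(ciphertext), BLOCK):
        block = ciphertext[i:i + BLOCK]
        out.append(_xor(toy_decrypt_block(key, block), prev))
        prev = block
    return b"".join(out)


if __name__ == "__main__":
    key, iv = os.urandom(16), os.urandom(BLOCK)
    data = b"sixteen byte blk" * 2  # two identical plaintext blocks
    print("ECB:", ecb_encrypt(key, data).hex())
    print("CBC:", cbc_encrypt(key, iv, data).hex())
```

With two identical plaintext blocks, the ECB output repeats itself, whereas the two CBC ciphertext blocks differ because the second block is mixed with the first ciphertext block before encryption.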

However, encryption with CBC only guarantees that the data cannot be read by an attacker. The authenticity of the data is not guaranteed. Therefore, the HMAC method is used in a TLS connection before encryption with CBC. A keyed hash is computed from the message and a secret key and is then transmitted along with the message.
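Python's standard `hmac` module is enough to sketch the idea. The helper names `tag` and `verify` are hypothetical, and the example is simplified: The MAC in a real TLS record also covers a sequence number and the record header, which are omitted here. SHA-1 is used only because it matches the cipher suite named earlier.

```python
import hashlib
import hmac


def tag(key: bytes, message: bytes) -> bytes:
    """Compute an HMAC-SHA1 tag over the message."""
    return hmac.new(key, message, hashlib.sha1).digest()


def verify(key: bytes, message: bytes, received_tag: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(tag(key, message), received_tag)


if __name__ == "__main__":
    key = b"shared-secret-key"  # placeholder value for illustration
    message = b"GET /index.html"
    t = tag(key, message)
    print(verify(key, message, t))             # unmodified message
    print(verify(key, message + b"?evil", t))  # manipulated message
```

Without knowledge of the key, an attacker who changes the message cannot compute a matching tag, so the manipulation is detected on the receiving side.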

The combination of HMAC and CBC proved to be surprisingly shaky. In particular, the decision to apply HMAC first and CBC second turned out to be a mistake. If an attacker injects a manipulated data block, the returned error message reveals whether the failure was caused by the padding (the bytes that fill up an incomplete block) or by the HMAC check. Earlier versions of TLS sent different error messages in these two cases.

By sending a large number of faulty data blocks, an attacker could find out details about the encrypted data. This form of attack was named "Padding Oracle." Later, the system was changed so that an attacker always received the same error message. But along came the next problem: The response time still allowed the attacker to find out whether verification failed at the padding check or at the HMAC check.
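The two failure paths can be sketched as follows. The code models only the step after CBC decryption, in which the receiver checks a TLS-style padding (every padding byte carries the padding length) and then the HMAC. All names are hypothetical, and the record layout is simplified for illustration.

```python
import hashlib
import hmac

BLOCK = 16
MAC_LEN = 20  # length of an HMAC-SHA1 tag


class BadPadding(Exception):
    pass


class BadMac(Exception):
    pass


class DecryptError(Exception):
    pass


def build_record(mac_key: bytes, payload: bytes) -> bytes:
    """MAC first, then pad: payload || HMAC || padding (before encryption)."""
    body = payload + hmac.new(mac_key, payload, hashlib.sha1).digest()
    pad_len = -(len(body) + 1) % BLOCK
    # pad_len padding bytes plus the length byte, all with the value pad_len
    return body + bytes([pad_len]) * (pad_len + 1)


def open_record_leaky(mac_key: bytes, record: bytes) -> bytes:
    """Distinct errors tell an attacker WHICH check failed (the oracle)."""
    if not record:
        raise BadPadding()
    pad_len = record[-1]
    padding = record[-(pad_len + 1):]
    if (len(record) < pad_len + 1 + MAC_LEN
            or padding != bytes([pad_len]) * (pad_len + 1)):
        raise BadPadding()
    body = record[:-(pad_len + 1)]
    payload, mac = body[:-MAC_LEN], body[-MAC_LEN:]
    expected = hmac.new(mac_key, payload, hashlib.sha1).digest()
    if not hmac.compare_digest(mac, expected):
        raise BadMac()
    return payload


def open_record_uniform(mac_key: bytes, record: bytes) -> bytes:
    """Same error for both failures; the timing can still differ."""
    try:
        return open_record_leaky(mac_key, record)
    except (BadPadding, BadMac):
        raise DecryptError("bad record") from None
```

Note that `open_record_uniform` only hides the error type. The HMAC is still skipped whenever the padding is wrong, so the two cases take different amounts of time, which is exactly the remaining leak described above.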

These methods are the basis for recently disclosed attacks like BEAST [1] and Lucky Thirteen [2]. Detailed changes in the protocol implementations prevented these attacks in the short term, but that turned out to be anything but simple. It was shown, for example, that one of the changes introduced to prevent the BEAST attack in turn enabled a form of the Lucky Thirteen attack.

Authentication and Encryption

More modern block encryption modes combine authentication and encryption in a single step. One of these modes is called the Galois/Counter Mode (GCM, Figure 2). Although this mode is considered relatively complicated, GCM is free of patent claims, in contrast to other methods, and has no known security problems.

The AES-GCM method is supported in version 1.2 of TLS. In all previous versions of TLS, all block ciphers rely on CBC. The authors of the BEAST attack have published yet another attack on TLS. The CRIME attack [3] targets a side channel as well. Here, the authors take advantage of a weakness in TLS compression: Based on the size of the compressed and encrypted data, they can draw conclusions as to the contents of a data block.

TLS data compression is rarely used because many browsers do not support it. For server administrators, it is best simply to disable it.
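Why compression leaks information at all can be shown with Python's `zlib` module. The sketch assumes a hypothetical request in which attacker-controlled data (here, the path) is compressed together with a secret cookie; the cookie value and the `compressed_len` helper are invented for illustration. Encryption is left out because it hides the content of the data but not its length.

```python
import zlib

# Hypothetical secret header that the attacker wants to learn.
SECRET_HEADER = b"Cookie: session=7f3a9c2e"


def compressed_len(attacker_controlled: bytes) -> int:
    """Length of the compressed request, as visible on the wire."""
    request = (b"GET /" + attacker_controlled + b" HTTP/1.1\r\n"
               + SECRET_HEADER + b"\r\n")
    return len(zlib.compress(request))


if __name__ == "__main__":
    print(compressed_len(b"Cookie: session=7f3a9c2e"))  # correct guess
    print(compressed_len(b"Cookie: session=XQZWKJPL"))  # wrong guess
```

If the injected guess matches the secret, the compressor can replace the repetition with a short back-reference, and the request shrinks. A real attack refines its guesses step by step; the sketch compares one fully correct and one wrong guess only to make the effect unambiguous.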

In addition to block ciphers, older versions of TLS also support the RC4 stream cipher. The method was developed by Ron Rivest and was not originally intended for publication. But, in 1994, the RC4 encryption source code appeared in a newsgroup. Since then, it has become very popular because it is relatively simple and very fast.

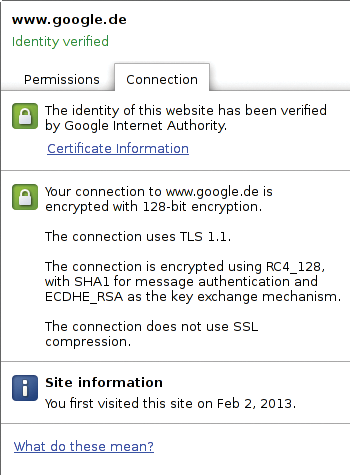

The use of RC4 is also possible in TLS and is actually the only option in older versions of the standard if you want to avoid the use of CBC. Therefore, many experts until recently recommended setting up TLS connections exclusively on RC4. Large websites such as Google rely on RC4 as the primary algorithm, and the credit card standard PCI DSS required server administrators to disable all CBC algorithms and to accept only connections via RC4. This was a mistake, as it turned out. At the Fast Software Encryption Workshop in March, a team led by cryptographer Dan Bernstein presented an attack on RC4 and TLS [4].

In the keystream that RC4 generates, certain values at certain positions do not occur randomly, but with increased frequency. If the same content is encrypted many times, statistical analysis thus lets attackers draw conclusions about the content. A potential target could be, say, a cookie in a web application.
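One long-known bias of this kind is easy to reproduce: The second keystream byte of RC4 is zero about twice as often as a uniform distribution (1 in 256) would predict. The following Python sketch implements RC4 and counts this event over many random keys. It illustrates the principle only and is not the attack presented in [4]; the helper `count_zero_second_bytes` and its parameters are hypothetical.

```python
import random


def rc4_keystream(key: bytes, n: int) -> bytes:
    """Return the first n keystream bytes of RC4 for the given key."""
    s = list(range(256))
    j = 0
    for i in range(256):  # key scheduling
        j = (j + s[i] + key[i % len(key)]) % 256
        s[i], s[j] = s[j], s[i]
    i = j = 0
    out = bytearray()
    for _ in range(n):  # keystream generation
        i = (i + 1) % 256
        j = (j + s[i]) % 256
        s[i], s[j] = s[j], s[i]
        out.append(s[(s[i] + s[j]) % 256])
    return bytes(out)


def count_zero_second_bytes(trials: int, seed: int = 1) -> int:
    """Count how often the second keystream byte is 0 over random keys."""
    rng = random.Random(seed)  # fixed seed so that runs are repeatable
    hits = 0
    for _ in range(trials):
        key = bytes(rng.randrange(256) for _ in range(16))
        if rc4_keystream(key, 2)[1] == 0:
            hits += 1
    return hits


if __name__ == "__main__":
    trials = 20000
    print("observed:", count_zero_second_bytes(trials))
    print("uniform expectation:", trials / 256)
```

An attacker who sees the same plaintext byte encrypted under many different keys can exploit such a skew: The most frequent ciphertext value at a biased position points to the plaintext byte.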

Unlike the vulnerabilities in CBC, there is probably no easy way to fix the problems in RC4 in the scope of TLS without breaking the standard. The recommendation to use RC4 instead of CBC is no longer an option.

Perfect Forward Secrecy

One possibility that some TLS algorithms offer is a key exchange with temporary keys. This approach ensures that the real key for data transmission is never transmitted across the wire. After the data transmission, the temporary key is destroyed. The big advantage here is that even if an attacker records the connection and later gains possession of the server's private key, he cannot decrypt the content. This property is called Perfect Forward Secrecy. It is generally desirable, and the most common method for this is a Diffie-Hellman key exchange.
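The arithmetic behind a Diffie-Hellman exchange fits into a few lines of Python. The parameters below are toy values chosen as an assumption for readability (a 127-bit prime is far too small for real use), and the function names are hypothetical.

```python
import secrets

# Toy parameters for illustration only; real deployments use
# standardized groups of at least 2048 bits.
P = 2**127 - 1  # a Mersenne prime
G = 3


def keypair() -> tuple:
    """Create a temporary (private, public) pair for one connection."""
    private = secrets.randbelow(P - 3) + 2  # value in [2, P - 2]
    return private, pow(G, private, P)


def shared_secret(own_private: int, peer_public: int) -> int:
    """Both sides derive the same value without ever sending it."""
    return pow(peer_public, own_private, P)


if __name__ == "__main__":
    client_priv, client_pub = keypair()
    server_priv, server_pub = keypair()
    # Only the public values cross the wire.
    print(shared_secret(client_priv, server_pub)
          == shared_secret(server_priv, client_pub))
```

Because both sides create fresh private values for each connection and discard them afterward, a recorded session cannot be decrypted later, even if the server's long-term key is compromised.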

TLS supports this, but, annoyingly, the Apache web server does not let you define the parameter length for the key exchange. A standard length of 1024 bits is specified, which is not enough. In methods such as Diffie-Hellman, which are based on the discrete logarithm problem, key lengths of 1024 bits should be avoided and at least 2048 bits should be used. Alternatively, TLS provides a key exchange with elliptic curve Diffie-Hellman. This method is considered secure even with much shorter key lengths.

In the Face of Vulnerabilities

It should be emphasized that the probability that the attacks discussed so far will lead to problems in real-world applications is quite low. However, the authors of the Lucky Thirteen attack explicitly say they expect further surprises with CBC. Even the authors of the attack on RC4 assume that improved attack methods will soon exist.

In the long term, it is thus desirable to completely banish CBC. This tactic also dramatically reduces the zoo of encryption modes that TLS supports. In TLSv1.1 and all earlier versions, all block cipher methods use CBC. All cipher suites using 3DES, IDEA, or CAMELLIA thus drop away. For reasons of backward compatibility, it is now typically impossible to rely on TLSv1.2 alone (Figure 3), which leaves only the problematic RC4 stream cipher.

In TLSv1.2, only AES encryption is supported in both (the problematic) CBC mode and the more modern GCM mode (Galois/Counter Mode). Both are also offered with and without Perfect Forward Secrecy.

In the best case, you would want to disable everything except the GCM algorithms; however, this approach only works for applications in which you have control over all clients and know that they support TLSv1.2. The pragmatic compromise is to continue to rely provisionally on CBC-based methods and ensure that only the current version of the SSL library (in most cases, OpenSSL) is used, with all security updates in place.

Your Apache Configuration

On the Apache web server, you can set the supported TLS modes through the options SSLProtocol and SSLCipherSuite. A configuration that uses only TLS 1.2 and only GCM algorithms with Perfect Forward Secrecy is shown in Listing 1. The problematic compression is disabled.

Listing 1: Configuration Example

SSLProtocol -SSLv2 -SSLv3 -TLSv1 -TLSv1.1 +TLSv1.2
SSLHonorCipherOrder on
SSLCipherSuite ECDHE-RSA-AES256-GCM-SHA384:DHE-RSA-AES256-GCM-SHA384
SSLCompression off

A more realistic approach for production operation is shown in Listing 2. All medium- and low-strength algorithms are switched off, as are algorithms that offer no authentication. Also, the now quite old SSL version 3 is disabled. TLS version 1.0 has long been supported by all browsers, so activation of the old SSL versions should no longer be necessary.

Listing 2: Practical Settings

SSLProtocol -SSLv2 -SSLv3 +TLSv1 +TLSv1.1 +TLSv1.2
SSLCipherSuite HIGH:!MEDIUM:!LOW:!aNULL@STRENGTH
SSLCompression off

One restriction is that algorithms that perform a key exchange with elliptic curves (ECDHE) are only supported in Apache version 2.4 or newer, but only a few distributions offer this version out of the box. The options I mentioned still work; unsupported algorithms are then simply ignored.

If you want to avoid 1024-bit Diffie-Hellman, you can either install an experimental patch from Apache [5] or delete the DHE-RSA-AES256-GCM-SHA384 method from the configuration and only offer a key exchange via elliptic curves. Disabling the vulnerable compression is not an option in older versions of Apache. It was introduced for the 2.2 series in version 2.2.24.

For the GCM algorithms in TLS 1.2 to work, you need OpenSSL version 1.0.1 or newer, which is now available in most Linux distributions. Then, you can check your configuration with the SSL test by Qualys [6]. You will see numerous comments if something is wrong with your configuration and a rating for the security of your configuration. However, considering the attack on RC4, I would advise against blindly following the recommendations given on the site: To counter the BEAST attack, the online test was still recommending the RC4 algorithm when this issue went to press.
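For a quick local check, a few lines of Python can also show what a server negotiates when the client offers only TLS 1.2 with GCM and forward secrecy. This is a minimal sketch that assumes Python 3.7 or newer; the function names `make_probe_context` and `probe` are hypothetical, and the hostname in the usage example is a placeholder for your own server.

```python
import socket
import ssl


def make_probe_context() -> ssl.SSLContext:
    """Client context that offers TLS 1.2 with (EC)DHE and AES-GCM only."""
    ctx = ssl.create_default_context()
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.maximum_version = ssl.TLSVersion.TLSv1_2
    ctx.set_ciphers("ECDHE+AESGCM:DHE+AESGCM")
    return ctx


def probe(host: str, port: int = 443, timeout: float = 5.0) -> tuple:
    """Connect and return (protocol version, negotiated cipher name)."""
    ctx = make_probe_context()
    with socket.create_connection((host, port), timeout=timeout) as raw:
        with ctx.wrap_socket(raw, server_hostname=host) as tls:
            return tls.version(), tls.cipher()[0]


if __name__ == "__main__":
    # Placeholder hostname; replace it with the server you want to test.
    print(probe("www.example.com"))
```

If the handshake fails with an `ssl.SSLError`, the server does not accept any of the offered combinations, which suggests that the GCM suites from Listing 1 are not enabled on it.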

The Bottom Line

TLS is getting on in years. The technology currently used in browsers and web servers is something no one would develop today. Recent attacks put a spotlight on its vulnerabilities. In practical terms, however, you do not really need to worry. The attacks are complicated and only possible in conjunction with circumstances that are fairly infrequent. Web application security is still typically endangered by far more mundane problems. Cross-site scripting vulnerabilities and SQL injection attacks, for example, which have nothing to do with cryptography, are a far more serious threat that cannot be prevented even with the best encryption.

However, this does not mean you should take the whole thing too lightly. The fact that the most important cryptographic protocol today is so vulnerable is certainly not a good thing, and more advanced exploits are very likely only a matter of time.

The solution to most of the problems discussed here has been around for a long time. It is a five-year-old standard called TLS version 1.2, which no one uses. The problems that dog TLS have long been known, which demonstrates one of the difficulties in developing secure network technologies: As long as there are no practical attacks, many programmers and system administrators see no reason to switch to newer standards and update their systems. They prefer to wait until they have clear evidence of an exploit and their systems are vulnerable in the field. This mindset does not make much sense, but it is, unfortunately, very widespread.