Manage Linux containers with Docker

Full Load

Docker is a new open source tool [1] designed to change how you think about cloud deployments. It provides functionality to create and manage container images that can be shared, modified, and easily deployed to bare metal servers, cloud hosts, or local environments. The technology that Docker depends on is present in recent Linux kernels, meaning that you can use Docker on any machine running a recent kernel [2]. Docker promises to enable "run anywhere" deployments for a wide class of applications that have previously been very hard to package and maintain.

Container Ship

For example, a typical LAMP-stack application might depend on a specific version of PHP, a particular Apache configuration, or the installation of certain operating system packages. Deploying the application to varied platforms can be difficult because of differences in package management or conflicts with the configuration used by other applications running in the same environment.

Docker's approach is to isolate components inside "containers," allowing your Apache process to have its own copy of the operating system, free from conflicts with other processes. These containers can be saved as "images" that can be worked on locally, shared with others, and ultimately deployed on your servers. Instead of requiring a correctly configured environment in which to run, your application can be deployed with a container that provides everything it needs.

The key technology enabling this approach is LXC, or Linux Containers [3]. Unlike a hypervisor-based virtual machine like Xen, LXC doesn't provide a full virtual machine with hardware emulation; instead, it provides a separate process space and network interface for applications to run in. This setup comes with a cost – you can't run non-Linux operating systems under LXC – but it can offer advantages in terms of lower resource usage and considerably faster startup times compared with a full VM.
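One way to see this separate process space and network interface is to look at the namespace handles the kernel exposes under /proc. The following is a minimal sketch, assuming a Linux system that provides /proc/<pid>/ns; the helper name show_namespaces is made up for illustration and is not part of LXC or Docker.

```shell
#!/bin/sh
# show_namespaces is a hypothetical helper, not part of LXC or Docker.
# It prints the namespace handles of a process (default: the current shell).
show_namespaces() {
    pid="${1:-$$}"
    ns_dir="/proc/$pid/ns"
    if [ ! -d "$ns_dir" ]; then
        echo "no namespace information under $ns_dir"
        return 0
    fi
    for ns in "$ns_dir"/*; do
        # On recent kernels each entry is a symlink whose target names the
        # namespace; processes sharing a namespace show the same target.
        printf '%s -> %s\n' "$(basename "$ns")" "$(readlink "$ns")"
    done
}
```

Running show_namespaces on the host and again inside a container should show different targets for entries such as pid and net, which is the isolation described above.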

Docker bundles LXC together with some other supporting technologies and wraps it in an easy-to-use command-line interface. Using LXC directly is a bit like trying to use Git with just commands like update-index and read-tree, without familiar tools like add, commit, and merge. Docker provides that layer of "porcelain" over the "plumbing" of LXC, enabling you to work with higher level concepts and worry less about the low-level details.

In particular, Docker's commands include diff, which can compare a container's filesystem with that of its parent image; commit, which creates a new image from the changes made to a container; and push, which shares a copy of your image with the Docker Index [4], a repository of publicly available images.
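These three commands can be chained into a simple "inspect, save, share" workflow. The sketch below is only an illustration: the helper name snapshot_and_share and the DOCKER variable are assumptions of this example, and the container ID and image name are whatever you pass in. Setting DOCKER=echo prints the commands instead of running them.

```shell
#!/bin/sh
# DOCKER is an assumption of this sketch: set DOCKER=echo for a dry run.
DOCKER="${DOCKER:-docker}"

# snapshot_and_share is a hypothetical helper name.
# $1 = container ID, $2 = name of the image to create and push
snapshot_and_share() {
    container="$1"
    image="$2"
    # Show which files changed relative to the parent image.
    $DOCKER diff "$container" || return 1
    # Save those changes as a new image.
    $DOCKER commit "$container" "$image" || return 1
    # Upload the image so that others can pull it.
    $DOCKER push "$image"
}
```

A dry run such as DOCKER=echo followed by snapshot_and_share abc123 example/lamp lists the three steps without touching any container; abc123 and example/lamp are placeholder values.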

Not Everything Fits

Docker is not suited to all use cases, though. Containers can't do everything that a full virtual machine can do, such as emulate different architectures, and you can't run a non-Linux kernel inside a container.

Security is also a work in progress. For example, "user namespaces," a vital component for preventing illegitimate access to the host system's resources, are only available from version 3.8 of the kernel. However, even if you don't want to use Docker as a virtualization solution, it's still valuable as a way of bundling together an application and its dependencies. Docker's other major issue is that it depends on the Aufs filesystem [5], which is not present in many kernels.
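Both constraints can be checked before installing anything. The following is a rough sketch, not an official Docker check: version_at_least and has_aufs are hypothetical helper names, the version comparison looks only at the major and minor numbers, and a new enough kernel does not guarantee that the distribution has enabled user namespaces.

```shell
#!/bin/sh
# version_at_least and has_aufs are hypothetical helpers for this sketch.

# Succeeds when version $2 is at least version $1 (major.minor only).
version_at_least() {
    want_major="${1%%.*}"
    want_rest="${1#*.}"
    want_minor="${want_rest%%[!0-9]*}"
    have_major="${2%%.*}"
    have_rest="${2#*.}"
    have_minor="${have_rest%%[!0-9]*}"
    [ "$have_major" -gt "$want_major" ] && return 0
    [ "$have_major" -lt "$want_major" ] && return 1
    [ "$have_minor" -ge "$want_minor" ]
}

# Succeeds when the filesystem list ($1, default /proc/filesystems) names aufs.
# Only filesystems the running kernel has registered are listed, so a module
# that is available but not yet loaded will not show up.
has_aufs() {
    grep -qw aufs "${1:-/proc/filesystems}" 2>/dev/null
}

if version_at_least 3.8 "$(uname -r)"; then
    echo "kernel is new enough for user namespaces"
else
    echo "kernel predates user namespaces"
fi
if has_aufs; then
    echo "aufs is available"
else
    echo "aufs not found"
fi
```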

Loading Containers

In this article, I'll look at creating an image for serving LAMP-stack web applications as an example. Before getting started, you'll need to install Docker. If you're running Ubuntu 12.10 or 13.04, you can skip straight to the installation instructions on the website. Other users can either run in a virtual machine or access the unofficial Docker packages that exist for openSUSE 12.3 [6] and Gentoo [7].

The next step is to choose a base image for your container. I chose a CentOS base image, but you could easily use Ubuntu, Debian, or any other distribution. Docker provides a "registry" of publicly available images, and you can download images using the docker pull command:

$ docker pull centos

Once you have downloaded your base image, you can start a new container:

$ docker run -i -t centos /bin/bash

This command runs Bash as an interactive process – you can now type commands and execute applications within your container. Because the CentOS base image is only a minimal installation, it doesn't include PHP or MySQL, so I'll need to install them:

$ yum install httpd php php-common php-cli php-pdo php-mysql php-xml php-mbstring mysql mysql-server

The container now contains a working PHP, Apache, and MySQL installation. Before doing anything else, save your container as an image. This step will allow you to create new containers with your stack preinstalled, which means you don't need to download those packages every time you create a new container. Type exit to quit the Bash process, and you will return to your normal command prompt. Next, you can run

$ docker ps -a

to get some output similar to that shown in Listing 1.

Listing 1: Output of docker ps -a

$ docker ps -a
ID             IMAGE           COMMAND     CREATED         STATUS   COMMENT   PORTS
1758290cbef2   centos:latest   /bin/bash   8 minutes ago   Exit 0

The ID shown in the listing is used to identify the container you were using, and you can use this ID to tell Docker to create an image, using docker commit <id> <name>, for example:

$ docker commit 1758290cbef2 LAMP
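If you would rather not copy the ID by hand, you can pull it out of the docker ps -a output. This is a small sketch that assumes the column layout shown in Listing 1 (a header line followed by one row per container, with the ID in the first column) and that the container you just exited is the first row; first_container_id is a hypothetical helper name.

```shell
#!/bin/sh
# first_container_id is a hypothetical helper. It reads `docker ps -a` output
# on stdin and prints the first column of the first row after the header.
first_container_id() {
    awk 'NR == 2 { print $1 }'
}

# Example use (requires Docker, so it is left commented out here):
#   id="$(docker ps -a | first_container_id)"
#   docker commit "$id" LAMP
```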

Run docker images to see your new image in the list (Listing 2).

Listing 2: Output of docker images

$ docker images
REPOSITORY   TAG      ID             CREATED
LAMP         latest   7c34f69c3f93   9 seconds ago

Now you can start a new container using this image by running:

$ docker run -i -t LAMP /bin/bash

Once the Bash prompt appears, you can run:

$ php -v

to check that PHP is working.

A web server isn't much use if it doesn't serve web pages, though. To support network access, Docker provides the ability to forward ports from containers to the host. Exit from the previous container and start a new one with port 80 forwarded:

$ docker run -i -t -p :80 LAMP /bin/bash

If you're running Docker inside a VM, you'll need to forward port 80 on the VM to another port on the VM's host machine.

Before you can serve anything, you need to create a page. From the Bash prompt, do:

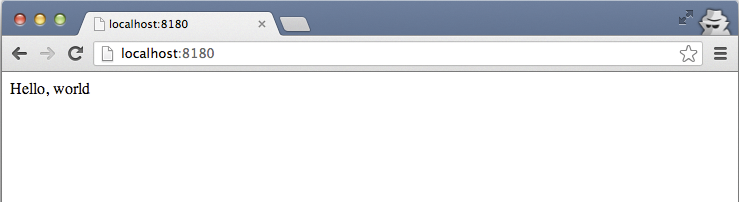

$ echo "<?php echo 'Hello, world'; ?>" > /var/www/html/index.php

Now, you're ready to start Apache:

$ /sbin/service httpd start

With Apache running, you should be able to access the site as shown in Figure 1. From here, you can install open source web applications, such as Drupal, or WordPress, or anything else.
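You can also check the page from a terminal instead of a browser. The sketch below assumes curl is installed on the host and that the forwarded port is reachable at http://localhost/; both the URL and the helper name page_ok are assumptions for illustration, so adjust them to your setup.

```shell
#!/bin/sh
# page_ok is a hypothetical helper: it reads a response body on stdin and
# succeeds when the body contains the greeting written to index.php.
page_ok() {
    grep -q 'Hello, world'
}

# Example use (needs the container with Apache running, so it is left
# commented out here; the URL is an assumption):
#   curl -s http://localhost/ | page_ok && echo "site is up"
```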

Conclusions

Docker provides an alternative to virtual machines for packaging, testing, and deployment. It builds on container technologies that are established, albeit new to Linux, to provide process and network isolation. Additionally, by adding tools to manage images, track changes in containers, and distribute new images to others, it offers a solution that is greater than the sum of its parts. Docker's real magic is that it turns deployable images into something that can be shared and compared in a way that's quite similar to how people share and compare source code.

Docker is currently "alpha" software, and the Docker team does not claim that the product is ready for production. Features such as the ability to mount disk volumes from the host system into guest containers are still in development. A project to integrate Docker as a back-end provider for Vagrant is underway but not yet usable. The "build file" system, which enables the scripting of image creation, is effective but could be easier to use, and cleaning up after a failure to build an image is time consuming.
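To give an idea of what scripted image creation looks like, here is a sketch of a build file that repeats the interactive LAMP steps from this article. It uses the FROM, RUN, EXPOSE, and CMD instructions; the exact syntax accepted by the alpha releases may differ, the package names are simply those used earlier and may not exist in every CentOS release, and write_lamp_buildfile is a hypothetical helper name.

```shell
#!/bin/sh
# write_lamp_buildfile is a hypothetical helper; it writes a build file to
# the path given as $1 (default: ./Dockerfile).
write_lamp_buildfile() {
    cat > "${1:-Dockerfile}" <<'EOF'
# Start from the same base image used in the interactive session.
FROM centos
# Install the stack non-interactively.
RUN yum install -y httpd php php-common php-cli php-pdo php-mysql php-xml php-mbstring mysql mysql-server
# Create the test page.
RUN echo "<?php echo 'Hello, world'; ?>" > /var/www/html/index.php
# Document the port Apache listens on.
EXPOSE 80
# Run Apache in the foreground so the container keeps running.
CMD ["/usr/sbin/httpd", "-D", "FOREGROUND"]
EOF
}
```

After calling write_lamp_buildfile in an empty directory, a command along the lines of docker build -t lamp . would build the image; the tag lamp is a placeholder.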

However, the Docker team is running a very open development process on GitHub [8]. Functionality for mounting volumes inside containers is being developed in response to feedback from users, after initially being rejected, and new feature ideas are debated in public.

In the long term, the Docker team promises support for a wider range of kernels and for different back-end technologies, offering alternatives to LXC and Aufs. A plugin architecture is being discussed that could enable better integration with existing cloud provisioning tools. Right now, Docker is a great idea, and the current implementation offers just enough functionality to demonstrate the potential of container-based deployment. With the features already in the pipeline, Docker could be one of 2013's biggest open source projects.