Asynchronous PostgreSQL

Sophisticated

One problem with web applications is often observed in practice: Without much effort (and without much thought) developers put together a web application with a database as a back end. Over time, the amount of data grows and eventually the system become sluggish. Impatient users press the reload button over and over again. To remedy this, the database administrator first creates indexes in the database and then purchases new technology, which helps – at least for a while. In the long term, the combination of a database at the edge of its capacity and an impatient user can very quickly lead to an application that takes frequent breaks.

Finding the Root Cause

The application in the example here is quite conservative: the Apache web server, mod_cgi, some CGI programs in Perl, and the PostgreSQL database. Apache HTTPD provides the Timeout directive to deal with user-induced denial-of-service problems like this and allows monitoring of the web server's I/O channels, such as the TCP connection to the browser or the stdout channel of a CGI program. If no data is transmitted over the specified period of time, the server terminates the request with an error. When releasing the resources allocated for the request, it also stops the CGI program. For this purpose, it first sends a SIGTERM signal to the program. If that does not help, this is followed a few seconds later by a SIGKILL.

This approach is simple but has two major drawbacks. First, the web server does not notice whether the browser closes the connection in the meantime because it does not expect any data to come in on the browser connection. Second, you cannot release the occupied resources in the database simply by terminating the CGI program.

This is what really happens: The browser sends a request, and the CGI program launches and sends the database query, which takes some time. The impatient user presses the button to reload the page.

Although the browser terminates the current request, the server doesn't see this because it is waiting for the CGI program. Now the timeout occurs and the program is terminated. Unfortunately, the database will not notice this and will continue the SQL query. A new request from the user then initiates a second query against the database, which is just as slow. After a few more clicks, the system is a goner.

Without changing mod_cgi or adding another module, it is impossible to terminate the request on the web server when the browser closes the connection. However, it is feasible to complete the database query as soon as the web server sends the SIGTERM signal. In this article, I'll show you how this is done. To follow the examples, you need a database in which the PL/pgSQL language is enabled. I used PostgreSQL version 8.4.

Test Environment

If you have a large test database available for which you can formulate SQL queries that take a few seconds or minutes to complete, you can use that. If not, PostgreSQL provides the pg_sleep function. The plpgsql function from Listing 1 does nothing but consume a huge amount of computing time so that the associated process will assume one of the top positions in the top output. For testing, you can call the burncpu program with psql:

r2=> select burncpu('30s');

burncpu

-----------------

00:00:30.000053

The results take 30 seconds to deliver. The CPU load table shows the associated database process for this time (Figure 1).

Listing 1: burncpu

01 CREATE OR REPLACE FUNCTION burncpu (tm INTERVAL) 02 RETURNS INTERVAL AS $CODE$ 03 DECLARE 04 stmp TIMESTAMP := now()+tm; 05 i INT; 06 BEGIN 07 WHILE clock_timestamp()<stmp LOOP 08 i:=1; 09 END LOOP; 10 RETURN clock_timestamp()-now() 11 END; 12 $CODE$ LANGUAGE plpgsql;

Another ingredient you need is the CGI program shown in Listing 2. You might need to install the Perl modules common::sense, DBI, and DBD::Pg if they are not in place – recent Linux distributions should have them in their software repositories – and you also need to customize the login information for the database.

Listing 2: burn0.pl

01 #!/usr/bin/perl

02

03 use common::sense;

04 use DBI;

05

06 print "Status: 200\nContent-Type: text/plain\n\n";

07 $|=1; $|=0; # flush

08

09 my $dbh=DBI->connect('dbi:Pg:dbname=r2', 'ipp', undef, {RaiseError=>1});

10

11 my $sth=$dbh->prepare('select burncpu(?)');

12 $sth->execute((($ENV{QUERY_STRING}+0) || .5).'s');

13

14 while( my $row=$sth->fetchrow_arrayref ) {

15 print "@$row\n";

16 }

Workaround

The script is simple, but the attentive reader might wonder what line 7 is good for: It bypasses a bug present at least in Apache 2.2.21 compiled with the prefork multiprocessing module (MPM). If a CGI program does not output anything on stdout or stderr, the web server runs into the timeout when trying to read stdout; then, when it tries to read from stderr, it runs into the timeout again.

Line 7 ensures that two lines are written on stdout; thus, only one timeout interval is needed. Unfortunately, this prevents the correct HTTP error code 504 being sent. Instead, the web server returns an empty response.

You need to install the program as burn0.pl in a directory configured for CGI scripts. In what follows, I assume the web server is running on localhost and the URL path /cgi/ is mapped to the CGI directory:

$ curl http://localhost/cgi/burn0.pl 00:00:00.50023

The output shows the amount of computing time the database engine consumed. You can pass in the desired time as a CGI parameter:

$ curl http://localhost/cgi/burn0.pl\?3 00:00:03.000207

In my httpd.conf file, I set a timeout of five seconds. If the SQL query runs for 4.9 seconds, the server should still provide a reasonable answer, but not after 5.1 seconds (Listing 3).

Listing 3: Timeout Test

$ time curl http://localhost/cgi/burn0.pl\?4.9 00:00:04.900198 real 0m4.958s user 0m0.003s sys 0m0.006s $ time curl http://localhost/cgi/burn0.pl\?5.1 curl: (52) Empty reply from server real 0m5.044s user 0m0.005s sys 0m0.005s

To observe the effect described above, call burn0.pl with the parameter 60. The curl command is finished after five seconds, but you can watch the database process eating CPU cycles for a full minute.

Help

The attentive programmer will therefore want to do something to make sure the SIGTERM terminates not only the CGI program but also the database process. One variant would simply be to kill it. PostgreSQL starts a separate process for each user, so that is equivalent to aborting the current transaction.

You now have three problems to solve:

- The SIGTERM must be treated promptly.

- The PID of the process database has to be found.

- The database process must be stopped.

As a solution to the first problem, an experienced Perl programmer will probably immediately think of $SIG{TERM}.

Unfortunately, that does not work here because modern Perl versions only deliver asynchronous signals at secure points in the program. The middle of a C function being called does not qualify as such.

The handler will thus not be called until $sth->execute returns in line 12. The POSIX::sigaction module, however, lets you install the signal handler so that the signal is delivered promptly.

Network Problem

A glance at the documentation for DBD::Pg [1] discloses a solution to the second problem. The driver provides the PID of the database process in $dbh->{pg_pid}.

Another problem arises, however, if the database can be running on another computer. Even if the CGI process has the necessary rights to send a signal to the database process, it cannot terminate the process on another computer.

However, the PostgreSQL documentation [2] reveals a database function for this purpose: pg_cancel_backend(int).

Final Product

Listing 4 shows the complete program, burn1.pl. There are some new lines: 5 and 12 to 22. Line 22 installs the sig function as a signal handler for SIGTERM. When the signal arrives, line 13 opens a new connection to the database and calls the pg_cancel_backend function. After this the program terminates. The call to

$ curl http://localhost/cgi/burn1.pl\?120

now returns after five seconds, and the database process is also gone.

Listing 4: burn1.pl

01 #!/usr/bin/perl

02

03 use common::sense;

04 use DBI;

05 use POSIX qw(SIGTERM);

06

07 print "Status: 200\nContent-Type: text/plain\n\n";

08 $|=1; $|=0; # flush

09

10 my $dbh=DBI->connect('dbi:Pg:dbname=r2', 'ipp', undef, {RaiseError=>1});

11

12 sub sig {

13 my $db2=DBI->connect('dbi:Pg:dbname=r2', 'postgres', undef, {RaiseError=>1});

14 my $st2=$db2->prepare('select pg_cancel_backend(?)');

15 $st2->execute($dbh->{pg_pid});

16 if( !$st2->fetchrow_arrayref->[0] ) {

17 warn "couldn't stop backend PID=$dbh->{pg_pid}\n";

18 }

19 exit 1;

20 }

21

22 POSIX::sigaction(SIGTERM, POSIX::SigAction->new(\&sig)) or die "Error setting SIGTERM handler: $!\n";

23

24 my $sth=$dbh->prepare('select burncpu(?)');

25 $sth->execute((($ENV{QUERY_STRING}+0) || .5).'s');

26

27 while( my $row=$sth->fetchrow_arrayref ) {

28 print "@$row\n";

29 }

However, this solution has serious drawbacks. The first is a security problem. Only the administrator of the database is allowed to call pg_cancel_backend. The program thus logs in as user postgres for the second connection. From the viewpoint of security, a CGI program should not have administrative rights to the database.

Moreover, the POSIX::sigaction style of signal handling causes the same problems that prompted the introduction of safe signals. In the given case, it is probably irrelevant because, when the signal arrives, the process is very likely stuck in a read, recv, or similar system call, waiting for data. Additionally, the signal handler terminates the program. In other words, the signal does not interrupt any critical areas, such as malloc, which would lead to a memory management error followed by a crash. Also, there are no memory leaks because the program terminates anyway. Generally, however, use of POSIX::sigaction is discouraged in Perl programs.

A third problem arises from the need to open a second database connection just to terminate an existing one. It is rather like taking a sledgehammer to crack a nut, and you could hit limits so that the new connection cannot be opened.

Another Way

Preferably, you do not want to wait for the database inside the DBI driver. Instead, you should only initiate the operation there, thus avoiding the problems associated with signal handling. Also, you need a feature that tells whether or not the operation has completed. The best technique is not to query this regularly but to poke it at reasonable points in time. All this can be implemented with DBD::Pg.

Listing 5 shows the results. Line 5 imports the constant PG_ASYNC, which is passed to the driver in line 19 together with the SQL command. Thus, the behavior of the subsequent execute command changes; it no longer waits for the database operation to complete. Now it's the responsibility of the program to use $Dbh-> pg_ready to check periodically whether the request has completed and, possibly, to read the result.

Listing 5: burn2.pl

01 #!/usr/bin/perl

02

03 use common::sense;

04 use DBI;

05 use DBD::Pg qw/PG_ASYNC/;

06 use IO::Select;

07

08 print "Status: 200\nContent-Type: text/plain\n\n";

09 $|=1; $|=0; # flush

10

11 my $dbh;

12

13 $SIG{TERM}=sub {

14 $dbh->pg_cancel if $dbh and $dbh->{pg_async_status}==1;

15 };

16

17 $dbh=DBI->connect('dbi:Pg:dbname=r2', 'ipp', undef, {RaiseError=>1});

18

19 my $ sth = $ dbh-> prepare ('select burncpu (?)' {pg_async=>PG_ASYNC});

20 $sth->execute((($ENV{QUERY_STRING}+0) || .5).'s');

21

22 my $sel=IO::Select->new($dbh->{pg_socket});

23 while( $sel->can_read and !$dbh->pg_ready) {

24 warn "not ready, yet. keep polling\n";

25 }

26 if( $dbh->{pg_async_status}==1 ) {

27 $dbh->pg_result;

28

29 while( my $row=$sth->fetchrow_arrayref ) {

30 print "@$row\n";

31 }

32 } elsif( $dbh->{pg_async_status}==-1 ) {

33 warn "query cancelled\n";

34 }

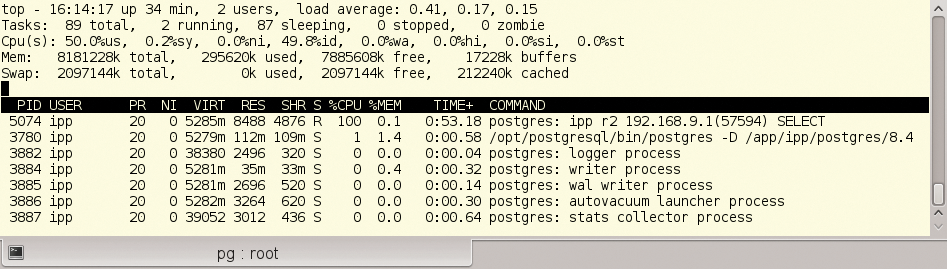

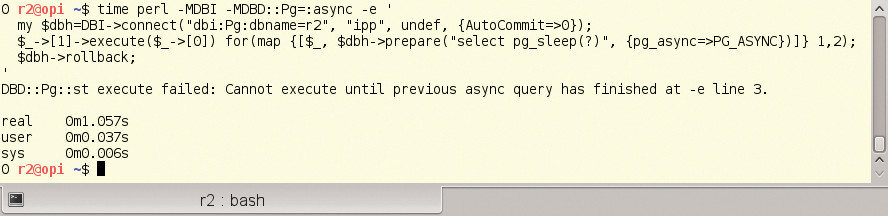

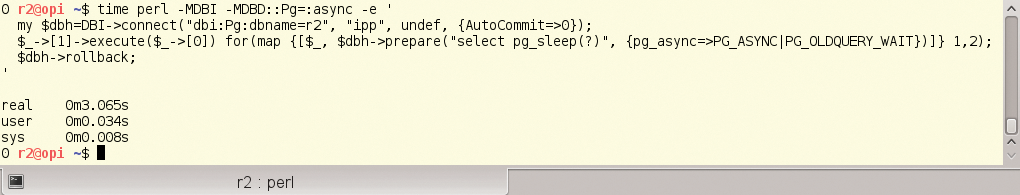

When I first learned of this option, I suspected that it could also execute multiple SQL commands in parallel on a database connection. Unfortunately, this is not true. Each database process can run only one SQL statement at a time. Thanks to PG_ASYNC, execute immediately returns with an error if you try to run a second command while the first is still running. Figure 2 shows the effect with two statements: The first sleeps for one second and the second for two seconds. The complete call only takes one second, then an error message appears. So, only the first statement is executed. The pg_async key in the hash passed to prepare is a bit field. PG_ASYNC can thus be modified with one of the other two constants. If you pass in

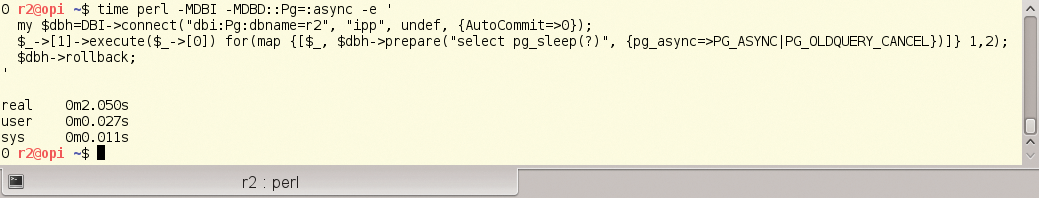

PG_ASYNC | PG_OLDQUERY_CANCEL

or

PG_ASYNC + PG_OLDQUERY_CANCEL

the command currently running is aborted and the new one started. The example in Figure 3 takes two seconds. Only the second statement is executed. PG_OLDQUERY_WAIT waits for the end of the statement currently running before the next one starts. The command in Figure 4, therefore, needs three seconds.

PG_ASYNC flag, the script can only run one SQL statement at any given time.

PG_ASYNC|PG_OLDQUERY_CANCEL tells the script to stop processing the old query and start a new one.

PG_ASYNC|PG_OLDQUERY_WAIT tells a Perl script to wait for the end of the first query.One question remains in the CGI program: When is it reasonable to use the pg_ready function? In a loop, over and over again? That would work, but it would be a huge waste of CPU time. To counteract this, you could sleep in the loop for a while (see the example for pg_ready in the DBD::Pg documentation), but it would slow down the program unnecessarily.

Solutions

The right way to approach the problem is as follows: The medium for the exchange of data between DBD::Pg and the database is a TCP connection. Once the database is done processing, it usually sends data to the client, so you wait for I/O activity on the socket and call pg_ready every time something happens. The DBD::Pg driver conveniently provides the socket descriptor in $dbh->{pg_socket}. Caution: This is not a file handle, but a Unix file descriptor. Line 22 generates an IO::Select object.

The script waits in $sel->can_read (line 23) until data arrives in the connection and then calls pg_ready. If the function returns "false," the program simply continues to wait.

The code at this point assumes that the socket is no longer readable after the pg_ready call. I'm not sure whether that is always guaranteed. In my experiments, the cycle was never executed; that is, the results were in place when the data arrived. Lines 26 and 32 evaluate $dbh->{pg_async_status}. A value of 1 indicates an asynchronous SQL command is active, 0 means the last command was synchronous, and -1 tells you the last command was asynchronous and has been aborted.

If the command has not been canceled, $dbh->pg_result is called. This function returns exactly the same as $dbh->execute for synchronous commands. The return value is ignored here. The call itself is necessary though; otherwise, fetchrow_arrayref does not return a result. For database updates especially, the return value is often important.

What happens now if Apache sends the SIGTERM signal while the program is waiting in $sel->can_read in line 23 (or more precisely, in the underlying system call select)? The system call is aborted. Even before $sel->can_read returns, the program flow reaches a safe point where the signal handler can be called. The signal handler determines that an asynchronous request is active and therefore calls $dbh->pg_cancel (see the box "What Exactly Does pg_cancel Do?"). This function tells the database process to cancel the current query and waits for confirmation, then $sel->can_read returns. The following pg_ready call returns "true," but the query was canceled; therefore, the program flow ends up in line 33.

Better But Not Good

Although the program now looks quite useful, it still has a weak point that is only obvious when you understand in detail how safe signal handling in Perl works. You will see that it is not really suitable for this case.

What problem is it that the safe signals in Perl are actually trying to solve? When a signal arrives, the signal handler is called. This can happen at any time. Now, if the program is about to change some global variables, and the signal handler wants to manipulate the same variables, chaos is inevitable.

The prime example of this is malloc. The program is trying to use this function to allocate more memory. Depending on the implementation, it manages several global lists or similar structures to do so; however, if the signal handler also needs memory, precisely the situation described has occurred. Languages such as Perl rely heavily on memory management to allocate and free memory for variables as needed. Therefore, it is hardly possible to write a signal handler in Perl that does not work with global structures.

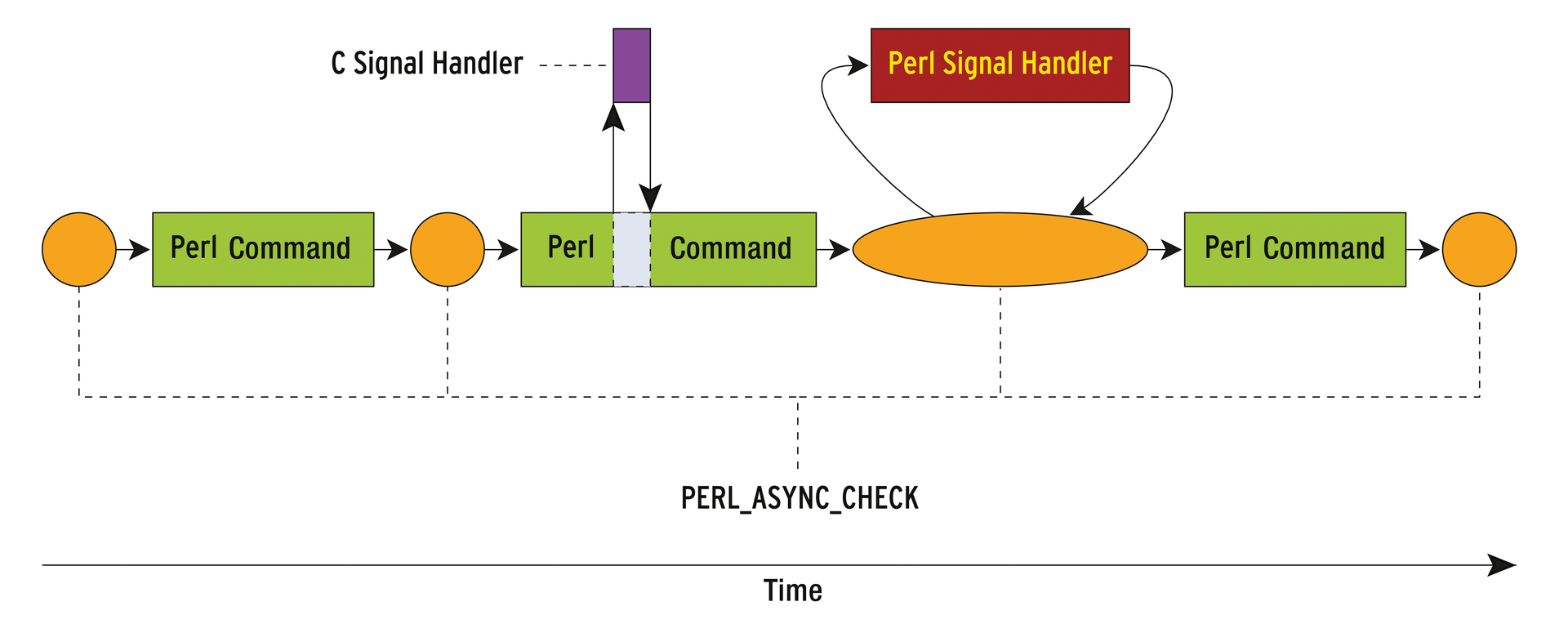

Delayed Processing

For safe signal handling, Perl now internally manages a number of flags. If a script installs a handler for a signal, the interpreter does not call the Perl function when the signal arrives. Instead, the signal handler is in fact an internal function, which only sets one of the flags. At appropriate points, the Perl interpreter then checks, using the macro PERL_ASYNC_CHECK, whether any of the flags are set and, if so, calls the signal handler in Perl. Figure 5 represents this schematically. Because the signal handler now only changes its own data structures, there are no more unexpected program crashes. In this sense, the signal treatment is safe.

Unfortunately, timely delivery of signals can get stuck in the pipeline. The problem results from a race condition. The can_read call in line 23 calls the Perl select command at some time. Within this command, what happens at the C level is approximately this:

PERL_ASYNC_CHECK; /* Some other C commands */ select(...); <-- Syscall

A check is performed to see if signals are pending. This check is followed by a series of commands to convert the parameters of the Perl select command into parameters that the kernel select call understands. Finally, the kernel is called, and the process is blocked. But, what happens if the signal arrives between PERL_ASYNC_CHECK and the kernel call? In this case, the C-level signal handler sets its bit as usual. However, it cannot prevent the process blocking in the system call. The signal is therefore not delivered to the Perl handler until the system call returns. For the CGI program, it will look as if the SIGTERM has disappeared. Apache then sends the SIGKILL shortly thereafter, and the CGI program aborts, but the database process continues.

Correct Signal Handling

The problem is that the program waits for two things at the same time: Signals and data from the socket. Three possible approaches could solve this problem:

- The kernel is told to send a signal when the socket becomes ready to be read [3].

- The signal is mapped to a kind of file descriptor that becomes readable when a signal is received.

- The kernel implements a system call, to which the expected signals and file descriptors are passed; it works much like

select[4].

All three types are implemented on Linux and, thanks to additional modules from CPAN, can also be used with Perl. In the following discussion, I will focus on the second approach.

A fairly compatible method of implementing this on a Unix-like system is known as the "self-pipe trick" [5]. First, you create a pipe for each expected signal. Both ends of the pipe are switched to non-blocking mode. For this purpose, the signal handler to be implemented in C then writes an arbitrary byte to the pipe. This can go wrong if the pipe is full, but it will not block the process because the file descriptor is non-blocking. Now the read end of the pipe, and other file descriptors, can be monitored with an ordinary select, poll, or epoll call.

Economical

This method is very wasteful, however. To manage one bit of information, a multiple-kilobyte buffer is created in the kernel. The Linux developers have implemented an antidote for this in the form of the system calls signalfd and eventfd. The CPAN Linux::FD module [6] supports both.

For Perl programmers, the AnyEvent module [7] with libev [8] as the back end is more convenient. This uses eventfd if the call is available; otherwise, a self-pipe is created.

Listing 6 shows the program. The interesting part here is played by the query function. If you're not familiar with AnyEvent, see the "AnyEvent – A Brief Overview" box at this point.

Listing 6: burn3.pl

01 #!/usr/bin/perl

02

03 use common::sense;

04 use DBI;

05 use DBD::Pg qw/:async/;

06 use AnyEvent;

07 use POSIX qw/SIGTERM SIGINT SIG_BLOCK SIG_UNBLOCK/;

08

09 sub query {

10 my $sql=pop;

11 state $dbh||=DBI->connect('dbi:Pg:dbname=r2', 'ipp', undef, {RaiseError=>1});

12 my $stmt=$dbh->prepare($sql, {pg_async=>PG_ASYNC});

13

14 my $done=AE::cv;

15 my $cancel=sub {

16 $dbh->pg_cancel if $dbh->{pg_async_status}==1;

17 $done->send;

18 };

19 my $pg_w=AE::io $dbh->{pg_socket}, 0, sub {

20 $dbh->pg_ready and $done->send;

21 };

22

23 my $sigblock=POSIX::SigSet->new(SIGTERM, SIGINT);

24 POSIX::sigprocmask SIG_BLOCK, $sigblock;

25 my @sig_w=map {AE::signal $_, $cancel} qw/TERM INT/;

26 $stmt->execute(@_);

27 POSIX::sigprocmask SIG_UNBLOCK, $sigblock;

28

29 $done->wait;

30

31 return $dbh->{pg_async_status}==1 ? ($dbh->pg_result, $stmt) : ();

32 }

33

34 print "Status: 200\nContent-Type: text/plain\n\n";

35 $|=1; $|=0; # flush

36

37 my ($rc, $sth)=query($ENV{QUERY_STRING} || '1s', 'select burncpu(?)');

38

39 if( defined $rc ) {

40 while( my $row=$sth->fetchrow_arrayref ) {

41 print "@$row\n";

42 }

43 } else {

44 warn "query cancelled\n";

45 }

Signals in View

Line 19 installs a watcher for the database socket, which ends the main event loop in line 29 once the result of the SQL operation exists. The two signal watchers created in line 25 are more interesting. The $cancel function, which they call, cancels the database operation and ends the event loop. Again, to avoid a race condition, the initialized signals must not occur between installing the handler and the end of the execute operation, that is, between lines 25 and 26. The two sigprocmask calls in lines 24 and 27 ensure this.

What happens in detail when Apache sends the SIGTERM signal? If it occurs before line 24, the program just stops, but because no SQL query is running, this is not critical. Between lines 24 and 27, delivery is delayed at the kernel level. If the signal arrives in this period, the process does not see it until after line 27. Here, the signal handlers are already installed, and the SQL operation is initiated. The signal handler installed by AnyEvent at the C level writes to eventfd or the self-pipe and returns. Now, at least, one file descriptor, eventfd, is ready to be read. That is, the epoll system call used by the main event loop reports this. The signal watcher is called.

Conclusions

The pitfalls you can encounter with a simple CGI program are amazing. Fortunately, the query function from final version can be fairly easily shifted out into a module and reused. Attentive programmers should know what to look for and what the potential pitfalls are. Unfortunately, all of the work so far only solves half the problem. If the timeout does not occur on the web server, but instead the problem is sitting in front of the screen reloading the page again and again, you will very quickly have a bottleneck in the database (see "What About Performance?"). With mod_perl instead of mod_cgi you can quite easily identify the disappearance of the browser. The timeout on the user side could thus be detected quickly and the current SQL operation aborted, which is the next solution I'll tackle.