OpenNebula – Open source data center virtualization

Reaching for the Stars

OpenNebula [1] separates existing cloud solutions into two application categories – infrastructure provisioning and data center virtualization [2] – and places itself in the latter group. This classification allows for clear positioning compared with other solutions – a topic I will return to later in this article.

What Is OpenNebula?

OpenNebula relies on various established subsystems to provide resources in the areas of virtualization, networking, and storage. This is a significant difference from alternative solutions like OpenStack and Eucalyptus, both of which favor their own concepts; OpenStack's Swift storage is one example.

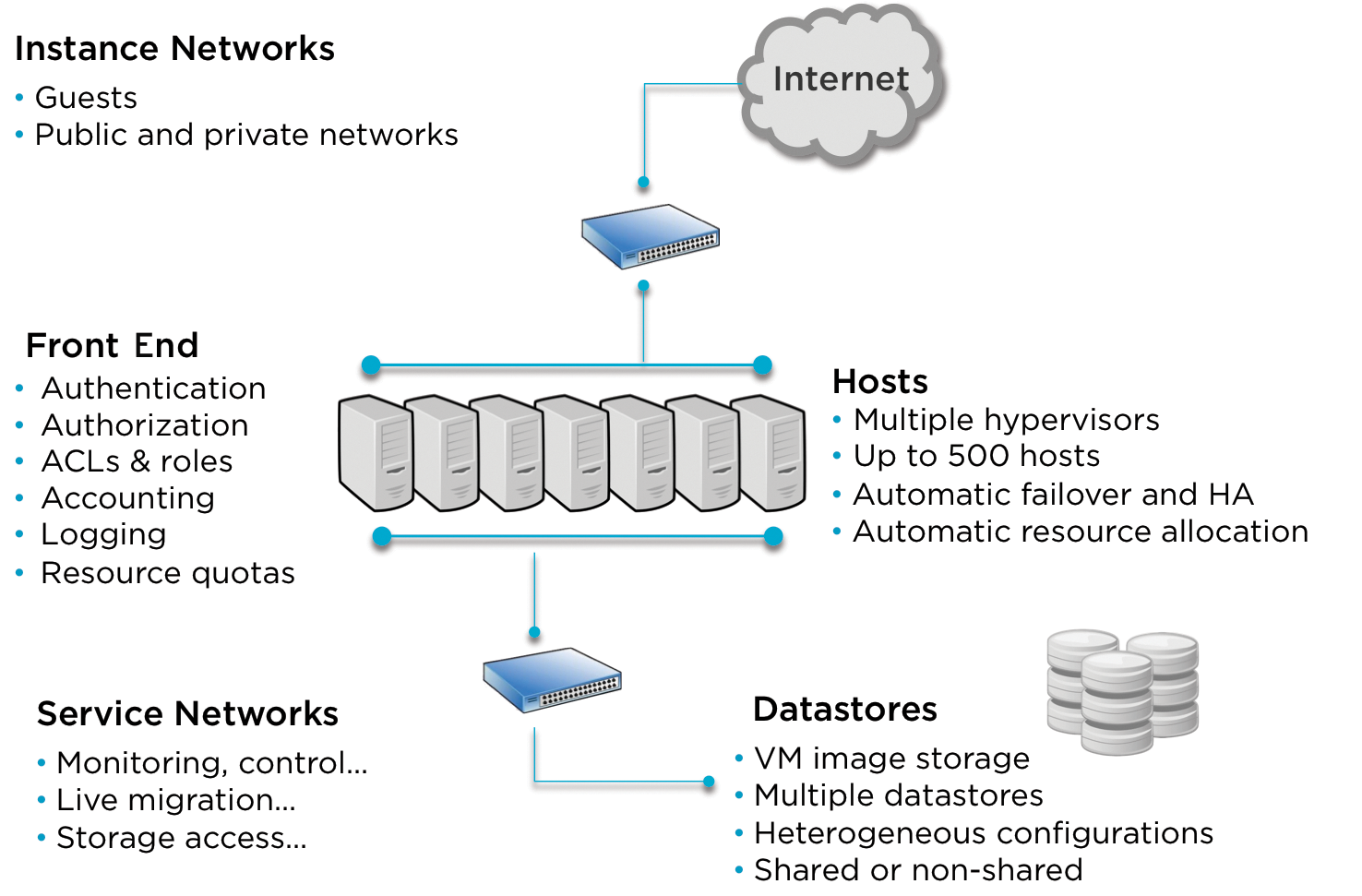

In OpenNebula, these subsystems (Figure 1) are linked by a central daemon (oned). In combination with a user and role concept, the components are made available through a command-line interface and the web interface. This approach makes host and VM operations independent of the subsystem and allows for transparent control of Xen, KVM, and VMware. Mixed operations with these hypervisors are also possible, because OpenNebula hides the underlying components behind a uniform interface. This transparent connection of different components shows the strength of OpenNebula: its high level of integration.

Structure

An important feature of OpenNebula is the focus on data center virtualization with an existing infrastructure. The most important requirement is to support a variety of infrastructure components and their dynamic use.

This approach is easily seen in the datastores. Their basic idea is simple: A test system can be copied at any time from the central image repository to a hypervisor, whereas a DB server must be recovered with its last run-time state. Because several differently configured datastores can be defined within one OpenNebula installation, you can adapt to these different life cycles. Thus, a persistent image can be located on an NFS volume while a volatile image is copied to the hypervisor at start time.
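The two life cycles can be illustrated with a small Python sketch. This is a toy model, not OpenNebula code: the `Image` class, the `deploy` function, and the path strings are hypothetical names chosen only to show the decision between shared and copied storage.

```python
from dataclasses import dataclass


@dataclass
class Image:
    name: str
    persistent: bool  # True: state must survive; False: disposable copy


def deploy(image: Image, host: str) -> str:
    """Return where the VM disk would live (illustrative paths only)."""
    if image.persistent:
        # Persistent images stay on shared storage so the last state survives.
        return f"shared:/datastore/{image.name}"
    # Volatile images are copied from the repository to the hypervisor at start.
    return f"{host}:/local/{image.name}.copy"


db = Image("db-server", persistent=True)
test = Image("test-system", persistent=False)
print(deploy(db, "kvm01"))
print(deploy(test, "kvm01"))
```

In a real installation, this choice is made through the datastore configuration rather than in code.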

The configuration and monitoring stacks are completely separate in OpenNebula. A clear-cut workflow provides computing resources and then monitors their availability. Failure of the OpenNebula core has no effect on the run-time status of the instances, because commands are issued only when necessary.

Monitoring itself is handled by local commands depending on the hypervisor. Thus, the core regularly polls all active hypervisors and checks whether the configured systems are still active. If this is not the case, they are restarted.

By monitoring hypervisor resources like memory and CPU, a wide range of systems can be rapidly redistributed and restarted in case of failure. Typically, the affected systems are distributed so quickly in the event of a hypervisor failure that Nagios or Icinga do not even alert you to this within the standard interval. Of course, you do need to notice the hypervisor failure.
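The redistribution idea can be sketched in a few lines of Python. This is a simplified simulation under stated assumptions, not the OpenNebula scheduler: the `Host` class, the `reschedule` function, and the "most free memory wins" rule are all hypothetical and only show how monitored memory values could drive placement after a failure.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    name: str
    free_mem_mb: int
    alive: bool = True
    vms: list = field(default_factory=list)


def reschedule(hosts, vm_mem_mb):
    """Move VMs off dead hosts onto the live host with the most free memory."""
    moved = {}
    for dead in [h for h in hosts if not h.alive]:
        for vm in list(dead.vms):
            candidates = [h for h in hosts
                          if h.alive and h.free_mem_mb >= vm_mem_mb[vm]]
            if not candidates:
                continue  # no capacity: the VM stays down for now
            target = max(candidates, key=lambda h: h.free_mem_mb)
            dead.vms.remove(vm)
            target.vms.append(vm)
            target.free_mem_mb -= vm_mem_mb[vm]
            moved[vm] = target.name
    return moved


hosts = [
    Host("kvm01", free_mem_mb=0, alive=False, vms=["web1", "web2"]),
    Host("kvm02", free_mem_mb=4096),
    Host("kvm03", free_mem_mb=3072),
]
vm_mem_mb = {"web1": 2048, "web2": 2048}
moved = reschedule(hosts, vm_mem_mb)
print(moved)
```

Here both VMs from the failed host end up on different surviving hosts, because the free memory of the first target drops after it receives a VM.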

Self-management and monitoring of resources are an important part of OpenNebula and – compared with other products – already very detailed and versatile in their use. A hook system additionally lets you run custom scripts in all kinds of places. Thanks to the OneFlow auto-scaling implementation, dependencies can be defined and monitored across system boundaries as of version 4.2. I will talk more about this later.

Installation

The installation of OpenNebula is highly dependent on the details of the components, such as virtualization, storage, and network providers. For all current providers, however, the design guide [3] provides detailed descriptions and instructions that help avoid classic misconfigurations. The basis is an installation composed of four components:

- Core and interfaces
- Hosts
- Image repository and storage
- Networking

The Management Core (oned), in collaboration with the appropriate APIs and the web interface (Sunstone), forms the actual control unit for the cloud installation. The virtualization hosts do not need to run any specific software, with the exception of Ruby, but access via SSH must be possible to retrieve status data later, or – if necessary – to transfer images to all participating hosts.

Different approaches use shared or non-shared filesystems for setting up image repositories. Which storage infrastructure is appropriate is probably the most important decision to make at this point, because switching later is a huge hassle.

A scenario without a shared filesystem is conceivable, but this does mean sacrificing live migration capability. If a host were to fail, you would need to deploy the image again, and the volatile data changes would be lost.

Installation of the components can be handled using the appropriate distribution packages [4] or from the sources [5] for all major platforms, and is described in great detail on the project page. After installing the necessary packages and creating an OpenNebula user, oneadmin, you then need to generate a matching SSH key. Next, you distribute the key to the host systems – all done! If everything went according to plan, the one start command should start OpenNebula, and you should be able to access the daemon without any problems using the command line.

Configuration and Management

The first step after installing the system is to add the required hypervisor to provide virtual systems. Xen, KVM, and VMware are currently supported. Depending on the selected hypervisor, you still need to extend the configuration in the central configuration file /etc/one/oned.conf to ensure correct control of the host system. In the case of KVM, communication is handled by libvirt, which serves as the interface for both management and monitoring functions.

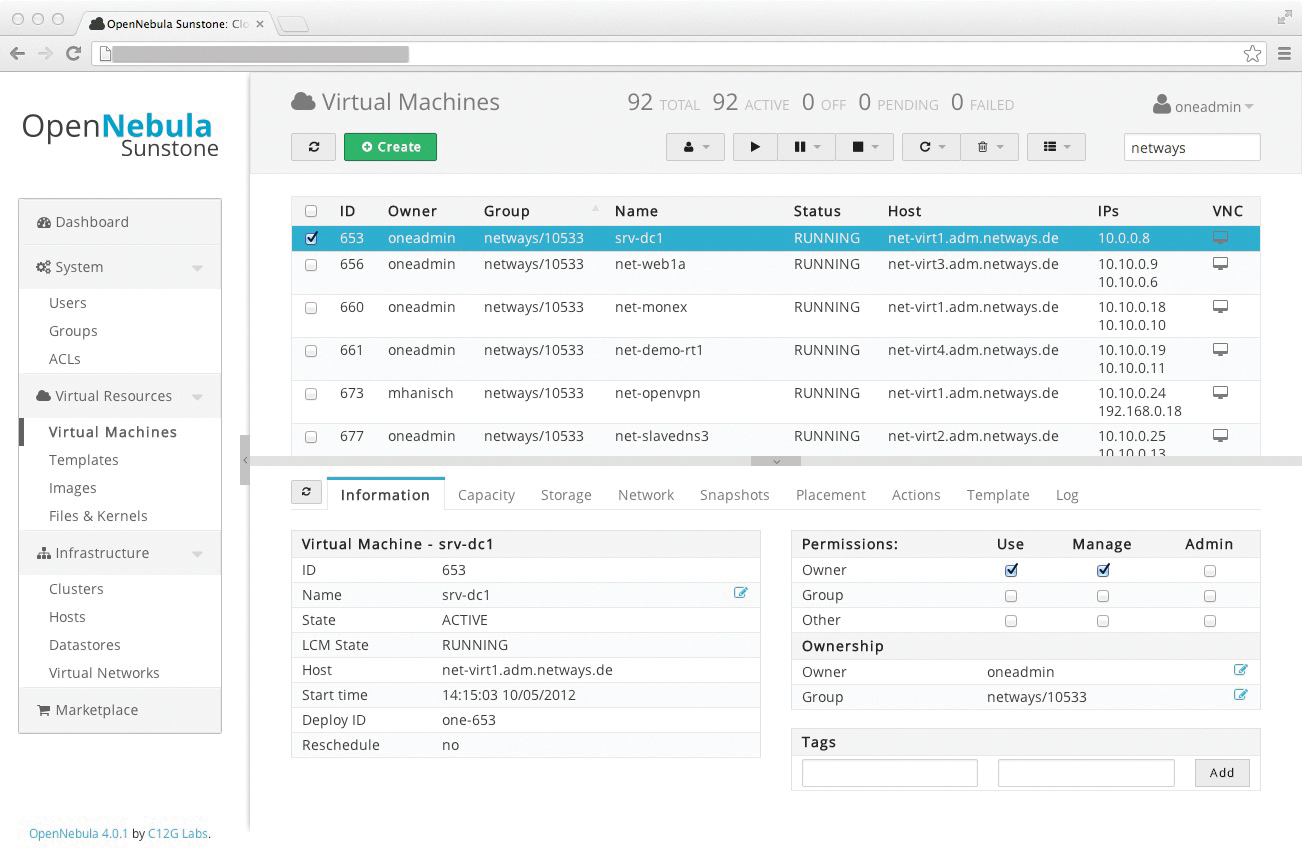

Although OpenNebula 4.2 comes with a very nice new web console (Figure 2) and a self-service portal, you can still manage all components using the command line. Most commands work with host and VM IDs to identify and control these components safely.

Integrating OpenNebula with a configuration management database is a breeze: VMs can then be generated automatically once they have been entered in the database's administration interface. Also, Puppet, Chef, and CFEngine can be used to control the cloud environment.

The available feature set provides all the functionality you will need, although you will need some training for the procedures and functions of the various subsystems, which can take some time. OpenNebula also supports hybrid operations with different host operating systems or outsourcing of resources to other private zones or to public cloud providers; all of these tasks can be managed in a single administrative console.

Cloud Shaping

As the size of a cloud environment grows, dependencies relating to users, locations, and system capabilities increasingly must be taken into account. OpenNebula offers three basic concepts: groups, virtual data centers (VDCs), and zones.

Groups let you join systems with similar capabilities to form logical units. Thus, you can define conditions for the operation of certain systems and group systems that access the same sub-infrastructure, such as the datastore, VLANs, and hypervisors. As a simple example, a KVM system with GlusterFS storage can, of course, only be run on servers with a KVM hypervisor and GlusterFS access. Groups ensure that exactly these conditions are met.
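The KVM and GlusterFS example boils down to a subset check, which the following Python sketch makes explicit. The host names, capability labels, and the `eligible_hosts` function are hypothetical and do not reflect OpenNebula's internal data model.

```python
def eligible_hosts(hosts, requirements):
    """Return names of hosts whose capabilities include every requirement."""
    return [name for name, caps in hosts.items() if requirements <= caps]


hosts = {
    "node1": {"kvm", "glusterfs"},
    "node2": {"kvm", "nfs"},
    "node3": {"xen", "glusterfs"},
}
vm_requirements = {"kvm", "glusterfs"}
print(eligible_hosts(hosts, vm_requirements))
```

Only the host that offers both the hypervisor and the storage back end qualifies; grouping such hosts in advance saves the scheduler from ever considering the others.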

If you need to provide a collection of resources to a number of users or a customer, without relying on groups, then the use of VDCs is the solution. Arbitrary resources can be grouped to form virtual data centers, with access authorized via ACLs. These cross-zone arrays not only let you isolate noisy neighbors and support partial allocation of resources, they also are a very good basis for individual billing of resources. Additionally, the subdivision of all resources into individual private clouds can easily be reverted using VDCs.

As a third concept for grouping large installations, OpenNebula provides zones. Zones (oZones) allow central monitoring and configuration of individual OpenNebula installations. This setup allows complete isolation of individual areas, even across different versions, and simultaneous central control of the environment.

This separation may take the application profile into consideration but also the location or customer. Even if you group together a large number of OpenNebula installations, independent control of the individual systems is possible. Thus, maximum freedom is guaranteed in the individual environment while allowing central aggregation and monitoring.

New in Version 4.2

Version 4.0 already introduced many new features to OpenNebula. Especially in the area of the virtualization layer, OpenNebula is capable of holding its own against commercial solutions thanks to features such as real-time snapshots and capacity resizing. The web interface revamp that I already mentioned is certainly the most obvious change; it now combines the previously separate views for admin and self service in a single interface.

Particularly noteworthy are the two new components: OpenNebula Gate and OpenNebula Flow. Gate allows users to share information between VMs and OpenNebula using a security token. A URL created during template generation can thus transfer application metrics to OpenNebula and visualize them using Sunstone. An example would be periodically transferring the active connections of a virtualized load balancer to OpenNebula.
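As a rough sketch of the load balancer example, the following Python code builds (but does not send) such a request from inside a VM. Several details are assumptions to verify against the OneGate documentation for your version: the `X-ONEGATE-TOKEN` header name, the `KEY=VALUE` body format, and the PUT method. The URL and token shown are placeholders, and `build_gate_request` is a hypothetical helper name.

```python
import urllib.request


def build_gate_request(url: str, token: str, metrics: dict) -> urllib.request.Request:
    """Build a PUT request carrying application metrics as KEY=VALUE lines."""
    body = "\n".join(f"{key}={value}" for key, value in metrics.items())
    return urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        method="PUT",
        # Assumed header name; the token authenticates this VM to the Gate.
        headers={"X-ONEGATE-TOKEN": token},
    )


# Placeholder values; a real VM would receive both from its template context.
req = build_gate_request(
    "http://gate.example.com:5030/vm/42",
    "secret-token",
    {"CONNECTION": 2150},
)
print(req.get_method(), req.full_url, req.data)
# urllib.request.urlopen(req) would actually send the metric.
```

Run periodically, for example from cron, a script like this would keep the CONNECTION value current for later evaluation.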

It makes sense to leave the subsequent processing of information to the new Flow component. Flow, which was previously available as the AppFlow extension, has been an integral part of OpenNebula since version 4.2 and was greatly expanded when it was integrated. With the help of Flow, you can apply both static and dynamic rules based on OpenNebula Gate values.

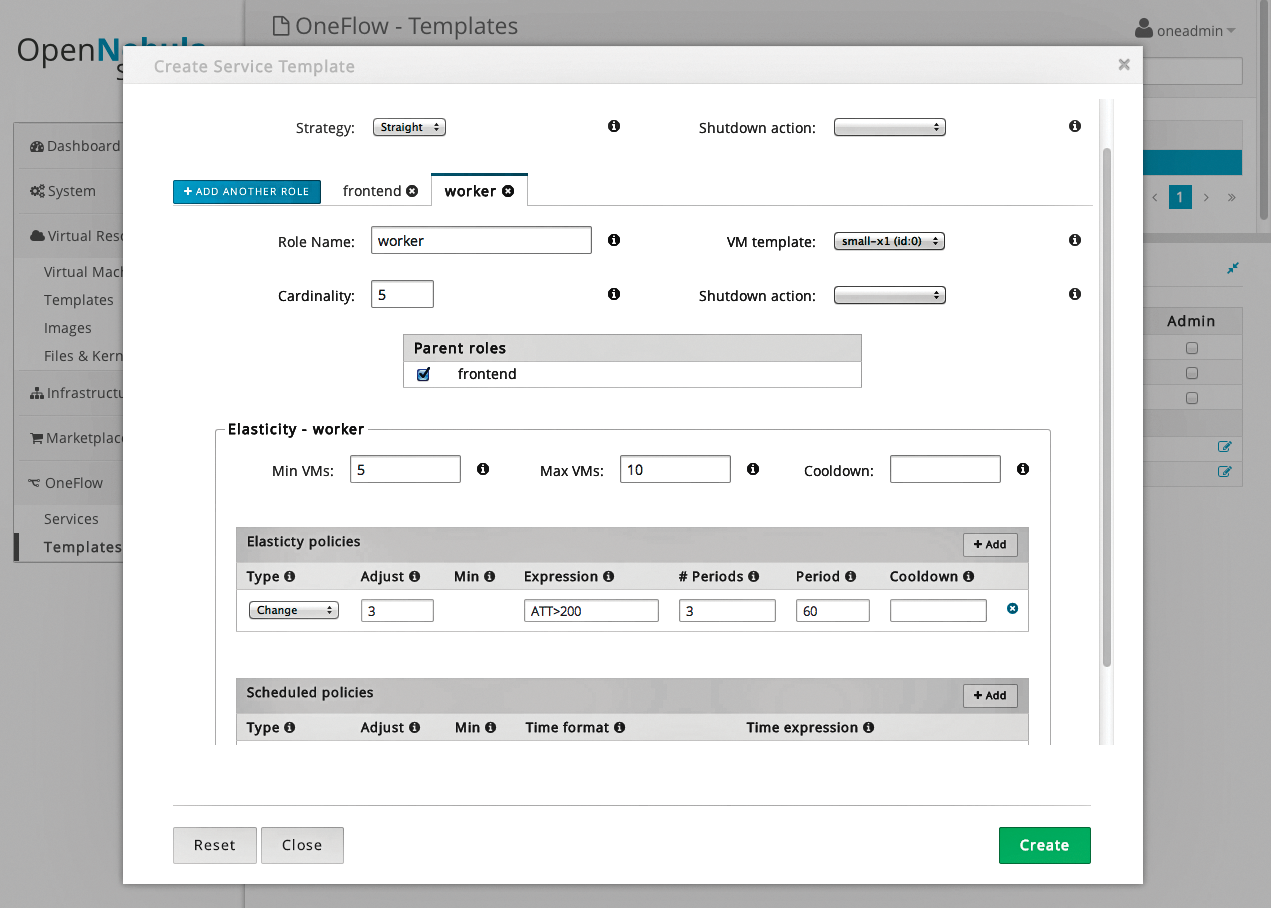

OpenNebula separates actions into Scheduled Policies and Elasticity Policies (Figure 3). Scheduled Policies allow, as the name suggests, scheduled changes to resource pools. Thus, application servers will shut down at night or on the weekend when their performance is not needed, based on static rules.
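The effect of such a static rule can be sketched in Python. This is not the OneFlow policy syntax; the `scheduled_cardinality` function, the pool sizes, and the 22:00 to 06:00 night window are hypothetical values chosen for illustration.

```python
from datetime import datetime


def scheduled_cardinality(now: datetime, day_size: int = 5, off_size: int = 1) -> int:
    """Static rule: shrink the pool at night (22:00-06:00) and on weekends."""
    weekend = now.weekday() >= 5  # Monday is 0, so Saturday=5 and Sunday=6
    night = now.hour >= 22 or now.hour < 6
    return off_size if (weekend or night) else day_size


print(scheduled_cardinality(datetime(2013, 9, 4, 14, 0)))  # a Wednesday afternoon
print(scheduled_cardinality(datetime(2013, 9, 7, 14, 0)))  # a Saturday afternoon
```

The rule depends only on the clock, never on load, which is what distinguishes it from the dynamic policies.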

Elasticity Policies can make changes to pools based on rules that take Gate values into consideration. After you define the minimum and maximum size of a virtual machine pool, virtual machines are started or shut down on the basis of these rules. Using expressions and period rules, you can then modify the available resources at variable intervals.

Listing 1 shows the use of a dynamic rule based on connections. The number of active connections passed in by OpenNebula Gate is checked and, in the first sub-rule, results in two new systems being started after the threshold has been exceeded three times in a row. The second part of the rule reduces the pool by a certain percentage after the number of connections has dropped below the threshold.

Listing 1: Changing Resources

    {
      "name": "ONE-SCALE",
      "deployment": "none",
      "roles": [
        {
          "name": "appserver",
          "cardinality": 2,
          "vm_template": 0,

          "min_vms" : 5,
          "max_vms" : 10,

          "elasticity_policies" : [
            {
              // +2 VMs when the exp. is true for 3 times in a row,
              // separated by 10 seconds
              "expression" : "CONNECTION > 2000",

              "type" : "CHANGE",
              "adjust" : 2,

              "period_number" : 3,
              "period" : 10
            },
            {
              // -10 percent VMs when the exp. is true.
              // If 10 percent is less than 2, -2 VMs.
              "expression" : "CONNECTION < 2000",

              "type" : "PERCENTAGE_CHANGE",
              "adjust" : -10,
              "min_adjust_step" : 2
            }
          ]
        }
      ]
    }
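To make the arithmetic behind the two sub-rules concrete, here is a minimal Python simulation of how policies like those in Listing 1 could be evaluated. It is a sketch under stated assumptions, not OneFlow's implementation: `apply_policy` is a hypothetical function, the expressions are written as Python lambdas instead of being parsed from strings, fractional percentage results are truncated toward zero, and the result is clamped to `min_vms` and `max_vms`.

```python
import math


def apply_policy(current, policy, samples, min_vms, max_vms):
    """Return the new pool size after evaluating one policy.

    samples: recent CONNECTION values, one per period, newest last.
    """
    needed = policy.get("period_number", 1)
    recent = samples[-needed:]
    if len(recent) < needed or not all(policy["test"](s) for s in recent):
        return current  # expression was not true often enough in a row

    if policy["type"] == "CHANGE":
        delta = policy["adjust"]
    elif policy["type"] == "PERCENTAGE_CHANGE":
        delta = current * policy["adjust"] / 100
        step = policy.get("min_adjust_step", 0)
        if abs(delta) < step:
            # Too small a change: fall back to the minimum step, same sign.
            delta = math.copysign(step, delta)
        delta = int(delta)  # assumption: truncate fractional VMs
    else:
        raise ValueError(f"unknown policy type: {policy['type']}")

    return max(min_vms, min(max_vms, current + delta))


scale_up = {"test": lambda c: c > 2000, "type": "CHANGE",
            "adjust": 2, "period_number": 3}
scale_down = {"test": lambda c: c < 2000, "type": "PERCENTAGE_CHANGE",
              "adjust": -10, "min_adjust_step": 2}

print(apply_policy(5, scale_up, [2100, 2300, 2500], 5, 10))
print(apply_policy(8, scale_down, [1500], 5, 10))
```

In the second call, 10 percent of eight VMs is less than one VM, so the minimum step of two applies, mirroring the comment in the listing.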

By combining dynamic application information with the capabilities of the OpenNebula Core, even complex application scenarios become manageable. The syntax is largely self-explanatory, and rules can be adapted quickly to a variety of scenarios. Thus, OpenNebula meets critical application requirements stipulated by providers and supports demand-driven resource allocation. Because OpenNebula also has an interface to AWS, systems can also be outsourced to AWS in a hybrid cloud model.

Why OpenNebula?

OpenNebula is a powerful management and provisioning platform for the virtualized data center. Familiarizing yourself with the stack and the subsystems makes the cloud more tangible, and components that have been in use for years are treated to a whole new set of clothes under OpenNebula.

However, cloud computing does not necessarily mean outsourcing all services to third-party clouds; instead, it offers rich opportunities for your own operations. OpenNebula is gaining in popularity as an open source alternative to VMware [6]. The use of heterogeneous components and the high level of integration in particular characterize OpenNebula, helping it offer a comprehensive architectural approach, even for established IT infrastructures.