OpenStack: Shooting star in the cloud

Assembly Kit

The IT news is beginning to sound like a scene from the movie "Being John Malkovich" in which the hero undertakes a journey into himself and notices that every spoken sentence is replaced by "John Malkovich." In IT, every sentence is cloud, cloud, cloud.

And increasingly, "cloud" means the OpenStack cloud. The open cloud environment supported by the OpenStack project has become everybody's darling. But is the hype justified? This article takes a close look at one of the most interesting projects in the FOSS world.

Reality Check: OpenStack

OpenStack is not a monolithic program. In fact, the name OpenStack now designates a very comprehensive collection of components that join forces to take care of automation. The various OpenStack components each handle a specific part of the overall system. The OpenStack world defines the following automation tasks:

- User management

- Image management

- Network management

- VM management

- Block storage management for VMs

- Cloud storage

- The front end to the user

Each of these tasks is assigned to an OpenStack component. Incidentally, components in OpenStack always have two names: an official project name and a codename. This article uses the much more common codenames to designate the various OpenStack components.

User Management: Keystone

For customers to be able to configure their services correctly within a cloud computing environment, the system must offer them a user management system. In OpenStack, Keystone [1] ensures that users can log in with their credentials.

Keystone doesn't just implement a simple system based on users and passwords; it also provides a granular schema for assigning permissions. At the top are the tenants that typically represent the corporate level in the cloud environment: the cloud provider's customers. Then there are the users, each of whom is a member of a tenant; different users may well have different rights. For instance, the company's boss might be authorized to start or stop VMs or to assign new admins, while the lowly sys admin can only start or stop virtual machines. Keystone lets you build this kind of schema – and for multiple tenants.
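The hierarchy of tenants, users, and rights can be pictured with a small toy model. This is only a sketch in plain Python: The tenant names, role names, action strings, and the `is_allowed` helper are all hypothetical and do not reflect Keystone's actual API or policy format.

```python
# Toy model of tenants, users, and rights (not Keystone's real API).

# Hypothetical roles and the actions each one permits.
ROLE_ACTIONS = {
    "boss": {"vm:start", "vm:stop", "admin:assign"},
    "sysadmin": {"vm:start", "vm:stop"},
}

# Each tenant maps its own users to a role; all names are invented.
TENANTS = {
    "acme": {"alice": "boss", "bob": "sysadmin"},
    "globex": {"carol": "boss"},
}


def is_allowed(tenant: str, user: str, action: str) -> bool:
    """Return True if the user holds a role in this tenant that permits the action."""
    role = TENANTS.get(tenant, {}).get(user)
    return role is not None and action in ROLE_ACTIONS.get(role, set())


if __name__ == "__main__":
    print(is_allowed("acme", "alice", "admin:assign"))  # the boss may assign admins
    print(is_allowed("acme", "bob", "admin:assign"))    # the sys admin may not
    print(is_allowed("globex", "alice", "vm:start"))    # alice is unknown in this tenant
```

Because users are looked up per tenant, a user with far-reaching rights in one tenant has no rights at all in another.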

Keystone is also responsible for internal communication with other OpenStack services. For one OpenStack service to be able to talk to another, it must first authenticate with Keystone. Keystone middleware exists for this purpose. The middleware is a kind of Python plugin that any internal or external component can use to safely handle Keystone authentication. Speaking of Python, like all other OpenStack services, Keystone is 100 percent written in Python.

For the cloud to succeed, much communication takes place between the individual OpenStack components. But, because clouds need to scale, it would be very impractical to use static IPs for this communication. Thus, Keystone maintains a kind of phone book within the OpenStack cloud: The endpoint directory lists OpenStack services and where to reach them. If a service wants to talk to another service, it simply asks Keystone and receives the desired information in almost no time. If the address of a service changes later, the admin only changes the address in Keystone and does not have to change all configuration files for each service on each host.
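The phone book idea can be sketched in a few lines of Python. The `EndpointDirectory` class, the service names, and the addresses below are assumptions made up for illustration; Keystone's real catalog is queried over its HTTP API and carries more detail.

```python
# Toy endpoint directory: services ask one registry instead of hard-coding addresses.

class EndpointDirectory:
    """Minimal in-memory stand-in for a service catalog (hypothetical)."""

    def __init__(self):
        self._endpoints = {}

    def register(self, service: str, url: str) -> None:
        # Registering an existing service again simply replaces its address.
        self._endpoints[service] = url

    def lookup(self, service: str) -> str:
        try:
            return self._endpoints[service]
        except KeyError:
            raise LookupError(f"no endpoint registered for {service!r}") from None


directory = EndpointDirectory()
directory.register("image", "http://192.0.2.10:9292")    # placeholder address
directory.register("compute", "http://192.0.2.11:8774")  # placeholder address

if __name__ == "__main__":
    print(directory.lookup("image"))
    # The image service moves: one change here, no edits on the other hosts.
    directory.register("image", "http://192.0.2.20:9292")
    print(directory.lookup("image"))
```

The point of the design is the single place of change: Callers only ever know the service name, never the address.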

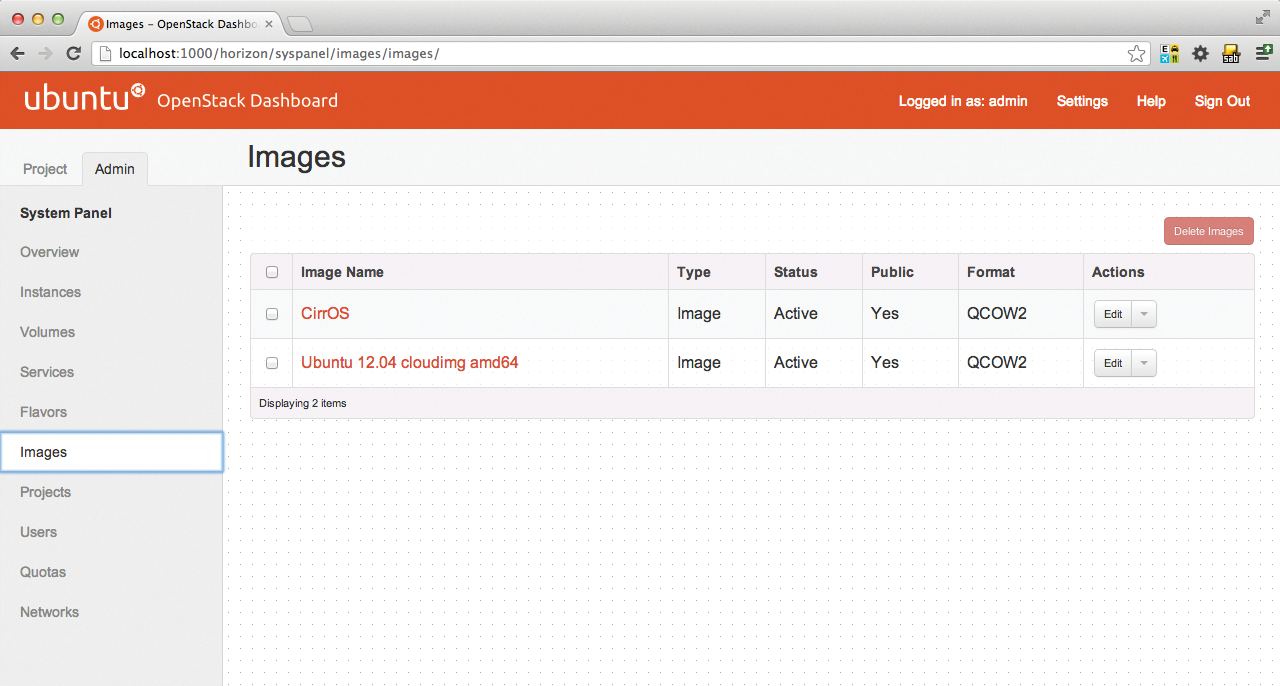

Image Management: Glance

Cloud offerings designed for use in the web interface must meet one key requirement: Barriers must be low. The customer must be able to start a new VM at the press of a button; otherwise, the entire environment is of no use to the customer.

It is hardly reasonable to expect the customer to manually handle the installation of an operating system on the VM – often enough, customers will not have the knowledge necessary to install the virtual machine. The admin is naturally not interested in taking on the task manually.

Glance [2] provides a solution to the problem: The admin uses Glance in a cloud environment to create prebuilt images, which are then selected by the user as required for new VMs. The admin only needs to do the work once when setting up the image, and the subject of operating systems does not worry the customer.

Under the hood, Glance consists of two services: an API and a tool that takes care of image management (Figure 1). This division is often found in a slightly modified form throughout OpenStack. Keystone, for example, is basically nothing more than an HTTP-based API that is addressed using the ReSTful principle.

Glance is one of the more inconspicuous OpenStack components, and admins very rarely have to touch it after the initial installation. It supports multiple image formats, including, of course, KVM's Qcow2 format and VMware's VMDK images, but also Microsoft's VHD format from Hyper-V. Admins can easily convert existing systems into Glance-compatible images (raw images).

Glance has its field day when users need to start a new VM: The service copies the image for this VM to the hypervisor host on which the VM runs locally. Of course, for administrators, this means that Glance images should not be too large; otherwise, it takes an eternity until the image reaches the hypervisor. Incidentally, many distributions offer ready-made Glance images: For example, Ubuntu comes with UEC images [3].
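A rough back-of-the-envelope calculation shows why image size matters. The sketch below assumes an idealized link with no protocol overhead and no competing traffic, and the image sizes and link speeds are made-up examples; real transfers will be slower.

```python
# Idealized transfer time for copying an image to a hypervisor host.

def transfer_seconds(image_gb: float, link_gbit_per_s: float) -> float:
    """Seconds needed to move image_gb gigabytes over a link of the given speed.

    One gigabyte is eight gigabits, so time = size * 8 / bandwidth.
    """
    return image_gb * 8 / link_gbit_per_s


if __name__ == "__main__":
    for size_gb in (2, 40):
        print(f"{size_gb} GB over 1 Gbit/s: {transfer_seconds(size_gb, 1):.0f} s")
```

Under these assumptions, a 2GB image needs about 16 seconds on a 1Gbit/s link, whereas a 40GB image needs more than five minutes before the VM can even begin to boot.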

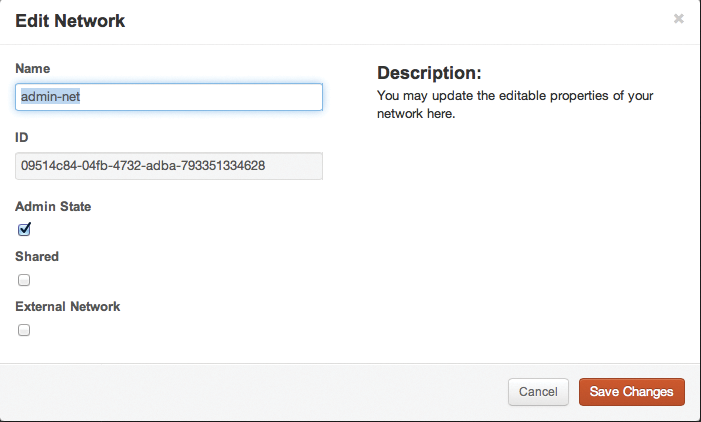

Network Management: Neutron

If you plumb the depths of OpenStack, sooner or later you will end up on Neutron's doorstep (Figure 2). Neutron does not enjoy the best reputation and is regarded as overly complicated; however, this has much less to do with the component itself than with its extensive tasks. It makes sure that the network works as it should in an OpenStack environment.

The issue of network virtualization is often underestimated. Typical network topologies are fairly static, and usually have a star-shaped structure. Certain customers are assigned specific ports, and customers are separated from each other by VLANs. In cloud environments, this system no longer works. On one hand, it is not easy to predict which compute nodes a customer's VM will be launched on; on the other hand, this kind of solution does not scale well.

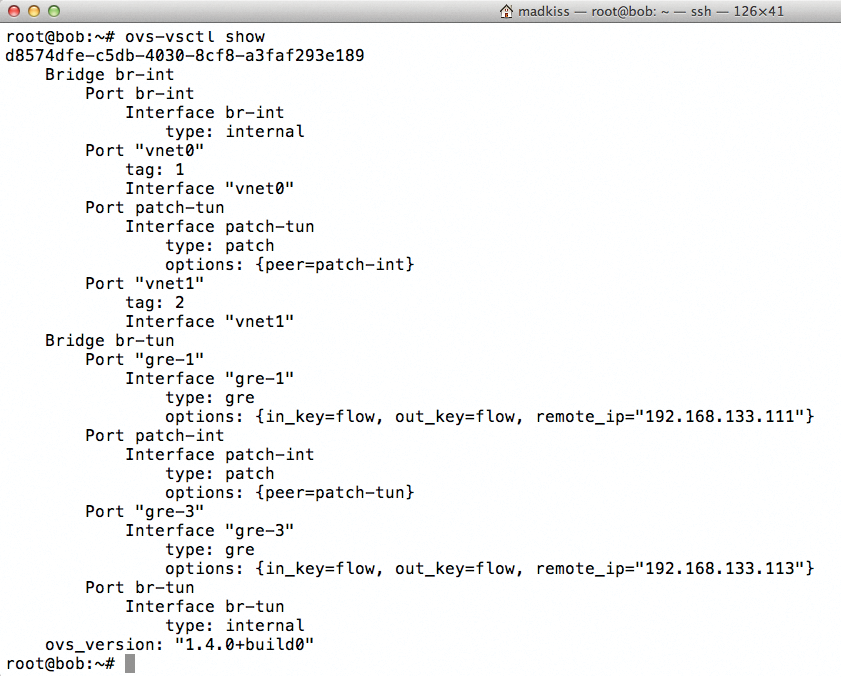

The answer to this problem is Software Defined Networking (SDN), which basically has one simple goal: Switches are just metal, VLANs and similar tools are no longer used, and everything that has to do with the network is controlled by software within the environment.

The best-known SDN solution is OpenFlow [4] (see the article on Floodlight elsewhere in this issue) with its corresponding front end, Open vSwitch [5]. Neutron is the matching part in OpenStack, that is, the part that directly influences the configuration of Open vSwitch (or another SDN stack) from within OpenStack (Figure 3).

In practical terms, you can use Neutron to virtualize a complete network without touching the configuration of the individual switches; the various plugins also make it possible to modify switch configurations directly from within OpenStack.

Just like OpenStack, Neutron [6] is also modular: The API is extended by a plugin for a specific SDN technology (e.g., the previously mentioned Open vSwitch). Each plugin has a corresponding agent on the computing node side to translate the plugin's SDN commands.

The generic agents for DHCP and L3 both perform specific tasks. The DHCP agent ensures that VMs are assigned IP addresses via DHCP when a tenant starts them; the L3 agent establishes a connection to the Internet for the active VMs. Taken to an extreme, it is possible to use Neutron to allow every customer to build their own network topology within their cloud.

Customer networks can also use overlapping IP ranges; there are virtually no limits to what you could do. The disadvantage of this enormous feature richness, however, is that it takes some knowledge of topics such as Software Defined Network operations to understand what Neutron actually does – and to troubleshoot if something fails to work as it should.
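Why overlapping IP ranges are not a contradiction becomes clearer with a toy example. The sketch below uses Python's standard `ipaddress` module; the tenant names and the `resolve` helper are hypothetical, and real isolation in Neutron happens in the network layer, not in a dictionary.

```python
# Two tenants use the same private range; addresses only have meaning per tenant.
import ipaddress

# Hypothetical tenant networks that deliberately use an identical range.
tenant_networks = {
    "tenant-a": ipaddress.ip_network("10.0.0.0/24"),
    "tenant-b": ipaddress.ip_network("10.0.0.0/24"),
}


def resolve(tenant: str, address: str):
    """Return a (tenant, address) pair, checking the address against that tenant's network only."""
    network = tenant_networks[tenant]
    ip = ipaddress.ip_address(address)
    if ip not in network:
        raise ValueError(f"{address} is not part of the network of {tenant}")
    return (tenant, ip)


if __name__ == "__main__":
    net_a = tenant_networks["tenant-a"]
    net_b = tenant_networks["tenant-b"]
    print(net_a.overlaps(net_b))
    print(resolve("tenant-a", "10.0.0.5") == resolve("tenant-b", "10.0.0.5"))
```

The two ranges overlap completely, yet the same address identifies two different endpoints because it is always interpreted in the context of one tenant's network.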

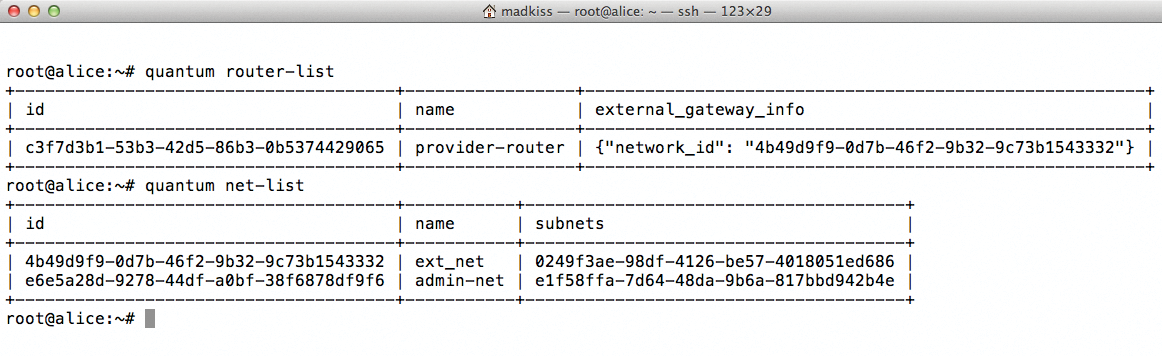

Incidentally, if you have worked with OpenStack in the past, you might be familiar with Neutron under its old name, Quantum (Figure 4). (A dispute over naming rights in the United States led to the new name Neutron.)

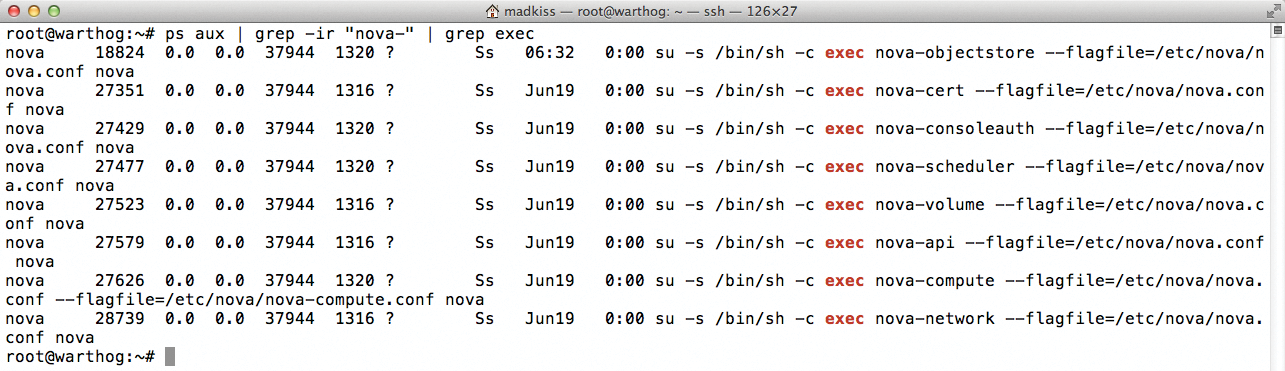

VM Management: Nova

The components I have looked at thus far lay the important groundwork for running virtual machines in the cloud. Nova (Figure 5) [7] is now added on top as an executive in the OpenStack cloud. Nova is responsible for starting and stopping virtual machines, as well as for managing the available hypervisor nodes.

When a user tells the OpenStack cloud to start a virtual machine, Nova does the majority of the work. It checks with Keystone to see whether the user is allowed to start a VM, tells Glance to make a copy of the image on the hypervisor, and instructs Neutron to hand out an IP for the new VM. Once all of that has happened, Nova starts the VM on one of the hypervisor nodes and later also helps shut down or delete the virtual machine – or move it to a different host.
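The order of these steps can be traced with a small simulation. Everything in it is a hypothetical stand-in: The `boot_vm` function, its parameters, and the placeholder IP address are invented for illustration, and no real OpenStack service is contacted.

```python
# Toy walk-through of the boot sequence; each step only records what would happen.

def boot_vm(user: str, image: str, authorized_users: set, host: str) -> list:
    """Return the ordered list of steps taken to boot one VM on the given host."""
    steps = []

    # 1. Ask the identity service whether the user may start a VM at all.
    if user not in authorized_users:
        raise PermissionError(f"{user} may not start VMs")
    steps.append(f"keystone: {user} authorized")

    # 2. Have the image service copy the image to the hypervisor.
    steps.append(f"glance: copied {image} to {host}")

    # 3. Ask the network service for an address (placeholder value).
    ip = "10.0.0.5"
    steps.append(f"neutron: assigned {ip}")

    # 4. Only now is the VM actually started.
    steps.append(f"nova: started VM on {host}")
    return steps


if __name__ == "__main__":
    for step in boot_vm("alice", "ubuntu-image", {"alice"}, "node1"):
        print(step)
```

If the authorization check fails, the function raises an error before any image is copied or any address is handed out, which mirrors why the Keystone check comes first.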

Nova comprises several parts. In addition to an API by the name of Nova API, Nova provides a compute component, which does the work on the hypervisor nodes. Other components meet specific requirements: nova-scheduler, for example, references the configuration and information about the existing hypervisor nodes to discover the hypervisor on which to start the new VM.
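How a scheduler might choose a hypervisor can be sketched as a filter step followed by a ranking step. This is a deliberately simplified assumption, not nova-scheduler's actual algorithm: The `pick_host` function, the node names, and the single criterion of free RAM are all made up for illustration.

```python
# Simplified host selection: drop hosts that are too small, then take the emptiest one.

def pick_host(free_ram_mb: dict, ram_needed_mb: int) -> str:
    """Pick the host with the most free RAM among those that can fit the VM.

    free_ram_mb maps a host name to its free RAM in megabytes.
    """
    # Filter: keep only hosts with enough free memory for the new VM.
    candidates = {
        name: free for name, free in free_ram_mb.items() if free >= ram_needed_mb
    }
    if not candidates:
        raise RuntimeError("no hypervisor has enough free RAM")

    # Rank: prefer the host with the most headroom.
    return max(candidates, key=candidates.get)


if __name__ == "__main__":
    hosts = {"node1": 2048, "node2": 8192, "node3": 4096}
    print(pick_host(hosts, 4096))
```

With the example values, node1 is filtered out because it is too small, and node2 wins over node3 because it has more free memory.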

OpenStack has no intention of reinventing the wheel; Nova makes use of existing technologies when they are available. It is deployed, along with libvirt and KVM, on Linux servers, where it relies on the functions of libvirt and thus on a proven technology, instead of implementing its own methods for starting and stopping VMs. The same applies to other hypervisor implementations, of which Nova now supports several. In addition to KVM, the target platforms include Xen, Microsoft Hyper-V, and VMware.

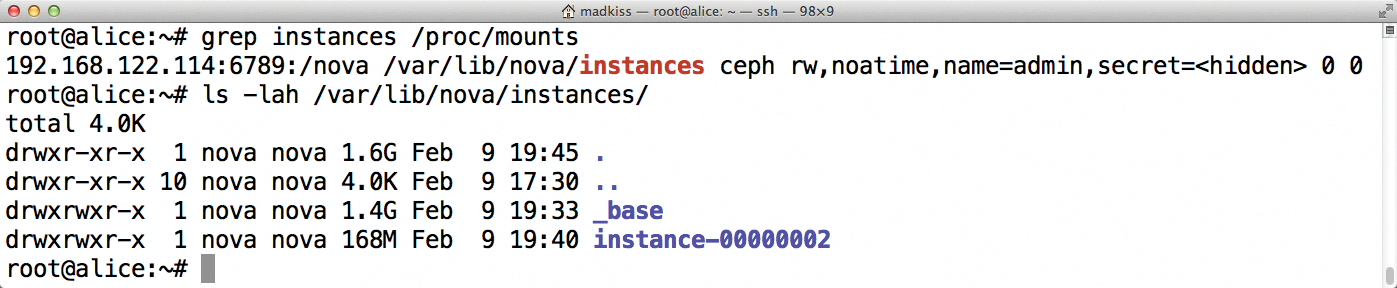

Block Storage for VMs: Cinder

Finally, there is Cinder [8]; although its function is not as obvious at first glance, you will understand the purpose if you consider the problem. OpenStack generally assumes that virtual machines are not designed to run continuously. This radical idea results from the approach mainly found in the US that a cloud environment should be able to quickly launch many VMs from the same image – this principle basically dismisses the idea that data created on a virtual machine will be permanently stored. In line with this, VMs initially exist only as local copies on the filesystems of their respective hypervisor nodes. If the virtual machine crashes, or the customer deletes it, the data is gone. The principle is known as ephemeral storage, but it is no secret that reality is more complicated than this.

OpenStack definitely allows users to collect data on VMs and store the data persistently beyond a restart of the virtual machine. This is where Cinder enters the game (Figure 6): Cinder equips virtual machines with persistent block storage. The Cinder component supports a variety of different storage back ends, including LVM and Ceph, but also hardware SANs, such as IBM's Storwize and HP's 3PAR storage.

Depending on the implementation, the technical details in the background can vary. Users can create storage and assign it to their virtual machines, which then access the storage much as they would access an ordinary hard drive. On customer request, VMs can boot from block devices, so that the entire VM is stored permanently and can be started, for example, on different nodes.
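The difference between ephemeral and persistent storage can be illustrated with a toy model. The `VM` and `Volume` classes and the `delete_vm` function are hypothetical and only mimic the behavior described above; they have nothing to do with Cinder's real interfaces.

```python
# Toy model: the ephemeral disk dies with the VM, an attached volume does not.

class Volume:
    """Stand-in for a persistent block device (hypothetical)."""

    def __init__(self, name: str):
        self.name = name
        self.data = {}


class VM:
    """Stand-in for a virtual machine with a local, ephemeral disk."""

    def __init__(self, name: str):
        self.name = name
        self.ephemeral = {}
        self.volumes = []

    def attach(self, volume: Volume) -> None:
        self.volumes.append(volume)


def delete_vm(vm: VM) -> list:
    """Discard the VM's ephemeral data and return its volumes, which are only detached."""
    vm.ephemeral.clear()
    detached = list(vm.volumes)
    vm.volumes.clear()
    return detached


if __name__ == "__main__":
    vm = VM("web1")
    vol = Volume("db-data")
    vm.attach(vol)
    vm.ephemeral["/tmp/cache"] = "scratch"
    vol.data["/var/lib/db"] = "customer records"

    survivors = delete_vm(vm)
    print(vm.ephemeral)       # the local data is gone
    print(survivors[0].data)  # the volume content is still there

    # The surviving volume can be attached to a fresh VM, possibly on another node.
    replacement = VM("web2")
    replacement.attach(survivors[0])
```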

Cloud Storage: Swift

Almost all the components presented in this article deal with the topic of virtualization. However, conventional wisdom says that a cloud must also provide additional, on-demand storage, which users can access when needed using a simple interface. Services such as Dropbox or Google Drive are proof that such services enjoy a large fan base. In OpenStack, Swift [9] ensures that users have cloud storage.

Swift, which is developed by hosting provider Rackspace, gives users the ability to upload or download files to or from storage via a ReSTful protocol. Swift is interesting for corporations because it is an object store, which stores data in the form of binary objects and scales seamlessly on a horizontal axis.
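What an upload over such a ReSTful interface looks like can be sketched with Python's standard library. The request below is only constructed, never sent; the host name, account, container, and token are placeholders, and the `build_upload` helper is an assumption for illustration rather than part of any Swift client.

```python
# Build (but do not send) an HTTP PUT that would store one object in a container.
from urllib.parse import quote
from urllib.request import Request

# Placeholder storage URL; a real one would come from the identity service.
STORAGE_URL = "https://swift.example.com/v1/AUTH_demo"


def build_upload(container: str, object_name: str, payload: bytes, token: str) -> Request:
    """Return a PUT request addressed to <storage URL>/<container>/<object>."""
    url = f"{STORAGE_URL}/{quote(container)}/{quote(object_name)}"
    return Request(
        url,
        data=payload,
        method="PUT",
        headers={"X-Auth-Token": token},  # token value is a placeholder
    )


if __name__ == "__main__":
    request = build_upload("photos", "holiday photo.jpg", b"binary data", "dummy-token")
    print(request.get_method(), request.full_url)
```

Every object has its own URL, so a download is simply a GET on the same address, and the client never needs to know which storage node actually holds the data.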

If space becomes scarce, companies simply add a few storage nodes with fresh disk space to avoid running out of storage space. Incidentally, you can operate Swift without the other OpenStack components – this OpenStack service thus occupies a special position, because the other services are mutually interdependent.

The Front End: Horizon

The final component I'll describe for this tour is the front end for the actual users: The most beautiful cloud environment in the world would be worth nothing if novice users could not control it without prior knowledge. Horizon makes OpenStack usable: The Django-based web interface allows users to start and stop virtual machines as well as configure various parameters associated with the use of the cloud (Figure 7). Users turn to Horizon when they need to start a new VM, just as they do when assigning a public IP to a VM [10].

The Community

OpenStack sets great store by being a community project. Unlike Eucalyptus or OpenNebula, OpenStack is not backed by a single corporation that steers the development of the platform. OpenStack has been a community project from the outset. It grew out of a collaboration between NASA and Rackspace, which is also famous as the host behind GitHub. NASA contributed the part that dealt with virtualization; Rackspace threw in Swift as a storage solution.

Since then, much has happened: NASA is no longer involved in OpenStack development, but hundreds of other companies have joined the OpenStack movement, including the likes of Red Hat, Intel, and HP.

The project still attaches great importance to the community; for example, there is an OpenStack Foundation, some of whose board members are elected directly by project members. Each of the components described in this article has a Project Technical Lead (PTL), who is democratically elected.

OpenStack also attaches great importance to allowing anyone to contribute. If you want to, you will definitely find something meaningful to contribute to OpenStack. At the OpenStack design summits, which are held twice a year, the project celebrates its roots in the community and invites developers to listen to keynotes and pore over design concepts that will lay down the future roadmap for development.

More Information

You will find more information about the OpenStack components on the individual pages of the OpenStack website. The documentation of the project is quite impressive [11], and the OpenStack project's wiki offers a plethora of detailed information. For its bug tracking, OpenStack uses Launchpad. The Launchpad Questions feature lets you post more stubborn issues, which are often addressed directly by one of the project developers.

The official IRC channel of the OpenStack project is #openstack on Freenode. You will find experienced OpenStack users on IRC who might be able to help with specific problems.

If you want to try OpenStack, you will find complete installation instructions on the web, and you can get started almost immediately. For a test environment, you just need three virtual machines; you don't even need your own hardware.