Oracle Database 12c: Cloud computing with multitenant architecture

Pluggable Database

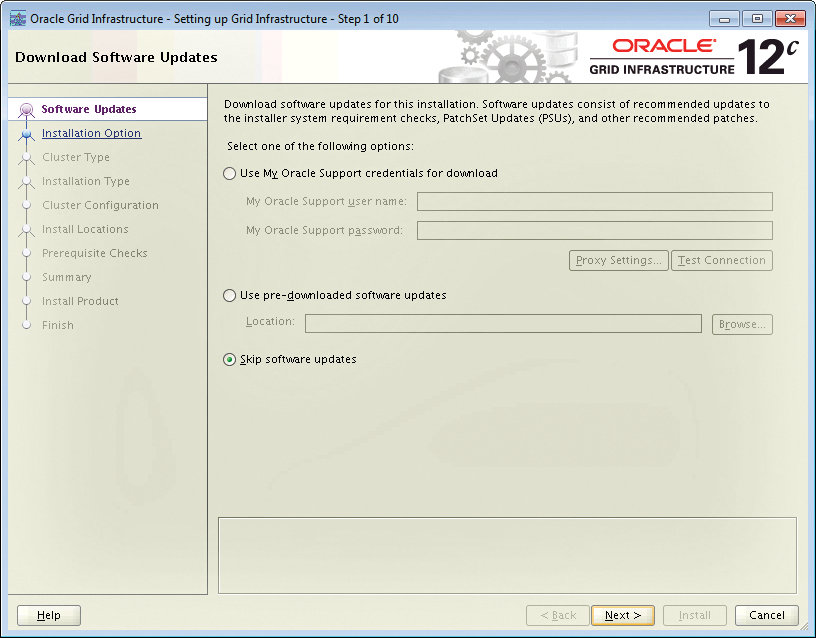

Around five years of development went into the new release of the Oracle database, and the current version, Oracle 12c [1], has been available for download from the Oracle Technology Network (OTN) [2] since June. The software was actually intended for earlier release, but more tests were required to make sure it was as bug-free as possible before it reached the markets.

The new release is available for Linux and Solaris, and versions for Windows, IBM AIX, and HPUX will follow shortly. A version is also planned for BS2000 platforms. The manufacturer intends to provide special upgrade wizards and will even support a direct step up from the legacy releases 8i and 9i to 12c. The condition for this is that the database must have the current patch level.

Beyond the known licensing models, Oracle also will offer a private cloud service with flexible prices. Whether this model will be adopted by customers, and how it will be received, is an interesting question: Many enterprise customers are rather skeptical about public cloud models and were so before the recent PRISM scares. In the future, however, along with the public cloud, there will be easy and flexible ways to consolidate the database landscape in-house with the new multitenant architecture [3]. The new multitenant architecture is thus a precondition for "Database as a Service."

New Paradigm

One of the most important new features is the multitenant capability [4] of the new architecture. This feature is designed to facilitate cloud computing at the enterprise level, whether in a public or private cloud. Additionally, it can reduce hardware resources and administrative overhead.

This architecture will primarily benefit those customers with numerous individual instances in use. Up to 253 databases can then be packaged into an instance. Thus, hardware capabilities such as memory, CPU capacity, and storage can be shared and are more efficient. This approach also facilitates the process of applying patches, upgrading, and backup and recovery (Figure 1).

The pluggable database (PDB) concept sees a fundamental change to the previous Oracle paradigm, according to which an instance (or multiple instances of a cluster) could only open precisely one database. This limitation changes with the introduction of the pluggable database; an instance now can open and operate multiple databases. Oracle is thus targeting maximum database consolidation without the need for virtualization. In our opinion, the new architecture can make host-level virtualization superfluous in large environments.

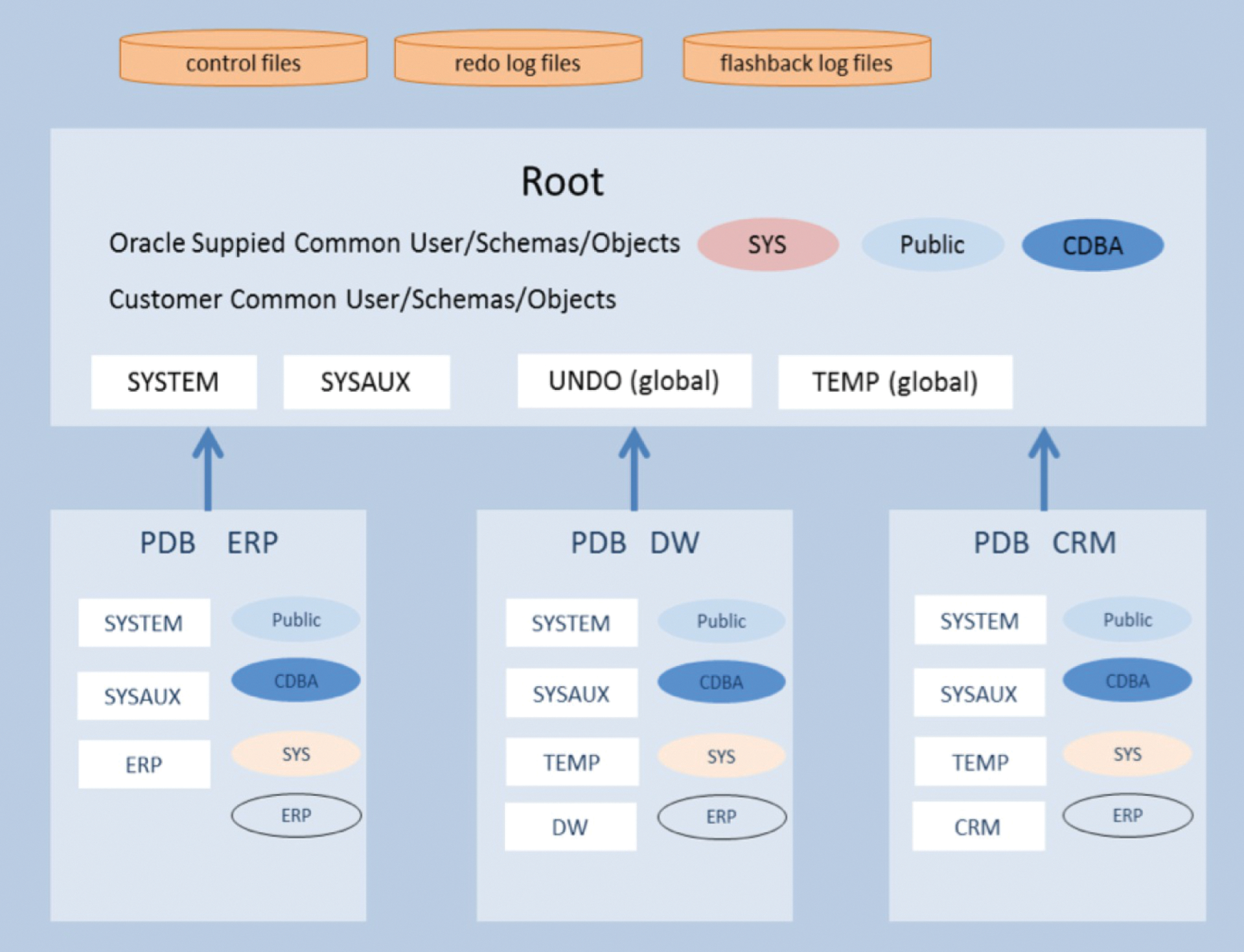

To achieve this, Oracle has extended its database by adding another layer; in the future, there will be container databases (CDBs) that house up to 253 PDBs (Figure 2). To the user, these appear to be normal databases, and they behave that way, too. Modification of the application code is not necessary except in isolated cases because the namespace of each database is individual.

The CDB is itself an instance with associated memory and processes (e.g., the LGWR log writer, the DBWn database writer, the CKPT checkpoint, etc.). The PDBs, on the other hand, have no separate background processes; processing is done by the background processes of the container database.

The data dictionary, with the metadata, stores information such as users and permissions, information about tables, indexes, views, and all other database objects – as in earlier releases. The dictionary is saved in the CDB in a multitenant architecture. Internally, PDBs only store pointers to the corresponding regions in the container database. The container database defines the data dictionary objects. The actual data of the data dictionary, such as the rows in the OBJ$ table, are stored in the pluggable databases so it can be included when copying the database.

More Efficient Through Containers

This concept allows the separation of metadata (i.e., the description of the data dictionary) from the actual data and makes it possible to transport individual PDBs as required. The PDBs can be either installed within one container or distributed between multiple containers. Another benefit is that upgrades will be faster as of version 12c: Instead of upgrading the data dictionary with catproc, admins will just need to "plug" the PDB into a version 12c+1 container database to upgrade to the next version.

As described previously, individual PDBs do not have their own memory areas or processes. This design results in better utilization of resources because significantly fewer processes compete for the available CPU cores – and thus fewer context switches are needed. Far more memory is available for the buffer cache or shared pool because the minimum 350MB of SGA applies to the operation of an instance of the CDB and not for the PDBs.

Some simple math can help clarify this concept: For example, 10 databases in version 11g need at least 10x350MB, or around 3.5GB, of memory to be able to start each instance. The buffer pool and the shared pool have very little space in which to fulfill their functions. As a result, at least 6x10 background processes are competing for the CPU. If you now use the concept of pluggable databases and allow the 10 databases to run as PDBs in a CDB, you have just six processes and need just 350MB of memory to operate the CDB. The extra 3.15GB, compared with the old architecture, can be used in the new concept for the buffer cache or shared pool.

Resource Management

Distribution of resources between different databases is handled by the Oracle Resource Manager [5], which is enabled by default. Also by default, each database has a fair share of the resources: Two databases each receive one half, three each have one third of the resources, four each a quarter, and so on. This distribution pattern can be adjusted if required, and these adjustments can be made within the PDBs. For example, guaranteed shares of the CPU or CPU load limits are configurable; however, a limitation of the I/O load is only possible with Oracle's own Exadata hardware.

The physical storage structure of the databases in storage has not changed to any great extent; thus, the control files, redo logs, flashback logs, and the UNDO tablespace are still at container level. The SYSTEM and SYSAUX tablespace in the database container provides the global data dictionary; the same tablespaces in the individual PDBs, however, only store the actual data dictionary payload. The temporary tablespace – which is comparable to the swap space at operating system level – can be created globally for all databases or locally. User tablespaces can be created at all levels for data storage.

Because the namespace for each database is individual, access across PDB boundaries is not possible except through database links – even if the data is in the same CDB. When using this technology, you should generally not store user objects in the container database, because they would have to be transferred manually in case of a migration. Oracle plans to disable this option completely some time in the future.

In terms of storage space management, it is possible to limit the maximum assignable space per database so that management by less experienced DBAs is possible within these constraints.

Rights and Roles

Management of users and roles is, of course, critical in a highly consolidated environment. When using pluggable databases, you need to distinguish between common and local users and roles. Users and roles are considered common – or rather global – if they are created at CDB level and therefore inherited by all existing and future PDBs. You can identify these users by their prefix of C##. These global users can connect to each PDB to which they have access, thanks to respective grants. At the PDB level, rights can be extended beyond those authorizations derived from common roles. Thus, it is possible to give a global user DBA privileges in one database while that same user cannot even connect with any other database.

The link between users and databases is handled by the Listener process or via the Scan Listener, just as in older releases. By default, precisely one service with the same name exists for each pluggable database, but more services can be added.

Limitations

With all of these advantages, limitations are bound to occur in the operation of this environment. Obviously, all PDBs that share a CDB must use the same database version, and all databases must use the same character set – different character sets are not possible. The obvious choice here is Unicode: To facilitate migration to Unicode, the maximum column width of a VARCHAR2 column has been expanded from 4Kb to 32Kb as a new feature in 12c. Errors that were previously caused by reaching the maximum column width when converting to Unicode are thus a thing of the past.

At the same time, there are restrictions on the individually modifiable database parameters per pluggable database. An overview of the modifiable parameters can be obtained with the query:

select name from v$parameter where ISPDB_MODIFIABLE ='TRUE' order by 1

Mainly the tuning parameters, such as OPTIMIZER_*** and PARALLEL_***, and the NLS parameters are customizable for each PDB.

Support for Cloud Architecture

Considering the fact that this version is the first to make multitenant architecture available with container and pluggable databases, support by the Oracle tools is already advanced. For example, the pluggable database is completely Real Application Cluster (RAC)-capable; RMAN can handle backups and restores at PDB level – including recovery down to table level. Additionally, integration into standby databases with Data Guard [6] is supported. The only restriction for Data Guard is that a move is only possible at container level, and automatic integration of a new PDB on the standby site is only possible in conjunction with the commercial Active Data Guard option.

Implementing Databases

Creating a PDB first requires that you have a 12c database as a container. If the DBA does not explicitly select this or specify the ENABLE PLUGGABLE DATABASE keyword, the result is a normal database without the possibility of housing new databases. In this case, you need to create a new database. Creating a PDB always involves copying an existing PDB. This is either the Seed Database PDB$SEED, which is implicitly created when creating the container and cannot be changed, or some other PDB. This database, however, must have OPEN READ-ONLY status while the copy is being made.

Additionally, a PDB administrator must specify who owns the PDB_DBA role. This role does not have any permissions by default, but additional permissions and roles can be assigned when creating the PDB if desired. Before the PDB can be created, the uniqueness of the resulting file name must be ensured. This step is done either manually via the FILE_NAME_CONVERT parameter or automatically using OMF. For simplicity, we recommend using OMF and ASM here (Listing 1).

Listing 1: Creating a PDB

SQL> select * from CDB_PDBS order by pdb_id; PDB_ID PDB_NAME DBID CON_UID GUID STATUS CREATION_SCN =====================================================================; 2 PDB$SEED 4062623230 4062623230 E0C9D94CE3B6497BE04380B0A8C06105 NORMAL 1720734 1 SQL> select name from v$datafile order by 1; ====================================================================; DATA01/CDB1/DATAFILE/sysaux.256.820013801 DATA01/CDB1/DATAFILE/sysaux.257.820013845 DATA01/CDB1/DATAFILE/undotbsl.259.820013893 DATA01/CDB1/DATAFILE/users.258.820013891 DATA01/CDB1/DD7C48AA5A4404A2E04325AAE80A403C/DATAFILE/sysaux.265.820013923 DATA01/CDB1/DD7C48AA5A4404A2E04325AAE80A403C/DATAFILE/system.266.820013923 6 rows selected ; SQL> create pluggable database pdb001 admin user admin identified by; Pluggable database created. SQL> alter pluggable database pdb001 open; Pluggable database altered. SQL> select * from CDB_PDBS order by pdb_id; PDB_ID PDB_NAM DBID CON_UID GUID STATUS CREATION_SCN CON_ID ------ -------- ---------- ---------- ------------------------------------------------------------- 2 PDB$SEED 4062623230 4062623230 E0C9D94CE3B6497BE04380B0A8C06105 NORMAL 1720734 1 3 PDB001 1700339437 1700339437 E0D0BE79135B75B0E04380B0A8C00F14 NORMAL 1956354 1 SQL> select name from v$datafile; NAME --------------------------------------------------------------------------- +DATA01/CDB1/DATAFILE/sysaux.256.820013801 +DATA01/CDB1/DATAFILE/system.257.820013845 +DATA01/CDB1/DATAFILE/undotbs1.259.820013893 +DATA01/CDB1/DATAFILE/users.258.820013891 +DATA01/CDB1/DD7C48AA5A4404A2E04325AAE80A403C/DATAFILE/sysaux.265.820013923 +DATA01/CDB1/DD7C48AA5A4404A2E04325AAE80A403C/DATAFILE/system.266.820013923 +DATA01/CDB1/E0D0BE79135B75B0E04380B0A8C00F14/DATAFILE/sysaux.271.820043565 +DATA01/CDB1/E0D0BE79135B75B0E04380B0A8C00F14/DATAFILE/system.275.820043565

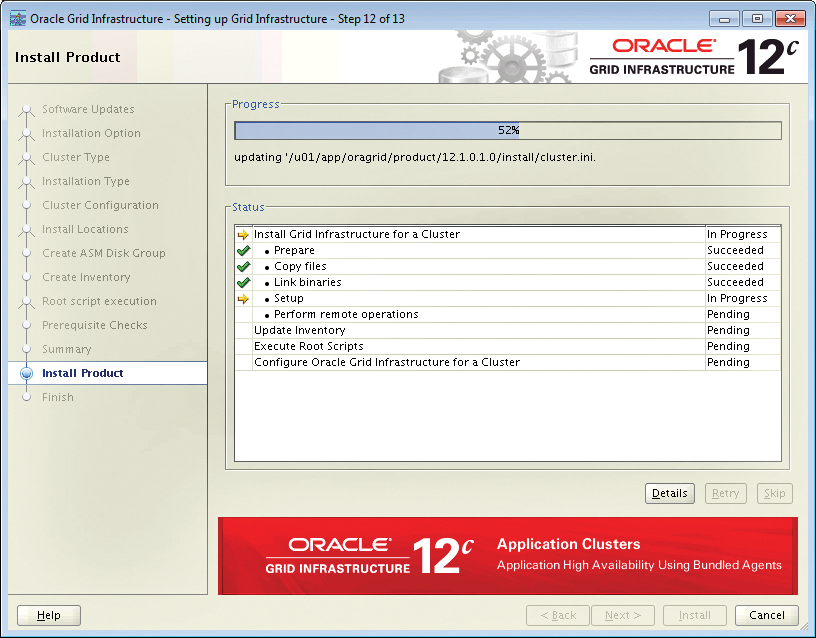

As you can see from Listing 1, PDBs must be opened manually before use and after each start of the instance. You can write a trigger for this or use the grid infrastructure. Oracle Restart then registers resources and their dependencies, monitors where appropriate, or starts and stops in the correct order in the usual way. Services handle this process for PDBs. As soon as you create a service and associate it with a PDB, the associated database is automatically opened when the corresponding service starts.

The information available at PDB and CDB level follows the principle of visibility. For example, an AWR report – run against a PDB – only contains information about the PDB in question. However, an AWR report at CDB level contains information on all databases. The situation is similar for additional views that use the CDB_ prefix. They contain information about all PDBs.

Upgrade Paths

You have three possible approaches for shifting from a non-PDB to a PDB: You can export/import using Data Pump (as of 10g Release 1, older versions may need to make an intermediate step), use GoldenGate, or convert a non-CDB to a PDB. The 10g or 11g database must be upgraded to 12c (Figure 3).

During the conversion process, the database can be converted to a PDB using the DBMS_PDB package (after upgrading to 12c) and then plugged into a CDB. In-place upgrades are possible so far from 10.2.0.5, 11.1.0.7, 11.2.0.2, and higher; the result is initially a non-CDB.

Use of this new concept requires the Enterprise Edition and presupposes the additional multitenancy license. At list price, the license costs as much as the Enterprise Edition itself. The Standard Edition can also use PDBs; however, the number of possible databases is limited to one. This may sound like a bad joke, but the next version, 12.2, will no longer have classical non-CDB databases; thus, non-PDB databases need to be converted – even in the Standard Edition.

Hierarchical Storage Management

Also new in version 12c is the ability to apply rules automatically to tables, partitions, or blocks based on the last access (read and write, full-table scan, or single access) that describe what will happen to the object after a specific deadline elapses. These rules envision, for example, that after a variable number of days since the last read/write, full-table scan, or single-row access, the table, partition, or block in question is either compressed or moved to a different tablespace.

For example, it is possible to compress a partition to save space after 30 days without access using advanced compression. After 60 days without access, compression can be increased again through the use of (E)HCC, and after 90 days without access, the already compressed partition can be shifted to another tablespace that resides on a slower and cheaper storage. In this way, the database can separate frequently used data from infrequently used data, automatically compress the latter, and shift it to cheaper storage.

Flex Cluster/Flex ASM

Also new to version 12c is the concept of Flex ASM (Automatic Storage Management). This feature enables the use of an ASM instance that does not run locally on the server. The data is transmitted over a network (Ethernet or InfiniBand). In extreme cases, Flex ASM enables consolidation and separation of database storage by establishing a central storage cluster that is accessible to all other databases in the enterprise. Flex ASM is a prerequisite for another new feature that increases the number of nodes, and thus the computing power available in a RAC, without each node needing access to the shared storage: Flex Cluster.

Flex clusters consist of hub-and-leaf nodes. A hub node is a node with direct access (e.g., via LAN) to storage. In comparison, a leaf node only has indirect access to the storage via Flex ASM, but it is still a full member of the cluster.

Application Continuity

A few years ago, the introduction of Transparent Application Failover (TAF) revolutionized contemporary cluster concepts by allowing SELECT statements canceled by a node failure to continue transparently to the user on a remaining node (under certain conditions). In version 12c, Oracle extends this concept to all transactions, dubbing this feature Application Continuity.

In the best case, a failure of one node in the RAC goes completely unnoticed by the user – no matter what kind of transaction they just performed. However, this requires adjustments to the client and is linked to the use of certain classes and libraries. Currently JDBC Thin, UCP, and WebLogic are supported. Work is in progress on support for PeopleSoft, Siebel, and Oracle Fusion.

Little Gems

Besides all the big new features, some smaller useful extensions are now included:

- You can now move a data file online.

- Besides the known

SYSDBAandSYSOPERroles, additional roles exist for more granular assignment of existing permissions: SYSBACKUPDBA for backup and recovery, SYSDGDBA for Data Guard, and SYSKMDBA for wallet management. - Thanks to the far sync standby option, Data Guard has the ability to support synchronous replication over distances greater than 40 to 100km; this involves a local hub synchronously accepting the data and then asynchronously transferring the data to the remote standby site. The switchover itself occurs between the primary and remote standbys – the local hub is not involved.

- Cancelled switchovers can now be resumed.

- DML on temporary tables in a standby database does not cause a redo and allows data to be stored in temporary tables in a standby database.

- Sequences of the primary DB can be used in standby mode.

- Database upgrades without downtime are (almost) automatic.

- The size of the PGA can be limited by the

PGA_AGGREGATE_SIZE_LIMITparameter. - The patch inventory can be queried directly from within the database.

- ACFS (ASM Cluster File System) supports storage of all data files. ACFS snapshots can be writable.

Conclusions

Oracle has come up with something good after a long development period. Besides the many small improvements, the major architecture change toward pluggable and cloud databases opens up many possibilities for use. Whether and to what extent these changes are adopted by the users remains to be seen. After initial reluctance, a widespread move is likely to follow.