Udev with virtual machines

Technical Knockout

Any admin who has ever cloned a virtual SUSE system has probably encountered the following issue: The newly cloned VM hangs when booting and waits for the default devices (Figure 1). At the end of the extended boot procedure, the configured network interface (NIC) eth0 on the original has mutated into a NIC named eth1, and the numbers of other NICs have been increased by one. A similar thing happens with almost all other Linux distributions, and the problem is independent of the hypervisor. Thus, the clone loses its network configuration, and you have to revise it manually at the console.

The blame is quickly placed on udev, but the udev device manager is actually doing its job. During the kernel's hardware detection phase, udev loads all the modules asynchronously and in no particular order. The outcome depends on several conditions (the PCI bus topology, the device drivers, and the way the drivers look for their hardware), which can lead to device names that change unpredictably from one boot to the next. If, for example, eth0 and eth1 are swapped, the consequences can be serious depending on the system, ranging from security problems to the failure of central services.

Permanently Set

This situation thus leads to a requirement for persistent device names. Once they are established, network cards should retain their configuration permanently, regardless of whether more cards are added or taken away. Many distributions solve this requirement with udev using persistent net rules. They are found in the /etc/udev/rules.d directory and ensure consistent device naming by assigning names on the basis of the device's MAC address.

Problem: Virtualization

This solution causes problems in the cloud because, as a rule, a single Linux VM is cloned multiple times. Each new clone is assigned new virtual hardware, and VMware, libvirt, or the administrator generates new MAC addresses for the virtual NICs to avoid duplicate MAC addresses on the network. The new interfaces are given new names in ascending order because the original names are already reserved for other MAC addresses. This reveals a major drawback of the concept: An installed and configured Linux system cannot simply be moved to other (virtual) hardware, because its network configuration changes with it. The system administrator then needs to set up the cloned system manually.
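The renaming effect can be reproduced with a small model. The following sketch is a simplified illustration of MAC-based persistent naming as described above, not the actual generator rule shipped by any distribution; the function name `assign_persistent_name` and the sample MAC addresses are hypothetical.

```python
def assign_persistent_name(rules, mac, prefix="eth"):
    """Return the persistent name for `mac`.

    `rules` maps MAC address -> interface name and stands in for the
    persistent net rules file (an assumption of this sketch).
    """
    mac = mac.lower()
    if mac in rules:
        # Known MAC address: the name stays the same across reboots.
        return rules[mac]
    # Unknown MAC address: take the lowest name not yet reserved.
    used = set(rules.values())
    index = 0
    while f"{prefix}{index}" in used:
        index += 1
    rules[mac] = f"{prefix}{index}"
    return rules[mac]


# The original VM records its only NIC as eth0.
rules = {}
original = assign_persistent_name(rules, "00:50:56:aa:bb:01")

# The clone inherits the rules file but receives a new MAC address,
# so eth0 is already reserved and the NIC is named eth1.
clone = assign_persistent_name(rules, "00:50:56:aa:bb:02")
print(original, clone)
```

Because the clone carries the original's rules with it, every additional cloning generation pushes the interface number up by one in this model.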

The often-proposed, easy solution of deleting the 70-persistent-net.rules file before cloning is, unfortunately, not very sustainable. In many distributions, a persistent net generator rule creates a new rule file, so the problem is back the next time you clone. Making your own changes to these rules in the /lib/udev directory is also less than useful, because they are overwritten when you update the udev package. Table 1 shows the names and locations of the rules for different distributions.

Table 1: Udev Storage Locations

| Distribution | Path |
|---|---|
| Ubuntu 12.10, Debian 7.0, SLES 11 SP2 | * |
| openSUSE, Red Hat 6 | * |
| Chakra Linux 2013.01 | * |

When working with udev rules, please note that a race condition can occur between the kernel and udev rules if both attempt to assign eth*-type names: Who names the first network interface, the kernel or userspace? It is thus also advisable to use names different from eth* for the NICs in your own udev rules, such as net0 or wan1. As is often the case in the open source world, alternatives to the persistent network rules have been developed by different parties in different ways; I will look at them in more detail.

Dell's Solution

In a whitepaper [1], Dell describes a specially developed software package called biosdevname. This auxiliary tool for udev assigns device names according to where the hardware is located. This ensures consistent and meaningful names for your network cards at the same time: NICs on the motherboard start with a prefix of em, followed by the port number starting at one. PCI cards have the following naming scheme: p<Slotnumber>p<Portnumber>. Examples of this are em1 for the first internal interface and p4p1 for the first port on a network card in slot number 4.
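As an illustration of the naming scheme only, the following sketch builds names in the em<port> and p<slot>p<port> style. It does not query the BIOS the way biosdevname does; the function `biosdevname_style` and its parameters are hypothetical.

```python
def biosdevname_style(port, slot=None):
    """Build a name in the scheme described above.

    port -- port number, counted from 1
    slot -- PCI slot number, or None for a NIC on the motherboard
    """
    if port < 1:
        raise ValueError("port numbers start at one")
    if slot is None:
        return f"em{port}"        # embedded (onboard) NIC
    return f"p{slot}p{port}"      # PCI card: slot, then port


print(biosdevname_style(1))           # first internal interface
print(biosdevname_style(1, slot=4))   # first port on the card in slot 4
```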

The utility acquires the necessary information using the System Management BIOS Specification (SMBIOS [2]). This specification describes where the BIOS stores information on slots and network cards. If the BIOS does not support the corresponding entries, biosdevname resorts to the IRQ routing table.

Systemd Solution as of v197

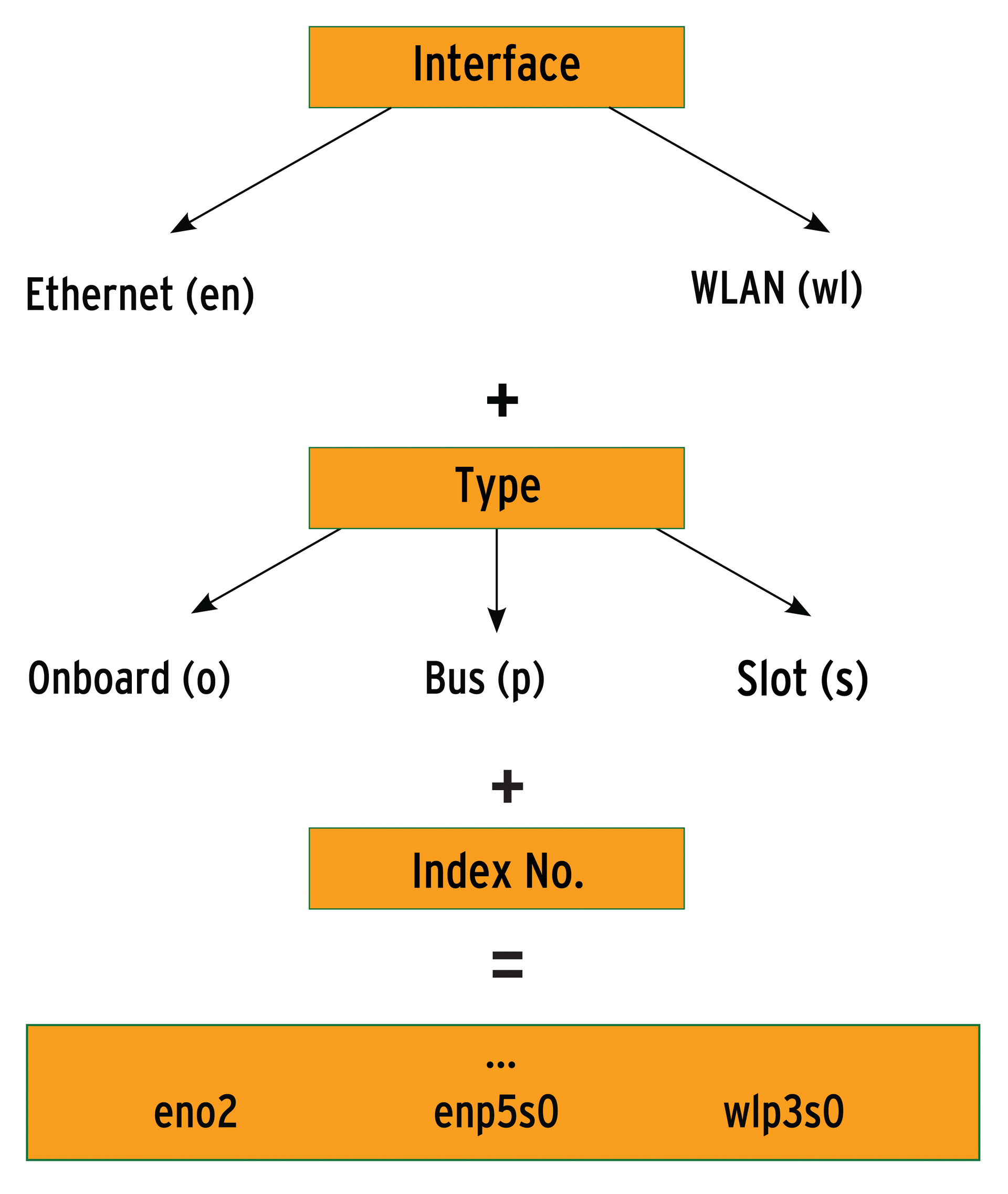

Since version 197, systemd has taken a radically different approach to the problem by providing predictable network interface names [3]. In an approach similar to that of biosdevname, NICs obtain their names according to their unique location in the (virtual) hardware (Figure 2).

The name starts with two characters for the type of interface: en for Ethernet, wl for WLAN, ww for WWAN. The type is then distinguished: o<Index> represents an onboard interface with an ordinal number; s<Slot> is a slot card with an ordinal number, and p<Bus>s<Slot> reveals the location of the PCI card. Examples of the results are, say, enp2s0 or enp2s1. This shows just a small part of the naming scheme; more information can be found online [4] [5].
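The part of the scheme described here can be sketched in a few lines. This is an illustration that covers only the three variants named above, not systemd's actual implementation; the function `predictable_name` and its parameters are hypothetical.

```python
# Two-character prefixes for the interface type, as listed above.
TYPE_PREFIXES = {"ethernet": "en", "wlan": "wl", "wwan": "ww"}


def predictable_name(kind, onboard=None, slot=None, bus=None):
    """Compose a name from the interface type and its hardware location."""
    prefix = TYPE_PREFIXES[kind]
    if onboard is not None:
        return f"{prefix}o{onboard}"       # onboard interface with index
    if bus is not None and slot is not None:
        return f"{prefix}p{bus}s{slot}"    # PCI bus and slot location
    if slot is not None:
        return f"{prefix}s{slot}"          # slot card with index
    raise ValueError("no location information given")


print(predictable_name("ethernet", bus=2, slot=0))
print(predictable_name("ethernet", bus=2, slot=1))
```

The two calls reproduce the example names from the text, enp2s0 and enp2s1.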

Aftermath

However, some entities have fallen foul of this naming revolution. Programs in the enterprise environment in particular, but also many (installation) scripts, expect a traditional scheme of network names based on a pattern of eth* and thus suddenly fail to work. A proposed solution [3] involves copying 80-net-name-slot.rules

cp /usr/lib/udev/rules.d/80-net-name-slot.rules /etc/udev/rules.d/80-net-name-slot.rules

and editing as shown in Listing 1 so that they assign device names in line with the traditional scheme.

Listing 1: 80-net-name-slot.rules

ACTION=="remove", GOTO="net_name_slot_end"

SUBSYSTEM!="net", GOTO="net_name_slot_end"

NAME!="", GOTO="net_name_slot_end"

NAME=="", ENV{ID_NET_NAME_ONBOARD}!="", PROGRAM="/usr/bin/name_dev.py $env{ID_NET_NAME_ONBOARD}", NAME="%c"

NAME=="", ENV{ID_NET_NAME_SLOT}!="", PROGRAM="/usr/bin/name_dev.py $env{ID_NET_NAME_SLOT}", NAME="%c"

NAME=="", ENV{ID_NET_NAME_PATH}!="", PROGRAM="/usr/bin/name_dev.py $env{ID_NET_NAME_PATH}", NAME="%c"

LABEL="net_name_slot_end"

The PROGRAM directive calls a small Python script that maps to the old names (Listing 2). The script can be adapted to your environment by simply changing the key-value pairs in line 4. The following conditions are now met:

- The network configuration is no longer dependent on chance.

- The traditional naming scheme is maintained.

- Systems can be cloned in the cloud indefinitely.

You can try out this systemd version with Chakra Linux [6] as of version 2013.01; it will take some time for other distributions to catch up. Fedora 19 will also use this version.

Listing 2: name_dev.py

01 #!/usr/bin/env python

02 import sys

03

04 names = {'enp2s0': 'eth0', 'enp2s1': 'eth1'}  # systemd name -> traditional name

05

06 if sys.argv[1] in names:

07     print(names[sys.argv[1]])

08 else:

09     print(sys.argv[1])

10 sys.exit(0)

Workaround

Despite these newer approaches, you often still have to cope with the generator rules in everyday life (Table 1). Fortunately, the udev ruleset is powerful enough to pull itself out of the mire. The trick used in Listing 3 relies on the fact that no other applicable rules are executed after a name has been assigned to a device. Thus, the udev rule simply assigns the name proposed by the kernel (eth*) with the corresponding kernel ordinal number (%n).

Listing 3: 70-persistent-net.rules

Rules for KVM:

SUBSYSTEM=="net", ACTION=="add", DRIVERS=="?*", ATTR{address}=="52:54:00:*", KERNEL=="eth*", NAME="eth%n"

Rules for VMware:

SUBSYSTEM=="net", ACTION=="add", DRIVERS=="?*", ATTR{address}=="00:50:56:*", KERNEL=="eth*", NAME="eth%n"

Rules for Xen:

SUBSYSTEM=="net", ACTION=="add", DRIVERS=="?*", ATTR{address}=="00:16:3e:*", KERNEL=="eth*", NAME="eth%n"

By setting a MAC address pattern that matches the MAC addresses automatically generated by the hypervisor, you can specify the rule in more detail. If you add appropriate mapping to this rule, it is also possible to use a virtual machine template for a different network configuration than for the clones. Existing persistent net rules are not overwritten by the net generator or by updates.
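The matching logic of these rules can be checked offline with a short model. The sketch below mimics only the ATTR{address}, KERNEL, and NAME parts of Listing 3 and is not a udev implementation; the function `apply_rules` is hypothetical, and the sketch assumes that MAC addresses are compared in lowercase.

```python
import re
from fnmatch import fnmatchcase

# MAC address patterns for the hypervisors covered in Listing 3.
MAC_PATTERNS = {
    "KVM": "52:54:00:*",
    "VMware": "00:50:56:*",
    "Xen": "00:16:3e:*",
}


def apply_rules(kernel_name, mac):
    """Return the assigned name, or None if no rule matches."""
    # KERNEL=="eth*": this sketch is slightly stricter and requires
    # "eth" followed by the kernel's ordinal number.
    match = re.fullmatch(r"eth(\d+)", kernel_name)
    if match is None:
        return None
    mac = mac.lower()
    for pattern in MAC_PATTERNS.values():
        if fnmatchcase(mac, pattern):
            # NAME="eth%n": keep the number the kernel proposed.
            return f"eth{match.group(1)}"
    return None


print(apply_rules("eth0", "52:54:00:12:34:56"))
print(apply_rules("eth0", "aa:bb:cc:dd:ee:ff"))
```

A NIC with a hypervisor-generated address keeps its kernel name, whereas any other address falls through to whatever rules follow.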

As you know, there's an exception to every rule, including this one: The udev rules assume that the hypervisor (or hardware) always presents the devices in the configured order and that the kernel therefore detects them in the same order. For KVM and VMware, this assumption holds; with Hyper-V, however, it seems not to be the case [7]. The only solution is then the biosdevname package or manual rework.

These rules work around the generators for persistent net rules and thus remove the disadvantages that accompany them. Another advantage of this approach is that the traditional device names (eth*) are kept; thus, applications and scripts that rely on precisely these names continue to work. However, the problem remains that the kernel assigns device numbers to the devices in the order of their appearance during the boot process. The rules in Listing 3 can therefore only be used successfully in environments in which this order is always the same.

Conclusions

Each of the approaches has its advantages and disadvantages. The workaround for the cloud has proven to work well for SLES, Ubuntu, VMware, KVM, and hardware blades. Unfortunately, nothing like a worry-free solution exists in the udev environment. Biosdevname and systemd break with the old conventions.

For systemd, the solution is to modify the rule in conjunction with a helper script. Systemd will probably assert itself with this approach in the next couple of versions of the corresponding distributions. Things will also be exciting in the Ubuntu camp, where the powers that be are apparently sticking with the existing udev rules. However, an admin-friendly approach in which you can assign appropriate and consistent names for your network cards just by editing a configuration file would be a nice thing to have.