High availability for RESTful services with OpenStack

Switchman

High availability has become a fundamental part of the server room. The cost for a single failure is often so significant that a properly implemented high-availability (HA) system is a bargain in comparison. Linux admins can use the Linux HA stack with Corosync and the Pacemaker cluster manager [1], a comprehensive toolbox for reliably implementing high availability. However, other solutions are also available. For example, if you need to ensure constant availability for HTTP-based services, load balancer systems are always recommended – especially for services based on the REST principle, which currently are very popular.

HA Background

High availability is a system state in which an elementary component can fail without causing extensive downtime. A classic approach to high availability is a failover cluster based on the active/passive principle. The cluster relies on multiple servers, ideally with identical hardware, the same software, and the same configuration. One computer is always active, and the second computer is on standby to take over the application that previously ran on the failed system. One elementary component in a setup of this kind is a cluster manager such as Pacemaker, which takes care of monitoring the servers, and if necessary, restarts the services on the surviving system.

For failover to work, a few system components need to collaborate. Installing the application on both servers with an identical configuration is mandatory. A second important issue is the data store: If the system is a database, for example, it needs to access the same data from both computers. This universal access is made possible by shared storage, in the form of a cluster filesystem like GlusterFS or Ceph, a network filesystem such as NFS, or a replication solution such as DRBD (if you have a two-node cluster). See the box called "SSL in a Jiffy" for a look at how to add SSL encryption to the configuration.

Good Connections

Another issue involves connecting the client to the highly available services: A real HA solution should not require clients to change their configurations after the failover to connect to the new server. Instead, admins mostly work with virtual IPs or service IPs: An IP address is tied to a service and can always be found on the host on which this service is actually running at any given time. Whenever a client connects to this address, it will always find the expected service.

If the software uses stateful connections, that is, if the client-server connection exists permanently, the client should have a feature that initiates an automatic reconnect. The clients for the major databases, that is, MySQL or PostgreSQL, are just a couple of examples. Stateless protocols like HTTP are much easier to handle: Here the client opens a separate connection for each request to the server. Whether it talks to node A or node B for the first request does not matter, and if a failover occurs in the meantime, the client will not even notice.
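The automatic reconnect mentioned above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `request_with_retry` and its `send` callable are hypothetical names that do not come from any database client or HTTP library, and `send` stands for one self-contained request that opens its own connection.

```python
import time
from typing import Callable


def request_with_retry(send: Callable[[], str], attempts: int = 3,
                       delay: float = 0.0) -> str:
    """Call send() until it succeeds or the attempts are used up.

    send is a hypothetical callable that performs one independent request.
    Because every call opens a fresh connection, a retry can end up on
    whichever node currently holds the service address.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for attempt in range(attempts):
        try:
            return send()
        except ConnectionError as exc:
            last_error = exc
            # Wait before the next try, but not after the final one.
            if attempt < attempts - 1:
                time.sleep(delay)
    raise last_error
```

With a stateless protocol, the retry is all the client needs; a stateful client would additionally have to restore its session after reconnecting.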

A REST Load Balancer

RESTful services are the subject of much attention, and cloud computing has only increased the interest. More and more manufacturers of applications have stopped using their own on-wire protocol for communication between service and client; instead, they prefer to use the existing and ubiquitous HTTP protocol. Then, you "only" need a defined API for a client to call server URLs in a standard way, possibly sending specific headers in the process, and the server knows exactly what to do – lo and behold, you have a RESTful interface. Because HTTP is one of the most tested protocols on the Internet, smart developers put some thought into the issue of RESTful high availability a long time ago. The common solution for achieving high availability and, at the same time, scaling out HTTP services is a load balancer.

The basic idea of a RESTful web load balancer is simple: A piece of software runs on a system and listens on the address and the port that actually belongs to the application. This software acts as the load balancer. In the background are the back-end servers, that is, the systems that run the actual web server. The load balancer accepts incoming connections and distributes them in a specified way to the back-end servers. This approach ensures an equal load with no idle systems.
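The distribution step can be illustrated with a minimal round-robin sketch in Python. It is not how HAProxy is implemented; the class name `RoundRobinBalancer`, its methods, and the back-end addresses in the usage note are assumptions made for this example only.

```python
from typing import Dict, List


class RoundRobinBalancer:
    """Hand out back ends in rotation, skipping those marked as down."""

    def __init__(self, backends: List[str]) -> None:
        if not backends:
            raise ValueError("at least one back end is required")
        self._backends = list(backends)
        self._healthy: Dict[str, bool] = {b: True for b in self._backends}
        self._next = 0  # index of the back end to try next

    def mark(self, backend: str, healthy: bool) -> None:
        """Record the result of a health check for a known back end."""
        if backend not in self._healthy:
            raise KeyError(backend)
        self._healthy[backend] = healthy

    def pick(self) -> str:
        """Return the next healthy back end in rotation."""
        # Try each back end at most once per call.
        for _ in range(len(self._backends)):
            candidate = self._backends[self._next]
            self._next = (self._next + 1) % len(self._backends)
            if self._healthy[candidate]:
                return candidate
        raise RuntimeError("no healthy back end available")
```

Calling `pick()` once per incoming connection spreads the requests evenly; after `mark("10.42.0.2:80", False)`, that server is simply skipped until a later check marks it healthy again.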

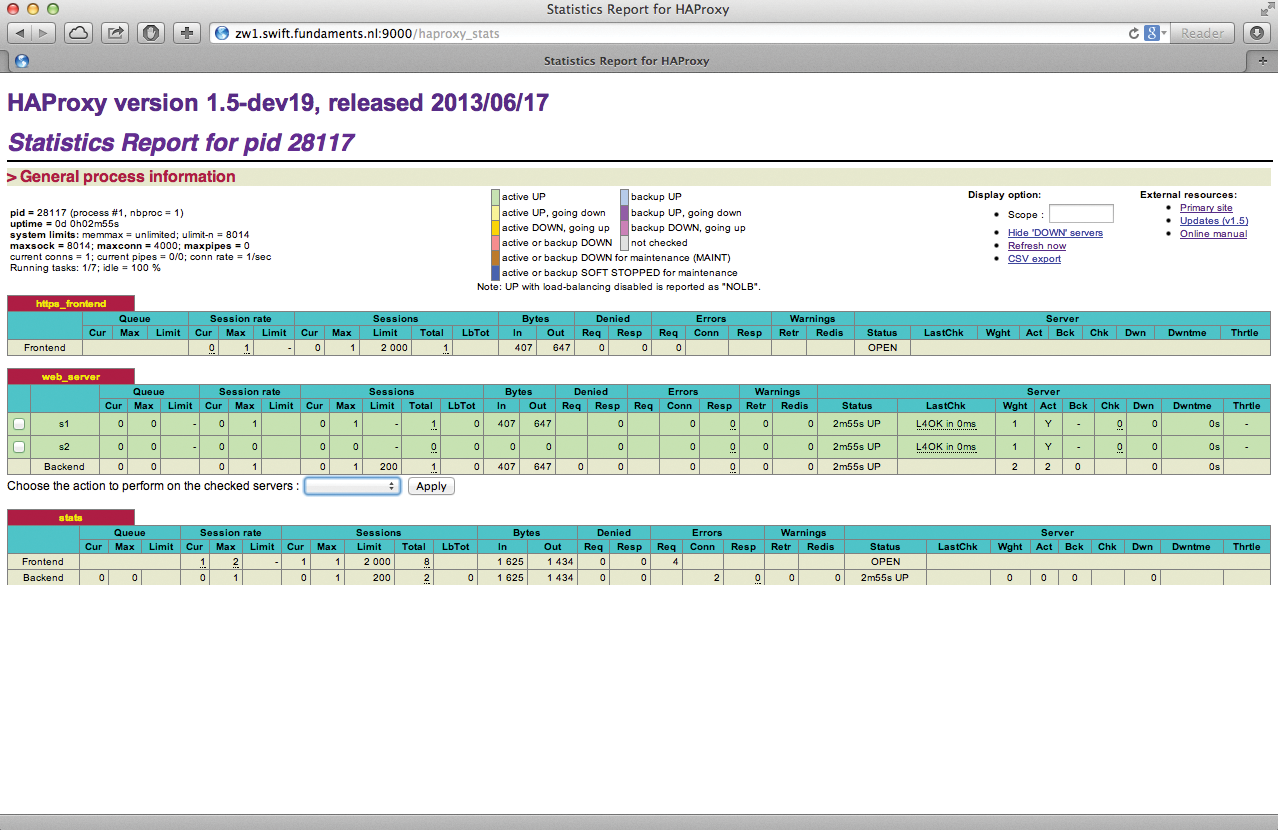

HAProxy is a prominent load balancer that lets you quickly create load balancer configurations (Figure 2). Listing 1 shows a sample configuration for HAProxy that terminates SSL on port 443 and distributes incoming requests to the back-end servers defined in the web_server section.

Listing 1: haproxy.cfg

    global
        log 127.0.0.1 local0
        maxconn 4000
        daemon
        uid 99
        gid 99

    defaults
        log global
        mode http
        option httplog
        option dontlognull
        timeout server 5s
        timeout connect 5s
        timeout client 5s
        stats enable
        stats refresh 10s
        stats uri /stats

    frontend https_frontend
        bind www.example.com:443 ssl crt /etc/haproxy/www.example.com.pem
        mode http
        option httpclose
        option forwardfor
        reqadd X-Forwarded-Proto:\ https
        default_backend web_server

    backend web_server
        mode http
        balance roundrobin
        cookie SERVERID insert indirect nocache
        server s1 10.42.0.1:443 check cookie s1
        server s2 10.42.0.2:443 check cookie s2

If the back-end servers are configured so that a web server or a RESTful service is listening on each computer, a team comprising HAProxy and the back-end servers is already a complete setup. It is not important whether the RESTful service itself needs a web server as an external service, which is the case, for example, with a RADOS gateway (part of Ceph), or whether the service itself listens on a port, as in the case of OpenStack, where all API services take control over the HTTP or HTTPS port themselves (Figure 3).
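The cookie directive in Listing 1 keeps a client on the same back-end server across requests. The following Python sketch shows the general idea in a strongly simplified form: the function `choose_server` and its parameters are hypothetical, and a real Set-Cookie header carries more attributes than the plain name/value pair returned here.

```python
from typing import Callable, Dict, Optional, Tuple

COOKIE_NAME = "SERVERID"  # cookie name used in Listing 1


def choose_server(request_cookies: Dict[str, str],
                  server_up: Dict[str, bool],
                  balance: Callable[[], str]) -> Tuple[str, Optional[str]]:
    """Return (server, cookie_to_set) for one incoming request.

    balance is a hypothetical callable that applies the normal balancing
    algorithm (round robin, for example) and returns a server name.
    """
    wanted = request_cookies.get(COOKIE_NAME)
    if wanted is not None and server_up.get(wanted, False):
        # The client already has a live server assigned: stick with it.
        return wanted, None
    # No cookie yet, or the assigned server is down: balance again and
    # tell the client which server it now belongs to.
    chosen = balance()
    return chosen, f"{COOKIE_NAME}={chosen}"
```

A first request without a cookie is balanced normally and tagged, later requests follow the tag, and a client whose server has failed is quietly moved to a surviving one.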

High Availability for the Load Balancer

The HAProxy setup presented here comes with a small drawback, which the admin still has to take care of: Although access to the web server is possible as long as one back-end server is up, a failure of the load balancer would bring down the system. The task is thus to prevent the load balancer from becoming a nasty Single Point of Failure (SPOF) in the installation – and at this point, Pacemaker comes back into play. If you are planning a load balancer configuration, you should be at least fundamentally comfortable with Pacemaker – unless you are using a commercial balancer solution that automatically handles HA.

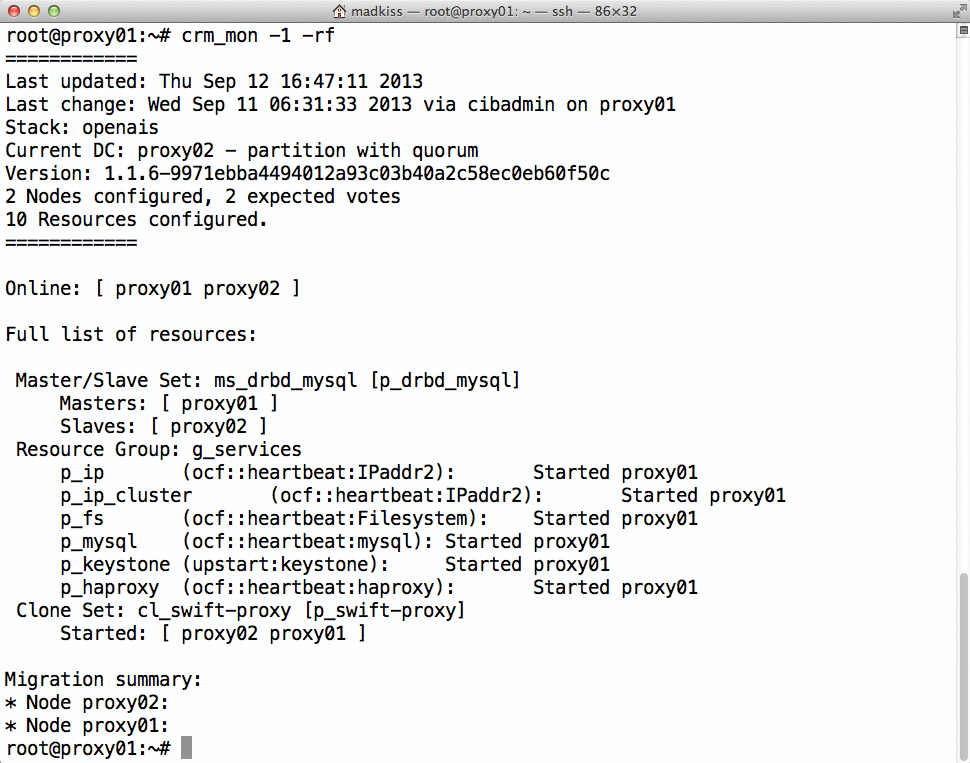

Pacemaker also offers a very useful function for the web server processes on the back-end hosts: It can periodically check whether the processes are still running, and a clone directive means that this check happens simultaneously on all back-end servers. Depending on the size of the setup, Pacemaker provides true added value, but be careful: If you are using Pacemaker, 30 nodes is the maximum size for a cluster of back-end servers. A set comprising an Apache resource and a clone directive looks like the following:

    primitive p_apache lsb:apache2 op monitor interval="30s" timeout="20s"
    clone cl_apache p_apache

In practical terms, admins have two tacks for coming to grips with the problem. Variant 1 envisages running the load balancer software in its own failover cluster and giving Pacemaker the task of ensuring HAProxy functionality. Using a clone directive, you could even run HAProxy instances on both servers and combine the installation with DNS round-robin balancing. This solution would let you avoid having one permanently idle node in the installation. Listing 2 contains a sample Pacemaker configuration for such a solution with a dedicated load balancer cluster. The big advantage is that the load caused by the balancers themselves remains separate and does not influence the servers on which the application is running.

Listing 2: Pacemaker with DNS RR HAProxy

    primitive p_ip_lb1 ocf:heartbeat:IPaddr2 \
        params ip="208.77.188.166" cidr_netmask=24 iflabel="lb1" \
        op monitor interval="20s" timeout="10s"
    primitive p_ip_lb2 ocf:heartbeat:IPaddr2 \
        params ip="208.77.188.167" cidr_netmask=24 iflabel="lb2" \
        op monitor interval="20s" timeout="10s"
    primitive p_haproxy ocf:heartbeat:haproxy \
        params conffile="/etc/haproxy/haproxy.cfg" \
        op monitor interval="60s" timeout="30s"
    clone cl_haproxy p_haproxy
    location p_ip_lb1_on_alice p_ip_lb1 \
        rule $id="p_ip_lb1_prefer_on_alice" inf: #uname eq alice
    location p_ip_lb2_on_bob p_ip_lb2 \
        rule $id="p_ip_lb2_prefer_on_bob" inf: #uname eq bob
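With DNS round-robin balancing, the service name resolves to both load balancer addresses from Listing 2. How a client might work through that list can be sketched as follows. The function `connect_first_available` and the injected `connect` callable are assumptions for illustration; in a real client, the address list would typically come from a resolver call such as `socket.getaddrinfo`.

```python
from typing import Callable, Iterable, List, Tuple, TypeVar

Conn = TypeVar("Conn")


def connect_first_available(addresses: Iterable[str],
                            connect: Callable[[str], Conn]) -> Tuple[str, Conn]:
    """Try each resolved address in order and return the first that works.

    connect is a hypothetical callable that opens a connection to one
    address and raises OSError (or a subclass) if that fails.
    """
    failures: List[str] = []
    for address in addresses:
        try:
            return address, connect(address)
        except OSError as exc:
            # Remember why this address failed and move on to the next one.
            failures.append(f"{address}: {exc}")
    raise ConnectionError(
        "no load balancer reachable (" + "; ".join(failures) + ")")
```

Because Pacemaker moves a service IP to the surviving node when its preferred node fails, both addresses normally stay reachable; the fallback loop only matters during the short window in which an address is being moved.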

Variant 2 assumes one of the existing back-end servers additionally runs the load balancer. Again, combined with Pacemaker, this approach ensures that a load balancer is running on one of the nodes and that the platform is thus available for incoming requests. If the load caused by the balancer is negligible in size, such a solution is especially useful if you have very little hardware available. However, a configuration with a separate balancer cluster is technically preferable.

OpenStack Example

Thus far in this article, I have mainly dealt with the question of how to achieve high availability for RESTful APIs using a load balancer. The method described previously leads to an installation featuring multiple servers with a complete load balancer setup including back-end hosts. The description so far, however, has ignored the specifics of individual RESTful solutions. Especially in a cloud context, where REST interfaces are currently experiencing a heyday, the use of these interfaces is often highly specific. OpenStack is a good illustration: Each of the OpenStack services has at least one API or is itself an API. What is commonly described as an "OpenStack cloud" is actually a collection of several components working together.

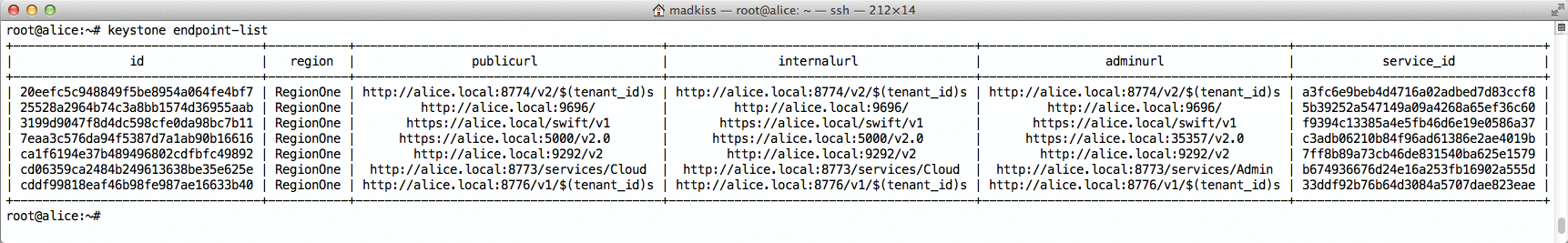

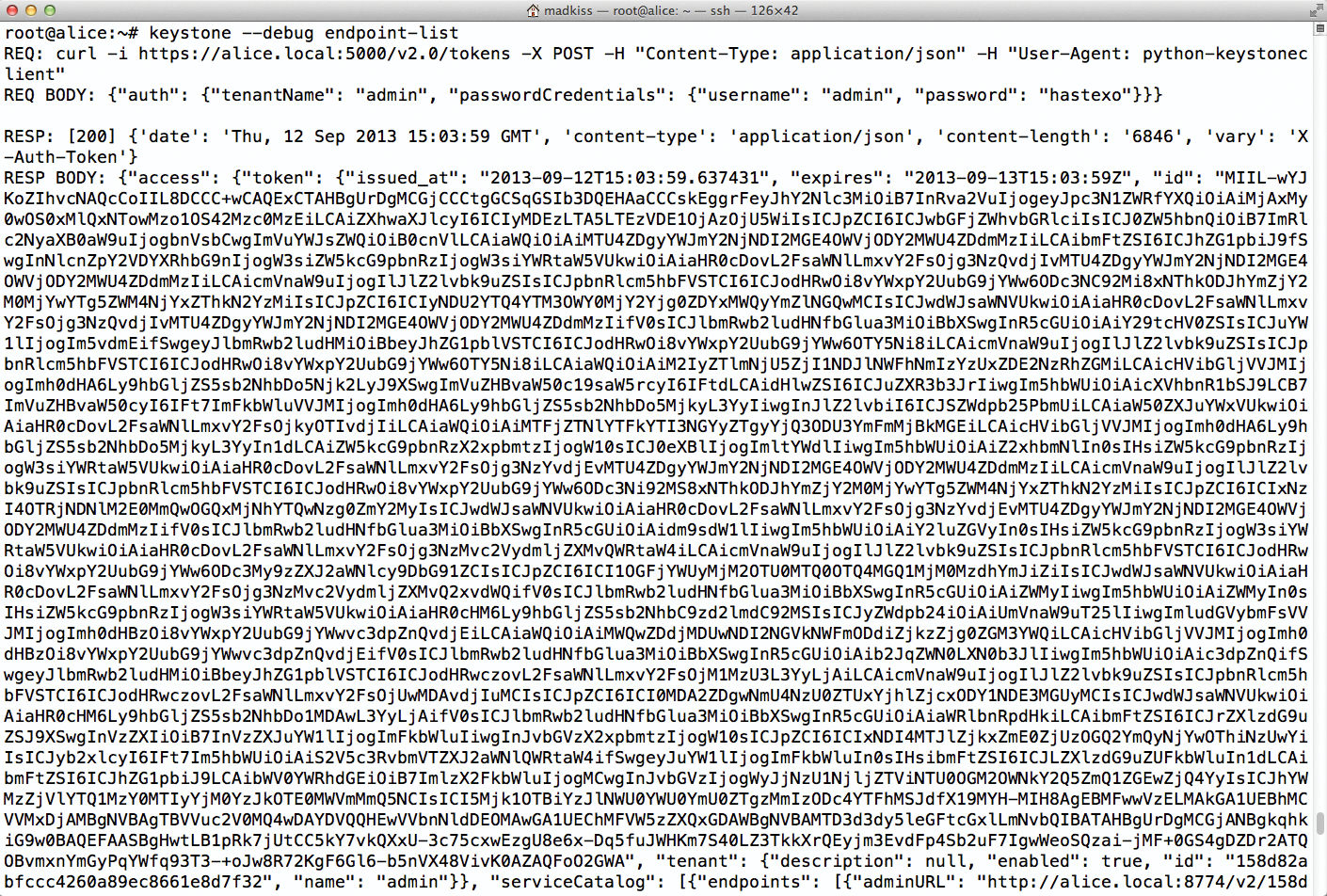

The main focus of OpenStack is on horizontal scalability. Users communicate constantly with the individual components, and the services themselves talk to one another. To allow this communication, the services need to know the addresses at which OpenStack components such as Glance and Nova reside. OpenStack's Keystone identity service is used for this task (Figure 4); Keystone maintains the list of endpoints in its database. In OpenStack terminology, an endpoint is the URL of a RESTful API through which a service accepts commands from the outside. The highlight: The endpoint database is not static. For example, to change the address at which Nova is reachable, you can simply redirect the endpoint in Keystone. This redirect provides for much greater flexibility than would hard-coded values in the configuration files. By design, each client in OpenStack retrieves the endpoint of a service before it connects to that service.
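The lookup-before-connect pattern can be modeled with a small Python sketch. This is a toy model, not Keystone's API or data model: the class `EndpointCatalog`, its methods, the service name, and the URLs in the usage note are all assumptions chosen for illustration.

```python
from typing import Dict


class EndpointCatalog:
    """Toy stand-in for an endpoint database keyed by service name."""

    def __init__(self) -> None:
        self._endpoints: Dict[str, str] = {}

    def register(self, service: str, url: str) -> None:
        """Create the endpoint for a service or redirect an existing one."""
        self._endpoints[service] = url

    def lookup(self, service: str) -> str:
        """Return the current URL; clients call this before connecting."""
        try:
            return self._endpoints[service]
        except KeyError:
            raise LookupError(f"no endpoint registered for {service!r}") from None
```

If a hypothetical "compute" service is first registered at `http://10.42.0.1:8774/v2` and later re-registered at another URL, every client that calls `lookup("compute")` afterward receives the new address without any change to its own configuration.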

High availability for RESTful services using a load balancer requires more than just installing additional RESTful services and HAProxy. Luckily, any number of instances of the API services can exist simultaneously in OpenStack, as long as they access the same database in the background. Note, however, that the endpoint configuration in OpenStack's Keystone identity service must be adapted for HA. In concrete terms, if the IPs of the APIs themselves were registered in the endpoint database previously, you now need to register the addresses of the load balancers instead. On receiving requests, Keystone thus directs the users to the load balancers, which then open the connection to the back-end servers, where the actual OpenStack APIs are running. The schema shown in Figure 5 can apply to both internal and external links.
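Adapting the endpoints boils down to swapping the host part of each registered URL for the load balancer address while keeping scheme, port, and path. A minimal Python sketch of that rewrite follows; the helper `repoint_to_balancer` and the host name `lb.example.com` are hypothetical, and the sketch deliberately ignores URLs that contain user names or passwords.

```python
from urllib.parse import urlsplit, urlunsplit


def repoint_to_balancer(url: str, balancer_host: str) -> str:
    """Replace the host in an endpoint URL with the load balancer's host.

    Scheme, port, path, query, and fragment are kept, so the balancer has
    to listen on the same port the API service used before.
    """
    parts = urlsplit(url)
    if parts.port is None:
        netloc = balancer_host
    else:
        netloc = f"{balancer_host}:{parts.port}"
    return urlunsplit(
        (parts.scheme, netloc, parts.path, parts.query, parts.fragment))
```

For example, `repoint_to_balancer("http://10.42.0.1:5000/v2.0", "lb.example.com")` yields `http://lb.example.com:5000/v2.0`. The resulting URLs still have to be written back to the endpoint database with whatever tooling your OpenStack release provides.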

Conclusions

The fact that cloud computing solutions such as OpenStack, Eucalyptus, and CloudStack rely on RESTful interfaces greatly facilitates high availability. A solution is available for any problem if you use HTTP as the underlying protocol. After all, HTTP has been around for 20 years, and someone is bound to have found the solution you need. When it comes to standalone APIs, all you need to achieve scale-out and HA are multiple web servers and a balancer. If you are looking for HA at the load balancer level, you can use Pacemaker and avoid the burden of a complex cluster configuration. If you already operate a cloud environment, follow the approach detailed in this article to retrofit high availability and thus secure your installations against failure.