S.M.A.R.T., smartmontools, and drive monitoring

Working Smart

S.M.A.R.T. (self-monitoring, analysis, and reporting technology) [1] is a monitoring system for storage devices that provides some information about the status of the drive as well as the ability to run self-tests. The intent of S.M.A.R.T. is for the drive to collect presumably useful information on its state or condition, with the idea that the information can be used to predict impending drive failure.

The S.M.A.R.T. standard was based on previous work by various drive manufacturers to provide health information about their drives. The information was specific to the manufacturer, making life difficult for everyone, so the S.M.A.R.T. standard was developed to provide a set of specific metrics and methods that could be communicated to the host OS. The original standard was very comprehensive, with standard data for all devices, but the final standard is just a shadow of the original.

To be considered S.M.A.R.T., a drive just needs the ability to signal between the internal drive sensors and the host system. The standard provides no information about what sensors are in the drive or how this data is exposed to the user. At the lowest level, S.M.A.R.T. provides a simple binary bit of information – the drive is OK or the drive has failed. This bit of information is called the "S.M.A.R.T. status." The drive fail status doesn't necessarily indicate that the drive has failed; rather, the drive might not meet its specifications. Whether this means the drive is about to fail (i.e., not work) is defined by the drive manufacturer, so S.M.A.R.T. is not a panacea.

In addition to the S.M.A.R.T. status, virtually all drives provide additional details on their health via S.M.A.R.T. attributes. These attributes are not part of the standard, so they are completely up to the drive manufacturers. This becomes even more obvious when comparing spinning drives with solid state drives, which have very different characteristics and health information. Thus, each type of drive must be scanned for its particular S.M.A.R.T. attributes and possible values. Along with S.M.A.R.T. attributes, drives can also contain self-tests, with the results stored in the self-test log. These logs can be read to track the state of the drive, and you can also tell the drives to run self-tests.

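Each attribute has a threshold: if the attribute's normalized value (described below) falls to or below its threshold, the drive no longer meets its specification for that attribute. Because the underlying measurements behave differently (for some raw values lower is better, and for others larger is better), attributes are difficult to read. The drive reports its thresholds, but how they were chosen is known only to the manufacturer and might not be published.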

Additionally, each attribute returns a raw value, whose meaning and units are up to the drive manufacturer, and a normalized value that spans from 1 to 253. What counts as a "normal" attribute value is also completely up to the manufacturer. It's not too difficult to get S.M.A.R.T. attributes from various drives, but the values are not always easy to interpret. Wikipedia [1] has a pretty good list of common attributes and their meanings.
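To make the relationship between the normalized value and the threshold concrete, here is a minimal shell sketch. It assumes the column layout smartctl 5.43 uses for its attribute table (the same layout appears in Listing 5), and it applies the rule from the smartctl man page that an attribute has failed when its normalized value is less than or equal to its threshold. The function name attrs_at_or_below_thresh is hypothetical, and the check says nothing about raw values, whose meaning stays with the manufacturer.

```shell
#!/bin/sh
# attrs_at_or_below_thresh (hypothetical helper): read the attribute table
# printed by `smartctl -A` on stdin and print the name of each attribute
# whose normalized VALUE is less than or equal to its THRESH, the condition
# under which smartctl considers an attribute failed.
# Assumes the smartctl 5.x column order:
#   ID# ATTRIBUTE_NAME FLAG VALUE WORST THRESH TYPE UPDATED WHEN_FAILED RAW_VALUE
attrs_at_or_below_thresh() {
    awk '
        # Attribute rows start with a numeric ID; headers and other text are skipped.
        $1 ~ /^[0-9]+$/ && NF >= 10 {
            value = $4 + 0
            thresh = $6 + 0
            # A threshold of 0 can never be reached (values run from 1 to 253),
            # so only nonzero thresholds are checked.
            if (thresh > 0 && value <= thresh) print $2
        }'
}
```

Piping smartctl -A /dev/sdd into it should print nothing for a healthy drive such as the Seagate in Listing 5.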

All of the features and capabilities of S.M.A.R.T. drives sound great, but how does one collect S.M.A.R.T. attribute information or control drive self-tests and obtain the logs?

smartmontools

Fortunately for Linux types, an open source project, smartmontools [2], allows you to interact with the S.M.A.R.T. data in drives on your systems. Using this tool, you can query the drives to gather information about them, or you can test the drives and gather logs. This kind of information can be an administrator's dream, because it can be used to keep track of the system configuration as well as the state of the drives (more on that later).

Smartmontools is compatible with all S.M.A.R.T. features and supports ATA/ATAPI/SATA-3 to -8 disks and SCSI disks and tape devices. It also supports the major Linux RAID cards, which can sometimes cause difficulties because they require vendor-specific I/O control commands. Check the smartmontools page for more details on your specific card.

For this article, I used a freshly installed CentOS 6.4 [3] distribution on a newly built system with smartmontools installed using yum. I also made sure the smartmontools daemon, smartd, starts with the system by using:

chkconfig smartd on

I will illustrate some smartmontools tricks by exploring two types of drives in my system. The first is a Samsung 840 SSD (120GB), and the second is a Seagate Barracuda 3TB SATA drive. I want to test both types of drives, so you can see how the output differs, particularly for SSDs that don't have rotating media.

First Steps

Before using smartmontools, you might want to read the man pages, but they are rather long, so I hope to give you a few quick tips on getting started; then, you can read the man pages as needed. The first thing I like to do is an "inquiry" on the drives. The output for the Samsung SSD is shown in Listing 1.

Listing 1: Samsung SSD Inquiry

    [root@home4 ~]# smartctl -i /dev/sdb
    smartctl 5.43 2012-06-30 r3573 [x86_64-linux-2.6.32-358.18.1.el6.x86_64] (local build)
    Copyright (C) 2002-12 by Bruce Allen, http://smartmontools.sourceforge.net

    === START OF INFORMATION SECTION ===
    Device Model:     Samsung SSD 840 Series
    Serial Number:    S19HNSAD620517N
    LU WWN Device Id: 5 002538 5a005092e
    Firmware Version: DXT08B0Q
    User Capacity:    120,034,123,776 bytes [120 GB]
    Sector Size:      512 bytes logical/physical
    Device is:        Not in smartctl database [for details use: -P showall]
    ATA Version is:   8
    ATA Standard is:  ATA-8-ACS revision 4c
    Local Time is:    Sun Oct 13 09:11:24 2013 EDT
    SMART support is: Available - device has SMART capability.
    SMART support is: Enabled

Note that the command for using smartmontools is smartctl. As this output shows, the drive is S.M.A.R.T. capable, but not in the smartmontools database. Recall that the S.M.A.R.T. attributes can vary from one vendor to another. The smartmontools community contributes information to the database for various drives so that other people can use the information.

The Seagate hard drive inquiry output is shown in Listing 2. Even though this device is in the database, using the command smartctl -P show doesn't show anything (there are no presets for the drives, i.e., no preset S.M.A.R.T. output information).

Listing 2: Seagate HD Inquiry

    [root@home4 ~]# smartctl -i /dev/sdd
    smartctl 5.43 2012-06-30 r3573 [x86_64-linux-2.6.32-358.18.1.el6.x86_64] (local build)
    Copyright (C) 2002-12 by Bruce Allen, http://smartmontools.sourceforge.net

    === START OF INFORMATION SECTION ===
    Model Family:     Seagate Barracuda (SATA 3Gb/s, 4K Sectors)
    Device Model:     ST3000DM001-1CH166
    Serial Number:    Z1F35P0G
    LU WWN Device Id: 5 000c50 050b954c3
    Firmware Version: CC27
    User Capacity:    3,000,592,982,016 bytes [3.00 TB]
    Sector Sizes:     512 bytes logical, 4096 bytes physical
    Device is:        In smartctl database [for details use: -P show]
    ATA Version is:   8
    ATA Standard is:  ACS-2 (unknown minor revision code: 0x001f)
    Local Time is:    Sun Oct 13 09:12:15 2013 EDT
    SMART support is: Available - device has SMART capability.
    SMART support is: Enabled
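Because the inquiry output includes the model, serial number, and firmware version, it is a convenient source for the configuration tracking mentioned earlier. The following sketch assumes the "Key: value" layout shown in Listings 1 and 2; the helper name drive_identity is hypothetical.

```shell
#!/bin/sh
# drive_identity (hypothetical helper): reduce `smartctl -i` output on stdin
# to one comma-separated line: model,serial,firmware.
# Assumes the "Key:   value" layout of the INFORMATION SECTION shown in
# Listings 1 and 2.
drive_identity() {
    awk '
        BEGIN { FS = ":[ \t]+" }   # split "Key:   value" at the colon
        $1 == "Device Model"     { model = $2 }
        $1 == "Serial Number"    { serial = $2 }
        $1 == "Firmware Version" { fw = $2 }
        END { printf "%s,%s,%s\n", model, serial, fw }'
}
```

Running smartctl -i on each of your devices and piping the result through drive_identity yields a small inventory you can compare over time, for example to spot a replaced drive or a firmware update.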

After performing an inquiry on a drive, I like to check its health. This is pretty simple using the -H option. Listings 3 and 4 show the output for the SSD and the hard drive. You can see that both drives pass their overall health self-assessment.

Listing 3: SSD Health Check

    [root@home4 ~]# smartctl -H /dev/sdb
    smartctl 5.43 2012-06-30 r3573 [x86_64-linux-2.6.32-358.18.1.el6.x86_64] (local build)
    Copyright (C) 2002-12 by Bruce Allen, http://smartmontools.sourceforge.net

    === START OF READ SMART DATA SECTION ===
    SMART overall-health self-assessment test result: PASSED

Listing 4: Hard Drive Health Check

    [root@home4 ~]# smartctl -H /dev/sdd
    smartctl 5.43 2012-06-30 r3573 [x86_64-linux-2.6.32-358.18.1.el6.x86_64] (local build)
    Copyright (C) 2002-12 by Bruce Allen, http://smartmontools.sourceforge.net

    === START OF READ SMART DATA SECTION ===
    SMART overall-health self-assessment test result: PASSED

If the result is FAILED, your best bet is to immediately copy all data from that drive and take it out of service. Although the drive might be able to function for some time, you can't be sure, so it's best to get the data off that drive right away.

As an administrator of an HPC system, you could put this health status check in a cron job to run periodically. You could even create a metric as part of a monitoring tool, such as Ganglia [4], to show the status of all of the drives, either in a central storage system or in the compute nodes.
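As a starting point for such a cron job, here is a minimal sketch, assuming the ATA-style -H output in Listings 3 and 4. The function names health_result and check_drives are hypothetical, and feeding the result into Ganglia or another monitoring tool is left out.

```shell
#!/bin/sh
# health_result (hypothetical helper): print the verdict from `smartctl -H`
# output on stdin, e.g. PASSED. Matches the ATA wording shown in Listings 3
# and 4; SCSI devices report their health on a differently worded line.
health_result() {
    sed -n 's/^SMART overall-health self-assessment test result: *//p'
}

# check_drives (hypothetical): check every device named as an argument and
# print a line only for drives that did not report PASSED, so the job stays
# quiet while everything is healthy (cron typically mails any output).
# Returns 1 if any drive needs attention. Needs root and smartmontools.
check_drives() {
    rc=0
    for dev in "$@"; do
        status=$(smartctl -H "$dev" | health_result)
        if [ "$status" != "PASSED" ]; then
            echo "$dev: ${status:-no health result}"
            rc=1
        fi
    done
    return "$rc"
}
```

A small wrapper script that defines these functions and calls check_drives /dev/sdb /dev/sdd can then be scheduled from cron at whatever interval suits your site.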

Potentially, a drive's S.M.A.R.T. system has a huge number of attributes. You can discover those attributes using the -a option. The output can be quite long, but an example for the Seagate drive is shown in Listing 5.

Listing 5: Attribute Discovery (abridged)

    [root@home4 ~]# smartctl -a /dev/sdd
    smartctl 5.43 2012-06-30 r3573 [x86_64-linux-2.6.32-358.18.1.el6.x86_64] (local build)
    Copyright (C) 2002-12 by Bruce Allen, http://smartmontools.sourceforge.net

    === START OF INFORMATION SECTION ===
    Model Family:     Seagate Barracuda (SATA 3Gb/s, 4K Sectors)
    Device Model:     ST3000DM001-1CH166
    Serial Number:    Z1F35P0G
    LU WWN Device Id: 5 000c50 050b954c3
    Firmware Version: CC27
    User Capacity:    3,000,592,982,016 bytes [3.00 TB]
    Sector Sizes:     512 bytes logical, 4096 bytes physical
    Device is:        In smartctl database [for details use: -P show]
    ATA Version is:   8
    ATA Standard is:  ACS-2 (unknown minor revision code: 0x001f)
    Local Time is:    Sun Oct 13 09:48:13 2013 EDT
    SMART support is: Available - device has SMART capability.
    SMART support is: Enabled

    === START OF READ SMART DATA SECTION ===
    SMART overall-health self-assessment test result: PASSED

    General SMART Values:
    Offline data collection status:  (0x00) Offline data collection activity was never started.
                                            Auto Offline Data Collection: Disabled.
    Self-test execution status:      (   0) The previous self-test routine completed without error
                                            or no self-test has ever been run.
    Total time to complete Offline data collection: (  584) seconds.
    Offline data collection capabilities:   (0x73) SMART execute Offline immediate.
                                            Auto Offline data collection on/off support.
                                            Suspend Offline collection upon new command.
                                            No Offline surface scan supported.
                                            Self-test supported.
                                            Conveyance Self-test supported.
                                            Selective Self-test supported.
    SMART capabilities:            (0x0003) Saves SMART data before entering power-saving mode.
                                            Supports SMART auto save timer.
    Error logging capability:        (0x01) Error logging supported.
                                            General Purpose Logging supported.
    Short self-test routine recommended polling time:      (   1) minutes.
    Extended self-test routine recommended polling time:   ( 344) minutes.
    Conveyance self-test routine recommended polling time: (   2) minutes.
    SCT capabilities:              (0x3085) SCT Status supported.

    SMART Attributes Data Structure revision number: 10
    Vendor Specific SMART Attributes with Thresholds:
    ID# ATTRIBUTE_NAME          FLAG     VALUE WORST THRESH TYPE      UPDATED  WHEN_FAILED RAW_VALUE
      1 Raw_Read_Error_Rate     0x000f   117   099   006    Pre-fail  Always       -       130040264
      3 Spin_Up_Time            0x0003   095   095   000    Pre-fail  Always       -       0
      4 Start_Stop_Count        0x0032   100   100   020    Old_age   Always       -       11
      5 Reallocated_Sector_Ct   0x0033   100   100   010    Pre-fail  Always       -       0
    [...]
    199 UDMA_CRC_Error_Count    0x003e   200   200   000    Old_age   Always       -       0
    240 Head_Flying_Hours       0x0000   100   253   000    Old_age   Offline      -       170901043675205
    241 Total_LBAs_Written      0x0000   100   253   000    Old_age   Offline      -       11336138150
    242 Total_LBAs_Read         0x0000   100   253   000    Old_age   Offline      -       5864598746

    SMART Error Log Version: 1
    No Errors Logged

    SMART Self-test log structure revision number 1
    No self-tests have been logged.  [To run self-tests, use: smartctl -t]

    SMART Selective self-test log data structure revision number 1
     SPAN  MIN_LBA  MAX_LBA  CURRENT_TEST_STATUS
        1        0        0  Not_testing
        2        0        0  Not_testing
        3        0        0  Not_testing
        4        0        0  Not_testing
        5        0        0  Not_testing
    Selective self-test flags (0x0):
      After scanning selected spans, do NOT read-scan remainder of disk.
    If Selective self-test is pending on power-up, resume after 0 minute delay.

The Seagate has more attributes than the Samsung SSD. If you read the list, some items might look alarming. For example, under TYPE you see some attributes listed as Pre-fail. This does not mean the drive is getting ready to fail; it means the attribute is a pre-failure indicator: if its normalized value ever drops to or below its threshold, that is taken as a sign of impending failure (Old_age attributes, by contrast, track normal aging and wear). Also note that just because an attribute is listed as failed at some point (in the WHEN_FAILED column) does not mean the drive really has failed. Whether the drive has failed is defined by the drive manufacturer.

The first attribute, Raw_Read_Error_Rate, is the rate of hardware read errors that occurred when reading data from the drive. The normalized value of the attribute is 117, its worst value is 99, and the threshold is 6; the raw value is 130040264.

Does this mean the read error rate is 117 when the threshold is 6? Not necessarily, because the absolute values being examined are meaningless without knowing their definitions. About all you can say is that the normalized value (117) is well above the threshold (6), so the attribute has not failed. What you should do is track that attribute and see when or if it changes.
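One way to do that tracking is to log every attribute on every run and compare later. This sketch assumes the smartctl 5.x attribute table layout in Listing 5; the helper name attr_csv and the CSV layout are hypothetical choices, not something smartmontools provides.

```shell
#!/bin/sh
# attr_csv (hypothetical helper): turn `smartctl -A` output on stdin into CSV
# rows of timestamp,device,id,name,value,worst,thresh,raw so that appending
# each run to a file builds an attribute history.
# Assumes the smartctl 5.x column order shown in Listing 5; only the first
# token of RAW_VALUE is kept, because some raw values carry extra text.
attr_csv() {
    dev=$1
    ts=$(date +%Y-%m-%dT%H:%M:%S)
    awk -v ts="$ts" -v dev="$dev" '
        # Attribute rows start with a numeric ID and have at least 10 fields.
        $1 ~ /^[0-9]+$/ && NF >= 10 {
            printf "%s,%s,%s,%s,%d,%d,%d,%s\n", ts, dev, $1, $2, $4, $5, $6, $10
        }'
}
```

For example, smartctl -A /dev/sdd | attr_csv /dev/sdd >> smart-history.csv (the file name is arbitrary) appends one timestamped row per attribute, so a change in Raw_Read_Error_Rate or any other attribute is easy to spot with ordinary text tools.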

Testing

One thing S.M.A.R.T. can do is run self-tests on drives. Even though the self-test details are manufacturer dependent, they can be useful, because at least they cause the drives to do some sort of testing to determine whether they are "OK" or not. S.M.A.R.T. performs two main tests – a short self-test and a long self-test – which you can schedule in a cron job.

Before I run self-tests, I want to set a couple of options on the drives to make sure I capture the data. To set the options, you use smartctl (Listing 6):

smartctl -s on -S on /dev/sdb

Listing 6: Setting Options

    [root@home4 ~]# smartctl -s on -S on /dev/sdb
    smartctl 5.43 2012-06-30 r3573 [x86_64-linux-2.6.32-358.18.1.el6.x86_64] (local build)
    Copyright (C) 2002-12 by Bruce Allen, http://smartmontools.sourceforge.net

    === START OF ENABLE/DISABLE COMMANDS SECTION ===
    SMART Enabled.
    SMART Attribute Autosave Enabled.

The first option "turns on" S.M.A.R.T., and the second stores the results between reboots. The lowercase -s on enables S.M.A.R.T. on a particular device (/dev/sdb in this case). The uppercase -S on tells the device to save the S.M.A.R.T. attributes between power cycles.

If you look closely at the output of the S.M.A.R.T. attributes, you will see some estimated times for the short self-test and the long self-test. For the Seagate drive, the short self-test takes about one minute, and the long self-test takes about 344 minutes. These are only estimates, but in my experience, for a quiet system, they have proven to be pretty good. Running the short self-test on the Seagate drive is simple (Listing 7):

smartctl -t short /dev/sdd

Listing 7: Short Self-Test

    [root@home4 ~]# smartctl -t short /dev/sdd
    smartctl 5.43 2012-06-30 r3573 [x86_64-linux-2.6.32-358.18.1.el6.x86_64] (local build)
    Copyright (C) 2002-12 by Bruce Allen, http://smartmontools.sourceforge.net

    === START OF OFFLINE IMMEDIATE AND SELF-TEST SECTION ===
    Sending command: "Execute SMART Short self-test routine immediately in off-line mode".
    Drive command "Execute SMART Short self-test routine immediately in off-line mode" successful.
    Testing has begun.
    Please wait 1 minutes for test to complete.
    Test will complete after Sun Oct 13 09:57:27 2013

    Use smartctl -X to abort test.

You can check whether a test is done by issuing the command

smartctl -l selftest /dev/sdd

(Listing 8). Note that the output tells you how much of the test remains to finish (20% in this case). When the test is done, the output looks like Listing 9. You really want to see the message Completed without error in the output.

Listing 8: Check for Finished Test

    [root@home4 ~]# smartctl -l selftest /dev/sdd
    smartctl 5.43 2012-06-30 r3573 [x86_64-linux-2.6.32-358.18.1.el6.x86_64] (local build)
    Copyright (C) 2002-12 by Bruce Allen, http://smartmontools.sourceforge.net

    === START OF READ SMART DATA SECTION ===
    SMART Self-test log structure revision number 1
    Num  Test_Description    Status                  Remaining  LifeTime(hours)  LBA_of_first_error
    # 1  Short offline       Self-test routine in progress 20%        61         -

Listing 9: Finished Short Self-Test

    [root@home4 ~]# smartctl -l selftest /dev/sdd
    smartctl 5.43 2012-06-30 r3573 [x86_64-linux-2.6.32-358.18.1.el6.x86_64] (local build)
    Copyright (C) 2002-12 by Bruce Allen, http://smartmontools.sourceforge.net

    === START OF READ SMART DATA SECTION ===
    SMART Self-test log structure revision number 1
    Num  Test_Description    Status                  Remaining  LifeTime(hours)  LBA_of_first_error
    # 1  Short offline       Completed without error       00%        61         -
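Rather than rerunning that command by hand, you can poll the log from a script. This sketch assumes the self-test log layout in Listings 8 and 9, where the most recent test is entry # 1 and the Remaining column is the only field ending in %; selftest_remaining and wait_for_selftest are hypothetical names.

```shell
#!/bin/sh
# selftest_remaining (hypothetical helper): read `smartctl -l selftest`
# output on stdin and print the Remaining percentage of the most recent
# test (log entry "# 1") as a plain number: 20 while the test in Listing 8
# runs, 0 once it has finished.
selftest_remaining() {
    awk '/^# *1 / {
        # The Remaining column is the only field ending in "%".
        if (match($0, /[0-9]+%/)) print substr($0, RSTART, RLENGTH - 1) + 0
        exit
    }'
}

# wait_for_selftest (hypothetical): poll a device once a minute until its
# most recent self-test reports 0% remaining. Needs root and smartmontools.
wait_for_selftest() {
    while :; do
        left=$(smartctl -l selftest "$1" | selftest_remaining)
        # An empty result (no tests logged) is treated as finished.
        [ "${left:-0}" -eq 0 ] && break
        echo "$1: ${left}% remaining"
        sleep 60
    done
}
```

Calling smartctl -t short /dev/sdd followed by wait_for_selftest /dev/sdd blocks until the drive reports the test finished; the one-minute interval is an arbitrary choice.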

Running the long self-test is very similar to running the short test (Listing 10):

smartctl -t long /dev/sdd

Listing 10: Long Self-Test

    [root@home4 ~]# smartctl -t long /dev/sdd
    smartctl 5.43 2012-06-30 r3573 [x86_64-linux-2.6.32-358.18.1.el6.x86_64] (local build)
    Copyright (C) 2002-12 by Bruce Allen, http://smartmontools.sourceforge.net

    === START OF OFFLINE IMMEDIATE AND SELF-TEST SECTION ===
    Sending command: "Execute SMART Extended self-test routine immediately in off-line mode".
    Drive command "Execute SMART Extended self-test routine immediately in off-line mode" successful.
    Testing has begun.
    Please wait 344 minutes for test to complete.
    Test will complete after Sun Oct 13 16:04:31 2013

    Use smartctl -X to abort test.

Although the test did take quite some time, when it completed, the output was as shown in Listing 11. Again, the test completed without error.

Listing 11: Finished Long Self-Test

    [root@home4 laytonjb]# smartctl -l selftest /dev/sdd
    smartctl 5.43 2012-06-30 r3573 [x86_64-linux-2.6.32-358.18.1.el6.x86_64] (local build)
    Copyright (C) 2002-12 by Bruce Allen, http://smartmontools.sourceforge.net

    === START OF READ SMART DATA SECTION ===
    SMART Self-test log structure revision number 1
    Num  Test_Description    Status                  Remaining  LifeTime(hours)  LBA_of_first_error
    # 1  Extended offline    Completed without error       00%        67         -
    # 2  Short offline       Completed without error       00%        61         -
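Once the log holds several entries, scanning it for anything other than Completed without error is easy to automate. Here is a minimal sketch, assuming the log layout in Listing 11; selftest_problems is a hypothetical name, and reporting aborted or interrupted tests as problems is a deliberately conservative choice.

```shell
#!/bin/sh
# selftest_problems (hypothetical helper): print every self-test log entry
# from `smartctl -l selftest` output on stdin whose status is neither
# "Completed without error" nor still in progress. No output means every
# logged test passed. Aborted or interrupted tests are reported too,
# because they did not finish.
selftest_problems() {
    awk '/^# *[0-9]+ / && !/Completed without error/ && !/in progress/'
}
```

Running smartctl -l selftest /dev/sdd | selftest_problems after a scheduled test produces output only when a logged test did not complete cleanly.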

The short and long self-tests run as soon as the command is issued, whether you type it yourself or, more likely, a cron job issues it. An offline test, on the other hand, occurs at preset intervals, so the drive could be in use when the test runs, which could degrade performance. The smartctl man page states:

This type of test can, in principle, degrade the device performance. The '-o on' option causes this offline testing to be carried out, automatically, on a regular scheduled basis. Normally, the disk will suspend offline testing while disk accesses are taking place, and then automatically resume it when the disk would otherwise be idle, so in practice it has little effect.

To run offline tests, you use the -o on option with smartctl, and it is up to you whether or not to run the tests. My recommendation is that if the storage devices are likely to be busy, as they would be in a storage system, then you might not want to run the offline tests. However, if the drive is in a compute node or a workstation with idle device usage periods, then you might want to run the tests.

Is S.M.A.R.T. Useful?

With S.M.A.R.T. attributes, you would think that you could predict failure. For example, if the drive is running too hot, then it might be more susceptible to failure; if the number of bad sectors is increasing quickly, you might think the drive would soon fail. Perhaps you can use the attributes with some general models of drive failure to predict when drives might fail and work to minimize the damage by moving data off the drives before they do fail.

Although a number of people subscribe to using S.M.A.R.T. to predict drive failure, using it that way has proven difficult. Google published a study [5] that examined more than 100,000 drives of various types for correlations between failure and S.M.A.R.T. values. The disks were consumer-grade drives (SATA and PATA) with speeds from 5,400rpm to 7,200rpm and capacities ranging from 80GB to 400GB. The data was collected over an eight-month window.

In the study, the researchers monitored the S.M.A.R.T. attributes of the population of drives, along with which drives failed. Google chose the word "fail" to mean that the drive was not suitable for use in production, even if the drive tested "good" (sometimes the drive would test well but immediately fail in production). The researchers concluded:

Our analysis identifies several parameters from the drive's self monitoring facility (SMART) that correlate highly with failures. Despite this high correlation, we conclude that models based on SMART parameters alone are unlikely to be useful for predicting individual drive failures. Surprisingly, we found that temperature and activity levels were much less correlated with drive failures than previously reported.

Despite the overall message that they had difficulty developing correlations, the researchers did find some interesting trends.

- Google agrees with the common view that failure rates are highly correlated with drive model, manufacturer, and age. However, when they normalized the S.M.A.R.T. data by drive model, none of the conclusions changed.

- There was quite a bit of discussion about the correlation between S.M.A.R.T. attributes and failure rates. One of the best summaries in the paper is: "Out of all failed drives, over 56% of them have no count in any of the four strong S.M.A.R.T. signals, namely scan errors, reallocation count, offline reallocation, and probational count. In other words, models based only on those signals can never predict more than half of the failed drives."

- Temperature effects are interesting. High temperatures start affecting older drives (three to four years or older), but low temperatures can also increase the failure rate of drives, regardless of age.

- A section of the final paragraph of the paper bears repeating: "We find, for example, that after their first scan error, drives are 39 times more likely to fail within 60 days than drives with no such errors. First errors in reallocations, offline reallocations, and probational counts are also strongly correlated to higher failure probabilities. Despite those strong correlations, we find that failure prediction models based on S.M.A.R.T. parameters alone are likely to be severely limited in their prediction accuracy, given that a large fraction of our failed drives have shown no S.M.A.R.T. error signals whatsoever. This result suggests that S.M.A.R.T. models are more useful in predicting trends for large aggregate populations than for individual components. It also suggests that powerful predictive models need to make use of signals beyond those provided by S.M.A.R.T."

- The paper tried to sum up all the observed factors that contributed to drive failure, such as errors or temperature, but they still missed about 36% of the drive failures.

The paper provides good insight into the drive failure rate of a large population of drives. As mentioned previously, drive failure correlates somewhat with scan errors, but that doesn't account for all failures, a large fraction of which did not show any S.M.A.R.T. error signals. It's also important to note the paper's conclusion that "… SMART models are more useful in predicting trends for large aggregate populations than for individual components." However, this should not keep you from watching the S.M.A.R.T. error signals and attributes to track the history of the drives in your systems. Again, some correlation seems to exist between scan errors and drive failure, and this might be useful in your environment as a reason to make copies of critical data or to shorten the time between backups or data copies.
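As a rough way to watch for the kinds of signals the paper found most telling, the sketch below reports a nonzero raw count in attributes 5 (Reallocated_Sector_Ct), 197 (Current_Pending_Sector), and 198 (Offline_Uncorrectable). Treating these IDs as stand-ins for the paper's reallocation, offline reallocation, and probational counts is an assumption; vendors can number or define attributes differently, and the paper's scan-error signal has no counterpart here. The helper name nonzero_error_counts is hypothetical.

```shell
#!/bin/sh
# nonzero_error_counts (hypothetical helper): from `smartctl -A` output on
# stdin, print "name raw" for attributes 5, 197, or 198 whose raw value is
# above zero. Mapping these IDs to the Google paper's signals is an
# assumption, not something the paper or smartmontools defines.
nonzero_error_counts() {
    awk '$1 ~ /^(5|197|198)$/ && NF >= 10 && $10 + 0 > 0 { print $2, $10 }'
}
```

In the spirit of the paper, a first nonzero count is better read as a cue to make fresh copies of critical data than as proof that the drive is about to die.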

GUI Interface to S.M.A.R.T. Data

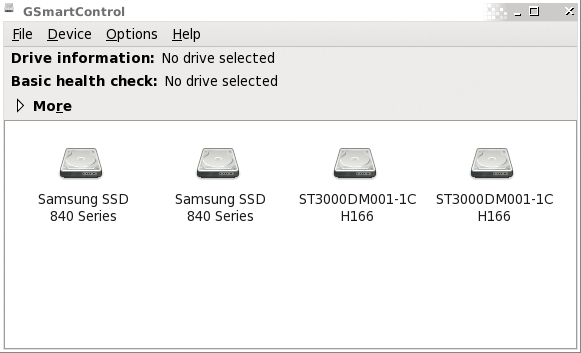

In HPC systems, you like to get quick answers from monitoring tools to understand the general status of your systems. You can do this by writing some simple scripts and using the smartctl tool. In fact, it's a simple thing you can do to improve the gathering of data on system status (Google used BigTable, their NoSQL database [6], along with MapReduce to process all of their S.M.A.R.T. data). Remembering all the command options and looking at rows of text can be a chore sometimes. If your systems are small enough, such as a workstation, or if you need to get down and dirty with a single node, then GSmartControl [7] is a wonderful GUI tool for interacting with S.M.A.R.T. data.

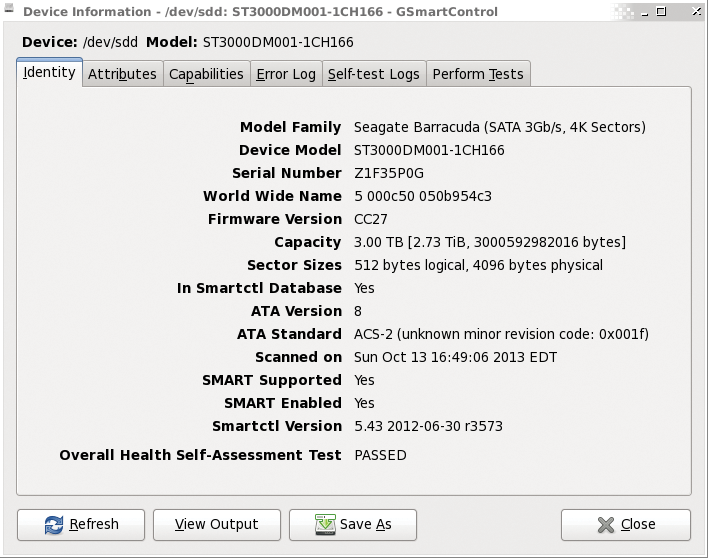

I won't spend much time talking about GSmartControl or how to install it, but I do want to show some simple screenshots of the tool. Figure 1 shows the devices it can monitor. You can see that this system has four storage devices. Clicking on a device (/dev/sdd) and then on More shows more information (Figure 2).

For /dev/sdd, you can see that S.M.A.R.T. is enabled on the device and that it passed the basic health check. If you double-click the device icon, you get much more information (Figure 3). This dialog box has a myriad of details and functions available. The tabs supply information about attributes, tests, logs, and so on, and you can launch tests from the Perform Tests tab.

Summary

S.M.A.R.T. is an interesting technology as a standard way of communicating between the operating system and drives, but the actual information in the drives, the attributes, is non-standard. Some of the information is fairly similar across manufacturers and drives, allowing you to gather some common information. However, because S.M.A.R.T. attributes are not standard, smartmontools might not know about your particular drive (or RAID card). It may take some work to get it to understand the attributes of your particular drive.

S.M.A.R.T. can be an asset for administrators and home users. Probably its best role is to watch the history of storage devices. Simple scripts allow you to query your drives and collect that information, either by itself or as part of a monitoring system, such as Ganglia. If you want a more GUI-oriented approach, GSmartControl can be used for many of the smartctl command-line options.