Best practices for KVM on NUMA servers

Tuneup

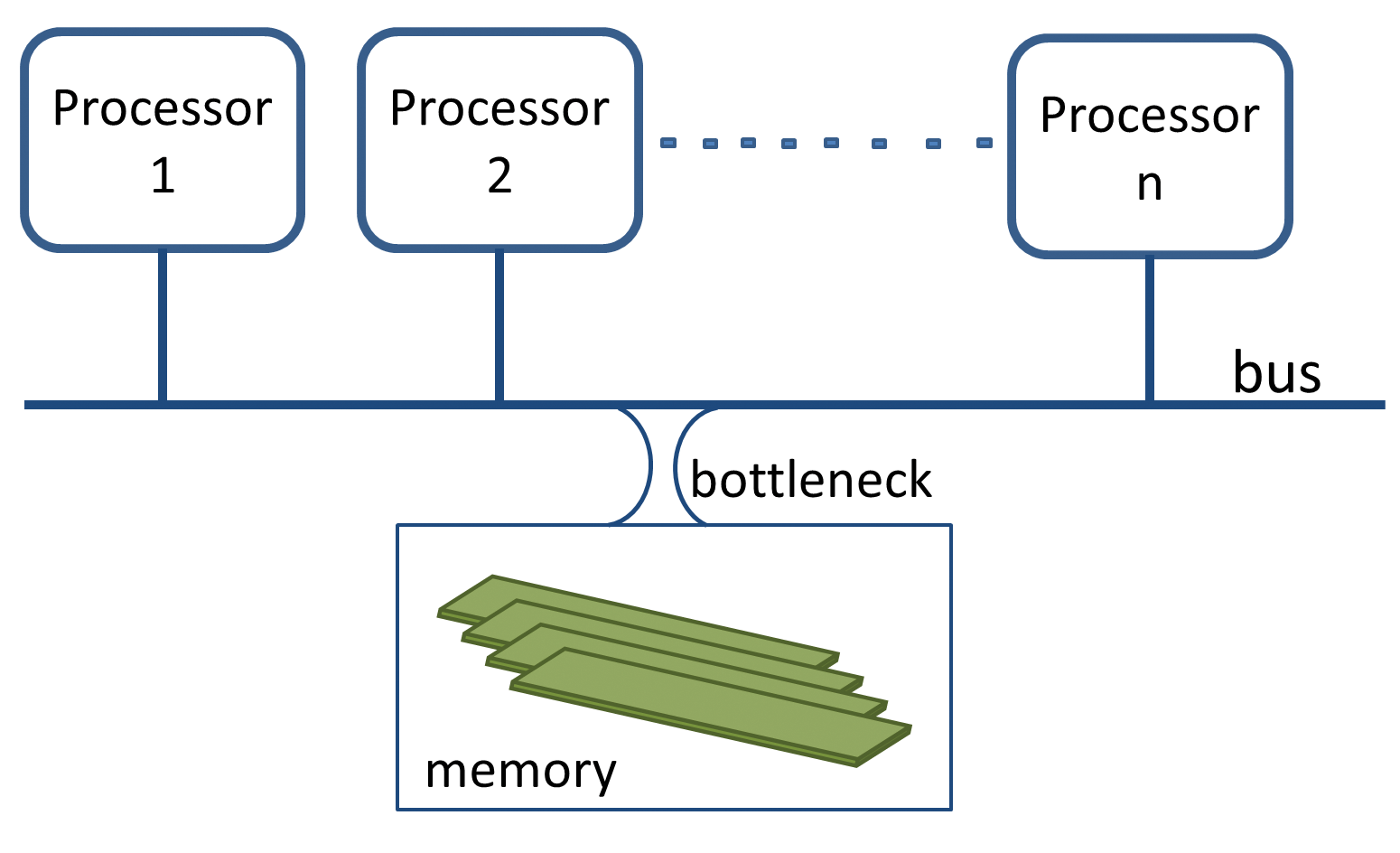

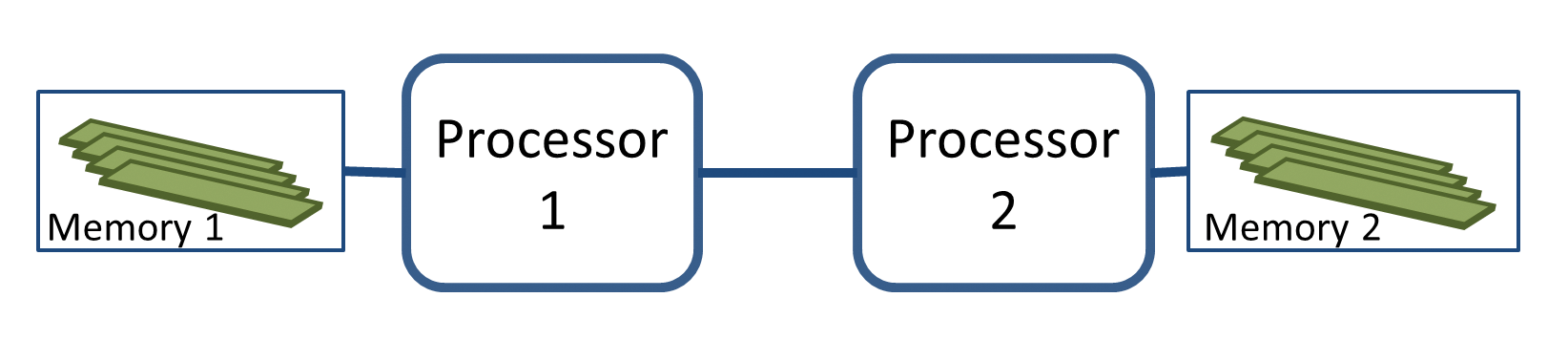

Non-uniform memory access (NUMA) [1] systems have existed for a long time. Makers of supercomputers could not increase the number of CPUs without creating a bottleneck on the bus connecting the processors to the memory (Figure 1). To solve this issue, they changed the traditional monolithic memory approach of symmetric multiprocessing (SMP) servers and spread the memory among the processors to create the NUMA architecture (Figure 2).

The NUMA approach has both good and bad effects. A significant improvement is that it allows more processors with a corresponding increase in performance; when the number of CPUs doubles, performance nearly doubles. However, the NUMA design introduces different memory access latencies depending on the distance between the CPU and the memory location. In Figure 2, processes running on Processor 1 have faster access to memory pages connected to Processor 1 than to pages located near Processor 2.

With the increasing number of cores per processor running at very high frequency, the traditional Front Side Bus (FSB) of previous generations of x86 systems bumped into this saturation problem. AMD solved it with HyperTransport (HT) technology and Intel with the QuickPath Interconnect (QPI). As a result, all modern x86 servers with two or more populated sockets have NUMA architectures (see the "Enterprise Servers" box).

Linux and NUMA

The Linux kernel introduced formal NUMA support in version 2.6. Projects like Bigtux in 2005 heavily contributed to enabling Linux to scale up to several tens of CPUs. On your favorite distribution, just type man 7 numa, and you will get a good introduction with numerous links to documentation of interest to both developers and system managers.

You can also issue numactl --hardware (or numactl -H) to view the NUMA topology of a server. Listing 1 shows a reduced output of this command captured on an HP ProLiant DL980 server with 80 cores and 128GB of memory.

Listing 1: Viewing Server Topology

    # numactl --hardware
    available: 8 nodes (0-7)
    node 0 cpus: 0 1 2 3 4 5 6 7 8 9
    node 0 size: 16373 MB
    node 0 free: 15837 MB
    node 1 cpus: 10 11 12 13 14 15 16 17 18 19
    node 1 size: 16384 MB
    node 1 free: 15965 MB
    ...
    node 7 cpus: 70 71 72 73 74 75 76 77 78 79
    node 7 size: 16384 MB
    node 7 free: 14665 MB
    node distances:
    node   0   1   2   3   4   5   6   7
      0:  10  12  17  17  19  19  19  19
      1:  12  10  17  17  19  19  19  19
      2:  17  17  10  12  19  19  19  19
      3:  17  17  12  10  19  19  19  19
      4:  19  19  19  19  10  12  17  17
      5:  19  19  19  19  12  10  17  17
      6:  19  19  19  19  17  17  10  12
      7:  19  19  19  19  17  17  12  10

The numactl -H command returns a description of the server per NUMA node. A NUMA node comprises a set of physical CPUs (cores) and associated local memory. In Listing 1, node 0 is made of CPUs 0 to 9 and has a total of 16GB of memory. When the command was issued, about 15GB of memory was free in this NUMA node.
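The node-to-CPU mapping in this output can also be queried from a script. The following is a minimal sketch, not a feature of numactl itself: node_of_cpu is a hypothetical helper name, and it assumes the "node N cpus:" line format shown in Listing 1.

```shell
#!/bin/bash
# node_of_cpu CPU: read `numactl --hardware` output on standard input and
# print the NUMA node that owns pCPU number CPU.
# Hypothetical helper; assumes lines such as "node 0 cpus: 0 1 2 3".
node_of_cpu() {
    local cpu=$1
    awk -v cpu="$cpu" '
        $1 == "node" && $3 == "cpus:" {
            # Fields 4..NF are the pCPU numbers belonging to node $2.
            for (i = 4; i <= NF; i++)
                if ($i == cpu) { print $2; found = 1; exit }
        }
        # Return a non-zero status when the pCPU is not listed anywhere.
        END { if (!found) exit 1 }
    '
}
```

On the server from Listing 1, `numactl --hardware | node_of_cpu 12` would report node 1, because pCPU 12 appears in the "node 1 cpus:" line.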

The table at the end represents the System Locality Information Table (SLIT). Hardware manufacturers populate the SLIT in the lower firmware layers and provide it to the kernel via the Advanced Configuration and Power Interface (ACPI). It gives the normalized "distances" or "costs" between the different NUMA nodes, with 10 denoting local access. For example, if a process running in NUMA node 0 needs 1 nanosecond (ns) to access local pages, it will take 1.2ns to access pages located in remote node 1, 1.7ns for pages in nodes 2 and 3, and 1.9ns to access pages in nodes 4-7.
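To read the distance table as relative costs, each entry of a row can be divided by that row's local distance. The sketch below assumes the table layout printed by numactl in Listing 1 and node IDs numbered contiguously from 0; slit_ratios is a hypothetical name.

```shell
#!/bin/bash
# slit_ratios NODE: read `numactl --hardware` output on standard input and
# print the cost of reaching every node from NODE, relative to local access.
# Hypothetical helper; assumes contiguous node IDs starting at 0.
slit_ratios() {
    local src=$1
    awk -v src="$src" '
        /^node distances:/ { in_table = 1; next }
        # Table rows look like "  0:  10  12  17  17 ..."
        in_table && $1 == (src ":") {
            local_cost = $(src + 2)      # diagonal entry of this row
            for (i = 2; i <= NF; i++)
                printf "node %d: %.1fx\n", i - 2, $i / local_cost
        }
    '
}
```

Fed with the output of Listing 1, `slit_ratios 0` reports 1.2x for node 1, 1.7x for nodes 2 and 3, and 1.9x for nodes 4-7, which matches the nanosecond example above.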

On some servers, ACPI does not provide SLIT table values, and the Linux kernel populates the table with arbitrary numbers like 10, 20, 30, 40. In that case, don't try to verify the accuracy of the numbers; they are not representative of anything.

KVM, Libvirt, and NUMA

The KVM hypervisor treats virtual machines as regular processes. To minimize the effect of NUMA on guest performance, the libvirt API [2] and its companion tool virsh(1) provide many ways to monitor and adjust the placement of guests on the server. The most frequently used virsh commands related to NUMA are vcpuinfo and numatune.

If vm1 is a virtual machine, virsh vcpuinfo vm1 run on the KVM host returns the mapping between virtual CPUs (vCPUs) and physical CPUs (pCPUs), as well as other information, such as an affinity mask showing which pCPUs are eligible for hosting each vCPU:

    # virsh vcpuinfo vm1
    VCPU:           0
    CPU:            0
    State:          running
    CPU time:       109.9s
    CPU Affinity:   yyyyyyyy----------------------------------------------
    ....
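The affinity mask has one character per pCPU, starting with pCPU 0: y marks an eligible pCPU and - an excluded one. A small sketch (affinity_to_cpus is a hypothetical helper name) turns such a mask into a comma-separated list of pCPU numbers:

```shell
#!/bin/bash
# affinity_to_cpus MASK: print the pCPU numbers whose position in MASK
# holds a "y". Hypothetical helper, not part of virsh.
affinity_to_cpus() {
    local mask=$1 i list=""
    for ((i = 0; i < ${#mask}; i++)); do
        if [ "${mask:i:1}" = "y" ]; then
            # Prefix a comma only when the list already has an entry.
            list+="${list:+,}$i"
        fi
    done
    echo "$list"
}
```

For the mask shown above, which starts with eight y characters, the function prints 0,1,2,3,4,5,6,7.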

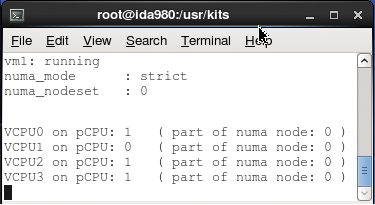

The command virsh numatune vm1 returns the memory mode policy used by the hypervisor to supply memory to the guest and a list of NUMA nodes eligible for providing memory to the guest. A strict mode policy means that the guest can access memory from a listed nodeset and only from there. Later, I explain possible consequences of this mode.

    # virsh numatune vm1
    numa_mode      : strict
    numa_nodeset   : 0

Listing 2 is a script combining vcpuinfo and numatune in an endless loop. You should start it in a dedicated terminal on the host with a guest name as argument (Figure 3) and let it run during your experiments. It gives a condensed view of the affinity state of your virtual machine.

Listing 2: vcpuinfo.sh

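    # cat vcpuinfo.sh
    #!/bin/bash
    # Show the NUMA memory policy and vCPU placement of a guest every 2 seconds.
    DOMAIN=$1
    # Number of pCPUs per NUMA node (contiguous numbering assumed).
    # Set this to 10 for the server shown in Listing 1.
    CPUS_PER_NODE=8
    while true; do
        # Match the guest name exactly so that vm1 does not also match vm10.
        DOM_STATE=$(virsh list --all | awk -v dom="$DOMAIN" '$2 == dom {print $NF}')
        echo "${DOMAIN}: $DOM_STATE"
        virsh numatune "$DOMAIN"
        virsh vcpuinfo "$DOMAIN" | awk -v n="$CPUS_PER_NODE" '
            /^VCPU:/ {printf "VCPU%s", $NF}
            /^CPU:/  {printf " on pCPU: %d (part of numa node: %d)\n", $NF, int($NF / n)}'
        sleep 2
    done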

Locality Impact Tests

If you want to test the effect of NUMA on a KVM server, you can force a virtual machine to run on specific cores and use local memory pages. To experiment with this configuration, start a memory-intensive program or micro-benchmark (e.g., STREAM, STREAM2 [3], or LMbench [4]) and compare the result when the virtual machine accesses remote memory pages during a second test.

The operations for performing this test are simple, as long as you are familiar with editing XML files (guest description files are located in /etc/libvirt/qemu/). First, you need to stop and edit the guest used for this test (vm1) with virsh(1):

    # virsh shutdown vm1
    # virsh edit vm1

Bind it to physical cores 0 to 9 with the cpuset attribute and force the memory to come from the node hosting pCPUs 0-9: numa node 0. The XML vm1 description becomes:

    <domain type='kvm'>
      ...
      <vcpu placement='static' cpuset='0-9'>4</vcpu>
      <numatune>
        <memory nodeset='0'/>
      </numatune>
      ...

Save and exit the editor, then start the guest:

    # virsh start vm1

When the guest is started, verify that the pinning is correct with virsh vcpuinfo, virsh numatune, or the little script mentioned earlier (Figure 3). Run a memory-intensive application or a micro-benchmark and record the time for this run.

When this step is done, shut down the guest and modify the nodeset attribute to take memory from a remote NUMA node:

    # virsh shutdown vm1
    # virsh edit vm1
    ...
    <memory mode='strict' nodeset='7'/>
    ...
    # virsh start vm1

Note that the virsh utility silently added the attribute mode='strict'. I will explain the consequences of that strict memory mode policy next. For now, once the guest is running again, rerun your favorite memory application or micro-benchmark. You should notice a degradation of performance.

The Danger of a Strict Policy

The memory mode='strict' XML attribute tells the hypervisor to provide memory from the specified nodeset list and only from there. What happens if the nodeset cannot provide all the requested memory? In other words, what happens in a memory over-commitment situation? Well, the hypervisor starts to swap the guest out to disk to free up some memory.

If the swap space is not big enough (which is frequently the case), the Out Of Memory Killer (OOM killer) mechanism does its job and kills processes to reclaim memory. In the best case, only the guest asking for memory is killed because the kernel thinks it can get a lot of memory from it. In the worst case, the entire hypervisor is badly damaged because other key processes have been destroyed.

KVM documentation mentions this mechanism and asks system managers to avoid memory over-commitment situations. It also references other documents explaining how to size or increase the swap space of the hypervisor using a specific algorithm.

Real problems arise when you fall into this situation without knowing it. Consider the case where the vm1 guest is configured with 12GB of memory and pinned to node 0 with this default strict mode added silently by virsh during the editing operation. Everything should run fine because node 0 is able to provide up to 15GB of memory (see the numactl -H output). However, when an application or a second guest is scheduled on node 0 and consumes 2 or 3GB of memory, the entire hypervisor is at risk of failure.
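Before starting a strictly pinned guest, the margin can be estimated from the numactl output. The sketch below is only a rough check under stated assumptions: it uses the "node N free:" line format of Listing 1, it considers a single node, and node_free_mb and strict_headroom_mb are hypothetical helper names.

```shell
#!/bin/bash
# node_free_mb NODE: read `numactl --hardware` output on standard input and
# print the free memory (in MB) reported for NODE.
node_free_mb() {
    awk -v n="$1" '$1 == "node" && $2 == n && $3 == "free:" { print $4 }'
}

# strict_headroom_mb NODE GUEST_MB: print how many MB would remain free on
# NODE once a guest of GUEST_MB is fully backed by that node.
# A negative result means the node cannot back the guest on its own.
strict_headroom_mb() {
    local free
    free=$(node_free_mb "$1")
    [ -n "$free" ] || return 2    # NODE not found in the input
    echo $(( free - $2 ))
}
```

With the values from Listing 1, `numactl -H | strict_headroom_mb 0 12288` yields 3549, so a 12GB guest leaves roughly 3.5GB on node 0, and a second consumer of 3GB on the same node would nearly exhaust it. The figure is only meaningful while the guest is stopped, because memory already allocated to a running guest no longer counts as free.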

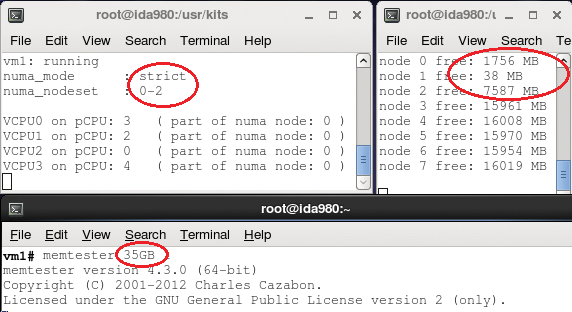

You can test this predictable behavior (at your own risk) with the memtester [5] program. Launch it in a guest configured with a strict memory policy and ask memtester to test more memory than is available in the nodeset. Carefully watch swap usage and the kernel ring buffer (dmesg); you may see a message like "Out of memory: Kill process 29937 (qemu-kvm) score 865 or sacrifice child", followed by "Killed process 29937, UID 107, (qemu-kvm)".

The default strict memory mode policy can put both guests and the hypervisor itself at risk, without any notification or warning provided to the system administrator before a failure.

Prefer the Preferred Memory Mode

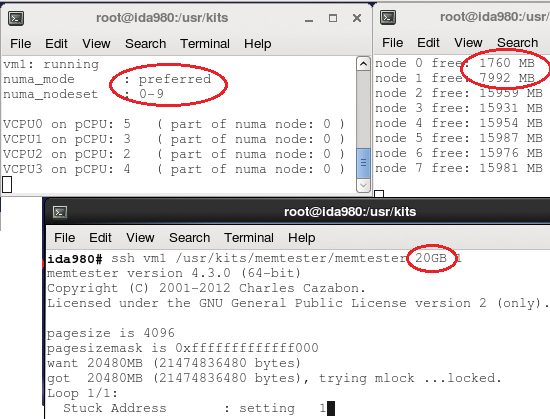

If you want to bind KVM guests manually to specific NUMA nodes (CPU and memory), my advice is to use the preferred memory mode policy instead of the default strict mode. This mode allows the hypervisor to provide memory from nodes other than those in the nodeset, as needed. Your guests may not run with maximum performance when the hypervisor starts to allocate memory from remote nodes, but at least you won't risk having them fail because of the OOM killer.

The configuration file of the vm1 guest with 35GB of memory, bound to NUMA node 0 in preferred mode, looks like this:

    # virsh edit vm1
    ...
    <currentMemory unit='KiB'>36700160</currentMemory>
    <vcpu placement='static' cpuset='0-9'>4</vcpu>
    <numatune>
      <memory mode='preferred' nodeset='0'/>
    </numatune>
    ...

This guest is in a memory over-commitment state but will not be punished when the memtester program asks for more memory than what node 0 can provide.
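The same policy can probably be set without opening an editor, because virsh numatune accepts --mode, --nodeset, and --config options; check virsh(1) on your distribution, since support depends on the libvirt version. In the sketch below, set_preferred and the VIRSH override variable are hypothetical conveniences that allow previewing the command before running it.

```shell
#!/bin/bash
# set_preferred DOMAIN NODESET: write a preferred memory policy into the
# persistent configuration of DOMAIN.
# Hypothetical wrapper; set VIRSH=echo to print the command instead of
# executing it.
set_preferred() {
    local domain=$1 nodeset=$2
    ${VIRSH:-virsh} numatune "$domain" --mode preferred --nodeset "$nodeset" --config
}
```

Running `VIRSH=echo set_preferred vm1 0` only prints the arguments that would be passed to virsh. Because of --config, the change applies to the stored guest definition and takes effect the next time the guest starts.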

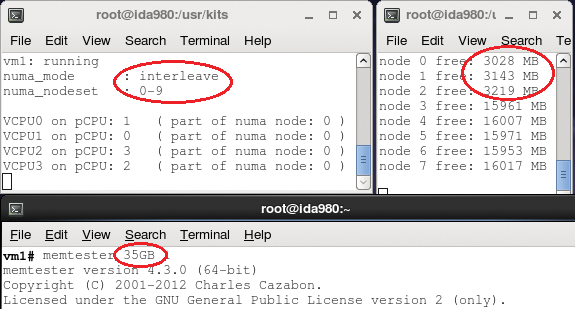

In Figure 4, the top left small terminal shows the preferred numa_mode as well as the vCPUs' bindings. The other small terminal displays the free memory per NUMA node. Observe that the memory comes from nodes 0 and 1. Note that the same test in strict mode leads to punishing the guest.

Interleave Memory

When a memory nodeset contains several NUMA nodes in strict or preferred policy, KVM uses a sequential algorithm to provide the memory to guests. When the first NUMA node in the nodeset is short of memory, KVM allocates pages from the second node in the list and so on. The problem with this approach is that the host memory becomes rapidly fragmented and other guests or processes may suffer from this fragmentation.

The interleave memory mode forces the hypervisor to provide equal chunks of memory from all the nodes of the nodeset at the same time. This approach ensures a much better use of the host memory, leading to better overall system performance. In addition to this smooth memory use, interleave mode does not punish any process in the case of a memory over-commitment. Instead, it behaves like preferred mode and provides memory from other nodes when the nodeset runs out of memory.

In Figure 5, the memtester program needs 35GB from a nodeset composed of nodes 0, 1, and 2 with a strict policy. The amount of memory provided by those three nodes is approximately 13, 15, and 7GB, respectively. In Figure 6, the same request with an interleave policy shows an equal memory consumption of roughly 11.5GB per node. The following XML fragment configures the interleave memory policy:

    <numatune>
      <memory mode='interleave' nodeset='0-2'/>
    </numatune>

Manual CPU Pinning

So far, optimization efforts have been related to guest memory and not to vCPU tuning. The cpuset XML attribute restricts the vCPUs to a set of pCPUs, but it does not state which vCPU runs on which pCPU.

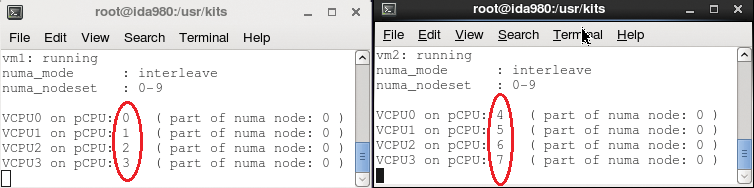

To specify a one-to-one relationship between vCPUs and pCPUs, you have to use the vcpupin element, which is a child of the <cputune> element. To pin the four vCPUs of vm1 to pCPUs 0, 1, 2, and 3 and the vm2 vCPUs to pCPUs 4, 5, 6, and 7, configure the guests as shown in Listing 3 (Figure 7).

Listing 3: Guest Configuration

    # grep cpu /etc/libvirt/qemu/vm[12].xml
    vm1.xml:  <vcpu placement='static'>4</vcpu>
    vm1.xml:  <cputune>
    vm1.xml:    <vcpupin vcpu='0' cpuset='0'/>
    vm1.xml:    <vcpupin vcpu='1' cpuset='1'/>
    vm1.xml:    <vcpupin vcpu='2' cpuset='2'/>
    vm1.xml:    <vcpupin vcpu='3' cpuset='3'/>
    vm1.xml:  </cputune>
    vm2.xml:  <vcpu placement='static'>4</vcpu>
    vm2.xml:  <cputune>
    vm2.xml:    <vcpupin vcpu='0' cpuset='4'/>
    vm2.xml:    <vcpupin vcpu='1' cpuset='5'/>
    vm2.xml:    <vcpupin vcpu='2' cpuset='6'/>
    vm2.xml:    <vcpupin vcpu='3' cpuset='7'/>
    vm2.xml:  </cputune>
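Writing these elements by hand becomes tedious for guests with many vCPUs. As an illustration only, the following sketch prints a <cputune> block that pins consecutive vCPUs to consecutive pCPUs; gen_vcpupin is a hypothetical helper, and the output still has to be pasted into the guest definition with virsh edit.

```shell
#!/bin/bash
# gen_vcpupin FIRST_PCPU VCPU_COUNT: print a <cputune> block that pins
# vCPU 0..VCPU_COUNT-1 to pCPU FIRST_PCPU..FIRST_PCPU+VCPU_COUNT-1.
# Hypothetical helper; it only prints XML and does not modify any guest.
gen_vcpupin() {
    local first=$1 count=$2 v
    echo "<cputune>"
    for ((v = 0; v < count; v++)); do
        printf "  <vcpupin vcpu='%d' cpuset='%d'/>\n" "$v" "$((first + v))"
    done
    echo "</cputune>"
}
```

Calling `gen_vcpupin 4 4` reproduces the vm2 pinning of Listing 3, with vCPUs 0-3 on pCPUs 4-7.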

Expose NUMA to Guests

Libvirt can expose a virtual NUMA topology to guests. You can use this feature to optimize scale-up of multiple applications (e.g., databases) that are isolated from each other in the guests by using cgroups or other container mechanisms.

Listing 4 shows four NUMA nodes (called cells in libvirt) in guest vm1, each with two vCPUs, 17.5GB of memory, and a global interleave memory mode from physical NUMA nodes 0-3.

Listing 4: NUMA Node Setup

    # grep -E 'cpu|numa|memory' /etc/libvirt/qemu/vm1.xml
    <memory unit='KiB'>73400320</memory>
    <vcpu placement='static'>8</vcpu>
    <numatune>
      <memory mode='interleave' nodeset='0-3'/>
    </numatune>
    <cpu>
      <numa>
        <cell cpus='0,1' memory='18350080'/>
        <cell cpus='2,3' memory='18350080'/>
        <cell cpus='4,5' memory='18350080'/>
        <cell cpus='6,7' memory='18350080'/>
      </numa>
    </cpu>
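The cell sizes in Listing 4 are simply the guest memory divided by the number of cells (73400320KiB / 4 = 18350080KiB). A sketch of that arithmetic follows; gen_numa_cells is a hypothetical helper, it assumes the memory divides evenly, and it writes the vCPU lists as ranges (0-1) rather than the comma form (0,1) used in the listing.

```shell
#!/bin/bash
# gen_numa_cells TOTAL_KIB CELLS VCPUS_PER_CELL: print a <numa> block that
# splits TOTAL_KIB of guest memory and the vCPUs evenly across CELLS cells.
# Hypothetical helper; integer division drops any remainder.
gen_numa_cells() {
    local total=$1 cells=$2 per=$3 c first last
    local mem=$(( total / cells ))
    echo "<numa>"
    for ((c = 0; c < cells; c++)); do
        first=$(( c * per ))
        last=$(( first + per - 1 ))
        printf "  <cell cpus='%d-%d' memory='%d'/>\n" "$first" "$last" "$mem"
    done
    echo "</numa>"
}
```

For the guest in Listing 4, `gen_numa_cells 73400320 4 2` prints four cells of 18350080KiB each, covering vCPUs 0-1, 2-3, 4-5, and 6-7.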

Once the guest has booted, the numactl -H command run in the guest returns the information shown in Listing 5.

Listing 5: Output of numactl -H

    # ssh vm1 numactl -H
    available: 4 nodes (0-3)
    node 0 cpus: 0 1
    node 0 size: 17919 MB
    node 0 free: 3163 MB
    node 1 cpus: 2 3
    node 1 size: 17920 MB
    node 1 free: 14362 MB
    node 2 cpus: 4 5
    node 2 size: 17920 MB
    node 2 free: 42 MB
    node 3 cpus: 6 7
    node 3 size: 17920 MB
    node 3 free: 16428 MB
    node distances:
    node   0   1   2   3
      0:  10  20  20  20
      1:  20  10  20  20
      2:  20  20  10  20
      3:  20  20  20  10

Note that the SLIT table in Listing 5 contains only dummy values populated arbitrarily by the hypervisor.

Automated Solution with numad

Manual tuning such as I've shown so far is very efficient and substantially increases guest performance, but the management of several virtual machines on a single hypervisor can rapidly become overly complex and time consuming. Red Hat realized that complexity and developed the user mode numad(8) service to automate the best guest placement on NUMA servers.

You can take advantage of this service by simply installing and starting it in the hypervisor. Then, configure the guests with placement='auto' and start them. Depending on the load and the memory consumption of the guests, numad sends placement advice to the hypervisor upon request.

To view this interesting mechanism, you can manually start numad in debug mode and look for the keyword "Advising" in the numad.log file by entering these three commands:

    # yum install -y numad
    # numad -d
    # tail -f /var/log/numad.log | grep Advising

Next, configure the guest with an automatic vCPU and memory placement. In this configuration, the memory mode must be strict. Theoretically, you incur no risk of guest punishment because, in automatic placement, the host can provide memory from all the nodes in the server upon numad's advice. Practically speaking, if a process requests too much memory too rapidly, numad does not have the time to tell KVM to extend its memory pool, and you bump into the OOM killer situation mentioned earlier. This issue is well-known and is being addressed by developers.

The following XML lines show how to configure the automatic placement:

    # grep placement /etc/libvirt/qemu/vm1.xml
    <vcpu placement='auto'>4</vcpu>
    <memory mode='strict' placement='auto'/>

As soon as you start the guest, two numad advisory items appear in the log file. The first one provides initialization advice, and the second says that the guest should be pinned on node 3:

    # virsh start vm1
    # tail -f /var/log/numad.log | grep Advising
    ...
    Advising pid -1 (unknown) move from nodes () to nodes (1-3)
    Advising pid 13801 (qemu-kvm) move from nodes (1-3) to nodes (3)

With memtester, you can test by consuming some memory in the guest and again watching the reaction of numad:

    # ssh vm1 /usr/kits/memtester/memtester 28G 1
    ...
    Advising pid 13801 (qemu-kvm) move from nodes (3) to nodes (2-3)

Here, numad advises extending the memory source for this guest by one NUMA node, from node 3 to nodes 2-3.
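When several guests are running, it can help to extract only the most recent advice for one process. The following sketch assumes the "Advising pid ... to nodes (...)" line format shown in the log excerpts above; latest_advice is a hypothetical helper name.

```shell
#!/bin/bash
# latest_advice PID: read numad log lines on standard input and print the
# node list from the last "Advising" line that concerns PID.
# Hypothetical helper; assumes the node list is the last field of the line.
latest_advice() {
    awk -v pid="$1" '
        /Advising pid/ {
            # Find the word "pid" so that any leading timestamp is ignored.
            for (i = 1; i < NF; i++)
                if ($i == "pid" && $(i + 1) == pid) target = $NF
        }
        END {
            if (target == "") exit 1     # no advice found for this PID
            gsub(/[()]/, "", target)     # "(2-3)" becomes "2-3"
            print target
        }
    '
}
```

Applied to the log lines above, `latest_advice 13801 < /var/log/numad.log` would print 2-3 after the memtester run.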

Conclusion

As the number of cores per CPU increases drastically, NUMA optimization matters for all multisocket servers. The Linux community understands this trend and, on a nearly day-to-day basis, adds new libvirt elements and attributes to optimize VM placement in the hypervisor. Manual NUMA optimization leads to the best results, but it can rapidly become tedious in terms of management and can lead to dangerous memory over-commitment situations.

An interesting automatic optimization alternative exists with numad, but this user mode service cannot take full advantage of some kernel data structures and does not provide the best response. To address this situation, a kernel-based solution called "automatic NUMA balancing" is under development and may appear in future Linux distributions with better results than numad.