Container virtualization comeback with Docker

Container Terminal

When they hear the term virtualization in an IT context, most admins almost automatically think of the standard tools such as Qemu, VMware, or Xen. What all of these solutions have in common is that they are full virtualizers that emulate entire systems. All the tools in this category impose a large overhead, even if your only need is to run individual programs in virtual environments.

Little Overhead

Container-based solutions prove that virtualization is possible with significantly less overhead. They simply lock processes into a virtual jail, avoiding the overhead of a separate operating system and making do with the resources provided by the host operating system. Nearly all operating systems have their own container implementations: FreeBSD has its jails, Virtuozzo was quite popular on Windows for a while, and, of course, Linux has containers, and several implementations at that: OpenVZ, LXC, and Linux VServer vie for the attention of users.

LXC (short for Linux Containers) in particular is remarkable: Once the subject of minor hype, this technology has largely disappeared from the headlines and become a side issue. It definitely didn't deserve this fate. LXC containers let you perform tasks for which virtualizing a complete operating system would be over the top. The LXC developers will probably appreciate the way their project has made it back into the limelight: Docker is currently spreading like wildfire in the community, and it is based on LXC features.

Containers as a Service?

The developers behind Docker have done the homework that the LXC developers arguably should have done. In doing so, they answered the question of why LXC never really caught on, probably without even being aware of it. On the one hand, the more famous full virtualizers mentioned earlier pack a powerful punch; on the other hand, it looks as though many users never fully understood what containers were good for.

Docker makes LXC more sexy: The stated aim of the project is to pack any application into a container so that the container can be distributed. The idea is brilliant. It shifts the technical details into the background and puts the focus on an easy-to-use service. For all intents and purposes, Docker supplements LXC with the kind of usability that LXC itself has always lacked.

Additionally, the tool has impressed many users – few have not heard of the solution in recent weeks. That's reason enough to take a closer look at Docker: How does the solution work, and how can it be used specifically to save work or at least to make things easier?

Docker relies exclusively on LXC as its back end. Asking about Docker's capabilities is thus equivalent to asking what features LXC includes. LXC is, at first sight, nothing more than a collection of functions offered by the Linux kernel for sandboxing purposes.

Cgroups

Two functions play a prominent role: Cgroups and namespacing. Cgroups stands for Control Groups and describes a Linux kernel function that lets you define groups of processes and then limit the resources available to these groups. The function primarily relates to hardware: For a Cgroup, you can specify how much RAM, CPU time, or disk I/O the group is allowed to use. The list of available criteria is, of course, far longer than these examples.

Cgroups became an integral part of the Linux kernel in version 2.6.24, and over the years, the kernel developers have significantly expanded the Cgroup functions. Besides the above-mentioned resource quotas, Cgroups can now also be prioritized and managed externally.
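To see Cgroups at work on a running system, you can ask the kernel which groups a process belongs to. The following is only a minimal sketch, assuming a Linux host that exposes /proc/<PID>/cgroup; the function name show_cgroups is made up for illustration and is not part of LXC or Docker.

```shell
#!/bin/sh
# show_cgroups is a hypothetical helper name, not part of any tool.
# It prints the control groups a process belongs to (default: the current process).
# Each output line has the form hierarchy-ID:controller-list:cgroup-path.
show_cgroups() {
    pid="${1:-self}"
    file="/proc/$pid/cgroup"
    if [ -r "$file" ]; then
        cat "$file"
    else
        echo "no cgroup information for PID $pid" >&2
        return 1
    fi
}

# Show the groups of the current process; carry on if /proc is not available.
show_cgroups || true
```

Called with the PID of a process that runs inside a container, the function typically reports a container-specific cgroup path instead of the host's default group.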

Namespacing

Namespacing also plays a central role in Linux when it comes to security. Cgroups do not primarily exist to demarcate processes; instead, they tend to manage resources. Security aspects are the domain of namespaces: Namespaces can be used to hide individual processes or Cgroups from other processes or Cgroups.

This technology is also highly granular: Separate namespaces exist for process IDs, network access, the hostname, mountpoints, and interprocess communication (IPC). Network namespaces are now quite popular, for example, to separate packets from multiple users on the same host. A process within a namespace cannot see, and certainly cannot tap into, either the host interface or the interfaces in the namespaces of other users.
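The kernel exposes the namespaces of each process as links below /proc/<PID>/ns, which makes the separation easy to inspect. The sketch below assumes a reasonably recent Linux kernel that provides this directory; show_namespaces is a hypothetical helper name chosen for this example.

```shell
#!/bin/sh
# show_namespaces is a hypothetical helper name used for illustration.
# It prints one line per namespace type for a process (default: this shell)
# in the form "type -> type:[identifier]".
show_namespaces() {
    pid="${1:-$$}"
    for ns in /proc/"$pid"/ns/*; do
        # Skip the unexpanded pattern if the process or directory does not exist.
        [ -L "$ns" ] || continue
        printf '%s -> %s\n' "${ns##*/}" "$(readlink "$ns")"
    done
}

show_namespaces
```

Two processes share a namespace of a given type when both report the same identifier for it; a containerized process shows identifiers that differ from those of the host's shell.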

Although Cgroups and namespaces are nice features in their own right, they become an attractive virtualization technology as a team. The ability to combine processes in manageable groups and then restrict what those groups can see and use leads to a simple but effective container approach. LXC offers these functions, and Docker builds on them.

Portable Containers

Docker, however, adds many practical features on top. Probably the most important function is portable containers. In Docker, moving existing containers between two hosts is easy. In pure LXC, this action is more like a stunt; ultimately, it involves manually moving the files – not exactly a convenient operation. Additionally, a user has no guarantee that the whole thing will actually be successful. Containers in "pure" LXC are highly dependent on the environment in which they run (e.g., system A). If target system B is not at least similar, the game is over before it even gets started. The problem here involves various factors, such as the distribution used or the hardware available for the container.

The Docker developers have designed their own container format to make things easier. Docker abstracts the resources that a container sees and takes care of communication with the physical system itself. The services that run within a Docker container therefore always see the same system. If you now move a container from one system to another, Docker handles most of the work. The user exports the container to the Docker format invented for this purpose, copies the file to another computer, and restores the container in Docker there.
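The export and restore steps could look roughly like the following sketch, which wraps the docker export and docker import commands in two small functions. The function names, the container name webapp, the archive name, and the image name are all hypothetical; the DOCKER variable is only a convenience for pointing the functions at a different client binary or wrapper.

```shell
#!/bin/sh
# DOCKER names the client binary; override it to use a wrapper or another path.
DOCKER="${DOCKER:-docker}"

# On the source host: write a container's filesystem to a tar archive.
export_container() {
    container="$1"; archive="$2"
    $DOCKER export "$container" > "$archive"
}

# On the target host: read the archive from stdin and store it as an image.
import_container() {
    archive="$1"; image="$2"
    $DOCKER import - "$image" < "$archive"
}

# Example with hypothetical names (not executed here):
#   export_container webapp webapp.tar          # on host A
#   scp webapp.tar hostB:                       # copy the archive across
#   import_container webapp.tar webapp:moved    # on host B
```

Note that docker export captures the filesystem only. Settings such as the default command are not part of the archive, so you need to state the command again when you start a container from the imported image.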

The Docker Philosophy

If you look more closely at Docker, you will quickly realize that the Docker developers have different basic views on containers than, for example, the people behind LXC. The Docker developers themselves describe their approach as application-centric. This means the application running within a Docker container is the really important aspect, not the container itself.

LXC is popular in developer circles, because an LXC container can be used as a fast-booting substitute for a complete virtual machine. In Docker, this is not the real issue; instead, the idea is that a container can be a small but friendly environment for almost any application. Against this background, it becomes clear what the portable container feature is all about: The core motivation in inventing this feature was the ability to move apps quickly from one host to another as appliances.

Do-It-Yourself Images

Incidentally, Docker also helps users prepare their own images. A container is usually nothing more than the entire filesystem of a Linux installation, and Docker can easily convert any directory into a Docker image. If you prefer a more spartan approach, you can, for example, create a lightweight system on a Debian or Ubuntu host using debootstrap. You simply install the dependencies for your application, install the application itself, and then hand over the folder to Docker when you're done. This process gives you an image that can be distributed freely. Building an image Docker-style is simple on top of that, because a single docker import call does the job:

debootstrap precise ./rootfs; tar -C ./rootfs -c . | docker import - ubuntu/mybase

And, presto, you have an image.

The Goal: PaaS

With its portfolio of functions, Docker fits very well into an IT scene dominated by cloud computing and everything-as-a-service. Ultimately, Docker's goal is clear: By using prefabricated containers that can be distributed at will on the network, admins can easily take platform-as-a-service applications to the people. The PaaS concept suits Docker because it also focuses on the application rather than the operating system on which that application runs.

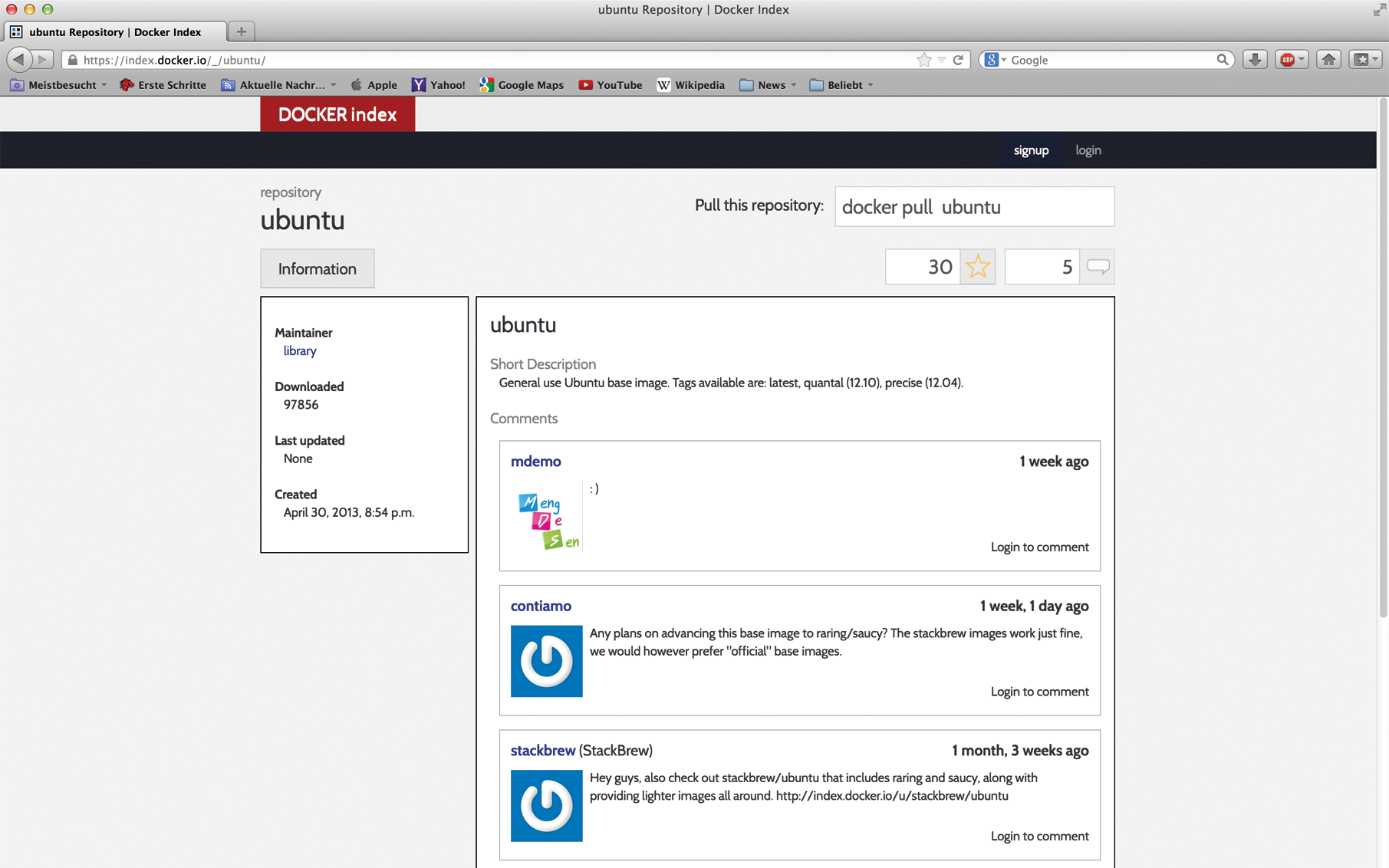

Additionally, a Docker Index service (Figure 1) now acts as a kind of container marketplace. Users who have built a container for a particular purpose can upload it to the index to make it available to other users. Participation in the Docker Index is free; you just need to register.

The number of different images that can be found in the Docker Index is impressive. In addition to basic images for virtually all popular distributions, it includes ready-to-run custom images, such as multiple Tomcat images that are used to start an entire Java Tomcat instance at the click of a mouse. Docker images are already available for MySQL, Apache, and Drupal as prebuilt platforms and even for some OpenStack components. New images are being added every day. These choices help lower the barrier to entry: Once you have installed Docker, it does not take long to deploy your program.

Good Thinking: Automatic Build

For the Docker developers, PaaS obviously does not end when a user starts a container and then installs an application in it. They also want to make this last step easier for users. Thus, an automated build system is integrated directly into Docker; it is aimed primarily at program developers who would like to distribute their apps as Docker containers. Dockerfiles provide the ability to configure a container in detail.

Developers can also use Dockerfiles to automate the process of creating Docker containers. This would be useful, for example, if you wanted to offer a snapshot version of your own application in addition to a stable version. In this case, the snapshot version would check out the current GitHub version of the program every night and build a complete Docker image from it. The Docker documentation [1] has more details on Dockerfiles.
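A nightly snapshot build along these lines might be sketched as follows. The script writes a small Dockerfile and then calls docker build; the function names, the repository URL, the start script, and the image name myapp are all hypothetical placeholders, and the base image is simply assumed to be a stock Ubuntu image.

```shell
#!/bin/sh
# DOCKER names the client binary; override it to use a wrapper or another path.
DOCKER="${DOCKER:-docker}"

# Write a small Dockerfile into a build directory (default: current directory).
write_dockerfile() {
    dir="${1:-.}"
    mkdir -p "$dir"
    cat > "$dir/Dockerfile" <<'EOF'
# Start from a stock Ubuntu base image
FROM ubuntu
# Install Git so the build can fetch the application source
RUN apt-get update && apt-get install -y git
# Hypothetical repository URL; replace it with your own project
RUN git clone https://github.com/example/myapp.git /opt/myapp
# Hypothetical start script that runs when a container is launched
CMD ["/opt/myapp/run.sh"]
EOF
}

# Build an image whose tag contains today's date.
# --no-cache forces every step to run again, so the clone fetches fresh code.
build_snapshot() {
    dir="${1:-.}"
    $DOCKER build --no-cache -t "myapp:snapshot-$(date +%Y%m%d)" "$dir"
}
```

A cron job that calls write_dockerfile once and build_snapshot every night would produce one dated snapshot image per day, while the stable image keeps its own tag.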

Version Control

Another treat for anyone interested in using Docker containers in a production environment is Docker's versioning system. Docker's developers have basically implemented a small clone of Git that is customized for Docker operation. It includes many commands that are reminiscent of Git: docker commit, docker diff, and docker history are just a few examples.

These functions ultimately ensure that you do not need a complete copy of the original image for each new version. Depending on the containers, keeping full copies would quickly use up too much space. Docker circumvents the problem very elegantly by managing a local "container repository," in which changes can be committed at any time. Using the previously described automated build function, you can generate a finished container file for distribution from a specific version of an image whenever you need one. All told, container handling is thus implemented in a very elegant way.
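The three commands mentioned above can be combined into a small workflow, sketched here under the assumption that a container has been modified and its state should be kept as a new image version. The function name and the example names in the usage line are hypothetical.

```shell
#!/bin/sh
# DOCKER names the client binary; override it to use a wrapper or another path.
DOCKER="${DOCKER:-docker}"

# Record the current state of a container as a new image version.
snapshot_container() {
    container="$1"; image="$2"; message="$3"
    # List files added (A), changed (C), or deleted (D) relative to the
    # image the container was created from
    $DOCKER diff "$container"
    # Store those changes as a new layer on top of the original image
    $DOCKER commit -m "$message" "$container" "$image"
    # Show the chain of layers the resulting image consists of
    $DOCKER history "$image"
}

# Example with hypothetical names (not executed here):
#   snapshot_container webapp myapp:v2 "add production config"
```

Because each commit stores only the differences, the history output grows by one layer per commit rather than by one full filesystem copy.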

The Docker API

Elegance is also evident in the Docker API, the central switchboard of a Docker installation, which follows RESTful principles. In the background, the API is responsible for ensuring that docker commands entered at the command line are actually carried out. Consequently, all the Docker commands are ultimately API calls; the Docker API does the real work.
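You can talk to the API directly without the docker client, for example with curl. The sketch below assumes that the daemon listens on the Unix socket /var/run/docker.sock, that your user may access that socket, and that your curl build supports the --unix-socket option; api_get is a hypothetical helper name.

```shell
#!/bin/sh
# Path of the daemon's Unix socket; adjust it if your installation differs.
DOCKER_SOCKET="${DOCKER_SOCKET:-/var/run/docker.sock}"

# Send a GET request for the given path to the Docker daemon and print the JSON reply.
api_get() {
    curl --silent --unix-socket "$DOCKER_SOCKET" "http://localhost$1"
}

# Examples (not executed here; they need a running daemon and socket access):
#   api_get /version           # daemon version information
#   api_get /containers/json   # running containers, comparable to `docker ps`
#   api_get /images/json       # local images, comparable to `docker images`
```

Any front end, graphical or otherwise, can build on the same requests, which is exactly what makes the API-centered design attractive.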

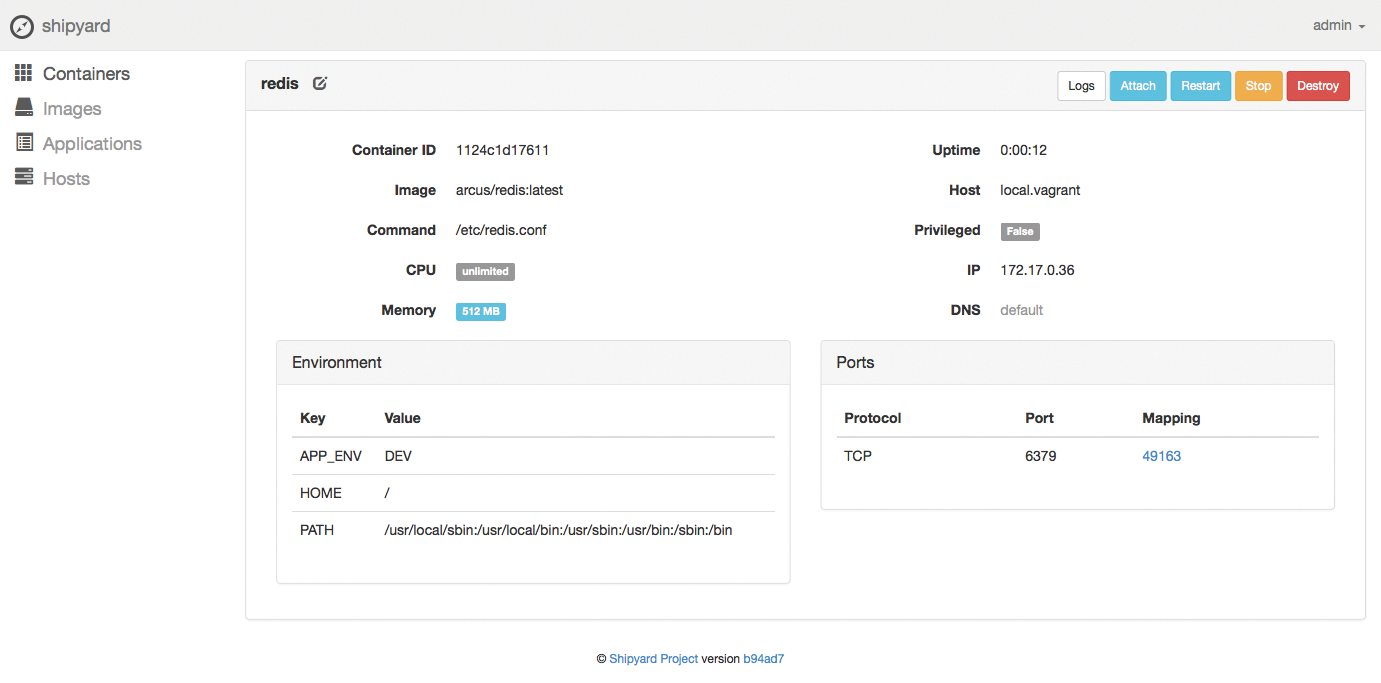

The advantage of such an architecture is obvious; with the API as an abstract command receiver in the background, there are few limits to what you can do in terms of developing front ends. All major cloud computing solutions rely on similar designs, and the principle has asserted itself in Docker, as in Amazon's EC2 or OpenStack. Besides the docker command-line tool, a GUI by the name of DockerUI [2] is also available (Figure 2), and a competitor known as Shipyard [3] vies for the favor of users, as well (Figure 3).

Cloud Integration

With its capabilities, Docker is eminently well qualified for more complex tasks. If you do not need a fully virtualized system but merely a matching container – for example, for development purposes – you can benefit greatly from Docker. It is only logical to integrate Docker with typical cloud and virtualization solutions – and with other tools that swim in the wake of the major cloud environments.

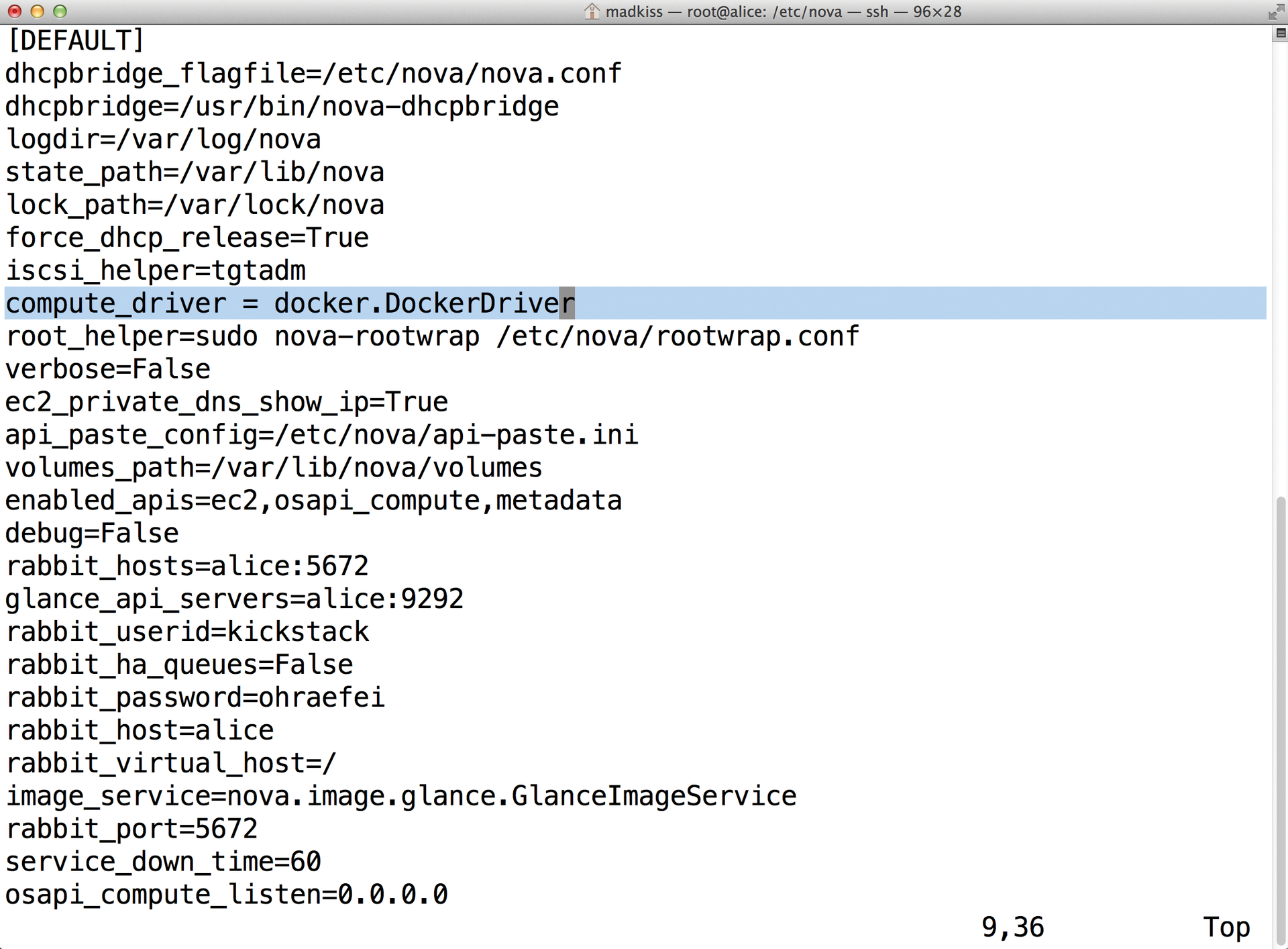

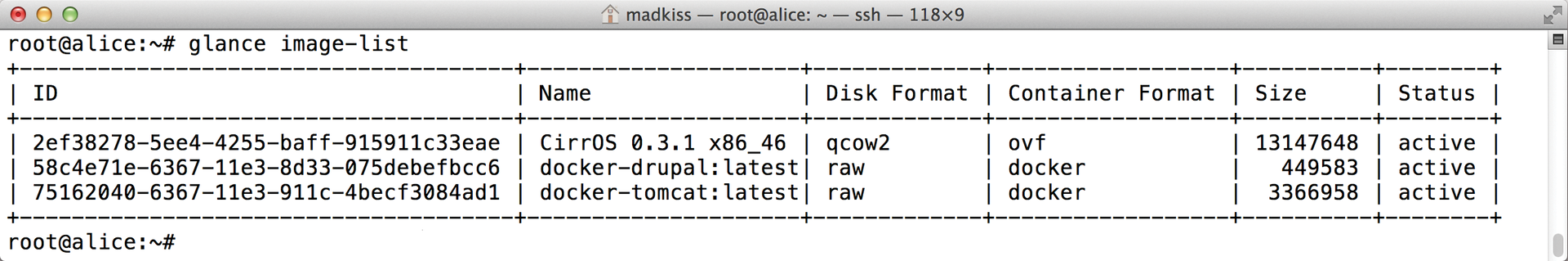

OpenStack very clearly dominates the market for open source clouds at the moment, a fact that has evidently not escaped the Docker developers' attention. After all, the OpenStack virtualization component, Nova [4], now comes with support for Docker.

Nova is basically modular, and the virtualization technology to be used can be enabled by a plugin. Thus, a simple entry in nova.conf decides which technology the OpenStack component uses in the background. The choices are, for example, KVM, Hyper-V, VMware, and, more recently, Docker (Figures 4 and 5). With Docker enabled, OpenStack does not launch a complete VM in the background; instead, it creates a Docker container that offers its functionality as a virtual system. Docker integration is seamless, so at first glance you do not see the difference between running a full VM under KVM and running a Docker container. In detail, however, quality differences do exist.

These differences partly relate to the requirements that Docker imposes on itself. Because the application always seeks to present the same system externally, as described previously, it needs to go through some contortions internally. This approach requires a high degree of adaptability with respect to various factors such as the network.

In the Havana release of OpenStack, the Docker driver in Nova only supports the old network stack (nova-network), which will probably be removed in the next release. A bug report in Launchpad makes me suspect that this limitation is a bug and not a feature that has been deliberately left unimplemented [5]. Anyone using OpenStack with the forward-looking Neutron SDN stack cannot turn to Docker for the time being. However, precisely because Docker is currently all the rage, you can assume that this issue will be remedied in the foreseeable future. That said, the limitation makes it hard to judge the maturity of the Docker implementation in Nova.

Conclusions

Docker extends LXC, adding a number of useful features that make the software more attractive. The developers have designed Docker around one requirement: Applications in the Docker container format should be quick and easy to distribute and capable of running on any system.

However, in reality, Docker will tend to be especially interesting for those setups in which virtualization is desired, but without the overhead of a fully virtualized system.

Docker offers an attractive middle ground here, and it's indeed no coincidence that the software has many fans and is experiencing some hype. Especially in combination with a lean OpenStack setup, Docker could develop into a really useful tool for many tasks. It already comes with many practical tools in its own right.

A working OpenStack setup, however, relies on the Docker driver for Nova, and this driver should not depend on a network stack from the Iron Age. If you want to run Docker without tying into frameworks such as OpenStack, you will find that it is already a reliable tool today.

Docker is reliable primarily because the Docker API is very versatile, supporting, for example, seamless integration with automation tools like Puppet and Chef, in addition to the features mentioned earlier. Overall, Docker has what it takes to become an admin's darling and is definitely worth a closer look.