Memory management on high-end systems

Memory Hogs

Modern software such as large databases runs on Linux machines that often provide hundreds of gigabytes of RAM. Systems that run the SAP HANA database in production, for example, can have up to 4TB of main memory [1]. Given these sizes, you might expect memory bottlenecks to be a thing of the past, but the experience of users and vendors of such software solutions shows that the problem is not yet completely solved and still needs attention.

Even well-tuned applications can lose performance under certain conditions because not enough memory is available to them. The standard remedy in such situations, adding more RAM, does not always solve the problem. In this article, we first describe the problem in more detail, analyze its background, and then test possible solutions.

Memory and Disk Hogs

Many critical computer systems, such as SAP application servers or databases, primarily require CPU and main memory. Their disk access is rare and optimized. Copying large files in parallel should therefore have little effect on such applications, because the copy job uses different resources.

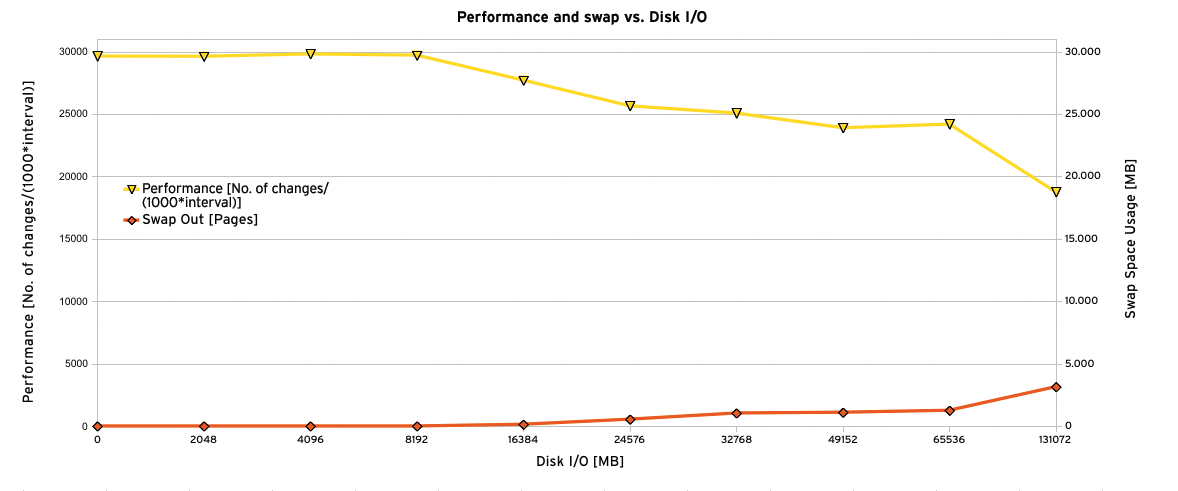

Figure 1 shows, however, that this assumption does not hold. The diagram shows how the (synthetic) throughput of an SAP application server changes when disk-based operations run in parallel. As the 100 percent reference value, we performed a test cycle without parallel disk access. In each of the subsequent test runs, the dd command wrote a file of the specified size to the hard disk.

On a system in a stable state, file operations initially do not affect throughput, but beyond a certain file size (such as 16,384MB in this test), performance collapses. As Figure 1 shows, the system's throughput drops by nearly 40 percent as the file size increases. Although the figures are likely to differ in normal operation, a significant problem clearly exists.

Such behavior is common in daily operation: when a backup moves data in parallel, during overnight jobs, or generally whenever large files are copied. A closer look at these situations shows that paging increases at the same time (Figure 1). It therefore seems that, under certain circumstances, heavy disk access by processes that need little CPU and memory can hurt the performance of applications that rarely access the disks at all.
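To check whether paging really rises while a large file is written, you can compare the kernel's swap counters before and after the copy. The following Python sketch is only an illustration, not the tooling behind Figure 1. It assumes the Linux /proc/vmstat format of one `name value` pair per line, in which `pswpin` and `pswpout` count pages swapped in and out since boot; the function names are hypothetical.

```python
"""Sketch: measure swap activity around a disk-heavy job via /proc/vmstat."""


def parse_vmstat(text):
    """Turn 'name value' lines into a dict of integer counters."""
    counters = {}
    for line in text.splitlines():
        name, _, value = line.partition(" ")
        value = value.strip()
        if value.isdigit():
            counters[name] = int(value)
    return counters


def read_vmstat(path="/proc/vmstat"):
    """Take a snapshot of the current counters (Linux only)."""
    with open(path) as f:
        return parse_vmstat(f.read())


def swap_delta(before, after):
    """Pages swapped in and out between two snapshots."""
    return {key: after.get(key, 0) - before.get(key, 0)
            for key in ("pswpin", "pswpout")}
```

A typical use is to call `read_vmstat()`, run the dd job, call `read_vmstat()` again, and pass both snapshots to `swap_delta()`. A large `pswpout` value during the copy indicates that application pages are being pushed out.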

Memory Anatomy

The drop in throughput with increasing file size is easiest to understand by looking at how the Linux kernel manages main memory. Linux, like all modern systems, distinguishes between virtual memory, which the operating system and applications see, and physical memory, which is provided by the machine's hardware, the hypervisor, or both [2] [3]. Both forms of memory are organized into units of equal size. In virtual memory, these units are called pages; in physical memory, they are called page frames. On modern systems, both are still often 4KB in size.

The hardware architecture also determines the maximum size of virtual memory: On a 64-bit architecture, the virtual address space can be at most $2^{64}$ bytes, even though current implementations from Intel and AMD support only $2^{48}$ bytes [4].
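For a sense of scale, the following worked figures assume 4KB pages ($2^{12}$ bytes) and read the 4TB of main memory mentioned at the beginning as 4 TiB ($2^{42}$ bytes):

$$
\begin{aligned}
2^{64}\ \text{bytes} &= 16\ \text{EiB} && \text{(limit of a 64-bit address space)}\\
2^{48}\ \text{bytes} &= 256\ \text{TiB} && \text{(48-bit virtual addresses)}\\
2^{48} / 2^{12} &= 2^{36} \approx 6.9 \times 10^{10} && \text{(4KB pages in a 48-bit address space)}\\
2^{42} / 2^{12} &= 2^{30} \approx 1.07 \times 10^{9} && \text{(4KB page frames in 4 TiB of RAM)}
\end{aligned}
$$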

Virtual and Physical

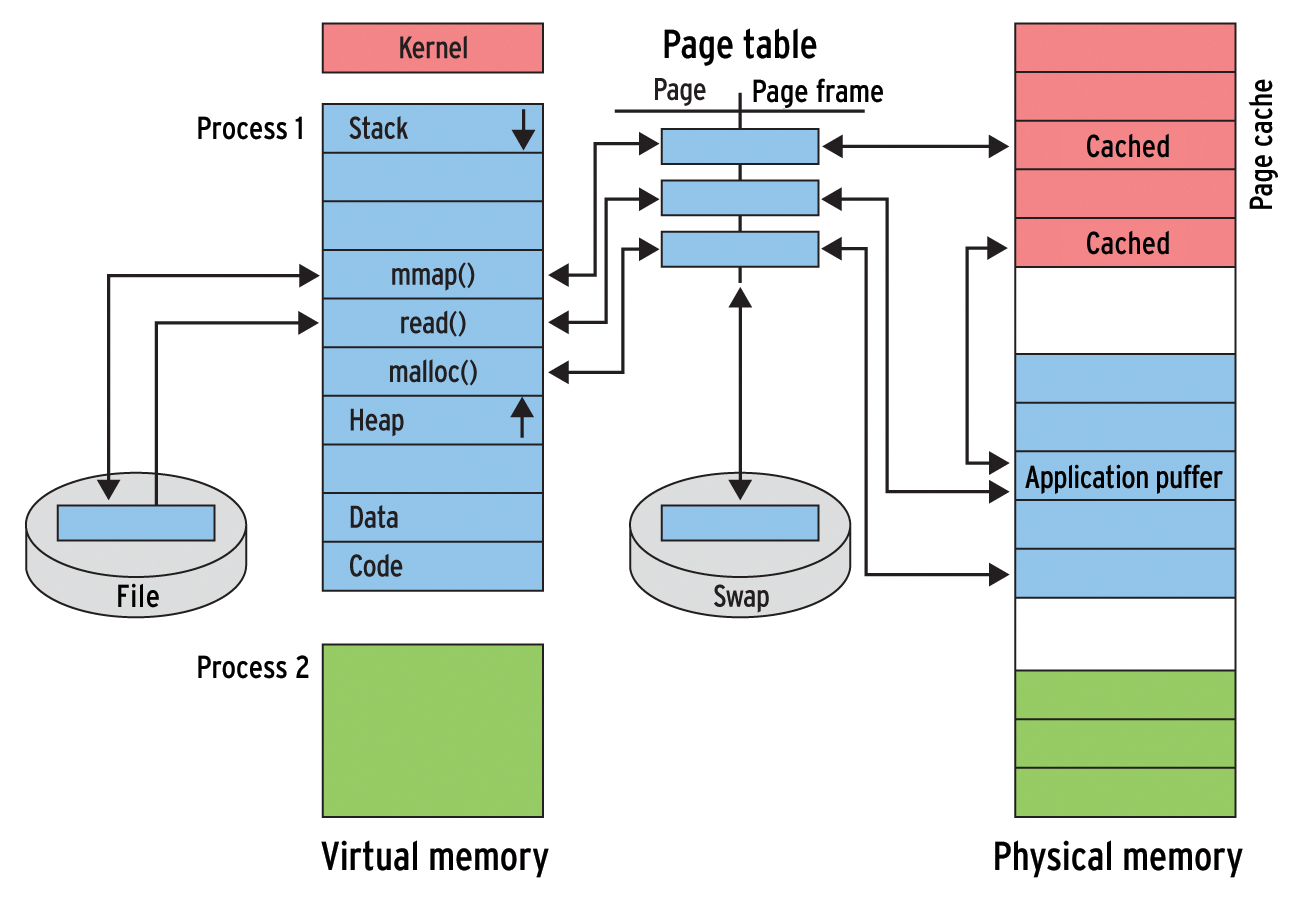

Whenever software, including in this case the Linux kernel itself, accesses memory, its virtual addresses must be translated into addresses in physical memory. Process-specific page tables perform this mapping: For each page of virtual memory, they record the page frame in which its content is physically stored (Figure 2).
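The translation can be illustrated with a deliberately simplified model. This Python sketch assumes a single-level table stored as a dictionary from virtual page numbers to page frame numbers; real x86_64 page tables are multi-level structures walked by the hardware, and `translate()` and `PageFault` are hypothetical names.

```python
"""Toy model of page-table translation with 4KB pages (illustration only)."""

PAGE_SHIFT = 12                 # 4KB = 2**12 bytes
PAGE_SIZE = 1 << PAGE_SHIFT


class PageFault(Exception):
    """Raised when a virtual page has no page frame mapped."""


def translate(page_table, vaddr):
    """Map a virtual address to a physical one via a {page: frame} table."""
    page, offset = vaddr >> PAGE_SHIFT, vaddr & (PAGE_SIZE - 1)
    try:
        frame = page_table[page]
    except KeyError:
        raise PageFault(hex(vaddr)) from None
    # The offset inside the page stays the same; only the page number changes.
    return (frame << PAGE_SHIFT) | offset
```

For example, with the table `{0x12345: 0x42}`, the virtual address `0x12345678` lands in page frame `0x42` at offset `0x678`, which gives the physical address `0x42678`.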

While an application's virtual address space is divided into sections for code, static data, dynamic data (heap), and the stack, the operating system claims larger areas of page frames for its internal caches [1]. One of the key caches is the page cache, which at first is simply a memory area in which certain pages are kept temporarily, above all the pages involved in file access. If these pages are in main memory, the system can avoid frequent access to the slow disks. For example, when a process calls read() on a file, the Linux kernel first checks whether the requested data is already in the page cache. If so, it reads the data from there.
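The lookup order of read() can be sketched as a toy cache. This is an illustrative Python model, not kernel code: a dictionary keyed by (file, page index) stands in for the disk, and the class and method names are hypothetical.

```python
"""Toy model of the page-cache lookup performed on read() (illustration only)."""


class ToyPageCache:
    def __init__(self, disk):
        self.disk = disk      # {(file, page_index): bytes}, stands in for the slow disk
        self.pages = {}       # pages currently held in the cache (RAM)
        self.hits = 0
        self.misses = 0

    def read_page(self, file, index):
        key = (file, index)
        if key in self.pages:     # fast path: data already in the page cache
            self.hits += 1
        else:                     # slow path: fetch from "disk", then keep it cached
            self.misses += 1
            self.pages[key] = self.disk[key]
        return self.pages[key]
```

Reading the same page twice costs one disk access and one cache hit; note that nothing in this model ever shrinks the cache, which mirrors the kernel's tendency to let it grow.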

Faster Reads

The page cache is thus a cache for file data held in pages. It speeds up file access by orders of magnitude, which is why Linux makes heavy use of it and always tries to keep it as large as possible [5].
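To see how large the page cache currently is, you can read the `Cached` field of /proc/meminfo, which on Linux also counts shared-memory (tmpfs) pages. The Python sketch below assumes the usual `Key: value kB` line format; the helper names are hypothetical.

```python
"""Sketch: how much of the RAM does the page cache occupy? (Linux /proc/meminfo)"""


def parse_meminfo(text):
    """Return {field: number}; most fields are in kB, HugePages_* are plain counts."""
    info = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        fields = rest.split()
        if fields and fields[0].isdigit():
            info[key.strip()] = int(fields[0])
    return info


def read_meminfo(path="/proc/meminfo"):
    with open(path) as f:
        return parse_meminfo(f.read())


def page_cache_share(info):
    """Fraction of total RAM currently used by the page cache ('Cached' field)."""
    return info["Cached"] / info["MemTotal"]
```

Calling `page_cache_share(read_meminfo())` repeatedly during a large copy shows the cache growing at the expense of free memory.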

Two other areas, which were managed separately in older Linux versions (kernel 1.x and 2.x), are now integrated into the page cache. The first is the buffer cache, which manages blocks belonging to disks and other block devices in main memory. Today, however, the Linux kernel no longer uses blocks as its storage units, but pages. The buffer cache therefore only stores the mapping between pages and disk blocks, which is needed because blocks are not necessarily the same size as pages. Today it plays only a minor role.

The second area is the swap cache, which also caches pages that come from a disk. However, it does not handle pages from regular files but pages from the swap device: the anonymous pages. When a page has been swapped out to the swap partition and is later read back into memory, it is initially just an ordinary page read from disk, so it makes sense to cache it.

In practice, the swap cache mainly contains pages that have been swapped in but not modified since. Using the swap cache, Linux can tell whether swapping such a page out again actually requires a write to the swap space: As long as the page is unchanged, its copy on the swap device is still valid. One of the swap cache's most important tasks is therefore to avoid write accesses, which clearly distinguishes it from the actual page cache, whose main purpose is to accelerate reads [5].
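The write-avoidance logic can be illustrated with a toy model. This Python sketch is an assumption-laden simplification, not the kernel's data structures: pages are identified by hypothetical string labels, and a set stands in for the swap cache.

```python
"""Toy model of the swap cache's job: avoid rewriting unchanged pages."""


class ToySwap:
    def __init__(self):
        self.slots = {}          # page -> content on the swap device
        self.swap_cache = set()  # pages in RAM whose swap copy is still valid
        self.writes = 0          # write operations to the swap device

    def swap_in(self, page):
        """Read a page back; it stays in the swap cache while unmodified."""
        self.swap_cache.add(page)
        return self.slots[page]

    def swap_out(self, page, content, dirty):
        """Evict a page from RAM, writing it to swap only if necessary."""
        if page in self.swap_cache and not dirty:
            self.swap_cache.discard(page)  # swap copy still valid: just drop the page
            return
        self.swap_cache.discard(page)
        self.slots[page] = content
        self.writes += 1
```

Swapping out a page that was swapped in and never modified costs no I/O at all; only a modified page has to be written again.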

When disk access is heavy, the kernel adapts the page cache to meet the demand. In the test outlined above, the page cache grows as the file being written grows. However, because the number of available page frames is limited (to 10GB of free memory in the test), this growth comes at the expense of the pages that applications can keep in physical memory.

Old Pages

As the page cache grows, the kernel's page replacement strategies [1] try to free up space. Linux uses a simplified LRU (least recently used) strategy for this: It identifies candidates for eviction by looking for pages that have not been used for a relatively long time. The kswapd kernel thread handles the actual swapping.

Unfortunately, many application-specific buffer areas fall into this category. A typical SAP application server can manage many gigabytes in these buffers and temporary areas. Because the requests are so diverse, however, large parts of these areas are accessed neither frequently nor at short intervals, although they are still used from time to time: The locality of the requests is low.

Depending on how aggressively it swaps, the Linux kernel sacrifices these pages to let the page cache grow and swaps them out. This degrades application performance, because the application's accesses to these pages can then no longer be served directly from main memory, but only after the pages have been swapped back in from swap space.

These considerations explain the empirically observed behavior discussed previously. When backups or copying large files cause substantial disk access, the operating system can swap out application buffer pages in favor of a larger page cache. Afterward, whenever the application accesses the swapped-out memory, the access is slower. Installing additional memory modules to increase physical memory does not fundamentally solve the problem; at best, it postpones it.
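The displacement effect can be reproduced with a toy LRU model. This is a plain LRU list in Python for illustration only; the real kernel traditionally approximates LRU with separate active and inactive lists, and the class name and page labels below are hypothetical.

```python
"""Toy LRU replacement: streaming file pages push out idle application pages."""
from collections import OrderedDict


class LRUMemory:
    def __init__(self, frames):
        self.frames = frames             # number of physical page frames
        self.resident = OrderedDict()    # page -> None, least recently used first
        self.evicted = []                # pages pushed out, in eviction order

    def access(self, page):
        if page in self.resident:
            self.resident.move_to_end(page)   # now the most recently used page
            return
        if len(self.resident) == self.frames:
            victim, _ = self.resident.popitem(last=False)  # least recently used page
            self.evicted.append(victim)
        self.resident[page] = None
```

With three frames, touching two application buffers once and then streaming three file pages evicts both buffers, even though the application still needs them later: exactly the pattern of a backup running next to a rarely active application server.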

Finding Solutions

The background from the previous sections shows that the observed performance degradation is due to the lack of physical memory. Possible solution approaches can be classified in terms of how they ensure sufficient space for an application in physical memory:

- Focusing on your own application: Preventing your own pages from being swapped out.

- Focusing on other applications: Reducing or limiting the memory usage of other applications.

- Focusing on the kernel: Changing the way the kernel manages physical memory.

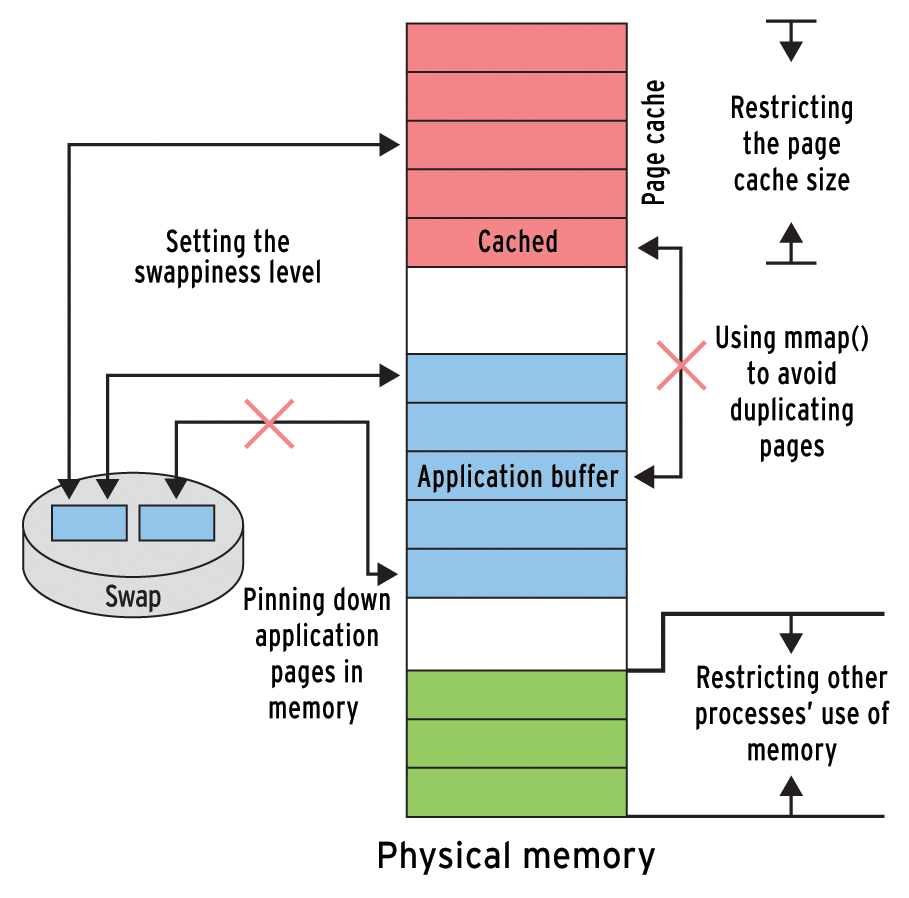

These different approaches are summarized graphically in Figure 3.

Your Own Application

One very obvious measure against memory problems is to keep your application small and always release memory it no longer needs. However, this practice usually requires complex, application-specific planning. From a developer's point of view, it may therefore be tempting to prevent your application's pages from being swapped out of physical memory. Some methods for doing so require changes to the code; others are transparent to the application and require just a little administrative work.

The well-known mlock() system call [6] belongs to the first group. It lets you explicitly lock a range of the virtual address space into physical memory. Swapping is then no longer possible, even in exceptional cases where it might be justified. Additionally, this approach requires special care, especially in cross-platform programming, because mlock() can impose requirements on the alignment of the memory areas it locks. These constraints, and the need to intervene explicitly in the program code, make mlock() unsuitable in many environments.
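What such an intervention looks like can be sketched in Python through ctypes. This is a minimal sketch assuming Linux and a glibc- or musl-style C library that exports `mlock()` and `munlock()`; the helper names are hypothetical. An anonymous mapping is used because it always starts on a page boundary, which sidesteps the alignment issue.

```python
"""Sketch: pin a page-aligned buffer in RAM with mlock() via ctypes (Linux)."""
import ctypes
import ctypes.util
import errno
import mmap

# find_library() may return None; CDLL(None) then uses the already loaded symbols.
libc = ctypes.CDLL(ctypes.util.find_library("c"), use_errno=True)
for name in ("mlock", "munlock"):
    fn = getattr(libc, name)
    fn.argtypes = [ctypes.c_void_p, ctypes.c_size_t]
    fn.restype = ctypes.c_int

PAGE_SIZE = mmap.PAGESIZE


def round_up_to_page(nbytes):
    """mlock() works on whole pages; round the length up to a page multiple."""
    return (nbytes + PAGE_SIZE - 1) // PAGE_SIZE * PAGE_SIZE


def try_lock(nbytes):
    """Map an anonymous region and try to lock it into RAM.

    Returns 0 on success, otherwise the errno reported by mlock(), typically
    EPERM or ENOMEM when RLIMIT_MEMLOCK is too small and CAP_IPC_LOCK is missing.
    """
    length = round_up_to_page(nbytes)
    region = mmap.mmap(-1, length)              # anonymous, starts on a page boundary
    anchor = ctypes.c_char.from_buffer(region)  # exposes the region's address
    addr = ctypes.addressof(anchor)
    try:
        if libc.mlock(addr, length) != 0:
            return ctypes.get_errno()
        # ... the locked pages cannot be swapped out while we work with them ...
        libc.munlock(addr, length)
        return 0
    finally:
        del anchor        # release the buffer export so the mapping can be closed
        region.close()
```

The error path matters in practice: unprivileged processes can lock only as much memory as RLIMIT_MEMLOCK allows, which is one more reason mlock() needs administrative preparation.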

Another approach, which also requires intervention with the code and system administration, relies on huge pages, which the Linux kernel has in principle supported since 2003 [7]. The basic idea behind huge pages is simple: Instead of many small pages (typically 4KB), the operating system uses fewer but larger pages – for example, 2MB on x86_64 platforms.

This approach reduces the number of page-to-page-frame mappings, potentially speeding up memory access noticeably. Because of the way they are implemented, huge pages cannot be swapped out by Linux, so they also help protect your own pages. Unfortunately, huge pages are cumbersome to use. For example, to provide shared memory, you need to set up a separate hugetlbfs filesystem, in which shared memory areas are stored as files that are backed by huge pages in main memory.
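The reduction in mappings is simple arithmetic. The following Python sketch assumes 4KB standard pages and 2MB huge pages as on x86_64; the function names are hypothetical.

```python
"""Sketch: how many mappings (or huge pages to reserve) does a region need?"""

KiB, MiB, GiB = 1 << 10, 1 << 20, 1 << 30


def pages_needed(region_bytes, page_bytes):
    """Pages, and thus page-to-page-frame mappings, needed to cover the region."""
    return -(-region_bytes // page_bytes)   # ceiling division


def mapping_reduction(region_bytes, small=4 * KiB, huge=2 * MiB):
    """How many times fewer mappings huge pages need than small pages."""
    return pages_needed(region_bytes, small) // pages_needed(region_bytes, huge)
```

A 4 GiB region needs 1,048,576 mappings with 4KB pages but only 2,048 with 2MB pages, a factor of 512. The same function tells you how many huge pages to reserve: A hypothetical 10 GiB shared memory segment needs 5,120 of them, which an administrator could reserve with `sysctl -w vm.nr_hugepages=5120` before mounting hugetlbfs (for example, `mount -t hugetlbfs none /mnt/huge`, where the mount point is arbitrary).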

The greater problem is that you cannot guarantee that huge pages can be allocated later, on the fly, because each one requires a physically contiguous memory area. In fact, you often have to reserve huge pages directly after booting, which means that the memory associated with them is not available to other applications that lack huge page support. The price is reduced flexibility, especially for high-availability solutions such as failover scenarios, but also in general operation. The complexity of huge page management prompted the development of a simpler and largely automated way to use larger pages.

This method made its way into the kernel in 2011, with version 2.6.38, in the form of Transparent Huge Pages (THP). As the name suggests, THP is invisible to users and developers alike. In practice, THP usually performs slightly worse than explicitly configured huge pages. However, THPs are managed in the same way as normal pages; in particular, they can be swapped out, so they do not protect an application's memory the way static huge pages do.
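Whether THP is active, and how an application can opt in explicitly, can be sketched in Python. This sketch assumes Linux, the sysfs file /sys/kernel/mm/transparent_hugepage/enabled (whose active mode is shown in brackets, for example `always [madvise] never`), and Python 3.8 or later for `mmap.madvise()`; in `madvise` mode, only regions flagged with `MADV_HUGEPAGE` get huge pages. The helper names are hypothetical.

```python
"""Sketch: check the THP mode and hint that a mapping should use THP (Linux)."""
import mmap
import re

THP_ENABLED = "/sys/kernel/mm/transparent_hugepage/enabled"


def parse_thp_mode(text):
    """The active mode is the bracketed word, e.g. 'always [madvise] never'."""
    match = re.search(r"\[(\w+)\]", text)
    return match.group(1) if match else None


def read_thp_mode(path=THP_ENABLED):
    with open(path) as f:
        return parse_thp_mode(f.read())


def thp_hinted_region(size):
    """Create a private anonymous mapping and advise the kernel to back it with THP."""
    region = mmap.mmap(-1, size, flags=mmap.MAP_PRIVATE)
    advice = getattr(mmap, "MADV_HUGEPAGE", None)   # only exposed on Linux, Python 3.8+
    if advice is not None:
        try:
            region.madvise(advice)
        except OSError:
            pass    # e.g. kernel built without THP support: the hint is optional
    return region
```

The hint is only advisory: Whether the kernel actually assigns huge pages depends on the THP mode and on whether contiguous memory is available at the time.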

Efficient: mmap()

Another approach for your own application is not actually a safeguard in the strict sense, but a strategy for using memory efficiently and thus indirectly reducing the need to swap. It is based on the well-known mmap() call [8] [9]. This system call maps regions of a file into the caller's virtual address space, so that the program can, for example, manipulate file content directly in memory.

In some ways, mmap() replaces normal file operations such as read(). As Figure 3 shows, its implementation in Linux differs in one important detail: With read() and similar calls, the operating system stores the data once in the page cache and again in the application's own pages, because read() copies the data into the buffer the application supplies. mmap() avoids this duplication: Linux keeps the data only in the page cache and adjusts the page-to-page-frame mappings so that the application accesses those page frames directly.

In other words, the use of mmap() saves space in physical memory, thereby reducing the likelihood that your own pages are swapped out – at least in principle and to a certain, typically small, extent.
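The difference can be sketched with Python's standard library. In this illustration, `f.read()` plays the role of read() and creates a private copy of the whole file in process memory, whereas the `mmap` object searches the page-cache pages in place; the function names are hypothetical.

```python
"""Sketch: search a file via read() (private copy) versus mmap() (no copy)."""
import mmap


def find_with_read(path, needle):
    with open(path, "rb") as f:
        data = f.read()      # copies the file from the page cache into our own memory
    return data.find(needle)


def find_with_mmap(path, needle):
    with open(path, "rb") as f, mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as m:
        return m.find(needle)    # works directly on the mapped page-cache pages
```

Both functions return the same offset, but only the first one doubles the memory footprint of the file's data. Note that mapping an empty file with length 0 raises `ValueError`.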

None of the approaches presented here for making your application swap-resistant is completely satisfactory. Another option could therefore be to change the behavior of other applications to better protect your own. Linux has long offered the ability to restrict an application's resource consumption using setrlimit() and related system calls. However, using these calls typically means intervening in third-party code, which is obviously not a viable option.
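Because resource limits are inherited across fork() and exec(), a parent process can also set them for a program it starts, much like a shell's `ulimit -v`. The following Python sketch illustrates this with RLIMIT_AS; the wrapper name and the limit values are hypothetical, and the example shows the drawback discussed below: the limited program simply fails when it reaches the limit.

```python
"""Sketch: start a (third-party) program with a capped address space."""
import resource
import subprocess
import sys


def run_with_memory_cap(cmd, cap_bytes):
    """Run cmd with RLIMIT_AS lowered to cap_bytes in the child process only."""
    def lower_limit():
        _, hard = resource.getrlimit(resource.RLIMIT_AS)
        cap = cap_bytes
        if hard != resource.RLIM_INFINITY:
            cap = min(cap_bytes, hard)   # unprivileged processes cannot raise the hard limit
        resource.setrlimit(resource.RLIMIT_AS, (cap, cap))

    # preexec_fn runs in the child between fork() and exec()
    return subprocess.run(cmd, preexec_fn=lower_limit, capture_output=True).returncode
```

With a 4 GiB cap, a child that allocates 16 MiB exits normally, while a child that tries to allocate 8 GiB fails with a `MemoryError`. Note that the limit restricts the address space of the program, not the growth of the page cache.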

Everything Under Control

At first glance, control groups (cgroups) [10], which have been around since Linux 2.6, could be a better alternative. With their help, and sufficient privileges, you can also assign a third-party process to a group, which then controls the process's use of resources. Unfortunately, this leaves system administrators with difficult questions to answer, just as when restricting resources in the shell with ulimit:

- Can you really restrict an application at the expense of others? Assuming a reasonable distribution of applications across machines, this prioritization is often difficult to get right.

- What are reasonable values for the restrictions?

- More fundamentally, you can question the overall direction: Are fixed restrictions for third-party applications really what you are trying to achieve? Or should the Linux kernel simply treat your own application with more care in special cases?

- Is it acceptable for an application to be terminated when it reaches its limits?

These questions show that cgroups ultimately do not solve the underlying problem.
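For reference, confining a process with a cgroup takes only a few file operations. This Python sketch assumes the cgroup v2 interface mounted at /sys/fs/cgroup, with the memory controller enabled in the parent's `cgroup.subtree_control` (`+memory`) and root privileges; the older cgroup v1 memory controller uses `memory.limit_in_bytes` instead of `memory.max`. The group name and the helper are hypothetical.

```python
"""Sketch: put a process into its own cgroup v2 group with a memory limit."""
from pathlib import Path


def confine_memory(pid, group, limit_bytes, root="/sys/fs/cgroup"):
    """Create <root>/<group>, set memory.max, and move pid into the group."""
    cgroup = Path(root) / group
    cgroup.mkdir(exist_ok=True)   # on cgroupfs, the kernel creates the control files
    (cgroup / "memory.max").write_text(f"{limit_bytes}\n")  # hard limit for the group
    (cgroup / "cgroup.procs").write_text(f"{pid}\n")        # migrate the process
    return cgroup
```

The difficulty lies not in the mechanics but in the value for `memory.max`: If it is too low, the confined process is throttled or, in the worst case, killed by the OOM killer inside its group.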

The application buffer is swapped out because the page cache takes up too much space for itself. One possible approach is therefore to avoid letting the page cache grow to the extent that it competes with application memory. With the support of applications, you can do this to a certain extent.

Developers can instruct the operating system in their application code to bypass the page cache, contrary to its usual habits. On Linux, this is commonly referred to as direct I/O [11]. It sidesteps the operating system's caching, for example, to give a database full control over the behavior of its own caches. Direct I/O is requested with the O_DIRECT flag for open(). A related, weaker measure is posix_fadvise() with POSIX_FADV_DONTNEED, which tells the kernel that the cached pages of a file are no longer needed and may be dropped.
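For a copy or backup job, the fadvise variant can be sketched in Python. This is a minimal sketch assuming Linux and a hypothetical helper name; it uses `os.posix_fadvise()` rather than O_DIRECT, because O_DIRECT requires suitably aligned buffers, offsets, and sizes and is not supported by every filesystem. The advice is only a hint, and dirty pages must be written back before the kernel can drop them.

```python
"""Sketch: write a large file, then tell the kernel its cached pages can go."""
import os


def write_and_drop_cache(path, data, chunk=1 << 20):
    """Write data to path and advise the kernel to drop the file's cached pages."""
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o644)
    try:
        view = memoryview(data)
        while view:
            written = os.write(fd, view[:chunk])   # handles partial writes
            view = view[written:]
        os.fsync(fd)   # dirty pages must reach the disk before they can be dropped
        os.posix_fadvise(fd, 0, 0, os.POSIX_FADV_DONTNEED)   # length 0: to end of file
    finally:
        os.close(fd)
```

Doing this in chunks during a long copy, rather than once at the end, would keep the page cache from growing in the first place; that refinement is left out here for brevity.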

Unfortunately, very few applications use direct I/O, and because a single misconfigured application can unbalance a stable system, such strategies can at best delay swapping out of the application buffer.

Focusing on the Kernel

From an administrative perspective, a much easier approach would be to adapt the kernel itself so that the application memory bottlenecks no longer occur. A setting in the kernel would then affect all programs and, for example, not require any application-specific coding. You can try to achieve this in several ways.

First, you could limit the page cache to a fixed size. As the measurements below show, such a limit effectively prevents swapping. Unfortunately, the required patches are not included in the standard kernel and probably will not be in the near future. Only a few enterprise versions from distributors such as SUSE offer these adjustments, and only for special cases. This is therefore not a solution for many environments.

The next solution is again only suitable for special cases. If the swap space is very small compared with the amount of main memory, or absent altogether, the Linux kernel can hardly swap out, even in an emergency. Although this protects application pages against swapping, it comes at the price of out-of-memory situations, for example, when the system would need to swap because of a temporary memory shortage.

In this case, continued smooth operation requires very careful planning and precise control of the system. For computers with an inaccurately defined workload, such an approach can easily result in process crashes and thus in an uncontrollable environment.

Swappiness

Much optimism is therefore focused on a setting that the Linux kernel has supported since version 2.6.6 [12]: /proc/sys/vm/swappiness is a run-time configuration option that influences how the kernel swaps out pages. The swappiness value defines how readily the kernel swaps out pages that do not belong to the page cache or other operating system caches, rather than shrinking those caches.

A high swappiness value (maximum 100) causes the kernel to swap out anonymous pages intensely, which is very gentle on the page cache. In contrast, a value of 0 tells the kernel, if possible, never to swap out application pages. The default value today is usually 60. For many desktop PCs, this has proven to be a good compromise.

As root, you can easily change the swappiness value to 0 at run time with this command:

```shell
echo 0 > /proc/sys/vm/swappiness
```

This setting, however, does not survive a reboot. For a permanent change, you need an entry in the /etc/sysctl.conf configuration file. With vm.swappiness = 0, for example, the kernel tries particularly hard to keep application pages in main memory. This should normally solve the problem.
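Both the run-time change and the persistent entry can be checked programmatically. This Python sketch reads and writes the /proc file (writing needs root) and determines which vm.swappiness value an /etc/sysctl.conf text would apply, assuming that lines starting with `#` or `;` are comments and that the last assignment wins. The helper names are hypothetical, and the upper bound of 100 matches the kernels discussed here; kernels since 5.8 accept values up to 200.

```python
"""Sketch: read and change vm.swappiness, and check the persistent setting."""

SWAPPINESS = "/proc/sys/vm/swappiness"


def get_swappiness(path=SWAPPINESS):
    with open(path) as f:
        return int(f.read())   # int() ignores the trailing newline


def set_swappiness(value, path=SWAPPINESS, upper=100):
    """Same effect as 'echo VALUE > /proc/sys/vm/swappiness'; lost on reboot."""
    if not 0 <= value <= upper:
        raise ValueError(f"swappiness must be between 0 and {upper}")
    with open(path, "w") as f:
        f.write(f"{value}\n")


def persistent_value(conf_text, key="vm.swappiness"):
    """Value that sysctl.conf-style text assigns to key (last one wins), or None."""
    value = None
    for raw in conf_text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#;":   # skip blank and comment lines
            continue
        name, sep, val = line.partition("=")
        if sep and name.strip() == key:
            value = val.strip()
    return value
```

Comparing `get_swappiness()` with `persistent_value()` applied to the contents of /etc/sysctl.conf shows whether the running system and the configuration agree.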

Unfortunately, the results discussed later reveal major differences in how some distributions implement this setting. Additionally, swappiness changes the behavior of all applications in the same way, whereas in an enterprise environment in particular, often only a few applications are truly important. If a system runs several large applications, swappiness cannot prevent cut-throat competition between them; only careful distribution of the applications can help here.

Gorman Patch

The final approach, which currently promises at least a partial solution to the displacement problem, did not become part of the official kernel until Linux 3.11. The patch by developer Mel Gorman optimizes paging behavior during parallel I/O [13].

Initial results show that the heuristics in this patch actually improve application performance during parallel I/O. However, it is still unclear whether the patch also fixes the problem if the applications were inactive while the disk I/O took place, for example, at night. Will users see significantly worse performance in the morning because the pages they need were swapped out?

The practical value of these approaches can be evaluated from different perspectives, and the sections above have already mentioned some criteria. In a production environment, kernel patches may be ruled out for organizational and support reasons. The first important question, therefore, is whether a change to the application code is necessary. From an administrator's point of view, all approaches that require such a change are impractical.

On the other hand, you cannot typically see the benefits of changes to the kernel directly but only determine them in operation. Thus, the following section of this article shows the effects of these changes for the same environment as described above. Based on these experimental results and the basic characteristics of the approach, you can ultimately arrive at an overall picture that supports an assessment.

Measuring Results

Measurements are shown in Figure 4. Restricting the size of the page cache shows the expected behavior: The applications are not much slower, even with massively parallel I/O. The kernel patch by Mel Gorman behaves similarly: It is already available in SLES 11 SP 3 and is going to be included in RHEL 7. It supports good, consistent performance of the applications.

Also, setting swappiness = 0 on SLES 11 SP 2 and SP 3 seems to protect the applications adequately. Amazingly, Red Hat Enterprise Linux 6.4 behaves differently: The implementation seems to be substantially different and does not protect applications against aggressive swapping – on the contrary.

The different swappiness values show no clear trend. Nonzero values lead to clearly poorer application performance as I/O increases, but it is difficult to distinguish systematically between smaller values such as 10 or 30 and the default value of 60. The crucial question seems to be whether or not swappiness is set to 0; intermediate values have hardly any effect.

Table 1 summarizes all the approaches and gives an overview of their advantages and disadvantages, as well as the measured results. Currently no solution eliminates all aspects of the displacement problem. The next best thing still seems to be a correct implementation of swappiness – and possibly, in future, the approach by Mel Gorman. Even with these two methods, however, administrators of systems with large amounts of main memory will not be able to avoid keeping a watchful eye on the memory usage of their applications.

Table 1: Strategy Overview

| Approach | Tool | Advantage | Disadvantage |
|---|---|---|---|
| Focusing on Your Own Application | | | |
| Pinning pages | | Does the job | Requires code change; massive intervention; inflexible |
| Huge pages | | Performance improvement | Requires code change; massive intervention; inflexible; administrative overhead |
| Reducing size | | Elegant access; performance improvement | Requires code change; only postpones swapping |
| Focusing on Third-Party Applications | | | |
| Setting resource limits | | – | Requires code change in third-party application; massive interference with application up to and including termination; does not prevent the page cache from growing |
| Control groups | | Flexible; also without code changes | Settings unclear; does not prevent the page cache from growing |
| Focusing on the Kernel | | | |
| Keeping the page cache small | Direct I/O | Performance boost possible for application because of its own caching mechanism | Requires code change in third-party application; requires own cache management; does not prevent the page cache from growing; a single application without direct I/O can negate all benefits |
| Restricting the page cache | Kernel patch | Works (demonstrated by other Unix systems); no intervention with applications required | No general support; slow behavior in the case of massive I/O |
| Small swap space | Admin tools | Little swapout | Not a solution for normal systems; risk of OOM scenarios |
| Configuring swapping | Swappiness | No intervention with applications required; works for a value of 0 | Not functional on all distributions; no gradual adjustment |
| Modifying paging behavior | Kernel patch (Mel Gorman) | Does the job; no intervention with applications required; very few side effects | Officially available only as of kernel 3.11; possibly works only with explicitly parallel I/O ("hot memory") |

Conclusions

Displacement of applications from RAM still proves to be a problem, even on very well equipped systems. Although memory shortage should no longer be an issue with such systems, the Linux kernel's intensive, and in principle sensible, use of memory can lead to significant performance problems in applications.

Linux is in quite good shape compared with other operating systems. Given the variety of approaches typical of Linux, however, it can be useful to investigate the behavior of the kernel and of the applications you use, so that you can operate large systems with consistently high performance.

For more details on experience with these systems and the tests used in this article, check out the Test Drive provided by SAP LinuxLab on the SAP Community Network (SCN) [14].