Hyper-V with the SMB 3 protocol

Fast Track

The SMB protocol is mainly known as the basis for file sharing in Windows and is familiar to Samba and Linux users, too. Windows 8.1 and Windows Server 2012 R2 use the new Server Message Block 3 (SMB 3) protocol, which has several advantages over the legacy version. Although it was already introduced with Windows Server 2012, it was once again improved in Windows Server 2012 R2. Rapid access to network storage especially benefits enterprise applications, such as SQL Server and virtual disks in Hyper-V.

The disks of virtual servers can reside on the network with Windows Server 2012 R2, for example, on file shares or iSCSI targets. Saving large files – like the disks of file-based virtual servers on the network – offers some advantages over block-based storage, including easier management. This is especially true if the files are stored in file shares, because you don't need to use external management tools or to change management workflows.

Windows Server 2012 R2 lets you use VHDX files as the virtual disks behind an iSCSI target. This means that Hyper-V hosts can store their data on iSCSI disks, which are in turn connected via SMB 3. VHDX files are also much more robust than the older VHD format and allow sizes up to 64TB.

SMB 3 can forward SMB sessions belonging to services and users on virtual servers in clusters. If a virtual server is migrated between cluster nodes, the sessions remain active; users are not cut off from services during this operation. Thus, in addition to higher performance, SMB 3 also supports high availability.

New in SMB 2.0 and 2.1

SMB was initially developed by IBM and integrated by Microsoft into Windows in the mid-1990s via LAN Manager. Microsoft modified SMB 1.0 and submitted it to the Internet Engineering Task Force (IETF), and SMB was renamed to CIFS (Common Internet File System).

Microsoft immediately started improving SMB after taking it over from IBM. Further improvements followed in version 2.0, introduced with Windows Vista and Windows Server 2008, and in version 2.1, introduced with Windows 7 and Windows Server 2008 R2. As of version 2.0, Microsoft no longer uses the term CIFS, because the original extensions were developed for SMB 1.0, and they no longer play a role in SMB 2.0.

SMB 2.1 mainly offers performance improvements, especially for very fast networks in the 10Gbps range. The version supports larger maximum transmission units (MTUs). Additionally, the energy efficiency of the clients was improved: As of SMB 2.1, clients can switch to power-saving mode despite active SMB connections.

Improvements in SMB 2.0 include, for example, TCP window scaling and acceleration on WLANs. Microsoft also optimized the connection between the client and server and improved the cache on the client. With the new pipeline function, servers can write multiple requests to a queue and execute them in parallel. This technology is similar to buffer credits in Fibre Channel technology.

Microsoft has extended the data width to 64 bits in the current version, allowing block sizes greater than 64KB, which accelerates the transfer of large files, such as virtual disks or databases. Additionally, the optimized connections between the client and server prevent disconnections on unreliable networks such as WLAN or WAN environments.

What's New in SMB 3.0?

In Windows Server 2012, Microsoft introduced SMB version 2.2 with further improvements. Later, these innovations were deemed so far-reaching that the version number was raised to 3.0. One new feature in SMB 3.0, for example, is support for server-based workloads such as Hyper-V and databases with SQL Server 2012/2014.

Hyper-V over SMB can now handle Universal Naming Convention (UNC) paths as the location of the configuration files of virtual servers. Virtual disks can also use UNC paths; that is, they can be saved directly on the network. Simply put, the location of the virtual disks on Windows Server 2012 or newer can be a UNC path, so you don't need to use drive letters or map network drives. You can also address the data via a service name instead of the name of a physical server, which a mapped drive letter would otherwise tie you to.
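
As a rough sketch of what a UNC-based virtual server could look like, the following PowerShell creates a VM whose configuration and disk both live on a share. The file server name fs01, the share vms, and the VM name web01 are hypothetical, and the example assumes the Hyper-V host's computer account already has full control over the share.

```powershell
# Hypothetical names: file server "fs01", share "vms", VM "web01".
$share = '\\fs01\vms'

# Create the VM with its configuration files and a new 60GB VHDX
# stored directly on the SMB 3 share; no drive letter is involved.
New-VM -Name 'web01' `
       -MemoryStartupBytes 2GB `
       -Path $share `
       -NewVHDPath "$share\web01.vhdx" `
       -NewVHDSizeBytes 60GB

# Check where the disk ended up.
Get-VMHardDiskDrive -VMName 'web01' | Select-Object VMName, Path
```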

SMB 3.0 in the Enterprise

SMB 3.0 is more robust, more powerful, and more scalable and offers more security than its predecessors, which is especially important for large environments. For example, SMB Transparent Failover lets clients stay connected to a file server in a cluster environment: If the virtual file server is moved to another cluster node, open SMB connections are redirected to the new node and remain active. The process is completely transparent to clients and Hyper-V hosts.

If virtual disks are stored on file shares in a cluster, services are no longer interrupted when the server is migrated. Another new feature in SMB 3.0 is SMB multichannel, in which the bandwidth is aggregated from multiple network adapters between SMB 3 clients and SMB 3 servers. This approach offers two main advantages: The bandwidth is distributed across multiple links for increased throughput, and the approach provides better fault tolerance if a connection fails. The technology works in a similar way to multipath I/O (MPIO) for iSCSI and Fibre Channel networks.
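
To see whether multichannel is actually in use on an SMB 3 client such as a Hyper-V host, a few read-only cmdlets from the SmbShare module are usually enough. This is only a minimal sketch; the output depends entirely on the adapters and servers in your environment.

```powershell
# Is multichannel enabled on this client? (It is on by default.)
Get-SmbClientConfiguration | Select-Object EnableMultiChannel

# Which local interfaces does the SMB client consider usable,
# and do they support RSS or RDMA?
Get-SmbClientNetworkInterface

# Which channels are currently open to which file servers?
Get-SmbMultichannelConnection
```

If the last command lists more than one interface pair for the same server, traffic to that server is being spread across several links.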

SMB Scale-Out file servers use Cluster Shared Volumes (CSV) for parallel access to files across all nodes in a cluster, which improves the performance and scalability of server-based services, because all nodes are involved. The technology works in parallel with features such as transparent failover and multichannel.
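
The following sketch shows one way a Scale-Out File Server share for virtual disks might be set up on an existing failover cluster. The role name sofs01, the CSV path, the share name vms, and the CONTOSO computer accounts are all assumptions for illustration; matching NTFS permissions on the folder are also needed and are not shown here.

```powershell
# Add the Scale-Out File Server role to an existing cluster
# (hypothetical role name "sofs01").
Add-ClusterScaleOutFileServerRole -Name 'sofs01'

# Create a folder on a Cluster Shared Volume to hold the VM files.
New-Item -Path 'C:\ClusterStorage\Volume1\vms' -ItemType Directory

# Publish it as a continuously available share and grant the
# Hyper-V hosts' computer accounts (hypothetical) full access.
New-SmbShare -Name 'vms' `
             -Path 'C:\ClusterStorage\Volume1\vms' `
             -FullAccess 'CONTOSO\hv01$', 'CONTOSO\hv02$' `
             -ContinuouslyAvailable $true
```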

Additional SMB performance counters let admins measure usage and utilization of file shares, including throughput, latency, and IOPS. The new counters in the Windows Server 2012 and Windows Server 2012 R2 performance monitoring feature support metrics for the client and server so that analysis of both ends of the SMB 3.0 connection is possible. These counters are useful for troubleshooting performance issues and for ensuring stable data access on the network.
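
Rather than relying on counter names from memory, you can ask the system which SMB counter sets it offers. The sketch below assumes that a counter set named SMB Client Shares is present, as it typically is on Windows 8 and Windows Server 2012 or newer; adjust the name to whatever the first command returns on your machine.

```powershell
# List all SMB-related counter sets available on this machine.
Get-Counter -ListSet 'SMB*' | Select-Object CounterSetName, Description

# Show the individual counter paths of one set
# (set name assumed; check the list above first).
(Get-Counter -ListSet 'SMB Client Shares').Paths
```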

The new SMB encryption lets you encrypt the data in SMB connections. This technology is only active if both the client and the server use SMB 3.0. Legacy clients that speak only SMB 2.0 or SMB 1.0 cannot use encryption.
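
Encryption can be switched on per share or for the whole file server. The sketch below uses a hypothetical share named vms. By default, a server with encryption enabled rejects clients that cannot encrypt; the RejectUnencryptedAccess setting controls that behavior.

```powershell
# Encrypt traffic for a single share (hypothetical share name "vms").
Set-SmbShare -Name 'vms' -EncryptData $true -Force

# Or encrypt traffic for all shares on this file server.
Set-SmbServerConfiguration -EncryptData $true -Force

# Inspect the current settings, including how clients that
# cannot encrypt are treated.
Get-SmbServerConfiguration |
    Select-Object EncryptData, RejectUnencryptedAccess
```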

RDMA and Hyper-V

Normally, every action in which a service such as Hyper-V sends data over the network (for example, a live migration) generates processor load. This is because the processor has to compose and compute data packets for the network. To do so, in turn, it needs access to the server's RAM. Once a packet is assembled, the processor forwards it to a buffer on the network card. The packets wait for transmission here and are then sent by the network card to the target server or client. The same process takes place in reverse when data packets arrive at the server. For large amounts of data, such as occur in the transmission of a virtual server during live migration, these operations are very time consuming and computationally intensive.

The solution to these problems is Direct Memory Access (DMA). Simply put, the various system components, such as network cards, directly access the memory to store data and perform calculations, which offloads some of the work from the processor and significantly shortens queues and processes. This approach, in turn, increases the speed of the operating system and the various services such as Hyper-V.

Remote Direct Memory Access (RDMA) is an extension of this technology that adds network functions. It allows RAM content to be sent to another server on the network and lets Windows Server 2012/2012 R2 directly access the memory of another server. Microsoft already built RDMA into Windows Server 2012 but improved it in Windows Server 2012 R2 with direct integration into Hyper-V.

Windows Server 2012/2012 R2 can automatically use this technology wherever two servers with Windows Server 2012/2012 R2 need to communicate on the network. RDMA significantly increases the data throughput on the network and reduces latency in data transmission, which also plays an important role in live migration.

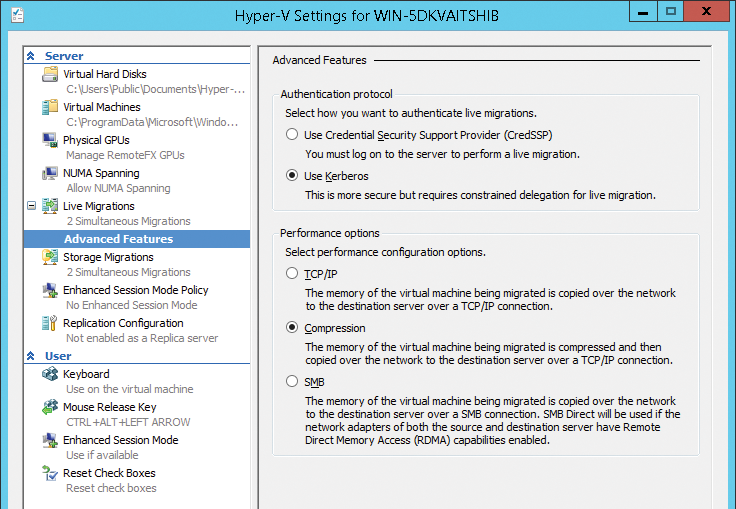

In Windows Server 2012 R2, a cluster node can access the memory of another cluster node during a live migration and thus move a virtual server on the fly and extremely quickly. On top of this, Hyper-V in Windows Server 2012 R2 supports live migration with data compression. Together, compression and RDMA further accelerate Hyper-V on fast networks.
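
On a Windows Server 2012 R2 host, the transport used for live migration is a host-level setting. The following sketch shows how it could be switched between compression and SMB; which option is faster depends on your network and on whether RDMA-capable adapters are present.

```powershell
# Use compression for live migration traffic
# (the default in Windows Server 2012 R2).
Set-VMHost -VirtualMachineMigrationPerformanceOption Compression

# Alternatively, send live migration traffic over SMB so that it can
# benefit from SMB Direct (RDMA) and SMB Multichannel where available.
Set-VMHost -VirtualMachineMigrationPerformanceOption SMB

# Check which option is active.
Get-VMHost | Select-Object VirtualMachineMigrationPerformanceOption
```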

Another interesting feature is Data Center Bridging in Windows Server 2012 R2, which implements technologies for controlling traffic on very large networks. If the network adapters support the Converged Network Adapter (CNA) function, data access can be improved based on iSCSI disks or RDMA techniques – even between different data centers. You can also limit the bandwidth that this technology uses.

For fast communication between servers based on Windows Server 2012 R2, especially between cluster nodes, the NICs need to support RDMA. Using RDMA makes sense for very large amounts of data in particular, for example, if you are using Windows Server 2012/2012 R2 as a NAS server (i.e., as an iSCSI target) and store databases from SQL Server 2012/2014 there. To a limited extent, SQL Server 2008 R2 can also use this function, but not Windows Server 2008 R2 or older versions of Microsoft SQL Server.

Optimal Use of Hyper-V in a Network Environment

If you use multiple Hyper-V hosts based on Windows Server 2012 R2 in your environment, these hosts can, as mentioned previously, use the new multichannel function for parallel access to data. This technology is used, for example, if the virtual disks of your virtual servers reside not on the Hyper-V host but on network shares.

The approach speeds up the traffic between Hyper-V hosts and virtual servers and also protects virtualized services against the failure of a single SMB channel. To do this, you do not need to install a role service or change the configuration. All of these benefits are integrated out of the box in Windows Server 2012 R2.

To make optimal use of these features, the network adapters must be fast enough. Microsoft recommends the installation of a 10Gb adapter or the use of at least two 1Gb adapters. You can also use the teaming function for NICs in Windows Server 2012 R2 for this. Server Manager can group network adapters in teams, even if the drivers do not support this. These teams also support SMB traffic.
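
Teams can be created in PowerShell as well as in Server Manager. In this sketch, the team name SmbTeam and the adapter names Ethernet 1 and Ethernet 2 are placeholders; Get-NetAdapter shows the names actually used on your server.

```powershell
# List the physical adapters and their link speeds.
Get-NetAdapter | Select-Object Name, LinkSpeed, Status

# Group two adapters into a switch-independent team
# (team and adapter names are hypothetical).
New-NetLbfoTeam -Name 'SmbTeam' `
                -TeamMembers 'Ethernet 1', 'Ethernet 2' `
                -TeamingMode SwitchIndependent `
                -LoadBalancingAlgorithm Dynamic

# Verify the team and its members.
Get-NetLbfoTeam -Name 'SmbTeam'
```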

SMB Direct is also enabled automatically between servers running Windows Server 2012 R2. To be able to use this technology between Hyper-V hosts, the built-in network adapters must support RDMA (Remote Direct Memory Access) and be extremely fast. Your best bets are cards for iWARP, InfiniBand, and RDMA over Converged Ethernet (RoCE).
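
Whether an adapter can be used for SMB Direct is easy to check from PowerShell. The adapter name RDMA 1 below is a placeholder, and the sketch assumes the vendor driver for an RDMA-capable card is already installed.

```powershell
# Which adapters support RDMA, and is it enabled on them?
Get-NetAdapterRdma

# Enable RDMA on a specific adapter if it is switched off
# (hypothetical adapter name).
Enable-NetAdapterRdma -Name 'RDMA 1'

# Does the SMB client see any RDMA-capable interfaces?
Get-SmbClientNetworkInterface | Where-Object RdmaCapable
```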

Storage Migration

In Windows Server 2012 R2, you have the option of changing the location of virtual disks on Hyper-V hosts. You can do this on the fly on the virtual server. To do this, right-click in Hyper-V Manager on the virtual server whose disks you want to move and select Move from the menu; the Move Wizard then appears.

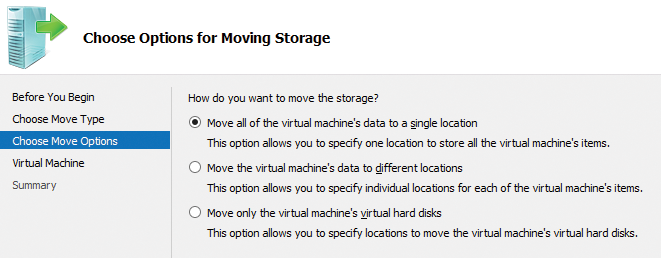

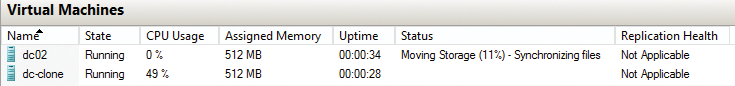

In the wizard, choose Move the virtual machine's storage as the Move Type, then decide whether you want to move only the configuration data of the virtual server or the virtual hard disks, too (Figure 1). Finally, select the folder in which you want Hyper-V to store the data for the computer. The virtual server continues to run during the process. You can see the status of the operation in Hyper-V Manager (Figure 2). While the data is being moved, users are not cut off from the virtual server; the whole process takes place transparently.
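
The same storage move can be started from PowerShell instead of the wizard. The VM name web01 and the target share are hypothetical; the destination folder receives the configuration, snapshots, and virtual disks of the running virtual server.

```powershell
# Move all storage of a running VM to a new location
# (hypothetical VM name and UNC path).
Move-VMStorage -VMName 'web01' `
               -DestinationStoragePath '\\fs01\vms\web01'

# Confirm the new location of the virtual disks.
Get-VMHardDiskDrive -VMName 'web01' | Select-Object VMName, Path
```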

Besides the configuration, the snapshots, and the virtual disks, you can store smart paging files separately. Smart paging is designed to prevent virtual servers from failing to start because the total available memory is already assigned. If you are using Dynamic Memory, the possibility exists that other servers on the host are using the entire memory.

The new Smart Paging feature allows virtual servers to use parts of the host's hard disk as memory during a restart. Again, you can move this area separately. After a successful boot, the disk space is released again, and the virtual server regains its memory through Dynamic Memory.
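
If you only want to relocate the smart paging file, Move-VMStorage accepts a separate path for it. This is a minimal sketch with a hypothetical VM name and folder; the other items stay where they are.

```powershell
# Where does the VM currently keep its smart paging file?
Get-VM -Name 'web01' | Select-Object Name, SmartPagingFilePath

# Move only the smart paging file to a different folder
# (hypothetical VM name and path).
Move-VMStorage -VMName 'web01' -SmartPagingFilePath 'D:\SmartPaging\web01'
```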

Live Migration with SMB

Since Windows Server 2012, it has been possible to perform a live migration of virtual servers between Hyper-V hosts without using a cluster or to replicate virtual machines between Hyper-V hosts without clustering them. Another new feature is the ability to move a server's virtual disks via live migration; that is, the virtual servers themselves remain on the current host, and only the location of the files changes. For example, you can move the files to a share. In Windows Server 2012 R2, Microsoft further improved this feature: Services that store data on the network benefit from SMB 3.

Hyper-V Replica lets you replicate virtual servers, including all their disks, on other servers, also on the fly. You do not need a shared medium for this because the virtual disks are replicated. In Windows Server 2012, the sync interval was fixed at five minutes. Windows Server 2012 R2 offers the option of replicating the data from the host every 30 seconds. On the other hand, you can also extend the replication interval to 15 minutes. Replication occurs at the filesystem level over the network; a cluster is not necessary. You can perform the replications manually, automatically, or based on a schedule (Figure 3).
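
The replication interval is set when replication is enabled for a virtual server. In the sketch below, the VM name, the replica server name, the port, and the authentication type are all assumptions; the last two must match however the replica server was configured to accept replication.

```powershell
# Enable replication for one VM with a 30-second interval.
# Valid values for -ReplicationFrequencySec in Windows Server 2012 R2
# are 30, 300, and 900.
Enable-VMReplication -VMName 'web01' `
                     -ReplicaServerName 'hv02.contoso.com' `
                     -ReplicaServerPort 80 `
                     -AuthenticationType Kerberos `
                     -ReplicationFrequencySec 30

# Send the initial copy over the network and check the state.
Start-VMInitialReplication -VMName 'web01'
Get-VMReplication -VMName 'web01'
```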

Live Migration without a Cluster

For a live migration without a cluster, right-click the virtual server you want to move between the Hyper-V hosts and select the Move option from the shortcut menu to open the Move Wizard. For Move Type, select Move the virtual machine. Then, select the target computer to which you want to move the virtual server.

In the next window, you can specify the live migration more precisely. You have the option of moving different virtual server data to different folders on the network, or you can move all the server data, including the virtual disks, to a shared folder (Figure 4). If the virtual hard drive on a virtual server resides on a share, you can also choose to move only the configuration files between the Hyper-V hosts.

You can also script this process. To do this, open a PowerShell session on the source server and enter the following command:

Move-VM "<virtual server>" <remote server> -IncludeStorage -DestinationStoragePath <local path on target server>
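
Filled in with concrete values, the command above might look like the following sketch. The VM name web01, the target host hv02, and the path are hypothetical. The example also assumes that live migration has not yet been enabled on the hosts and that the default CredSSP authentication is in use, which means you need to be logged on to the source server.

```powershell
# Run on both the source and the target host:
# allow incoming and outgoing live migrations on any network.
Enable-VMMigration
Set-VMHost -UseAnyNetworkForMigration $true

# Run on the source host: move the VM and all of its storage
# to a local folder on the target host.
Move-VM -Name 'web01' `
        -DestinationHost 'hv02' `
        -IncludeStorage `
        -DestinationStoragePath 'D:\VMs\web01'
```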

To run Hyper-V with live migration in a cluster, you need to create the cluster and enable Cluster Shared Volumes. Windows then stores data on the operating system partition in the ClusterStorage folder. However, the data does not physically reside on hard disk C: of the node but on the shared disk, and access to this is diverted to the C:\ClusterStorage folder. The medium can also be a virtual iSCSI disk on Windows Server 2012 R2. The VHDX files for the VMs are located in this folder and therefore available to all nodes simultaneously.
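
Once the cluster exists and a shared disk has been added to it, turning that disk into a Cluster Shared Volume takes a single cmdlet. The resource name Cluster Disk 1 is an assumption; Get-ClusterResource shows the names used in your cluster.

```powershell
# List the cluster resources to find the name of the shared disk.
Get-ClusterResource

# Turn the disk into a Cluster Shared Volume
# (hypothetical resource name).
Add-ClusterSharedVolume -Name 'Cluster Disk 1'

# The volume now appears below C:\ClusterStorage on every node.
Get-ClusterSharedVolume
```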

Clusters in Windows Server 2012 R2 support dynamic I/O. If the data connection of a node fails, the cluster can automatically route the traffic required for communication between the virtual machines through the connections of a second node, without having to perform a failover to do so. You can configure a cluster so that the cluster nodes prioritize network traffic between the nodes and to the CSV.

Conclusions

Windows Server 2012 R2 and Hyper-V are both designed to store data centrally on network shares. However, you also need to consider the performance of the components used. The network adapters, the server hardware, all the network devices, and of course the cabling in the enterprise must support the new functions.