High availability without Pacemaker

Workaround

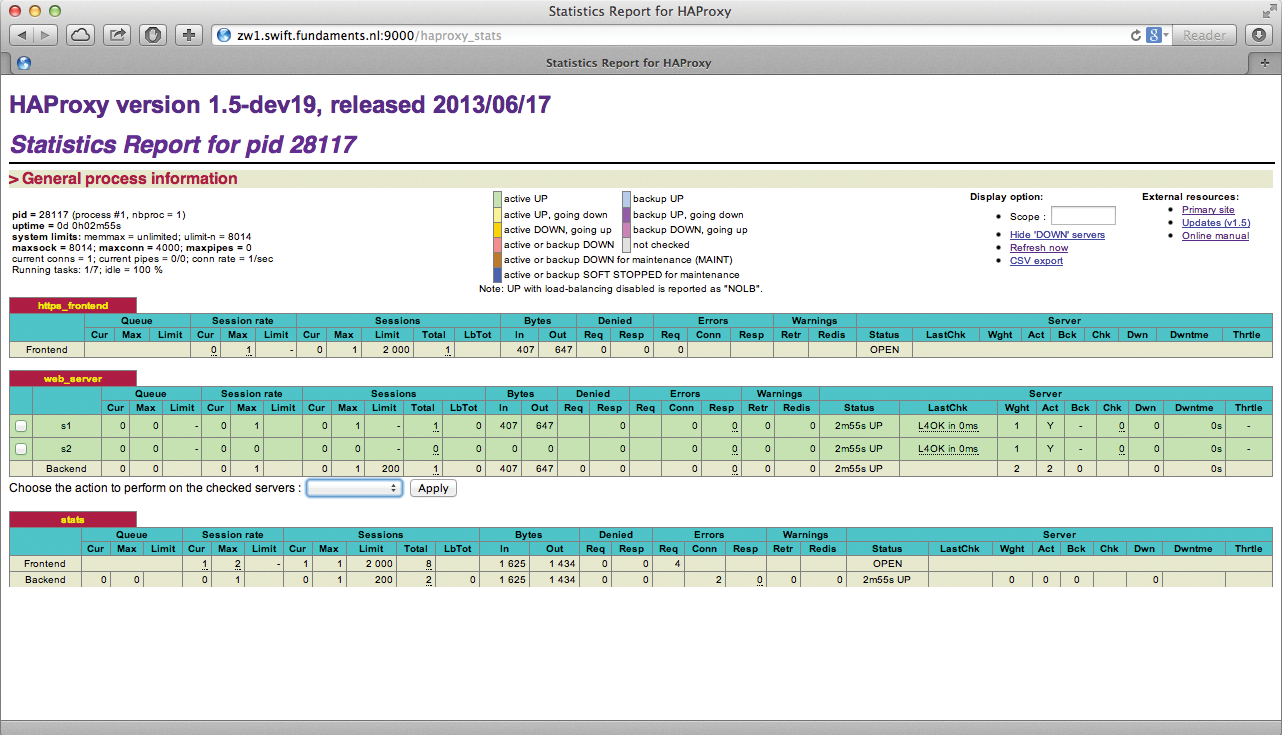

The Pacemaker cluster resource manager is not the friendliest of applications. In the best case, Pacemaker keeps the load balanced on your cluster and monitors the back-end servers, rerouting work to maintain high availability when a system goes down. Pacemaker has come a long way since the release of its predecessor, Heartbeat 2, an unfriendly and unstable tool that could only be managed at all by feeding it XML snippets.

Pacemaker no longer has the shortcomings of Heartbeat, but it still has not outgrown some of the original usability issues. The Pacemaker resource manager has ambitious goals, but what looks promising in the lab often fails in practice because it is too difficult to use (Figure 1). Nor has development always progressed smoothly: Although the project is several years old, bugs have repeatedly reared their heads, undermining confidence in the Pacemaker stack.

Admins often face a difficult choice: Introducing Pacemaker might solve the HA problem, but it means installing a "black box" in the environment that apparently does whatever it wants. Of course, not having high availability at all is not a genuine alternative. This dilemma leads to the question of whether it is possible to achieve meaningful high availability in some other way. A number of FOSS solutions vie for the admin's attention.

Understanding High Availability

The universal goal of high availability is that users never notice that a server has just crashed. For most users, this is already an implicit requirement: If you sit down in front of your computer at three o'clock in the morning, you expect your provider's email service to work just as it would at three in the afternoon. Users are not interested in which server delivers their mail; they just want to be able to access it at any time.

The "failover" principle achieves transparent high availability by working with floating IP addresses: An IP address is assigned to a service and migrates with the service from one server to another if the original server fails.
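A minimal Python sketch of the decision a cluster manager makes for such a floating service IP follows. The class names, the documentation-range address 192.0.2.10, and the choice not to fail back automatically are assumptions for illustration; real tools additionally announce the move on the network (typically with gratuitous ARP), which this model leaves out.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Node:
    name: str
    healthy: bool = True


@dataclass
class FloatingIP:
    """A service IP that follows the service (hypothetical model, not a real tool's API)."""
    address: str
    candidates: list[Node]
    holder: Node | None = None

    def reconcile(self) -> Node | None:
        # Leave the address where it is while its current holder is healthy
        # (no automatic fail-back, which avoids needless extra moves).
        if self.holder is not None and self.holder.healthy:
            return self.holder
        # Otherwise move it to the first healthy candidate, or nowhere at all.
        self.holder = next((n for n in self.candidates if n.healthy), None)
        return self.holder


a, b = Node("server-a"), Node("server-b")
vip = FloatingIP("192.0.2.10", [a, b])
print(vip.reconcile().name)  # server-a
a.healthy = False            # server-a crashes
print(vip.reconcile().name)  # server-b
a.healthy = True             # server-a comes back
print(vip.reconcile().name)  # server-b (the address stays put)
```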

This kind of magic works well for stateless protocols. With HTTP, for example, it does not matter whether Server A or Server B delivers the data, because incoming requests always arrive at whichever server currently holds the "Service IP" address. Stateful connections are more complex, but most applications that establish stateful connections can automatically reopen a connection that has broken down. Users notice a brief hiccup in the service at most, and certainly not an outage.
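On the client side, automatically reopening a connection usually comes down to a retry loop like the following sketch. The function name, the number of attempts, and the exponential backoff are illustrative assumptions, not the behavior of any particular client library.

```python
from __future__ import annotations

import socket
import time


def connect_with_retry(host: str, port: int, attempts: int = 5,
                       delay: float = 0.5, timeout: float = 2.0) -> socket.socket:
    """Open a TCP connection, retrying with exponential backoff.

    The first attempts may fail while the service IP moves; later ones
    reach whichever server holds the address by then.
    """
    last_error: OSError | None = None
    for attempt in range(attempts):
        try:
            return socket.create_connection((host, port), timeout=timeout)
        except OSError as exc:
            last_error = exc
            if attempt < attempts - 1:
                # Wait delay, 2*delay, 4*delay, ... between attempts.
                time.sleep(delay * 2 ** attempt)
    raise ConnectionError(f"{host}:{port} unreachable after {attempts} attempts") from last_error


# Hypothetical usage against a service IP:
# conn = connect_with_retry("192.0.2.10", 143)
```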

This is precisely the "evil" type of configuration that calls for a cluster manager. However, some solutions let you get along without Pacemaker or demote it to a simple auxiliary service that no longer controls the whole cluster. In this article, I'll show how this works with four examples: HAProxy as a load balancer, VRRP by means of keepalived for routing, inherent high availability with the ISC DHCP server, and classic scale-out HA based on Galera.

Variant 1: Load Balancing

Load balancing belongs to the category of scale-out solutions and, as a principle, has existed for several decades. The key idea is that a single service, the load balancer, accepts connections arriving at an IP address and forwards them in the background to target servers. The number of target servers is not limited, and target servers can be added or removed dynamically. If a service provider notices that the platform no longer offers sufficient performance, it simply adds more target servers, thus giving the setup more resources to work with.
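The dynamic part of the idea, a pool of target servers that can grow and shrink while connections keep being distributed, can be sketched in a few lines of Python. Round robin is used here because it is the simplest scheduling strategy (HAProxy offers it as `balance roundrobin`); the `BackendPool` class is hypothetical and has nothing to do with HAProxy's internals.

```python
from __future__ import annotations


class BackendPool:
    """Round-robin selection over target servers that can change at runtime."""

    def __init__(self, backends: list[str] | None = None) -> None:
        self._backends: list[str] = list(backends or [])
        self._next = 0  # index of the backend to hand out next

    def add(self, backend: str) -> None:
        if backend not in self._backends:
            self._backends.append(backend)

    def remove(self, backend: str) -> None:
        if backend in self._backends:
            idx = self._backends.index(backend)
            self._backends.remove(backend)
            # Keep the cursor on the same "next" backend after the list shrinks.
            if idx < self._next:
                self._next -= 1

    def pick(self) -> str:
        if not self._backends:
            raise LookupError("no target servers available")
        self._next %= len(self._backends)
        backend = self._backends[self._next]
        self._next += 1
        return backend


pool = BackendPool(["web1", "web2"])
print(pool.pick(), pool.pick())  # web1 web2
pool.add("web3")                 # scale out at runtime
print(pool.pick(), pool.pick())  # web3 web1
```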

Although originally designed for HTTP, the load balancer concept now covers just about every conceivable protocol. Essentially, it is always a matter of forwarding an incoming connection on a TCP/IP port to a port on another server, which works for almost any protocol without much difficulty.
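To show how little protocol knowledge plain TCP forwarding needs, here is a sketch of a single-target forwarder built on Python's asyncio. It is a deliberately reduced toy, not how HAProxy works: There is only one fixed target, no health checks, and no half-close handling (when one side finishes, the whole connection is torn down).

```python
import asyncio


async def pipe(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Copy bytes from reader to writer until EOF, then close the writer."""
    try:
        while data := await reader.read(65536):
            writer.write(data)
            await writer.drain()
    except ConnectionError:
        pass  # peer reset the connection; just tear down our side
    finally:
        writer.close()


async def handle_client(client_reader: asyncio.StreamReader,
                        client_writer: asyncio.StreamWriter,
                        target_host: str, target_port: int) -> None:
    try:
        target_reader, target_writer = await asyncio.open_connection(target_host, target_port)
    except OSError:
        client_writer.close()  # target unreachable: drop the client
        return
    # Shovel bytes in both directions concurrently; the payload is never parsed.
    await asyncio.gather(pipe(client_reader, target_writer),
                         pipe(target_reader, client_writer))


async def start_forwarder(listen_host: str, listen_port: int,
                          target_host: str, target_port: int) -> asyncio.AbstractServer:
    return await asyncio.start_server(
        lambda r, w: handle_client(r, w, target_host, target_port),
        listen_host, listen_port)


# Example (hypothetical addresses): forward local port 8080 to a web server.
# async def main():
#     server = await start_forwarder("0.0.0.0", 8080, "192.0.2.20", 80)
#     async with server:
#         await server.serve_forever()
# asyncio.run(main())
```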

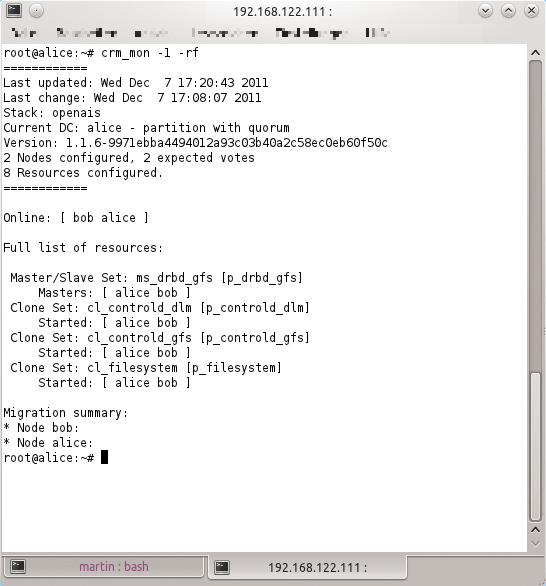

Linux offers numerous load balancers; HAProxy [1] in particular has a large community. HAProxy can use different forwarding methods, depending on which protocol the application speaks (Figure 2). With the help of load balancers, admins can build good and reliable failsafes for mail servers, web servers, and other simple services.

A setup in which HAProxy ensures the permanent availability of a service involves some challenges. Challenge number 1 is the availability of data: You will usually want the same data to be available on all your web servers. To achieve this, you could use a classic NFS setup, but newer solutions such as GlusterFS are also worth considering.

Factory-supplied appliances often have HA features built in, so admins do not have to worry about implementing them. However, if you use plain vanilla HAProxy, you need a basic cluster manager to keep the load balancer itself available. This alone does not invalidate the concept, because the cluster manager in this example would only move an IP address from one computer to another and back, unless you use VRRP.

Variant 2: Routing with VRRP

One scenario in which using Pacemaker is particularly painful involves classic firewall and router systems. If you do not use a hardware router in the first place, a simple Linux-based firewall is likely to seem the best solution for security and routing on the corporate network. There is nothing wrong with this assumption, and such a system rarely needs more than one DHCP server and a moderate number of iptables rules. Of course, the corporate firewall must somehow be highly available; if the server fails, work grinds to a standstill in the company.

The classic approach using Pacemaker involves running services such as the DHCP server or the firewall, along with the appropriate masquerading configuration, as separate resources in Pacemaker. This method inevitably drags in the entire Pacemaker stack, which is precisely the component you wanted to avoid. VRRP offers a leaner way to provide redundant routing across multiple systems.

VRRP stands for "Virtual Router Redundancy Protocol." It dates back to 1998, and companies such as IBM, Microsoft, and Nokia were involved in its development. VRRP works on a simple principle: Rather than acting as individual devices on a network, all routers configured via VRRP act as a logical group. The logical router has both a virtual MAC and a virtual IP address, and one member of the VRRP group, the master, always handles the actual routing based on the given configuration.
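Two of these VRRP details can be written down directly. For IPv4, RFC 3768 and RFC 5798 derive the virtual MAC address from the virtual router ID (VRID) as 00-00-5E-00-01-{VRID}, and the member with the highest priority becomes master, with ties going to the higher primary IP address. The sketch below computes only the steady-state outcome; in practice the election happens through periodic advertisements. Router names and addresses are hypothetical.

```python
from __future__ import annotations

import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Router:
    name: str
    primary_ip: str
    priority: int  # 1-254 for backups; 255 is reserved for the address owner


def virtual_mac(vrid: int) -> str:
    """IPv4 VRRP virtual MAC address: 00-00-5E-00-01-{VRID}."""
    if not 1 <= vrid <= 255:
        raise ValueError("VRID must be between 1 and 255")
    return f"00:00:5e:00:01:{vrid:02x}"


def elect_master(group: list[Router]) -> Router:
    """Highest priority wins; a tie goes to the higher primary IP address."""
    return max(group, key=lambda r: (r.priority, ipaddress.IPv4Address(r.primary_ip)))


group = [Router("fw1", "192.0.2.2", 100),
         Router("fw2", "192.0.2.3", 100),
         Router("fw3", "192.0.2.4", 50)]
print(virtual_mac(51))           # 00:00:5e:00:01:33
print(elect_master(group).name)  # fw2 (same priority as fw1, higher IP)
```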

Failover is an inherent feature of the VRRP protocol: If one router fails, another takes over the virtual router MAC address and the virtual IP address. For the user, this means, at most, a small break but not a noticeable loss.
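How small the "small break" is follows from the protocol's timers. In VRRPv2 (RFC 3768), a backup declares the master dead after Master_Down_Interval = 3 × Advertisement_Interval + Skew_Time, with Skew_Time = (256 − Priority)/256 seconds, so higher-priority backups time out slightly sooner and take over first. VRRPv3 (RFC 5798) scales the skew by the advertisement interval, which this sketch ignores; the default one-second interval is assumed.

```python
from __future__ import annotations


def skew_time(priority: int) -> float:
    """VRRPv2 Skew_Time in seconds: (256 - Priority) / 256."""
    return (256 - priority) / 256


def master_down_interval(priority: int, advert_interval: float = 1.0) -> float:
    """Seconds a backup waits without advertisements before declaring the master dead."""
    return 3 * advert_interval + skew_time(priority)


def first_to_take_over(backups: dict[str, int], advert_interval: float = 1.0) -> tuple[str, float]:
    """The backup with the shortest Master_Down_Interval (highest priority) takes over first."""
    name = min(backups, key=lambda n: master_down_interval(backups[n], advert_interval))
    return name, master_down_interval(backups[name], advert_interval)


print(master_down_interval(100))                    # 3.609375
print(first_to_take_over({"fw2": 100, "fw3": 50}))  # ('fw2', 3.609375)
```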

On Linux systems, VRRP setups are relatively easy to create with the keepalived software [2] mentioned earlier. Keepalived was not originally conceived for VRRP, but VRRP is probably its most common use today.
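For orientation, the core of a keepalived VRRP setup is one `vrrp_instance` block per node. The following sketch renders such a block from Python using keepalived's `state`, `interface`, `virtual_router_id`, `priority`, `advert_int`, and `virtual_ipaddress` keywords; the instance name, interface, VRID, priorities, and addresses are placeholders, and a production configuration would usually need further options.

```python
from __future__ import annotations


def render_vrrp_instance(name: str, state: str, interface: str, vrid: int,
                         priority: int, vips: list[str], advert_int: int = 1) -> str:
    """Render a minimal keepalived vrrp_instance block (placeholder values)."""
    if state not in ("MASTER", "BACKUP"):
        raise ValueError("state must be MASTER or BACKUP")
    lines = [
        f"vrrp_instance {name} {{",
        f"    state {state}",
        f"    interface {interface}",
        f"    virtual_router_id {vrid}",   # must match on all members of the group
        f"    priority {priority}",        # higher value wins the election
        f"    advert_int {advert_int}",    # advertisement interval in seconds
        "    virtual_ipaddress {",
        *[f"        {vip}" for vip in vips],
        "    }",
        "}",
    ]
    return "\n".join(lines) + "\n"


# Same VRID and virtual IP on both firewalls, different state and priority:
print(render_vrrp_instance("VI_GW", "MASTER", "eth1", 51, 150, ["192.0.2.1/24"]))
print(render_vrrp_instance("VI_GW", "BACKUP", "eth1", 51, 100, ["192.0.2.1/24"]))
```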

Variant 3: Inherent HA with ISC DHCP

After using VRRP on your firewall/router to take care of routing, the next issue is DHCP. Most corporate networks use DHCP for dynamic address assignment, and dhcpd frequently runs in a typical cluster-managed failover configuration. ISC DHCPd, however, comes with a handy feature that removes the need for Pacemaker: Much like the VRRP protocol, it lets you create "DHCP pools." The core idea behind these pools is that two DHCP servers (ISC's failover protocol pairs a primary with a secondary) take care of the same network, so a DHCP request can in principle be answered by either of them. To avoid address collisions, the two servers keep their lease databases permanently synchronized.
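The mechanism can be illustrated with a toy model of a failover pair: The free addresses are divided between the two peers so that they can never hand out the same address, and every lease one peer grants is copied to its partner. This is a deliberate simplification: ISC dhcpd rebalances free leases between the peers dynamically, uses a hash of the client identifier to decide which peer answers, and only takes over its partner's free addresses after entering the partner-down state. Class and function names are hypothetical.

```python
from __future__ import annotations

import ipaddress


class FailoverPeer:
    """One DHCP server of a failover pair (toy model, not ISC dhcpd's algorithm)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.free: list[str] = []          # this peer's share of the free addresses
        self.leases: dict[str, str] = {}   # MAC -> IP, mirrored on the partner
        self.partner: FailoverPeer | None = None

    def allocate(self, mac: str) -> str:
        if mac in self.leases:             # known client (e.g. renewal): same address
            return self.leases[mac]
        if not self.free:
            raise RuntimeError(f"{self.name}: no free addresses left in its share")
        ip = self.free.pop(0)
        self.leases[mac] = ip
        if self.partner is not None:       # "binding update" keeps both databases in sync
            self.partner.leases[mac] = ip
        return ip


def make_pair(first: str, last: str) -> tuple[FailoverPeer, FailoverPeer]:
    lo, hi = int(ipaddress.IPv4Address(first)), int(ipaddress.IPv4Address(last))
    addresses = [str(ipaddress.IPv4Address(i)) for i in range(lo, hi + 1)]
    primary, secondary = FailoverPeer("primary"), FailoverPeer("secondary")
    primary.partner, secondary.partner = secondary, primary
    # Split the free addresses so the two peers can never hand out the same one.
    primary.free, secondary.free = addresses[0::2], addresses[1::2]
    return primary, secondary


primary, secondary = make_pair("192.0.2.100", "192.0.2.103")
print(primary.allocate("52:54:00:00:00:01"))    # 192.0.2.100
print(secondary.allocate("52:54:00:00:00:02"))  # 192.0.2.101
secondary.partner = None                        # the primary fails; no more updates
print(secondary.allocate("52:54:00:00:00:01"))  # 192.0.2.100 (lease known from sync)
```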

If one of the two servers fails, the other one keeps serving the network. The pool-based solution thus offers the same kind of availability as a solution based on a cluster manager, but with considerably less effort and complexity. As with VRRP, failover is built into the protocol itself; combining VRRP on one hand and a DHCP pool on the other gives admins a complete high-availability firewall/router without the disadvantages of Pacemaker.

Variant 4: Database High Availability with Galera

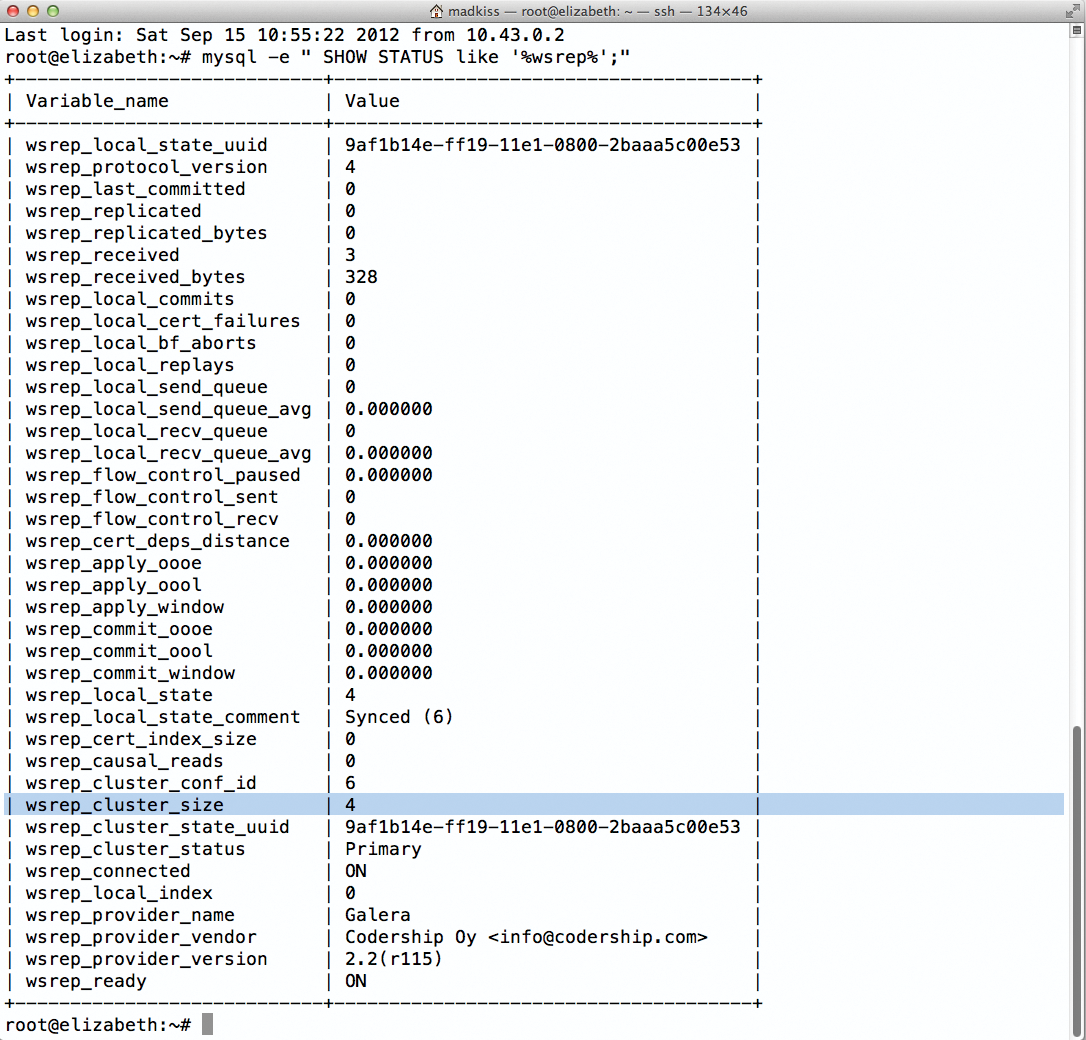

Galera is sort of the latest craze with regard to databases and the way in which they implement high availability (Figure 3). The solution is based on MySQL; essentially, it extends MySQL in a modular way by adding a multi-master feature. Although Galera is not the only tool that can do this, it is, at the moment, the one with the biggest buzz factor. Galera is a seminal new technology because it solves almost all the problems inherent in high availability.

The first problem with high-availability setups is usually highly available data storage. Classic setups resort to tools like DRBD, but these have limitations: Most programs of this kind do not scale particularly well horizontally and do not go beyond two nodes. Galera has built-in redundant data storage and ensures that data is always written across multiple nodes to the local MySQL instances before it declares a write to be complete. As a result, each record always exists several times; if one node fails, the same data is still available elsewhere.
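Reduced to its essence, this guarantee means that a write is acknowledged only once every live member of the cluster holds a copy, so any surviving node can answer reads after a failure. The toy model below shows just that property; Galera's real path (a totally ordered broadcast of write sets, certification on every node, and so-called virtually synchronous application) is considerably more involved, and the class names are hypothetical.

```python
from __future__ import annotations


class DbNode:
    def __init__(self, name: str) -> None:
        self.name = name
        self.rows: dict[str, str] = {}
        self.up = True


class ReplicatedStore:
    """Toy model: a write is 'complete' only once every live member holds a copy."""

    def __init__(self, names: list[str]) -> None:
        self.members = [DbNode(n) for n in names]

    def write(self, key: str, value: str) -> int:
        # Drop failed nodes from the membership before replicating.
        self.members = [m for m in self.members if m.up]
        if not self.members:
            raise RuntimeError("no live members")
        for m in self.members:
            m.rows[key] = value
        return len(self.members)  # number of copies when the write is acknowledged

    def read(self, key: str) -> str:
        # Any live member can serve the read because all of them hold the same data.
        for m in self.members:
            if m.up:
                return m.rows[key]
        raise RuntimeError("no live members")


store = ReplicatedStore(["db1", "db2", "db3"])
print(store.write("user:1", "alice"))  # 3
store.members[0].up = False            # db1 crashes
print(store.read("user:1"))            # alice
```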

The second typical HA problem is the question of access to the software. Classic MySQL replication uses a master/slave system in which writes only occur on the respective master server. Galera is different: Each MySQL instance that is part of a Galera cluster is a full MySQL server that can handle both reading and writing data.
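Writing on several masters at once raises the question of conflicts. Galera resolves them with certification-based replication: Write sets are delivered to all nodes in the same global order, and a transaction that modified a row already changed by an earlier-ordered transaction it could not see is rolled back on every node (the client typically receives a deadlock error and should retry). The sketch models that rule with a single certifier object standing in for the identical check each node performs; names and data structures are assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Txn:
    node: str
    snapshot: int                                   # global seqno the transaction started from
    writes: dict[str, str] = field(default_factory=dict)


class Certifier:
    """Toy certification: reject a write set if a commit after its snapshot touched the same key."""

    def __init__(self) -> None:
        self.seqno = 0
        self.last_write: dict[str, int] = {}        # key -> seqno of last committed write
        self.data: dict[str, str] = {}

    def begin(self, node: str) -> Txn:
        return Txn(node, self.seqno)

    def commit(self, txn: Txn) -> bool:
        # Every node runs this check on the same ordered stream, so all reach the same verdict.
        if any(self.last_write.get(k, 0) > txn.snapshot for k in txn.writes):
            return False                            # conflict: rolled back everywhere
        self.seqno += 1
        for k, v in txn.writes.items():
            self.last_write[k] = self.seqno
            self.data[k] = v
        return True


cluster = Certifier()
t1 = cluster.begin("node-a")   # both transactions start from the same snapshot
t2 = cluster.begin("node-b")
t1.writes["stock:42"] = "9"
t2.writes["stock:42"] = "8"
print(cluster.commit(t1))      # True  (ordered first)
print(cluster.commit(t2))      # False (conflicts with t1)
```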

Depending on the client software, this design has one inherent disadvantage: Most clients only let you specify a single host when you choose a database. The Galera Load Balancer (glbd) provides a remedy by accepting all available Galera servers as back ends and forwarding connections from the outside to a working MySQL server.
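Where no balancer is in place, a client or a small wrapper can at least fall back to the next server in a list. The hypothetical helper below picks the first Galera node that accepts a TCP connection. That is only a shallow check: A node can accept connections without being synced, so a more thorough test would also query its state, for example via the `wsrep_local_state_comment` status variable.

```python
from __future__ import annotations

import socket


def first_reachable(backends: list[tuple[str, int]], timeout: float = 1.0) -> tuple[str, int]:
    """Return the first (host, port) in list order that accepts a TCP connection."""
    errors: list[str] = []
    for host, port in backends:
        try:
            # Connect and immediately close again: this is only a liveness probe.
            with socket.create_connection((host, port), timeout=timeout):
                return host, port
        except OSError as exc:
            errors.append(f"{host}:{port} ({exc})")
    raise ConnectionError("no Galera node reachable: " + ", ".join(errors))


# Hypothetical three-node cluster on the default MySQL port:
# host, port = first_reachable([("192.0.2.31", 3306), ("192.0.2.32", 3306), ("192.0.2.33", 3306)])
```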

The Galera load balancer suffers from the same problem as HAProxy, discussed previously: The glbd daemon itself can be difficult to make highly available without Pacemaker. However, a Pacemaker setup that only manages one service IP and glbd itself is comparatively easy to maintain and uncomplicated. Compared with MySQL replication combined with Corosync and Pacemaker, Galera is certainly more elegant and better designed, and it scales seamlessly, especially horizontally, which is fairly difficult with typical MySQL replication. (See the "Special Case: Ceph" box for another alternative.)

Conclusions

If you prefer not to use Pacemaker, you have several options for working around the unloved cluster manager. For newer protocols, finding an alternative is usually not a problem; Galera is an excellent example.

Things get complicated when you need to make a typical legacy protocol highly available. Although load balancers provide good service, the load balancer itself is a single point of failure, which typically lets Pacemaker creep back into the setup. However, Pacemaker setups for failing over a load balancer are not very complex, and far simpler than setups that rely exclusively on Pacemaker.

Pacemaker is definitely not dead: In fact, it will be necessary for quite a while, especially for legacy components. However, the solutions featured in this article, with Galera and Ceph first and foremost, clearly show that the IT world is headed elsewhere.