Monitoring HPC Systems

Nerve Center

When you know better, you do better – Maya Angelou

Monitoring clusters and understanding how the cluster is performing is key to helping users better run their applications and to optimizing the use of cluster resources.

Such information is valuable for a variety of reasons, including understanding how the cluster is being used, how much of the processing capability is being used, how much of the memory is being used for user applications, and what the network is doing and whether it is being used for applications. This information can help you understand where you need to make changes in the configuration of the current cluster to improve the utilization of resources. Moreover, this information can help you plan for the next cluster.

In a past blog post [1], I looked at monitoring from the perspective of understanding what is happening in the system (metrics) and why it matters how frequently you collect those metrics.

If you put several cluster admins in a room together (e.g., the BeoBash [2]), and you ask, "What is the best way to monitor a cluster?" you will have to duck and cover pretty quickly from the huge number of opinions and the great passion behind the answers. Having so many options and opinions is not a bad thing, but you need to sort through the ideas to find something that works for you and your situation.

In two further blog posts [3] [4], I wrote some simple scripts to measure metrics on a single server as a starting point for use in a cluster. This code measured the processes of interest by collecting data on a per-node basis.

Now it's time to look at monitoring frameworks where, I hope, the scripts will be useful for custom monitoring and perhaps provide a nice visual representation of the state of the cluster.

A non-exhaustive list of monitoring frameworks that people use to monitor system processes includes the following [5]-[12]:

- Monitorix

- Munin

- Cacti

- Ganglia

- Zabbix

- Zenoss Community

- Observium

- GKrellM

As you can see, you have a wide range of options, including commercial tools.

Ganglia is arguably the most popular framework, particularly in the HPC world, but it is also gaining popularity in the Big Data and private cloud world. In this article, I present Ganglia as a monitoring framework.

A Few Words

Ganglia has been in use since about 2001. The HPC world adopted it because of the sheer size of its systems, and in the past few years, the Big Data and Hadoop communities have also been using it a great deal, primarily for its scalability and extensibility. The OpenStack and cloud communities frequently use it, too.

Ganglia has grown over the years and has gained the ability to monitor very large systems – into the 1,000-node range – as well as the ability to monitor close to 1,000 metrics for each system. You can run Ganglia on a number of different platforms, making it truly flexible. Additionally, it can use custom metrics written in a variety of languages including C, C++, and Python.

Ganglia has also gained a new web interface with custom graphs. At a high level, Ganglia comprises three parts. The first part is gmond, the daemon that gathers metrics on each server to be monitored. Gmond shares its metrics with its cluster peers using a simple "listen/announce" protocol, encoding them with XDR (External Data Representation). By default, the announcements are sent via multicast on port 8649. At the same time, each gmond listens to the announcements from its peers, so every node knows the metrics of all of the other nodes. The advantage of this architecture is that the master node only needs to communicate with one node instead of with every single node. It also makes Ganglia more robust: If a node dies, you can simply ask another node for the information.
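To see the result of listen/announce for yourself, you can connect to the TCP port of any gmond (8649 by default); it writes an XML snapshot of every host it has heard from and then closes the connection. The following Python 3 sketch reads and summarizes that snapshot. The function names are hypothetical, and the element and attribute names (CLUSTER, HOST, METRIC, NAME, VAL) are the ones gmond 3.x emits as far as I know, so check them against your own output.

```python
import socket
import xml.etree.ElementTree as ET


def fetch_gmond_xml(host="localhost", port=8649, timeout=5.0):
    """Read the XML snapshot gmond writes to every client of its TCP channel."""
    chunks = []
    with socket.create_connection((host, port), timeout=timeout) as sock:
        while True:
            data = sock.recv(65536)
            if not data:  # gmond closes the connection once the dump is sent
                break
            chunks.append(data)
    return b"".join(chunks)


def parse_gmond_xml(xml_bytes):
    """Return {cluster: {host: {metric: value}}}; values stay strings as in the XML."""
    root = ET.fromstring(xml_bytes)
    clusters = {}
    for cluster in root.iter("CLUSTER"):
        hosts = clusters.setdefault(cluster.get("NAME"), {})
        for host in cluster.iter("HOST"):
            hosts[host.get("NAME")] = {
                metric.get("NAME"): metric.get("VAL")
                for metric in host.iter("METRIC")
            }
    return clusters
```

Pointed at any single node, for example parse_gmond_xml(fetch_gmond_xml("192.168.1.4")), the result should include every host in the cluster, which is exactly why the master only needs to talk to one gmond.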

The second part is gmetad, which you install on the master node. Given a list of gmond nodes, it polls a gmond-equipped node in the cluster and gathers the data. This data is stored using RRDtool [13]. The third piece is gweb, the Ganglia web interface. This second-generation web interface lets you create custom graphs to match your situation and needs.
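The robustness argument can be sketched in a few lines. This is not gmetad's code; it is a minimal illustration assuming gmetad-like behavior, in which the listed gmond addresses are tried in order and the first one that answers supplies the data for the whole cluster. The fetch parameter is a hypothetical stand-in for whatever actually reads gmond's XML.

```python
DEFAULT_GMOND_PORT = 8649


def poll_data_source(addresses, fetch):
    """Return (address, payload) from the first gmond in `addresses` that answers.

    `addresses` holds "host" or "host:port" strings; `fetch(host, port)` is any
    callable that returns bytes or raises OSError when the node is unreachable.
    """
    errors = []
    for address in addresses:
        host, _, port = address.partition(":")
        try:
            return address, fetch(host, int(port) if port else DEFAULT_GMOND_PORT)
        except OSError as exc:
            errors.append("%s (%s)" % (address, exc))  # node down: try the next one
    raise RuntimeError("no gmond answered: " + ", ".join(errors))
```

Because every gmond holds the whole cluster's state, it does not matter which address answers; only if all of them fail does the cluster go dark in the web interface.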

Installing on the Master Node

When working with a new tool, you have two choices: build from source or install pre-built binaries. Typically, I like to build from source the first time I use a new tool, so I can better understand the dependencies and how the tool is built. I spent some time building Ganglia, using a blog post by Sachin Sharma as a guide [14]. You can find my trail of tears in the ganglia-devel mailing list. It's not as easy as it seems, particularly if you want to include the Python modules. Additionally, it appears that no one really uses the default installation locations when building the code.

After a great deal of frustration, I finally gave up on building Ganglia by myself. I hate to admit defeat, but Ganglia takes the first round. Fortunately, one of the Ganglia developers, Vladimir Vuksan [15], has some pre-built binaries that saved my bacon. The specific versions of the system software I used are listed in Table 1.

Table 1: Software

| Software | Version |
|---|---|
| CentOS | 6.5 |
| Ganglia | 3.6.0 |
| Ganglia web | 3.5.12 |
| Confuse | 2.7 |
| RRDtool | 1.3.8 |

Before installing any binaries, I try to install the prerequisites rather than totally rely on RPM or Yum to resolve dependencies. I think this forces me to pay closer attention to the software rather than installing things willy-nilly. Thus, I installed the following packages:

yum install php
yum install httpd
yum install apr
yum install libconfuse
yum install expat
yum install pcre
yum install libmemcached
yum install rrdtool

The php and httpd packages are used for the web interface. I also recommend turning off SELinux (you can Google how to do this). I also turned off iptables for the purposes of this exercise. If you need to keep iptables turned on, please refer to Sharma's blog [14] and go to the bottom for details on how to configure iptables rules for Ganglia. If you get stuck, please email the Ganglia mailing list and ask for help. Now I'm ready to install Ganglia!

Configure/Compile/Install

Vladimir Vuksan has also created a set of CentOS 6 RPMs for the latest version of Ganglia [16]. These RPMs install on CentOS 6.5 and make your life a great deal easier. I just used the rpm command and pointed it at the URLs for the RPMs. For the master node, I started with gmond (Listing 1). Next, I installed gmetad on the master node (Listing 2). Remember, for a basic configuration you only need to install gmetad on one node, typically the master node.

Listing 1: gmond Modules

rpm -ivh http://vuksan.com/centos/RPMS-6/x86_64/ganglia-gmond-modules-python-3.6.0-1.x86_64.rpm \
    http://vuksan.com/centos/RPMS-6/x86_64/libganglia-3.6.0-1.x86_64.rpm \
    http://vuksan.com/centos/RPMS-6/x86_64/ganglia-gmond-3.6.0-1.x86_64.rpm
Retrieving http://vuksan.com/centos/RPMS-6/x86_64/ganglia-gmond-modules-python-3.6.0-1.x86_64.rpm
Retrieving http://vuksan.com/centos/RPMS-6/x86_64/libganglia-3.6.0-1.x86_64.rpm
Retrieving http://vuksan.com/centos/RPMS-6/x86_64/ganglia-gmond-3.6.0-1.x86_64.rpm
Preparing...                ########################################### [100%]
   1:libganglia             ########################################### [ 33%]
   2:ganglia-gmond          ########################################### [ 67%]
   3:ganglia-gmond-modules-p########################################### [100%]

Listing 2: gmetad

[root@home4 RPMS]# rpm -ivh http://vuksan.com/centos/RPMS-6/x86_64/ganglia-gmetad-3.6.0-1.x86_64.rpm
Retrieving http://vuksan.com/centos/RPMS-6/x86_64/ganglia-gmetad-3.6.0-1.x86_64.rpm
Preparing...                ########################################### [100%]
   1:ganglia-gmetad         ########################################### [100%]

Ganglia installed very easily using these binaries, but I still had to do some configuration before declaring victory. Before configuring Ganglia, however, I wanted to find out where certain components from the RPMs landed in the filesystem. The binaries, gmond and gmetad, were installed in /usr/sbin. (Note: If you build from source, they go into /usr/local/sbin.) You can use the command whereis gmond to see where it is installed.

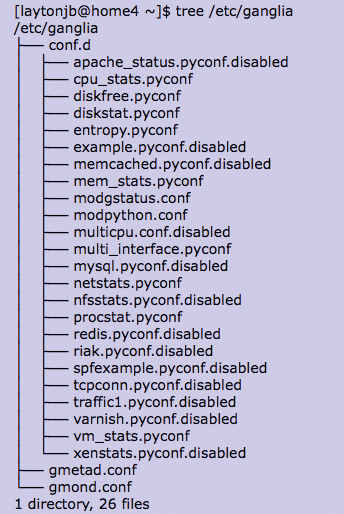

The man pages were installed in the usual location, /usr/share/man. You can check this by running man gmond. The RPMs also installed key init scripts into /etc/rc.d/init.d/ that are used for starting, stopping, restarting, and checking the status of the gmond and gmetad processes. The scripts use Ganglia configuration files located in /etc/ganglia. To see the files in this directory, use the tree command (Figure 1). (Note: You may have to install this command with yum install tree.)

Two files deserve your attention: gmond.conf and gmetad.conf. The conf.d subdirectory contains several configuration files that tell Ganglia about the Python modules used to collect information about the system.

The file /etc/ganglia/conf.d/modpython.conf contains the details of the Python metric modules. On my system, this file looks like Listing 3. This file tells you the Python metric module code is stored in /usr/lib64/ganglia/python_modules. A quick peek at this directory is shown in Listing 4. I won't list any of the Python code, but this is where you will put any Python metrics you write. Before you can use the Python metrics, you have to tell Ganglia about them in the *.pyconf files in /etc/ganglia/conf.d (see Figure 1). In these files, you define the metrics and how often to collect them (remember my blog post [1] about paying attention to how often you collect monitoring information).

Listing 3: modpython.conf

[laytonjb@home4 ~]$ more /etc/ganglia/conf.d/modpython.conf
/*
  params - path to the directory where mod_python
           should look for python metric modules

  the "pyconf" files in the include directory below
  will be scanned for configurations for those modules
*/
modules {
  module {
    name = "python_module"
    path = "modpython.so"
    params = "/usr/lib64/ganglia/python_modules"
  }
}

include ("/etc/ganglia/conf.d/*.pyconf")

Listing 4: python_modules Directory

[laytonjb@home4 ~]$ ls -s /usr/lib64/ganglia/python_modules
total 668
16 apache_status.py*   4 example.pyo           8 netstats.pyc    16 tcpconn.py
12 apache_status.pyc  16 memcached.py          8 netstats.pyo     8 tcpconn.pyc
12 apache_status.pyo  12 memcached.pyc        12 nfsstats.py      8 tcpconn.pyo
 8 DBUtil.py*         12 memcached.pyo         8 nfsstats.pyc     8 traffic1.py
12 DBUtil.pyc         16 mem_stats.py          8 nfsstats.pyo     8 traffic1.pyc
12 DBUtil.pyo          8 mem_stats.pyc        16 procstat.py      8 traffic1.pyo
 8 diskfree.py         8 mem_stats.pyo        12 procstat.pyc    32 varnish.py*
 4 diskfree.pyc        8 multidisk.py         12 procstat.pyo    16 varnish.pyc
 4 diskfree.pyo        4 multidisk.pyc         4 redis.py*       16 varnish.pyo
12 diskstat.py         4 multidisk.pyo         4 redis.pyc       28 vm_stats.py
12 diskstat.pyc        8 multi_interface.py    4 redis.pyo       12 vm_stats.pyc
12 diskstat.pyo        8 multi_interface.pyc  12 riak.py*        12 vm_stats.pyo
 4 entropy.py          8 multi_interface.pyo   8 riak.pyc         4 xenstats.py*
 4 entropy.pyc        28 mysql.py*             8 riak.pyo         4 xenstats.pyc
 4 entropy.pyo        24 mysql.pyc             8 spfexample.py    4 xenstats.pyo
 4 example.py         24 mysql.pyo             4 spfexample.pyc
 4 example.pyc         8 netstats.py           4 spfexample.pyo
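As a sketch of what a metric module in /usr/lib64/ganglia/python_modules can look like, here is a hypothetical uptime_hours module. It follows the conventional modpython interface: gmond calls metric_init(params) once and expects a list of descriptor dictionaries back, calls each descriptor's call_back with the metric name on every collection, and calls metric_cleanup() at shutdown. The metric, the file names, and the .pyconf values in the docstring are illustrative assumptions; the modules shipped in Listing 4 are the best reference for your version.

```python
"""uptime_hours.py: a hypothetical gmond Python metric module (sketch).

Assumed companion file /etc/ganglia/conf.d/uptime_hours.pyconf:

    modules {
      module {
        name = "uptime_hours"
        language = "python"
      }
    }
    collection_group {
      collect_every = 60
      time_threshold = 300
      metric {
        name = "uptime_hours"
        title = "Uptime"
        value_threshold = 1
      }
    }
"""

UPTIME_FILE = "/proc/uptime"  # first field: seconds since boot

descriptors = []


def read_uptime_hours(path=UPTIME_FILE):
    """Return the system uptime in hours."""
    with open(path) as f:
        return float(f.read().split()[0]) / 3600.0


def uptime_handler(name):
    """Callback gmond invokes with the metric name on each collection."""
    return read_uptime_hours()


def metric_init(params):
    """Called once when gmond loads the module; returns the metric descriptors."""
    global descriptors
    descriptors = [{
        "name": "uptime_hours",
        "call_back": uptime_handler,
        "time_max": 90,          # maximum expected seconds between updates
        "value_type": "float",
        "units": "hours",
        "slope": "both",         # gauge-style value, not a counter
        "format": "%.2f",
        "description": "Time since the last boot",
        "groups": "system",
    }]
    return descriptors


def metric_cleanup():
    """Called when gmond shuts down; nothing to release here."""
    pass
```

The system Python on CentOS 6 is 2.6, so the sketch sticks to syntax that works there as well as under Python 3.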

The RPMs also add gmond and gmetad to chkconfig, so you can control whether they start on boot or have to be started manually. You can check this by running the chkconfig --list command and examining the output (Listing 5). I cut out a lot of the output, but as you can tell, gmetad and gmond are configured to start the next time the system boots into runlevels 2 through 5 (i.e., runlevels 2 to 5 are on). The Ganglia libraries were installed in /usr/lib64/ (Listing 6). Installing the RPMs does not start the gmond and gmetad services; they start when the system is rebooted. In the next section, I begin to configure Ganglia by editing the files gmond.conf and gmetad.conf.

Listing 5: chkconfig --list

[laytonjb@home4 ~]$ chkconfig --list
...
gmetad          0:off   1:off   2:on    3:on    4:on    5:on    6:off
gmond           0:off   1:off   2:on    3:on    4:on    5:on    6:off
...

Listing 6: Ganglia Libraries

[laytonjb@home4 ~]$ ls -lstar /usr/lib64/libganglia*
104 -rwxr-xr-x 1 root root 106096 May  7  2013 /usr/lib64/libganglia-3.6.0.so.0.0.0*
  0 lrwxrwxrwx 1 root root     25 Feb 10 17:29 /usr/lib64/libganglia-3.6.0.so.0 -> libganglia-3.6.0.so.0.0.0*
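To check the boot configuration from a script rather than by eye, note that each SysV line of chkconfig --list output is a service name followed by seven runlevel:state fields. A minimal sketch (the function name is hypothetical):

```python
def runlevels_on(chkconfig_output):
    """Map each SysV service in `chkconfig --list` output to its runlevels that are 'on'."""
    services = {}
    for line in chkconfig_output.splitlines():
        fields = line.split()
        # A SysV service line is the name plus seven "N:on"/"N:off" fields;
        # anything else (xinetd sections, elided "..." lines) is skipped.
        if len(fields) != 8 or not all(":" in f for f in fields[1:]):
            continue
        services[fields[0]] = [
            int(level)
            for level, state in (f.split(":", 1) for f in fields[1:])
            if state == "on"
        ]
    return services
```

Fed the lines in Listing 5, it reports runlevels [2, 3, 4, 5] for both gmetad and gmond.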

Configuring

Some tweaks (edits) need to be made to the gmetad and gmond configuration files. The changes to /etc/ganglia/gmetad.conf are fairly easy. First, look for a line in the file that reads

data_source "my cluster" localhost

and change it to

data_source "Ganglia Test Setup" 192.168.1.4

where 192.168.1.4 is the IP address of the master node. You can give your Ganglia cluster any name you want; I chose "Ganglia Test Setup" as an example.
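As I read the comments in the stock gmetad.conf, a data_source line consists of the keyword, a quoted name, an optional polling interval in seconds (15 if omitted), and one or more host or host:port addresses (port 8649 if omitted). A small parser makes those defaults explicit; it is a sketch under those assumptions, not gmetad's own parser, and parse_data_source is a hypothetical name.

```python
import shlex

DEFAULT_POLL_SECONDS = 15   # assumed default when the interval is omitted
DEFAULT_GMOND_PORT = 8649   # assumed default when an address has no ":port"


def parse_data_source(line):
    """Split a data_source line into (name, interval, [(host, port), ...])."""
    tokens = shlex.split(line, comments=True)  # honors the quoted name and '#' comments
    if len(tokens) < 3 or tokens[0] != "data_source":
        raise ValueError("not a data_source line: %r" % line)
    name, rest = tokens[1], tokens[2:]
    interval = DEFAULT_POLL_SECONDS
    if rest[0].isdigit():  # an IP address or host name is never all digits
        interval = int(rest.pop(0))
    hosts = []
    for address in rest:
        host, _, port = address.partition(":")
        hosts.append((host, int(port) if port else DEFAULT_GMOND_PORT))
    if not hosts:
        raise ValueError("data_source %r lists no hosts" % name)
    return name, interval, hosts
```

For the line above, parse_data_source('data_source "Ganglia Test Setup" 192.168.1.4') returns ("Ganglia Test Setup", 15, [("192.168.1.4", 8649)]).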

The following change needs to be made to the file /etc/ganglia/gmond.conf: A section in the file starts with cluster {. In that section, assign the variable name anything you like; just be sure it is in quotes and don't use any quotes or other unusual characters in the name itself. I changed mine like this:

name = "Ganglia Test Setup"

That's about it for configuring Ganglia on the master node. When I start adding Ganglia clients, I'll have to come back and edit gmetad.conf to add client IP addresses, but that happens later in the article. At this point, I have a choice: I can proceed with installing the Ganglia web interface, or I could test gmond to make sure it's collecting data on the master node. I tend to be a little more conservative and want to run a test before jumping into the deep end of the pool.

Testing gmond and gmetad

To run and test (debug) gmond from the command line, I'll run it "by hand," telling it that I'm "debugging." Sometimes this process produces a great deal of output, so I'll capture it using the script command (Listing 7). Remember to use Ctrl+C (^c) to kill gmond and then Ctrl+D (^d) to stop the script.

Listing 7: Testing gmond

[root@home4 laytonjb]# cd /tmp
[root@home4 tmp]# script gmond.out
[root@home4 tmp]# gmond -d 5 -c /etc/ganglia/gmond.conf
[root@home4 tmp]# ^c
[root@home4 tmp]# ^d

Take a look at the top of the file and you should see some output that looks like Listing 8, which indicates that gmond is working correctly. If everything is running correctly – at least as far as you can tell – then start up the gmetad and gmond daemons and make sure they function correctly (Listing 9). You should see the OK output from these commands (one from each). If you don't, you have a problem and should go back through the steps.

Listing 8: gmond Test Output

[root@home4 tmp]# gmond -d 5 -c /etc/ganglia/gmond.conf
loaded module: core_metrics
loaded module: cpu_module
loaded module: disk_module
loaded module: load_module
loaded module: mem_module
loaded module: net_module
loaded module: proc_module
loaded module: sys_module
loaded module: python_module
udp_recv_channel mcast_join=239.2.11.71 mcast_if=NULL port=8649 bind=239.2.11.71 buffer=0
socket created, SO_RCVBUF = 124928
tcp_accept_channel bind=NULL port=8649 gzip_output=0
udp_send_channel mcast_join=239.2.11.71 mcast_if=NULL host=NULL port=8649
Unable to find the metric information for 'procs_blocked'. Possible that the module has not been loaded.
Unable to find the metric information for 'procs_created'. Possible that the module has not been loaded.
Unable to find any metric information for 'softirq_(.+)'. Possible that a module has not been loaded.
metric 'cpu_user' being collected now
[tcp] Starting TCP listener thread...
...
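Instead of scrolling through gmond.out by eye, you can pull out the two things that matter most: which modules loaded and which metrics gmond could not find. Here is a short sketch keyed on the message formats shown in Listing 8 (other versions may word them differently; the function name is hypothetical). In Listing 8, the missing-metric warnings did not stop gmond from working, so treat them as hints rather than failures.

```python
import re

LOADED_RE = re.compile(r"^loaded module: (\S+)")
MISSING_RE = re.compile(r"Unable to find (?:the|any) metric information for '([^']+)'")


def summarize_gmond_debug(text):
    """Return (modules loaded, metrics gmond could not find) from `gmond -d 5` output."""
    loaded, missing = [], []
    for line in text.splitlines():  # also copes with the \r\n that script records
        line = line.strip()
        match = LOADED_RE.match(line)
        if match:
            loaded.append(match.group(1))
            continue
        match = MISSING_RE.search(line)
        if match:
            missing.append(match.group(1))
    return loaded, missing
```

Usage: summarize_gmond_debug(open("/tmp/gmond.out", errors="replace").read()).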

Listing 9: Starting Daemons

[root@home4 ~]# /etc/rc.d/init.d/gmond start
Starting GANGLIA gmond:                                    [  OK  ]
[root@home4 ~]# /etc/rc.d/init.d/gmetad start
Starting GANGLIA gmetad:                                   [  OK  ]

If you got two OKs, you can also check whether the processes are running and the ports are configured correctly (Listing 10). Notice that gmond is listening on port 8649 and gmetad on ports 8651 and 8652, so everything's good at this point. Now I'm ready to install the web interface!

Listing 10: Checking Processes and Ports

[root@home4 ~]# ps -ef | grep -v grep | grep gm
nobody   21637     1  0 18:12 ?        00:00:00 /usr/sbin/gmond
nobody   21656     1  0 18:12 ?        00:00:00 /usr/sbin/gmetad
[root@home4 ~]# netstat -plane | egrep 'gmon|gme'
tcp    0   0 0.0.0.0:8651        0.0.0.0:*          LISTEN       99   253012   21656/gmetad
tcp    0   0 0.0.0.0:8652        0.0.0.0:*          LISTEN       99   253013   21656/gmetad
tcp    0   0 0.0.0.0:8649        0.0.0.0:*          LISTEN       99   252721   21637/gmond
udp    0   0 192.168.1.4:47559   239.2.11.71:8649   ESTABLISHED  99   252723   21637/gmond
udp    0   0 239.2.11.71:8649    0.0.0.0:*                       99   252719   21637/gmond
unix   2   [ ]   DGRAM   252725   21637/gmond
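Listing 10 shows gmond listening on TCP port 8649 and gmetad on 8651 and 8652, which I believe are gmetad's default xml_port and interactive_port. A connection test is a quick way to confirm those ports from another machine or a cron job. A sketch, with hypothetical names:

```python
import socket

# Ports seen in Listing 10: gmond's TCP channel, then gmetad's two TCP ports.
GANGLIA_PORTS = {8649: "gmond", 8651: "gmetad xml_port", 8652: "gmetad interactive_port"}


def port_is_listening(host, port, timeout=1.0):
    """True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


def check_ganglia_ports(host="127.0.0.1", ports=GANGLIA_PORTS):
    """Return {"8649 (gmond)": True, ...} for each expected Ganglia port."""
    return {"%d (%s)" % (port, name): port_is_listening(host, port)
            for port, name in sorted(ports.items())}
```

Keep in mind that connecting to 8649 makes gmond send its full XML dump, which is harmless but not free on a large cluster.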

Web Interface

Ganglia has a second-generation web interface that is very flexible, including the ability to define your own charts. It uses RRDtool as the database for the charts, a common theme in the monitoring world.

You can download it from SourceForge [17] or get it from the Ganglia website. I used version 3.5.12, the latest at the time of writing. The web interface requires httpd and PHP, so be sure you have installed those.

Download the compressed tarball, then uncompress and untar it. The README points to a URL with installation instructions. For my installation, I edited the Makefile and made just four changes:

(1) At the top of the file, change the GDESTDIR line to:

GDESTDIR = /var/www/html/ganglia

This is where the Ganglia web interface will be installed.

(2) Change the GWEB_STATEDIR line to:

GWEB_STATEDIR = /var/lib/ganglia-web

(3) Change the GMETAD_ROOTDIR line to:

GMETAD_ROOTDIR = /var/lib/ganglia

(4) Change the APACHE_USER line to:

APACHE_USER = apache
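If you rebuild gweb often, the four Makefile edits above can be scripted. This sketch rewrites simple VAR = value assignments in place and fails loudly if a variable is missing, so a changed Makefile layout in a newer gweb release is not silently ignored. The set_make_vars name is hypothetical.

```python
import re

# The four settings changed above for this CentOS 6.5 setup.
GWEB_SETTINGS = {
    "GDESTDIR": "/var/www/html/ganglia",
    "GWEB_STATEDIR": "/var/lib/ganglia-web",
    "GMETAD_ROOTDIR": "/var/lib/ganglia",
    "APACHE_USER": "apache",
}


def set_make_vars(makefile_text, settings=GWEB_SETTINGS):
    """Rewrite the first 'VAR = value' line for each variable; raise if one is absent."""
    for var, value in settings.items():
        # [ \t]* rather than \s* so an empty value can never swallow the next line
        pattern = re.compile(r"^(%s[ \t]*[:?]?=[ \t]*).*$" % re.escape(var), re.MULTILINE)
        makefile_text, count = pattern.subn(
            lambda m, v=value: m.group(1) + v, makefile_text, count=1)
        if count == 0:
            raise KeyError("%s not found in Makefile" % var)
    return makefile_text
```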

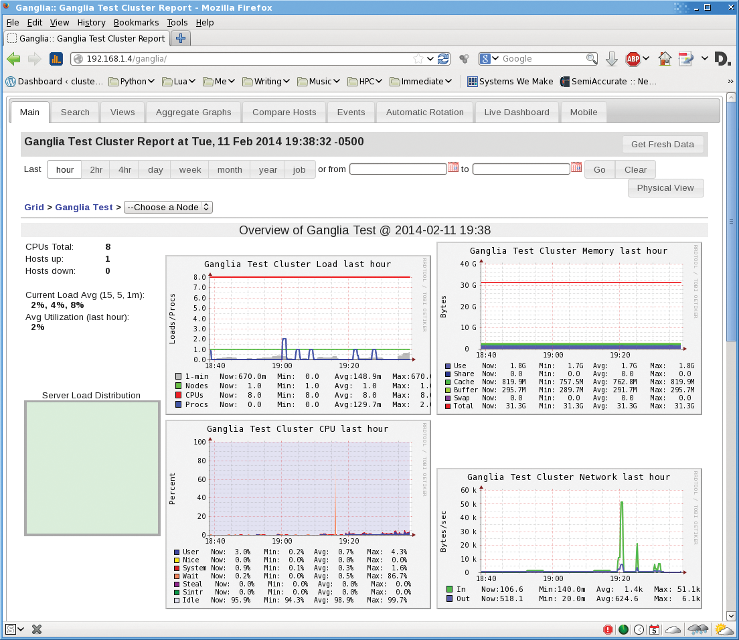

Once these changes are made, you can simply run make install to install the Ganglia web pieces. Now comes the big test. In your browser, open the Ganglia web page at http://192.168.1.4/ganglia (recall that in gmetad.conf I told it that the data source was 192.168.1.4). You should see something like the image in Figure 2. Notice that on the left-hand side of the page, near the top, the number of Hosts up: is 1, and the host has eight CPUs. Plus, the charts are populated. (I took the screen capture after letting it run a while, so the charts actually had real data.)

Remember that the default refresh or polling interval is 15 seconds, so it might take a couple of minutes for the charts to show you much. Be sure to look at the data below the charts. If the values are reasonable, then most likely things are working correctly.

Ganglia Clients

As soon as you have the master node up and running, it's time to start getting other nodes to report their information to the master. This isn't difficult; I just installed the gmond RPMs on the client node.

I used the exact same command I did for installing gmond on the master node. The output looks something like Listing 11. I did have to make sure I had libconfuse installed on the client node before installing the RPMs.

Listing 11: Installing gmond on Clients

Retrieving http://vuksan.com/centos/RPMS-6/x86_64/ganglia-gmond-modules-python-3.6.0-1.x86_64.rpm
Retrieving http://vuksan.com/centos/RPMS-6/x86_64/libganglia-3.6.0-1.x86_64.rpm
Retrieving http://vuksan.com/centos/RPMS-6/x86_64/ganglia-gmond-3.6.0-1.x86_64.rpm
Preparing...                ########################################### [100%]
   1:libganglia             ########################################### [ 33%]
   2:ganglia-gmond          ########################################### [ 67%]
   3:ganglia-gmond-modules-p########################################### [100%]

The next step is easy; just start gmond:

[root@test8 ~]# /etc/rc.d/init.d/gmond start
Starting GANGLIA gmond:                                    [  OK  ]
[root@test8 ~]# /etc/rc.d/init.d/gmond status
gmond (pid 3250) is running...

At this point, gmond should be running on the client node. You can check this by searching for it in the process table with the following command:

ps -ef | grep -i gmond

If you see it, then gmond should be running fine. If not, check /var/log/messages for anything that might indicate you have problems. If you don't find anything there, then try running gmond interactively with

/usr/sbin/gmond -d 5 -c /etc/ganglia/gmond.conf

and look for problems. (Maybe you are missing something on the client system?)
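A plain ps -ef | grep -i gmond can also match the grep process itself (Listing 10 avoids this with grep -v grep). The sketch below sidesteps the problem by reading /proc/<pid>/cmdline directly; it assumes the Linux /proc layout, and find_processes is a hypothetical name.

```python
import os


def find_processes(name, proc_root="/proc"):
    """Return sorted PIDs whose argv[0] basename equals `name` (Linux /proc layout)."""
    pids = []
    for entry in os.listdir(proc_root):
        if not entry.isdigit():
            continue  # skip /proc/self, /proc/meminfo, and so on
        try:
            with open(os.path.join(proc_root, entry, "cmdline"), "rb") as f:
                argv0 = f.read().split(b"\0")[0].decode("utf-8", "replace")
        except (IOError, OSError):
            continue  # the process exited while we were scanning
        if os.path.basename(argv0) == name:  # kernel threads have an empty cmdline
            pids.append(int(entry))
    return sorted(pids)
```

If find_processes("gmond") returns an empty list on the client, that is your cue to check /var/log/messages.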

The next step is to add the client to the list of nodes on the master node. As root, edit the file /etc/ganglia/gmetad.conf, then go to the line that starts with data_source and add the IP address of the client node. In my case, the IP address of the client is 192.168.1.250. Now save the file and restart gmetad using:

/etc/rc.d/init.d/gmetad restart
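With more than a handful of clients, editing the data_source line by hand gets tedious. Here is a sketch of the same edit in Python. It assumes a single space between data_source and the quoted name (as in the line shown earlier), leaves commented-out lines alone, and does nothing if the address is already listed. You still need to restart gmetad afterward; add_host_to_data_source is a hypothetical name.

```python
def add_host_to_data_source(conf_text, source_name, address):
    """Append `address` to the data_source line for `source_name` (no-op if present)."""
    prefix = 'data_source "%s"' % source_name  # assumes one space after data_source
    lines = conf_text.splitlines(True)  # keep each line's own newline
    for i, line in enumerate(lines):
        if line.lstrip().startswith(prefix):  # commented-out lines start with '#'
            body = line.rstrip("\r\n")
            newline = line[len(body):]
            if address not in body.split():
                body += " " + address
            lines[i] = body + newline
            return "".join(lines)
    raise KeyError("no data_source named %r" % source_name)
```

For example, add_host_to_data_source(open("/etc/ganglia/gmetad.conf").read(), "Ganglia Test Setup", "192.168.1.250") returns the edited text, which you would then write back as root.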

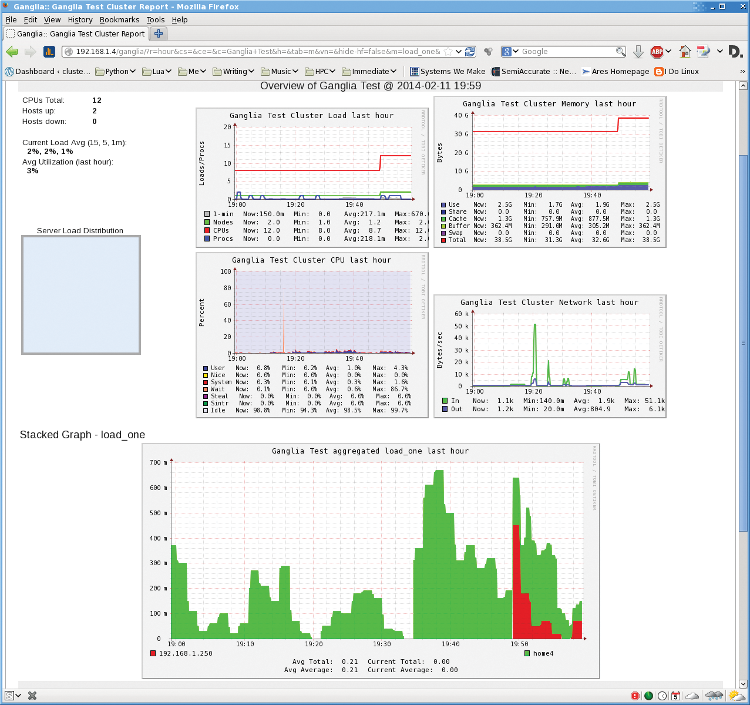

If you are successful, you should see the second node in the web interface (Figure 3). Notice that both nodes are shown: home4 (master node) and 192.168.1.250 (client). In the upper left-hand corner, you can see the number of hosts that are up (2). I also scrolled down a bit in the web page so you could see the stacked graph of the one-minute load average (load_one). Notice that both hosts appear in this graph (green = home4, red = 192.168.1.250). I think I can declare success at this point.

Summary

Ganglia is quickly becoming the go-to system monitoring tool, particularly for large-scale systems. It has been in use for many years, so most of the kinks have been worked out, and it has been tested at very large scales just to make sure. The new web interface makes data visualization much easier than before; it was designed for and written by admins.

For this article, I installed Ganglia on my master node (my desktop), which is running CentOS 6.5. Although I originally tried to build everything myself, it's not easy to get right. (You might get it running, but it might not be correct.) Thus, I downloaded the RPMs that one of the developers makes available. Installation was very easy (rpm -ivh), as was configuration. Gweb was also easily built and installed, and within seconds, I could visualize what was happening on my desktop. This was nice, but I also wanted to extend my power grab to other systems, so I installed the gmond RPMs on a second system, told the master node about it, and, bingo, the new node was being monitored.

Ganglia has a number of built-in metrics that it monitors, gathered primarily from the /proc filesystem. However, you can also add your own custom metrics to Ganglia via Python or C/C++ modules.

In a future blog post, I'll write about integrating the metrics I wrote previously into the Ganglia framework. Until then, give Ganglia a whirl. I also highly recommend the well-written O'Reilly book Monitoring with Ganglia [18], which explains the inner workings of Ganglia.