Measuring performance of hybrid hard disks

Between Worlds

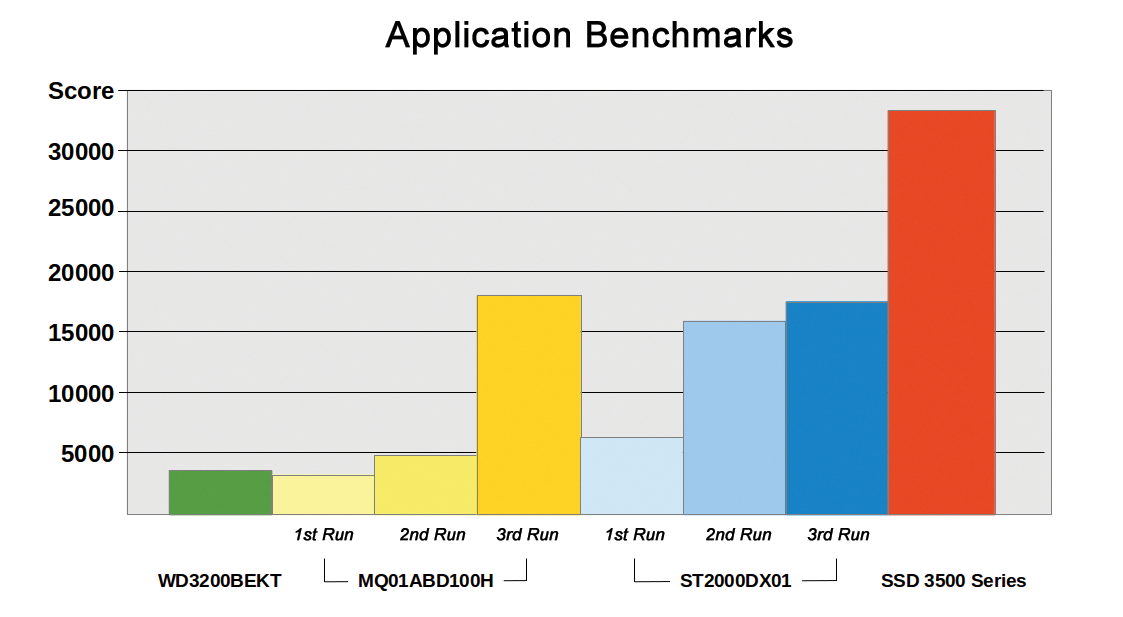

The question of whether hybrid drives are worthwhile is not as easy to answer as it seems at first glance. If you were to measure their performance with a conventional synthetic benchmark, you would in most cases be unable to determine any difference from an ordinary hard drive (Figure 1, first run). This is because they try to fill their relatively small (and therefore cheap) flash memory with the data that is used most frequently. For this much-used data, subsequent access no longer needs to rely on the comparatively slow rotating disks of the hard drive, because the data instead comes from the integrated multilevel cell (MLC) flash memory module.

However, the hybrid drive's algorithm can identify frequently used data only by the fact that it has been read or written multiple times in a given period. A benchmark that generates new random data for each run and then reads it only once is always served by the rotating platters and never has a chance to place its data in the fast flash memory.

With some benchmarks, however, you can store the test data in a file and re-use it across multiple runs (e.g., with the fio workload simulator). Practically oriented application benchmarks are more likely to help: One example is PCMark Vantage for Windows. For hard drives, this benchmark tests gaming, streaming, adding music and images to a media library, and application and system startup, among other things. The published values that clearly show the superiority of hybrid drives can be reproduced here only if you run the benchmark several times consecutively and without interruption by other applications; this is the only way to fill the cache with the correct data (Figure 1).

In the ADMIN magazine lab, we initially created our own benchmark, which works like this: A Perl script simulates a lottery with 100 tickets. Each ticket contains the name of a 100MB test file. In total, there are 10 different, equally large test files (called a to j), but each appears on a different number of tickets. For example, j occurs only twice and a four times, whereas d occurs 10 times and f 24 times. The script pulls one ticket after another, without putting them back in the hat, and measures how long it takes to read the file listed on the ticket.
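The lottery described above can be sketched roughly as follows. This is an illustration in Python, not the Perl script used in the lab, which the article does not reproduce. The ticket counts for a, d, f, and j come from the text; the counts for the other files, the directory layout, and all function names are assumptions made for the example.

```python
import random
import time
from pathlib import Path

# Ticket counts per test file. The values for a, d, f, and j come from the
# text; the others are hypothetical fillers chosen so the total is 100.
TICKET_COUNTS = {
    "a": 4, "b": 6, "c": 8, "d": 10, "e": 12,
    "f": 24, "g": 14, "h": 12, "i": 8, "j": 2,
}


def build_tickets(counts):
    """Return one ticket (a file name) per occurrence, in random order."""
    tickets = [name for name, n in counts.items() for _ in range(n)]
    random.shuffle(tickets)
    return tickets


def timed_read(path, chunk_size=1 << 20):
    """Read a file completely and return (bytes_read, seconds)."""
    start = time.perf_counter()
    total = 0
    with open(path, "rb") as fh:
        while chunk := fh.read(chunk_size):
            total += len(chunk)
    return total, time.perf_counter() - start


def run_lottery(directory, counts=TICKET_COUNTS):
    """Draw every ticket exactly once; collect per-file read rates in MB/s."""
    rates = {name: [] for name in counts}
    for name in build_tickets(counts):
        size, seconds = timed_read(Path(directory) / name)
        rates[name].append(size / 1e6 / max(seconds, 1e-9))
    return rates
```

Calling `run_lottery("/Benchmark")` on a directory that holds the ten test files would return a list of measured rates per file, from which the per-file averages can be computed.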

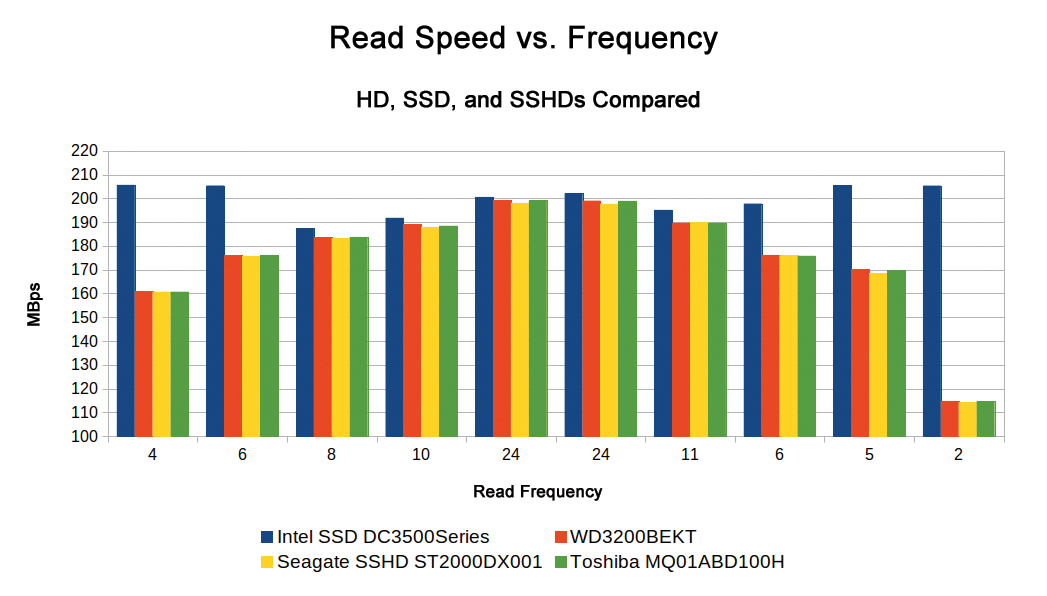

You can clearly see how the read speed depends on the frequency of reading: The more times a file has been read, the higher the average transfer speed is. If you look at the values more closely, you can see that the first access is always slow and every subsequent access is faster; the more fast repetitions a file receives, the less the slow first access weighs on its average (Figure 2).
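A small calculation illustrates why the average climbs with the number of reads. The article does not say how the averages were formed, so this sketch assumes a plain arithmetic mean over the per-read rates, and the two rates (100 and 200 MBps) are made-up round numbers rather than measured values.

```python
def mean_rate(slow, fast, reads):
    """Mean of per-read rates: one slow first read, all later reads fast."""
    if reads < 1:
        raise ValueError("reads must be at least 1")
    return (slow + (reads - 1) * fast) / reads


# Hypothetical rates in MBps; the read counts match files j, a, d, and f.
for reads in (2, 4, 10, 24):
    print(reads, round(mean_rate(100.0, 200.0, reads), 1))
```

With these assumed rates, the mean rises from 150.0 for two reads to 195.8 for 24 reads, approaching but never reaching the fast rate.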

The I/O Stack

There's just one snag: This result happens regardless of whether you are looking at a normal hard disk or hybrid disk! Why? Because of the more complicated architecture of the I/O stack in the operating system – in this case, Linux.

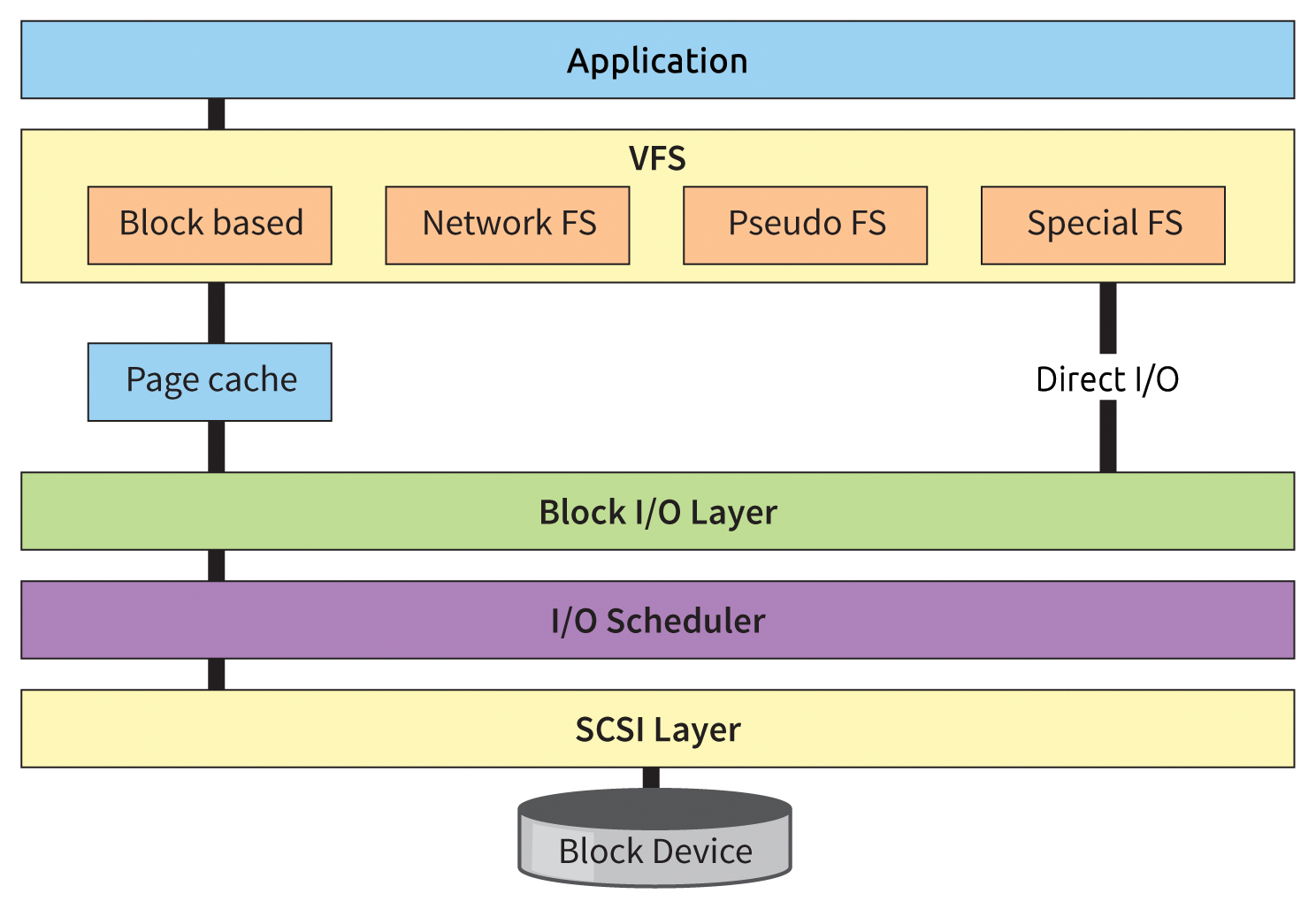

When an application like the benchmark requests data from a mass storage device, the request passes through a sequence of different layers of the operating system. The top layer is the VFS layer. It ensures that different filesystems present a uniform interface to userspace so that applications can always use the same system calls like open(), read(), and write() to access them. This abstracts from the special abilities of the different filesystems; the result is a virtual common denominator for all (Figure 3).

The next layer of the I/O stack uses a very similar abstraction; however, this is not about the filesystem but about block devices. A block device is a mass storage device whose data is organized into fixed-size blocks (sectors) that allow random access. The counterpart to this would be storage that allows only sequential access, such as tape drives.

Although block devices can be realized using very different technology, depending on whether you are looking at, for example, hard disks, CD-ROMs, or SSDs, the block I/O layer again provides a uniform interface for the overlying layers. The block I/O layer is followed by a selection of different I/O schedulers whose job it is to sort and group access to avoid time-consuming hard disk head movements. Then comes the SCSI layer, which is actually structured in three layers and provides unified treatment of the various storage devices, whether they are connected via a SAS or Fibre Channel interface, for example. Finally, at the lowest level, comes the physical device.
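To see which I/O scheduler is active for a given device, Linux exposes a list in sysfs in which the active entry is enclosed in square brackets. The following sketch parses that format; the device name `sda` in the usage note and the function names are assumptions for illustration, and the set of schedulers on offer depends on the kernel.

```python
def active_scheduler(text):
    """Return the bracketed (active) entry from a scheduler list, or None."""
    for word in text.split():
        if word.startswith("[") and word.endswith("]"):
            return word[1:-1]
    return None


def scheduler_for(device):
    """Read the scheduler list for a block device such as 'sda' (Linux)."""
    with open(f"/sys/block/{device}/queue/scheduler") as fh:
        return active_scheduler(fh.read())
```

For a line such as `noop deadline [cfq]`, `active_scheduler` returns `cfq`; `scheduler_for("sda")` would apply the same parsing to the live system.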

Between the VFS and block I/O layers sits the page cache, for which Linux uses almost all the main memory it does not need for other purposes. Basically, all data that is read ends up in this page cache (from which it can be dropped again if it is not used for a long time). This is why repeated read operations that access the same data are served by the cache rather than by the physical disk. This approach also explains why even normal hard drives provide frequently used data so quickly: The data comes from the cache (and thus from RAM) and not from the disk.
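The effect of the page cache on a single file can be made visible with a sketch like the following. It assumes Linux and uses `posix_fadvise` with `POSIX_FADV_DONTNEED`, which is only a hint to the kernel to drop the file's cached pages, so the first read is not guaranteed to be fully cold. The function names are made up for this example.

```python
import os
import time


def evict_from_page_cache(path):
    """Ask the kernel to drop this file's cached pages (advisory, Linux)."""
    fd = os.open(path, os.O_RDONLY)
    try:
        os.fsync(fd)  # dirty pages cannot be dropped, so flush them first
        if hasattr(os, "posix_fadvise"):
            os.posix_fadvise(fd, 0, 0, os.POSIX_FADV_DONTNEED)
    finally:
        os.close(fd)


def timed_read(path, chunk_size=1 << 20):
    """Read a file completely and return (bytes_read, seconds)."""
    start = time.perf_counter()
    total = 0
    with open(path, "rb") as fh:
        while chunk := fh.read(chunk_size):
            total += len(chunk)
    return total, time.perf_counter() - start


def cold_and_warm(path):
    """Time one read after eviction and one immediately afterward."""
    evict_from_page_cache(path)
    _, cold = timed_read(path)
    _, warm = timed_read(path)
    return cold, warm
```

On a large file stored on a rotating disk, the second value would typically be much smaller than the first, regardless of whether the disk has a flash module.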

The data can take an even shorter route if the application does its own caching, which is the case with this benchmark. For example, if the script uses the language's own I/O layer (PerlIO in Perl, which sits on top of the operating system's I/O stack), repeated read requests may not even reach the kernel on their way down through the stack but are instead served by Perl's own cache.
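The general idea of a language-level I/O layer absorbing requests can be illustrated with Python's buffered reader. This is only an analogy and says nothing about how PerlIO behaves internally. The sketch wraps a raw stream that counts how often it is asked for data; the class and function names are hypothetical.

```python
import io


class CountingRaw(io.RawIOBase):
    """In-memory raw stream that counts how often the layer above reads it."""

    def __init__(self, data):
        super().__init__()
        self._data = data
        self._pos = 0
        self.calls = 0

    def readable(self):
        return True

    def readinto(self, buffer):
        self.calls += 1
        chunk = self._data[self._pos:self._pos + len(buffer)]
        buffer[:len(chunk)] = chunk
        self._pos += len(chunk)
        return len(chunk)  # 0 signals end of stream


def raw_calls_for_small_reads(data, buffer_size, reads):
    """Issue many 1-byte reads through a buffer; return raw-layer requests."""
    raw = CountingRaw(data)
    reader = io.BufferedReader(raw, buffer_size=buffer_size)
    for _ in range(reads):
        reader.read(1)
    return raw.calls
```

With 4096 bytes of data and an 8192-byte buffer, 100 one-byte reads reach the raw layer far fewer than 100 times, because the buffer is filled once and then serves the remaining requests itself.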

More Cache than Disk

Access that never reaches the disk cannot be accelerated by the disk, regardless of whether it has a flash module. This relationship can be illustrated in another way: For example, the time command, if called with the -v parameter or the format string %F, outputs a statistic that shows how often an application that it monitors (the benchmark, in this case) triggers a major page fault, that is, requests a page that is not found in the cache and therefore has to be read from the disk.

To do this, first delete the cache,

# sync && echo 3 > /proc/sys/vm/drop_caches

and then start the first pass:

root@hercules:/Benchmark# /usr/bin/time -f "%F major page faults" ./readbench.pl
...
15 major page faults

If you then immediately start a second run, the result is:

0 major page faults

In other words, it was necessary to access the physical medium 15 times in the first run to read all the test files with an empty cache. The maximum average speed achieved here was 199.41MBps, and that was for the file that was read 24 times. The file that was read only twice averaged 114MBps. The second time, all requests were handled by the page cache; no physical disk access was necessary, and the transfer rate for all files was therefore around 206.5MBps, independent of access frequency. This is nearly twice the rate measured for the infrequently read files in the first run.
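The same counter that `time` reports can also be read programmatically. The following sketch uses `getrusage` to obtain the major page fault count accumulated by child processes before and after running a command; the function name is an assumption, and the command in the usage note simply reuses the benchmark name from the transcript above.

```python
import resource
import subprocess
import sys


def major_faults(cmd):
    """Run a command and return the major page faults it (and its
    children) caused, as accounted to this process's waited-for children."""
    before = resource.getrusage(resource.RUSAGE_CHILDREN).ru_majflt
    subprocess.run(cmd, check=True)
    after = resource.getrusage(resource.RUSAGE_CHILDREN).ru_majflt
    return after - before


if __name__ == "__main__" and len(sys.argv) > 1:
    print(major_faults(sys.argv[1:]), "major page faults")
```

Saved under a name of your choice and called with `./readbench.pl` as its argument, the script should report a count comparable to that from `/usr/bin/time`, once with an empty page cache and once directly afterward.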

Strengths of the Hybrids

Where can hybrid hard drives play to their strengths? If they merely hold the same data as the page cache, they come too late: Sitting at the very bottom of the stack, they have to offer something that helps the overlying layers serve the user more quickly.

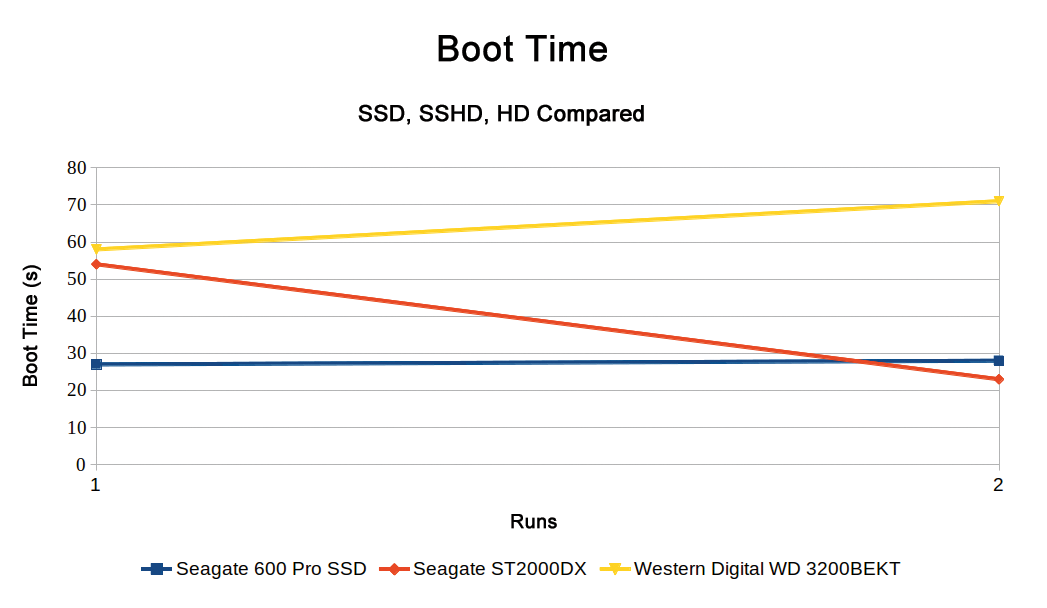

First: Hybrid disks have an advantage, for example, wherever a persistent cache is useful, such as at boot time. The page cache cannot help the operating system here, because it starts at zero for every system start. However, files that need to be read at boot time can persist on the hybrid disk's NAND module, and they can help accelerate the boot process significantly as of the second and all subsequent boots.

To demonstrate this, I installed Ubuntu 13.10 on an ordinary hard disk (Western Digital WD3200BEKT), a hybrid disk (Seagate ST2000DX001), and an SSD (Seagate 600 Pro SSD). Then, I used the Bootchart [1] tool to measure the time required for consecutive boot operations.

The results of the first two runs are shown in Figure 4. There is very little change for the normal hard drive, and the much faster SSD does not benefit from repetition either; in both cases, the operating system's caches start out empty on every boot. The result is quite different for the hybrid drive: The time required for booting is halved here, because this read-heavy process can draw on the data already held in the drive's flash cache right from the outset.

Second: Files are a construct of the VFS layer; the physical hybrid hard disk at the opposite end of the I/O stack knows nothing about them. It caches blocks. Therefore, it can also speed up applications that use direct I/O and bypass the page cache. However, such applications (e.g., some databases) usually work with internal application caches, in which case the hybrid drive's cache is just as redundant as it is alongside the page cache.

Third: The NAND cache in the hybrid disk also caches write operations. The page cache does this, too, by managing modified (but not yet finally stored) pages as dirty pages, which it periodically writes to mass storage. If the page cache is occupied, the data can be buffered again in the NAND cache of the hybrid drive.
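How much modified data is currently waiting in the page cache can be read from `/proc/meminfo`, which lists the `Dirty` and `Writeback` amounts in kilobytes. A minimal parsing sketch follows; the function name is an assumption for this example.

```python
def dirty_and_writeback(meminfo_text):
    """Extract the Dirty and Writeback values (in kB) from meminfo text."""
    fields = {}
    for line in meminfo_text.splitlines():
        key, _, rest = line.partition(":")
        if key in ("Dirty", "Writeback") and rest.split():
            fields[key] = int(rest.split()[0])
    return fields


def current_dirty_and_writeback():
    """Read the live values on a Linux system."""
    with open("/proc/meminfo") as fh:
        return dirty_and_writeback(fh.read())
```

Calling `current_dirty_and_writeback()` while a large file is being written, and again after a `sync`, shows the dirty amount rising and then falling as the kernel flushes the pages to the drive.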

Fourth: If not enough free memory is available, because memory-hungry applications are running, and if large – but not necessarily very important – files are changed (e.g., logs), then these changes can oust some performance-relevant content from the page cache. The NAND cache of the hybrid drive is drive based; at least here, no data can be displaced by content stored elsewhere.

Fifth: Even if very little I/O actually reaches the hybrid drive – and, again, not all of this finds its way into the cache – access that does make it into the cache is much faster, so noticeable performance gains are achieved in total.

Conclusions

Hybrid hard drives are slightly more expensive than normal disks, but they can be much faster under certain circumstances. These circumstances are important, however. Repeated read operations, such as booting, are perfect. In other situations, the benefits are not as great, because the hybrid drive simply has less leverage: Other components of the I/O stack offer the same benefits, but sooner.

Whatever fails to make its way into the cache or is ousted again from the relatively small NAND memory will obviously not translate to speed benefits. However, I/O requests that are passed through to the hybrid drive and then make their way into the flash cache can be served much faster than by a purely mechanical drive.

Hybrid drives may also score points where a persistent cache is beneficial, where the filesystem is bypassed, or where you can gather prioritized data on a drive. Hard disks with an SSD module will always lose out to thoroughbred SSDs in terms of performance, but they are also much cheaper.