Shared storage systems GlusterFS and Ceph compared

Duel of the Giants

Big Data is a major buzzword today in terms of IT trends. Snappy observers sometimes comment that, although everyone might talk about the subject, no one really knows what it actually is. On the other hand, US-based InkTank and the Linux veteran Red Hat have been providing concrete contributions to the subject of Big Data for some time.

Specifically, this means the Ceph [1] object store and the GlusterFS [2] filesystem, which provide the underpinnings for Big Data projects. The term refers not only to storing data but also to the systemization and the ability to search efficiently through large data sets. For this process to work, the data first has to reside somewhere. This is obviously exactly where InkTank and Red Hat see a niche for their products, which both manufacturers are trying their very best to fill.

Endless Expanses

Both companies have made the same basic promise: Storage that can be created with GlusterFS or Ceph is supposed to be almost endlessly expandable. Admins will never again run out of space. This promise is, however, almost the only similarity between the two projects, because underneath, both solutions go about their business completely differently and achieve their goals in different ways. Anyone who has not, to date, dealt in great detail with one of the two solutions can hardly be expected to comprehend the basic workings of Ceph and GlusterFS right away – a comparison of the two projects is therefore not easy. In this article, we draw as complete a picture of the two solutions as possible and directly compare the functions of Ceph and GlusterFS. What is Ceph best suited for, and where do GlusterFS's strengths lie? Are there use cases in which neither one is any good?

Ceph – The Basics

Ceph and GlusterFS newcomers may have difficulty conceptualizing these projects. How does scalable storage work seamlessly in a horizontal direction? How do concrete solutions overcome physical limitations like hard drives, for example?

Those who dare take their first steps in this field with Ceph will be immediately faced with a complex collection of different tools, which take care of precisely that endless storage. Ceph belongs in the "Object Stores" camp, where the definition of this category follows the principle of the lowest common denominator. Object stores are so named because they store data in the form of binary objects. Besides Ceph, OpenStack Swift is another representative of this category currently on the free and open source software market. On the commercial side, Amazon's S3 probably works very similarly.

Binary Objects

Object stores rely on binary objects because they can easily be split into many small parts – if you put the individual parts back together in the original order later, you have exactly the same file as before. Up to the point where the objects are reassembled, the individual parts of the binary object can be stored in a distributed way. For example, they can be shared across multiple hard drives, which can be located on different servers. In this way, object stores bypass the biggest disadvantage that classic storage solutions have to contend with: rigid division into blocks.
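The split-and-reassemble idea can be illustrated with a short Python sketch. It is a toy model only: the chunk size, the round-robin placement, and the disk names are assumptions made for the example and do not reflect how Ceph actually places data.

```python
from typing import Dict, List, Tuple

CHUNK_SIZE = 4  # bytes per part; deliberately tiny so the example is easy to follow


def split_object(data: bytes, chunk_size: int = CHUNK_SIZE) -> List[bytes]:
    """Cut a binary object into fixed-size parts (the last part may be shorter)."""
    return [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]


def distribute(parts: List[bytes], disks: List[str]) -> Dict[str, List[Tuple[int, bytes]]]:
    """Place the parts round-robin on the disks, remembering each part's index."""
    placement: Dict[str, List[Tuple[int, bytes]]] = {disk: [] for disk in disks}
    for index, part in enumerate(parts):
        placement[disks[index % len(disks)]].append((index, part))
    return placement


def reassemble(placement: Dict[str, List[Tuple[int, bytes]]]) -> bytes:
    """Collect the parts from all disks and join them in their original order."""
    indexed = [item for parts in placement.values() for item in parts]
    return b"".join(part for _, part in sorted(indexed))


# Hypothetical disks on three different servers
disks = ["server1:sda", "server2:sda", "server3:sda"]
payload = b"binary object payload"
placement = distribute(split_object(payload), disks)
restored = reassemble(placement)  # identical to payload
```

The only bookkeeping the sketch needs is the index of each part; the boundaries of the individual disks play no role when the object is put back together.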

As a basic rule, any data storage device (excluding magnetic tapes) that the average consumer can buy works in a block-based manner. Division into blocks is neither positive nor negative per se, but it does have a nasty side effect: It means that a data storage device cannot be used effectively on a block basis. You can store data on it, but it would be impossible to read the data later in a coordinated way without first scanning the entire disk for the stored information. Filesystems help solve the problem. They make a data storage device effectively usable and provide the basic structure. The drawback, however, is that filesystems are very closely connected with the associated data storage device. A filesystem on a data storage device cannot be easily cut into strips and transferred to other disks.

Ceph as an object store bypasses the restriction by adding an additional administrative layer to the block devices used. Ceph also uses block data storage, but the individual hard drives with filesystems for Ceph are only a means to an end. Internal administration occurs in Ceph based solely on its own algorithm and binary objects; the limits of participating data storage devices are no longer of interest.

Introducing Ceph Components

Ceph essentially consists of the actual object store, which may be familiar to some readers under its old name RADOS (Reliable Autonomic Distributed Object Store), and several interfaces for users. The object store, in turn, consists of two core components. The first component is the Object Storage Device, or OSD: Each individual disk that belongs to a Ceph cluster is an OSD. OSDs are the data silos in Ceph that ultimately store the binary objects. Users communicate directly with OSDs to upload data to the cluster. In the background, OSDs also deal independently with issues such as replication.

The second component is the monitoring server (MON), which exists so that clients and OSDs alike always know which OSDs actually belong to the cluster at the moment: They maintain lists of all existing monitoring servers and OSDs and relay them on command to clients and OSDs. Moreover, MONs enforce a cluster-wide quorum: If a Ceph cluster breaks apart, only those cluster partitions can remain active that have the majority of MON servers backing them; otherwise, this could lead to a split-brain situation.

What is decisive for understanding Ceph's functionality is that none of the aforementioned components is central; all components in Ceph work in a decentralized way. No single OSD is assigned a specific priority, and the MONs also have equal rights among themselves. It is possible to expand the cluster to add more OSDs or MONs without considering the number of existing computers or the layout of the OSDs. Whether the work in Ceph is handled by three servers with 12 hard drives or 10 servers with completely different disks of different sizes is not important for the functions the cluster provides. Because of the unified storage principle proclaimed by InkTank, storage system users always see the same interface regardless of what the object store is doing in the background.

Three interfaces in Ceph are important: CephFS is a Linux filesystem driver that lets you access Ceph storage like a normal filesystem. The RBD (RADOS Block Device) provides access to RADOS via a compatibility interface for blocks, and the RADOS Gateway offers RESTful storage in Ceph, which is accessible via the Amazon S3 client or OpenStack Swift client. Together, the Ceph components form a complete storage system that, in theory, is quite impressive – but is this the case in reality?

The Opponent: GlusterFS

GlusterFS has a turbulent history. The original ideas were simple management and administration as well as independence from classical manufacturers. The focus, however, has since expanded considerably – but more on that later. GlusterFS's first steps made the software a replacement for NAS systems (Network Attached Storage). GlusterFS abstracts from conventional data carriers by creating an additional layer between the storage and the user. In the background, the software uses traditional block-based filesystems to store the data. Files are the smallest administrative unit in GlusterFS. A one-to-one equivalence exists between the data stored by the user and the data that arrives on the back end.

GlusterFS Components

Bricks form the foundation of the storage solution. Basically, these are Linux computers with free disk space. The space can be made up of partitions but is ideally complete hard drives or even RAID arrays. The number of data storage devices available per brick or the number of servers involved plays only a minor role. These bricks stick together in a trust relationship. GlusterFS groups these local directories to form a common namespace, which is still a rather coarse construction and only partially usable.

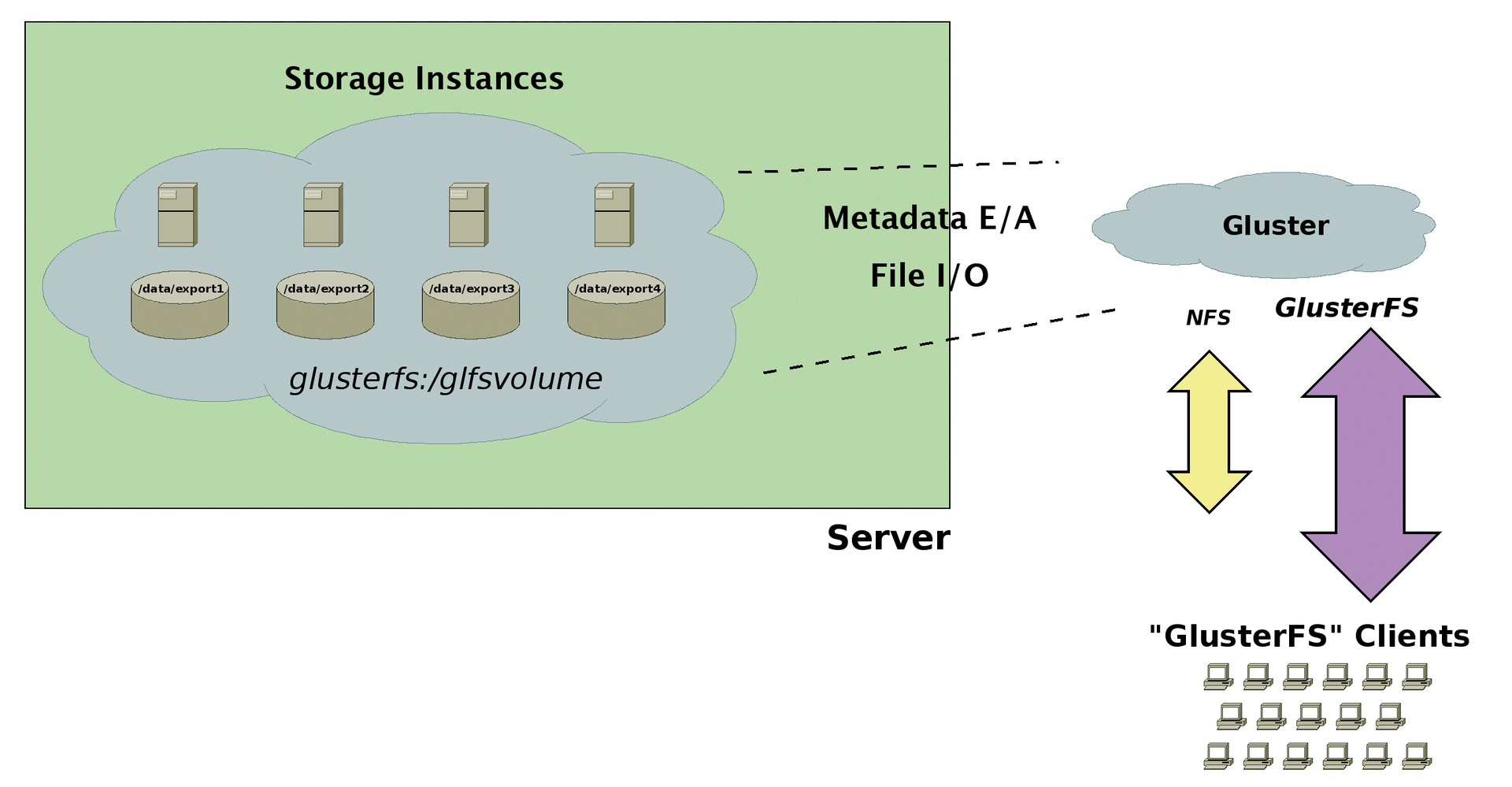

Here is where GlusterFS's second important component comes into play: the translators. These are small modules that provide the space to be shared with a particular property, for example, POSIX compatibility or distribution of data in the background. The user rarely has anything directly to do with the translators. The properties of the GlusterFS storage can be specified in the Admin Shell. In the background, the software brings the corresponding translators together. The result is a GlusterFS volume (Figure 1). One of GlusterFS's special features is its metadata management. Unlike with other shared storage solutions, there are no dedicated servers or entities. The user can choose from various interfaces for storing their data on GlusterFS.

GlusterFS Front Ends

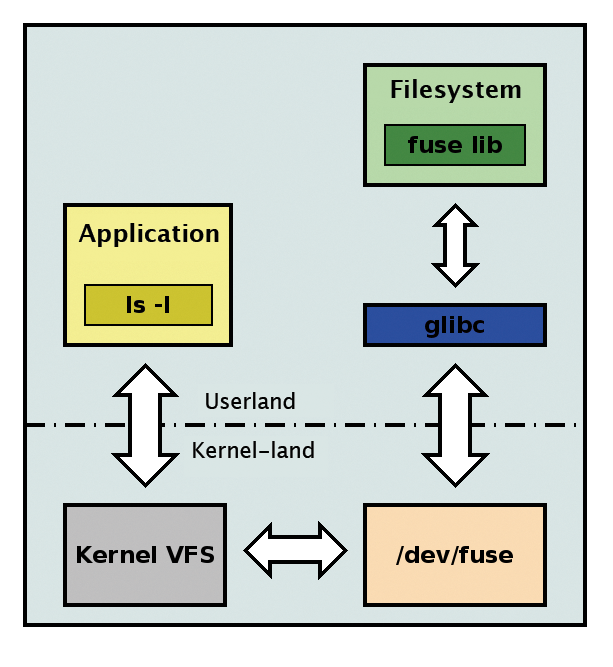

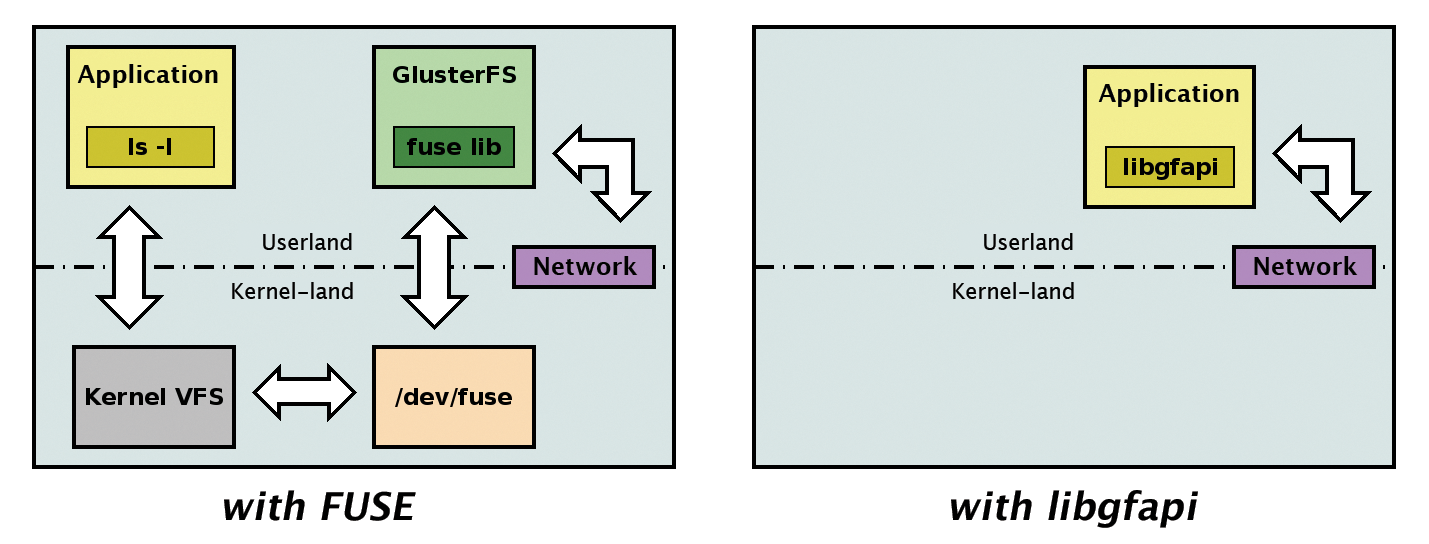

GlusterFS comes with four different interfaces. The first is the native filesystem driver. This is not part of the Linux kernel and uses the FUSE approach (filesystem in userspace; Figure 2). Additionally, the storage solution comes with its own stripped-down NFS server. It supports only NFS version 3 with TCP as the transport protocol, but that is fine in many cases. The obligatory RESTful interface is of course also present. The libgfapi library is the latest access option. Armed with this, GlusterFS is setting out to conquer the storage world. Whether these features are enough, only time will tell.

Licensing Business

Ceph is based throughout on the LGPL – that is, the variant of GPL that even allows linking with non-free software, as long as a completely new piece of work (derivative work) is not subsequently created from the two linked parts. From InkTank's point of view, this decision is sensible; after all, it is quite possible that Ceph could be used in the foreseeable future as a part of commercial storage products, and in such a scenario, the restrictions of the GPL [3] would be rather annoying in regard to linked software.

Incidentally, the LGPL is effective for all components that are part of Ceph. These include OSDs and MONs, as well as metadata servers for the CephFS filesystem and the libraries (i.e., Librados and the RBD Library Librbd).

Until its acquisition by Red Hat in 2011, GlusterFS was released under the GNU Affero General Public License (AGPL) [4]. Additionally, the co-developers had to sign a Contributor License Agreement (CLA) [5]. This setup is not necessarily unusual in the open source community and serves to protect the patrons of the software project. Red Hat removed the need for the aforementioned Contributor License Agreement and put GlusterFS under the GNU General Public License version 3 (GPLv3). In doing so, the new "owner" of the open source storage software wanted to show maximum openness. The license change was part of the discussions that took place during the acquisition of Gluster Inc. by Red Hat. No further adjustments are expected in this area in the near future.

Front Ends – Take One!

The software provides two different options for POSIX-style access to data in the GlusterFS cluster. A filesystem driver is of course an obvious approach. For local data storage devices or even traditional shared solutions, this driver is usually part of the Linux kernel. In the recent past, filesystems in userspace (FUSE) have also enjoyed a fair amount of popularity [6]. GlusterFS uses this approach as well, which offers several advantages: Development is not subject to the strict regulations of the Linux kernel. Porting to other platforms is easier, provided they also support FUSE. The entire software stack runs in userspace, so the usual mechanisms for process management are applicable one-to-one.

FUSE is not an approach without controversy, however. The additional context switch between the kernel and userspace certainly impairs the performance of the filesystem.

Interestingly, GlusterFS provides its own NFS server [7], which, on second glance, is not necessarily surprising. First of all, NAS was the market segment that GlusterFS wanted to conquer. Second, all functions were to be part of the software and thus largely independent of the underlying operating system and its configuration. However, this approach to development came at a price. GlusterFS paid it primarily through the fact that its own NFS server is rather limited. Your search for version 4 [8] of the protocol here will be in vain. The same applies to support for UDP. The previously missing Network Lock Manager (NLM) is now in place (Listing 1).

Listing 1: Gluster NFS

    # rpcinfo -p gluster1
       program vers proto   port  service
        100000    4   tcp    111  portmapper
        100000    3   tcp    111  portmapper
        100000    2   tcp    111  portmapper
        100000    4   udp    111  portmapper
        100000    3   udp    111  portmapper
        100000    2   udp    111  portmapper
        100227    3   tcp   2049  nfs_acl
        100021    3   udp  56765  nlockmgr
        100021    3   tcp  54964  nlockmgr
        100005    3   tcp  38465  mountd
        100005    1   tcp  38466  mountd
        100003    3   tcp   2049  nfs
        100021    4   tcp  38468  nlockmgr
        100024    1   udp  34712  status
        100024    1   tcp  46596  status
        100021    1   udp    769  nlockmgr
        100021    1   tcp    771  nlockmgr
    #

The typical Linux NFS client can access data on the GlusterFS network with the above-mentioned limitations. A separate software package is not necessary. This approach facilitates the migration of traditional NAS environments, because the lion's share of the work virtually takes place on the server.

Metadata in GlusterFS

A special feature of GlusterFS is its treatment of metadata. Experts know that this is often a performance killer. Incidentally, this is not just a phenomenon of shared data repositories; even local filesystems such as ext3, ext4, or XFS have learned the hard way here. Implementation of dedicated metadata entities is a typical approach for shared filesystems. The idea is to set them up to be as powerful and scalable as possible, so that there is no bottleneck.

GlusterFS goes about this in a completely different way and does not use any metadata servers. For this approach to work, other mechanisms are, of course, necessary.

The metadata can be broadly divided into two categories. On one hand, you have information that a normal filesystem also manages: permissions, timestamps, size. Because GlusterFS works on a file basis, it can mostly use the back-end filesystem. The information just mentioned is also available there and often does not require separate management at the GlusterFS level.

However, the nature of shared storage systems requires additional metadata. Information about the server/brick on which data is currently residing is very important, particularly if distributed volumes are used. In that case, the data is distributed across multiple bricks. Users do not see anything of this; to them, it looks like a single directory. GlusterFS also partially uses the back-end filesystem for this metadata or, to be more precise, its extended file attributes (Figure 3). For the rest, GlusterFS stores nothing; instead, it simply computes the required information.

The elastic hash algorithm forms the basis of this computation. Each file is assigned a hash value based on its name and path. The namespace is then partitioned with respect to the possible hash values. Each area is assigned bricks.

This solution sounds very elegant, but it also has its drawbacks. If a file name changes, the hash value changes, too, which can lead to another server now being responsible for it. The consequence of renaming is then a copy action for the corresponding data. If the user saves multiple files with similar names on GlusterFS, the files may well all end up on the same brick. In the end, it can happen that space remains in the volume itself but that one of the bricks involved is full. In such a case, previous versions of GlusterFS were helpless. Now, the software can also forward such requests to alternative bricks. An asymmetrically filled namespace is therefore no longer a problem.
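The hash-based placement and the rename effect can be sketched in a few lines of Python. This is a minimal illustration and not GlusterFS's actual algorithm: the CRC32 hash, the equal-sized hash ranges, and the brick names are all assumptions chosen for brevity.

```python
import zlib
from typing import List

HASH_SPACE = 2 ** 32  # size of the 32-bit hash range used in this sketch


def file_hash(path: str) -> int:
    """Hash the path and name of a file (CRC32 is a stand-in, not GlusterFS's hash)."""
    return zlib.crc32(path.encode("utf-8"))


def brick_for(path: str, bricks: List[str]) -> str:
    """Split the hash range into equal contiguous slices, one per brick,
    and return the brick whose slice contains the file's hash value."""
    slice_size = HASH_SPACE // len(bricks)
    index = min(file_hash(path) // slice_size, len(bricks) - 1)
    return bricks[index]


# Hypothetical bricks on three servers
bricks = ["server1:/export/brick1", "server2:/export/brick1", "server3:/export/brick1"]
before = brick_for("/photos/cat.png", bricks)
after = brick_for("/photos/cat-renamed.png", bricks)
# If before != after, the rename makes a different brick responsible for the file.
```

No lookup table is stored anywhere: any client that knows the list of bricks can compute the location of a file on its own, which is why no metadata server is needed for this step.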

Ceph Growth

If you think about the history of Ceph, some events seem strange. Originally, Ceph did not work as a universal object store. Instead, it was designed to be a POSIX-compatible filesystem, but with the trick that the filesystem was spread across different computers (i.e., a typical distributed filesystem). Ceph started to grow when the developers noticed that the underpinnings for the filesystem were also quite useful for other storage services. However, because the entire project had always operated under the name of the filesystem and the Ceph name was familiar, it was unceremoniously decided to use Ceph as the project name and to rename the actual filesystem. To this day, it firmly remains a part of Ceph and is now somewhat awkwardly called CephFS (although the matching CephFS module is still called ceph.ko in the kernel; a little time may still have to pass before the names are synced).

CephFS offers users access to a Ceph cluster, as if it were a POSIX-compatible filesystem. For the admin, access to CephFS looks just like access to any other filesystem; however, in the background, the CephFS driver on the client is communicating with a Ceph cluster and storing data on it.

Because the filesystem is POSIX compliant, all tools that otherwise work in a normal filesystem also work on CephFS. CephFS directly draws the property of shared data storage from the functions of the object store itself (i.e., from Ceph). What is also important in CephFS, however, is that it enables parallel access by multiple clients in the style of a shared filesystem. According to Sage Weil, the founder and CTO of InkTank, this functionality was one of the main challenges in developing CephFS: observing POSIX compatibility and guaranteeing decent locking, while different clients simultaneously access the filesystem.

In CephFS, the Metadata Server (MDS) plays a role in solving this problem. The MDS in Ceph is responsible for reading the POSIX metadata of objects and keeping them available as a huge cache. This design is an essential difference compared with Lustre, which also uses a metadata server; there, however, it is basically a huge database in which the object locations are recorded. A metadata server in CephFS simply accesses the POSIX information already stored in the user extended attributes of the Ceph objects and delivers it to CephFS clients. If needed, several metadata servers covering the individual branches of a filesystem can work together. In this case, clients receive a list of all MDSs via the monitoring server, which allows them to determine which MDS they need to contact for specific information.

The Linux kernel provides a driver for CephFS out of the box; a FUSE module is also available, which is largely used on Unix-style systems that have FUSE but do not have a native CephFS client.

The big drawback to CephFS at the moment is that InkTank still classifies CephFS as beta (i.e., the component is not yet ready for production operation). According to various statements by Sage Weil, there should not be any problems if only one metadata server is in use. Problems concerning metadata partitioning across several servers, however, are currently causing Weil and his team a real headache.

Ceph's previously mentioned unified storage concept is reflected in the admin's everyday work when it comes to scaling the cluster horizontally. Typical Ceph clusters will grow, and the appropriate functionalities are present in Ceph for this. Adding new OSDs on the fly is no problem, nor is downsizing a cluster. OSDs are responsible for keeping the cluster consistent in the background, in collaboration with the MONs, whereas users always see the cluster but never the individual OSDs. A 20TB cluster grows to the appropriate size after new hard drives are added in the configuration of the CRUSH algorithm (Ceph's internal data placement mechanism), or shrinks accordingly. However, none of these operations can be seen on the front end.

Extensibility in GlusterFS

Easy management and extensibility were the self-imposed challenges for GlusterFS developers. Extensibility in particular did not cause the usual headaches, and, actually, the conditions are good. Adding new bricks or even new servers to the GlusterFS pool is very simple. The trust relationship, however, cannot be established by the new server itself – contact must be made from a previously integrated GlusterFS member. The same applies to the opposite case (i.e., if the GlusterFS cluster needs to shrink). Increasing and decreasing the size of volumes is not difficult, but a few items must be considered.

The number of bricks must match the volume setup, especially if replication is in play. With a copying factor of three, GlusterFS only allows an extension to n "bricks" if n is divisible by three. This also applies to removing bricks. If the volume is replicating and distributed, then this is a little tricky. You must be careful that the order of the bricks is correct. Otherwise, replication and distribution will go haywire. Unfortunately, the management interface is not very helpful with this task. If the number of bricks changes, the partitioning of the namespace changes, too. This applies automatically for new files.
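Why both the brick count and the brick order matter can be shown with a small Python sketch. It assumes that consecutive bricks in the list form one replica set; the function names and brick names are hypothetical and only serve the illustration.

```python
from typing import List


def replica_sets(bricks: List[str], replica: int) -> List[List[str]]:
    """Group the brick list, in the order given, into replica sets.

    Raises ValueError if the number of bricks is not a multiple of the
    replication factor.
    """
    if replica < 1:
        raise ValueError("replication factor must be at least 1")
    if len(bricks) % replica != 0:
        raise ValueError(
            f"{len(bricks)} bricks cannot be split into replica sets of {replica}"
        )
    return [bricks[i:i + replica] for i in range(0, len(bricks), replica)]


def co_located(sets: List[List[str]]) -> List[List[str]]:
    """Return the replica sets in which two or more copies share one server."""
    return [s for s in sets if len({brick.split(":")[0] for brick in s}) < len(s)]


# Same four bricks, two different orders (hypothetical names)
bad_order = ["s1:/a", "s1:/b", "s2:/a", "s2:/b"]
good_order = ["s1:/a", "s2:/a", "s1:/b", "s2:/b"]
risky = co_located(replica_sets(bad_order, 2))   # both sets keep all copies on one server
safe = co_located(replica_sets(good_order, 2))   # empty list: copies sit on different servers
```

With the first order, the failure of a single server would take out every copy of half the data, even though the replication factor is two.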

Existing files, which should now be somewhere else, do not migrate automatically to the right place. The admin must start the rebalance process manually. The right time to do this depends on various factors: How much data needs to be migrated? Is client-server communication affected? How stressed is your legacy hardware? Unfortunately, there is no good rule of thumb. GlusterFS admins must gain their own experience, depending on their system.

Replication

An essential aspect for high availability of data in shared storage solutions is producing copies. This is called replication in GlusterFS-speak, and yes, there is a translator for it. It is left to the admin's paranoia level to determine how many copies are automatically produced.

The basic rule is that the number of bricks used must be an integer multiple of the replication factor. Incidentally, this decision also has consequences for the procedure when growing or shrinking the GlusterFS cluster – but more on that later. Replication occurs automatically and basically transparently to the user. The number of copies wanted is set per volume. Thus, completely different replication factors may be present in a GlusterFS network. In principle, GlusterFS can use either TCP or RDMA as a transport protocol. The latter is preferable when it comes to low latency.

Realistically speaking, RDMA plays a rather marginal role. Where replication traffic occurs depends on the client's access method. If the native GlusterFS filesystem driver is used, then the sending computer ensures that the data goes to all the necessary bricks. It does not matter which GlusterFS server the client addresses when mounting. In the background, the software establishes the connection to the actual bricks.

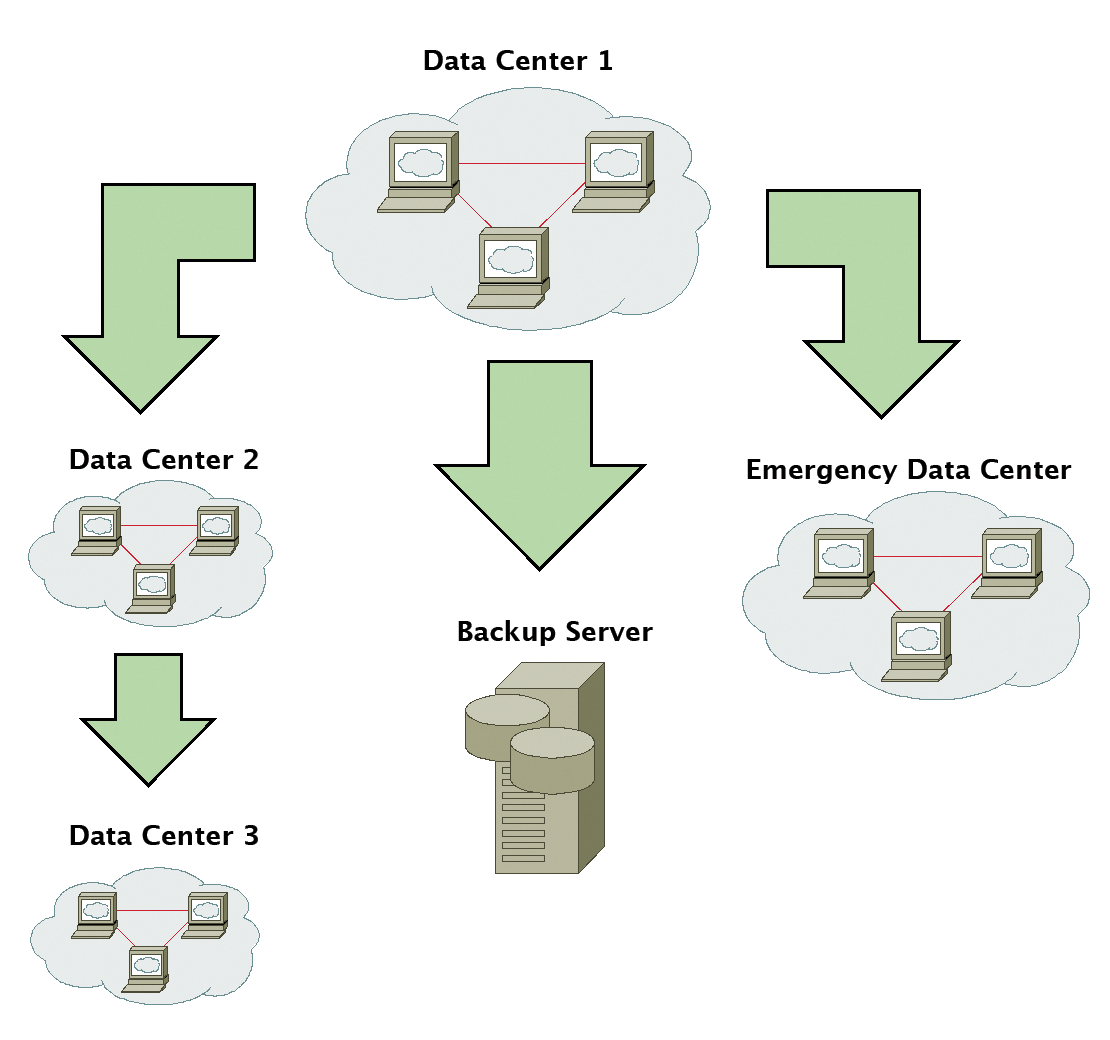

GlusterFS handles access via NFS internally. If it's suitably configured, the client-server channel does not need to process the copy data stream. Geo-replication is a special treat; it involves asynchronous replication to another data center (Figure 4). The setup is simple and is essentially based on rsync via SSH. It does not matter whether there is a GlusterFS cluster or just a normal directory on the opposite side.

At present, geo-replication is a serial process and suffers from rsync's known scaling problems. Also, the process runs with root privileges on the target site in the default configuration and thus represents a security risk. Version 3.5 is said to have completely revised this situation. Replication can then be parallelized. Identifying which changes have been made, and are thus part of the copy process, is also said to be much faster.

The failure of a brick is transparent for the user with a replicating volume, except where it affects the last remaining brick. The user can continue working while the repair procedures take place. After the work is completed, GlusterFS can reintegrate the brick and then automatically start data synchronization. If the brick is irreparable, however, some TLC is needed. The admin must throw it out of the GlusterFS cluster and integrate a new brick. Finally, the data is adjusted so that the desired number of copies is again present. For this reason, implementing a certain degree of fault tolerance outside of GlusterFS for the individual bricks is a good idea. Possible measures would be the use of RAID controllers and/or redundant power supplies and interface cards.

Access by users and applications to GlusterFS via NFS requires further measures. The client machine only has one connection to an NFS server. If this fails, access to the data is temporarily gone. The recommended method is to set up a virtual IP which, in the event of an error, points to a working NFS server. In its commercial product, Red Hat uses the cluster trivial database (CTDB) [9], which is well known from the Samba world.

As already mentioned, the servers involved in the GlusterFS composite enter a trust relationship. For all distributed network-based services, failure of switches, routers, cables, or interface cards is annoying. If the GlusterFS cluster is divided by such an incident, the question arises of who can write and who can't. Without further measures, unwanted partitioning in the network layer creates a split-brain scenario with the accompanying data chaos. GlusterFS introduced quorum mechanisms in version 3.3. In the event of a communication failure, the GlusterFS server can decide whether or not the brick can still write. The setup is quite simple. You can turn off the quorum completely, set it to automatic, or set the number of bricks that are to be considered a majority. If done automatically, a quorum applies if more than half of the participating "bricks" are still working.
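The three quorum settings can be expressed as a small decision function. The following Python sketch is only a model of the rule as described in the text; the mode labels `none`, `auto`, and `fixed` and the function name are assumptions used for illustration.

```python
from typing import Optional


def may_write(mode: str, bricks_up: int, bricks_total: int,
              fixed_count: Optional[int] = None) -> bool:
    """Decide whether writes are still allowed after a communication failure.

    mode "none":  quorum is switched off, writes are always allowed
    mode "auto":  more than half of the participating bricks must be reachable
    mode "fixed": at least fixed_count bricks must be reachable
    """
    if mode == "none":
        return True
    if mode == "auto":
        return 2 * bricks_up > bricks_total
    if mode == "fixed":
        if fixed_count is None:
            raise ValueError("mode 'fixed' needs a fixed_count")
        return bricks_up >= fixed_count
    raise ValueError(f"unknown quorum mode: {mode}")


# A four-brick volume split evenly by a network failure
left_side = may_write("auto", bricks_up=2, bricks_total=4)
right_side = may_write("auto", bricks_up=2, bricks_total=4)
# Neither half has more than half of the bricks, so neither side may write.
```

The even split shows why the automatic setting demands a strict majority: If exactly half were enough, both halves could accept writes and the split-brain scenario would be back.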

Replication with Ceph

Although conventional storage systems often use various tricks to ensure redundancy over replication, the subject of replication (in combination with high data availability) is almost inherently incorporated in Ceph's design. Weil has put a lot of effort into, on one hand, making reliable replication possible and, on the other hand, making sure the user notices as little as possible about it. The basis for replication in the background is the conversation that runs within a cluster between individual OSDs: Once a user uploads a binary object to an OSD, the OSD notices this process and starts to replicate. Using the CRUSH algorithm and its knowledge of the OSD and MON servers existing in the cluster, it determines for itself to which OSDs it needs to copy the new object and then does so accordingly.

Using a separate value for each pool (which is the name for logical organizational units into which a Ceph cluster can be divided), the administrator determines how many replicas of each object should be present in this pool. Because Ceph basically works synchronously, a user only receives confirmation for a copy action at the moment at which the corresponding number of copies of the objects uploaded by the clients exist cluster-wide.

Ceph can also heal itself if something goes wrong: It acknowledges the failure of a hard drive after a set time (default setting is five minutes) and then copies all missing objects and their replicas to other OSDs. This way, Ceph ensures that the admin's replication requirements are consistently met, except for the wait immediately after the failure of a disk, as mentioned earlier. The user will not notice any of this, by the way; the unified storage principle hides failures from the clients of the cluster.
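The interplay of synchronous writes and self-healing can be modeled in a few lines of Python. This is a toy model under strong assumptions, not Ceph's real data path: target OSDs are simply the first live ones in sorted order (a stand-in for CRUSH), time is passed in as plain numbers, and all class and method names are hypothetical.

```python
from typing import Dict, List

DOWN_OUT_INTERVAL = 300  # seconds; the five-minute default mentioned in the text


class Pool:
    """Toy pool with a fixed replica count."""

    def __init__(self, replicas: int, osds: List[str]) -> None:
        self.replicas = replicas
        self.stores: Dict[str, Dict[str, bytes]] = {osd: {} for osd in osds}
        self.down_since: Dict[str, float] = {}  # failed OSD -> time of failure

    def _live(self) -> List[str]:
        return sorted(o for o in self.stores if o not in self.down_since)

    def write(self, key: str, data: bytes) -> bool:
        """Acknowledge (return True) only once all replicas have been stored."""
        targets = self._live()[: self.replicas]
        if len(targets) < self.replicas:
            return False
        for osd in targets:
            self.stores[osd][key] = data
        return True

    def copies(self, key: str) -> int:
        return sum(1 for osd in self._live() if key in self.stores[osd])

    def fail(self, osd: str, now: float) -> None:
        self.down_since[osd] = now

    def heal(self, now: float) -> int:
        """Re-replicate objects of OSDs that have been down for the full
        interval; returns the number of new copies created."""
        expired = [o for o, t in self.down_since.items() if now - t >= DOWN_OUT_INTERVAL]
        created = 0
        for osd in expired:
            # The failed OSD's key list stands in for the cluster's placement knowledge.
            for key in list(self.stores[osd]):
                survivors = [o for o in self._live() if key in self.stores[o]]
                if not survivors:
                    continue  # no surviving copy to heal from
                data = self.stores[survivors[0]][key]
                for target in self._live():
                    if self.copies(key) >= self.replicas:
                        break
                    if key not in self.stores[target]:
                        self.stores[target][key] = data
                        created += 1
            del self.stores[osd]
            del self.down_since[osd]
        return created
```

In this model, a pool with two replicas on three OSDs drops to one copy when an OSD fails, stays there during the waiting period, and returns to two copies once `heal` is called after the interval has passed.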

Front Ends – A Supplement

At first glance, it may seem odd to ask about programming interfaces for a storage solution. After all, most admins are familiar with SAN-type central storage and know that it offers a uniform interface – nothing more. This is usually because SAN storage works internally according to a fixed pattern that does not easily allow programmatic access: What benefits would programming-level access actually offer in a SAN context?

With Ceph, however, things are a bit different. Because of its design, Ceph offers flexibility that block storage does not have. Because it manages its data internally, Ceph can basically publish any popular interface to the outside world as long as the appropriate code is available. With Ceph, you can certainly put programming libraries to good use that keep access to objects standardized. Several libraries actually offer this functionality. Librados is a C library that enables direct access to stored objects in Ceph. Additionally, for the two front ends, RBD and CephFS, separate libraries called librbd and libcephfs support userspace access to their functionality.

On top of that, several bindings for various scripting languages exist for librados so that userspace access to Ceph is possible here, too – for example, using Python and PHP.

The advantages of these programming interfaces can be illustrated by two examples: On one hand, the RBD back end in QEMU was realized on the basis of librbd and librados to allow QEMU to access VMs directly in Ceph storage without going through the rbd.ko kernel module. On the other, a similar functionality is also implemented in TGT so that the tgt daemon can create an RBD image as an iSCSI export without messing around with the operating system kernel.

A practical use case also exists for the PHP binding: Image hosters work with large quantities of images, which in turn are binary files. These usually reside on a POSIX-compatible filesystem [10], however, and are exported via NFS, for example. POSIX functionality is almost always unnecessary in such cases. A web application can be built via the PHP binding of librados that stores images in the background directly in Ceph so that the POSIX detour is skipped. This approach is efficient and saves resources that other services can benefit from.
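The same idea can be sketched with the Python binding of librados instead of the PHP one. The helper names, the object naming scheme, the pool name, and the configuration path below are assumptions for illustration; `open_pool` additionally needs the `rados` Python module and a reachable Ceph cluster, whereas the two image helpers work with any object that offers `write_full`, `stat`, and `read`.

```python
import hashlib


def object_key(filename: str, data: bytes) -> str:
    """Derive an object name from a content hash plus the original file name
    (this naming scheme is an assumption, not a Ceph convention)."""
    return f"{hashlib.sha256(data).hexdigest()[:16]}-{filename}"


def store_image(ioctx, filename: str, data: bytes) -> str:
    """Write the image as one object and return the object name."""
    key = object_key(filename, data)
    ioctx.write_full(key, data)
    return key


def fetch_image(ioctx, key: str) -> bytes:
    """Read the whole object back; stat() supplies the size to read."""
    size, _mtime = ioctx.stat(key)
    return ioctx.read(key, size)


def open_pool(pool: str, conffile: str = "/etc/ceph/ceph.conf"):
    """Connect to the cluster and open an I/O context for the given pool."""
    import rados  # imported here so the helpers above work without the module

    cluster = rados.Rados(conffile=conffile)
    cluster.connect()
    return cluster, cluster.open_ioctx(pool)
```

A web application would call `open_pool("images")` once at startup, pass the resulting I/O context to `store_image` and `fetch_image`, and close the context and shut down the cluster handle on exit. No filesystem or NFS export is involved at any point.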

GlusterFS Interfaces

Access to shared storage via a POSIX-compatible interface is not without controversy. Strict conformity with the interface defined by the IEEE would have a significant effect on performance and scalability. In the case of GlusterFS, FUSE can be added as a further point of criticism. Since version 3.4, it's finally possible to access the data on GlusterFS directly via a library. Figure 5 shows the advantages that derive from the use of libgfapi [11].

With libgfapi, the I/O path is significantly shorter when accessing GlusterFS. The developers of Samba [12] and OpenStack [13] have already welcomed this access and incorporated it into their products. The switch is not yet complete in the open source cloud, however; here, users can still find a few GlusterFS mounts. In the background, libgfapi uses the same GlusterFS building blocks, such as the previously mentioned translators. Anyone who works with QEMU [14] can also dispense with the annoying mounts and store data directly on GlusterFS. For this, the emulator and virtualizer must be at least version 1.3:

    # ldd /usr/bin/qemu-system-x86_64 | grep libgfapi
    libgfapi.so.0 => /lib64/libgfapi.so.0 (0x00007f79dff78000)

Python bindings also exist for the library [15]. As with Glupy before it, GlusterFS thus opens its doors to the world of this scripting language.

A special feature of GlusterFS is its modular design, and the previously mentioned translators are the basic structural unit. Almost every single characteristic of a volume is represented by one of these translators. Depending on the configuration, GlusterFS links the corresponding translators to create a chain (graph), which then determines the overall capabilities of the volume. The set of these property blocks is broken down into several groups. Table 1 lists these, together with a short description.

Table 1: Group Membership of the Translators

| Group | Function |
|---|---|
| Storage | Determines the behavior of the data storage on the back-end filesystem |
| Debug | Interface for error analysis and other debugging |
| Cluster | Basic structure of the storage solution, such as replication or distribution of data |
| Encryption | Encryption and decryption of stored data (not yet implemented) |
| Protocol | Communication and authentication for client-server and server-server |
| Performance | Tuning parameters |
| Bindings | Extensions to other languages, such as Python |
| Features | Additional features such as locks or quotas |
| Scheduler | Distribution of new write operations in the GlusterFS cluster |
| System | Interface to the system, especially to filesystem access control |

The posix storage translator, for example, controls whether or not GlusterFS uses the VFS cache of the Linux kernel (O_DIRECT). Here, admins can also set whether files should be deleted in the background. On the encryption side, unfortunately, there's not much to see: The GlusterFS source code contains only a sample implementation using ROT13 [16]. A version that is useful for users is planned for the next release. Another special case is the bindings group. Bindings are not actually real translators but instead provide a framework for writing translators in other languages. The most famous is Glupy [17], which represents the interface to Python.
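The way translators stack into a chain can be illustrated with a toy model. The following is a sketch under loud assumptions: The class names `Storage`, `Translator`, `Rot13`, and `Trace` are invented for illustration and are not the GlusterFS or Glupy API. The sketch only shows the principle that each block handles a call and passes it on to the next block, with ROT13 standing in for an encryption translator as in the sample mentioned above.

```python
import codecs


class Storage:
    """Bottom of the chain: keeps data in a dict instead of a back-end filesystem."""

    def __init__(self):
        self.files = {}

    def write(self, path, text):
        self.files[path] = text

    def read(self, path):
        return self.files[path]


class Translator:
    """Base block: passes every call unchanged to the next block in the chain."""

    def __init__(self, child):
        self.child = child

    def write(self, path, text):
        self.child.write(path, text)

    def read(self, path):
        return self.child.read(path)


class Rot13(Translator):
    """Encryption-style block: transforms data on the way down and back up."""

    def write(self, path, text):
        self.child.write(path, codecs.encode(text, "rot_13"))

    def read(self, path):
        return codecs.decode(self.child.read(path), "rot_13")


class Trace(Translator):
    """Debug-style block: records which operations pass through it."""

    def __init__(self, child):
        super().__init__(child)
        self.log = []

    def write(self, path, text):
        self.log.append(("write", path))
        super().write(path, text)

    def read(self, path):
        self.log.append(("read", path))
        return super().read(path)


# The order of the blocks defines what the resulting "volume" can do.
storage = Storage()
volume = Trace(Rot13(storage))
volume.write("/file.txt", "Hello")
```

Reading through `volume` returns the original text, whereas `storage.files` holds only the transformed data. Adding or removing a block changes the behavior of the volume without touching the other blocks.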

Extensions and Interfaces in Ceph

Unlike GlusterFS, the topic of "extensions via plug-ins" is dealt with quickly for Ceph: Ceph currently supports no option for extending functionality at run time through modules. Apart from the wide range of configuration options it ships with, Ceph restricts itself to "programming in" external features via the previously mentioned APIs, such as librbd and librados.

With respect to offering a RESTful API, Ceph has a clear advantage over GlusterFS. Because Ceph has already mastered the art of storing binary objects, only a corresponding front end with a RESTful interface is missing. In Ceph, the RADOS gateway occupies this place, providing two APIs for a Ceph cluster: On the one hand, the RADOS gateway speaks the protocol of OpenStack's Swift object store [18]; on the other, it also supports the protocol for Amazon's S3 storage, so that S3-compatible clients can also be used with the RADOS gateway.

In the background, the RADOS gateway relies on librados. Moreover, the Ceph developers explicitly point out that the gateway does not support every single feature of Amazon's S3 or OpenStack's Swift. However, the basic features work, as expected, across the board, and the developers are currently focusing on implementing some important additional features.

Recently, the RADOS gateway has also added a seamless connection to OpenStack's Keystone identity service, which can be used for operations with both Swift and Amazon S3 (meaning the protocol in both cases). This is particularly handy if OpenStack is used in combination with Ceph (details on the cooperation between Gluster or Ceph and OpenStack can be found in the box "Storage for OpenStack").

Object Orientation in GlusterFS

Up to and including version 3.2, GlusterFS was a purely file-based storage solution; an object-oriented approach was not available. The popularity of Amazon S3 and other object store solutions "forced" the developers to act. UFO – Unified File and Object (Store) – came with version 3.3. It is based very heavily on OpenStack's well-known Swift API. Integration in the open source cloud solution is easily the most-used application, and it does not look as if this will change in the foreseeable future.

Version 3.4 replaced UFO with G4O (GlusterFS for OpenStack) and brought an improved RESTful API. In this case, improvement refers mainly to compatibility with Swift, which is too bad; a far less specialized interface could attract other software projects. Behind the scenes, GlusterFS still operates on the basis of files. Technically, the back end for UFO/G4O consists of additional directories and hard links (Listing 2).

Listing 2: Behind the Scenes of Gluster Objects

# ls -ali file.txt .glusterfs/0d/19/0d19fa3e-5413-4f6e-abfa-1f344b687ba7

132 -rw-r--r-- 2 root root 6 3. Feb 18:36 file.txt

132 -rw-r--r-- 2 root root 6 3. Feb 18:36 .glusterfs/0d/19/0d19fa3e-5413-4f6e-abfa-1f344b687ba7

#

# ls -alid dir1 .glusterfs/fe/9d/fe9d750b-c0e3-42ba-b2cb-22ff8de3edf0 .glusterfs/00/00/00000000-0000-0000-0000-000000000001/dir1/

4235394 drwxr-xr-x 2 root root 6 3. Feb 18:37 dir1

4235394 drwxr-xr-x 2 root root 6 3. Feb 18:37 .glusterfs/00/00/00000000-0000-0000-0000-000000000001/dir1/

135 lrwxrwxrwx 1 root root 53 3. Feb 18:37 .glusterfs/fe/9d/fe9d750b-c0e3-42ba-b2cb-22ff8de3edf0 -> ../../00/00/00000000-0000-0000-0000-000000000001/dir1

GlusterFS appears here as a hybrid and assigns an object to each file, and vice versa. The software manages the objects in the .glusterfs directory. It generates a fairly flat directory structure from the GFID (GlusterFS file ID) and creates hard links between object and file. The objects, containers, and accounts known from the world of Swift are equivalent to files, directories, and volumes on the GlusterFS side.
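The layout seen in Listing 2 can be reproduced in a few lines. This is a minimal sketch that assumes the pattern visible in the listing (the first two and the next two characters of the GFID form the subdirectories); the function names `gfid_to_path` and `link_object` are hypothetical, and a temporary directory stands in for a brick. It covers regular files only, whereas directories appear as symbolic links in the listing. On a real system, GlusterFS creates these entries itself.

```python
import os
import tempfile


def gfid_to_path(gfid):
    """Map a GFID to its entry below .glusterfs, following the pattern in Listing 2."""
    return os.path.join(".glusterfs", gfid[0:2], gfid[2:4], gfid)


def link_object(brick, filename, gfid):
    """Create the GFID entry as a hard link to an existing file on the brick."""
    target = os.path.join(brick, gfid_to_path(gfid))
    os.makedirs(os.path.dirname(target), exist_ok=True)
    os.link(os.path.join(brick, filename), target)
    return target


# A temporary directory plays the role of the brick (assumption for the demo).
with tempfile.TemporaryDirectory() as brick:
    with open(os.path.join(brick, "file.txt"), "w") as handle:
        handle.write("hello\n")
    obj = link_object(brick, "file.txt", "0d19fa3e-5413-4f6e-abfa-1f344b687ba7")
    file_stat = os.stat(os.path.join(brick, "file.txt"))
    same_inode = file_stat.st_ino == os.stat(obj).st_ino  # one inode, two names
    link_count = file_stat.st_nlink                       # mirrors the "2" in the ls output
```

Because both names point to the same inode, the data exists only once on the back end, regardless of whether it is addressed as a file or as an object.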

Tuning for GlusterFS and Ceph

To get the most out of GlusterFS, admins can start at different points. In terms of pure physics, the network throughput and the speed of the disks behind the brick are decisive. Measures at this level are completely transparent to GlusterFS: It simply uses eth0 or bond0, and faster disks on the back end help. Admins can also tune the back-end filesystem, which pays off because GlusterFS stores the files entrusted to it 1:1 on the back end. It is not advisable to choose too many bricks per server. There are also many things to adjust at the protocol level.

Switching O_DIRECT on or off using the posix translator has already been mentioned. At the volume level, read cache and write buffers can be adjusted to suit your needs. The eager-lock switch is relatively new; it allows GlusterFS to hand over locks from one transaction to the next more quickly. In general, the following rules apply: Relative performance grows with the number of clients. Distribution at the application level also benefits GlusterFS performance. Single-threaded applications should be avoided.

Ceph provides administrators with some interesting options with regard to a storage cluster's hardware.

The previously described process of storing data on OSDs happens in the first step between the client and a single OSD, which accepts the binary objects from the client. The trick is that, on the client side, more than one connection to an OSD at the same time does not represent a problem. The client can therefore split a 16MB file into four objects of 4MB each and then simultaneously upload these four objects to different OSDs. A client in Ceph can thus continuously write to several spindles simultaneously (this bundles the performance of all the disks used, as in RAID0).
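The splitting and parallel upload described above can be sketched as follows. This is a simplified model, not Ceph's actual client code: The OSDs are plain dictionaries, the object naming scheme is hypothetical, and the round-robin assignment is only a stand-in for Ceph's real placement logic.

```python
from concurrent.futures import ThreadPoolExecutor

OBJECT_SIZE = 4 * 1024 * 1024  # 4MB objects, as in the example in the text


def split_into_objects(data, object_size=OBJECT_SIZE):
    """Cut the payload into consecutive chunks of at most object_size bytes."""
    return [data[i:i + object_size] for i in range(0, len(data), object_size)]


def upload(name, data, osds):
    """Send all objects of one file to the simulated OSDs in parallel."""
    objects = split_into_objects(data)

    def send(item):
        index, chunk = item
        osd = osds[index % len(osds)]        # round robin: stand-in for real placement
        osd[f"{name}.{index:08d}"] = chunk   # hypothetical object naming scheme

    # One worker per object, so all transfers can be in flight at the same time.
    with ThreadPoolExecutor(max_workers=max(len(objects), 1)) as pool:
        list(pool.map(send, enumerate(objects)))
    return len(objects)


osds = [{} for _ in range(4)]                # four simulated OSDs
count = upload("file", bytes(16 * 1024 * 1024), osds)
```

With a 16MB payload and four simulated OSDs, each OSD receives exactly one 4MB object, which corresponds to the RAID0-like behavior mentioned in the text.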

The effects are dramatic: Instead of expensive SAS disks, as used in SAN storage, Ceph provides comparable performance values with normal SATA drives, which are much better value for the money. The latency may give some admins cause for concern, because the latency of SATA disks (especially the desktop models) lags significantly behind that of similar SAS disks. However, Ceph developers also have a solution for this problem, which relates to the OSD journals.

Each OSD has a journal in Ceph – that is, an upstream staging area that first records all changes and then ultimately writes them to the actual data carrier. The journal can reside either directly on the OSD or on an external device (e.g., on an SSD). Up to four OSD journals can be moved to a single SSD, which in turn has a dramatic effect on performance. In such setups, clients write to the Ceph cluster at the speed that several SSDs can offer simultaneously, so that, in terms of performance, such a combination leaves even SAS drives well behind.

Conclusion

No real winner or loser is seen here. Both solutions have their own strengths and weaknesses – fortunately, never in the same areas. Ceph is deeply rooted in the world of the object store and can therefore play its role particularly well in that area as storage for hypervisors or open source cloud solutions. It looks slightly less impressive in the filesystem area. This, however, is where GlusterFS enters the game. Coming from the file-based NAS environment, it can leverage its strengths – even in a production environment. GlusterFS only turned into an object store quite late in its career; thus, it still has to work like crazy to catch up.

In the high-availability environment, both tools feel comfortable – Ceph is less traditionally oriented than GlusterFS. The latter works with consumer hardware, but it feels a bit more comfortable on enterprise servers.

The "distribution layer" is quite different. The crown jewel of Ceph is RADOS and its corresponding interfaces. GlusterFS, however, impresses thanks to its much leaner filesystem layer that enables debugging and recovery from the back end. Additionally, the translators provide a good foundation for extensions. IT decision makers should look at the advantages and disadvantages of these solutions and compare them with the requirements and conditions of their data center. What fits best will then be the right solution.