Distributed storage with Sheepdog

Data Tender

Kazutaka Morita launched the Sheepdog project [1] in 2009. The primary driver was the lack of an open source implementation of a cloud-enabled storage solution – as a kind of counterpart to Amazon's S3. Scalability, (high) availability, and manageability were and still are the essential characteristics that Sheepdog seeks to provide. For Morita, setting up Ceph, which already existed at that time, was just too complicated. GlusterFS in turn was largely unknown. What these tools had in common was that they then tended to address the NAS market.

Sheepdog was intended from the beginning as a storage infrastructure for KVM/QEMU [2] (see also the box "Virtual Images with Sheep"). Because Morita was working at NTT (Nippon Telegraph and Telephone Corporation), there are corresponding copyright notices in the source code, but the code itself is released under the GPLv2.

A Pack of Hounds

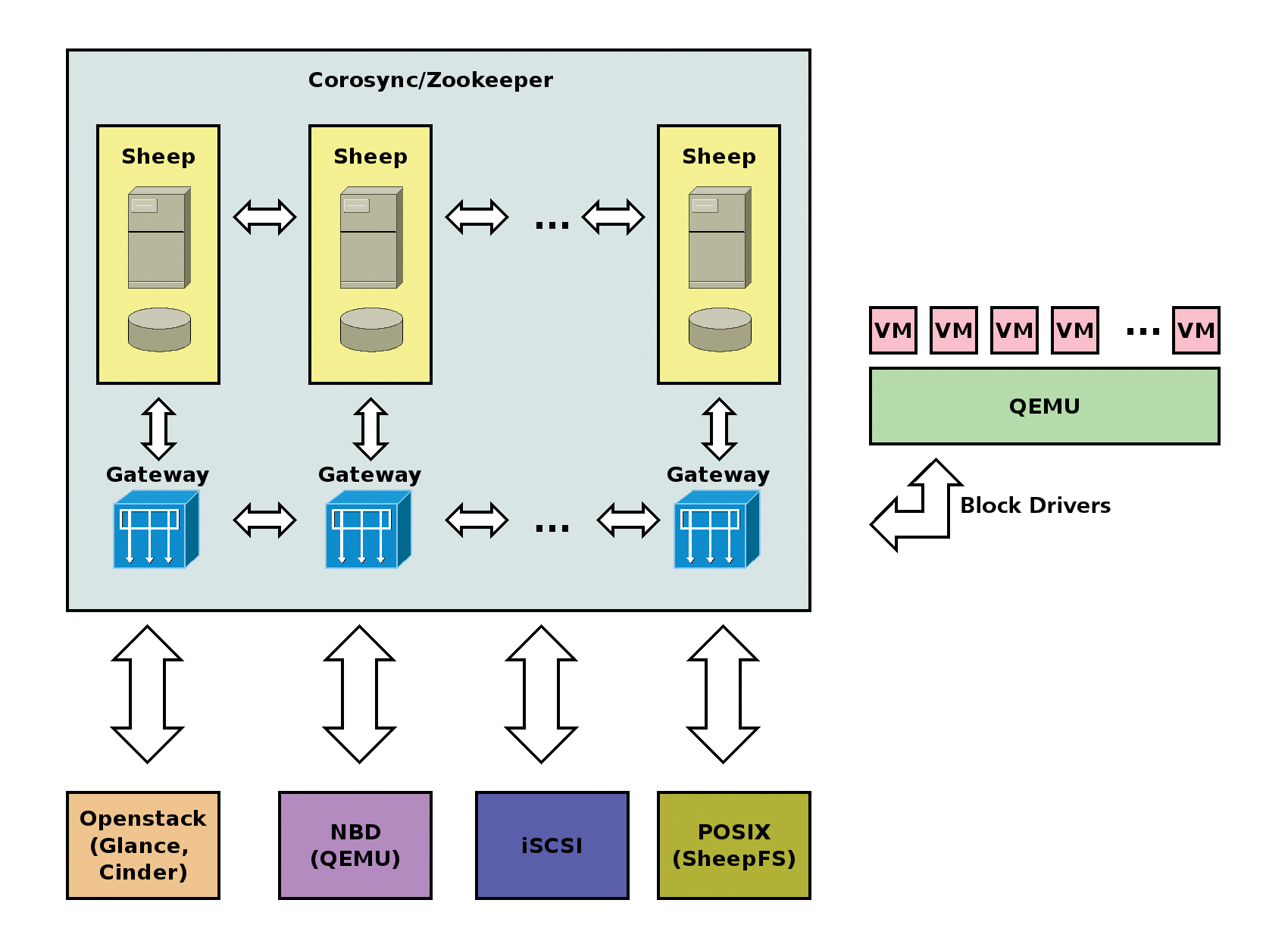

Very roughly, Sheepdog consists of three components: clusterware, storage servers, and the network. There is not much to say on the subject of networking. Sheepdog is IP-based, which for most environments implies Ethernet as the transport medium. In principle, InfiniBand is feasible as long as the stack passes an IP address [3] to Sheepdog. The clusterware provides the basic framework for the servers that provide the data storage. It is not supplied with the project, so the admin must install and configure it before turning to Sheepdog.

The clusterware has three functions: It keeps track of which machines are currently part of the cluster, distributes messages to the nodes involved, and supports locking of storage objects. In the standard configuration, Sheepdog is set up for Corosync [4]. Alternatively, you can use Zookeeper [5]; to do so, you need to configure the software with the --enable-zookeeper option before compiling. Strictly speaking, however, Zookeeper is not actually clusterware. Instead, it provides the functions listed above generically for distributed applications.

The project recommends the use of Corosync for up to 16 storage servers. The clusterware is integrated into the Sheepdog cluster. Each node runs the Corosync daemon and Sheepdog – there is a 1:1 relationship. For larger networks, Zookeeper is more appropriate. There is no 1:1 relation in this case. According to statements by the project, a Zookeeper cluster with three or five nodes is fine as a framework for hundreds of Sheepdog computers. If you need to be very economical, you can set up a pseudo-cluster on a single machine. The normal IPC (Inter Process Communication) takes on the task of distributing messages in this case. The remaining tasks of the clusterware are irrelevant if only one computer is involved.

The storage servers are the machines running Sheepdog that provide local disk space to the cluster (Figure 1). Normally, only one daemon process by the name of sheep runs on each computer. It processes the I/O requests and takes care of storing the data. The participating storage servers are simply called sheep in the project's jargon and are referenced as such in the documentation.

Admins use command-line options to manage the properties of the Sheepdog storage (Table 1). The most important option specifies the directory in which Sheepdog manages the data. This can be a normal directory; however, the underlying filesystem must support extended attributes. For practical reasons, therefore, the use of XFS, ext3, or ext4 is recommended. With ext3 and ext4, you need to make sure the filesystem is mounted with the user_xattr option.

Table 1: Important Sheepdog Options

| Option | Meaning | Examples |
|---|---|---|
| | IP address for communication | |
| | Clusterware to use | |
| | Use direct I/O on the back end | n/a |
| | Work as a gateway (server without back-end storage) | n/a |
| | IP address for I/O requests | |
| | Use journal | |
| | Use this TCP port | |
| | Enable caching for the storage objects | |

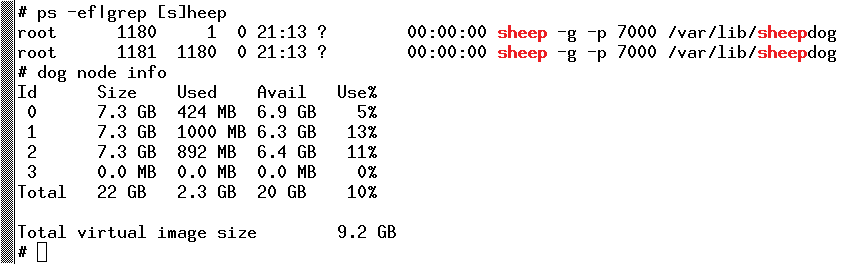

At this time, Sheepdog does not make extensive use of the extended attributes. They are currently used for caching checksums of storage objects, storing the size of the managed disks, and managing the filesystem structure of the POSIX layer (sheepfs, more on that later). In the default configuration, the sheep daemon listens on TCP port 7000, which is the port that clients use to talk to the sheep. Make sure that port 7000 is not already occupied, especially if AFS servers are in use (see Listing 1).

Listing 1: Sheepdog Server in Action

    # ps -ef|egrep '([c]orosyn|[s]heep)'
    root 491 1 0 13:04 ? 00:00:30 corosync
    root 581 1 0 13:13 ? 00:00:03 sheep -p 7000 /var/lib/sheepdog
    root 582 581 0 13:13 ? 00:00:00 sheep -p 7000 /var/lib/sheepdog
    # grep sheep /proc/mounts
    /dev/sdb1 /var/lib/sheepdog ext4 rw,relatime,data=ordered 0 0
    # grep sheep /etc/fstab
    /dev/sdb1 /var/lib/sheepdog ext4 defaults,user_xattr 1 2
    #

For serious use of Sheepdog, you should spend some time thinking about the network topology. For example, it's a good idea to separate the cluster traffic from the actual data. The documentation refers to an I/O NIC and a non-I/O NIC. Because both the clusterware and Sheepdog are IP based, the admin must configure the TCP/IP stack on both network cards and tell the sheep daemon the I/O IP address. If this card fails, Sheepdog automatically switches to the remaining non-I/O NIC. Unfortunately, this does not work the other way round.

If you have looked into distributed storage systems previously, you are likely to pose the legitimate question of how the metadata is managed. Like GlusterFS, Sheepdog does not have dedicated instances for this purpose. It uses consistent hashing to place and retrieve data (for further information, see the "Metadata Server? No, Thanks!" box).
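To make the idea of consistent hashing more concrete, here is a minimal sketch in C. It is not taken from the Sheepdog sources: the names `ring_build` and `ring_lookup`, the number of virtual nodes, and the way node IDs are hashed onto the ring are assumptions chosen for illustration. Each node places several points on a hash ring, and an object belongs to the node that owns the first point at or after the object's hash.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

#define VNODES_PER_NODE 4   /* illustrative value, not Sheepdog's default */
#define MAX_POINTS 256

struct ring_point {
    uint64_t hash;  /* position on the ring */
    int node;       /* index of the storage node that owns this point */
};

struct ring {
    struct ring_point points[MAX_POINTS];
    size_t count;
};

/* 64-bit FNV-1a over a byte buffer */
uint64_t fnv1a64(const void *buf, size_t len, uint64_t hval)
{
    const unsigned char *p = buf;

    for (size_t i = 0; i < len; i++) {
        hval ^= p[i];
        hval *= 0x100000001b3ULL;
    }
    return hval;
}

static int cmp_point(const void *a, const void *b)
{
    const struct ring_point *x = a, *y = b;

    return (x->hash > y->hash) - (x->hash < y->hash);
}

/* Put VNODES_PER_NODE points per node on the ring, sorted by position. */
void ring_build(struct ring *r, int nr_nodes)
{
    assert(nr_nodes > 0 && (size_t)nr_nodes * VNODES_PER_NODE <= MAX_POINTS);
    r->count = 0;
    for (int n = 0; n < nr_nodes; n++) {
        for (int v = 0; v < VNODES_PER_NODE; v++) {
            int key[2] = { n, v };  /* assumed input: node index and vnode index */

            r->points[r->count].hash =
                fnv1a64(key, sizeof(key), 0xcbf29ce484222325ULL);
            r->points[r->count].node = n;
            r->count++;
        }
    }
    qsort(r->points, r->count, sizeof(r->points[0]), cmp_point);
}

/* Owner is the first point at or after the object hash, wrapping around. */
int ring_lookup(const struct ring *r, uint64_t obj_hash)
{
    assert(r->count > 0);
    for (size_t i = 0; i < r->count; i++)
        if (r->points[i].hash >= obj_hash)
            return r->points[i].node;
    return r->points[0].node;
}
```

The property that matters is visible when a node leaves: only the objects that belonged to the departed node move, whereas all other objects keep their owner. No central metadata instance is needed, because every participant can compute the same answer from the node list alone.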

Listing 2: Sheepdog Uses the FNV-1a Algorithm

    #include <stddef.h>
    #include <stdint.h>

    #define FNV1A_64_INIT ((uint64_t) 0xcbf29ce484222325ULL)
    #define FNV_64_PRIME ((uint64_t) 0x100000001b3ULL)

    /* 64 bit Fowler/Noll/Vo FNV-1a hash code */
    static inline uint64_t fnv_64a_buf(const void *buf, size_t len, uint64_t hval)
    {
        const unsigned char *p = (const unsigned char *) buf;

        /* XOR each byte into the hash, then multiply by the FNV prime */
        for (size_t i = 0; i < len; i++) {
            hval ^= (uint64_t) p[i];
            hval *= FNV_64_PRIME;
        }

        return hval;
    }

The result of the hash function is 64 bits in length. The name of the data object provides one input value, the offset another. Sheepdog stores the data in chunks of 4MB. The hash of the name is also used internally by the software to set the object ID. By the way, the project used a different algorithm, SHA-1 [10], in its early months.
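The following sketch shows one way the two input values could be combined with the FNV-1a function from Listing 2. The helper `chunk_hash` is hypothetical, and the order in which the name and the chunk index are fed into the hash is an assumption for illustration, not Sheepdog's actual object ID layout.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define FNV1A_64_INIT ((uint64_t) 0xcbf29ce484222325ULL)
#define FNV_64_PRIME  ((uint64_t) 0x100000001b3ULL)

/* 64-bit FNV-1a, as in Listing 2 */
uint64_t fnv_64a_buf(const void *buf, size_t len, uint64_t hval)
{
    const unsigned char *p = (const unsigned char *) buf;

    for (size_t i = 0; i < len; i++) {
        hval ^= (uint64_t) p[i];
        hval *= FNV_64_PRIME;
    }
    return hval;
}

/*
 * Hypothetical helper: hash the object name first, then continue the
 * same hash over the chunk index, so both values influence the result.
 */
uint64_t chunk_hash(const char *name, uint64_t chunk_index)
{
    assert(name != NULL);

    uint64_t h = fnv_64a_buf(name, strlen(name), FNV1A_64_INIT);

    return fnv_64a_buf(&chunk_index, sizeof(chunk_index), h);
}
```

Because the third parameter carries the running hash value, several buffers can be chained without copying them into one contiguous block. Two chunks of the same image thus end up with different hash values and can land on different sheep.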

The sheep daemon can work in gateway mode. In this mode, the computer does not participate in actual data management (Figure 2). Instead, it serves as an interface between the clients and the "storage sheep." With the gateway, you can hide the topology of the Sheepdog cluster from the computers that access it. This applies both to the structure of the network and to the flock of sheep.

A Peek Behind the Drapes

Admins can apply some tweaks to the Sheepdog cluster. As already mentioned, the software breaks down the data entrusted to it into chunks of 4MB. Sheepdog then distributes the chunks to the available sheep. There is no hierarchy here. All data items are located in the obj subdirectory on the back-end storage. On request, Sheepdog can protect write access through journaling (see also Table 1).
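The arithmetic behind the 4MB chunks can be sketched in a few lines of C. The function names are made up for this example, and the sketch assumes that 4MB means 4 x 1024 x 1024 bytes.

```c
#include <assert.h>
#include <stdint.h>

#define CHUNK_SIZE (4ULL * 1024 * 1024)  /* assumed: 4MB = 4 * 2^20 bytes */

struct chunk_pos {
    uint64_t index;   /* which chunk of the image */
    uint64_t offset;  /* byte offset inside that chunk */
};

/* Number of chunks needed to hold an image of the given size. */
uint64_t chunk_count(uint64_t image_bytes)
{
    return (image_bytes + CHUNK_SIZE - 1) / CHUNK_SIZE;
}

/* Translate a byte offset in the image into chunk index and offset. */
struct chunk_pos chunk_locate(uint64_t byte_offset)
{
    struct chunk_pos pos = {
        .index = byte_offset / CHUNK_SIZE,
        .offset = byte_offset % CHUNK_SIZE,
    };

    return pos;
}

/* Number of chunks touched by a request of `length` bytes at `byte_offset`. */
uint64_t chunks_touched(uint64_t byte_offset, uint64_t length)
{
    assert(length > 0);

    uint64_t first = byte_offset / CHUNK_SIZE;
    uint64_t last = (byte_offset + length - 1) / CHUNK_SIZE;

    return last - first + 1;
}
```

Under these assumptions, an 8GB disk image breaks down into 2,048 chunks, and a small write that straddles a chunk boundary touches two objects, which may well live on two different sheep.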

With journaling enabled, the sheep daemon first writes the data to its journal. This step improves data consistency if a sheep "drops dead." As with a normal filesystem, use of a separate disk is recommended for journaling. If you use an SSD here, you can even improve the performance. Speaking of SSDs: Sheepdog has a Discard/Trim function. However, this is not aimed at the back-end storage but at the hard disk images of virtual servers. When the user releases space on the virtual machine, the VM can inform the underlying storage system, in this case Sheepdog. This function is disabled in the default configuration, and it only works with a relatively new software stack, including QEMU version 1.5, a Linux 3.4 kernel, and the latest version of Sheepdog.

A sheep process can manage multiple disks, which then form a local RAID 0 with the known advantages and disadvantages. The number of disks managed by Sheepdog is transparent to the members of the flock. Whether and how many disks a computer manages has no influence on the distribution of the data. If one drive fails, the other "sheep" just keep on grazing. The logfile of the sheep daemon and information about the Sheepdog cluster are always stored on the first disk.

Safety First!

A good distributed storage system has to meet high-availability requirements. Replication, as a basic mechanism, has established itself here. In the standard configuration, Sheepdog sets a copy factor of 3 – the maximum value is 31. This value cannot be changed later – at least, not without backing up all your data and recovering the storage cluster. Once configured, Sheepdog uses this replication factor for all data to be stored. For individual objects, however, you can set a different number of copies (Listing 3).

Listing 3: Defining Replication Factors

    # dog vdi list
    Name Id Size Used Shared Creation time VDI id Copies Tag
    one.img 0 4.0 MB 0.0 MB 0.0 MB 2014-03-01 10:15 36467d 1
    three.img 0 4.0 MB 0.0 MB 0.0 MB 2014-03-01 10:16 4e5a1c 3
    two.img 0 4.0 MB 0.0 MB 0.0 MB 2014-03-01 10:15 a27d79 2
    quark.img 0 4.0 MB 0.0 MB 0.0 MB 2014-03-01 10:16 dfc1b0 31
    #

The software, however, does not perform any sanity checks. You can therefore specify a replication factor that is greater than the number of available storage computers, but only in theory. In practice, the software stops at its physical limits. Despite replication, Sheepdog still insists on distributing the data chunks across the available sheep. This means that the copies are as wildly distributed as the data itself. If a server fails, Sheepdog reorganizes the chunks to keep the replication factor. The same thing happens when the failed server resumes its service. If this behavior is not desired, you need to disable Auto Recovery by stipulating:

dog cluster recover disable

Data availability via replication of course costs disk space. In the standard configuration, the Sheepdog cluster must provide three times the nominal capacity. You can save a little space with erasure coding [11], which Sheepdog also supports. The configuration options are similar to the copy factor. You define the default setting for all data when setting up the Sheepdog network. You can assign different values for individual objects to change the number of data or parity stripes. The maximum for the latter is 15. The powers of two between 2 and 16 are configurable for data stripes.
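A quick calculation shows how much raw capacity the two approaches need. The sketch below is plain arithmetic rather than Sheepdog code. The function names are invented for this example, and the lower bound of one parity stripe is an assumption (the text only gives the maximum).

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Raw capacity needed when every byte is stored `copies` times. */
uint64_t raw_bytes_replicated(uint64_t nominal, unsigned copies)
{
    assert(copies >= 1 && copies <= 31);  /* 31 is the maximum copy factor */
    return nominal * copies;
}

/* Data stripes: a power of two from 2 to 16; parity stripes: at most 15. */
bool erasure_params_valid(unsigned data, unsigned parity)
{
    bool power_of_two = data != 0 && (data & (data - 1)) == 0;

    return power_of_two && data >= 2 && data <= 16 &&
           parity >= 1 && parity <= 15;  /* lower bound 1 is an assumption */
}

/* Raw capacity needed with `data` data stripes and `parity` parity stripes. */
uint64_t raw_bytes_erasure(uint64_t nominal, unsigned data, unsigned parity)
{
    assert(erasure_params_valid(data, parity));

    /* Round up to whole stripes, then add the parity share per stripe. */
    uint64_t stripes = (nominal + data - 1) / data;

    return stripes * (data + parity);
}
```

For 100 units of nominal data, three copies need 300 units of raw space, whereas four data stripes plus two parity stripes need 150. In general, the overhead factor of erasure coding is (data + parity) / data, compared with the copy factor itself for replication.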

Come In – The Doors Are Open

Although Sheepdog is primarily aimed at QEMU, you now have some options for storing normal data in a Sheepdog cluster. If you come from the SAN camp, you might like to check out the iSCSI setup. The trick here lies in the backing-store parameter. If the iSCSI daemon runs on one of the sheep, you can simply use the sheep process's corresponding Unix socket. Otherwise, you have to reference the IP address and port of a Sheepdog computer. However, the use of multiple paths has not yet been implemented.

Support for the NFS [12] and HTTP [13] protocols is under development. The former can only handle version 3 and TCP. In the lab, I failed to create a stable cluster. HTTP only serves as a basic framework for the implementation of the Swift interface. With the -r option, you can tell the sheep daemon the IP address and port on which the associated web server is listening, and what size the intermediate buffer has to be for the data transfer.

Incidentally, Swift is the last missing piece in Sheepdog's OpenStack integration [14]. The other two storage components of the open source cloud, Glance [15] and Cinder [16], already cooperate with the flock of sheep.

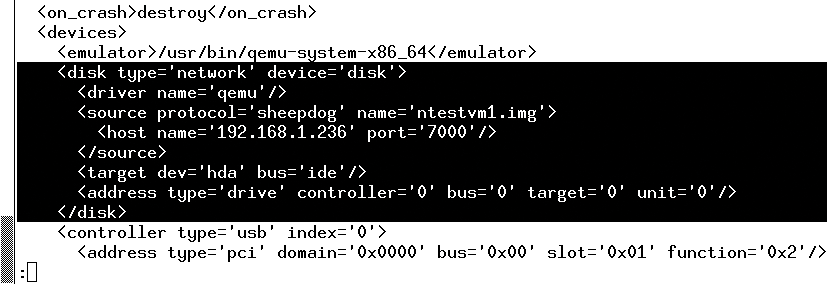

So, of course, do QEMU and libvirt [17]. The open source emulator and virtualizer can manage images directly in the Sheepdog cluster and use them as virtual disks. If you use the NBD protocol [18], you can use it with Sheepdog, too, because the server component, qemu-nbd, works with Sheepdog. The fact that libvirt understands "the language of the sheep" is actually a logical consequence of QEMU functionality.

Libvirt can store disk images as well as complete storage pools in the flock of sheep (Figure 3). The only thing missing is integration with tools such as virt-manager [19]. Last, but not least, I'll take a look at sheepfs. This is a kind of POSIX layer for the Sheepdog cluster – both for the actual storage objects and for the status information. The associated filesystem driver is not part of the kernel. Not unexpectedly, FUSE technology [20] is used here. In principle, sheepfs is only the representation of the dog management tool in the form of directories and files (Listing 4).

Listing 4: Using sheepfs

    # mount |grep sheepfs
    sheepfs on /sheep type fuse (rw,nosuid,nodev,relatime,user_id=0,group_id=0,allow_other)
    #
    # dog vdi list
    Name Id Size Used Shared Creation time VDI id Copies Tag
    eins.img 0 4.0 MB 0.0 MB 0.0 MB 2014-03-01 10:15 36467d 1
    #
    # cat /sheep/vdi/list
    Name Id Size Used Shared Creation time VDI id Copies Tag
    eins.img 0 4.0 MB 0.0 MB 0.0 MB 2014-03-01 10:15 36467d 1
    #
    # dog node list
    Id Host:Port V-Nodes Zone
    0 192.168.1.210:7000 128 3523324096
    1 192.168.1.211:7000 128 3540101312
    2 192.168.1.212:7000 128 3556878528
    3 192.168.1.236:7000 0 3959531712
    #
    # cat /sheep/node/list
    Id Host:Port V-Nodes Zone
    0 192.168.1.210:7000 128 3523324096
    1 192.168.1.211:7000 128 3540101312
    2 192.168.1.212:7000 128 3556878528
    3 192.168.1.236:7000 0 3959531712
    #

What Else?

Sheepdog handles the standard disciplines of virtual storage, such as snapshots and clones, without any fuss. Normally, QEMU manages the appropriate actions, but the objects can also be managed at the sheep level. This is true of creating, displaying, deleting, or rolling back snapshots. Sheepdog admins need to pay attention, however, because the output from dog vdi list changes. The "used" disk space now shows the "difference" between the objects related as snapshots. Cascading filesystem snapshots are also possible (Listing 5).

Listing 5: Snapshots in Sheepdog

    # dog vdi list
    Name Id Size Used Shared Creation time VDI id Copies Tag
    s ntestvm1.img 4 8.0 GB 0.0 MB 2.7 GB 2014-02-05 15:04 982a39 2 feb.snap
    s ntestvm1.img 5 8.0 GB 292 MB 2.4 GB 2014-03-01 11:42 982a3a 2 mar.snap
    s ntestvm1.img 6 8.0 GB 128 MB 2.6 GB 2014-03-10 19:48 982a3b 2 mar2.snap
    ntestvm1.img 0 8.0 GB 276 MB 2.5 GB 2014-03-10 19:49 982a3c 2
    # dog vdi tree
    ntestvm1.img---[2014-02-05 15:04]---[2014-03-01 11:42]---[2014-03-10 19:48]---(you are here)
    #
    # qemu-img snapshot -l sheepdog:192.168.1.236:7000:ntestvm1.img
    Snapshot list:
    ID TAG VM SIZE DATE VM CLOCK
    4 feb.snap 0 2014-03-01 11:42:30 00:00:00.000
    5 mar.snap 0 2014-03-10 19:48:38 00:00:00.000
    6 mar2.snap 0 2014-03-10 19:49:58 00:00:00.000
    #

Sheepdog uses copy-on-write procedures for snapshots and cloning. Thus, the derived storage objects only consume space for data that has changed. For reasons of data consistency, Sheepdog only allows cloning of snapshots. Don't bother looking for encryption and compression, though, and it does not look as if this situation will change any time soon. Instead, the developers point to the use of the appropriate formats for the virtual disks [21].
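The copy-on-write principle, and the way it shifts space between the "Used" and "Shared" columns, can be illustrated with a toy model in C. This is a deliberately simplified sketch, not Sheepdog's data structure: the `struct vdi` layout, the fixed number of chunks, and all function names are assumptions for this example.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define NR_CHUNKS 8  /* toy image with eight chunks */

struct vdi {
    bool allocated[NR_CHUNKS];  /* chunk holds data somewhere in the chain */
    bool own[NR_CHUNKS];        /* chunk was written since the last snapshot */
};

/* A snapshot freezes the current state: everything becomes shared. */
void vdi_snapshot(struct vdi *v)
{
    for (size_t i = 0; i < NR_CHUNKS; i++)
        v->own[i] = false;
}

/* Copy-on-write: the first write after a snapshot creates a private copy. */
void vdi_write(struct vdi *v, size_t chunk)
{
    assert(chunk < NR_CHUNKS);
    v->allocated[chunk] = true;
    v->own[chunk] = true;
}

/* Chunks that consume space of their own in the current image. */
size_t vdi_used(const struct vdi *v)
{
    size_t n = 0;

    for (size_t i = 0; i < NR_CHUNKS; i++)
        n += v->own[i];
    return n;
}

/* Chunks that the current image still shares with its snapshots. */
size_t vdi_shared(const struct vdi *v)
{
    size_t n = 0;

    for (size_t i = 0; i < NR_CHUNKS; i++)
        n += v->allocated[i] && !v->own[i];
    return n;
}
```

In this model, an image with three written chunks shows three used and zero shared chunks. Directly after a snapshot, the picture flips to zero used and three shared, and only chunks written from then on count as used again.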

At the End of the Day

Sheepdog is a very dynamic project with some potential. Integration with libvirt, Cinder, and Glance and the ongoing work in the Swift area clearly show this. The separation of the cluster part is interesting, and a small Corosync setup is quickly accomplished. For professional use in the data center, however, Sheepdog still needs to become more mature. Topics such as geo-replication, encryption, or fire zone concepts play an important role there, and Ceph and GlusterFS are already much more advanced in these areas.

Partial integration into OpenStack is a plus point for the project, but Swift integration must come quickly if Sheepdog does not want to lose touch here. The tool is definitely worth testing in your own lab (see the "Start – With Prudence" box). This is even more true if Corosync or Zookeeper is already in use. If your existing solution for distributed storage leaves nothing to be desired, however, you have nothing to gain by trying out Sheepdog.