Coordinating distributed systems with ZooKeeper

Relaxed in the Zoo

Admins who manage a compute cluster with a certain number of nodes and high-availability (HA) requirements will at some point need a central management tool that takes care of, for example, the naming, grouping, or configuration of the menagerie. Thanks to ZooKeeper [1], which is available under the Apache 2.0 license, not every cluster has to provide its own synchronization service. The software can be integrated into existing systems – for example, in a Hadoop cluster.

Server and Clients

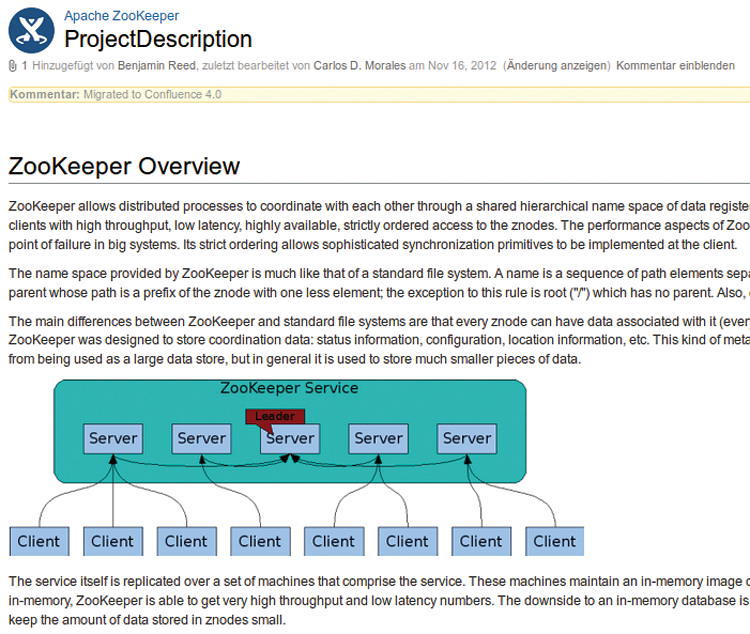

A ZooKeeper server keeps track of the status of all system nodes. In larger decentralized systems, multiple replicating servers can be used (Figure 1). They then synchronize node status information among themselves, making sure that system tasks run in a fixed order and that no inconsistencies occur.

You can imagine ZooKeeper as a distributed filesystem, because it organizes its information analogously to a filesystem. It is headed by a root directory (/). ZooKeeper nodes, or znodes, are maintained below this; the name is intended to distinguish them from computer nodes.

A znode acts both as a binary file and a directory for more znodes, which serve as subnodes. Like most filesystems, every znode comes with metadata which, in addition to version information, regulates read and write permissions.
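The dual role of a znode as file and directory can be illustrated with a small in-memory model. This is only a sketch: the `Znode` and `ZnodeTree` classes and their methods are hypothetical names chosen for illustration and are not part of ZooKeeper's API. Permissions and most metadata are left out.

```python
from dataclasses import dataclass, field


@dataclass
class Znode:
    """Toy znode: holds data like a file and children like a directory."""
    data: bytes = b""
    version: int = 0                       # would grow with every data change
    children: dict = field(default_factory=dict)


class ZnodeTree:
    """Hypothetical in-memory model of the znode hierarchy (not the real API)."""

    def __init__(self):
        self.root = Znode()                # corresponds to "/"

    def _walk(self, path):
        node = self.root
        for part in [p for p in path.split("/") if p]:
            node = node.children[part]     # KeyError if a parent is missing
        return node

    def create(self, path, data=b""):
        parent_path, _, name = path.rstrip("/").rpartition("/")
        parent = self._walk(parent_path)
        if name in parent.children:
            raise ValueError(f"{path} already exists")
        parent.children[name] = Znode(data)

    def get(self, path):
        return self._walk(path).data

    def ls(self, path):
        return sorted(self._walk(path).children)


tree = ZnodeTree()
tree.create("/test", b"HelloWorld!")       # /test carries data ...
tree.create("/test/child", b"nested")      # ... and has a subnode at the same time
print(tree.ls("/"), tree.get("/test"), tree.ls("/test"))
```

In this model, `/test` is readable like a file and listable like a directory, which is the property that sets znodes apart from entries in an ordinary filesystem.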

Order in the Cluster

You can run a ZooKeeper server in standalone mode or with replication; you can see a sample configuration in the online manual [2] [3]. The second case is the better choice for distributed systems, whereas the first is more suitable for testing and development. A group of replicated servers, which the admin assigns to an application and which use the same configuration, form a quorum (see the "Quorum" box).

If three or more independent servers are used in the ZooKeeper setup, they form a ZooKeeper cluster and select a master. The master processes all writes and informs the other servers about the order of the changes. These servers, in turn, offer redundancy if the master fails and offload read requests and notifications from the clients.
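Why three servers is the practical minimum becomes clearer with a little arithmetic. The sketch below assumes the usual majority rule, under which the cluster stays operational only while more than half of its servers can talk to each other. The function names are made up for this example.

```python
def majority(cluster_size: int) -> int:
    """Smallest number of servers that forms a majority."""
    return cluster_size // 2 + 1


def tolerated_failures(cluster_size: int) -> int:
    """Servers that may fail while a majority is still available."""
    return cluster_size - majority(cluster_size)


for size in (1, 2, 3, 4, 5):
    print(size, "servers:", "majority", majority(size),
          "- tolerates", tolerated_failures(size), "failure(s)")
```

Under this assumption, two servers tolerate no failure at all, and a fourth server adds no fault tolerance over three, which is why odd cluster sizes are the common choice.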

It's important to understand the concept of order, on which ZooKeeper's service quality is based. ZooKeeper defines the order of all operations as they arrive. This ordering is preserved as the information spreads across the ZooKeeper cluster to the other clients – even if the master node fails. Although two clients might not see exactly the same state at any given time, they do observe changes in the same order.
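The difference between "same state at the same time" and "same order" can be shown with a toy model. In this sketch, `OrderedLog` stands in for the sequence of updates fixed by the master, and `Client` for a client that applies those updates at its own pace. Both classes are hypothetical and greatly simplified.

```python
class OrderedLog:
    """Toy stand-in for the fixed sequence of updates."""

    def __init__(self):
        self.entries = []

    def append(self, path, data):
        txid = len(self.entries) + 1       # strictly increasing identifier
        self.entries.append((txid, path, data))
        return txid


class Client:
    """Applies updates strictly in log order, possibly lagging behind."""

    def __init__(self, log):
        self.log = log
        self.seen = []

    def catch_up(self, limit=None):
        available = len(self.log.entries)
        end = available if limit is None else min(limit, available)
        self.seen.extend(self.log.entries[len(self.seen):end])


log = OrderedLog()
for path, data in [("/a", "1"), ("/b", "2"), ("/a", "3")]:
    log.append(path, data)

fast, slow = Client(log), Client(log)
fast.catch_up()             # has seen all three updates
slow.catch_up(limit=2)      # lags one update behind

# The slow client's view is always a prefix of the fast client's view.
print(slow.seen == fast.seen[:len(slow.seen)])
```

The slow client is behind, but it never sees the updates in a different order; once it catches up, both views are identical.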

Available Clients

The ZooKeeper project maintains two clients that are written in Java and C; additionally, wrappers are available for other programming languages. By default, the command-line client expects ZooKeeper to be running on the same host and automatically connects to port 2181 on localhost. The -server 127.0.0.1:2181 option in Listing 1 can thus typically be omitted.

Listing 1: Connecting to the Server

beiske-retina:~ beiske$ bin/zkCli -server 127.0.0.1:2181
[...]
Welcome to ZooKeeper!
JLine support is enabled
[zk: localhost:2181(CONNECTING) 0]
[zk: localhost:2181(CONNECTED) 0]

Know Your Nodes

After connecting, the admin sees a prompt that looks like the last line of Listing 1, where ls / shows whether a node already exists. If this is not the case, the admin can now create znodes:

$ create /test HelloWorld!
Created /test

The content (i.e., HelloWorld!) can be discovered using the get /test command (shown in Listing 2), which also outputs the metadata.

Listing 2: get /test

[zk: localhost:2181(CONNECTED) 11] get /test
HelloWorld!
cZxid = 0xa1f54
ctime = Sun Jul 20 15:22:57 CEST 2014
mZxid = 0xa1f54
mtime = Sun Jul 20 15:22:57 CEST 2014
pZxid = 0xa1f54
cversion = 0
dataVersion = 0
aclVersion = 0
ephemeralOwner = 0x0
dataLength = 11
numChildren = 0

ZooKeeper supports the concept of volatile (ephemeral) and sequential (sequence) znodes – in contrast to a normal distributed filesystem. A volatile znode disappears when the owner's session ends.

Admins usually use this node type to discover hosts in the distributed system. Each server then announces its IP address via a volatile node. If the server loses contact with ZooKeeper, or the session ends, the information will disappear along with the node.
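The host discovery pattern described above can be sketched with a toy registry in which every node remembers the session that created it. The `EphemeralRegistry` class, its methods, the paths, and the IP addresses are all hypothetical; a real client would create ephemeral znodes on the server instead.

```python
class EphemeralRegistry:
    """Toy model: nodes vanish when the session that created them ends."""

    def __init__(self):
        self._nodes = {}                   # path -> (address, owning session)

    def announce(self, path, address, session):
        self._nodes[path] = (address, session)

    def end_session(self, session):
        # Drop every node owned by the session that just ended.
        self._nodes = {p: (a, s) for p, (a, s) in self._nodes.items()
                       if s != session}

    def hosts(self, parent):
        prefix = parent.rstrip("/") + "/"
        return sorted(a for p, (a, _) in self._nodes.items()
                      if p.startswith(prefix))


registry = EphemeralRegistry()
registry.announce("/hosts/web1", "10.0.0.1", session=1)
registry.announce("/hosts/web2", "10.0.0.2", session=2)
before = registry.hosts("/hosts")

registry.end_session(2)                    # web2 loses contact
after = registry.hosts("/hosts")
print(before, after)
```

No explicit deregistration step is needed: the list of hosts shrinks as a side effect of the session ending.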

ZooKeeper automatically appends a suffix of consecutive numbers to the names of newly generated sequential znodes. A leader election can easily be implemented with ZooKeeper by having new servers publish their information in znodes that are both sequential and volatile at the same time (see the "Leader Election" box).

To create double nodes like this, the admin uses the -e (ephemeral) and -s (sequence) flags:

$ create -e -s /myEphemeralAndSequentialNode \
ThisIsASequentialAndEphemeralNode
Created /myEphemeralAndSequentialNode0000000001

The contents of these nodes can be read with get, as demonstrated in Listing 3. Again, the command outputs the metadata in addition to the content.

Listing 3: Volatile and Sequential Nodes

[zk: localhost:2181(CONNECTED) 14] get /myEphemeralAndSequentialNode0000000001
ThisIsASequentialAndEphemeralNode
cZxid = 0xa1f55
[...]
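One common way to turn such nodes into a leader election is to treat the candidate with the lowest sequence number as the leader. The sketch below assumes that scheme and a 10-digit numeric suffix, as in the node name shown above. The candidate names and helper functions are hypothetical.

```python
def sequence_number(name):
    """Numeric suffix of a sequential node name (assumed: last 10 digits)."""
    return int(name[-10:])


def elect_leader(candidates):
    """The candidate with the lowest sequence number leads."""
    return min(candidates, key=sequence_number)


def predecessor(candidates, me):
    """Node just ahead of `me`; a candidate could watch it to learn its turn."""
    ordered = sorted(candidates, key=sequence_number)
    index = ordered.index(me)
    return ordered[index - 1] if index > 0 else None


candidates = ["candidate-0000000003",
              "candidate-0000000001",
              "candidate-0000000002"]
leader = elect_leader(candidates)
print("leader:", leader)

# If the leader's session ends, its volatile node disappears
# and the next candidate in line takes over.
remaining = [c for c in candidates if c != leader]
print("new leader:", elect_leader(remaining))
```

Because the nodes are volatile, a failed leader removes itself from the list automatically; because they are sequential, the succession order is unambiguous.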

Messenger Service

Another key feature of ZooKeeper is that it offers watchers for znodes; you can use them to set up a notification system. To do so, each client that wants to track a particular component registers a watcher on the corresponding znode. The registered clients are then notified when the znode content changes.

To set a watcher on an existing znode, you run the stat command, adding the watch parameter:

$ stat /test watch
cZxid = 0xa1f60
[...]

If you connect with ZooKeeper from a different terminal, you can change the znode:

$ set /test ByeCruelWorld!
cZxid = 0xa1f60
[...]

The watcher set up in the first session now sends a message via the command line:

WATCHER::
WatchedEvent state:SyncConnected type:NodeDataChanged path:/test

A user on the system thus discovers that the content of the znode has changed and can view the new content if they so desire.

However, there is a downside: Watchers only fire once. To receive further updates, you need to re-register the watcher, and an update could be lost in the meantime. You can detect this, however, by comparing the znode version numbers. If every single version matters, sequential nodes are recommended.
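The one-shot behavior and the version check can be illustrated with a small model. `WatchedNode` is a hypothetical stand-in for a znode, not the real client API: reading the node optionally registers a watcher, and every write increments the version and fires the registered watchers exactly once.

```python
class WatchedNode:
    """Toy znode with one-shot watchers and a data version counter."""

    def __init__(self, data):
        self.data = data
        self.version = 0
        self._watchers = []

    def get(self, watcher=None):
        if watcher is not None:
            self._watchers.append(watcher)
        return self.data, self.version

    def set(self, data):
        self.data = data
        self.version += 1
        # One-shot: clear the list before notifying.
        watchers, self._watchers = self._watchers, []
        for notify in watchers:
            notify("NodeDataChanged")


events = []
node = WatchedNode("HelloWorld!")
_, last_version = node.get(watcher=events.append)

node.set("ByeCruelWorld!")      # fires the watcher
node.set("HelloAgain!")         # no watcher registered anymore: no event

# Re-register and compare versions to detect what happened in between.
data, version = node.get(watcher=events.append)
missed = version - last_version - 1
print(events, data, "missed updates:", missed)
```

Only one event arrives although the data changed twice; the gap in the version numbers reveals that one intermediate update went unnoticed.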

Zoo Rules

ZooKeeper guarantees order by making every update part of a fixed sequence. Although not all clients may reside in the same time frame, they still see all updates in the same order. This approach makes it possible to make write access dependent on a particular version of the znode: If two clients attempt to update the same znode in the same version, only one of the updates will prevail because the znode receives a different version number after the first update. This makes it easy to set up distributed counters and make minor updates to node data.
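A version-dependent write behaves like a compare-and-set operation. The sketch below models this with a hypothetical `VersionedNode` class and builds a counter on top of it by retrying after a version conflict. The class, the exception, and the `increment` helper are illustrative names, not the real client API.

```python
class BadVersionError(Exception):
    """Raised when the expected version no longer matches."""


class VersionedNode:
    """Toy znode whose writes succeed only for the expected version."""

    def __init__(self, data=0):
        self.data = data
        self.version = 0

    def get(self):
        return self.data, self.version

    def set(self, data, expected_version):
        if expected_version != self.version:
            raise BadVersionError(
                f"expected {expected_version}, found {self.version}")
        self.data = data
        self.version += 1


def increment(node):
    """Counter built on conditional writes: re-read and retry on conflict."""
    while True:
        value, version = node.get()
        try:
            node.set(value + 1, version)
            return value + 1
        except BadVersionError:
            continue


counter = VersionedNode(0)
_, seen_by_a = counter.get()
_, seen_by_b = counter.get()       # both clients read version 0

counter.set(1, seen_by_a)          # client A wins
try:
    counter.set(1, seen_by_b)      # client B's write is rejected
    conflict = False
except BadVersionError:
    conflict = True

total = increment(counter)         # a retrying client still gets through
print(conflict, total, counter.get())
```

The losing client does not overwrite the winner's update silently; it learns about the conflict and can decide whether to retry with fresh data.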

ZooKeeper also offers the possibility of carrying out several update operations in one fell swoop. It applies them atomically, which means that it performs all of the operations, or none of them.

If you want to feed ZooKeeper with data that needs to be consistent across one or more znodes, one option is to use the multi-update API. However, you should consider the fact that the API is not as mature as ACID transactions in traditional SQL databases. You cannot simply type BEGIN TRANSACTION, because you still have to define the expected version numbers of the znodes involved.
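The all-or-nothing behavior combined with expected version numbers can be sketched as follows. The `multi_update` function and the dictionary-based store are hypothetical simplifications; the sketch also assumes that each path appears at most once per batch.

```python
class BadVersionError(Exception):
    """Raised when a znode is not at the expected version."""


def multi_update(store, operations):
    """Apply all (path, new_data, expected_version) operations or none.

    `store` maps path -> (data, version).
    """
    # Phase 1: validate every expected version before touching anything.
    for path, _new_data, expected_version in operations:
        _data, version = store[path]
        if version != expected_version:
            raise BadVersionError(path)
    # Phase 2: all checks passed, so apply the whole batch.
    for path, new_data, expected_version in operations:
        store[path] = (new_data, expected_version + 1)


store = {"/config/a": ("old-a", 0), "/config/b": ("old-b", 3)}
multi_update(store, [("/config/a", "new-a", 0),
                     ("/config/b", "new-b", 3)])

try:
    # /config/b is at version 4 by now, so the whole batch is rejected.
    multi_update(store, [("/config/a", "newer-a", 1),
                         ("/config/b", "newer-b", 3)])
    rejected = False
except BadVersionError:
    rejected = True

print(store, rejected)
```

In the second call, the first operation would have been valid on its own, but it is not applied because the batch as a whole fails.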

Limits

Even if it seems tempting to use one system for everything, you might run into some obstacles if you try to replace existing filesystems with ZooKeeper. The first obstacle is the jute.maxbuffer setting, which defines a 1MB size limit for single znodes by default. The developers recommend not changing this value, because ZooKeeper is not a large data repository.

I found an exception to this rule: If a client using many watchers loses the connection to ZooKeeper, the client library (which is called Curator [4] in this scenario) will try to reconstruct all watchers again as soon as the client connects successfully.

Because the same size limit applies to all messages sent and received by ZooKeeper, the admin has to increase it so that Curator can successfully reconnect the clients to ZooKeeper. So, what is Curator? It is a library that helps create a solid ZooKeeper client implementation that correctly handles all possible exceptions and special cases in the network [5].

On the other hand, you can expect limitations in data throughput if you use ZooKeeper as a message service. Because the software mainly relies on correctness and consistency, speed and availability are secondary (see the "Zab vs. Paxos" and "The CAP theorem" boxes).

In the Wild

Found [7], which offers Elasticsearch (ES) instances [8] and is also my employer, uses ZooKeeper intensively to discover services, allocate resources, carry out leader elections, and send messages with high priority. The complete service consists of many systems that have read and write access to ZooKeeper.

At Found, ZooKeeper is specifically used in combination with the client console: The web application opens a window for customers into the world of ZooKeeper and lets them manage the ES clusters hosted by Found. If a customer creates a new cluster, or changes something on an existing one, this step ends up as a scheduled change request in ZooKeeper.

Last Instance

A constructor looks for new jobs in ZooKeeper and processes them by calculating how many ES instances it needs and whether it can reuse the existing instances. Based on this information, it updates the instance list for each ES server and waits for the new instances to start.

A small application managed by ZooKeeper monitors the instance list on each server running Elasticsearch instances. It starts and stops LXC containers with ES instances on demand. When starting a search instance, a small ES plugin provides the IP address and port to ZooKeeper and discovers further ES instances in order to form a cluster.

The constructor will be waiting for the address information provided by ZooKeeper so it can connect with the instances and verify whether the cluster is there. If no feedback arrives within a certain time, the constructor cancels the changes. ES plugins that are misconfigured or too memory-hungry are typical problems that prevent the start of new nodes.

HA and Backup

To give customers high availability and failure safety, a proxy is deployed upstream of each search cluster. It forwards the traffic to the appropriate server, regardless of whether or not changes are pending. At the same time, the proxy reads the information that the ES instances send to ZooKeeper. Based on this knowledge, the proxy decides whether it can forward traffic to other instances, or whether it needs to completely block requests so that a stressed cluster's state of health does not deteriorate.

For backups, ZooKeeper looks after the leader election. Admins use the backup and restore API of Elasticsearch, which does not save instances, just indexes and cluster settings. Found therefore only preserves the content of clusters, not the servers and instances.

Because the API does not support periodic snapshots, Found implemented its own scheduler. To make it as reliable as the ES cluster for which it is responsible, a scheduler runs on each server with ES instances. This approach removes the single point of failure for Found's HA customers. The schedulers are not supposed to run on independent servers because this introduces additional sources of error. Because the backups occur on a per-cluster basis and not per instance, the backup schedulers must be coordinated. To make sure that only one scheduler triggers snapshots, a leader is elected for each cluster via ZooKeeper.

Found also needs a connection that is as latency free as possible, because many systems rely on ZooKeeper. Thus, one ZooKeeper cluster is used per region. Having the client and server in the same region increases the reliability of the network. However, admins need to consider errors that occur during maintenance work on the cluster.

Found's experience also shows that it is extremely important to consider in advance what information a client should keep in its local cache, and which actions it can carry out if the connection to ZooKeeper breaks down.

Size Matters

Although Found uses ZooKeeper very intensively, the company also makes sure not to exceed certain limits. Just because systems A and B both use ZooKeeper does not mean that they should exchange all of their messages via ZooKeeper. Instead, the value and urgency of the information must be high enough to justify the cost of a transmission, which depends on its size and update frequency. Admins must consider the following:

- Application logs: As annoying as missing logs might be when debugging, they are still usually the first thing an admin sacrifices when a system reaches its limits. Admins should choose a solution with fewer consistency requirements.

- Binary files: They are simply too big. They would necessitate optimizing ZooKeeper to a point at which exception problems would probably arise that nobody has ever tested. That's why Found stores binaries in Amazon S3 and only manages the URLs with ZooKeeper.

- Metrics: This may work at the beginning, but in the longer term, scaling problems will result. Sending metrics via ZooKeeper would simply be too expensive, because the admin would have to provide a buffer between the intended and the available capacity. This applies to metrics in general – with the exception of two metrics that are critical for application logic: the current disk usage and memory usage of each node. Proxies use the former to stop indexing if a customer exceeds their disk capacity. The latter will be important when it comes to updating customer contracts.

First Aid

ZooKeeper has become a fairly large open source project with numerous developers who implement advanced features with a strong focus on quality. Of course, some effort is required to become familiar with ZooKeeper, but that should not deter admins. The effort is worthwhile, especially for managers of distributed systems.

A few manuals can help you get started: The "Getting Started Guide" [9] shows how to set up a single ZooKeeper server, how to connect with it via a shell, and how to implement a few basic operations. The more detailed "Programmer's Guide" [10] brings together several important tips that admins should note before installing ZooKeeper. The "Administrator's Guide" [11] describes the options that are relevant to a production cluster.

Some admins doubt that using one system for deployments and another for upgrades actually provides any benefits. If you do this, however, make sure that each of these systems is as small and independent as possible. For Found, ZooKeeper is an important step toward achieving this goal.