What's new in OpenStack 2014.2 "Juno"

Do You Know Juno?

OpenStack no longer needs an introduction for system administrators working in the modern universe of the cloud. The open source cloud platform, which was originally created by Rackspace, is now supported by several leading cloud providers. The OpenStack project [1] relies on a strict cycle. New releases appear in April and October no matter what the cost. To date, the release manager, Thierry Carrez, has kept the project on schedule, and the latest OpenStack 2014.2 "Juno" release appeared on October 16.

Conservative Renewal

Juno continues in the OpenStack tradition as a carefully extended OpenStack distribution. The good news for admins is that the existing APIs remain compatible with those that are already in place. So if you use applications that talk directly to the APIs, you can continue to use them in Juno. All told, the amount of work involved in upgrading from the previous Icehouse edition to Juno is quite manageable.

After the chaos of the first versions of OpenStack, the developers understand that it does nothing for the prestige of the project if no stone is left unturned between two releases. If things change, the developers announce the changes clearly and at least one version in advance, which leaves enough time to prepare carefully and professionally for an upgrade.

Neutron

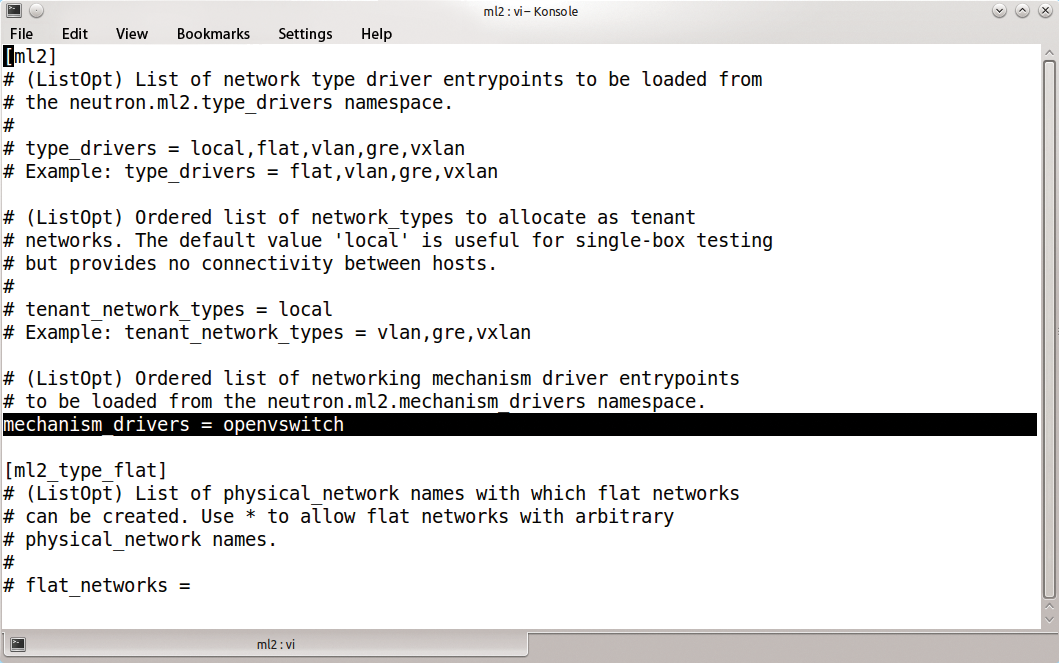

The Open vSwitch driver in OpenStack's Neutron networking component was tagged as deprecated in Icehouse. Juno no longer contains the driver, because it has been replaced by the "Modular Layer 2" framework, which is usually just called ML2 (Figure 1). If you have a cloud that uses the old Open vSwitch driver, you need to switch to ML2.
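Before an upgrade, it can help to check which core plugin a Neutron configuration still references. The following is a minimal sketch, not an official migration tool: the helper name `classify_core_plugin` is hypothetical, and the plugin strings are assumptions based on values commonly found in `neutron.conf`, so compare them against your own installation.

```python
import configparser

# Assumption: typical core_plugin values in neutron.conf; verify locally.
LEGACY_OVS_PLUGIN = (
    "neutron.plugins.openvswitch.ovs_neutron_plugin.OVSNeutronPluginV2"
)
ML2_PLUGINS = {"ml2", "neutron.plugins.ml2.plugin.Ml2Plugin"}


def classify_core_plugin(conf_text):
    """Return 'legacy-ovs', 'ml2', or 'other' for the given neutron.conf text."""
    # interpolation=None keeps '%' characters literal; strict=False tolerates
    # duplicate options, which can occur in hand-edited configuration files.
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(conf_text)
    plugin = parser.defaults().get("core_plugin", "").strip()
    if plugin == LEGACY_OVS_PLUGIN:
        return "legacy-ovs"
    if plugin in ML2_PLUGINS:
        return "ml2"
    return "other"


sample = "[DEFAULT]\ncore_plugin = ml2\n"
print(classify_core_plugin(sample))
```

A result of `legacy-ovs` would indicate that the setup still depends on the driver removed in Juno and needs to move to ML2 first.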

The Layer 3 agent finally supports high availability natively, a feature for which admins have long been waiting. Up to and including Icehouse, Neutron did nothing at all about failures of the network node, even though such a failure isolated the active VMs from the outside world. If you wanted to use high availability (HA) on Layer 3, you had to work with tools such as Pacemaker, which rarely produced satisfactory results but did add more complexity to OpenStack scenarios.

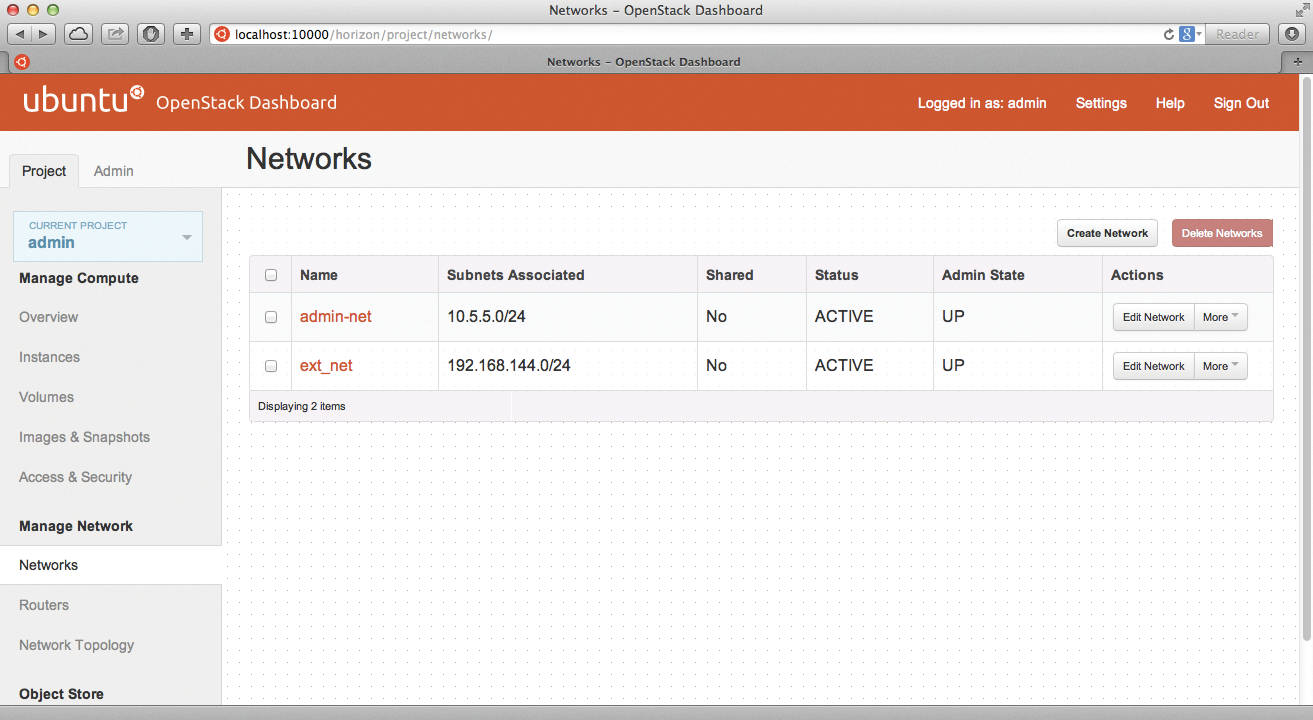

The Neutron Scheduler now ensures that L3 networks automatically switch to other systems should the primary host fail. The developers have garnished the feature with plugins based on VRRP, Conntrackd, and Keepalived; when this issue went to press, it was still uncertain whether all plugins would actually be included with Juno – for example, the internal project review was not completed for the Conntrackd plugin. As a general rule, though, Neutron will have HA features for L3 networks in Juno, and you can regard this change as a milestone (Figure 2).
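The failover idea can be illustrated with a strongly simplified model of a VRRP-style election: among the nodes that are still alive, the one with the highest priority takes over. This sketch is not Neutron code; the `L3Agent` class, the host names, and the priorities are hypothetical, and real VRRP adds advertisements, timers, and tie-breaking rules that are left out here.

```python
from dataclasses import dataclass


@dataclass
class L3Agent:
    host: str           # hypothetical network node name
    priority: int       # VRRP-style priority; the higher value wins
    alive: bool = True  # whether the node still answers


def elect_master(agents):
    """Return the live agent with the highest priority, or None if all are down."""
    live = [agent for agent in agents if agent.alive]
    if not live:
        return None
    return max(live, key=lambda agent: agent.priority)


agents = [L3Agent("net-node-1", 100), L3Agent("net-node-2", 50)]
print(elect_master(agents).host)  # net-node-1

agents[0].alive = False           # simulate failure of the primary host
print(elect_master(agents).host)  # net-node-2
```

The point of the model is that no external cluster manager is involved: the election depends only on which nodes are alive and on their priorities.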

Juno also brings other Neutron changes: New drivers for a whole bunch of network hardware, including Arista, Brocade, and Nuage, are on board, as are new load-balancing drivers for appliances by A10 Networks. In Juno, Neutron thus grows much closer to devices that commonly appear as infrastructure in data centers.

Keystone and More

Keystone provides identity, token, catalog, and policy services for OpenStack. Behind the scenes, Keystone is a kind of root application; without it, all the wheels stand still. For Juno, the Keystone developers came up with several new features, the most important being basic support for a federation model.

In simple terms, the federation model works as follows: Keystone identifies a user by reference to login credentials and issues a matching token. With this token, the user turns to a different, remote Keystone instance, which validates the token and grants the user access to its own cloud. Unfortunately, this feature is still marked as experimental for Juno, but the function should be officially available in the upcoming K release or earlier.
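The flow just described can be sketched as a toy model: one instance checks credentials and issues a signed token, and a second instance that trusts the first validates it. Everything here is an assumption for illustration only. The `ToyKeystone` class, its methods, and the shared-secret HMAC signature are hypothetical and are not how Keystone implements federation.

```python
import hashlib
import hmac
import json


class ToyKeystone:
    """Toy stand-in for a Keystone instance; not the real Keystone API."""

    def __init__(self, name, users=None):
        self.name = name
        self.users = users or {}  # username -> password (demo only)
        self.trusted = {}         # issuer name -> shared secret (bytes)

    def trust(self, issuer_name, secret):
        """Register another instance as a trusted token issuer."""
        self.trusted[issuer_name] = secret

    def issue_token(self, username, password, secret):
        """Check the login credentials and return a signed token."""
        if self.users.get(username) != password:
            raise PermissionError("invalid credentials")
        payload = json.dumps({"user": username, "issuer": self.name},
                             sort_keys=True)
        signature = hmac.new(secret, payload.encode(),
                             hashlib.sha256).hexdigest()
        return {"payload": payload, "signature": signature}

    def validate(self, token):
        """Return the user name if a trusted issuer signed the token, else None."""
        claims = json.loads(token["payload"])
        secret = self.trusted.get(claims["issuer"])
        if secret is None:
            return None
        expected = hmac.new(secret, token["payload"].encode(),
                            hashlib.sha256).hexdigest()
        if hmac.compare_digest(expected, token["signature"]):
            return claims["user"]
        return None


secret = b"demo-shared-secret"
home = ToyKeystone("home", users={"alice": "s3cret"})
remote = ToyKeystone("remote")
remote.trust("home", secret)

token = home.issue_token("alice", "s3cret", secret)
print(remote.validate(token))  # alice
```

The remote instance never sees the user's password; it only needs to trust the issuer, which is the core idea behind a federation model.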

Other Keystone improvements include better LDAP support and compressed tokens, as well as many changes to the version 3 API. Also, the hypervisor drivers for KVM on Libvirt, VMware, and Hyper-V in Nova have been treated to new features. Hyper-V VMs can now be restarted with a soft reboot; previously, only a hard reboot was possible.

The upcoming K version of OpenStack will introduce a feature named "Scheduler as a Service," which is based on the component that is still called the nova-scheduler in Juno. To be able to move forward, the Nova scheduler has already undergone a major overhaul in Juno.

Cinder

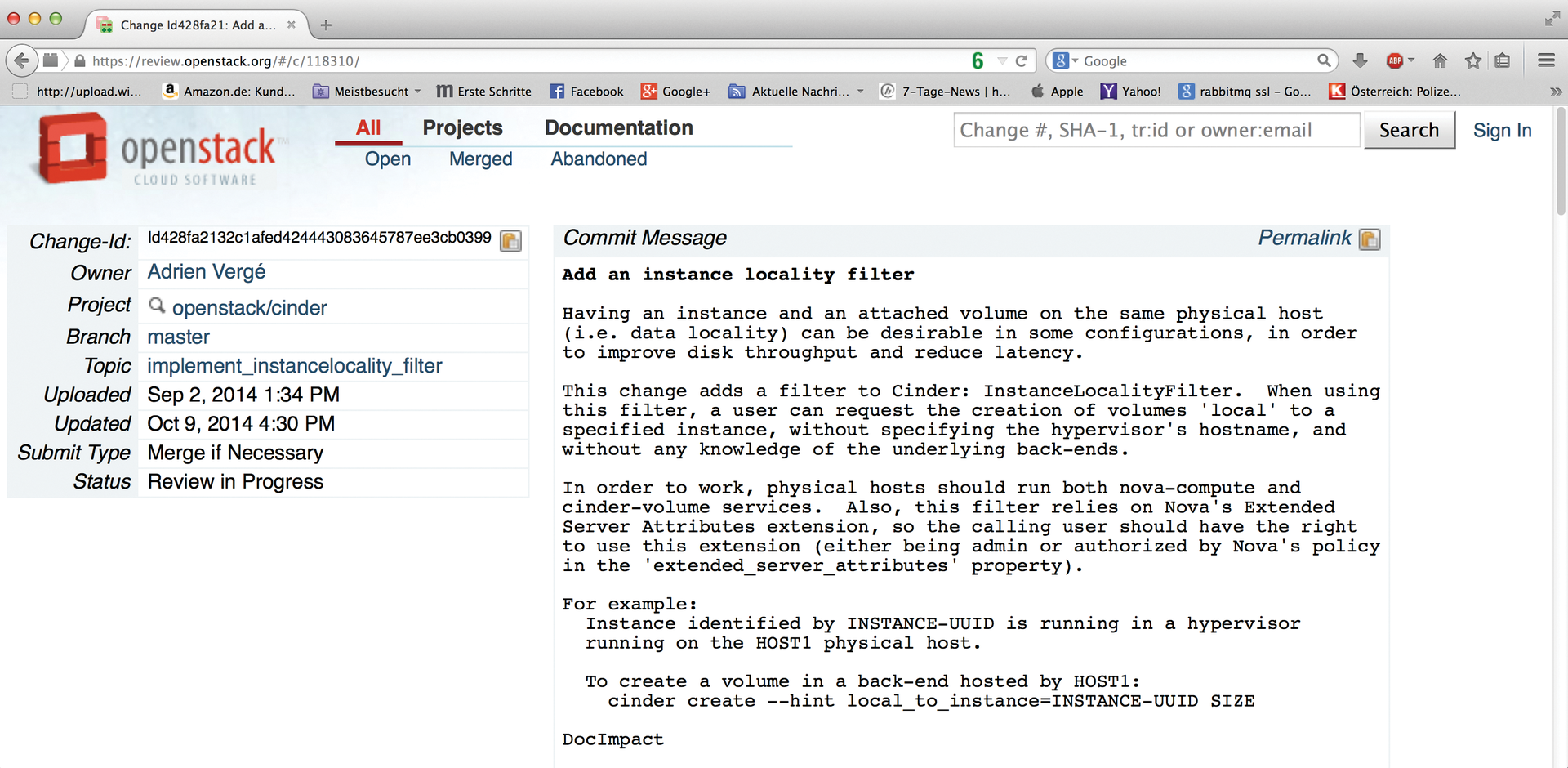

The Cinder block storage component (Figure 3) adds several new drivers, for example, for Datera, EMC, ProphetStor, and smbfs – the Samba file system. Additionally, Cinder now supports volume replication if the underlying storage technology can handle it.

Ceilometer

The developers have also made much progress with OpenStack's metering component Ceilometer, which now has much more extensive metrics. Traffic that runs through VPNaaS, LBaaS, or the FWaaS firewall solution can no longer escape the service's attention. Additionally, Ceilometer can now use the Intelligent Platform Management Interface (IPMI) to access machines directly, and it supports the Xen API for communication with Xen-based servers. In Juno, Ceilometer is seamlessly integrated with the deployment tool Ironic.

The performance of the service is also improved: In the future, Ceilometer will use multiple worker processes for writes to its database, rather than a single thread as before. As a result, it should be possible for the service to handle larger amounts of data.

Little has changed with Heat, the orchestration component. The latest release adds only a few template features, which seem more relevant for niche setups than for most mainstream deployments.

Big Guns: Ironic

No later than the next release, a piece of software that many cloud admins already desperately need will be promoted to an OpenStack core component. For the first time, Ironic will become a central part of the cloud solution. OpenStack thus has a bare metal deployment driver that lets you integrate new hardware directly.

Anyone who has ever used Ubuntu's MAAS will find many familiar concepts in Ironic, because the driver lets you handle bare metal within an OpenStack cloud. When you bolt a machine into the rack, Ironic runs PXE and many other tools to ensure that the computer is treated to a complete operating system.

Officially, this process runs under the keyword of "bare metal provisioning." The process culminates in the installation of the actual OpenStack services that act as a cloud in the cloud. The functions that enable the automatic BIOS update of some brands or the correct configuration of RAID hard drives are really neat.

Triple O

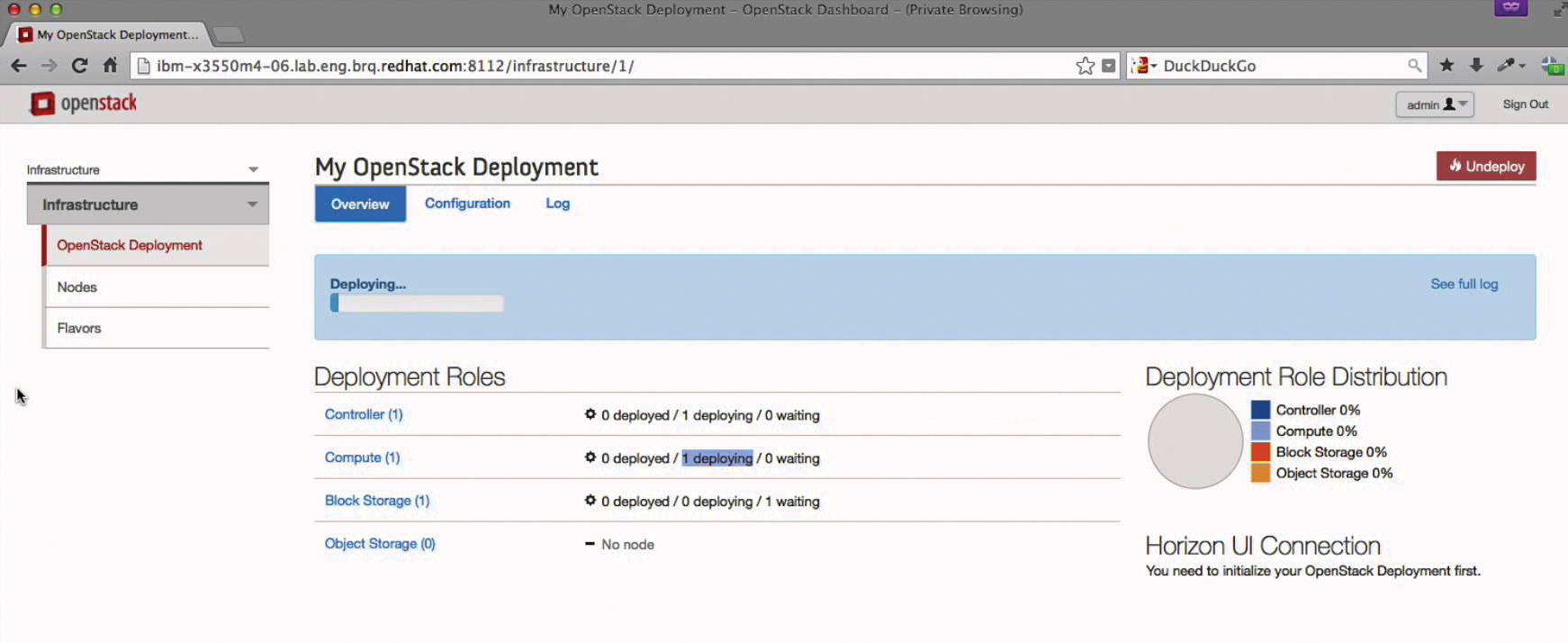

HP's Triple O (the triple "O" stands for OpenStack On OpenStack) [2] relies on Ironic at its core, but Ironic actually only becomes really convenient with Triple O, which comes with a GUI by the name of Tuskar (Figure 4). Triple O is a very active subproject within the OpenStack project; in recent months, its developers have completed huge amounts of work to make OpenStack On OpenStack a workable solution.

Ironic is definitely on its way to becoming an official core component; that is, it will soon be part of the innermost circle of the OpenStack environment. However, the path Ironic had to tread before receiving this accolade was anything but short: HP participated extensively in Ironic's development. In the future, Ironic will offer a genuine alternative to provider-specific solutions, allowing Triple O to become one of the great OpenStack players.

Conclusions

Once again, OpenStack presents a new version as a conservative advancement on its predecessor; Juno also has a few smart additional features on top. The good news for admins is: if you can manage Icehouse, you will have no difficulty with Juno, because there are hardly any differences.

The removal of the legacy Open vSwitch driver can pose a challenge in various setups; if you did not listen to the developer warnings in Icehouse, you need to do so now. The remaining changes, however, fit seamlessly into the picture; updates from Icehouse to Juno are said to be no more than moderately challenging. The box titled "OpenStack and Automation" describes what the latest release achieves in terms of automation.

All told, Juno is a successful release. These tiny-step releases are not popular across the community, by the way. You will often find critical comments on the mailing lists, because introducing new features takes quite a while with the current setup, and several releases can see the light of day before a feature is actually ready for use. This conflict could fuel debate in the community in the future, but for admins, the current model makes far more sense.