Coming to grips with grep

Patterns

We are all creatures of habit in varying degrees, and I frequently find myself settling into various routines in my job as a sys admin. I have had many a moment in which I lucidly caught myself thinking: "Not this task again! I really need to speed this process up." By simply automating a procedure or ultimately deprecating and condemning it as redundant, I could save a lot of time in the long run.

When I recently spent some time away from the computer monitor, I had a chance to consider what I could use to help automate tasks and to think about the procedures that I face routinely. In the end, I concluded that clever little command lines were the way forward, and in most cases, these could translate into clever little shell scripts.

To increase my efficiency in creating command lines and scripts, I made a conscious decision to start again and effectively go back to basics with some of the core shell commands. I wanted a timely way to improve my understanding of a few key packages so that I wasn't always looking up a parameter or switch and could, thus, automate tasks more quickly.

When I typed history at the prompt, lo and behold, that old favorite grep stood out as something I use continually throughout my working day. Therefore, in this article, I will dig into some of the history of grep and attempt to help you improve your readily available knowledge, with the aim of being able to solve problems more efficiently and quickly.

Not Such a Bad Pilot

As most sys admins soon discover, one of the heroes of the Linux command line is the grep command. With grep you can rifle through just one file, all of a directory, the process table, and much more without batting an eyelid.

The stalwart that is grep comes to a command prompt near you in a few forms, but I'll come to that a little later. First, I want to get my hands dirty with a reminder of some of the basics.

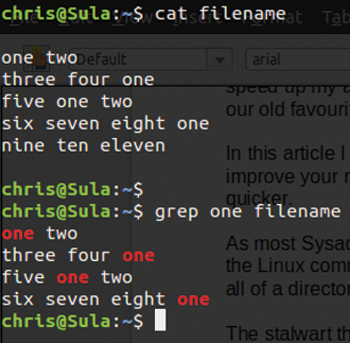

To get moving in the right direction, I'll consider a text file with five lines of words (Figure 1, top). Using that file, I'll start with a simple grep example that searches for a pattern within a file:

# grep one filename

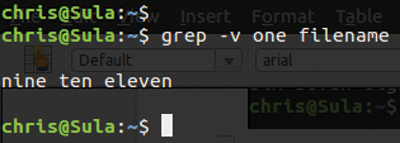

The output in the bottom of Figure 1 shows all the lines that contain the search string (i.e., "one" in this example). Then, I reverse that operation and output everything that does not have the pattern "one" (Figure 2):

# grep -v one filename

These two examples are surprisingly simple but powerful. You can see everything that does and, conversely, does not include a specified pattern. This is not rocket science, but combined with some suitably juicy command-line tools (e.g., awk, sed, and cut) and a little practice, you will soon find yourself boasting a formidable arsenal.
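To make the two commands concrete, here is a minimal sketch that builds a throwaway five-line file and runs both searches. The file contents are made up for illustration and are not the file shown in Figure 1.

```shell
# Build a throwaway five-line sample file (contents are an assumption).
sample=$(mktemp)
printf '%s\n' 'one two' 'three four' 'five one' 'six seven' 'eight nine' > "$sample"

# Lines that contain the pattern "one"
matches=$(grep one "$sample")
printf 'with pattern:\n%s\n' "$matches"

# Lines that do NOT contain the pattern "one"
others=$(grep -v one "$sample")
printf 'without pattern:\n%s\n' "$others"

rm -f "$sample"
```

Between them, the two result sets always account for every line in the file exactly once.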

With this simple introduction, I'll look a little more closely into why grep is so highly regarded.

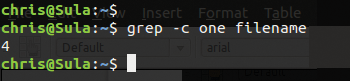

To count how many times the pattern "one" shows up in a file, I use:

# grep -c one filename

The output from grep faithfully reports the number of lines in the file that contain the pattern, as shown in Figure 3. Note that a line containing the pattern twice is still counted only once.
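One detail worth checking for yourself is that -c counts matching lines, not individual occurrences. The following sketch, again with made-up file contents, contrasts -c with counting every occurrence by piping grep -o into wc -l.

```shell
# Sample file in which the first line contains the pattern twice (an assumption).
sample=$(mktemp)
printf '%s\n' 'one plus one' 'two' 'one more' > "$sample"

# -c counts matching LINES: two lines contain "one"
line_count=$(grep -c one "$sample")

# -o prints each match on its own line, so wc -l counts every occurrence
match_count=$(grep -o one "$sample" | wc -l)

echo "matching lines: $line_count, total occurrences: $match_count"

rm -f "$sample"
```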

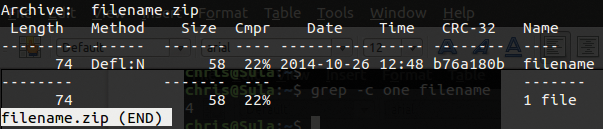

Many companion tools exist to complement grep's functionality, such as less and zgrep. If I simply compress the five-line text file using zip, then I should see the output in Figure 4 when I use less filename.zip to look inside the archive. The excellent less command shows a number of useful details about the shrunken file, such as how much the zip file compresses the original file as a percentage.

Figure 4: The less command looks inside a ZIP file.

The zgrep command can forage among the depths of compressed files and yet promptly return pattern matches. Your mileage may vary with compressed archives containing non-ASCII or binary files. If you think about it for a while, you can see the full potential of this functionality, but if you're unfamiliar with it, I hope you find it as intriguing as I did when I first came across it. To try out zgrep on the zipped text file (Figure 5), enter:

# zgrep one filename.zip

Now I'll dismiss the compression format more commonly associated with Windows operating systems for a moment and doff my cap at files compressed with gzip. Many of the instruction manuals, or info pages, bundled with Unix-like operating systems are compressed to save space. For example, when you run the command,

# info grep

the text you see is actually served up by a viewer that decompresses content from the file /usr/share/info/grep.info.gz on the fly, in much the same way that zgrep and less cope with compressed files.
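For gzip files, the round trip can be sketched as follows. The file name and contents are assumptions, and the example relies on the zgrep wrapper that ships with the gzip package.

```shell
workdir=$(mktemp -d)
printf '%s\n' 'one two' 'three four' 'five one' > "$workdir/sample.txt"

# Compress a copy; -c writes to stdout so the original stays in place
gzip -c "$workdir/sample.txt" > "$workdir/sample.txt.gz"

# zgrep decompresses on the fly and passes its options through to grep
hits=$(zgrep -c one "$workdir/sample.txt.gz")
echo "matching lines in compressed file: $hits"

rm -rf "$workdir"
```

Because zgrep hands its switches to grep, options such as -c, -i, and -n behave just as they do on uncompressed files.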

With the malleable grep command, it's possible to look through subdirectories, too. According to the man page, both the lower- and uppercase versions of -r search recursively through subdirectories; in current GNU grep, the difference is that -r follows symbolic links only when they are named on the command line, whereas -R follows all of them:

# grep -r one /home/chris

The screeds of output from this command might alarm some users executing it on desktops. I was surprised not only that the diligent grep hunted down hidden files (those beginning with a dot) deep in the dark depths of my subdirectories but also at how many subdirectories my Ubuntu desktop needed to operate. It was also surprising that, among the numerous tiny and previously unseen files, a great many returned a hit for the simple pattern "one." However, such unforeseen output is a learning experience that increases my knowledge of how systems work.
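A small, self-contained experiment shows the same behavior without flooding the terminal. The directory layout below is invented for the sketch; --include is a GNU grep option that narrows a recursive search to matching file names.

```shell
# Throwaway directory tree with one hidden directory (layout is an assumption).
tree=$(mktemp -d)
mkdir -p "$tree/docs/deep" "$tree/.cache"
echo 'one visible hit' > "$tree/docs/deep/notes.txt"
echo 'one hidden hit'  > "$tree/.cache/state"
echo 'no match'        > "$tree/docs/readme.txt"

# -r descends into every subdirectory, dot-directories included;
# -l prints only the names of files that contain a match
found=$(grep -rl one "$tree" | sort)
printf 'all hits:\n%s\n' "$found"

# --include restricts the recursive search to file names matching a glob
txt_only=$(grep -rl --include='*.txt' one "$tree")
printf 'only *.txt:\n%s\n' "$txt_only"

rm -rf "$tree"
```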

Let Me Contain My Surprise

Two pieces of output from recursively checking my home directory piqued my interest. The first was a dictionary file I'd forgotten about and hadn't purged from my Trash, although it's not surprising that there might be one or two matches for the word "one" in a dictionary. The second piece of output (Listing 1) also was not expected. Clearly this history and any associated preferences need to be cached somewhere, and where better than deep within a user's home directory hidden away from prying eyes? The very last word aplicaciones shows the hit for the word "one."

Listing 1: Some Recursive Grep Output

/home/chris/.cache/software-center/piston-helper/software-center.ubuntu.\
com,api,2.0,applications,en,ubuntu,precise,\
amd64,,bbc2274d6e4a957eb7ea81cf902df9e0: <snippet>
"description": "La revista para los usuarios de Ubuntu\nNúmero 5: Escritorios \
a Examen\r\n\r\n\r\nEn el interior: Artículos sobre los escritorios Unity, \
Gnome3 y KDE, tutoriales para crear tu propia distro, consejos de seguridad, \
anáslisi de aplicaciones

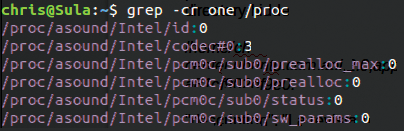

Next, I'll look directly at the /proc virtual filesystem (as opposed to querying the process table per se), which doesn't contain "real files," and compare the results with those from my home directory. Using two of the switches I've already talked about, the grep command in Figure 6 searches recursively and counts the lines that contain the pattern "one."

Figure 6: A search of the /proc virtual filesystem that returns three hits for the pattern "one."

The next example uses the asterisk wildcard, which the shell expands to every non-hidden file in the current directory, and the -l switch, which returns only the filenames of those files that return a positive hit, as opposed to the full line of content that has a match:

# grep -l pattern *

Change it to uppercase -L and you'll only get those files without the pattern, much as the -v switch inverted matching in Figure 2.
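As a quick sanity check of the two switches, the sketch below creates three small files (names and contents are assumptions) and lists those with and without the pattern.

```shell
dir=$(mktemp -d)
echo 'pattern here' > "$dir/a.txt"
echo 'empty inside' > "$dir/b.txt"
echo 'a pattern'    > "$dir/c.txt"

# -l: names of files that contain the pattern
with=$(cd "$dir" && grep -l pattern *)

# -L: names of files that do not contain it
without=$(cd "$dir" && grep -L pattern *)

printf 'with: %s\n' $with
printf 'without: %s\n' $without

rm -rf "$dir"
```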

Another switch I come across frequently is the almost universally used -i, which conveniently tells grep to ignore case when matching, as in:

# grep -i ERROR daemon.log

If you're diagnosing an issue or rescuing a failing system and can't remember whether the service in question writes to its log files in upper- or lowercase, then just flick the -i switch.
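The effect is easy to see on a log that mixes its capitalization. The log lines here are invented for the sketch.

```shell
log=$(mktemp)
printf '%s\n' \
  'Jan 1 ERROR disk full' \
  'Jan 2 error: retrying' \
  'Jan 3 Error cleared' \
  'Jan 4 all good' > "$log"

# Case-sensitive: only the line with the exact spelling ERROR
exact=$(grep -c ERROR "$log")

# Case-insensitive: ERROR, error, and Error all count
anycase=$(grep -ci ERROR "$log")

echo "case-sensitive: $exact, case-insensitive: $anycase"

rm -f "$log"
```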

I hope I have demonstrated that inverting and reporting pattern matches are useful when solving a problem or performing root cause analysis, and when much of a Linux server exists as pure text on a filesystem, you have endless applications to consider.

This Is Some Rescue

As you have seen, the powerful grep outputs a whole line when it matches a pattern. By adding the -o switch, you can output only the string of interest and suppress the other potentially less relevant data. This switch can be very useful when executed on a text file such as this:

# cat searchedfile
start-steven-end
start-raheem-end
start-daniel-end
start-phillipe-end
start-middle-stop
# grep -o "start-.*-end" searchedfile

This statement says to match any middle element between start- and -end (the .* means any run of characters) and, thanks to -o, to print only the matching part of each line rather than the whole line. The final line of the file ends in -stop, so it is left out. As you would expect, this grep statement produces the following output:

start-steven-end
start-raheem-end
start-daniel-end
start-phillipe-end
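The value of -o is easier to see when the lines carry extra text around the part of interest. In this sketch, the surrounding id= and ok fields are assumptions added for illustration.

```shell
f=$(mktemp)
printf '%s\n' \
  'id=1 start-steven-end ok' \
  'id=2 start-middle-stop ok' \
  'id=3 start-daniel-end ok' > "$f"

# Without -o, grep prints the whole matching line
whole=$(grep 'start-.*-end' "$f")
printf 'whole lines:\n%s\n' "$whole"

# With -o, only the text matched by the pattern is printed
only=$(grep -o 'start-.*-end' "$f")
printf 'matches only:\n%s\n' "$only"

rm -f "$f"
```

Keep in mind that .* is greedy: On a line containing more than one -end, the match would stretch to the last one.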

If you add -b to the command, you can tell how many bytes into a file you will find each entry; that is, the byte offset of a pattern.

Although some characters take up more than 1 byte (e.g., Chinese character sets use more than one byte per letter), generally speaking the following examples will apply. Using the same input file:

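# grep -ob raheem searchedfile
23:raheem

If I had used daniel, the output would be 40:daniel. Note how grep counts the bytes in a file: Offsets start at zero, and each newline counts as one byte. Combined with -o, the offset is that of the match itself; without -o, grep -b reports the offset at which the matching line begins (17 for the raheem line and 34 for the daniel line).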
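To see how the offsets are counted, the following sketch rebuilds the first three lines of searchedfile and compares -b with and without -o. It assumes GNU grep, whose documentation states that with -o the offset of the matching part itself is printed.

```shell
f=$(mktemp)
printf '%s\n' start-steven-end start-raheem-end start-daniel-end > "$f"

# -b alone: byte offset of the start of the matching line.
# The first line is 16 characters plus a newline, so line two starts at 17.
line_offset=$(grep -b raheem "$f")

# -o -b: byte offset of the match itself, 6 bytes ("start-") further in
match_offset=$(grep -ob raheem "$f")

echo "$line_offset"
echo "$match_offset"

rm -f "$f"
```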

The ever-useful -n gives grep the power to report the line number in which the offending (or pleasing) pattern appears in your file. This ability becomes very useful when you're hunting high and low for a number of patterns in a large file.
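A minimal sketch of -n on a made-up four-line file looks like this:

```shell
f=$(mktemp)
printf '%s\n' 'alpha' 'beta error' 'gamma' 'delta error' > "$f"

# -n prefixes each matching line with its 1-based line number
numbered=$(grep -n error "$f")
printf '%s\n' "$numbered"

rm -f "$f"
```

The number before the colon can be fed straight to an editor or to sed -n to jump to the line in question.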

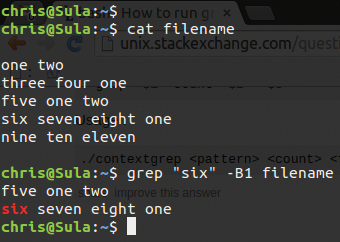

Grep can also print the lines adjacent to a match with the switches -A (after) and -B (before). This capability could help you manipulate massive chunks of content without splitting up files or getting involved in a manual cut-and-paste marathon. With the use of the text file I employed earlier in this article, I'll run through an example that uses one of these simple but flexible options.

Figure 7 offers a look at what the five-line text file produces from one of the Context Line Control commands, as per the GNU grep manual [1]:

# grep "six" -B1 filename

Figure 7: Using the -B1 switch.

Using the after and before options can make light work of all kinds of search applications.
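The context switches can be sketched on a simple file of number words (the contents are an assumption and differ from the file in the figures). The -C switch, which prints context on both sides, is included for comparison.

```shell
f=$(mktemp)
printf '%s\n' one two three four five six seven > "$f"

before=$(grep -B1 six "$f")   # the match plus one line before it
after=$(grep -A1 six "$f")    # the match plus one line after it
around=$(grep -C1 six "$f")   # one line of context on both sides

printf '%s\n' "-B1:" "$before" "-A1:" "$after" "-C1:" "$around"

rm -f "$f"
```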

That's No Moon

A slightly more complex application of the stellar grep uses a Bash alias to ignore frequent offenders in Apache logfiles. When an exclude list is lengthy or a logfile is continuously changing as information accumulates, it can be tricky to exclude certain phrases or keywords accurately. With this method, however, I can very effectively do just that. As you might have guessed, at the heart of such a command is a new switch.

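Standard input/output (stdio) buffers its output in certain circumstances, typically when writing to a pipe rather than a terminal, which can cause commands piped further down the command-line chain to receive their input late and in large chunks. The --line-buffered switch makes grep flush its output after every line instead: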

alias cleanlog='cat /var/log/apache2/access.log | \
  grep -v --line-buffered -f /home/chris/.apache_exceptions | \
  less +G -n | cut -d" " -f2 | sort | uniq'

This Bash alias looks at the exceptions in the file .apache_exceptions in my home directory. The -v switch ignores any occurrence of these exceptions in the access.log logfile before pushing the surviving log entries onward to the formatting commands that finish the command line.

With the output provided by running the cleanlog alias, I can tell quickly which new IP addresses have hit the web server, excluding search engines and staff. Just running that alias offers a list of unique, raw IP addresses, but by adding wc -l to the command

# cleanlog | wc -l

the output gives me a count of unique IP addresses in the log. To experiment, you can try the same pipeline directly on the command line incorporating tail -f, although you will need to drop sort and uniq, which only produce output once their input ends.
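The heart of the alias, grep -v -f with an exclusion file, can be tried safely on sample data. Everything in this sketch is hypothetical: the log lines, the exclusion pattern, and the assumption that the client IP address sits in the first field (adjust the cut field to your own log format).

```shell
work=$(mktemp -d)

# Hypothetical access log with the client IP in the first field
cat > "$work/access.log" <<'EOF'
203.0.113.5 - - [10/Jul/2013:13:11:01 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla"
198.51.100.7 - - [10/Jul/2013:13:11:02 +0000] "GET /robots.txt HTTP/1.1" 200 24 "-" "Googlebot"
203.0.113.5 - - [10/Jul/2013:13:11:03 +0000] "GET /about HTTP/1.1" 200 734 "-" "Mozilla"
192.0.2.44 - - [10/Jul/2013:13:11:04 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla"
EOF

# One pattern per line; any log line matching one of them is dropped
printf '%s\n' 'Googlebot' > "$work/exceptions"

# Filter, keep the IP field, and reduce to unique addresses
unique_ips=$(grep -v -f "$work/exceptions" "$work/access.log" | cut -d' ' -f1 | sort -u)
ip_count=$(printf '%s\n' "$unique_ips" | wc -l)

printf '%s\n' "$unique_ips"
echo "unique visitors: $ip_count"

rm -rf "$work"
```

Here sort -u stands in for sort | uniq. Be careful not to leave an empty line in the exclusion file, because an empty pattern matches every line and -v would then discard the entire log.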

A coreutils utility, stdbuf, can apparently achieve a similar result:

tail -f /var/log/foo | stdbuf -o0 grep pattern

The -o0 switch disables buffering of the standard output stream.

Next in Line

Once upon a time, a group of arcane grep utilities existed that were then forged into one single, powerful tool. That is to say, formerly a number of grep derivatives came into being on a variety of operating systems such as Solaris and other more archaic Unix-like OSs.

The utilities egrep, fgrep, and rgrep are for all intents and purposes now the equivalent of grep -E, grep -F, and grep -r. There's also pgrep, which I'll look at in a second.

In this section, I'll very briefly touch on each of these extensions to the standard grep, leaving rgrep aside because I've looked at grep -r already.

The versatile fgrep extension (or grep -F) treats each pattern as a fixed string rather than a regular expression. Combined with -f, it reads a text file with a list of patterns, each on a new line, and then searches for these fixed strings within another file.

Note that the first letter in the word "fixed" recalls the fgrep command name, although some say it means fast grep; because fgrep ignores regular expressions and instead takes everything literally, it can be faster than grep. An example pattern file could look like this:

patternR2
patternD2
patternC3
patternP0

A very large logfile might also contain lots of similar "pattern" entries. Then, to find the lines in the logfile that contain at least one of the entries in the pattern file, taken literally, you would use:

# fgrep -f patternfile.txt hugelogfile.log
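The difference between fixed strings and regular expressions shows up as soon as a pattern contains a metacharacter. In this sketch, which uses grep -F in place of fgrep, the pattern a.c and all file contents are assumptions chosen to make the point.

```shell
work=$(mktemp -d)
printf '%s\n' 'patternR2' 'a.c' > "$work/patterns.txt"
printf '%s\n' 'job patternR2 done' 'job patternX9 done' 'abc' 'a.c' > "$work/huge.log"

# -F: every pattern is a literal string, so "a.c" matches only "a.c"
fixed=$(grep -F -f "$work/patterns.txt" "$work/huge.log")
printf 'fixed strings:\n%s\n' "$fixed"

# Without -F the dot is a wildcard, so "a.c" also matches "abc"
regex=$(grep -f "$work/patterns.txt" "$work/huge.log")
printf 'regular expressions:\n%s\n' "$regex"

rm -rf "$work"
```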

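Whereas grep stands for Globally search a Regular Expression and Print, its counterpart egrep prefixes the word "Extended." The egrep command (or grep -E) enables extended regular expression syntax, in which characters such as +, ?, |, and parentheses act as operators without a preceding backslash, and is a little bulkier to run than grep.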
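A short sketch of what "extended" buys you in practice: The same pattern with grouping and alternation is run once with -E and once without. The log lines are made up.

```shell
f=$(mktemp)
printf '%s\n' 'error: disk' 'warning: cpu' 'info: ok' 'errors found' > "$f"

# With -E, ( ) group and | alternates without any backslashes
ere=$(grep -E '^(error|warning):' "$f")
printf '%s\n' "$ere"

# Without -E, the same characters are literal, so nothing matches
basic=$(grep -c '^(error|warning):' "$f")
echo "matches without -E: $basic"

rm -f "$f"
```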

The pgrep command, on the other hand, is a slightly different animal and originally came from Solaris 7 by prefixing "grep" with a "p" for process ID.

As you can probably guess, pgrep doesn't concern itself with such mundane activities as the filesystem's contents; instead, it checks against the process table. For example, to ask only for processes that are owned by the user root AND have a name matching the pattern chris, you would enter:

# pgrep -u root chris

Subtly adding a comma turns chris into a second user name, so the command outputs the processes that are owned by one user OR the other:

# pgrep -u root,chris

If you add -f, then pgrep checks against the full command line as opposed to just the process name. Again, you can invert pattern matching by introducing -v so that only those processes without the pattern are output:

# pgrep -v daemon

Alternatively, to nail down a match precisely, you can force pgrep to match a pattern verbatim:

# pgrep -x -u root,chris daemon

In other words, x means exact in this case.
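To experiment with these switches without touching real services, you can start a harmless background process and hunt for it. This is only a sketch: The sleep duration 4242 is an arbitrary marker chosen for the example, and the script bows out if pgrep (from the procps package) is not installed.

```shell
# Skip quietly if pgrep is not installed on this system
command -v pgrep >/dev/null 2>&1 || { echo "pgrep not available"; exit 0; }

# Start a harmless background process with a distinctive argument
sleep 4242 &
bgpid=$!
sleep 1   # give the child a moment to start

# -x matches the process name exactly; -u limits the search to one user
by_name=$(pgrep -x -u "$(id -u)" sleep)

# -f matches against the full command line instead of just the name;
# the anchors keep the pattern from matching any other command line
by_cmdline=$(pgrep -f '^sleep 4242$')

echo "started PID $bgpid, pgrep -f found: $by_cmdline"

# Clean up the background process
kill "$bgpid"
wait "$bgpid" 2>/dev/null || true
```

The list in by_name may include other sleep processes owned by the same user, which is exactly why -f with a tight pattern is handy when you need one specific process.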

A command that until recently I hadn't realized was part of the pgrep family is the fantastic pkill. If you find it tedious to look up a process number before applying the scary

kill -9 12345

command, then why bother with PIDs at all? The affable pkill lets you signal all processes with a matching name at once (by default with SIGTERM rather than the harsher SIGKILL that kill -9 sends):

# pkill httpd

Obviously, you will want to use this with some care, especially on remote servers or as the root user.

By combining a couple of the pgrep tricks, the following example offers a nice way of retrieving detailed information about the running sshd processes (the -d, switch makes pgrep separate the PIDs with commas, as ps -p expects):

# ps -p $(pgrep -d, -x sshd)
  PID TTY          TIME CMD
 1905 ?        00:00:00 sshd
16863 ?        00:00:00 sshd
16869 ?        00:00:00 sshd

Just for fun, I'll check the difference that adding the -f flag to ps makes; it requests a full-format listing that includes the owner, parent PID, and complete command line (Listing 2).

Listing 2: ps -f with pgrep

# ps -fp $(pgrep -d, -x sshd)
UID        PID  PPID  C STIME TTY          TIME CMD
root      1905     1  0 Jul03 ?        00:00:00 /usr/sbin/sshd
root     16863  1905  0 13:11 ?        00:00:00 sshd: chris [priv]
chris    16869 16863  0 13:11 ?        00:00:00 sshd: chris@pts/0

Simply too many scenarios are possible to give the full credit due to pgrep, but combining it with any of the popular utilities (awk, sed, tr, cut, etc.) makes pgrep an efficient script and command-line tool.

Elixir of Grep

As sys admins, we are commonly purging lots of data to get to the precious pieces of information buried within. However, it's not always easy, so now I want to look at another text file of sentences (Listing 3) as an example and try running some regular expressions (regex) alongside grep, starting with:

Listing 3: simplefile

A stitch in time saves nine.
An apple a day keeps the doctor away.
As you sow so shall you reap.
A nod is as good as a wink to a blind horse.
A volunteer is worth twenty pressed men.
A watched pot never boils.
All that glitters is not gold.
A bird in the hand is worth two in the bush.

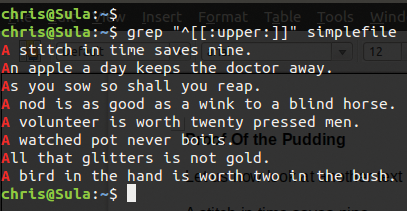

# grep "^[[:upper:]]" simplefile

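The output of this command shows the lines that begin with an uppercase character (Figure 8). Those readers who are familiar with regular expressions know that the [[:upper:]] regex is equivalent to [A-Z], at least in the C locale; in other locales, the named class is the safer choice.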

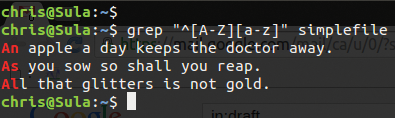

Similarly, to look for an uppercase followed by a lowercase character at the start of the line, entering

# grep "^[A-Z][a-z]" simplefile

outputs the results shown in Figure 9. Many other regex potions suit the powerful grep command. The resources online are extensive, so I'll move on to yet more derivatives.
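The two expressions can be compared on a handful of lines. This sketch uses a shortened, slightly altered stand-in for simplefile (one line is deliberately lowercased and one starts with a digit), and it pins the locale to C so that [A-Z] and [a-z] behave as plain ASCII ranges.

```shell
f=$(mktemp)
printf '%s\n' \
  'A stitch in time saves nine.' \
  'An apple a day keeps the doctor away.' \
  'as you sow so shall you reap.' \
  '9 lives' > "$f"

# Lines that start with any uppercase letter: "A stitch..." and "An apple..."
upper_start=$(LC_ALL=C grep -c '^[[:upper:]]' "$f")

# Lines that start with uppercase followed directly by lowercase:
# only "An apple...", because "A stitch" has a space after the A
upper_lower=$(LC_ALL=C grep -c '^[A-Z][a-z]' "$f")

echo "uppercase start: $upper_start, uppercase then lowercase: $upper_lower"

rm -f "$f"
```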

Master of Many Forms

The various incarnations of grep have taken many forms. Back in the day, grep was actually a function of the somewhat old-school line editor ed. The easy-to-use ed is an ancestor of vi and vim in the sense that their colon-based commands descend from its command set. From within this clever editor, you could run a command starting with g (for global) that enabled you to print (with p) all the lines matching a pattern:

g/chrisbinnie/p

From these humble beginnings, a standalone utility called grep surfaced, toward which you could pipe any data.

Thanks to grep's pervasive acceptance, the sys admin's vernacular even includes some highly amusing references in its lexicon. For example, are you familiar with vgrep, or visual grep [2], which takes place when you quickly check something with your eyes instead of using software to perform the task?

Back in the realm of the command line, you might have come across the excellent and regular-expression-friendly network grep, or ngrep [3], which offers features similar to tcpdump, as well as a few additional features on top.

The agrep, or approximate grep, command uses fuzzy matching to find near misses as well as exact matches. If you employ the slick regex matching library TRE, you can install a more powerful version of agrep called tre-agrep. To install libtre5 and tre-agrep on Debian or Ubuntu, use:

# apt-get install tre-agrep

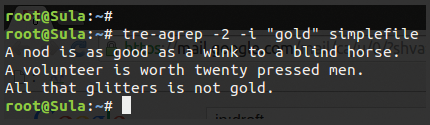

You could try out tre-agrep with the word "gold" on the simplefile example file. A numeric switch between 0 and 9 tells tre-agrep how many errors to accept, so adding -2

# tre-agrep -2 -i "gold" simplefile

means it will accept patterns within two errors of the original pattern. Therefore, it would also return near misses like "göld" with a diacritical. Figure 10 shows the output from this case-insensitive (-i) command. The first line is within one error of "gold" (good), the second line is within two errors (volu), and the third line matches exactly (gold).

Figure 10: tre-agrep matching (within two errors, -2) the word "gold," with case ignored (-i).

Not Stable

As if all that information about grep isn't enough, a Debian-specific package offers even more grep derivatives in debian-goodies. To install, enter:

# apt-get install debian-goodies

If you rummage deeper into this bag of goodies, you will discover a tiny utility called which-pkg-broke, which lets you delve into the innards of a package's dependencies and when they were updated. This utility along with the mighty dpkg can help you solve intricate problems with packages that aren't behaving properly. To run the utility, all you do is enter

which-pkg-broke binutils

where binutils is the package you are investigating.

Another goodie is dgrep, which allows you to search all files in an installed package using regular expressions. In a similar grep-like format, dglob dutifully generates a list of package names that match a pattern. (The apt-cache search command has related functionality.) Both goodies are highly useful for performing maintenance on servers.

All Clear

My intention within this article was to provide enough information related to grep to assist you in building useful command lines and creating invaluable time-saving shell scripts. There's nothing like getting back to basics sometimes.

Many other possible applications of the clever grep are there to explore in much more detail, such as back-references [4], but I hope your appetite has been sufficiently whetted for you to invest more time in brushing up on the basics and less time referencing instruction manuals. As you can imagine, you can launch all sorts of weird and wonderful quests by combining the functionality that these utilities provide.