Scalable mail storage with Dovecot and Amazon S3

Storage Space

Mail server administrators have a hard life. On one hand, few other services produce as many complaints about improper use as mail operations – incorrect blacklisting is an example. On the other hand, people are beginning to realize that email is a product from another age that is now struggling to deal with today's fast-paced IT. Users' demands for receiving and sending email have grown steadily in recent years.

Several years ago, it was quite common to allow email attachments only up to a particular, very low size, but this practice is frowned upon today: Users now expect to be able to send their 20MB PowerPoint presentations by email. Moreover, the number of email users is still growing steadily, and the amount of data stored by all of a provider's users is growing proportionally.

This problem might not be specific to email, because other Internet services are also experiencing the effect of the steady growth of the data volumes that need to be handled. However, working around this problem for email setups is relatively complex, primarily because of the architecture inherent in the "email" principle.

Architecture

Normal setups usually follow the same old pattern: On one side, SMTP daemons ensure that customers can send email and that incoming messages reach the recipients. On the other side, the services that deliver email to customers carry out the majority of the work. Some providers still rely exclusively on POP3 or only offer IMAP for a fee; on the whole, however, the IMAP protocol has prevailed.

This situation means additional worries for email service providers: Customers quite rightly expect to find their email on the provider's servers at any time. These customers rarely even download local copies of their email and simply rely on their providers. The providers then have to figure out how to cope with the load and storage requirements of such a setup. Even if each user only has a small mailbox, the need for storage is already substantial if you have a large number of users.

Sooner or later, you will need to adjust your infrastructure to accommodate the increased number of users, which means growing your infrastructure. From experience, load issues with services can be dealt with relatively simply by using multiple mail exchanger (MX) entries (on the SMTP side) and load balancers for IMAP or POP3 daemons. The storage problem is trickier.

The Storage Problem

When email administrators wanted to add storage space to their servers in the past, they mainly used central storage systems. SAN models from the major manufacturers are the classic candidates. However, such systems are expensive and scale vertically at best. Remember that vertical scalability means upgrading the existing hardware, which is the opposite of horizontal scalability, in which new machines are simply added to the existing ones. The load is then distributed across all devices that are part of the installation.

Sharding

Traditional storage systems can be expanded by installing new hard drives, but the end of the road is always reached eventually, and additional storage space can then only be provided by purchasing multiple storage systems. The process of spreading data records across multiple machines (known as sharding) has been the standard approach for many years, with each user being assigned to one of several storage systems.

This approach brings a raft of disadvantages: First, SAN storage is significantly more expensive than regular, off-the-shelf hardware; second, multiple storage islands make centralized administration more difficult. Admins previously just had to grin and bear this because of the lack of alternatives; the storage market seems to have been in a deep sleep when it came to horizontal scaling.
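The sharding approach described above can be illustrated with a minimal Python sketch. It assumes that users are assigned to storage systems by hashing the mailbox name; the shard names and the `shard_for` helper are hypothetical and only serve to show the principle.

```python
import hashlib

# Hypothetical storage systems; the names are placeholders, not from the article.
SHARDS = ["san-a", "san-b", "san-c"]


def shard_for(user: str, shards: list[str] = SHARDS) -> str:
    """Map a mailbox owner to one storage system via a stable hash."""
    digest = hashlib.sha256(user.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(shards)
    return shards[index]


if __name__ == "__main__":
    for user in ("alice@example.com", "bob@example.com"):
        print(user, "->", shard_for(user))
```

With this kind of static assignment, adding a fourth storage system changes the modulus, so most users would suddenly map to a different system and their mail would have to be migrated. That is one way to picture the inflexibility of storage islands.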

Object Stores to the Rescue

The situation changed dramatically, however, with the advent of object stores. These solutions were given their name because they handle all data in the same way internally – as binary objects. The trick here is that binary objects can be split up and put back together as desired, as long as this happens in the same order.

This trick lets object stores offer real horizontal scaling, because the object store itself "only" has to make sure that the binary objects are split correctly and distributed neatly across the existing hard drives. If more hard drives are added to the installation, the object store automatically uses them, which pushes the scalability limit so far out that it becomes largely theoretical.
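The split-and-reassemble idea can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the chunk size is tiny, the "disks" are plain dictionary entries, and round-robin placement stands in for whatever placement logic a real object store uses.

```python
CHUNK_SIZE = 4  # bytes; tiny on purpose so the example is easy to follow


def split_object(data: bytes, chunk_size: int = CHUNK_SIZE) -> list[tuple[int, bytes]]:
    """Cut a binary object into chunks, each tagged with its sequence number."""
    return [
        (offset // chunk_size, data[offset:offset + chunk_size])
        for offset in range(0, len(data), chunk_size)
    ]


def distribute(chunks: list[tuple[int, bytes]], disks: int) -> dict[int, list[tuple[int, bytes]]]:
    """Spread chunks round-robin across disks (a stand-in for real placement)."""
    layout: dict[int, list[tuple[int, bytes]]] = {d: [] for d in range(disks)}
    for seq, payload in chunks:
        layout[seq % disks].append((seq, payload))
    return layout


def reassemble(layout: dict[int, list[tuple[int, bytes]]]) -> bytes:
    """Collect chunks from all disks and join them in their original order."""
    chunks = [chunk for disk in layout.values() for chunk in disk]
    return b"".join(payload for _, payload in sorted(chunks))


if __name__ == "__main__":
    message = b"Subject: hello\r\n\r\nJust a test message."
    layout = distribute(split_object(message), disks=3)
    print(reassemble(layout) == message)
```

The sequence number is what makes reassembly possible: the chunks can live anywhere, as long as they are put back together in the same order.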

The existing cloud computing solutions have brought a whole wave of different stores into the limelight. Red Hat acquired Ceph [1]-[3] and introduced its own Storage Server [4] as a solution for storing objects. OpenStack entered the game with Swift, which is also an object store in the classical sense. Moreover, you have those who provide object stores as a service for users, such as Amazon S3 or Dropbox.

Setting up scalable storage systems with any of these services is certainly possible, and it would be great for email platform administrators if such a storage solution could be harmonized with the email architecture referred to previously. After all, there is no obstacle to treating an email message like a binary object. That is what Timo Sirainen, the author of the Dovecot secure IMAP mail server [5], probably thought and drew his own conclusions: The enterprise version of Dovecot offers an Amazon S3 plugin that perfectly exploits the benefits of the object store.

Dovecot with S3

Sirainen has offered the Dovecot S3 plugin for some time. Importantly, the plugin only runs with the enterprise version of the Dovecot mail server (Figure 1). The license for the enterprise-grade Dovecot Pro edition costs around EUR5,000 per year for 10,000 mailboxes. Admittedly, that is not exactly cheap, but the number on its own says little.

Dovecot Pro might cost more than the free version, but using it with an object store as the back end will, in many cases, mean significant hardware savings in the enterprise, because it removes the need for SAN storage, so off-the-shelf hardware will do. Companies should certainly take such considerations into account if they are considering using Dovecot with the S3 plugin.

How, specifically, does the S3 plugin work for Dovecot? Sirainen explains this in detail in the documentation for the plugin. Generally, anyone who wants to use the Dovecot S3 back end needs access to an object store as per the Amazon S3 standard. Login credentials in the form of two values are usually attached to such accounts: The access key acts as a kind of username, and the secret key is the password. Anyone who creates an account with Amazon receives both pieces of information automatically.

To store email with Dovecot, you also need to create your own bucket in S3. At first, you might feel a little uneasy because not all users get their own buckets, but the apparent risk is an illusion. Not all users have their own filesystem with a regular mail server, after all; the responsibility for enforcing access rights lies with Dovecot as the mail server in both cases.

Dovecot Configuration

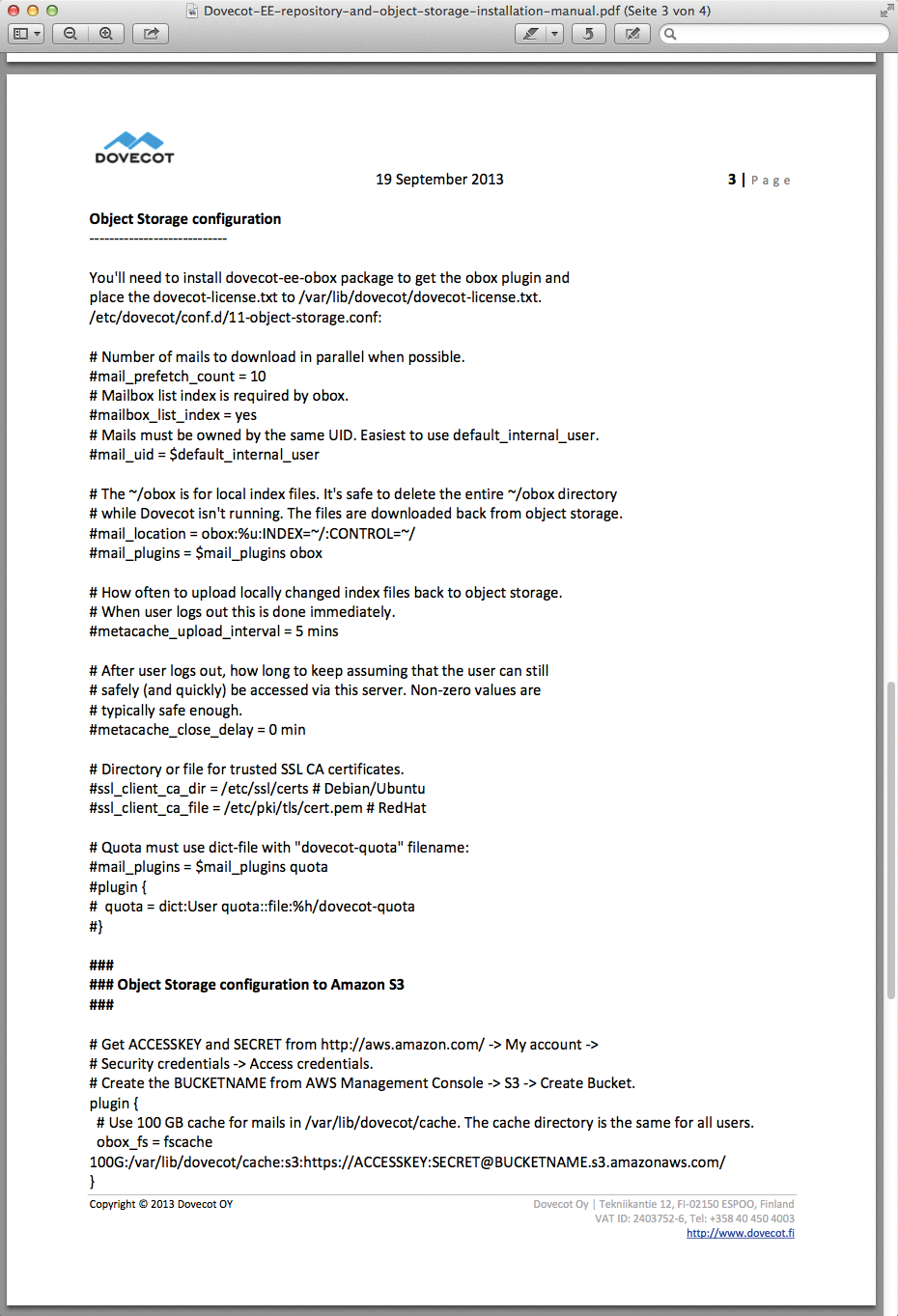

The next step involves the configuration of Dovecot itself: Anyone who already uses the program for IMAP or IMAPS will be familiar with the paragraph-like structure of the configuration files. Registering an additional paragraph that takes over the plugin configuration is all it takes for the Amazon S3 plugin. The example in Listing 1 is taken directly from the Dovecot documentation (Figure 2).

Listing 1: A Dovecot Plugin

    plugin {
      # Use 100 GB cache for mails in /var/lib/dovecot/cache.
      # The cache directory is the same for all users.
      obox_fs = fscache 100G:/var/lib/dovecot/cache:s3:https://Accesskey:Secret@Bucket-Name.s3.amazonaws.com/
    }

In this case, Dovecot uses a local cache with 100GB of storage space to process local access to frequently used objects as quickly as possible. Clearly, it is not very difficult to dock Dovecot onto S3, as long as the required Dovecot license is available for the plugin.
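To make the role of the local cache more tangible, the following Python sketch models a size-bounded read-through cache in front of an object store. It is not how Dovecot implements its cache: the article does not state which eviction policy is used, so the least-recently-used policy, the `ReadThroughCache` class, and the dictionary that plays the part of the S3 bucket are all assumptions made for illustration.

```python
from collections import OrderedDict


class ReadThroughCache:
    """Size-bounded cache that fetches missing objects from a slower back end."""

    def __init__(self, backend: dict, capacity_bytes: int):
        self.backend = backend          # stand-in for the remote object store
        self.capacity = capacity_bytes  # e.g. 100GB in Listing 1; tiny here
        self.used = 0
        self.entries: OrderedDict = OrderedDict()
        self.backend_reads = 0

    def get(self, key: str) -> bytes:
        if key in self.entries:
            # Cache hit: mark the object as recently used.
            self.entries.move_to_end(key)
            return self.entries[key]
        # Cache miss: fetch from the back end and remember the object.
        data = self.backend[key]
        self.backend_reads += 1
        self._store(key, data)
        return data

    def _store(self, key: str, data: bytes) -> None:
        if len(data) > self.capacity:
            return  # object is larger than the whole cache; do not cache it
        # Evict least recently used objects until the new one fits (assumed policy).
        while self.used + len(data) > self.capacity:
            _, evicted = self.entries.popitem(last=False)
            self.used -= len(evicted)
        self.entries[key] = data
        self.used += len(data)


if __name__ == "__main__":
    bucket = {"mail-1": b"x" * 60, "mail-2": b"y" * 60}
    cache = ReadThroughCache(bucket, capacity_bytes=100)
    cache.get("mail-1")
    cache.get("mail-1")  # served locally, no second back-end read
    print(cache.backend_reads)
```

Repeated access to the same message only touches the back end once, which is the effect the cache setting in Listing 1 is meant to achieve.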

Those who would prefer to store their data in Microsoft's Azure cloud can do just that – a plugin for Azure is available too. Dropbox support is available on top of that; thus, Dropbox can also be used as back-end storage for Dovecot.

This very fact, however, leads to a discussion that is much more legal than technical: Do companies actually want to use Dovecot to store their email on Amazon, Microsoft, or Dropbox? Skepticism is perfectly understandable in light of the Snowden revelations.

Ceph Basics

These concerns, however, do not mean that you need to refrain entirely from the convenience of S3 storage in Dovecot. Because the S3 protocol is publicly documented, several projects that provide S3 storage exist on a FLOSS basis, including the shooting star of the storage environment, Ceph.

Ceph has received much publicity in recent months, especially because of its sale to Red Hat. Thus, Ceph is a familiar concept for most admins, and it can be seen as an object store with various front ends. The ability to provide multiple front ends is quite a distinction compared with other object stores such as OpenStack Swift. Ceph was designed by its creator Inktank as a universal store for almost everything that happens in a modern data center.

A Ceph cluster ideally consists of at least three machines. Various Ceph components, including at least one monitoring server per host (MON), and storage daemons (object storage daemons, or OSDs) for each existing hard drive then run on these machines.

The monitoring servers are the guards within the storage architecture: They establish quorum using the Paxos algorithm to avoid split-brain situations. Generally, a cluster partition is only considered quorate if it contains more than half of the MONs (i.e., a strict majority). Consequently, in a three-node cluster, a cluster partition is quorate if it sees two MONs; Ceph would automatically switch off a partition that only sees one MON.
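The majority rule is simple enough to express as a small Python sketch. The function names are made up for this example; the arithmetic follows the rule described above, in which a partition needs more than half of all MONs.

```python
def quorum_size(total_mons: int) -> int:
    """Smallest number of MONs that forms a strict majority."""
    return total_mons // 2 + 1


def is_quorate(visible_mons: int, total_mons: int) -> bool:
    """True if a partition that sees `visible_mons` monitors may keep running."""
    return visible_mons >= quorum_size(total_mons)


if __name__ == "__main__":
    for visible in (1, 2, 3):
        print(visible, "of 3 MONs visible:", is_quorate(visible, 3))
```

The same arithmetic shows why an even number of MONs buys little: With four MONs, a partition needs three of them, so a clean two-two split leaves neither side quorate.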

MONs

Furthermore, the MONs act as the directory for the Ceph cluster: Clients actually talk directly to the hard drives in the cluster (i.e., the OSDs). However, if the clients want to talk to the OSDs, they need to know how to reach them. The MONs maintain dynamic lists of the existing OSDs and the existing MONs (OSD and MON maps) and serve up both maps if clients ask for the information. On the basis of the CRUSH algorithm, clients can then calculate the correct position of binary objects themselves. Ceph does not, in this sense, have a central directory where the target disks are recorded for each individual binary object.
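The idea that a client can compute an object's location itself, instead of looking it up in a central directory, can be sketched as follows. Note that this is not the CRUSH algorithm: The example swaps in a much simpler technique (highest-score hashing, also known as rendezvous hashing) purely to show that the object name and the OSD map are enough to arrive at a deterministic answer. The `place` function and the OSD names are hypothetical.

```python
import hashlib


def _score(object_name: str, osd: str) -> int:
    """Deterministic pseudo-random score for an (object, OSD) pair."""
    digest = hashlib.sha256(f"{object_name}/{osd}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


def place(object_name: str, osd_map: list[str]) -> str:
    """Pick the target OSD purely from the object name and the OSD map."""
    return max(osd_map, key=lambda osd: _score(object_name, osd))


if __name__ == "__main__":
    osd_map = ["osd.0", "osd.1", "osd.2", "osd.3"]  # as handed out by a MON
    print(place("mail-0001", osd_map))
```

Any client holding the same map computes the same target for the same object, so no per-object lookup table is needed.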

Parallelism is another major advantage of Ceph; it is inherent to almost all Ceph services. A client that wants to store a 16MB file in Ceph usually divides it into four blocks of 4MB. It then uploads all four blocks to four OSDs in the cluster at the same time, leveraging the combined write speed of four hard drives. The more spindles there are in a cluster, the more processes the Ceph cluster can deal with simultaneously.

This is an important prerequisite for use in the S3 example: Even mail systems that are exposed to increased loads can easily store many email messages in Ceph in the background at the same time. Ceph usually only performs badly compared with conventional storage solutions when it comes to sequential write latencies; however, this is irrelevant for the Dovecot S3 example.
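The parallel write of a 16MB file in four 4MB blocks can be mimicked with a thread pool. The sketch below is a simplification: Dictionaries stand in for OSDs, `write_block` stands in for a network write, and blocks are assigned to OSDs in turn rather than by Ceph's real placement logic.

```python
from concurrent.futures import ThreadPoolExecutor

BLOCK_SIZE = 4 * 1024 * 1024  # 4MB blocks, as in the text


def split_blocks(data: bytes, block_size: int = BLOCK_SIZE) -> list[bytes]:
    """Divide a file into fixed-size blocks (the last one may be shorter)."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def write_block(osd: dict, index: int, block: bytes) -> int:
    """Stand-in for a network write to one OSD; returns the bytes written."""
    osd[index] = block
    return len(block)


def parallel_store(data: bytes, osds: list[dict]) -> int:
    """Write all blocks concurrently, one worker per OSD."""
    blocks = split_blocks(data)
    with ThreadPoolExecutor(max_workers=len(osds)) as pool:
        futures = [
            pool.submit(write_block, osds[i % len(osds)], i, block)
            for i, block in enumerate(blocks)
        ]
        return sum(future.result() for future in futures)


if __name__ == "__main__":
    osds = [{} for _ in range(4)]
    written = parallel_store(bytes(16 * 1024 * 1024), osds)
    print(written, [len(osd) for osd in osds])
```

Because each block goes to a different target, the total write time is bounded by the slowest single block rather than by the sum of all four.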

Ceph Front Ends

The most attractive object store is useless if the clients cannot communicate with it directly. Ceph provides multiple options for clients to contact it. The RADOS block device emulates a normal hard drive on Linux. What appears to be locally installed on the client computers is, in fact, a virtual block device. Writes to this block device migrate directly to Ceph in the background.

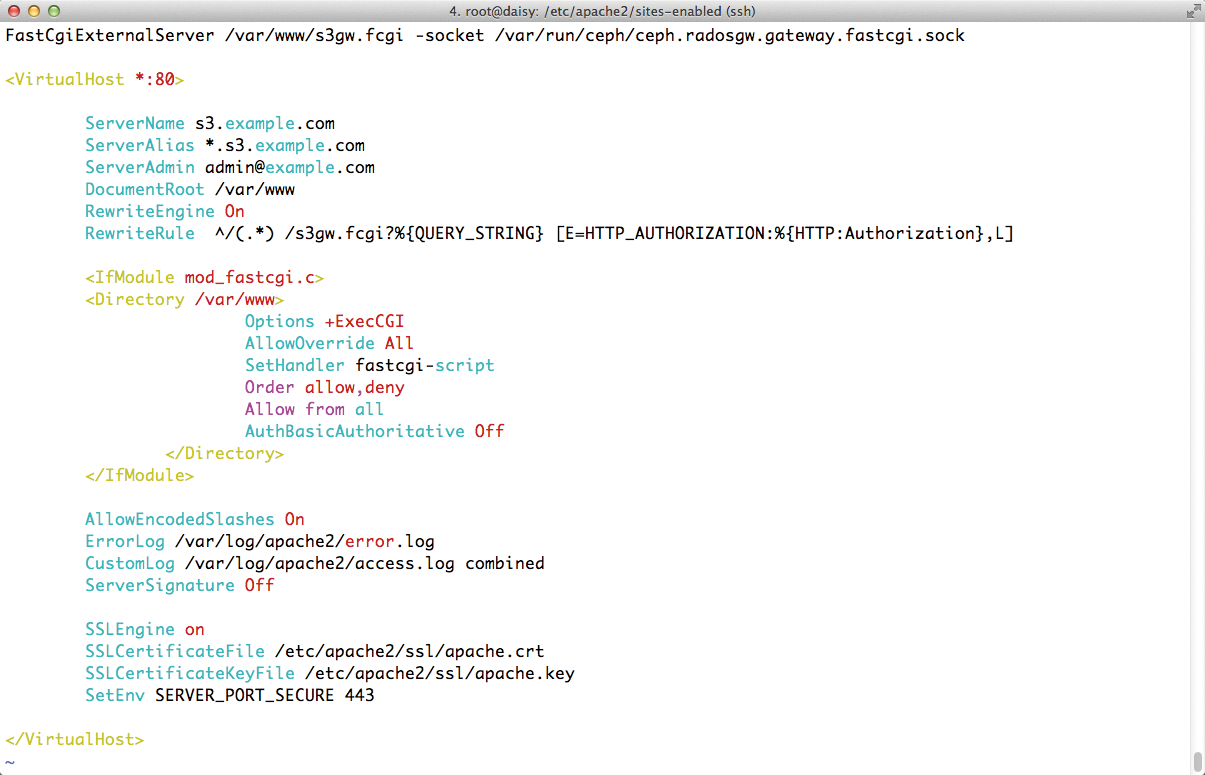

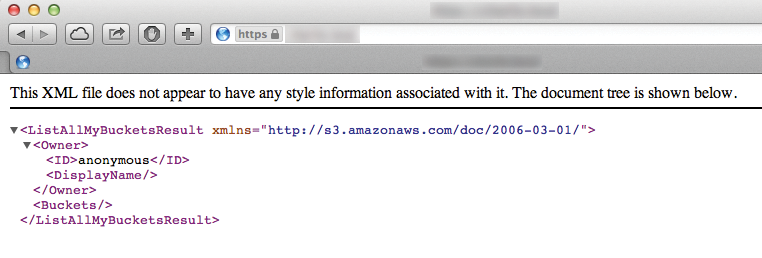

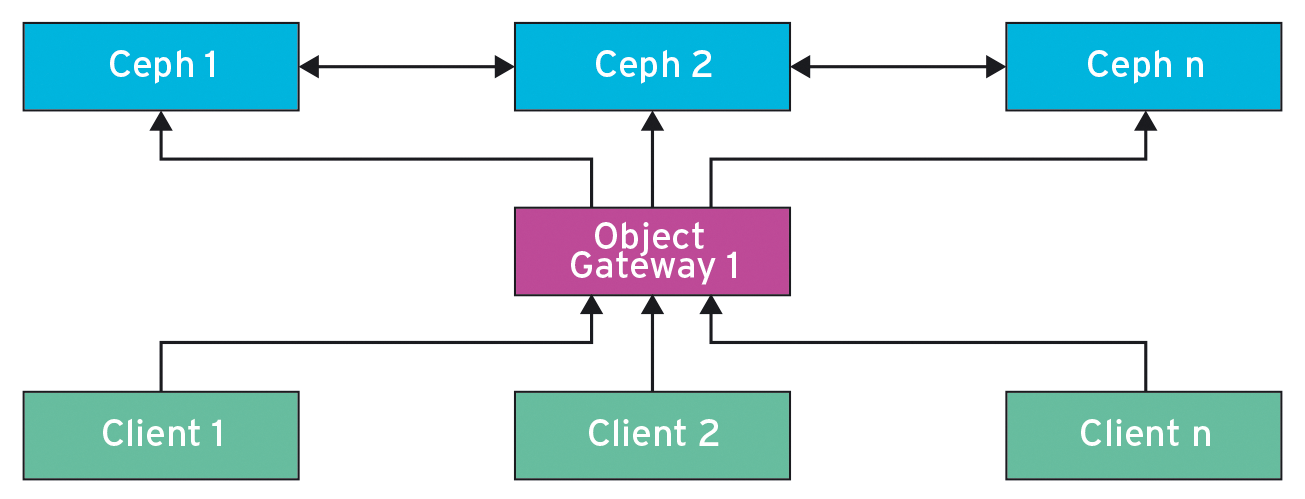

Ceph FS is a POSIX-compatible filesystem; however, it is Inktank's eternal problem child, which has stubbornly remained unfinished for years. The Ceph Object Gateway, on the other hand, is really interesting for the S3 example shown in Figures 3, 4, and 5. The construct previously called RADOS Gateway is based on Librados, which allows direct and native access to Ceph objects. On the client side, the gateway exposes RESTful APIs that follow the syntax of either Amazon S3 or OpenStack Swift.

The Ceph Object Gateway might not implement the S3 specification completely, but the main features can be found in the gateway. This completes the solution: A cluster with at least three nodes operates on local Ceph disks. Additionally, a server runs the Ceph Object Gateway and allows various instances of Dovecot with the S3 plugin to store email.

Such a solution scales on all levels: If the Ceph cluster needs more space, you can just use more computers. If the strain on the Dovecot server becomes too high, you can also use more computers. As long as there is enough space, this principle can be expanded with practically no limits.
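Because the gateway speaks the S3 protocol, a generic S3 client can be pointed at it instead of at Amazon. The following Python sketch shows the idea with the third-party boto3 library; it is not part of Dovecot and says nothing about how the Dovecot plugin talks to the gateway internally. The endpoint URL, bucket name, object key, and credentials are placeholders, and the two helper functions are hypothetical.

```python
def gateway_client_settings(endpoint_url: str, access_key: str, secret_key: str) -> dict:
    """Keyword arguments for an S3 client that targets a non-Amazon endpoint."""
    return {
        "service_name": "s3",
        "endpoint_url": endpoint_url,  # the object gateway instead of Amazon
        "aws_access_key_id": access_key,
        "aws_secret_access_key": secret_key,
    }


def store_message(settings: dict, bucket: str, key: str, message: bytes) -> None:
    """Upload one message; needs the boto3 package and a reachable gateway."""
    import boto3  # imported lazily so the sketch loads without boto3 installed

    client = boto3.client(**settings)
    client.put_object(Bucket=bucket, Key=key, Body=message)


if __name__ == "__main__":
    # Placeholder values; replace them with those of a real gateway to try it out.
    settings = gateway_client_settings("http://gateway.example.com", "ACCESSKEY", "SECRET")
    print(settings["endpoint_url"])
```

A quick test upload of this kind is a convenient way to check that the gateway and the credentials work before Dovecot is pointed at the same endpoint.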

S3 Load Balancing

The road to a finished Ceph cluster with the S3 front end is actually not as rocky as it looks at first glance. Inktank [4] has a powerful tool up its sleeve in the form of ceph-deploy, which deploys Ceph on systems with common distributions in just a few minutes. Anyone who first wants to test on three virtual machines can, of course, do just that.

Ceph is now integrated in common automation tools like Puppet or Chef, so the Ceph cluster itself is set up quickly. With just a little more overhead, the Ceph Object Gateway is connected: Inktank itself provides detailed documentation that explains the entire process [6]. In the end, you have an instance of the Ceph Object Gateway that speaks S3 and can be used as a starting point for a Dovecot instance.

You should, however, bear in mind that the S3 gateway can break quite a sweat with large setups. No wonder: If there is only a single gateway instance, all connections from the Dovecot front ends to Ceph have to squeeze through the eye of a needle. This is not just a problem from the perspective of performance, but also a classic single point of failure (Figure 6).

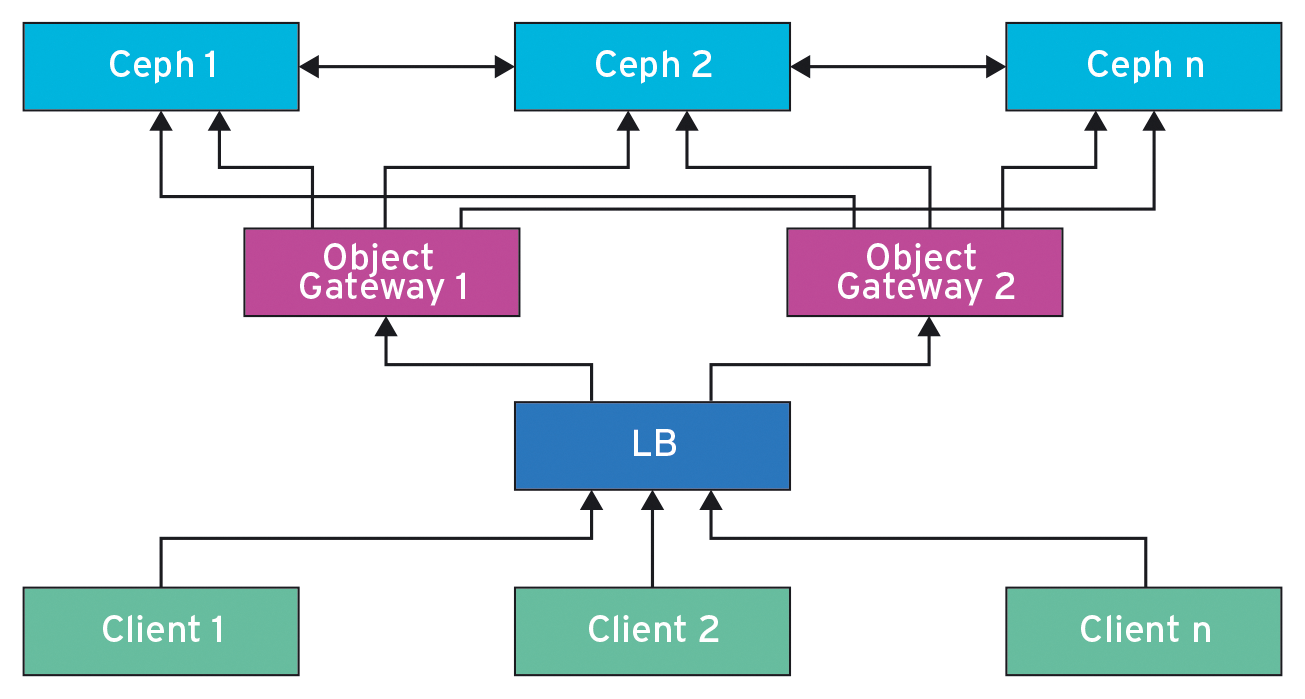

However, because S3 is based on HTTP and there are enough high-availability approaches for HTTP, the solution for the problem is obvious: It makes sense to start with two object gateways, for which a (highly available) load balancer (LB) redirects incoming connections accordingly (Figure 7). This architecture is also scalable; additional object gateways can be integrated at any time, and the process can be done with no downtime.
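The behavior expected from the load balancer can be outlined in a short Python sketch. In practice, an existing HTTP load balancer would do this job; the `RoundRobinBalancer` class and the gateway names below are hypothetical and only model the logic: Requests rotate across the gateways, failed gateways are skipped, and new gateways can be added while the system is running.

```python
from itertools import count


class RoundRobinBalancer:
    """Rotate requests across object gateways and skip those marked as down."""

    def __init__(self, gateways: list[str]):
        self.gateways = list(gateways)
        self.healthy = set(gateways)
        self._counter = count()

    def mark_down(self, gateway: str) -> None:
        self.healthy.discard(gateway)

    def mark_up(self, gateway: str) -> None:
        if gateway in self.gateways:
            self.healthy.add(gateway)

    def add_gateway(self, gateway: str) -> None:
        """Integrate an additional gateway without stopping the balancer."""
        self.gateways.append(gateway)
        self.healthy.add(gateway)

    def pick(self) -> str:
        """Return the next healthy gateway in rotation."""
        for _ in range(len(self.gateways)):
            candidate = self.gateways[next(self._counter) % len(self.gateways)]
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy object gateway available")


if __name__ == "__main__":
    balancer = RoundRobinBalancer(["gateway-1", "gateway-2"])
    print([balancer.pick() for _ in range(4)])
    balancer.mark_down("gateway-1")
    print([balancer.pick() for _ in range(2)])
```

As long as at least one gateway is healthy, the Dovecot front ends keep a working path to Ceph, which removes the single point of failure described above.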

Conclusions

With the S3 plugin for Dovecot, Sirainen has expanded the scalability of mail at the storage level. Thus, a useful alternative is now available to previous solutions, such as sharding, whose inflexibility often causes problems in today's agile IT structures. The license fees the author charges for the plugin are undoubtedly a drawback. With a sensible setup, however, the costs can be recouped quickly because the setup removes the need for an expensive SAN on the storage side; instead, Ceph runs on off-the-shelf hardware. In terms of performance, Ceph usually proves to be the superior solution, except in the sequential-write scenario mentioned earlier, and certainly has no reason to fear direct comparison with commercial storage solutions.

Those who operate an email setup and regularly face challenges when creating more space for user data should certainly take a closer look at the combination of Dovecot, the S3 plugin, and Ceph with Object Gateway. Instructions [7] explain how to set up Ceph with Object Gateway so that clients cannot distinguish it from "real" S3.