Container orchestration with Kubernetes from Google

Administrative Assistant

Container-based virtualization has several advantages over classical virtualization tools such as KVM or Xen. Containers allow a higher packing density: A container is initially no more than a directory within a Linux system that shares the host's resources, comprising only the components needed for the container and stressing the CPU only as needed by the services within the container.

In Its Sights for Years

Google has focused on container virtualization for years. The need for efficient management tools is particularly high at Google, which operates so large an infrastructure that even the smallest performance gains quickly translate into big savings.

Google generally allows the open source community to participate in testing and developing its products; against this background, the company published the first public version of Kubernetes [1] in 2014. Behind the somewhat unwieldy name, you will find Google's own cluster management framework, specifically geared toward operating Docker containers and extending Docker [2] to include many useful functions for large computing networks. SUSE and Red Hat now also participate in Kubernetes' development.

How does Kubernetes fare in a market in which CoreOS and various other container-friendly systems are already active?

The Problem

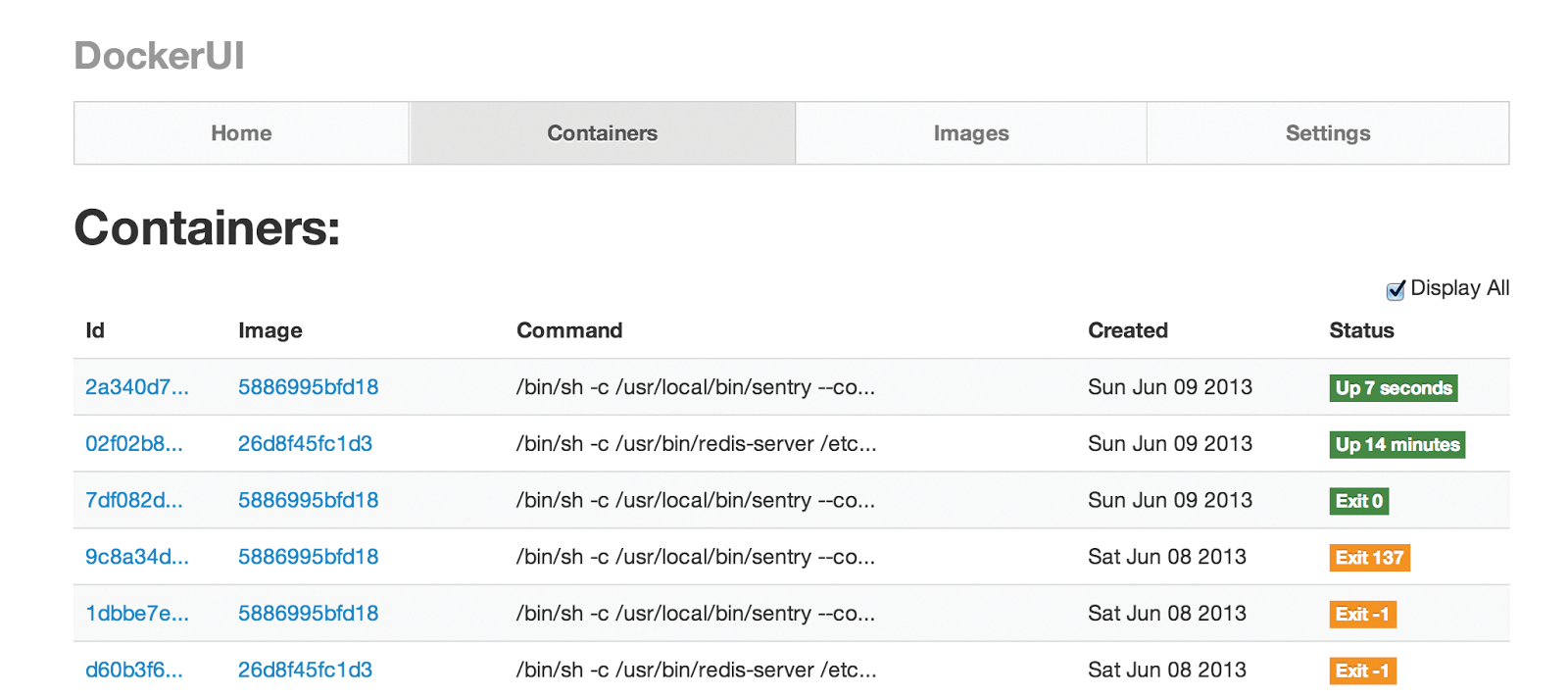

Google has to unite countless systems worldwide to form a single computing environment and ensure that servers can be managed well remotely. Kubernetes is Google's approach to making Docker enterprise-ready (Figure 1). The Kubernetes solution is designed to leverage the benefits of large-scale computing environments.

Kubernetes has competitors: CoreOS [3] and Red Hat's Atomic project [4] have similar goals. Kubernetes, however, occupies a special position in the ranks of fleet virtualizers with container technology. It follows a superordinate goal and seeks to be a universal solution because it does not rely on any specific distribution and is not developed directly by a distributor. This distributor independence is reflected in the fact that Kubernetes works the same way on Red Hat as it does on Ubuntu.

Docker is networked out of the box and is designed to manage containers. Kubernetes aims to extend Docker to add fleet capacity, so Google has introduced several technical terms in its design documents to explain the capabilities of Kubernetes. The following sections describe some of those important terms and concepts.

Pods

A pod is the smallest administrative unit in which Google's Kubernetes operates. In its documentation, Google always talks about applications and not containers. Even if a user only launches a single container, Kubernetes builds a wrapper pod around it. However, Google actually views pods as a management option for thousands of containers.

In the Kubernetes universe, pods take care of orchestration. You can write text files to define pods; possible formats include YAML or JSON. The pod definition contains all the relevant details: Which containers should the pod include? Which operating system images do they use? What specific services will run within the pod? Once a suitable file for starting the pod has been created, you send it to Kubernetes, which ensures in the background that the containers start as desired.
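A pod definition of this kind can be sketched in a few lines of Python that build the structure and serialize it to JSON. This is a minimal illustration, not a complete definition: the field names follow the `v1` pod schema of later Kubernetes releases, which is an assumption relative to the version discussed here, and the pod name, labels, and images are hypothetical.

```python
import json


def build_pod_manifest(name, containers, labels=None):
    """Return a pod definition as a plain dictionary.

    `containers` is a list of (container_name, image, port) tuples.
    """
    return {
        "apiVersion": "v1",  # assumption: v1 schema of later releases
        "kind": "Pod",
        "metadata": {"name": name, "labels": dict(labels or {})},
        "spec": {
            "containers": [
                {
                    "name": container_name,
                    "image": image,
                    "ports": [{"containerPort": port}],
                }
                for container_name, image, port in containers
            ]
        },
    }


# Hypothetical example: a web server and a cache sharing one pod.
manifest = build_pod_manifest(
    "web-frontend",
    [("nginx", "nginx", 80), ("cache", "redis", 6379)],
    labels={"app": "web"},
)
manifest_json = json.dumps(manifest, indent=2)
print(manifest_json)
```

Saved to a file such as `pod.json`, output like this is the kind of text file that would be handed to Kubernetes, for example with `kubectl create -f pod.json`.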

Old Friends: Namespaces

Pods are characterized by several features on the server side. First, a pod wraps the containers running in it in several Linux namespaces, which all of the pod's containers can then access. Remember that namespaces are a Linux kernel feature that sets up virtual sections in various areas, such as the network or process level. Applications that run within a namespace cannot simply leave it; they are virtually locked into it.

A pod's containers therefore have access to the same virtual stack for the network and its processes. Moreover, they share a hostname. Kubernetes also supports interprocess communication. It almost seems as if Google does not even regard Docker containers in a pod as containers, but instead as single programs.

This design fits well with the manufacturer's statement that Kubernetes is, in reality, application specific. Google clarifies that it may be possible to have multiple containers in a pod, but that each container within the environment performs precisely one specific task and, ideally, only runs a single application. Anyone wanting to host more than one application is, therefore, obliged to fit out their pod with more containers as early as pod definition time.

Group Hug

The applications that are part of a pod are stored on the same host. Anyone thinking about the topics of high availability and redundancy might be perplexed here. Google has, however, indicated that pods are actually ephemeral (i.e., not designed to exist permanently). The idea regularly created a stir in the past few years in the context of the cloud, because it apparently conflicts with the mantra of high availability.

In fact, this concept achieves the same goal, but just in another way: Instead of individual, highly specific hosts, there are many generic systems that can be reproduced quickly in a worst-case situation. Specific data is not impossible, but it must be connected separately. Google views pods in Kubernetes along the same lines: Persistence might be possible via specifically bound storage, but this only applies to the specific data.

The pods and the associated applications are replaceable at any time. Kubernetes therefore lacks any kind of live migration functionality of the sort known from VMs: Instead of migrating, the same pod is simply started again on another host, and the same persistent storage is then connected to it.
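The "restart instead of migrate" idea can be illustrated with a small, purely conceptual sketch. The `Pod` class, the `restart_elsewhere` function, and the host and volume names are all hypothetical; none of this is Kubernetes code. It only shows that the replacement pod is a fresh object that keeps the name and the separately bound storage but not the host.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Pod:
    name: str
    volume: Optional[str] = None  # separately bound persistent storage
    host: Optional[str] = None    # host the pod currently runs on


def restart_elsewhere(pod, failed_host, healthy_hosts):
    """Start a fresh copy of `pod` on another host and reattach its volume."""
    candidates = [host for host in healthy_hosts if host != failed_host]
    if not candidates:
        raise RuntimeError("no healthy host available")
    # The old instance is simply discarded; no state is migrated.
    return Pod(name=pod.name, volume=pod.volume, host=candidates[0])


old_pod = Pod(name="db", volume="db-data", host="node-a")
new_pod = restart_elsewhere(old_pod, "node-a", ["node-a", "node-b", "node-c"])
print(new_pod)
```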

Labels

In addition to the pods, Kubernetes also gives administrators an option for expressing a relation existing between pods. Pods offering similar services could be linked to a logical unit using a service label at the Kubernetes level. Labels mainly offer administrative benefits. By using them, you can express the fact that three different pods contain applications that talk to one another in the background.

A Galera cluster with MySQL is a typical example: You'll need at least three pods with a Galera application. Using a corresponding label selector, you can ensure that Kubernetes recognizes the pods as belonging together and ensures sound network throughput performance between the pods.
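How a label selector picks out pods that belong together can be sketched as a simple equality match on key/value pairs. The pod names and labels below are hypothetical, and the matching logic is a simplified stand-in for what Kubernetes does, not its implementation.

```python
def matches(labels, selector):
    """True if every key/value pair in `selector` is present in `labels`."""
    return all(labels.get(key) == value for key, value in selector.items())


def select_pods(pods, selector):
    """Return the names of all pods whose labels satisfy `selector`."""
    return [name for name, labels in pods.items() if matches(labels, selector)]


# Hypothetical pods: three Galera nodes and one unrelated web pod.
pods = {
    "galera-1": {"app": "galera", "tier": "db"},
    "galera-2": {"app": "galera", "tier": "db"},
    "galera-3": {"app": "galera", "tier": "db"},
    "web-1": {"app": "web", "tier": "frontend"},
}
galera_pods = select_pods(pods, {"app": "galera"})
print(galera_pods)
```

In this sketch, an empty selector matches every pod, and a selector with several keys narrows the result because all pairs must match.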

Master Server

If pods and labels are virtual collections of containers and Kubernetes is the overlying management framework, the two spheres must somehow be linked. Google uses multiple components for linking Kubernetes with the containers.

The central hub of a Kubernetes installation is a master server. The master server consists of several components: a collection of APIs, a scheduler for pods, a controller for the user's server services, a database for configuring the cloud, and an authentication component on which all other services rely.

The APIs are the most outwardly visible parts: kubectl is the standard command for Kubernetes. You can use it to issue commands to the platform directly. A kubectl call leads to a request to the Kubernetes API, which is based on the REST principle. The clients register with the authorization service in advance. The Kubernetes Info Service also provides the Kubelet instances on the container hosts with information from the Kubernetes API.
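To make the REST principle concrete, the following sketch builds, but deliberately does not send, the kind of HTTP request a client could issue to create a pod. The server address `http://localhost:8080` is a placeholder, the resource path follows the `v1` API of later Kubernetes releases (an assumption relative to the version discussed here), and authentication is left out entirely.

```python
import json
import urllib.request


def build_create_pod_request(api_server, namespace, manifest):
    """Build (but do not send) an HTTP request that would create a pod."""
    url = f"{api_server}/api/v1/namespaces/{namespace}/pods"
    body = json.dumps(manifest).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical single-container pod definition.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web"},
    "spec": {"containers": [{"name": "nginx", "image": "nginx"}]},
}
request = build_create_pod_request("http://localhost:8080", "default", pod)
print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` would actually send it; the sketch stops short of that so that it runs without a cluster.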

Kubelet designates the service running as an agent on the hypervisors; it starts containers on demand. A separate scheduler in Kubernetes specifies which hypervisor should start a container; the scheduling actuator forwards the information to the Kubernetes Info Service, where the Kubelet instances pick up their orders.
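The placement decision itself can be illustrated with a toy scheduler. The strategy used here (choose the host with the most free CPU that still fits the request) is an assumption made for illustration and is not the algorithm Kubernetes uses; host names, pod names, and CPU figures in millicores are hypothetical.

```python
def pick_node(free_cpu, requested_cpu):
    """Return the node with the most free CPU that fits the request, or None."""
    fitting = {node: cpu for node, cpu in free_cpu.items() if cpu >= requested_cpu}
    if not fitting:
        return None
    return max(fitting, key=fitting.get)


def schedule(pod_requests, free_cpu):
    """Assign each pod to a node, reducing that node's free CPU as we go."""
    free = dict(free_cpu)  # work on a copy so the caller's data is untouched
    assignments = {}
    for pod, cpu in pod_requests.items():
        node = pick_node(free, cpu)
        assignments[pod] = node  # None means the pod could not be placed
        if node is not None:
            free[node] -= cpu
    return assignments


free_cpu = {"node-a": 4000, "node-b": 2000}          # free CPU in millicores
pod_requests = {"db": 3000, "web": 1500, "batch": 2000}
assignments = schedule(pod_requests, free_cpu)
print(assignments)
```

In this run, the third pod stays unassigned because neither host has enough capacity left after the first two placements.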

Integrated Replication

A component known as the controller manager, which manages a replication controller, can also be found on the master server in Kubernetes. Much like a service, a replication controller relies on labels to express which pods belong together. Replication controllers ensure horizontal scaling in Kubernetes: If a replication controller is defined for a pod, Kubernetes automatically starts new instances of that pod based on definable parameters, such as whether specific load limits are exceeded.
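The core of such a controller can be thought of as a reconciliation step that compares a desired state with the observed one. The sketch below shows a single pass under a simplifying assumption: the target is a fixed replica count rather than a load-based parameter. The `reconcile` function and the pod names are hypothetical.

```python
def reconcile(desired_replicas, running_pods):
    """Compare desired and actual state for one pass.

    Returns a tuple (number_of_pods_to_start, list_of_pods_to_stop).
    """
    missing = desired_replicas - len(running_pods)
    if missing > 0:
        return missing, []
    # Too many (or exactly enough) pods: stop everything beyond the target.
    return 0, running_pods[desired_replicas:]


print(reconcile(3, ["web-1"]))
print(reconcile(1, ["web-1", "web-2", "web-3"]))
```

A real controller would repeat this comparison continuously and act on the result; the sketch only computes what would need to change.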

Finally, etcd is an integral component of the Kubernetes master. The service acts as a platform-wide key/value store for settings that are required on hypervisor nodes. Etcd is decentralized and automatically replicates its information on all instances of the installation so that all configuration options are available on each host on which etcd runs.

Working Remotely

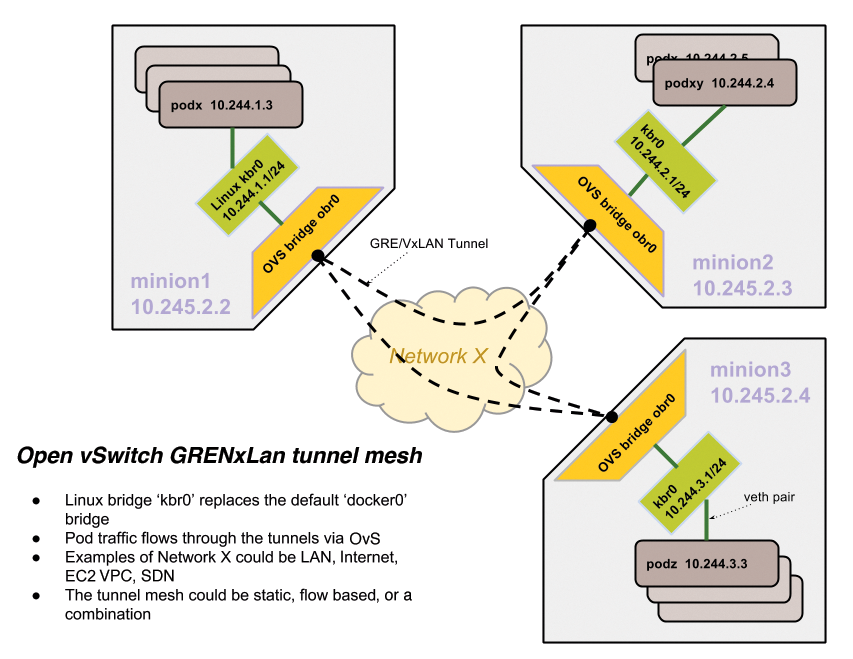

In addition to the aforementioned Kubelet, other Kubernetes services, such as networking, also run on the hypervisor hosts. Kubernetes currently comes with a separate proxy component that centrally handles network access inside and outside the platform (Figure 2).

Whenever a pod needs to be reached, the process is currently handled by the proxy server configured by Kubernetes. However, this approach is anything but elegant and, if you want a genuine software-defined network (SDN) setup, the proxy server needs far more functionality. In any case, the rally to couple SDN approaches such as Open vSwitch with Kubernetes began some time ago, and such solutions are likely to prevail over the simple proxy approach in the long term.
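What "simple proxy approach" can mean in practice is sketched below as a toy that hands out pod endpoints in turn. The text does not say how the Kubernetes proxy chooses a backend, so round-robin selection is an assumption here, and the class name and addresses are hypothetical.

```python
import itertools


class RoundRobinProxy:
    """Toy stand-in for a proxy that spreads requests across pod endpoints."""

    def __init__(self, endpoints):
        if not endpoints:
            raise ValueError("at least one endpoint is required")
        self._cycle = itertools.cycle(list(endpoints))

    def next_endpoint(self):
        """Return the endpoint that should receive the next request."""
        return next(self._cycle)


# Hypothetical endpoints of three database pods.
proxy = RoundRobinProxy(["10.0.0.1:3306", "10.0.0.2:3306", "10.0.0.3:3306"])
picks = [proxy.next_endpoint() for _ in range(4)]
print(picks)
```

A mechanism like this knows nothing about network topology or isolation, which hints at why the text expects SDN solutions to replace it.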

Finally, of course, don't forget SaltStack, which Kubernetes uses internally. SaltStack is a tool for configuration management and clouds. In principle, it is very similar to other solutions, such as Puppet or Chef, but its makers have dubbed the product "optimized for the cloud." SaltStack supposedly offers massive benefits over other solutions, especially with scalable systems.

SaltStack has obviously had an effect on Kubernetes developers: Each Kubernetes hypervisor is considered a Minion, where "Minion" is the standard name in SaltStack-speak for a host that participates in configuration management through SaltStack.

Google – whether intentionally or not – staked out a specific claim for Kubernetes when deciding to use its own tool for maintaining and distributing configuration files. If a virtualization solution comes with its own management solution, it is no longer a small tool, but a full-blown environment.

Working with Kubernetes

The basic components of a Kubernetes setup are clear. However, questions about why Kubernetes could be interesting for administrators and what problems you can actually solve using it remain unanswered. One thing is certain: Google wants to position Kubernetes in the cloud frameworks market – that is, in the same environment inhabited by CoreOS, Project Atomic, and many other macro distributions.

Kubernetes, however, clearly requires more overhead to set up a complete installation. It is much easier to get started using CoreOS. Another fact is that Kubernetes can also be used as a CoreOS add-on. Google provides prebuilt images for Kubernetes as part of the Google Compute Engine (GCE), meaning that the application can be tested quickly and easily at the push of a button. Anyone already familiar with working in a Docker-only environment will easily get used to the Kubernetes functionality after a brief learning curve. Google enhances Docker by adding a feature absent from the normal variant: an option for operating containers with pods and labels in the framework of a cluster.

Until now, the development of Kubernetes has taken place in Google's hallowed halls. The project will only become really interesting once Google opens up to other suppliers and manufacturers. With Red Hat and SUSE also on board, it will probably not be long before a more comfortable installation exists. Additionally, a Kubernetes GUI, which is currently missing, will probably follow some time later. Kubernetes is therefore, sooner or later, likely to catch the fancy of anyone using container virtualization in a setup with many hypervisor nodes.

Kubernetes, OpenStack, or Both?

When reviewing the solutions available on the market, a different question arises: Is Kubernetes even necessary given that cloud solutions such as OpenStack and CloudStack are available and have a much larger community? Kubernetes and OpenStack share certain similarities in their architecture.

The server-agent principle is very similar in both solutions. OpenStack also has central servers and APIs, as well as agents that run on the individual hypervisor hosts and receive their commands there. The types of functions are also similar in Kubernetes and OpenStack: Both solutions seek to allow the easy use of computing resources on a computer network.

A closer look, however, also shows major differences between OpenStack and Kubernetes. User management is one example: OpenStack is designed to be multitenant capable; Kubernetes does not currently have this capability. In return, Kubernetes is a highly specialized tool, whereas OpenStack seeks to be a panacea.

Although Kubernetes is not a small environment, it feels quick and flexible compared with the almost oversized OpenStack. Consequently, it is not surprising that many observers do not regard OpenStack and Kubernetes as competitors but instead as components that complement one another meaningfully.

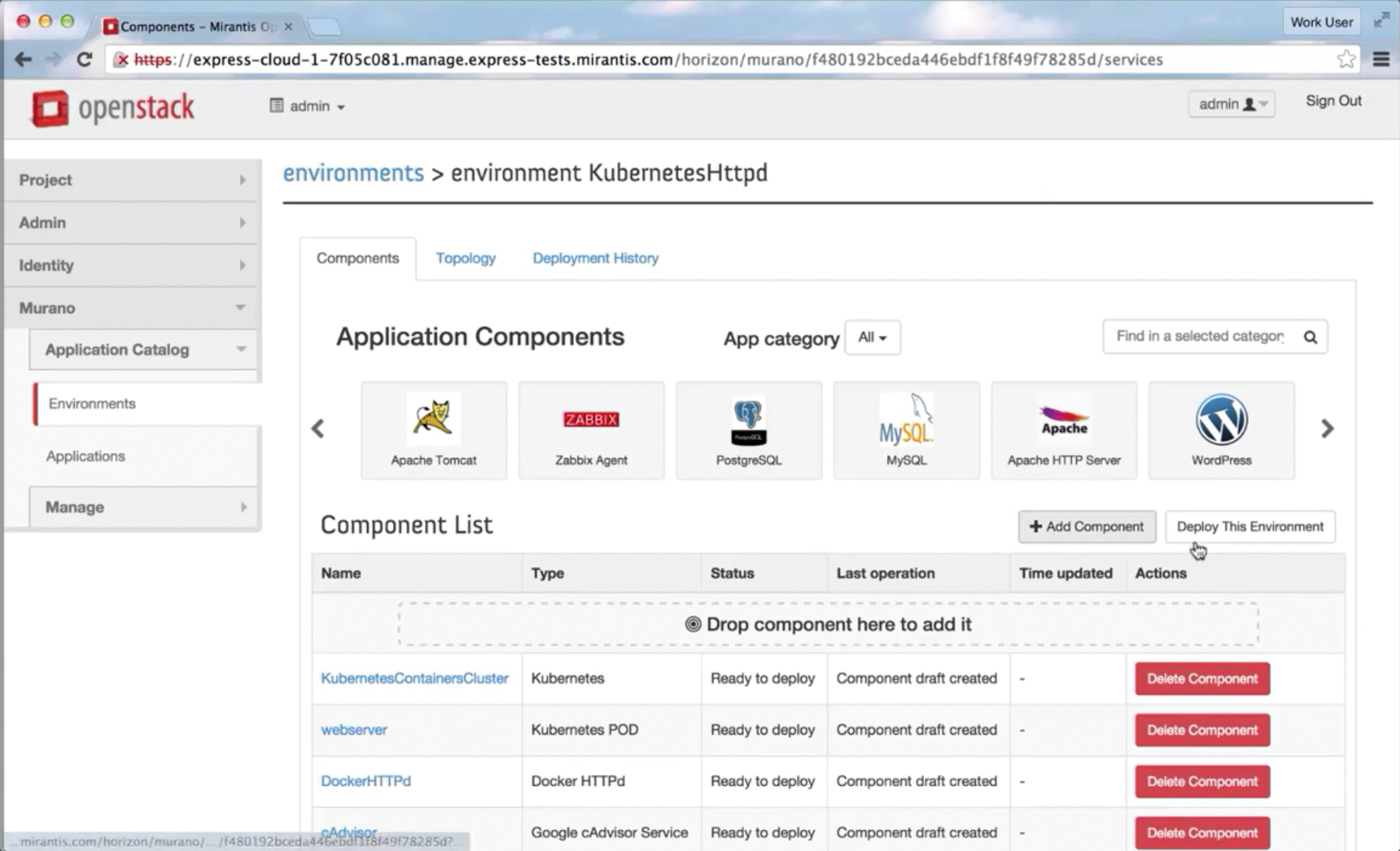

Mirantis apparently takes a similar view (Figure 3). Mirantis has made a name for itself in the OpenStack community by developing Fuel, an OpenStack setup tool. In February, Mirantis announced an agreement with Google to develop meaningful integration of Kubernetes in OpenStack [5]. The purpose of this action is to offer a viable form of virtualization with containers in OpenStack.

Container support is obviously exactly what has been lacking in OpenStack: The only somewhat useful driver for containers is intended for Docker, but it is not part of the official OpenStack distribution and does not lend itself well to production use. If Mirantis succeeds in connecting Kubernetes and OpenStack, a very fruitful joint venture could arise for OpenStack users and those interested in Kubernetes.

Mirantis immediately attached a short video to the Google announcement to show that the announcement was not just empty words: The video shows an OpenStack dashboard that lets the user start pods in Kubernetes (Figure 4). Mirantis has not, unfortunately, named a release date for the corresponding components.

Update: At the OpenStack Summit in Vancouver in May, the OpenStack Foundation and Mirantis announced that Kubernetes is now in its Community App Catalog [6].

Conclusions

Kubernetes is an interesting project with powerful backers and is probably not a fluke, seeing that Google has chosen it to be the tool of its own virtualization future and is already committed. However, you should check carefully before committing to Kubernetes, because the software does not offer a universal solution. Instead, Kubernetes focuses on the idea of achieving high virtualization density using fewer resources. Only users who have similar requirements should use Kubernetes. All other users are probably better off with smaller projects, such as CoreOS.