Mesos compute cluster for data centers

Distributed!

The Mesos cluster framework by Apache [1] has been around since 2009 and has attracted quite an impressive community, including heavyweights like eBay, Netflix, PayPal, and Twitter.

Mesos is a framework that helps use all of a data center's resources in the most efficient way possible. The Mesos framework extends the concept of a classical compute cluster, combining CPU, memory, storage, and other resources to form a single, distributed construct. Mesos distributes tasks that are typically handled by the operating system kernel. The Mesos developers thus describe Mesos as a distributed kernel for the data center.

The Story So Far

Like many open source projects, Mesos has a university background. The original developers included Ph.D. students Benjamin Hindman, Andy Konwinski, and Matei Zaharia, along with their professor Ion Stoica, from the University of California, Berkeley. In 2009, Mesos was officially presented to the public [2]; at the time, the project went by the working title of Nexus. To avoid confusion with another project using the same name, it was renamed Mesos. Now, Mesos, which is written in C++ and released under the Apache license, is a top-level project of the Apache Software Foundation in the cloud category. The current version is Mesos 0.21.

Two years after the first release, the developers presented a far more advanced variant [3] at a 2011 Usenix conference. Twitter engineers had already been running Mesos for a year in their own IT infrastructure [4]. Some of the engineers had previously worked for Google and were familiar with its counterpart, which today goes by the name of Omega [5]. Since 2011, many prominent enterprises have adopted Mesos.

Mesos depends on a system of well-documented APIs [6] that support programming in C, C++, Java, Go, or Python. As a scheduler, Mesos provides the underpinnings for several other projects in the data center.

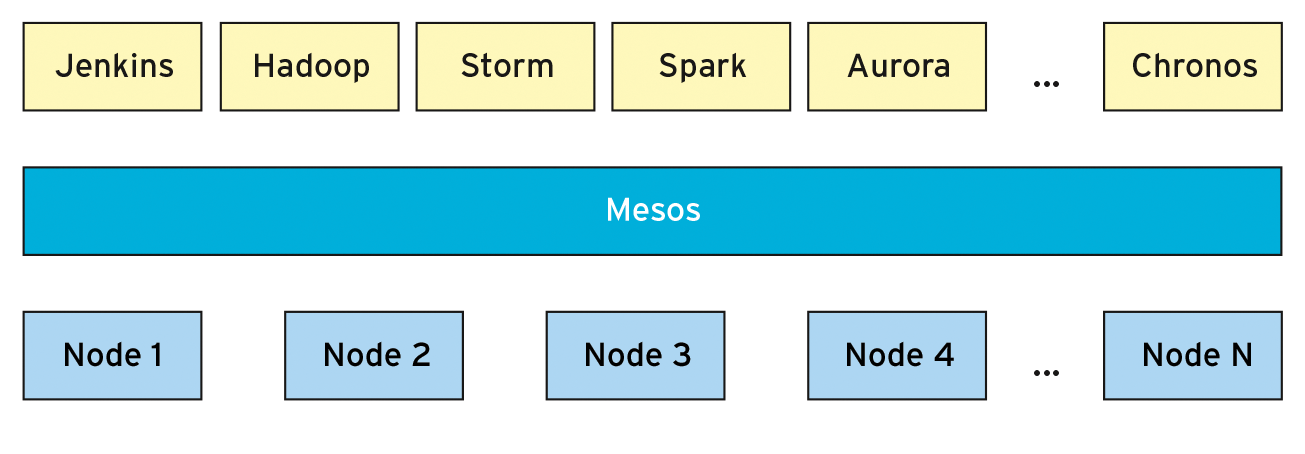

Table 1 lists some other projects compatible with Mesos [7]. In Mesos speak, these "beneficiaries" are also confusingly known as frameworks. "Mesos application" is an alternative term that might make more sense (Figure 1).

Table 1: Known Mesos Frameworks

| Category | Framework, URL |
|---|---|
| Continuous Integration | Jenkins http://jenkins-ci.org, GitLab http://gitlab.com |
| Big Data | Hadoop http://hadoop.apache.org, Cassandra http://cassandra.apache.org, Spark http://spark.apache.org, Storm http://storm.apache.org |
| Meta/High Availability | Aurora http://aurora.incubator.apache.org, Marathon http://mesosphere.github.io/marathon/ |
| Distributed Cron | Chronos http://github.com/mesos/chronos |

Master and Slaves

The architecture behind Mesos [8] is both simple and complex. Put simply, the structure supports two roles: master and slave. The master is the main process; it fields requests from the frameworks and passes them on to other members of the Mesos cluster. The other members are the slaves or workers, which handle the actual tasks. What exactly they do depends on the framework or Mesos application you are using.

The master handles the first step of a two-stage task-planning process. When a slave or worker joins the Mesos cluster, it tells the master which resources it has and in what quantities. The master logs this information and offers the resources to the frameworks.

In the second stage of task planning, the Mesos application decides which specific jobs it will assign to the published resources. The master fields this request and distributes it to the slaves. The system thus has two schedulers: the master and the Mesos application (Figure 2).
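The two-stage process can be illustrated with a small toy model. This is only a sketch: the names `ToyMaster`, `Offer`, `Task`, and `framework_schedule` are hypothetical and have nothing to do with the real Mesos API, and the first-fit placement is just one policy a framework might choose.

```python
from dataclasses import dataclass, field, replace


@dataclass
class Offer:
    """Free resources of one slave, as published by the master."""
    slave: str
    cpus: float
    mem: int  # megabytes


@dataclass
class Task:
    name: str
    cpus: float
    mem: int


@dataclass
class ToyMaster:
    """Stage 1: log what each slave reports and offer it to a framework."""
    free: dict = field(default_factory=dict)

    def register_slave(self, slave, cpus, mem):
        self.free[slave] = Offer(slave, cpus, mem)

    def make_offers(self):
        # Hand out copies so the framework cannot change the master's books.
        return [replace(offer) for offer in self.free.values()]

    def launch(self, slave, task):
        # The master passes the accepted task on and books the resources.
        offer = self.free[slave]
        offer.cpus -= task.cpus
        offer.mem -= task.mem
        return slave, task.name


def framework_schedule(offers, pending):
    """Stage 2: the framework picks an offer for each task (first fit)."""
    placements = []
    for task in pending:
        for offer in offers:
            if offer.cpus >= task.cpus and offer.mem >= task.mem:
                placements.append((offer.slave, task))
                # Track what is left of this offer for the next task.
                offer.cpus -= task.cpus
                offer.mem -= task.mem
                break
    return placements
```

A task that fits no offer simply stays unplaced in this model; a real framework would wait for the next round of offers.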

Executors then complete the jobs. Put in a slightly more abstract way, executors are framework-related processes that work on the slaves. In the simplest case, they will be simple (shell) commands. For the frameworks listed in Table 1, the runtime environments, or lean versions of the frameworks themselves, act as the executors.

The master decides when a job starts and ends. However, the slave takes care of the details. The slave manages the resources for the process and monitors the whole enchilada. In Mesos speak, a framework job is a slave or executor task (Figure 2) – an equivalent in a conventional operating system would be a thread.

As mentioned earlier, the Mesos master logs the resources that each slave contributes to the cluster or has available at runtime. In the as-delivered state, the number of processors, the RAM, disk space, and free network ports are predefined. Without any customization, the slave simply tells the master what resources it has at the current point in time. The administrator typically defines which resources the slave will contribute to the Mesos array and to what extent [9].

The number of processors and the free RAM play a special role. If a slave is short on either, it cannot contribute any other resources to the Mesos cluster. Both are necessary to run even the simplest commands – independently of the disk space or network port requirements. Of course, you can define other resources of your own design; for details, see the Mesos documentation [10].
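The special role of CPU and memory can be expressed as a simple check. The sketch below assumes a semicolon-separated resource string of the form `cpus:4;mem:8192;disk:409600;ports:[31000-32000]`, which is how resources are usually passed to a slave; the helper names `parse_resources` and `can_run_tasks` are hypothetical, and the exact syntax should be checked against the Mesos documentation [9] [10].

```python
def parse_resources(spec):
    """Split 'name:value;name:value' into a dict.

    Plain numbers become floats; anything else (for example a port
    range) is kept as a string.
    """
    resources = {}
    for item in spec.split(";"):
        item = item.strip()
        if not item:
            continue
        name, _, value = item.partition(":")
        try:
            resources[name.strip()] = float(value)
        except ValueError:
            resources[name.strip()] = value.strip()
    return resources


def _amount(resources, name):
    """Numeric amount of a resource, or 0.0 if missing or non-numeric."""
    value = resources.get(name)
    return value if isinstance(value, float) else 0.0


def can_run_tasks(resources):
    """Without CPUs and memory, disk space and ports are of no use."""
    return _amount(resources, "cpus") > 0 and _amount(resources, "mem") > 0
```

A slave that reports only disk space and ports fails this check, which mirrors the rule described above.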

Availability

At least two questions remain unanswered:

- What kind of failsafes are in place, or what kind of availability can I expect?

- How does authentication work?

High availability is something that Mesos has not ignored. The high-availability concept is very simple for the slaves; they are organized as a farm [11]. In other words, to compensate for the failure of X workers, the Mesos array must have at least N+X slaves, where N is the number of computers offering at least the minimum resource level.
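The N+X rule is plain arithmetic, as the following sketch shows. The function names are hypothetical and serve only to illustrate the sizing rule.

```python
def slaves_needed(required, tolerated_failures):
    """N slaves carry the load; X more cover the failures to be tolerated."""
    return required + tolerated_failures


def survives(total_slaves, required, failed):
    """True if enough slaves remain after `failed` of them drop out."""
    return total_slaves - failed >= required
```

For example, a cluster that needs 10 slaves and should tolerate 2 failures has to be built with 12.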

For the master, things look a little different. Mesos resorts to the ZooKeeper [12] infrastructure. Choosing this system is a no-brainer – ZooKeeper, which is also an Apache project, works as the glue between multiple Mesos masters and as a port of call for the frameworks.

The details for how to reach ZooKeeper are provided by the Mesos administrator on launching the master instances. Alternatively, you can store the details in shell variables (Table 2).

Table 2: Important Options for HA Mode

| Mesos Role | Configuration | Example |
|---|---|---|
| Master | Command Line | |
| Master | Shell Variable | |
| Slave | Command Line | |
| Slave | Shell Variable | |
| Chronos | Command Line | |
| Marathon | Command Line | |

For Mesos applications, ZooKeeper is the first port of call when the application attempts to identify the master. Again, the administrator needs to provide this information upon starting the framework. If you want to run Mesos in a production environment, you have no alternative to using ZooKeeper. The "ZooKeeper" box provides some important details.
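The ZooKeeper details are commonly given as a URL of the form `zk://host1:port1,host2:port2/path`. The sketch below takes such a URL apart; `parse_zk_url` is a hypothetical helper, the hostnames in the usage note are made up, and the sketch ignores optional credentials in the URL.

```python
ZK_DEFAULT_PORT = 2181  # ZooKeeper's standard client port


def parse_zk_url(url):
    """Return ([(host, port), ...], znode_path) for a zk:// URL."""
    prefix = "zk://"
    if not url.startswith(prefix):
        raise ValueError("expected a URL starting with zk://")
    hosts_part, _, path = url[len(prefix):].partition("/")
    if not hosts_part:
        raise ValueError("no ZooKeeper hosts given")
    hosts = []
    for entry in hosts_part.split(","):
        host, _, port = entry.partition(":")
        hosts.append((host, int(port) if port else ZK_DEFAULT_PORT))
    return hosts, "/" + path
```

Calling `parse_zk_url("zk://zk1:2181,zk2:2181,zk3:2181/mesos")` yields the three ZooKeeper servers and the path `/mesos`, under which the masters coordinate.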

Mesos authentication covers several aspects. One is the trust relationship inside the Mesos cluster, that is, between the master and the slaves. Another is the collaboration between a Mesos application and Mesos.

In the as-delivered state, Mesos uses SASL (Simple Authentication and Security Layer [14]) with CRAM-MD5 [15]. Version 0.20 additionally introduced authorization alongside framework authentication [16].

Mesos distinguishes three categories: roles, users, and instructors. All categories have different weightings in Mesos. A role is a property under which a framework registers with Mesos. Internally, an Access Control List (ACL) helps with assigning access to roles. Once the Mesos application is registered, it is given access to resources for executing jobs.

If the application then runs tasks on the slaves, the user category applies. Users have the same function as their counterparts at operating system level. Again, Mesos uses ACLs internally.

The third category, instructors, controls the framework shutdown. The definition of the matching privilege uses the JSON format [17]. For details, see the Mesos documentation [9] [16].
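To give an idea of what such a JSON definition might look like, the sketch below builds an ACL document in Python and checks requests against it. The key names (`register_frameworks`, `run_tasks`, `shutdown_frameworks`, `principals`, `framework_principals`) are assumptions modeled on the Mesos authorization documentation [16] and should be verified there; the principals `analytics-fw` and `ops` are invented, and the `allowed` helper is a simplification that does not reproduce the rule ordering or defaults Mesos applies.

```python
import json

# Assumed layout: one list of rules per action, each pairing
# "who asks" with "what they ask for".
acls = {
    "register_frameworks": [
        {"principals": {"values": ["analytics-fw"]},
         "roles": {"values": ["analytics"]}},
    ],
    "run_tasks": [
        {"principals": {"values": ["analytics-fw"]},
         "users": {"values": ["nobody"]}},
    ],
    "shutdown_frameworks": [
        {"principals": {"values": ["ops"]},
         "framework_principals": {"values": ["analytics-fw"]}},
    ],
}


def matches(entity, name):
    """An entity matches by explicit value or by the wildcard type ANY."""
    if entity.get("type") == "ANY":
        return True
    if entity.get("type") == "NONE":
        return False
    return name in entity.get("values", [])


def allowed(acls, action, subject_key, subject, object_key, obj):
    """Simplified check: permitted if any rule for the action matches."""
    return any(
        matches(rule.get(subject_key, {}), subject)
        and matches(rule.get(object_key, {}), obj)
        for rule in acls.get(action, [])
    )


acl_json = json.dumps(acls, indent=2)  # the text form handed to Mesos
```

With these rules, `analytics-fw` may register under the `analytics` role, but not under any other role.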

Was That All, Folks?

Late in 2014, Mesosphere announced the Datacenter Operating System (DCOS) [18]. Mesosphere describes DCOS as a "new kind of operating system that spans all of the servers in a physical or cloud-based data center and runs on top of any Linux distribution." Mesos assumes the system kernel role at the base of the DCOS system.

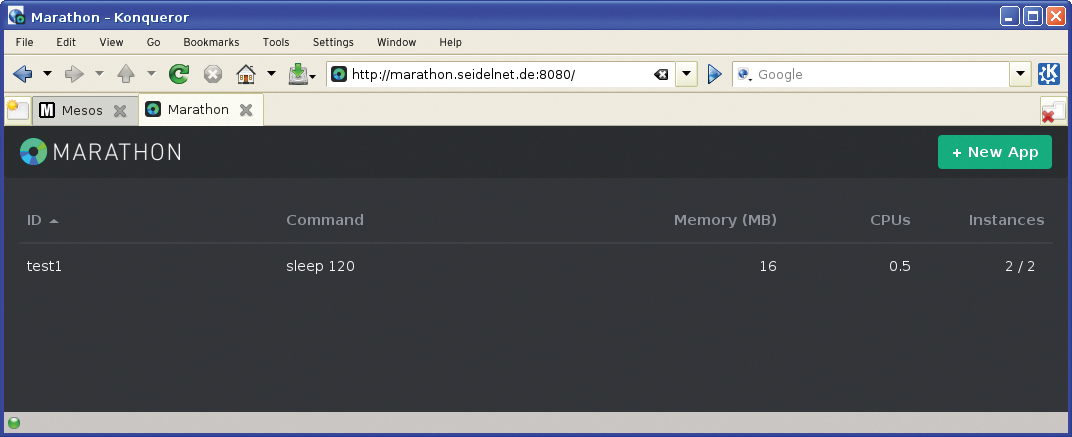

The Marathon [19] and Chronos [20] frameworks will also be part of the package. Marathon manages jobs with a longer execution time. If you compare DCOS with a classical Unix-style operating system, Marathon is equivalent to the init process. You can also view Marathon as a meta-scheduler. Marathon starts the other frameworks and monitors them. In doing so, Marathon checks whether the desired number of job instances is running. If a slave fails, Marathon restarts the affected processes on the remaining Mesos nodes.
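The monitoring behavior described here boils down to comparing a desired state with the actual state. The following toy sketch shows the idea; `reconcile` is a hypothetical function, not Marathon code, and the round-robin placement is an arbitrary choice for illustration.

```python
from itertools import cycle


def reconcile(desired, running, healthy_slaves):
    """Bring the running instances in line with the desired counts.

    desired:        {app name: number of instances wanted}
    running:        {app name: [slave name per running instance]}
    healthy_slaves: set of slaves that are still reachable
    """
    if not healthy_slaves:
        raise ValueError("no healthy slaves left")
    targets = cycle(sorted(healthy_slaves))
    result = {}
    for app, count in desired.items():
        # Instances on failed slaves are lost.
        alive = [s for s in running.get(app, []) if s in healthy_slaves]
        # Too many instances: drop the surplus.
        alive = alive[:count]
        # Too few instances: start replacements on the remaining nodes.
        while len(alive) < count:
            alive.append(next(targets))
        result[app] = alive
    return result
```

If an application wants three instances and one of its three slaves fails, the function places the missing instance on one of the two surviving slaves.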

Chronos, which runs jobs in line with a defined schedule, is the equivalent of cron. In DCOS, Chronos is the first framework started by Marathon. Both have a REST interface for interaction.

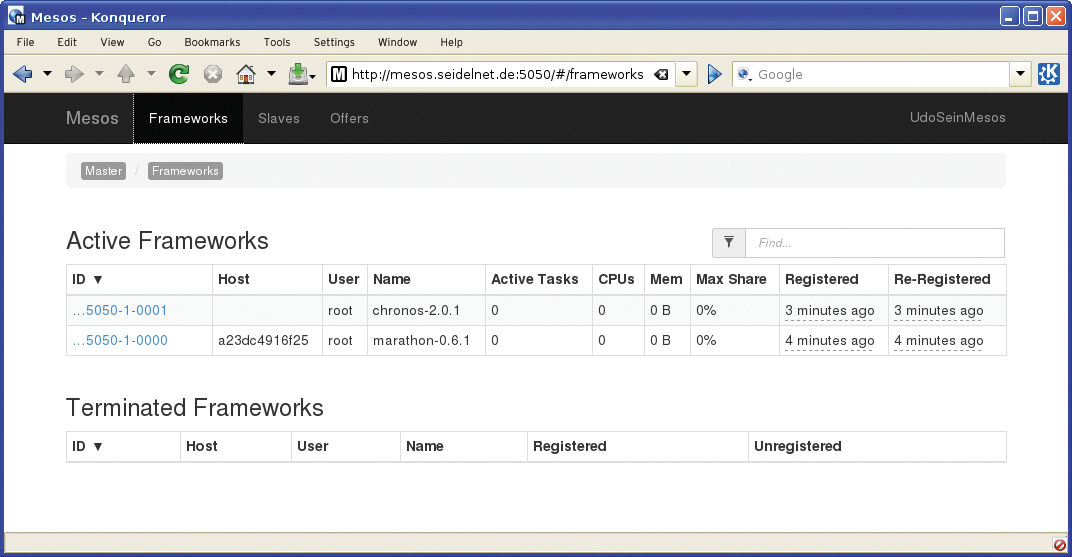

Figure 3 shows a highly simplified DIY version of DCOS. Marathon (Figure 4) and Chronos are registered as Mesos applications. The jobs are simple sleep commands. The use of ZooKeeper is mandatory for DCOS. Both Marathon and Chronos require ZooKeeper.
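A setup like this can be driven through the REST interfaces of both frameworks. The sketch below only prepares the requests and does not send them. The hostnames and ports are hypothetical; the endpoints (`/v2/apps` for Marathon, `/scheduler/iso8601` for Chronos) and the field names reflect the respective REST documentation as commonly described and should be checked against [19] and [20] for the version in use.

```python
import json
from urllib.request import Request

MARATHON = "http://marathon.example.com:8080"  # hypothetical address
CHRONOS = "http://chronos.example.com:4400"    # hypothetical address


def json_post(url, payload):
    """Build (but do not send) a POST request with a JSON body."""
    return Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Long-running job for Marathon: keep two sleep processes alive.
marathon_app = {
    "id": "sleeper",
    "cmd": "sleep 600",
    "cpus": 0.1,
    "mem": 16,
    "instances": 2,
}

# Scheduled job for Chronos: repeat hourly (ISO 8601 repeating interval).
chronos_job = {
    "name": "hourly-sleep",
    "command": "sleep 10",
    "schedule": "R/2015-01-01T00:00:00Z/PT1H",
    "epsilon": "PT15M",
    "owner": "admin@example.com",
}

marathon_request = json_post(MARATHON + "/v2/apps", marathon_app)
chronos_request = json_post(CHRONOS + "/scheduler/iso8601", chronos_job)
```

Passing either request object to `urllib.request.urlopen` would submit the job to a running instance.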

When this issue went to press, the DCOS by Mesosphere was still in beta. You can visit the Mesosphere website [18] to download a pre-release version.

Conclusions of a Kind

Just a glance at the list of enterprises that have been using Mesos for years is more than enough to show that Mesos is an exciting project. The number of frameworks based on Mesos is also quite impressive. Mesos really comes into its own in large and heterogeneous IT landscapes. The Mesos framework offers a simple approach to pooling existing compute capacities and providing them either as a bulk package or bit by bit. This form of abstraction is also very attractive for smaller IT environments.