Virtual environments in Windows Server

Virtual Windows

Since the first version of Windows NT more than 20 years ago, Microsoft has pursued the goal of a unified operating system for clients and servers. With the next generation of Windows, Redmond is extending this concept: From embedded computers in smartphones and tablets to PCs and servers for large and small companies, the Windows kernel is intended to be a universal system. Microsoft addresses larger environments with the new Windows Server edition, and the preview versions focus particularly on new virtualization and storage features.

The development roadmap for the server operating system largely corresponds to that of its sibling Windows 10: At least one more intermediate version is expected in spring 2015, and the finished product has been announced for late summer 2015. As always, Microsoft's volume license customers will get first access to the new bits and bytes via download. Boxed versions on physical media will follow a few weeks later.

An overview of the new features in Windows Server 2016 is available online [1]; here, I want to take a detailed look at Hyper-V and at the support for Docker containers that Microsoft unveiled shortly after releasing the Technical Preview [2].

Virtual Containers with Docker

A virtualization container behaves similarly to a virtual machine (VM). Unlike conventional VMs, however, a container shares most of the server resources with all other containers and processes running on the server. Thus, the container is not an encapsulated virtual machine with its own memory, virtual disks, and virtual hardware resources such as CPU and network cards, and it does not run its own instance of the operating system.

Container virtualization is based on strict process isolation and on separate namespaces for resources such as the registry and the filesystem. All containers use the server operating system as their basis, but each sees only its own isolated environment, and the processes within one container have no connection to those in other containers. Because containers share the host's resources, they start much faster than full VMs and are usually up and running within seconds.

Transferring Applications with Ease

As an additional management layer for this new form of virtualization, Microsoft integrates the open source Docker software. Docker offers a comprehensive set of management tools and provides a package format for complex applications. In addition to the application software itself, a Docker container includes all necessary dependencies and prerequisites, such as databases, interfaces, and software libraries. Such containers can be transferred en bloc to any Docker-enabled server, and the packaged application will run there without further installation or preparation.

Docker thus solves a problem that affects operators of complex web applications in particular: Programmers develop new versions of an application on their own machines, and it can easily happen that the developers' computers contain components that are missing on the production machines. If a missing component only comes to light after the new version has been rolled out, the application will not run correctly, and time-consuming fixes follow. If the application and all necessary components are encapsulated in a Docker container, such deployment errors can be prevented: The entire container is transferred as a package from the development system to the test system and from there to the production server. Updates can therefore be rolled out quickly and with high reliability.

Container virtualization and Docker integration are not yet available in the Technical Preview. Admins can expect to see them in the later beta versions and, of course, in the final version.

More Flexible Snapshots in Hyper-V

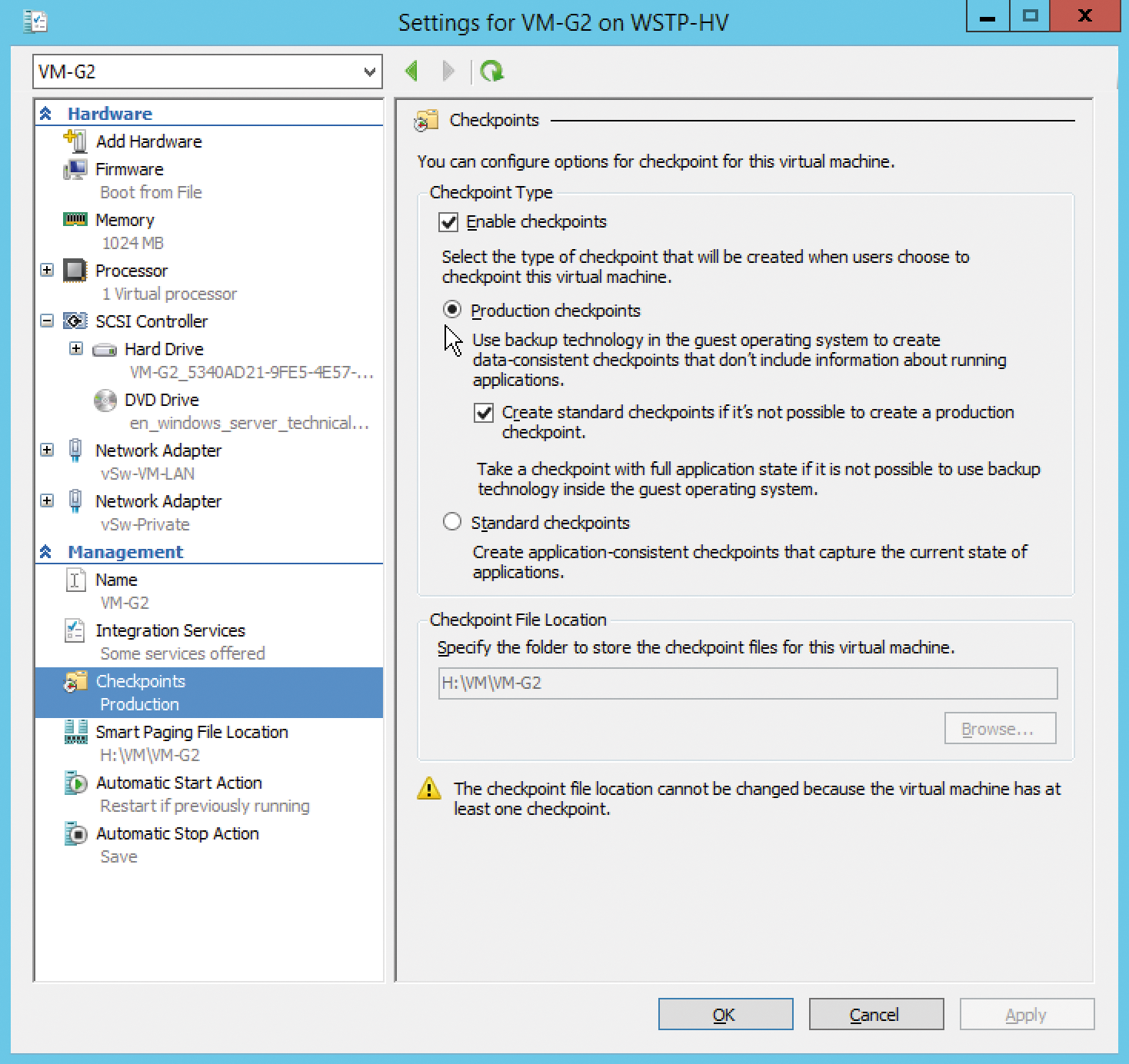

Microsoft's in-house virtualization environment, Hyper-V, has long since established itself as a serious competitor to virtualization market leader VMware. The Windows hypervisor's functions not only cover the needs of SMEs but increasingly those of larger environments as well. The upcoming version takes this into account: Its new Production Checkpoints are designed specifically to improve the reliability of virtual servers. This feature introduces a new snapshot technology for virtual machines that is suitable for recovering from server failures (Figure 1). Until now, VM snapshots (which Microsoft has called "checkpoints" since 2012) could cause serious errors in applications, so their use for recovery was not officially supported.

A conventional snapshot saves the state of a VM in read-only files, preserving both the content of the virtual disks and the VM's memory. The big advantage is that all data and applications are in exactly the same state as when the snapshot was taken. However, this can also be a disadvantage, because complex applications in particular become confused when they are suddenly beamed to a different point in time. With Production Checkpoints, Hyper-V takes a different approach.

This new snapshot variant uses VSS (Volume Shadow Copy Service) to bring the VM, and especially its applications, into a consistent state. This VSS snapshot then forms the basis for the checkpoint; the VM's RAM is not saved. The VM's operating system and applications are thus in a defined state; simply put, the VM knows that it has been backed up. If you restore the virtual machine to this checkpoint, it behaves as if you had restored a backup.

Following this paradigm shift, Production Checkpoints are now officially approved as a recovery method. Two important conditions apply, however: First, the VM in question must run Windows, because VSS is only available there. Second, the applications on the VM must explicitly support VSS, which is true of most server applications today. For VMs that do not meet these requirements, Hyper-V falls back to the conventional snapshot technique by default. Although this does not guarantee data consistency, it is sufficient in some situations. If desired, you can also switch a VM back to the previous snapshot method.
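The selection rule described above can be summarized as a small decision function. This is a hypothetical Python model of the logic, not a Hyper-V API; the `Checkpoint` enum and the `choose_checkpoint` function are invented for illustration, and in practice the behavior is configured per VM in Hyper-V itself.

```python
from enum import Enum


class Checkpoint(Enum):
    # Hypothetical names for illustration; not a Microsoft API.
    PRODUCTION = "production"  # VSS-based, application-consistent, no RAM state
    STANDARD = "standard"      # conventional: disk contents plus memory state


def choose_checkpoint(guest_is_windows, apps_support_vss, prefer_production=True):
    """Model of which checkpoint type a VM ends up with."""
    if not prefer_production:
        # The admin has switched this VM back to the old snapshot method.
        return Checkpoint.STANDARD
    if guest_is_windows and apps_support_vss:
        # Both conditions from the text are met: VSS can quiesce the applications.
        return Checkpoint.PRODUCTION
    # Requirements not met: fall back to a conventional snapshot,
    # which does not guarantee application consistency.
    return Checkpoint.STANDARD
```

For example, `choose_checkpoint(False, True)` returns `Checkpoint.STANDARD`, because a non-Windows guest has no VSS.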

Hardware Changes on the Fly

The virtual hardware that Hyper-V provides for VMs will be even more flexible in the new release. Windows Server 2012 R2 already made it possible to add, remove, or resize virtual hard disks while the VM was running. In the future, you will also be able to increase or decrease a VM's statically assigned memory while the VM is running, and the admin can add or remove virtual NICs during operation as well. Almost all of a VM's resources can thus be changed without a restart; the only exception (still) is the virtual processors.

Under the hood, Microsoft has also pressed ahead with Hyper-V development. One example is the new file format for VM configurations, which is no longer based on XML but is binary. With this change, the next release of Hyper-V introduces a new configuration version for VMs, which leads to another innovation: Previously, the hypervisor automatically upgraded virtual machines that admins imported or migrated from an older system to the new version. As a result, these VMs could no longer be moved back to their previous host server.

In the future, Hyper-V will no longer perform this step on its own, so the VM remains compatible with the older hosts. If you run into obstacles during a migration, you can move the transferred VMs back to the old hosts and keep on working. Once you are certain that everything is working, you can convert the VMs manually. Only then can a VM use all the new features; after the conversion, however, there is no easy way back.
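The compatibility rule can be modeled in a few lines of Python. The `Host` and `VM` classes and the integer version numbers are hypothetical; the sketch also simplifies by assuming that a host can run every VM whose configuration version is at or below the newest version it understands.

```python
from dataclasses import dataclass


# Hypothetical model for illustration; version numbers are placeholders.
@dataclass
class Host:
    name: str
    max_config_version: int  # newest VM configuration version this host understands


@dataclass
class VM:
    name: str
    config_version: int


def can_run_on(vm, host):
    # A host can run any VM whose configuration version it understands.
    return vm.config_version <= host.max_config_version


def upgrade(vm, target_version):
    # Manual, one-way conversion: there is no downgrade path.
    if target_version < vm.config_version:
        raise ValueError("configuration version cannot be downgraded")
    vm.config_version = target_version
```

With `old = Host("old", 1)` and `vm = VM("web01", 1)`, `can_run_on(vm, old)` is `True`; after `upgrade(vm, 2)`, it returns `False`, so the way back to the old host is closed.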

Updating a Cluster Gradually

This parallel operation of old and new VM versions points to a change in the cluster configuration of the new Windows Server: Rolling upgrades are now possible for Hyper-V and file server clusters. To migrate a larger cluster to the new version of Windows, you will be able to update individual servers without removing them from the cluster, upgrading a high-availability environment step by step. This reduces the migration risk and eases budget pressure because, unlike today, you might not need new hardware. During the cluster upgrade, the running VMs keep their existing configuration version and can be moved between the old and new hosts. Only at the very end do you update the VMs manually.
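The order of operations in a rolling upgrade can be sketched as a simple plan generator. This is a hypothetical Python model (node and VM names are placeholders, and the migration target is chosen naively); it only illustrates that nodes are upgraded one at a time while the VMs keep running elsewhere, and that VM configuration versions are converted last.

```python
def rolling_upgrade(nodes, placement):
    """Plan a node-by-node cluster upgrade (hypothetical model, not a Microsoft tool).

    nodes: list of at least two node names, all on the old OS at the start
    placement: dict mapping each VM name to the node that currently hosts it
    Returns the ordered list of steps; placement is updated in place.
    """
    steps = []
    for node in nodes:
        # Drain: live-migrate every VM away from the node about to be upgraded.
        # VMs keep their old configuration version, so any node can host them.
        target = next(n for n in nodes if n != node)
        for vm in sorted(placement):
            if placement[vm] == node:
                placement[vm] = target
                steps.append(f"migrate {vm}: {node} -> {target}")
        # Upgrade the now-empty node while the rest of the cluster keeps serving.
        steps.append(f"upgrade {node}")
    # Only after every node runs the new OS are the VMs converted manually.
    for vm in sorted(placement):
        steps.append(f"convert {vm}")
    return steps
```

A real cluster would balance the migrated VMs across all remaining nodes instead of always picking the first one.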

If you want to run Windows Server in a high-availability environment, you might also want to distribute that environment geographically. It could be useful, for example, to split a Hyper-V cluster across two server rooms or data centers, with some of the hosts running at one location and the rest at the second. If one site fails completely because of a power outage, fire, or water damage, operations can continue at the other.

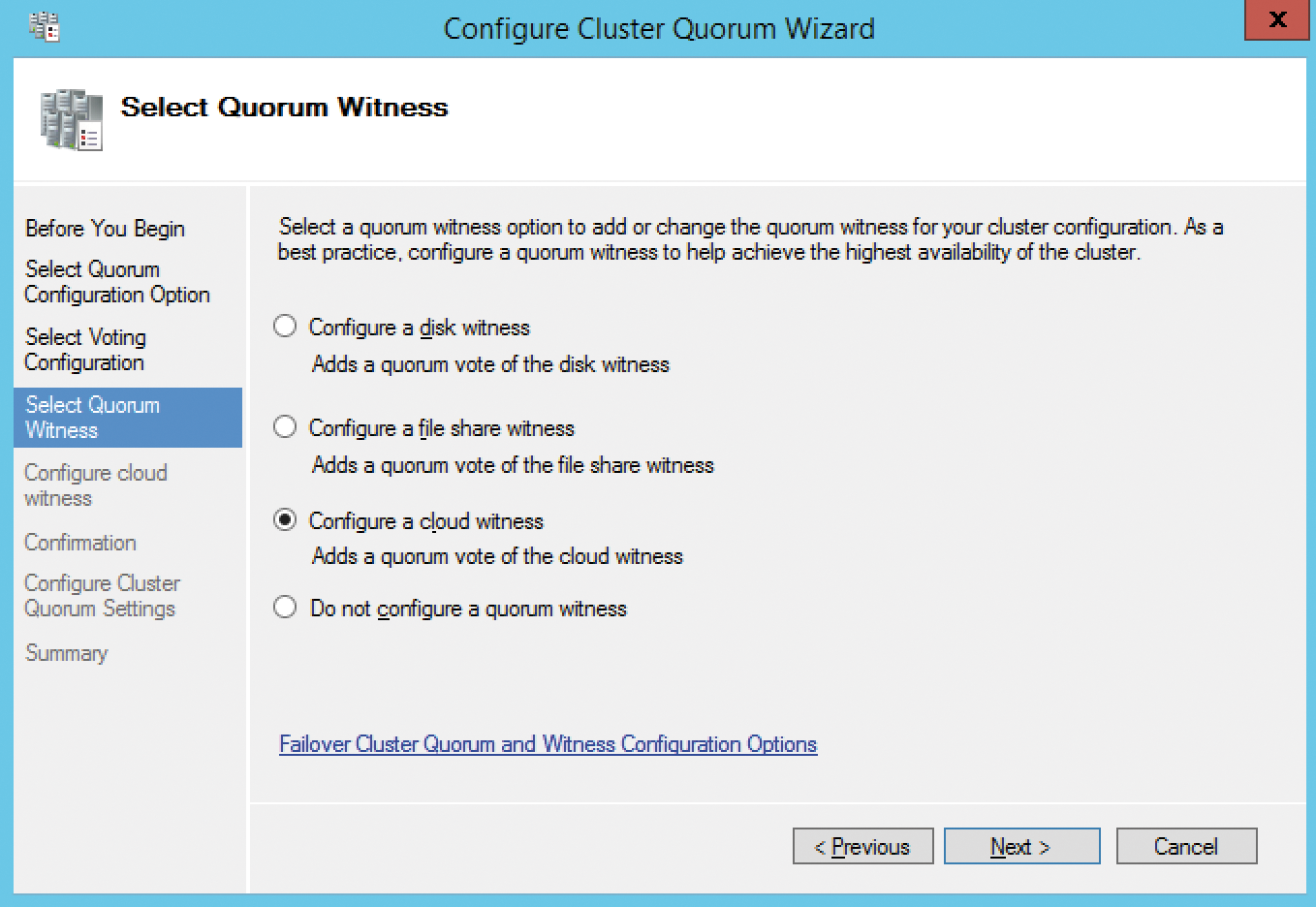

One challenge in such setups is the witness, which the cluster relies on to reach a quorum (a majority of votes) and which is often referred to as the quorum. It is usually an additional server that must remain accessible in case of failure to ensure correct continued operation of the cluster. Many companies place this server at a third location, so that it is not affected by the failure of either main site. Until now, however, you could not implement this setup without a third, independent server room.

The new Windows Server will be able to outsource the witness to a service in the cloud (Figure 2). The highlight is that this does not have to be a full-fledged server VM; storage in Microsoft's Azure Blob Storage will suffice, and the storage service itself is designed for high availability. This lets you operate the main and backup data centers for your cluster yourself while running the witness independently in the cloud. Naturally, Microsoft prefers Azure here; whether other cloud services will be supported in the future is not yet known.
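Why a third vote matters can be shown with simple majority arithmetic. The Python sketch below assumes one vote per node plus one for the witness and a static strict-majority rule; real Windows failover clusters add refinements such as dynamic vote adjustment, which this model ignores. The function name is hypothetical.

```python
def survives_site_loss(nodes_left, nodes_total, witness):
    """Does the surviving site keep quorum after the other site fails?

    Hypothetical model: each node has one vote, and an optional witness
    (e.g., in the cloud) adds one more. Assumes the witness is still
    reachable from the surviving site.
    """
    witness_votes = 1 if witness else 0
    total_votes = nodes_total + witness_votes
    surviving_votes = nodes_left + witness_votes
    # A strict majority of all configured votes is required.
    return 2 * surviving_votes > total_votes
```

With two nodes per site, losing one site leaves 2 of 4 votes without a witness (no majority), but 3 of 5 votes with one, so `survives_site_loss(2, 4, witness=True)` returns `True`.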

High-Availability Storage

Beyond virtualization, there are other innovations, too. With Windows Server 2012, Microsoft began to expand the operating system's storage capabilities significantly to position Windows Server as a storage system. Besides version 3.0 of the SMB (Server Message Block) file server protocol, which Microsoft extended to provide an infrastructure for data centers, Storage Spaces are particularly noteworthy.

Storage Spaces group any kind of storage device, such as hard disks of different types or SSDs, into a pool. With advanced techniques such as redundancy or storage tiering, this pool can provide even the most demanding server applications with powerful storage. Tiering automatically moves frequently used data to fast SSDs, while less frequently accessed data remains on cheaper hard disks.
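The basic idea of tiering, hot data on SSD and cold data on hard disks, can be illustrated with a frequency count. This Python sketch is a simplified, hypothetical model (block IDs and capacities are abstract units) and not Microsoft's actual tiering algorithm.

```python
from collections import Counter


def plan_tiers(access_log, ssd_capacity):
    """Assign blocks to the SSD or HDD tier based on access frequency.

    Hypothetical model for illustration.
    access_log: iterable of block IDs, one entry per access
    ssd_capacity: number of blocks the SSD tier can hold
    Returns (ssd_blocks, hdd_blocks) as sets.
    """
    counts = Counter(access_log)
    # Hottest blocks first; ties are broken by block ID for a deterministic result.
    ranked = sorted(counts, key=lambda block: (-counts[block], block))
    return set(ranked[:ssd_capacity]), set(ranked[ssd_capacity:])
```

For example, `plan_tiers([1, 2, 1, 3, 1, 2, 4], 2)` puts blocks 1 and 2 on the SSD tier and blocks 3 and 4 on the hard disks.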

Microsoft's stated goal is that storage servers running Windows will be able to work with low-budget hard disks and thus replace expensive SAN storage. So far, the technology cannot do this on a large scale. In the Technical Preview, however, Redmond has added an important building block: The Storage Replica (SR) function replicates data from one server to a second server on the fly. This lets administrators build high-availability systems that keep running without any data loss, even after a server fails.

Support for Arbitrary Applications

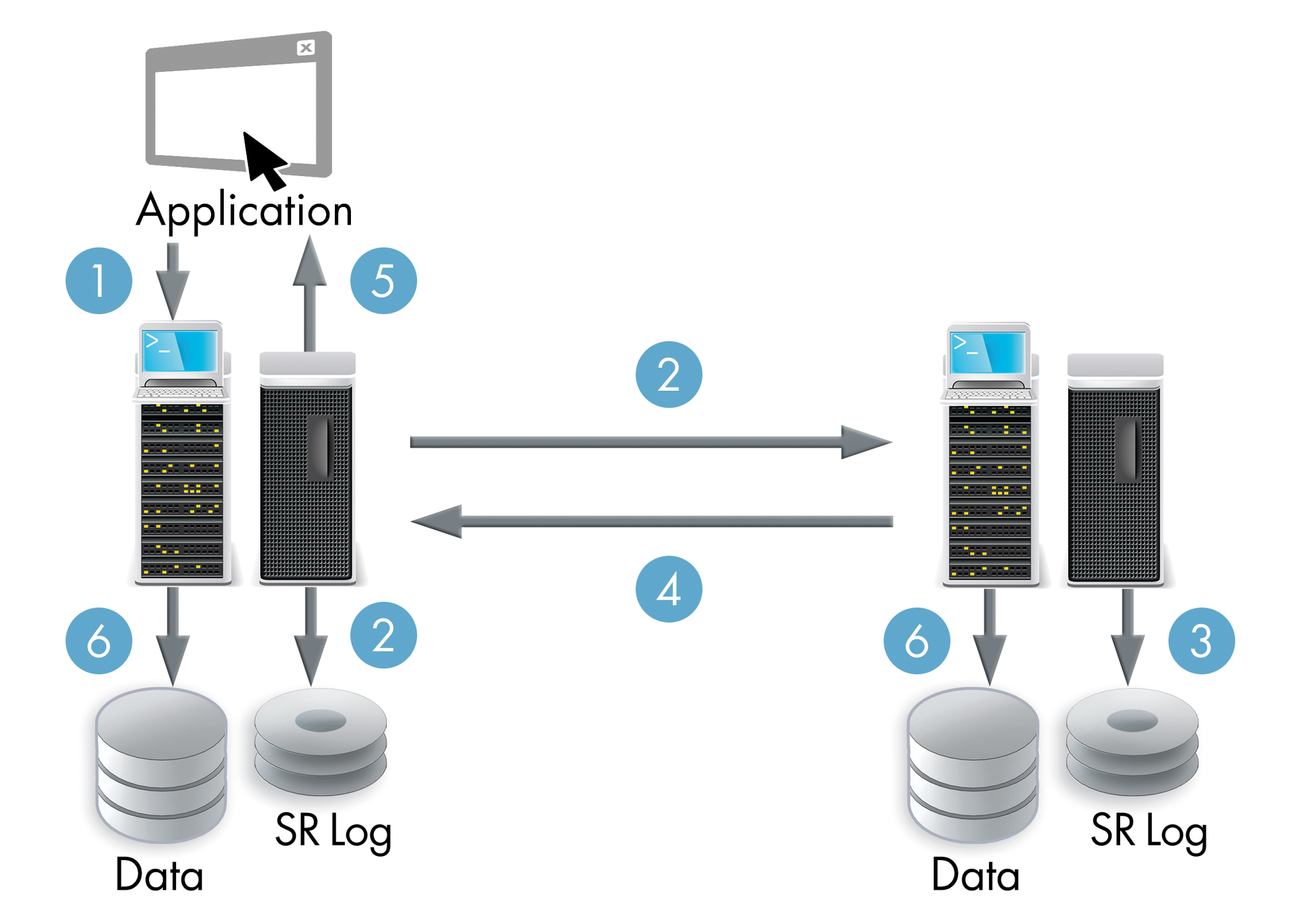

Storage Replica works at the block level and can therefore support arbitrary applications and filesystems. It offers two replication modes: synchronous and asynchronous. In synchronous mode, it can also be used within a failover cluster, where it makes it possible to distribute the cluster's servers across separate data centers ("stretch cluster") without a replicated SAN.

Synchronous replication works as follows: An application wants to store data and sends the write request to the operating system (Figure 3: step 1). Storage Replica writes the change to a separate, fast log area and, at the same time, sends the request to the second server (step 2). This server also records the transaction in its log (step 3) and sends a success message to the first server (step 4). Only now does the application receive confirmation that all data has been stored (step 5). The servers write the data to the actual data areas of the storage system later (step 6), which optimizes overall speed.

According to Microsoft, this synchronous replication process rules out data loss. If one of the servers fails, the application does not receive a success message for the save operation. Although the application then hits an error condition, no data is lost, because the application never received a confirmation for the failed write. After the system is repaired (or after switching to non-redundant operation with the remaining server), all confirmed data are available again, either directly in the data area or in the storage logs.
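The synchronous write path and its failure behavior can be modeled with two in-memory servers. This Python sketch is a hypothetical illustration, not Storage Replica's implementation; the `Server` class and `sync_write` function are invented, and the local and remote log writes run one after the other here instead of in parallel.

```python
class Server:
    """Hypothetical model of one replication partner (not Microsoft's code)."""

    def __init__(self, name):
        self.name = name
        self.log = []      # fast log area that records each write first
        self.data = {}     # the actual data area, updated later (step 6)
        self.online = True

    def append_log(self, block, payload):
        if not self.online:
            raise ConnectionError(f"{self.name} is unreachable")
        self.log.append((block, payload))

    def flush(self):
        # Step 6: apply logged writes to the data area in the background.
        for block, payload in self.log:
            self.data[block] = payload
        self.log.clear()


def sync_write(source, destination, block, payload):
    """Acknowledge a write only after both servers have logged it."""
    source.append_log(block, payload)           # step 2: local log
    try:
        destination.append_log(block, payload)  # steps 2-3: remote log
    except ConnectionError:
        return False  # no success message: the application sees an error
    return True       # steps 4-5: success reported to the application
```

Every write for which `sync_write` returns `True` exists in both logs, which is why confirmed data survives the failure of either server.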

Obviously, the network between the two storage servers must be fast enough to keep up with the storage system, because every time data is saved, the application has to wait until both servers have reported success. The recommendation is to use redundant 10Gbit networks or even faster connections.
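A back-of-the-envelope calculation shows why link speed matters. The Python sketch below is a simplified latency model with hypothetical function names; the log-write times and round-trip time you pass in are placeholder values, and protocol overhead is ignored.

```python
def transfer_time_us(block_bytes, link_gbit_per_s):
    """Serialization time of one block on the link in microseconds, ignoring overhead."""
    return block_bytes * 8 / (link_gbit_per_s * 1e9) * 1e6


def sync_write_latency_us(local_log_us, remote_log_us, rtt_us, block_bytes, link_gbit_per_s):
    # Hypothetical model: the local and remote log writes start together (step 2),
    # and the application is acknowledged when the slower path has finished.
    remote_path_us = transfer_time_us(block_bytes, link_gbit_per_s) + rtt_us + remote_log_us
    return max(local_log_us, remote_path_us)
```

For a 64KiB write, serialization alone takes about 52 µs at 10Gbit/s but about 524 µs at 1Gbit/s, and that delay is added to every synchronous write.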

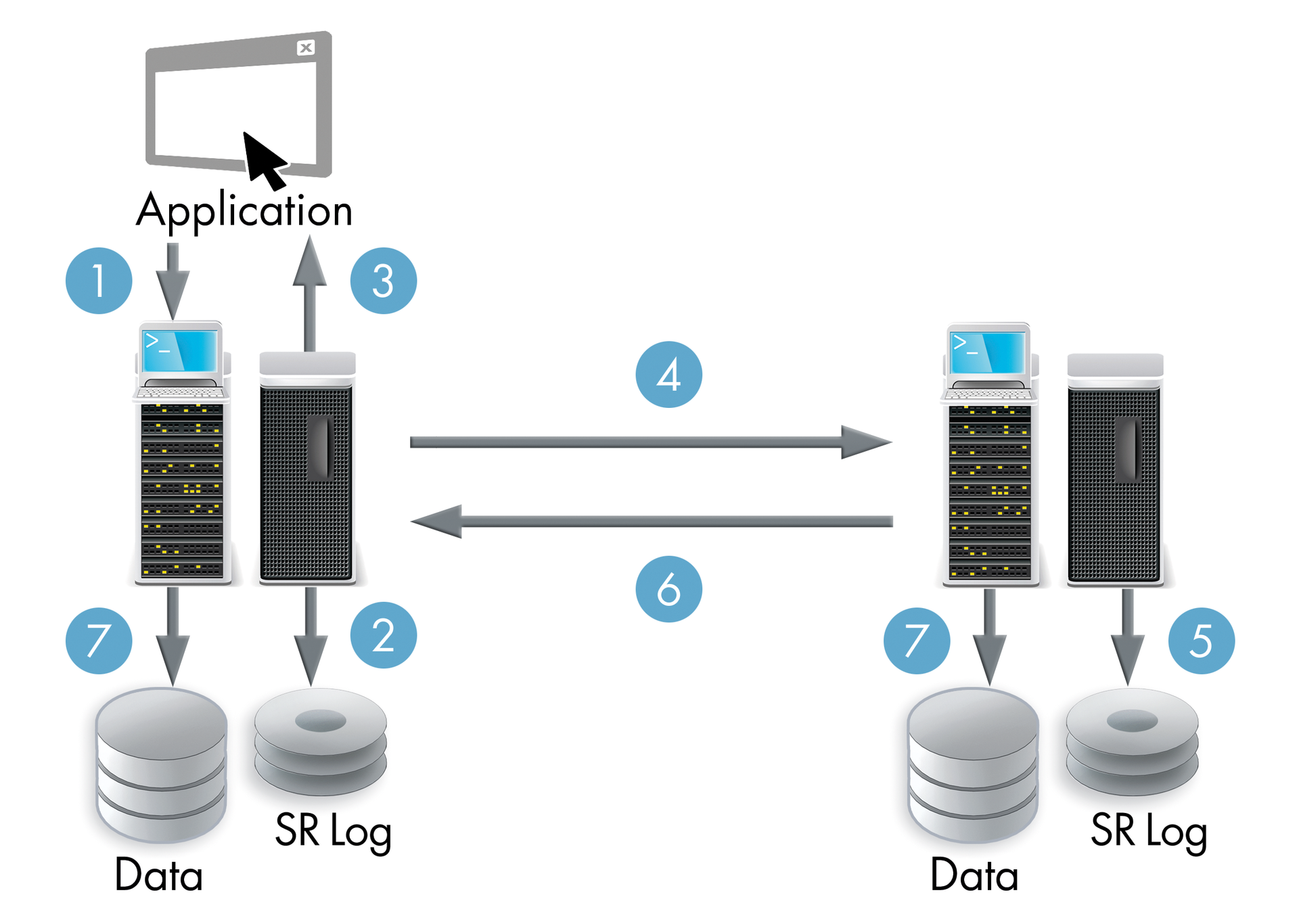

In asynchronous replication mode, the application does not have to wait for the network. Here, only the first server stores the data in its log (Figure 4: step 2) and then immediately acknowledges the write (step 3). It then transfers the blocks to the second server (step 4). In this mode, the application runs faster because it avoids network latency. However, data loss cannot be ruled out if a server fails; after all, the system does not ensure that all data has actually been replicated before confirming. Asynchronous replication is thus particularly suitable for disaster recovery scenarios with applications that can tolerate minor data loss during recovery. Be aware, however, that data replication does not replace a backup, because every change, including every deletion, is faithfully applied to both systems.
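The data-loss window of asynchronous mode can be made visible with a queue of acknowledged but not yet transferred writes. This Python sketch is a hypothetical model; the `AsyncReplicator` class and its methods are invented for illustration and are not part of Storage Replica.

```python
from collections import deque


class AsyncReplicator:
    """Hypothetical model of asynchronous block replication (not Microsoft's code)."""

    def __init__(self):
        self.source_log = []
        self.pending = deque()  # acknowledged but not yet shipped to the destination
        self.destination_log = []

    def write(self, block, payload):
        self.source_log.append((block, payload))  # step 2: local log only
        self.pending.append((block, payload))
        return True  # step 3: acknowledged immediately, before replication

    def ship(self, max_items):
        # Step 4: transfer queued blocks to the destination in the background.
        for _ in range(min(max_items, len(self.pending))):
            self.destination_log.append(self.pending.popleft())

    def lost_on_source_failure(self):
        # Writes the application saw acknowledged but the destination never received.
        return list(self.pending)
```

If the source fails after five writes but only three have been shipped, the last two acknowledged writes are missing on the destination.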

Setting up this kind of storage replication is not trivial and, at least in the Technical Preview, can only be done via PowerShell. For testing purposes, Microsoft offers a comprehensive guide [3] that you can follow step by step.

New in the Network Environment

The other new features in Windows Server relate to Terminal Server (or, more precisely, Remote Desktop Services) and to networking. Terminal Services gains just two fairly minor changes for special situations. One is that Windows Terminal Server now supports the OpenGL and OpenCL APIs and can therefore run more demanding graphics applications over RDP.

Microsoft has also integrated a previously separate product into Remote Desktop Services: MultiPoint Services, which particularly target educational institutions and allow a single server to support a study group. You can connect multiple monitors, keyboards, and mice to the same computer and still give each student their own environment. The system keeps applications, data, and user profiles separate for each user.

In a second mode, the services work more like a traditional terminal server: Users have their own computers (iPads and the like are also supported) and work in their own sessions on the server via RDP. However, the group leader can take control and share his or her own screen like a central blackboard. Likewise, one user's work can be displayed on the other users' screens.

Control of Complex Networks

The Network Controller provides a central control point for complex networks. It acts as an intermediary between network devices, such as switches, routers, or virtual networks, and a network management system such as Microsoft's System Center Operations Manager (SCOM). On one hand, the Network Controller collects device status data and forwards it for evaluation; on the other hand, it receives commands from the management system and distributes them to the devices. It is therefore not a standalone tool; separate management software is always required.

Even the Windows DNS server will be smarter in the next release. Administrators will be able to define DNS policies that make the server answer clients differently according to rules. For example, internal and external clients can receive different host addresses, or the DNS data can vary by time of day. Other DNS servers have offered such functions for some time.
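The idea of rules-based answers can be illustrated with an ordered list of policies, where the first match wins. This Python sketch only models the rule-evaluation concept; the `Policy` class and `resolve` function are hypothetical, the addresses come from documentation ranges, and the real feature is configured on the Windows DNS server itself.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass
class Policy:
    # Hypothetical model of a DNS policy; not the Windows DNS server's API.
    name: str
    answer: str                    # IP address returned to matching clients
    subnet: Optional[str] = None   # e.g., "10.0.0.0/8"; None matches any client
    hours: Optional[range] = None  # e.g., range(8, 18); None matches any time

    def matches(self, client_ip, hour):
        if self.subnet is not None and (
            ipaddress.ip_address(client_ip) not in ipaddress.ip_network(self.subnet)
        ):
            return False
        if self.hours is not None and hour not in self.hours:
            return False
        return True


def resolve(policies, client_ip, hour, default):
    # Policies are evaluated in order; the first match determines the answer.
    for policy in policies:
        if policy.matches(client_ip, hour):
            return policy.answer
    return default
```

With an "internal" policy for `10.0.0.0/8` listed first, an internal client always gets the internal address, while external clients receive a time-dependent or default answer.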

In addition to the major changes described here, the Windows Server Technical Preview comes with many minor ones. These include a preinstalled malware scanner based on Windows Defender, so environments that do not allow third-party software on servers no longer have to go entirely without antivirus protection. The PowerShell 5.0 scripting environment has been extended with advanced programming features, such as class definitions and improved remote access.

Conclusions

All told, keep in mind that the Technical Preview of the next Windows Server is still at a fairly early stage of development. A few features are not fully implemented, and, of course, there are numerous bugs in the code. Above all, Microsoft may well announce more new features in the course of the development process. Administrators of smaller environments, who will not find many relevant new features in the current list, may therefore still have something to look forward to. One thing is certain: The final release will offer more for this important customer group.