
Dock of the Bay

The Docker container system [1] is a rising star. The mere fact that Microsoft's latest operating system supports this technology [2] speaks volumes. Meanwhile, OpenStack [3] has been surfing its own wave of hype for some time. Enter Magnum, a hotly debated project at the interface between Docker and OpenStack.

OpenStack has officially supported Docker since the Havana version [4], but the reality is a little more complex. In the past, integrating Docker container technology involved a detour via the OpenStack compute module, Nova. Purists have never really liked this approach, which required integrating Docker as a hypervisor driver [5]. (Docker does have a few things in common with KVM and the like, but at the end of the day, containers are not hypervisors.)

Extending the Nova API for containers was possible in principle, but it was not as clean a solution as a dedicated interface. That gap is precisely what Magnum [6] [7] sets out to fill: as a new OpenStack interface service, Magnum supports CaaS (Containers as a Service).

Interfaces and Conductors

In January 2015, Rackspace's Adrian Otto announced the release of the first version of Magnum [8]. The software is licensed under the Apache license and written in Python. Magnum was officially added to the list of OpenStack projects in March. Adrian Otto is the technical lead. The primary objective of the Magnum project is to provide an OpenStack interface for container orchestration.

Two programs are at work behind the scenes (Listing 1). The first is the Magnum API server, a REST service that provides the external interface: It accepts requests from third parties and returns responses to them. For failover or for easier scaling, you can run several API servers at the same time. The API process does not answer requests itself; instead, it passes them on to the second Magnum component, the Conductor, which then interacts with the containers or, more precisely, with the orchestration instances. Scaling the Conductor by running multiple instances is not currently supported, but it is firmly on the roadmap.
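
The division of labor between the API servers and the Conductor can be illustrated with a small in-process model. This is a simplified sketch, not Magnum code: it assumes a shared queue standing in for whatever transport connects the two components, and the class and method names (`ApiServer`, `Conductor`, `handle`, `drain`) are hypothetical. Several API servers accept and forward requests, while a single Conductor does the work, mirroring the point that only the API tier can currently be scaled out.

```python
import queue
from dataclasses import dataclass


@dataclass
class Request:
    """A client request as accepted by an API server (hypothetical shape)."""
    action: str
    payload: dict


class ApiServer:
    """Front end: validates a request, forwards it, and returns at once."""

    def __init__(self, name: str, outbox: "queue.Queue[Request]") -> None:
        self.name = name
        self.outbox = outbox

    def handle(self, action: str, payload: dict) -> str:
        if "name" not in payload:
            return "400 Bad Request"          # rejected without reaching the conductor
        self.outbox.put(Request(action, payload))
        return "202 Accepted"                 # work is done later by the conductor


class Conductor:
    """Single back-end worker that carries out the forwarded requests."""

    def __init__(self, inbox: "queue.Queue[Request]") -> None:
        self.inbox = inbox
        self.handled: list[str] = []

    def drain(self) -> None:
        # Process everything queued so far; a real conductor would drive
        # Heat and the orchestration engine at this point.
        while True:
            try:
                req = self.inbox.get_nowait()
            except queue.Empty:
                return
            self.handled.append(f"{req.action}:{req.payload['name']}")


bus: "queue.Queue[Request]" = queue.Queue()   # hypothetical stand-in for the transport
apis = [ApiServer("api-1", bus), ApiServer("api-2", bus)]  # scaled-out API tier
conductor = Conductor(bus)                     # only one conductor

print(apis[0].handle("bay-create", {"name": "k8sbay"}))    # 202 Accepted
print(apis[1].handle("bay-create", {"name": "swarmbay"}))  # 202 Accepted
print(apis[1].handle("bay-create", {}))                    # 400 Bad Request
conductor.drain()
print(conductor.handled)  # ['bay-create:k8sbay', 'bay-create:swarmbay']
```

Because the API servers keep no state of their own, adding another one just adds a producer on the queue; a second conductor would need coordination that this sketch leaves out.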

Listing 1: Two Server Components and One Client

$ ps auxww|grep -i magnu
stack 19778 0.1 1.2 224984 49332 pts/30 S+ 12:20 0:16 /usr/bin/python /usr/bin/magnum-api
stack 19844 0.0 1.4 228088 57308 pts/31 S+ 12:20 0:03 /usr/bin/python /usr/bin/magnum-conductor
$ which magnum
/bin/magnum
$ magnum --version
0.2.2

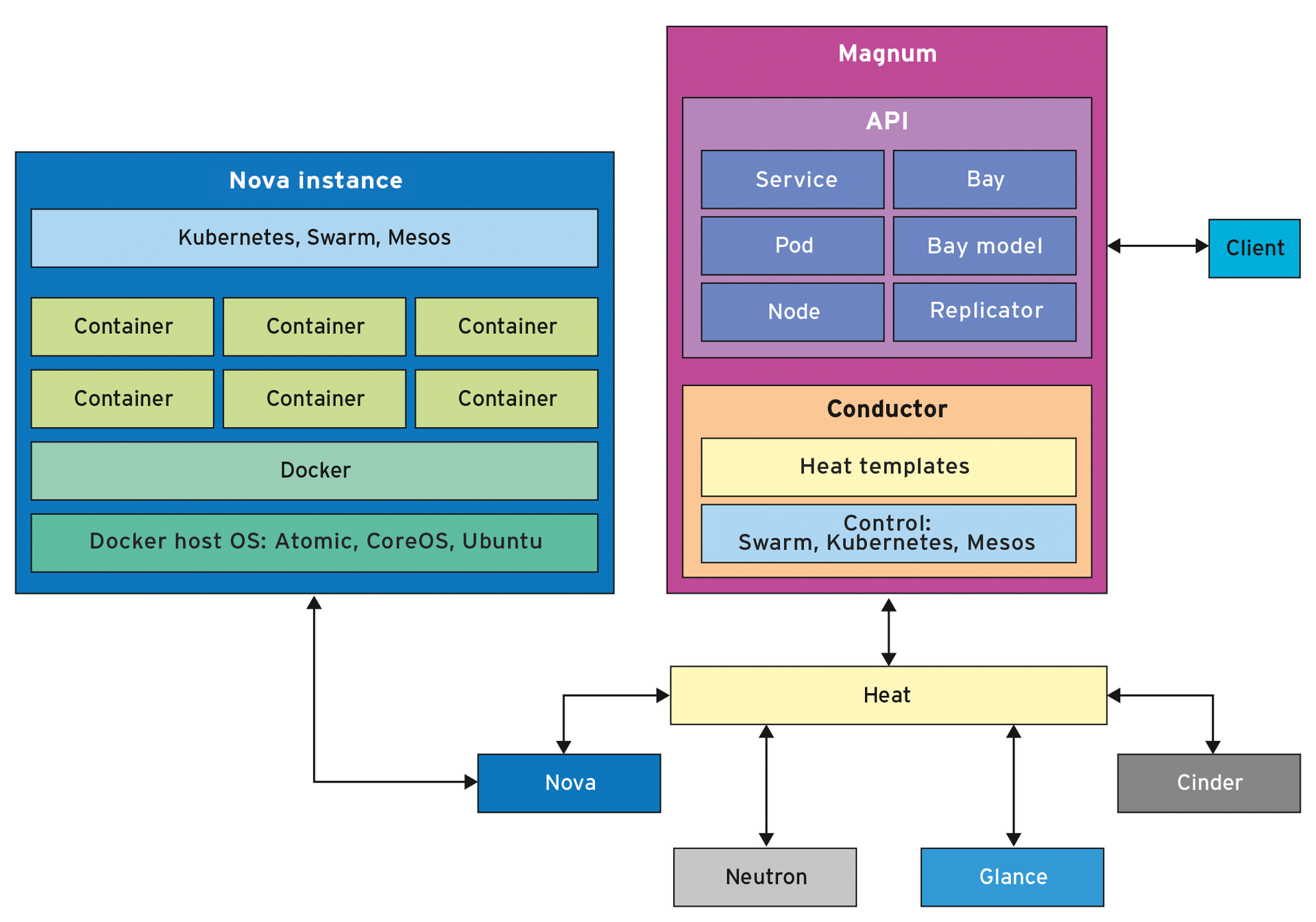

The Magnum project is not actually an interface for direct management of Linux containers but is instead more of an API for container orchestration. Currently, the software supports interaction with Kubernetes [9], Docker Swarm [10], and Mesos [11].

Installation Requirements

If you want to offer CaaS, you have to start from scratch and first set up the required infrastructure. For this, Magnum relies on OpenStack's own orchestration service, Heat. Matching Heat templates ship with the project.

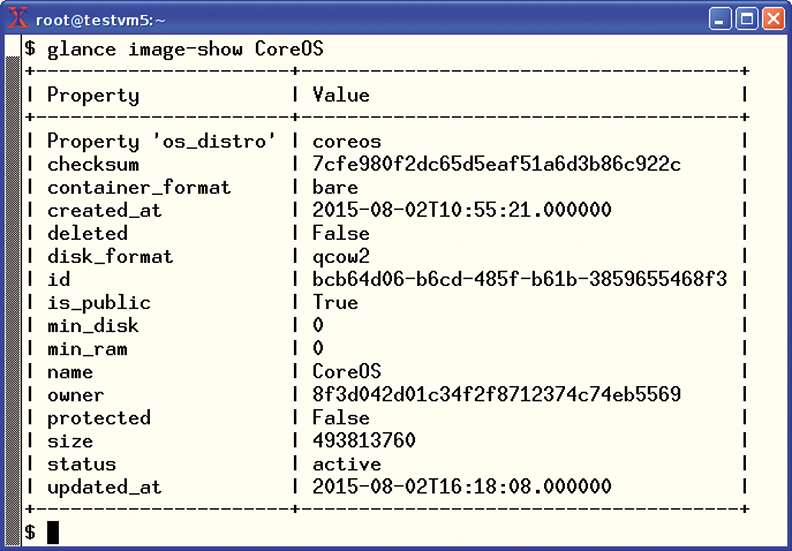

In the ADMIN magazine lab, we used OpenStack DevStack [12], which, if so desired, installs no fewer than four Magnum templates for Heat (Listing 2). (Two of these templates are unusable because the matching Glance image is missing.)
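
Whether a template is usable comes down to whether a matching image exists in Glance. The following sketch checks the four templates from Listing 2 against the image list shown there. The matching rule (every hyphen-separated token of the template's OS name must appear in the image name) is a hypothetical heuristic chosen for illustration; it is not how Magnum itself looks up images.

```python
# Template names and OS values, and image names, as shown in Listing 2.
TEMPLATES = {
    "magnum_vm_atomic_k8s": "fedora-atomic",
    "magnum_vm_atomic_swarm": "fedora-atomic",
    "magnum_vm_coreos_k8s": "coreos",
    "magnum_vm_ubuntu_mesos": "ubuntu",
}
IMAGES = [
    "cirros-0.3.4-x86_64-uec",
    "cirros-0.3.4-x86_64-uec-kernel",
    "cirros-0.3.4-x86_64-uec-ramdisk",
    "fedora-21-atomic-3",
]


def image_matches(os_name: str, image_name: str) -> bool:
    """Hypothetical rule: every token of the OS name occurs in the image name."""
    return set(os_name.split("-")) <= set(image_name.split("-"))


def usable_templates(templates: dict[str, str], images: list[str]) -> dict[str, bool]:
    """Map each template to True if at least one image matches its OS."""
    return {
        tpl: any(image_matches(os_name, img) for img in images)
        for tpl, os_name in templates.items()
    }


for tpl, ok in usable_templates(TEMPLATES, IMAGES).items():
    print(f"{tpl:24} {'usable' if ok else 'missing image'}")
```

With the lab's image list, only the two Fedora Atomic templates find an image, which matches the two unusable templates noted above.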

In our tests, we ran into difficulties as soon as we left the well-trodden Kubernetes path; the "Into the Fray" box has more details. Magnum expects the necessary software either to be part of the Glance image or, if the image does not include it, to be installed when the corresponding Nova compute instance is set up.

Container orchestration reaches across several abstraction layers. At the highest level is the service that the system is implementing. The system might be acting as a dedicated web server; however, a setup with multiple software components running separately is more realistic.

One layer below the service is the orchestration software for the Linux containers. Judging by its documentation and maturity, Kubernetes is currently the most effective orchestration tool. In Magnum, the logical grouping of containers is known as a pod, a term adopted unchanged from Google's world of container management. The containers themselves sit one layer further down.
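
The three layers can be modeled as nested data: a service at the top, pods that group containers in the middle, and the containers themselves at the bottom. This sketch borrows the Kubernetes convention that a service finds its pods through a label selector; the class names, labels, and image names are illustrative assumptions, not Magnum objects.

```python
from dataclasses import dataclass, field


@dataclass
class Container:
    """Bottom layer: a single container running one image."""
    name: str
    image: str


@dataclass
class Pod:
    """Middle layer: a logical group of containers scheduled together."""
    name: str
    labels: dict[str, str]
    containers: list[Container] = field(default_factory=list)


@dataclass
class Service:
    """Top layer: exposes whichever pods match its label selector."""
    name: str
    selector: dict[str, str]

    def backends(self, pods: list[Pod]) -> list[str]:
        # A pod matches when it carries every key/value pair in the selector.
        return [p.name for p in pods
                if all(p.labels.get(k) == v for k, v in self.selector.items())]


pods = [
    Pod("web-1", {"app": "web"}, [Container("nginx", "nginx")]),
    Pod("web-2", {"app": "web"}, [Container("nginx", "nginx")]),
    Pod("db-1", {"app": "db"}, [Container("mysql", "mysql")]),
]
web = Service("web-frontend", {"app": "web"})
print(web.backends(pods))  # ['web-1', 'web-2']
```

Decoupling the service from specific pods via labels is what lets pods come and go underneath a stable service.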

You can identify more abstraction layers by working through Magnum from the bottom up. First is the bay construct, which groups Docker hosts (in Magnum parlance, these hosts are called nodes). To simplify the creation of bays, there are templates known as bay models. The main difference between bay models is the orchestration software, which Magnum calls the Container Orchestration Engine, or COE for short (Listing 2). For more configuration options, check out the online help for the Magnum client or the documentation [13].
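
The relationship between bay models, bays, and nodes can be sketched as follows: a bay model fixes the image and the COE, and each bay created from it receives a number of nodes. Field names such as `image` and `node_count` and the node naming scheme are assumptions for illustration, not a reproduction of Magnum's data model; the set of COEs is the one the article lists.

```python
from dataclasses import dataclass, field

# COEs named in the article and in Listing 2.
SUPPORTED_COES = {"kubernetes", "swarm", "mesos"}


@dataclass(frozen=True)
class BayModel:
    """Template for bays; the key choice is the COE (hypothetical fields)."""
    name: str
    image: str
    coe: str

    def __post_init__(self) -> None:
        if self.coe not in SUPPORTED_COES:
            raise ValueError(f"unsupported COE: {self.coe}")


@dataclass
class Bay:
    """A group of Docker hosts (nodes) built from one bay model."""
    name: str
    model: BayModel
    nodes: list[str] = field(default_factory=list)


def create_bay(name: str, model: BayModel, node_count: int) -> Bay:
    if node_count < 1:
        raise ValueError("a bay needs at least one node")
    nodes = [f"{name}-node-{i}" for i in range(node_count)]  # hypothetical naming
    return Bay(name, model, nodes)


k8s_model = BayModel("k8s-model", image="fedora-21-atomic-3", coe="kubernetes")
bay = create_bay("k8sbay", k8s_model, node_count=2)
print(bay.model.coe, bay.nodes)  # kubernetes ['k8sbay-node-0', 'k8sbay-node-1']
```

Keeping the bay model immutable means every bay built from it is guaranteed to share the same image and COE.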

Listing 2: Four Partly Usable Templates

$ magnum-template-manage list-templates --details
+------------------------+---------+-------------+---------------+------------+
| Name                   | Enabled | Server_Type | OS            | COE        |
+------------------------+---------+-------------+---------------+------------+
| magnum_vm_atomic_k8s   | True    | vm          | fedora-atomic | kubernetes |
| magnum_vm_atomic_swarm | True    | vm          | fedora-atomic | swarm      |
| magnum_vm_coreos_k8s   | True    | vm          | coreos        | kubernetes |
| magnum_vm_ubuntu_mesos | True    | vm          | ubuntu        | mesos      |
+------------------------+---------+-------------+---------------+------------+
$
$ glance image-list
+--------------------------------------+---------------------------------+-------------+------------------+-----------+--------+
| ID                                   | Name                            | Disk Format | Container Format | Size      | Status |
+--------------------------------------+---------------------------------+-------------+------------------+-----------+--------+
| 1d4a3b18-e48a-4554-aa19-6845b1938d69 | cirros-0.3.4-x86_64-uec         | ami         | ami              | 25165824  | active |
| 2bbf1d2a-75d8-4c1b-a9e1-2ff65d5ff725 | cirros-0.3.4-x86_64-uec-kernel  | aki         | aki              | 4979632   | active |
| 95fe59ac-920f-43f0-964c-1aabdd972b0d | cirros-0.3.4-x86_64-uec-ramdisk | ari         | ari              | 3740163   | active |
| 58ac680d-f640-4af0-83e5-23d417ab4f70 | fedora-21-atomic-3              | qcow2       | bare             | 770179072 | active |
+--------------------------------------+---------------------------------+-------------+------------------+-----------+--------+

Figure 2 shows the relationships just discussed in a simplified form. The major points are Magnum's two server components, the use of orchestration software for OpenStack and Docker, and the abstraction models. Admins should not be fooled by the simple illustration. In practice, there are many details to watch out for when using Magnum. Again, the "Into the Fray" box gives you some initial guidance.

Magnum also offers a high-availability concept. The key term is the Replication Controller (RC), which operates on groups of pods. This construct ensures that the desired number of processes is always running to provide the intended service. In practice, the user first creates the pod, then the service, and finally places the Replication Controller on top.
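
The idea behind the Replication Controller is a reconciliation loop: Compare the number of running pod replicas with the desired number, then start or stop pods to close the gap. The following is a minimal sketch of that loop under simplifying assumptions (pods are just names, and starting or stopping happens instantly); the class and its naming scheme are hypothetical, not Kubernetes or Magnum code.

```python
import itertools


class ReplicationController:
    """Keeps `replicas` copies of a pod template running (simplified sketch)."""

    def __init__(self, template: str, replicas: int) -> None:
        self.template = template
        self.replicas = replicas
        self._ids = itertools.count()  # source of fresh pod names

    def reconcile(self, running: list[str]) -> list[str]:
        running = list(running)  # don't mutate the caller's list
        # Start replacements until the desired count is reached ...
        while len(running) < self.replicas:
            running.append(f"{self.template}-{next(self._ids)}")
        # ... or stop surplus pods if there are too many.
        del running[self.replicas:]
        return running


rc = ReplicationController("web", replicas=3)
pods = rc.reconcile([])           # initial start: ['web-0', 'web-1', 'web-2']
pods.remove("web-1")              # simulate a crashed pod
pods = rc.reconcile(pods)         # replacement started: ['web-0', 'web-2', 'web-3']
rc.replicas = 1
pods = rc.reconcile(pods)         # scaled down: ['web-0']
print(pods)
```

Running the loop repeatedly is harmless: when the actual state already matches the desired state, `reconcile` returns the list unchanged.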

Security Matters

So far, the assumed motivation for Magnum has been the desire to use container-specific features that go beyond the capabilities of a hypervisor in OpenStack. But implementing CaaS as a separate API service has two further consequences, both natural and intentional. The first is that Magnum makes containers an independent resource that OpenStack can control. CaaS thus joins a line of as-a-service offerings that includes DBaaS (Database as a Service).

Magnum gives admins the ability to provide Linux containers as a service on OpenStack. Integration as a hypervisor driver for Nova did not allow this option. Ideally, the user can now fully focus on using the containers without wasting time worrying about the compute infrastructure.

The second consequence of Magnum's existence as a separate OpenStack component is Keystone integration. Support for the Keystone identity service brings multi-tenancy and other security mechanisms with it. As a result of this design, Docker containers belonging to different OpenStack customers can run on the same host.
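
What Keystone integration buys in practice is that every request carries a project (tenant) scope, and resources are filtered by it. The sketch below illustrates only that idea, with hypothetical names: Containers from two projects share one host, but each project sees and manipulates only its own. It does not reproduce Keystone tokens or Magnum's policy checks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Container:
    name: str
    project_id: str  # owning project (tenant), as Keystone would scope it
    host: str


class ContainerRegistry:
    """Hypothetical store whose every operation is scoped to one project."""

    def __init__(self) -> None:
        self._items: list[Container] = []

    def create(self, project_id: str, name: str, host: str) -> Container:
        c = Container(name, project_id, host)
        self._items.append(c)
        return c

    def visible_to(self, project_id: str) -> list[Container]:
        # Every query is filtered by the caller's project.
        return [c for c in self._items if c.project_id == project_id]

    def delete(self, project_id: str, name: str) -> None:
        for c in self.visible_to(project_id):
            if c.name == name:
                self._items.remove(c)
                return
        # Same error whether the name is absent or owned by another project.
        raise PermissionError(f"{name!r} not found in project {project_id}")


reg = ContainerRegistry()
reg.create("tenant-a", "web", host="node-1")
reg.create("tenant-b", "web", host="node-1")  # same host, different tenant
print([c.project_id for c in reg.visible_to("tenant-a")])  # ['tenant-a']
```

Returning the same error for "does not exist" and "belongs to someone else" avoids leaking information about other tenants' resources.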

Where to Next?

Separating containers from the hypervisor is a step in the right direction. OpenStack administrators now have all the options they could hope for. If the use case calls for containers in more of a hypervisor context, the Nova interface is a good choice, but if Docker and containers are the focus, Magnum is definitely the best option.

The Magnum project is still quite young, and major changes are frequent. The rapid evolution of Linux containers is partly to blame for the frequent changes in Magnum. According to Adrian Otto, the Magnum project has some important backers in the OpenStack community. Clear signs of integration with the Rocket [14] and LXC [15] container tools would help encourage adoption.