What's new in PostgreSQL 9.4

Modern Database

PostgreSQL has used a JSON data type for some time to simplify storing JSON documents in relational databases. This approach is useful for handling JSON documents as entities instead of mapping to a relational data model. Additionally, it allows data storage within a database, with all the advantages that PostgreSQL offers the user, such as uncompromising transaction security, excellent extensibility, and scalability in the same software component.

However, the original implementation of the JSON data type had a major drawback: JSON documents were stored in the database as strings and required parsing and analysis every time JSON data was accessed. Accessing elements of a document with this kind of representation is also very complex, to say nothing of indexing with any kind of efficiency.

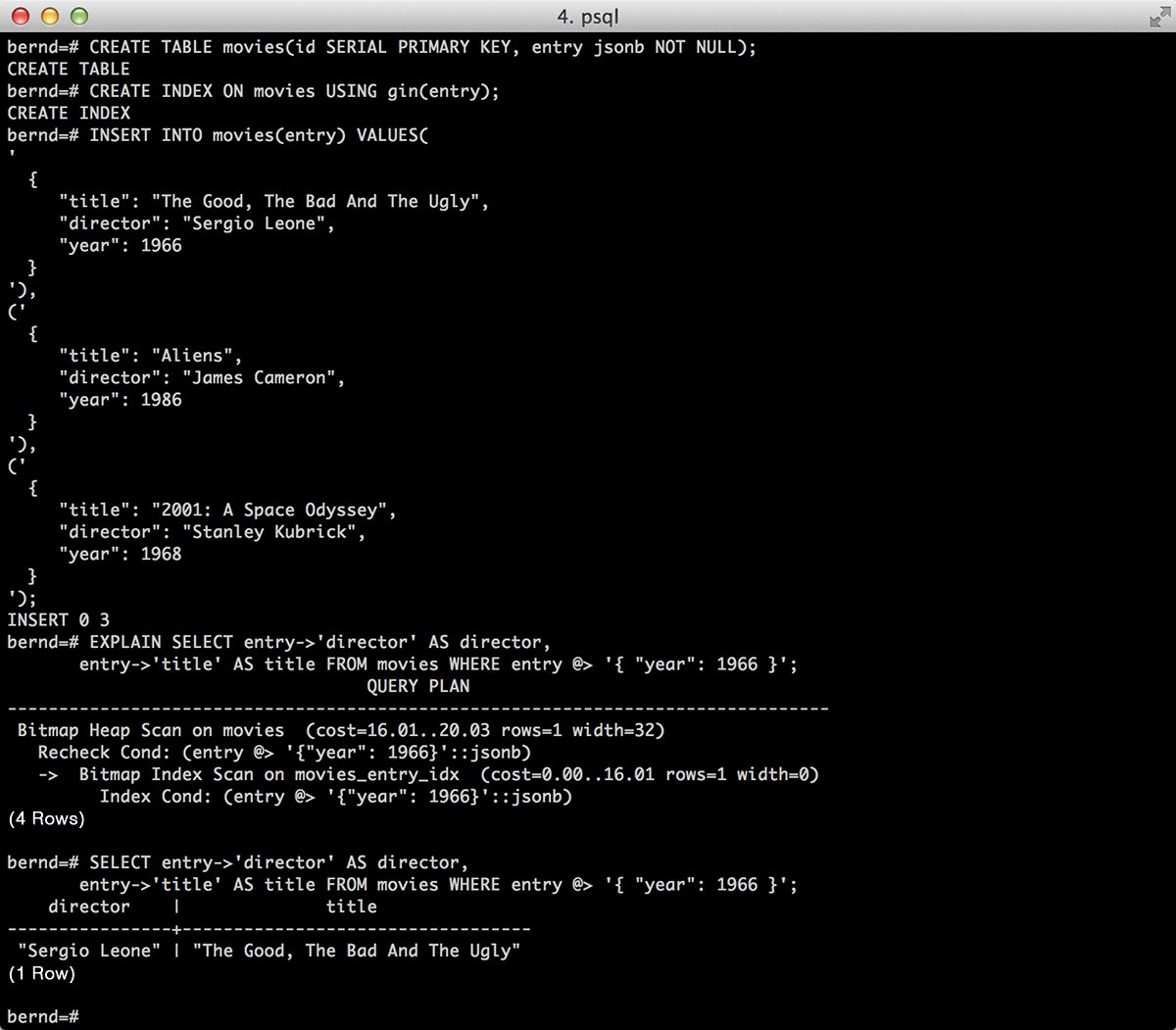

PostgreSQL 9.4 has now introduced a data type, in the form of JSONB, that handles and saves JSON documents as structured, binary data types. This allows efficient access to JSON documents as well as the implementation of fast index access methods. Scalar values from JSONB documents are stored as basic PostgreSQL types within a document. The query half-way down Figure 1 shows a very simple example with the table movies whose column entry contains a JSONB document with a film title. The @> operator checks whether the left operand contains the right JSONB expression.

Additionally, the <@ operator checks whether the left JSONB expression is contained in the right JSONB operand, the ? operator verifies whether a specific key exists, and ?| tests whether a key or element exists in the left JSONB operand. The ?& operator finds out whether all the keys from the given set are present in the JSONB document. All these operators can be accelerated using a GIN index for such queries created by the database admin:

CREATE INDEX ON movies USING gin(entry);

Testing for the presence of certain attribute values or the existence of certain keys in JSONB documents and the associated possibility to index relevant queries provides a variety of interesting applications compared with the old JSON data type.

Configuration Changes Using SQL Commands

Until now, administrators were forced to rely on manual editing for changes to configuration variables in the postgresql.conf configuration file. This included, for example, settings for the shared buffer pool (shared_buffers) or the number of transaction log segments (checkpoint_segments).

As of version 9.4, PostgreSQL has a tool for changing all settings in postgresql.conf using SQL commands in the form ALTER SYSTEM. Another configuration file is maintained by the database server for this purpose – the postgresql.auto.conf file.

The ALTER SYSTEM command changes settings in this additional configuration file; PostgreSQL always reads this after postgresql.conf at startup or when it receives a SIGHUP signal. This means that settings made by the administrator using ALTER SYSTEM always have priority over the corresponding settings in postgresql.conf. The administrator must have superuser permissions. The following example illustrates the new syntax.

ALTER SYSTEM SET maintenance_work_mem TO '1GB'; SELECT pg_config_reload();

Because maintenance_work_mem can be easily changed at run time, reloading the configuration files will suffice here; this is handled by the legacy pg_config_reload() function. Changes can be reset specifically to the original default value for a particular variable:

ALTER SYSTEM RESET maintenance_work_mem ;

All settings that were made via ALTER SYSTEM can be reset once using the keyword ALL:

ALTER SYSTEM RESET ALL;

ALTER SYSTEM facilitates administration (particularly on database systems) where there is strict separation between database and system administration and where configuration files cannot be edited easily. The new command even simplifies administration when installing in the cloud, where there is no direct access to the shell.

Improvements for Materialized Views

The basic functionality for materialized views was introduced in version 9.3 of PostgreSQL. The REFRESH MATERIALIZED VIEW command has now been improved in version 9.4. In version 9.3, if an exclusive lock on the view existed, it had to be held while refreshing the materialized dataset and thus allowed no concurrent access; the new CONCURRENTLY option allows materialized views to be updated without locking concurrent queries.

REFRESH MATERIALIZED VIEW CONCURRENTLY mv_test;

This action takes longer than an exclusive REFRESH with larger amounts of data. Furthermore, REFRESH MATERIALIZED VIEW CONCURRENTLY requires a unique index on one or more columns of the view.

Replication Slots

Replication slots expand the infrastructure with streaming replication. Until PostgreSQL 9.3, connections via streaming replication protocol were relatively anonymous: Using hot_standby_feedback to submit feedback regarding the current state of a streaming standby to the master was an option. However, this only had an effect, for example, on any replication conflicts that arose, such as VACUUM or lock management. If a streaming replication connection falls too far behind, transaction logs on the master can already have been cleared, thus causing a replication breakdown. The standby can then only catch up using an existing archive.

Replication slots now provide a kind of named replication connection, which accurately provides information about which position this slot occupies in the transaction log. PostgreSQL will then stop automatically recycling required transaction log segments until the slot is released again. Replication slots therefore summarize all the characteristics of hot_standby_feedback, wal_keep_segments, and vacuum_defer_cleanup_age. However, PostgreSQL administrators absolutely have to monitor the size of the transaction log if replication slots are only served very rarely or if standby servers are down for extended periods of time.

A distinction is made between physical and logical replication slots. The latter are required for logically decoding the transaction log. A physical replication slot configured by the new configuration parameter primary_slot_name in recovery.conf is required for streaming replication.

Creating such a physical replication slot is simple: The SQL function pg_create_physical_replication_slot() or pg_create_logical_replication_slot() creates a physical or logical replication slot. Existing replication slots can be queried using the new system view pg_replication_slots.

The new configuration parameter max_replication_slots must be set to the maximum number of replication slots in preparation. Changing this setting requires a reboot. The database administrator can now create a physical replication slot (Listing 1).

Listing 1: Physical Replication Slot

SELECT * FROM pg_create_physical_replication_slot('pgstandby');

slot_name | xlog_position

--------------+---------------------

pgstandby |

(1 row)

The slot is automatically activated if a streaming standby server connects to this slot after the parameter primary_slot_name = 'pgstandby' was set in its configuration file.

Listing 2 shows the information from pg_replication_slots for this replication slot.

Listing 2: Information About Replication Slot

SELECT * FROM pg_replication_slots ; slot_name | plugin | slot_type | datoid | database | active | xmin | catalog_xmin | restart_lsn -----------+--------+-----------+--------+----------+--------+------+--------------+------------- pgstandby | | physical | | | t | | | 0/76000138 (1 row)

Logical Decoding of Log Information

The transaction log in PostgreSQL is the database's insurance against data loss. Any changes to the databases are logged in the transaction log. PostgreSQL 9.0 introduced the streaming replication protocol several years ago. It allows the replication of transaction logs to a remote standby server. As the transaction log's binary block contents are replicated, this is referred to as physical replication.

A fundamental innovation in the new PostgreSQL version is a plugin interface for logically decoding transaction log data. The contents of the transaction log can be read out and interpreted via the new interface or converted into specific output formats. This allows for the output of the transaction log in SQL or JSON, for example. One field of application is logical replication, which replicates the logical changes to a database based on the output format (e.g., into another database).

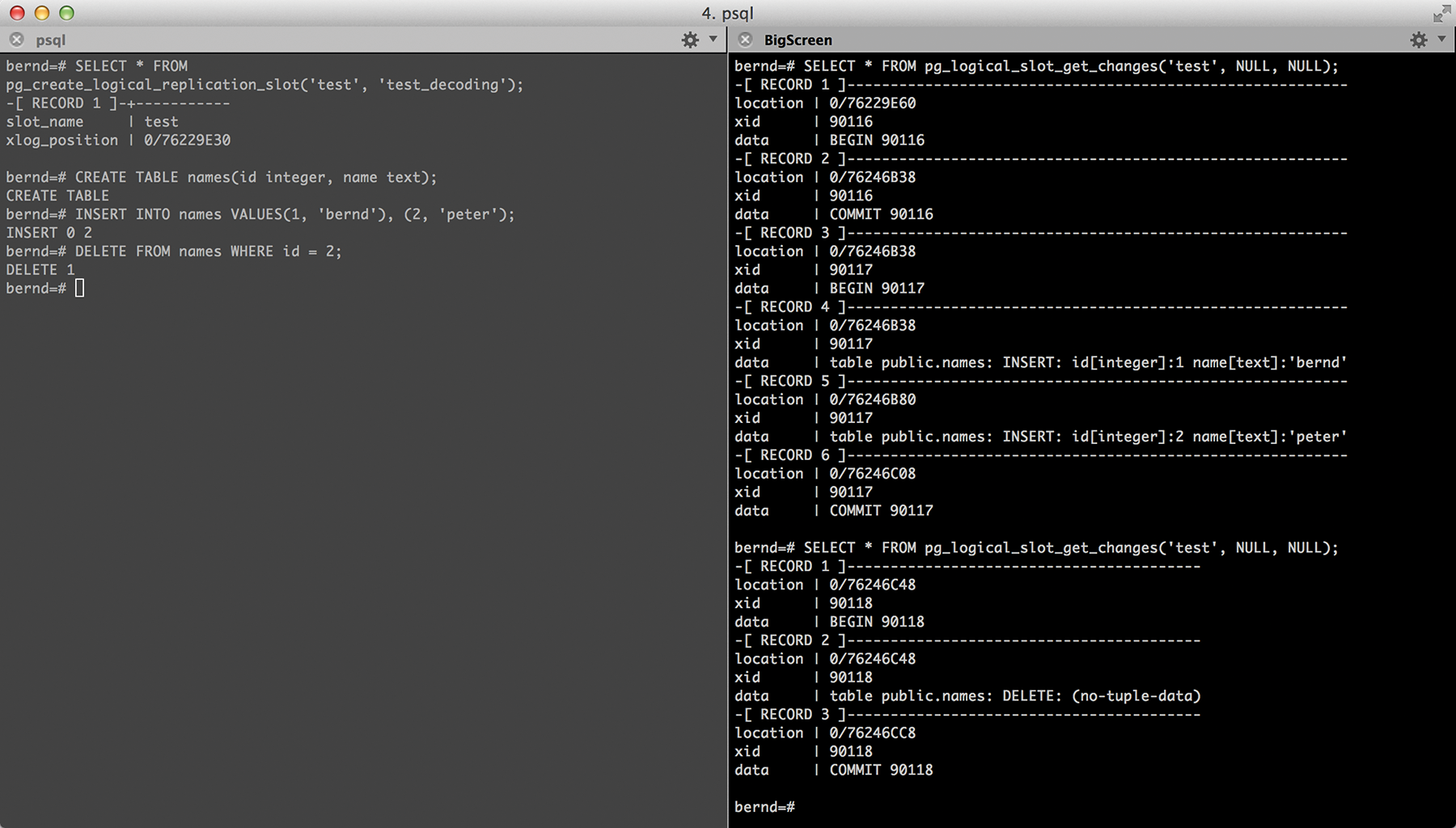

Logical decoding requires more information in the transaction than before. For this reason, the wal_level parameter is logically set to the new value to be able to use logical decoding. Furthermore, a replication slot is required that has been generated for logic decoding and has an appropriate output plugin. A simple plugin for testing this infrastructure is included in the PostgreSQL 9.4 package in the form of test_decoding. It might be necessary to install packages later, depending on the type of installation. test_decoding is included in the package postgresql94-contrib in the community's RPM repositories. If everything is installed correctly, the superuser can create a logical replication slot (Listing 3).

Listing 3: Logical Replication Slot

#= SELECT * FROM pg_create_logical_replication_slot('test', 'test_decoding');

slot_name | xlog_position

--------------+---------------

test | 0/760216D8

(1 row)

Logical decoding offers functions that provide access to decoded transaction log information for SQL. These include the functions pg_logical_slot_get_changes() and pg_logical_slot_peek_changes(). The latter in fact does not actually consume decoded information but instead simply returns it. A repeat call contains the same information. Using pg_logical_decoding_get_changes(), on the other hand, consumes the changes; when called again, it no longer contains the returned information.

Figure 2 shows an application with pg_logical_slot_get_changes(). Notice the empty transaction 90116 at the beginning. Logical decoding cannot currently detect any changes to tables themselves (DDL). The transaction that was started implicitly for the CREATE TABLE command is therefore empty.

Logical decoding covers a wide range of application possibilities. Replication solutions for PostgreSQL, which are based on the infrastructure, are also currently under development. The possible uses are diverse and range from replication and upgrades to interoperability with external systems for exchanging data.

Support for Linux Huge Pages

Thanks to the new configuration parameter, huge_pages, PostgreSQL 9.4 can use the Linux HugePages feature. This feature addresses the main memory in larger blocks so that the page tables are much smaller, thereby reducing the load on the CPU. This is particularly interesting for database systems that use a large amount of RAM. The default setting is try, so that PostgreSQL attempts to use HugePages.

autovacuum_work_mem: Settings for autovacuum

The autovacuum tool is essential for high-performance databases with PostgreSQL. The setting for the permitted use on the system's main memory was split in PostgreSQL 9.4 to offer administrators more flexible resource control. Where the parameter maintenance_work_mem was previously responsible for all maintenance commands, such as ALTER TABLE, CREATE INDEX, and autovacuum, administrators can now configure separate values for automatic vacuuming. Note that this setting will only work for the autovacuum worker processes. Manual VACUUM does not allow this option; instead, it still uses maintenance_work_mem.

Delayed Replication

Replication delay is a function long desired by database administrators, with the idea being that transaction logs would be consumed asynchronously by standby servers at a certain time interval. The new parameter recovery_min_apply_delay now provides this functionality in PostgreSQL 9.4.

Times can, as usual, be assigned to the parameter with common units such as 2H or 180min. A delay affects the COMMIT or the restore points in a transaction. Changes that occur during a transaction can nevertheless take effect immediately on the standby. A DROP TABLE on the master blocks a simultaneous query on the standby before the end of the delay since the corresponding transaction log changes are loaded immediately. The COMMIT is loaded on the standby after the corresponding wait from recovery_min_apply_delay.

Wealth of Detail Improvements

Many of the improvements in the new PostgreSQL 9.4 are found under the hood. Scalability for parallel writes, for example, has been improved significantly. Transactions can now write to the transaction log buffer simultaneously without having to wait for a lock. GIN indexes have been reduced significantly in size, and access to these indices with several indexed columns has been accelerated.

Furthermore, improvements have been made to the execution time of aggregate functions that use NUMERIC values. Additionally, the database's memory consumption has been significantly reduced even for single database connections, allowing for better speed for connections with very few tables, but also for those with many tables. A new system view pg_stat_archiver now provides information about the archiving status of transaction logs, thus facilitating the monitoring of systems with WAL assurance.

Conclusions

This article provides just a brief overview of the new functions in PostgreSQL 9.4 and only touches the surface of many of the new functions. Anyone who is interested in a detailed description of all of the new features should read the detailed PostgreSQL documentation.

The new version underlines the claim by PostgreSQL that it is the most advanced free database system and is definitely worth a close look, especially by newcomers. PostgreSQL has already found a wide user base in critical business areas, and version 9.4 will advance this further.