The advantages of configuration management tools

Config Battle

The hype surrounding Docker has raised the awareness of many administrators regarding a category of programs that were almost unknown before now: fleet and configuration managers. Several representatives of this species are vying for the attention of users: Etcd [1], Consul [2], and ZooKeeper [3] are just a few.

What are these configuration managers actually about? What are their preferred fields of application, and why should administrators employ this innovation? In this article, I explain the ideas behind the tools and then let three candidates do battle.

Was Everything Better in the Past?

Administrators often reminisce about how managing IT environments used to be easier: Each host had a defined role that did not change over the course of the server's life. When people started looking into clusters, it was usually a matter of small, high-availability setups, such as Pacemaker and DRBD synchronizing data between hosts. However, administrators were fighting a problem that still exists today: The configuration of services needs to be identical on two hosts so that if one system fails the other can take over without any problems; therefore, it is essential to synchronize configuration files in some way. Home-made solutions based on Rsync were often used in these cases, and although they might not have been pretty, they served the purpose.

In recent years, however, IT setups have continued to grow and, especially, to become more dynamic. The self-made solutions that administrators used to keep the configuration files at the same level between hosts became more and more complex. Keeping the state of services consistent across multiple hosts also proved to be more and more difficult. Moreover, with scale-out setups, it is important to pay attention to the status of all instances. Databases provide a perfect example: A write operation in a MySQL database clustered with Galera involves events on multiple cluster hosts, which makes for a complex overall structure.

Each service that supports scaling out has to determine which of several versions of the data within the network is the current, valid data set. In the end, all components that are active in a cluster must agree on the state of the cluster and all its services.

The programs presented here aim to help and go beyond the scope of simple configuration management: They aim to be a reliable source in scale-out setups that can provide information about the state of the entire system. However, although it might sound simple, it turns out to be technically demanding in practice.

Puppet and Others

The growing complexity of IT environments called for centralized tools to manage configuration files. Automation tools like Puppet or Chef reduce the admin work to supplying a template and configuration parameters so that the right data ends up in /etc. Nevertheless, this approach only partially solves the problem, because the configuration of individual services is not nearly as static in scale-out setups as in conventional setups.

Cluster services can be scaled to any size by starting more and more instances as required. Starting Puppet or Chef to provide configuration data for each of these instances would be impractical.

Typical automation tools such as Puppet are therefore barely used inside cloud VMs. Clouds need completely different approaches to manage the configuration of services sensibly. The appropriate tools were barely known until the cloud hype surrounding Docker and OpenStack.

What Is Configuration?

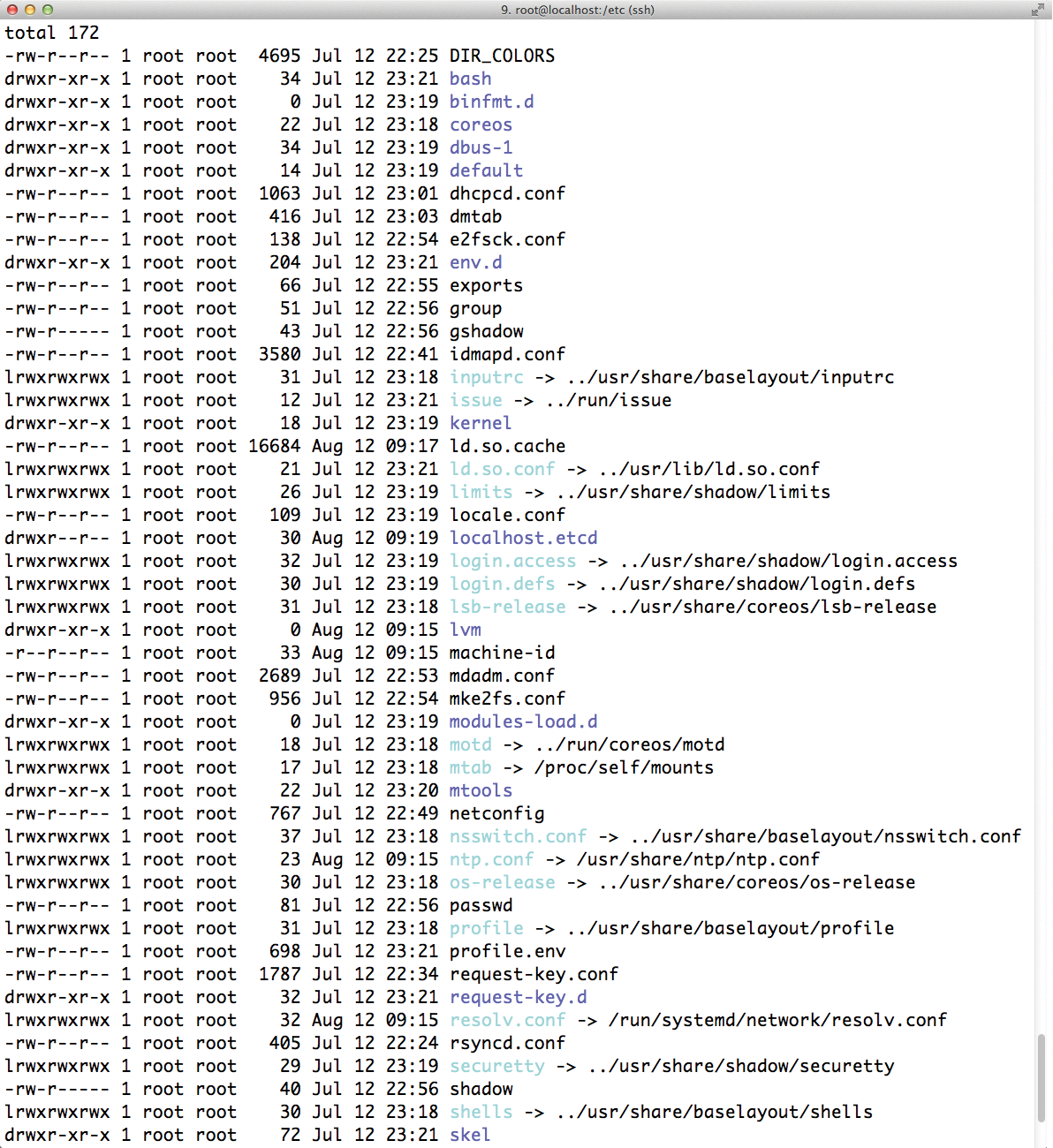

The lack of useful tools is strange, particularly because the problem seems rather simple. Historically, programs on Linux systems, as on its predecessor Unix, always assumed the relevant configuration was in files in the /etc directory. Cluster configuration files follow the same principle in many cases: Pairs of parameters and values obeying a fixed syntax reside in a text file. Therefore, configuration files are just rudimentary key-value stores that can only be managed – poorly – in text files across multiple machines.

However, more than enough mature solutions for key-value stores already exist in the form of databases. What would make more sense than transferring the database principle to the management of configuration files? This is where the door opens to configuration databases.
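To make the idea of a configuration file as a rudimentary key-value store more concrete, the following minimal sketch parses parameter-value pairs from a text block into a dictionary. The `key = value` syntax, the `#` comment convention, and the sample settings are assumptions made up for illustration; real services each define their own syntax.

```python
def parse_config(text):
    """Parse 'key = value' lines into a dict, skipping blanks and # comments."""
    settings = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"not a key-value pair: {raw_line!r}")
        settings[key.strip()] = value.strip()
    return settings


# Hypothetical configuration file content, invented for this example.
SAMPLE = """
# cluster settings
bind_address = 10.0.0.5
port = 3306
"""

config = parse_config(SAMPLE)
print(config)
```

Once the settings live in a structure like this, storing them in a shared database instead of a local file is a small conceptual step.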

Distributed Key-Value Stores

Basically all configuration databases dub themselves key-value stores. This applies, for example, to both Etcd and Consul; ZooKeeper also is a typical key-value store. However, if it were just a matter of maintaining a database to manage settings, an approach based on MySQL would probably be easier.

Virtually all subjects in the test aim to provide added value beyond key-value stores. Etcd and Consul promise particularly efficient tools for fleet management services. Consul, for example, comes with a built-in discovery service and thus quickly becomes a directory in scaled environments, wherein services register and deregister dynamically.

Ultimately, the promise is that these tools will bring an end to the typical static configuration files in /etc and substitute a dynamic cluster registry. The test candidates will have to prove they work in the test that follows.

Etcd from CoreOS

Etcd is essentially a by-product of CoreOS (i.e., of the micro-distribution that specializes in operating Docker containers). Like almost all CoreOS tools, Etcd is also based on the programming language Go. Its self-described characterization sounds unspectacular: "etcd is a distributed key value store that provides a reliable way to store data across a cluster of machines" [1].

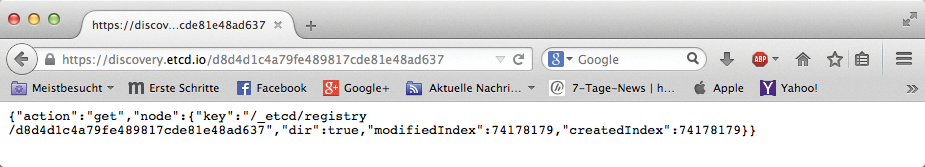

The Etcd front end is a simple design: an HTTP-based RESTful interface that can be operated using any standard web browser. If the administrator or a program connected to Etcd queries its values, they are returned in JSON format. The data structure in Etcd is hierarchical: A key may have different subkeys to which a value is then assigned. Multiple services can then use Etcd at the same time in a distributed setup without the various configuration entries getting in each other's way.
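As a rough illustration of the hierarchical structure and the JSON output, the sketch below flattens a directory-style response into plain key-value pairs. The response body is modeled on the layout of the Etcd v2 keys API, but the keys and values are invented, and no running Etcd instance is contacted.

```python
import json

# Illustrative response body in the style of the Etcd v2 keys API;
# all keys and values are made up for this example.
SAMPLE_RESPONSE = """
{"action": "get",
 "node": {"key": "/services", "dir": true, "nodes": [
   {"key": "/services/nginx", "dir": true, "nodes": [
     {"key": "/services/nginx/port", "value": "80"},
     {"key": "/services/nginx/worker_processes", "value": "4"}]},
   {"key": "/services/mysql", "dir": true, "nodes": [
     {"key": "/services/mysql/port", "value": "3306"}]}]}}
"""


def flatten(node):
    """Walk a directory node recursively and return {full_key: value}."""
    if not node.get("dir"):
        return {node["key"]: node.get("value")}
    flat = {}
    for child in node.get("nodes", []):
        flat.update(flatten(child))
    return flat


settings = flatten(json.loads(SAMPLE_RESPONSE)["node"])
for key in sorted(settings):
    print(key, "=", settings[key])
```

Because every service keeps its entries under its own subtree, Nginx and MySQL can both store a `port` key without colliding.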

Full Cluster Compatibility

Etcd is cluster-capable: If standalone instances of Etcd are running on the hosts in a setup, they talk to each other in the background using the Raft consensus algorithm. The principle is simple: All Etcd instances elect a master from among themselves that is the authority on all matters regarding the cluster. If the elected master fails, a new election takes place automatically, and another node takes over command. An Etcd cluster that is already running acts as a discovery service for new instances that join the cluster. Instances added later simply ask which Etcd instances already exist and connect to them.

Only when first bootstrapping the cluster do you need to specify which instance is the master (Figure 1). As long as at least one Etcd instance is running, other Etcds can join or leave without bootstrapping again.

Etcd's cluster capabilities also include a quorum: Etcd automatically notices when a cluster breaks into several parts and only continues to work in the partition that has the majority of all existing Etcd instances behind it. The only potential for improvement is in terms of geo-clustering: Etcd is not able to handle multi-data-center installations because, with two sites, it is not possible to decide which site has the decision-making powers after they have lost their connection to each other.
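The majority rule behind the quorum can be written down in a few lines. This is a minimal sketch of the arithmetic only, not of Etcd's implementation; the function names are chosen for this example.

```python
def has_quorum(reachable_members, cluster_size):
    """True if a partition holds a strict majority of all cluster members."""
    return reachable_members > cluster_size // 2


def tolerated_failures(cluster_size):
    """Number of members that may fail while a majority still remains."""
    return (cluster_size - 1) // 2


# A five-node cluster split 3/2: only the larger partition keeps working.
print(has_quorum(3, 5), has_quorum(2, 5))

# Four nodes spread evenly over two sites: after a split, neither side
# has a majority, which is the two-site dilemma described above.
print(has_quorum(2, 4))
```

The same arithmetic explains why clusters are usually built with an odd number of members: a fourth node tolerates no more failures than three nodes do.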

Bringing Old Programs On-board

Etcd also has a solution for traditional software that expects a fixed configuration file in /etc (Figure 2). You place an appropriate template on the target host, and a second service, confd, then comes into play: It fills the template with the matching key-value pairs from Etcd to generate, for example, an nginx.conf file and places it in the desired location. Restarting Nginx then applies the configuration. Impressively, the whole process also responds to configuration changes: If you change the Nginx configuration in Etcd, confd both regenerates the configuration file and restarts Nginx.
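The template mechanism can be approximated with a short sketch: fill a template with key-value pairs and restart the service only if the rendered file actually changed. This is an illustration of the principle, not of confd itself, which uses Go templates; the template text, keys, and function names here are hypothetical.

```python
import hashlib
from string import Template

# Hypothetical template; confd itself uses Go templates, not this syntax.
NGINX_TEMPLATE = Template(
    "worker_processes $worker_processes;\n"
    "events { worker_connections $worker_connections; }\n"
)


def render(values):
    """Fill the template with key-value pairs; raises KeyError if one is missing."""
    return NGINX_TEMPLATE.substitute(values)


def digest(text):
    """Return a SHA-256 hex digest of the rendered configuration."""
    return hashlib.sha256(text.encode()).hexdigest()


def needs_reload(old_text, new_text):
    """Compare digests so the service is restarted only after a real change."""
    return digest(old_text) != digest(new_text)


current = render({"worker_processes": "2", "worker_connections": "512"})
updated = render({"worker_processes": "4", "worker_connections": "512"})
print(updated)
print("reload needed:", needs_reload(current, updated))
```

In a real setup, the values would come from the key-value store, and a positive result would trigger writing the file and restarting Nginx.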

Somewhat Useful

Although it might also be possible to operate Etcd without additional components such as Confd, Etcd is strictly speaking intended to be a global configuration tool as part of CoreOS and best fits in this setup (Figure 3).

![A clear case: Etcd feels best when used as part of a Docker installation [4].](images/F3_etcdundco_3.png)

With the Armada tool, you can start Docker containers at will from CoreOS servers and running Etcd instances. In return, they read their necessary configuration from the Etcd instances on the host. The cluster part of Etcd ensures that all CoreOS instances are always familiar with the whole configuration. Two separate services called Systemd and Fleetd take control of the cluster on the basis of data from Etcd. It could be possible, but also complex, to use Etcd meaningfully without this framework.

One for All: Consul

Although Etcd and Consul have differences, they are also similar in many respects. Consul, by HashiCorp, takes a significantly more comprehensive approach, leaving no doubt that it wants to serve all areas of application.

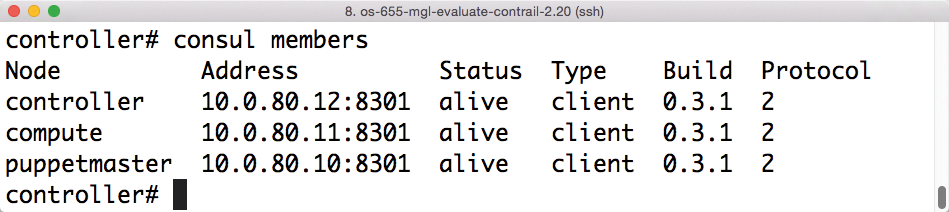

Consul also implements a key-value store for configuration data. Just as in Etcd, it has RESTful interfaces and provides JSON outputs. However, the Consul API yields significantly more than Etcd: For example, a catalog function records which nodes in the network can register services such as MySQL. Other nodes then query the Consul API to receive a list of all nodes in the cluster network that are operating the respective service (Figure 4).

Other features include standalone API modules for health checks, ACLs, and user events. Consul refers to these as endpoints, each of which is almost a service category of its own. Simply replacing configuration files in /etc is no longer the main concern in Consul. Instead, Consul aims to be a comprehensive service registry in which configuration management is a by-product.

Comprehensive Service Discovery

Consul follows a server-agent architecture comprising the cluster of Consul nodes and the computers running applications (i.e., MySQL, Nginx, or any other tool) on which the associated Consul agent works. It registers the host services in the cluster so that they are listed in the service database in Consul.

If another service wants to use RabbitMQ, for example, it asks for the corresponding host for RabbitMQ in Consul and receives the appropriate answer. Consul also configures its own DNS entries so that legacy software is also able to cope with this system: The client, for example, always connects to the host _rabbitmq._amqp.service.consul, where Consul defines the address for a RabbitMQ host.

It's not easy to fool Consul: An agent's claim that MySQL is running on the local host does not have to be taken at face value, because you can define your own health checks in Consul. Consul only passes the MySQL host on to requesting clients if the MySQL server also responds to incoming monitoring requests. The check criteria can be chosen freely. If, for example, the load on the target host is too high, Consul notices this and diverts traffic to other hosts. Basically, Consul behaves like a well-configured load balancer that can also store the configuration of services on request.
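The interplay of registration and health checks can be sketched with a toy in-memory registry. This is not Consul's API; the class, the host names, and the load threshold are assumptions made up to show the principle that only hosts passing their check are handed out.

```python
class ServiceRegistry:
    """Toy in-memory registry: services register, clients see healthy hosts only."""

    def __init__(self):
        self._entries = {}  # service name -> {host: health check callable}

    def register(self, service, host, check):
        self._entries.setdefault(service, {})[host] = check

    def deregister(self, service, host):
        self._entries.get(service, {}).pop(host, None)

    def healthy_hosts(self, service):
        """Run each host's check and return the hosts that pass, sorted."""
        checks = self._entries.get(service, {})
        return sorted(host for host, check in checks.items() if check())


# Hypothetical hosts and checks; a real check might probe a port or the load.
load = {"db1": 0.4, "db2": 7.5}
registry = ServiceRegistry()
registry.register("mysql", "db1", lambda: load["db1"] < 2.0)
registry.register("mysql", "db2", lambda: load["db2"] < 2.0)
print(registry.healthy_hosts("mysql"))
```

Because the checks run at query time in this sketch, a host reappears in the result as soon as its load drops below the threshold again.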

Clustering Capabilities!

Like Etcd, Consul offers an inherent cluster mode. Unlike Etcd, Consul is equipped out of the box with support for real multi-data-center installations, although it also uses Raft to produce a consensus in the cluster.

Consul is familiar with three consistency models. In addition to the strictly consistent model, there is the default model, a compromise that enforces consistency in normal operation but accepts the rare circumstances in which a client reads information from the database that is no longer current.

The "stale" model allows Consul instances to answer requests themselves, even if they belong to a cluster partition without a majority in the quorum (Figure 5). Administrators can basically choose what they prefer: functionless Consul instances (because they have no majority in the quorum) or clients that might receive outdated data.
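The trade-off between the three models can be expressed as a small decision function. This is a deliberately simplified model under stated assumptions, not Consul's implementation: it assumes that a strictly consistent read needs a leader that has confirmed its majority, that the default model trusts the leader without that confirmation, and that the stale model lets any server answer from its local copy.

```python
def can_answer_read(mode, is_leader, has_quorum):
    """Decide whether a server may answer a read from its own state.

    Simplified model: 'consistent' needs a leader that confirms its majority,
    'default' trusts the leader without that extra confirmation, and
    'stale' lets any server answer from its local copy.
    """
    if mode == "stale":
        return True
    if mode == "default":
        return is_leader
    if mode == "consistent":
        return is_leader and has_quorum
    raise ValueError(f"unknown mode: {mode}")


# A former leader cut off in a minority partition (hypothetical scenario):
for mode in ("consistent", "default", "stale"):
    print(mode, can_answer_read(mode, is_leader=True, has_quorum=False))
```

In this model, the cut-off former leader refuses strictly consistent reads but may still serve default and stale reads, which is exactly where outdated data can slip through.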

In multi-data-center installations, each data center has its own Consul cluster and all clusters are loosely connected.

All cluster information is generally available to each Consul instance in each data center, but the failure of one data center never affects the Consul clusters at other locations. Because all Consul agents in a data center only talk to the Consul servers there, requests concerning other data centers are forwarded by those servers.

Like Etcd, Consul also has bridges to the old world. Consul Template, introduced at the end of 2014, provides an option to generate template-based configuration files for services. Consul Template is a standalone service similar to confd in Etcd.

ZooKeeper

ZooKeeper is sort of the "grandpa" among the subjects. The project, which is under the Apache umbrella, has the longest history of all the tested tools. Not surprisingly, various features in Etcd and Consul are clearly tailored to offer functionality that appears to be missing in ZooKeeper. ZooKeeper certainly has a powerful circle of supporters, though: The project, which once belonged to Hadoop, is used by large companies such as Rackspace and eBay. The by now familiar division into several components is also found in ZooKeeper. First, ZooKeeper is equipped with a service for holding configuration data. Although it also offers a RESTful interface, this interface is currently marked as experimental. The developers prefer that users access ZooKeeper with the well-tested Java or C clients.

The Cluster Consensus

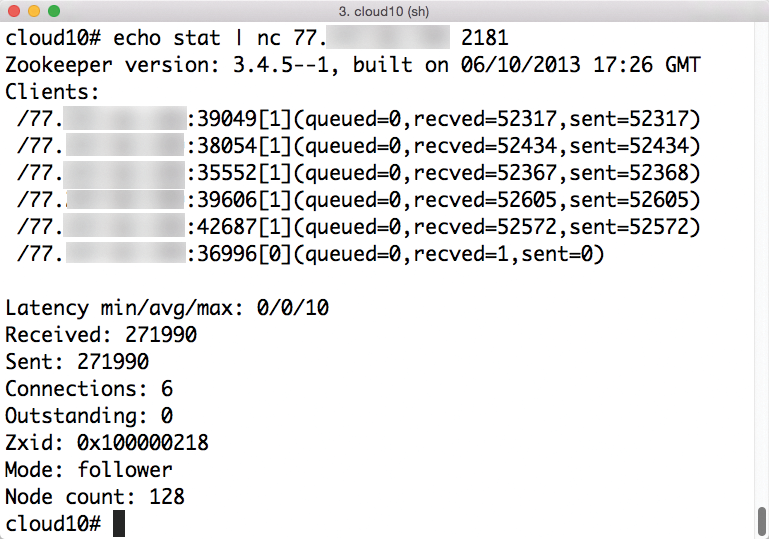

ZooKeeper of course needs an inherent cluster mode to manage the services within a cloud and on the hosts (Figure 6), and this mode is part of its feature set. Unlike Etcd or Consul, ZooKeeper does not rely on Raft but on its own Paxos-like consensus protocol, Zab (ZooKeeper Atomic Broadcast). However, this is just a technical detail from an administrator's perspective.

Crucially, ZooKeeper guarantees programs a consistent cluster state and implements a quorum. This means that if a client connects to a ZooKeeper instance, the client can be sure to always receive the latest data recognized as valid by the cluster. The cluster partitions refuse to work without a quorum majority if the cluster temporarily falls apart.

As with Etcd, a framework for service discovery is missing in ZooKeeper. If you want to use the software accordingly, you need to take care yourself that available services log on and off with an entry in ZooKeeper.

ZooKeeper therefore cannot be used as a service registry out of the box. Netflix had such bad experiences that it wrote a standalone discovery service called Eureka [5], which does not require ZooKeeper. Ultimately, Eureka may be considered comparable to Etcd, but without being squeezed as tightly into the cloud corset.
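What "taking care yourself" of logging services on and off might look like can be sketched with a context manager that creates an entry while a service runs and removes it afterward. A plain dictionary stands in for the ZooKeeper tree here; the path, address, and function name are hypothetical, and a real setup would go through one of the ZooKeeper client libraries.

```python
from contextlib import contextmanager

# Stand-in for a ZooKeeper tree; a real setup would use a client library.
registry = {}


@contextmanager
def registered(path, address):
    """Create an entry while the service runs and remove it afterwards."""
    registry[path] = address
    try:
        yield
    finally:
        # Runs on normal exit and on exceptions, so no stale entry remains.
        registry.pop(path, None)


# Hypothetical path and address.
with registered("/services/mysql/db1", "10.0.0.5:3306"):
    print("while running:", registry)
print("after shutdown:", registry)
```

The sketch covers orderly shutdowns and exceptions only; a service that is killed outright would leave its entry behind unless the store itself ties entries to the client's connection.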

Consul clearly defeats ZooKeeper when it comes to functions. In addition to the missing registry function, a mechanism for generating finished files from the stored configuration parameters is also missing in ZooKeeper: you need to make them yourself.

Multi-data-center setups are also more difficult because of the Paxos-like consensus protocol used. ZooKeeper comes with so-called observers that work much like the inter-data-center communication in Consul; however, the number of setups in the real world that actually use observers productively is likely to be extremely low.

Conclusions

Programs like Etcd and Consul make administrators' lives easier when used in the tools' traditional territory. For example, if all setup components are equipped with support for the respective tool, you can forget about synchronization of files in /etc. However – and this is the drawback – it is then very likely just a trendy cloud application designed for use as part of CoreOS or one of the many other micro-distributions. Only when all programs in the installation can communicate directly with the respective configuration manager does the manager reach its full potential.

The tools are significantly less attractive when it comes to conventional programs, with which they have no direct connection. This is where administrators can create a configuration file in /etc themselves using the template function from the respective tool, if available. Such a setup, however, hardly brings any advantages compared with classical automation by Puppet and the like. Old-fashioned programs do not benefit at all from the inherent clustering ability of individual tools.

Anyone who has dealt with Docker or one of the micro-distributions for cloud use will probably enjoy using Etcd, Consul, or ZooKeeper. If you only need central maintenance of archaic configuration files, though, you would be better off using Puppet, Chef, or one of the many alternatives available on the market.

This situation will not necessarily remain static. Because the tools reviewed here offer RESTful APIs, it is very easy for authors of other programs to adapt their software to them. For example, if it were possible in the future to persuade the ever-popular Dovecot IMAP server to work with one of these tools, it would be a very attractive combination.