Successful protocol analysis in modern network structures

Hunting the Invisible

Virtualization projects tend to focus on simplifying processes, which can mean that validation and monitoring of critical parameters receive too little attention. Virtualization also has a drawback: Because data visibility is lost, the analysis tools you used to rely on no longer work. In this article, I show how to establish meaningful monitoring and analysis functions on the network, even when virtualization is involved.

A legacy protocol analysis tool is a standalone device or a piece of software on a PC (e.g., the open source utility Wireshark) that identifies problems, errors, and events relating to the network. These tools also help determine the reasons for poor network performance by visualizing protocol information and the corresponding network activities.

Measuring Methods for Networks

Unfortunately, network and data center virtualization creates blind spots in your server infrastructure, as well as invisible networks. Because a major part of the traffic is routed via cloud infrastructure (in the form of virtual tunnel endpoints), this traffic does not even touch the physical networks in many cases. This means that administrators lose visibility into their data and, consequently, control over communication flows. For this reason, the data on the computer systems and networks need to be made visible again – and various tools are available for doing this.

SPANs

On switched networks, the data required for analysis is not transferred to every port. The switch only forwards broadcasts and packets with unknown receiver addresses to all ports. If the switch has the MAC address of the receiver in its switch table, the packets in question are only sent to the port to which the target device is connected.

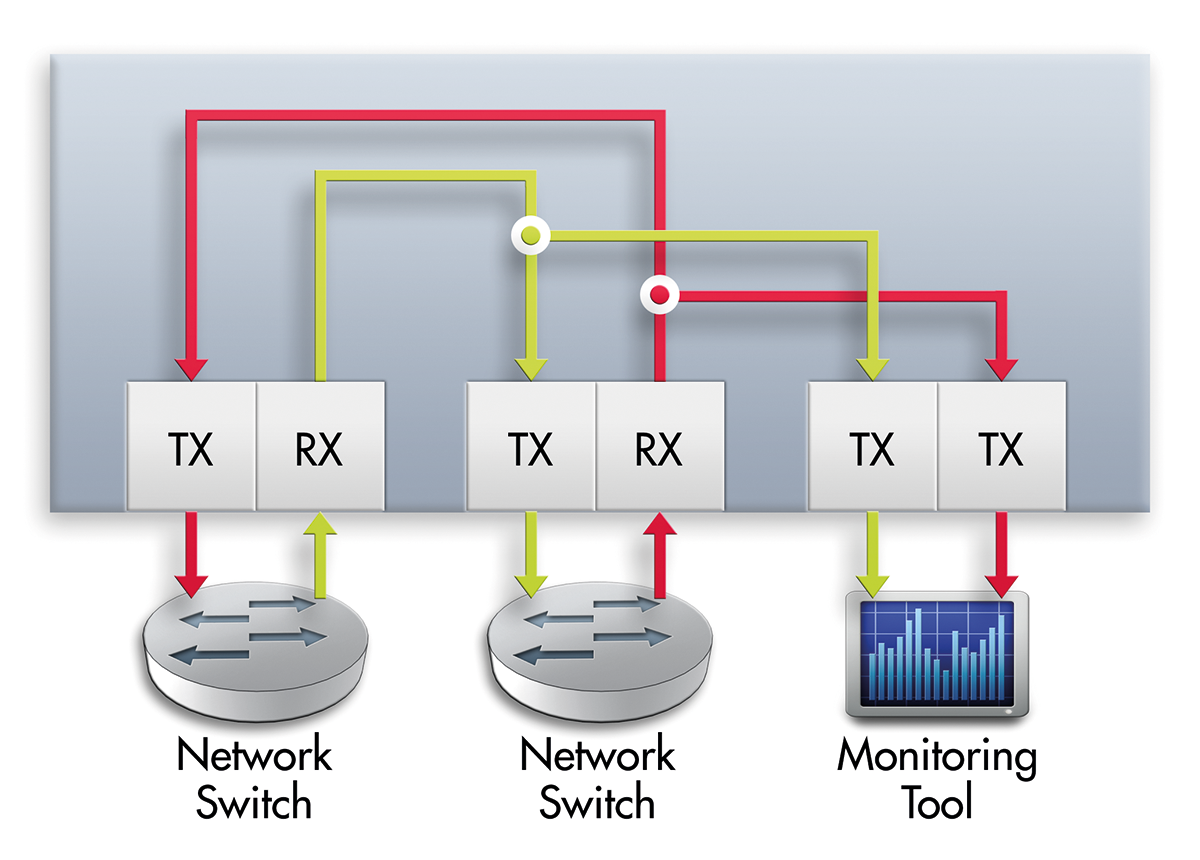

This necessitates new troubleshooting strategies. For this reason, most switches support port mirroring: The link to be investigated is mirrored on another port of the switch, to which the analysis device is connected. Some manufacturers even let you output the traffic from multiple switch ports to a single mirror port, also known as the SPAN port (Switch Port ANalyzer) or maintenance port (Figure 1).
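The forwarding behavior described above, and the effect of a mirror port, can be illustrated with a toy model. This is a minimal sketch, not how any particular switch is implemented: The class name, the port numbers, and the shortened MAC addresses are made up for illustration, and the model assumes that the mirror port is kept out of normal forwarding.

```python
BROADCAST = "ff:ff:ff:ff:ff:ff"


class LearningSwitch:
    """Toy Layer 2 switch with an optional mirror (SPAN) port."""

    def __init__(self, ports, mirror_sources=(), mirror_port=None):
        self.ports = set(ports)                   # regular ports only
        self.mac_table = {}                       # MAC address -> port
        self.mirror_sources = set(mirror_sources) # ports being mirrored
        self.mirror_port = mirror_port            # where the analyzer sits

    def forward(self, in_port, src_mac, dst_mac):
        """Return the set of ports on which the frame leaves the switch."""
        self.mac_table[src_mac] = in_port         # learn where the sender lives
        if dst_mac == BROADCAST or dst_mac not in self.mac_table:
            out = self.ports - {in_port}          # flood to all other ports
        else:
            out = {self.mac_table[dst_mac]} - {in_port}
        # Copy the frame to the mirror port if it enters or leaves
        # through one of the mirrored source ports.
        if self.mirror_port is not None and (
            in_port in self.mirror_sources or out & self.mirror_sources
        ):
            out = out | {self.mirror_port}
        return out
```

With an analyzer on regular port 3 of `LearningSwitch(ports=[1, 2, 3])`, only the first frame to an unknown address reaches it; once both stations are learned, their unicast traffic bypasses port 3. With `mirror_sources=[1], mirror_port=3`, every frame entering or leaving port 1 is copied to the analyzer.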

You should only forward data for analysis to the mirror port if this port can handle the combined data volume of the mirrored ports; otherwise, packets will be dropped. To be on the safe side, the mirror port should have at least the same bandwidth as the source port. Mirroring also affects switch performance, because the switch needs to duplicate every mirrored packet. The mirrored port can suffer performance hits, too, which means that troubleshooting in this way can cause more problems than it solves. Moreover, port mirroring distorts the results because the switch automatically drops defective packets. Thus, in practice, SPAN ports are used only rarely, and then as supplementary measuring points for ad hoc analysis.
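Whether a mirror port can cope with the mirrored traffic is simple arithmetic. The following sketch assumes you know the average receive and transmit rates of each source port; the function names and the example figures are hypothetical. Note that a full-duplex source port can deliver traffic in both directions at once, whereas the mirror port only transmits.

```python
def mirror_load_mbps(source_ports):
    """Sum the Rx and Tx rates (in Mbps) of all mirrored source ports.

    source_ports is a list of (rx_mbps, tx_mbps) tuples, one per port.
    """
    return sum(rx + tx for rx, tx in source_ports)


def is_oversubscribed(source_ports, mirror_capacity_mbps):
    """True if the mirrored traffic exceeds what the mirror port can send."""
    return mirror_load_mbps(source_ports) > mirror_capacity_mbps


# One busy 1Gbps full-duplex link mirrored to a 1Gbps mirror port:
busy_link = [(700, 600)]
print(is_oversubscribed(busy_link, mirror_capacity_mbps=1000))
```

In this made-up case, 700Mbps plus 600Mbps adds up to 1,300Mbps, so a 1Gbps mirror port would have to drop packets even though it has the same nominal bandwidth as the source port.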

TAPs

To ensure precise acquisition of the measurement data, Test Access Points (TAPs, also known as link splitters) are now used for testing. These devices are connected directly to the network connection to be monitored. TAPs have a completely passive mode of operation; they do not generate any errors and keep working in case of a power outage. A TAP (high-ohm interface connection) duplicates all packets and breaks down a full-duplex link into two half-duplex data streams, one with the Rx traffic and one with the Tx traffic. For this reason, the network analysis device needs two network interface cards. The analysis software then merges the two streams to create a single trace.
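Merging the two half-duplex streams into a single trace boils down to interleaving packets by their capture timestamps. The following is a minimal sketch under the assumption that each stream is already in chronological order and that both capture interfaces share a synchronized clock; the `Packet` type and its fields are invented for this example.

```python
import heapq
from typing import Iterable, Iterator, NamedTuple


class Packet(NamedTuple):
    timestamp: float   # capture time in seconds
    direction: str     # "rx" or "tx"
    data: bytes        # raw frame contents


def merge_streams(rx: Iterable[Packet], tx: Iterable[Packet]) -> Iterator[Packet]:
    """Interleave two time-ordered streams into one time-ordered trace."""
    return heapq.merge(rx, tx, key=lambda packet: packet.timestamp)


rx_stream = [Packet(0.001, "rx", b"request"), Packet(0.004, "rx", b"ack")]
tx_stream = [Packet(0.002, "tx", b"reply"), Packet(0.005, "tx", b"fin")]

for packet in merge_streams(rx_stream, tx_stream):
    print(f"{packet.timestamp:.3f} {packet.direction} {packet.data!r}")
```

Because `heapq.merge` works lazily, the same approach also suits long captures that do not fit into memory. If the clocks of the two interfaces drift apart, the merged order is no longer reliable, which is why precise timestamping matters for this kind of setup.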

TAPs are connected in series to the network medium (e.g., copper, fiber). This ensures that all packets, including defective ones, are fed to the analysis or monitoring system. On high-speed connections in particular (e.g., 10Gbps, 40Gbps, or higher), the cost of traffic analysis can skyrocket. Complex filtering functions therefore forward only the traffic flows that are genuinely relevant, which substantially improves the performance of the analysis tools. If an error occurs on the TAP, a relay bridges the TAP and ensures that the connection to be analyzed stays up. The main route is either not interrupted at all, or any brief interruption is compensated for on the transport layer.

Invisible Data in Virtual Infrastructures

In virtualized environments, both TAPs and SPANs fail. Server virtualization entails seamless migration of virtual machines from one physical host to another. The fault tolerance integrated into VMware's vSphere vMotion technology minimizes downtime, but this agility also increases complexity. After all, to be able to monitor virtualized system environments, you need a seamless representation of all system changes. The monitoring and analysis solutions required for this work must be able to access virtualized resources and data streams.

The easiest way of connecting virtual machines (VMs) to network resources is to use a virtual Ethernet bridge (VEB; also known as a vSwitch). A vSwitch is thus a software application that enables communication between virtual machines. By default, any VM can use the virtual switch to communicate directly with any other VM on the same physical machine. This means that the data streams between VMs on the same physical computer are no longer transmitted across the connected physical network, which in turn means that these data streams are not accessible to network monitoring.

To be able to tap into data streams between virtual machines, the inter-VM traffic flows need to be forwarded to the monitoring and analysis application. Virtualization solution vendors provide guest access to a virtual network adapter for this purpose. This kind of access ensures that all data packets that occur at the switch are forwarded, including traffic belonging to other users. As a result, the sniffed traffic streams can end up with recipients who should not see them.

Inter-VM traffic flows can also be routed out via a native VMware vSphere 5 virtual machine. This removes the need to install additional agents or make changes to the hypervisor, and it lets system administrators see the data traffic between virtualized applications at the packet level, which is not normally possible in a virtualized environment. The traffic streams between virtual machines on the same ESXi host can be selectively filtered and are then forwarded to designated recipients (analysis or monitoring tools) on the physical network.

Analysis and Monitoring in Distributed Environments

In cases where distributed server resources are virtualized, checking the transmitted data is typically impossible because of the enormous complexity. With a GigaVUE-VM fabric node directly integrated into the VMware vCenter infrastructure, the data required for analysis and monitoring can be tapped while the agility functions (VMware High Availability and Distributed Resource Scheduler) remain in use.

Cisco also offers the option of forwarding virtual traffic flows to an external switch. In this case, the packets in question are marked with a special VN tag. The most important components of the tag are the interface ID of the virtual data source and the interface ID of the target interface (VIF) for unique identification of multiple virtual interfaces on a single physical port.

The challenge lies in transmitting the additionally tagged packets without making any hardware or software changes and without additional performance overhead. Adaptive packet filtering is required here to filter and forward the incoming traffic streams on the basis of the VN source tag, the target VIF_IDs, the payload encapsulated in the packet, or a combination of these criteria. Because the analysis and monitoring tools do not understand the additional VN tag headers, the packet filter needs to strip the VN tag header before forwarding the packets to the analysis system in question.
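What filtering on the VN tag and stripping it before delivery might look like can be sketched as follows. Please treat the details as assumptions: The article does not specify the tag format, so the EtherType (0x8926), the six-byte tag length, and the bit positions of the source and destination VIF IDs follow the layout commonly used by packet dissectors and should be verified against your platform's documentation. All function names are hypothetical.

```python
import struct

# Assumptions, not taken from the article: EtherType and bit layout of the
# VN tag as commonly implemented in packet dissectors.
VNTAG_ETHERTYPE = 0x8926
VNTAG_LEN = 6          # 2-byte EtherType + 4 bytes of tag fields
MAC_HEADER_LEN = 12    # destination MAC + source MAC


def parse_vntag(frame: bytes):
    """Return (dst_vif, src_vif) for a VN-tagged frame, or None if untagged."""
    if len(frame) < MAC_HEADER_LEN + VNTAG_LEN:
        return None
    ethertype, fields = struct.unpack_from("!HI", frame, MAC_HEADER_LEN)
    if ethertype != VNTAG_ETHERTYPE:
        return None
    dst_vif = (fields >> 16) & 0x3FFF   # assumed 14-bit destination VIF ID
    src_vif = fields & 0x0FFF           # assumed 12-bit source VIF ID
    return dst_vif, src_vif


def strip_vntag(frame: bytes) -> bytes:
    """Remove the VN tag so that ordinary analysis tools can read the frame."""
    if parse_vntag(frame) is None:
        return frame
    return frame[:MAC_HEADER_LEN] + frame[MAC_HEADER_LEN + VNTAG_LEN:]


def filter_and_strip(frames, wanted_src_vifs):
    """Yield untagged copies of frames whose source VIF ID is wanted."""
    for frame in frames:
        tag = parse_vntag(frame)
        if tag is not None and tag[1] in wanted_src_vifs:
            yield strip_vntag(frame)
```

The sketch filters on the source VIF ID only; filtering on the target VIF ID or on the encapsulated payload would follow the same pattern with a different condition in `filter_and_strip`.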

Network Analysis in the Cloud

The limits of today's physical networks cause problems in realizing distributed, virtual computer landscapes (clouds). Generating a virtual machine takes just a couple of minutes, whereas configuring the required network and security services can take several days. Highly available, virtualized server environments are typically implemented as flat Layer 2 networks.

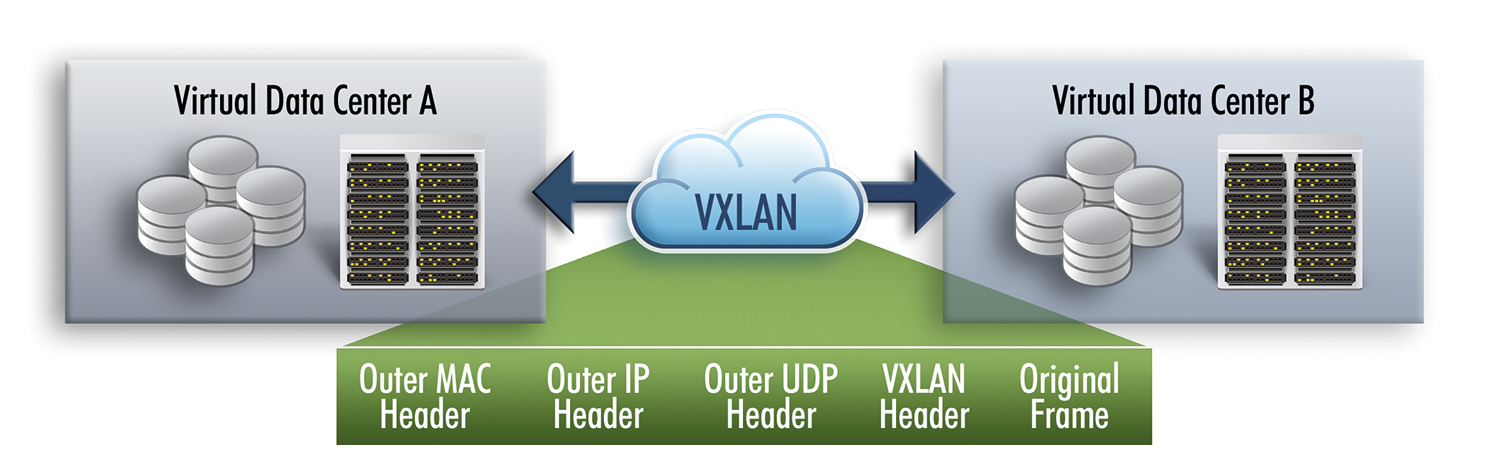

Virtual Extensible LANs (VXLANs) establish overlay networks on top of existing Layer 3 infrastructures. VXLAN technology is all about assigning IP addresses across a larger network and keeping those addresses when a virtual machine changes location. From a technical point of view, a VXLAN creates logical Layer 2 networks, which are then encapsulated in standard Layer 3 packets.

This approach helps extend logical Layer 2 networks beyond their physical borders (Figure 2). To distinguish between the individual networks, a segment ID is added to each packet. In cloud environments, tunnels of this kind can also be generated and terminated within hypervisors, in other words, directly on the virtualization hosts. It makes no difference whether the physical host systems reside in a single data center or are distributed across multiple locations all over the world. The logical overlay network is thus independent of the underlying physical network infrastructure.

With the help of this method, a large number of isolated Layer 2 VXLAN networks can be mapped onto a single Layer 3 infrastructure. Additionally, VXLANs make it possible to place virtual machines on the same virtual Layer 2 network, although they reside on different Layer 3 networks. To this end, VXLANs add a 24-bit segment ID to each packet, which allows about 16 million (2^24) isolated Layer 2 VXLAN networks on one legacy Layer 3 infrastructure. Virtual machines installed on the same logical network can then communicate with one another directly via Layer 3 structures.
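How the 24-bit segment ID travels with each packet can be sketched in a few lines of Python. The example assumes the header layout from RFC 7348, which the text above does not spell out: an 8-byte VXLAN header with a flags byte, reserved bits, and the 24-bit ID, carried as a UDP payload on port 4789. The function names are made up for illustration.

```python
import struct

VXLAN_UDP_PORT = 4789        # IANA-assigned port for VXLAN (RFC 7348)
VXLAN_HEADER_LEN = 8
VNI_VALID_FLAG = 0x08        # "I" flag: the segment ID field is valid
MAX_SEGMENTS = 2 ** 24       # number of distinct 24-bit segment IDs


def build_vxlan(segment_id: int, inner_frame: bytes) -> bytes:
    """Prepend a VXLAN header to an inner Layer 2 frame."""
    if not 0 <= segment_id < MAX_SEGMENTS:
        raise ValueError("segment ID must fit into 24 bits")
    # First word: flags byte + 24 reserved bits.
    # Second word: 24-bit segment ID + 8 reserved bits.
    header = struct.pack("!II", VNI_VALID_FLAG << 24, segment_id << 8)
    return header + inner_frame


def parse_vxlan(udp_payload: bytes):
    """Return (segment_id, inner_frame) from a VXLAN UDP payload."""
    if len(udp_payload) < VXLAN_HEADER_LEN:
        raise ValueError("payload too short for a VXLAN header")
    flags_word, id_word = struct.unpack_from("!II", udp_payload, 0)
    if not (flags_word >> 24) & VNI_VALID_FLAG:
        raise ValueError("segment ID flag not set")
    return id_word >> 8, udp_payload[VXLAN_HEADER_LEN:]


packet = build_vxlan(5000, b"inner Ethernet frame")
segment_id, inner = parse_vxlan(packet)
print(segment_id, inner, MAX_SEGMENTS)
```

A monitoring tool that wants to look inside the tunnel has to perform the equivalent of `parse_vxlan` on every packet arriving on the VXLAN UDP port before it can apply its usual Layer 2 analysis to the inner frame.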

Because the entire Layer 2 data traffic is hidden in tunnels with this solution, monitoring and data analysis become more difficult. A visibility structure integrated into the network can help make dynamic data connections visible in distributed virtual machine structures and VXLAN overlay networks. The data streams to be monitored are output to the analysis and monitoring components in a targeted way.

Conclusions

Virtual networks and server structures require additional mechanisms to ensure visibility of the data streams. Regardless of whether the virtual machines are implemented on one physical host or distributed across multiple physical hosts, conventional tools can no longer capture the data streams for monitoring, analysis, or control. To ensure successful monitoring, it is important to correlate events at the physical and logical level. This task can be handled by a visibility structure that, at the same time, gives the IT department access to critical information.