OpenStack Sahara brings Hadoop as a Service

Computing Machine

Scalable cloud environments appear tailor-made for the Big Data application Hadoop, putting it squarely in the realm of cloud computing. Hadoop also has a very potent algorithm at its side: Google's MapReduce [1]. Moreover, Doug Cutting, the developer of Apache Lucene, is also the creator of Hadoop, so the project is not lacking in bona fides.

Hadoop, however, is a very complex structure composed of multiple services and various extensions. Much functionality means high complexity: You have to take many steps between planning a Hadoop installation and having a usable one. A better, less complex approach is a well-prepared OpenStack service: The OpenStack component Sahara [2] offers Hadoop as a Service.

The promise is that administrators can quickly click together a complete, ready-to-use Hadoop environment. Several questions arise: Will you see any benefits from Hadoop if you have not looked thoroughly into the solution in advance? Does Sahara work? Is the Hadoop installation that Sahara produces usable? I tested Sahara to find out.

Hadoop

The heart of Hadoop comprises two parts:

- The Hadoop Distributed File System (HDFS), a scalable filesystem characterized by its inherent high availability. HDFS fills a role similar to that of distributed storage solutions such as Ceph, which might one day become a replacement for HDFS.

- The MapReduce algorithm from Google. Map and Reduce are two functions within Hadoop that let you fish in the Big Data pond to find exactly the data needed.
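To make the Map and Reduce idea more concrete, here is a minimal single-process sketch of the classic word-count example. It only mimics the three phases (map, shuffle, reduce) in memory; a real Hadoop job distributes these phases across many nodes, and the function names used here are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Map: emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: group all emitted values by their key."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reduce: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    mapped = (pair for line in lines for pair in map_phase(line))
    return dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())


print(word_count(["big data big cluster", "data lake"]))
# {'big': 2, 'data': 2, 'cluster': 1, 'lake': 1}
```

Because each map call only sees one line and each reduce call only sees one key, both phases can run in parallel on different machines; only the shuffle step needs to move data between them.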

Several components that fall into the "nice to have" category build on these two core components:

- HBase, a DBMS that runs on top of HDFS. It serves up data from a Hadoop cluster to the outside world.

- Hive, a data warehouse. With Hive, the data stored in Hadoop can be not only searched but also categorized using an SQL-like syntax.

This list is certainly not complete; Hadoop has both official and unofficial extensions for almost every imaginable task.

Sahara

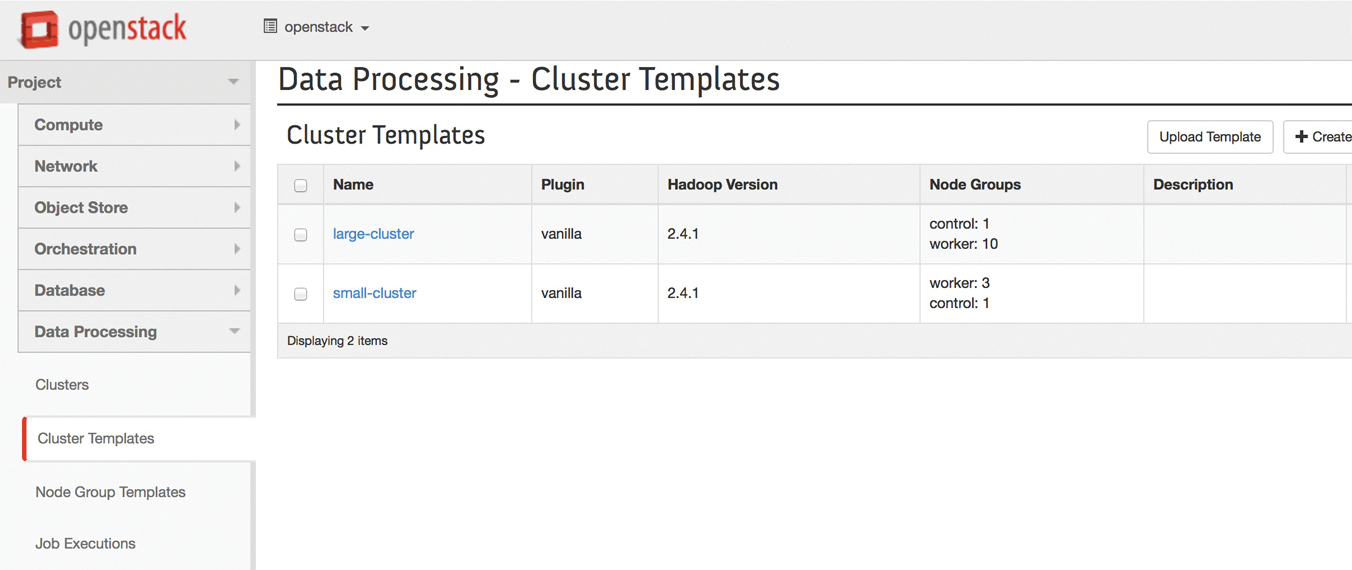

In theory, once you have specified the kind of Hadoop cluster you have in mind, Sahara gets on with the work and ultimately delivers a complete, ready-to-use cluster to the user. Like almost all other OpenStack services, Sahara comes in the form of several components (Figure 1).

![Sahara is a complex structure of several components; this graphic shows the most important ones [3]. (OpenStack.org)](images/sahara-1.png)

The core project consists of an authentication component, a provisioning engine, its own registry for operating system images, a job scheduler for elastic data processing (EDP), and the plugins used to create different Hadoop flavors. These are joined by a Sahara command-line client and an extension to the OpenStack dashboard, Horizon, that allows administrators to use Sahara's services via the web interface (Figure 2). In keeping with the OpenStack philosophy, a RESTful API is also on board.
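Because Sahara exposes a RESTful API, any HTTP client can talk to it. The following sketch uses only Python's standard library to build (but not send) a request that lists clusters. The `/v1.1/<project_id>/clusters` path layout and the port 8386 in the example endpoint are assumptions based on the Sahara v1.1 API; take the actual endpoint from your own Keystone service catalog. The token and project ID are placeholders.

```python
import urllib.request


def build_list_clusters_request(endpoint: str, project_id: str,
                                token: str) -> urllib.request.Request:
    """Build (but do not send) a GET request for Sahara's cluster list.

    Assumption: the v1.1 layout <endpoint>/v1.1/<project_id>/clusters.
    """
    url = f"{endpoint.rstrip('/')}/v1.1/{project_id}/clusters"
    return urllib.request.Request(
        url,
        method="GET",
        headers={
            "X-Auth-Token": token,          # token previously issued by Keystone
            "Accept": "application/json",   # ask for a JSON response
        },
    )


req = build_list_clusters_request("http://controller:8386/", "demo-project", "TOKEN")
print(req.full_url)  # http://controller:8386/v1.1/demo-project/clusters
# Actually sending it, via urllib.request.urlopen(req), requires a live cloud.
```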

Naming Conventions

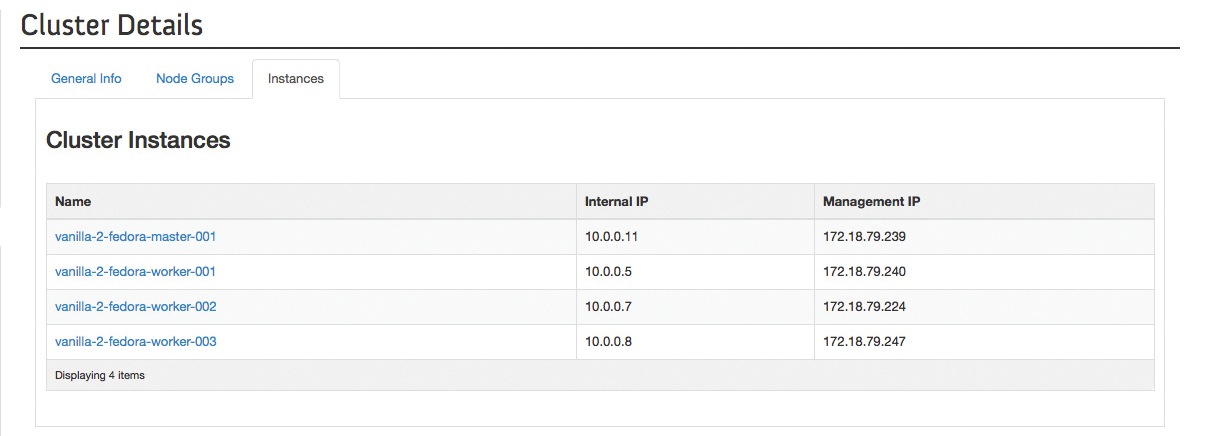

In typical OpenStack style, Sahara has a plethora of terms that either do not exist or have a completely different meaning outside the context of Sahara. The term "cluster," for example, is of central importance: A cluster in Sahara comprises all the virtual machines that belong to a Hadoop installation, including the master as well as the workers. Sahara divides Hadoop installations into nodes on which specific services run. The master runs the Hadoop name node, which acts as a metadata server. The core workers store data and run a task tracker, which takes commands from the master node. Simple workers only process data, which they receive from the core workers.

A central component of Hadoop is HDFS, designed for use in high-performance computing. The data is distributed across the installation's existing nodes (the core workers). However, because one instance in the cluster must always know which data resides where, the master runs the name node, which thus effectively acts as the cluster-wide server for HDFS metadata. Sahara therefore no longer thinks in terms of individual VMs but in terms of the cluster as a whole; after all, it is a freely scalable computing cluster.
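The name node's role as the cluster-wide metadata server can be illustrated with a toy model: Files are split into fixed-size blocks, each block is stored on several data nodes, and only the name node knows the mapping. This sketch assumes simple round-robin placement, a replication factor of 3, and a tiny block size; real HDFS uses rack-aware placement and much larger blocks, and the class name is hypothetical.

```python
from dataclasses import dataclass, field
from itertools import cycle
from typing import Dict, List, Tuple


@dataclass
class ToyNameNode:
    """Holds only metadata: which blocks form a file and where they live."""
    data_nodes: List[str]
    block_size: int = 4    # bytes per block, tiny for illustration
    replication: int = 3   # copies kept of each block
    block_map: Dict[Tuple[str, int], List[str]] = field(default_factory=dict)

    def add_file(self, path: str, size: int) -> int:
        """Register a file, place its replicas round-robin, return block count."""
        if self.replication > len(self.data_nodes):
            raise ValueError("not enough data nodes for the replication factor")
        n_blocks = -(-size // self.block_size)  # ceiling division
        nodes = cycle(self.data_nodes)
        for index in range(n_blocks):
            self.block_map[(path, index)] = [next(nodes) for _ in range(self.replication)]
        return n_blocks

    def locate(self, path: str) -> List[List[str]]:
        """Answer a client's question: where are the blocks of this file?"""
        indices = sorted(i for (p, i) in self.block_map if p == path)
        return [self.block_map[(path, i)] for i in indices]


nn = ToyNameNode(["core-1", "core-2", "core-3", "core-4"])
print(nn.add_file("/data/log.txt", 10))  # 3
print(nn.locate("/data/log.txt"))
# [['core-1', 'core-2', 'core-3'], ['core-4', 'core-1', 'core-2'], ['core-3', 'core-4', 'core-1']]
```

Clients ask the name node where blocks are and then read the data directly from the data nodes, which is why the name node only needs to hold this comparatively small map.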

When the administrator starts a cluster with Sahara, Sahara automatically starts three groups: master, core workers, and workers. The administrator determines dynamically at launch time how many VMs belong to each group, and Sahara adjusts these numbers automatically on request later on.
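The three-group topology can be modeled as a small data structure. In this sketch, the group names follow the article, and the process names (namenode, jobtracker, datanode, tasktracker) follow classic Hadoop 1 terminology; the classes and the `resize` method are hypothetical and do not reflect Sahara's internal object model.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class NodeGroup:
    name: str
    processes: List[str]  # Hadoop services running on every VM in this group
    count: int            # number of VMs in this group


class Cluster:
    """A Sahara-style cluster: named node groups instead of individual VMs."""

    def __init__(self, groups: List[NodeGroup]) -> None:
        self.groups: Dict[str, NodeGroup] = {g.name: g for g in groups}

    def resize(self, group_name: str, new_count: int) -> None:
        """Change the VM count of one group, e.g., on the administrator's request."""
        if new_count < 0:
            raise ValueError("count must not be negative")
        self.groups[group_name].count = new_count  # KeyError for unknown groups

    def total_vms(self) -> int:
        return sum(g.count for g in self.groups.values())


cluster = Cluster([
    NodeGroup("master", ["namenode", "jobtracker"], 1),
    NodeGroup("core-workers", ["datanode", "tasktracker"], 3),
    NodeGroup("workers", ["tasktracker"], 2),
])
cluster.resize("workers", 5)  # scale out the pure compute nodes
print(cluster.total_vms())    # 9
```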

Intelligent Provisioning

At first glance, many components seem to exist twice, once in Sahara and once in OpenStack. For example, user management is already handled by Keystone, and Heat, OpenStack's template engine, is apparently reproduced in the form of Sahara's provisioning engine.

The key element in Sahara is the provisioning engine (i.e., the part that launches Hadoop environments). Nothing works in Sahara without it. If the administrator invokes the command to start a Hadoop cluster via a web interface or command line, the command initially reaches the provisioning engine, where it is then processed.

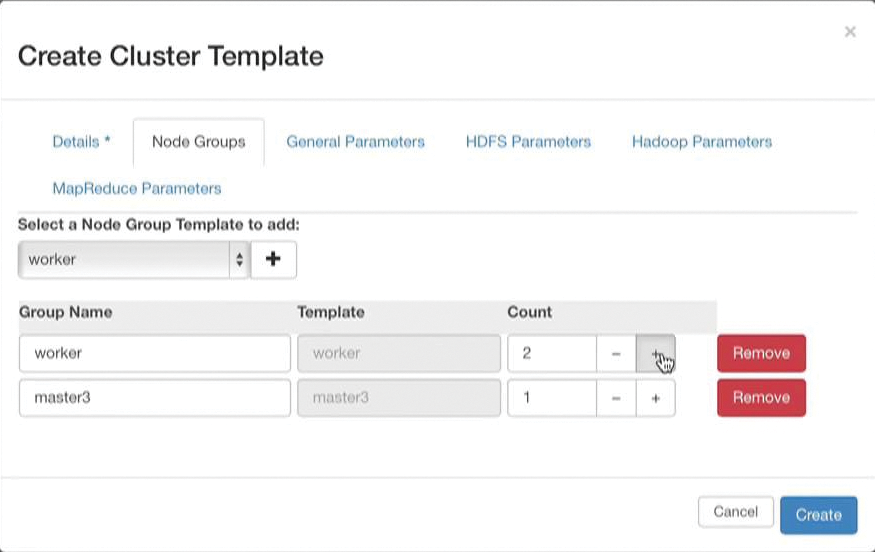

The service has several tasks. First, it interprets the user's input. Regardless of whether the user invoked the command from a GUI or at the command line, the command contains several configuration parameters that control Sahara (Figure 3). If the user does not specify all the necessary parameters, the Sahara plugin for Horizon or the command-line client falls back on appropriate defaults. The most important parameters describe the Hadoop distribution and the underlying Linux distribution. The administrator also uses these commands to set the topology of the cluster (i.e., how many nodes each group will have initially).
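The fallback to defaults boils down to merging the user's input over a set of default values and then checking that nothing essential is missing. The parameter names and default values below are hypothetical and chosen for illustration only; the actual defaults depend on the Sahara plugin and client in use.

```python
from typing import Any, Dict

# Hypothetical defaults; real values depend on the installed plugins and images.
DEFAULTS: Dict[str, Any] = {
    "plugin_name": "vanilla",
    "hadoop_version": "2.6.0",
    "base_image": "ubuntu-hadoop",
    "node_counts": {"master": 1, "core-workers": 2, "workers": 0},
}

REQUIRED = ("plugin_name", "hadoop_version", "base_image")


def interpret_request(user_input: Dict[str, Any]) -> Dict[str, Any]:
    """Merge user parameters over the defaults and validate the result."""
    params = {**DEFAULTS, **user_input}
    # Merge the topology separately so a partial override keeps the other groups.
    params["node_counts"] = {**DEFAULTS["node_counts"],
                             **user_input.get("node_counts", {})}
    missing = [key for key in REQUIRED if not params.get(key)]
    if missing:
        raise ValueError(f"missing parameters: {missing}")
    return params


request = interpret_request({"hadoop_version": "2.7.1", "node_counts": {"workers": 4}})
print(request["plugin_name"], request["hadoop_version"], request["node_counts"])
# vanilla 2.7.1 {'master': 1, 'core-workers': 2, 'workers': 4}
```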

Then, the party begins: The provisioning engine is responsible for transmitting the necessary commands to the other OpenStack services, including the OpenStack authentication service Keystone and the Glance image service. The service that handles authentication in Sahara draws on Keystone, so Sahara does not implement a Keystone replacement. The same applies to Sahara's own image registry: It builds on Glance but adds Hadoop-specific image management functions that Glance lacks.
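One way to picture the Hadoop-specific layer on top of Glance is tag matching: Each image carries tags naming the plugin and Hadoop version it was built for, and only images whose tags match the requested cluster are offered. The image names and tags below are hypothetical, and the sketch does not talk to Glance at all.

```python
from typing import Dict, List, Set

# Hypothetical registry entries: image name -> tags attached for Sahara.
IMAGE_TAGS: Dict[str, Set[str]] = {
    "ubuntu-vanilla-2.7.1": {"vanilla", "2.7.1"},
    "centos-hdp-2.0": {"hdp", "2.0"},
    "ubuntu-plain": set(),  # known to Glance, but not tagged for Sahara
}


def usable_images(plugin: str, version: str) -> List[str]:
    """Return the images whose tags cover both the plugin and the version."""
    wanted = {plugin, version}
    return sorted(name for name, tags in IMAGE_TAGS.items() if wanted <= tags)


print(usable_images("vanilla", "2.7.1"))  # ['ubuntu-vanilla-2.7.1']
```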

The provisioning engine does not needlessly duplicate code, either. Earlier, I referred to the different groups that belong to a Hadoop cluster (master, core workers, workers); however, Heat, which is responsible for central orchestration in OpenStack, is not familiar with these divisions. A group of VMs launched via Heat (a stack) always assumes that the administrator uses templates to define the individual VMs, which Heat then launches.

For Heat and Sahara's provisioning engine to communicate meaningfully with one another, the engine in Sahara contains a built-in "interpreter" that generates templates Heat can work with. From the administrator's perspective, it is sufficient to define templates in Sahara that describe a Hadoop cluster in broad terms. Sahara takes care of the rest internally.
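The translation step can be sketched as a function that expands node groups into one `OS::Nova::Server` resource per VM in a Heat Orchestration Template (HOT). This is a simplified illustration, not Sahara's actual template generator: Real templates also cover networking, volumes, and security groups, and the flavor and image names here are placeholders. HOT is usually written in YAML, but because YAML is a superset of JSON, the JSON output also forms a valid template body.

```python
import json
from typing import Dict, List


def node_groups_to_hot(node_groups: List[Dict], image: str) -> Dict:
    """Expand Sahara-style node groups into individual Heat server resources."""
    resources: Dict[str, Dict] = {}
    for group in node_groups:
        for i in range(group["count"]):
            name = f"{group['name']}-{i}"  # one resource per VM, as Heat expects
            resources[name] = {
                "type": "OS::Nova::Server",
                "properties": {
                    "name": name,
                    "flavor": group["flavor"],
                    "image": image,
                },
            }
    return {"heat_template_version": "2013-05-23", "resources": resources}


groups = [
    {"name": "master", "count": 1, "flavor": "m1.large"},
    {"name": "worker", "count": 2, "flavor": "m1.medium"},
]
template = node_groups_to_hot(groups, image="hadoop-base")
print(json.dumps(template, indent=2))
```

The point of the design is that the administrator only thinks in groups and counts, while Heat still receives the flat, per-VM description it understands.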

Tastes Differ

Hadoop is a concrete implementation of Google's MapReduce algorithm. However, in recent years, several vendors have begun to offer users their own Hadoop distributions, much as with Linux. Apache Hadoop is always at the core, but it is extended with various patches and supplied to the user with all sorts of more or less useful extensions.

When working on Sahara, the developers had to decide whether to support only the original Hadoop or other Hadoop distributions as well. They chose the second option: Using plugins, Sahara can be extended to support either the original Apache Hadoop or another distribution, such as those from Hortonworks or Cloudera.

Sahara is therefore very flexible. Depending on the setup, the administrator can even set several plugins to be active at the same time. The user is then left to choose a preferred flavor.
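The plugin approach follows a classic registry pattern: Every Hadoop flavor implements the same interface, the administrator enables a subset, and the user picks one of them. The class names, version strings, and the `enabled` list below are hypothetical; Sahara's real plugin interface is considerably larger.

```python
from abc import ABC, abstractmethod
from typing import Dict, List, Type


class HadoopPlugin(ABC):
    """Interface every Hadoop flavor implements (heavily simplified)."""
    name = ""

    @abstractmethod
    def versions(self) -> List[str]:
        """Hadoop versions this plugin can deploy."""


class VanillaPlugin(HadoopPlugin):
    name = "vanilla"

    def versions(self) -> List[str]:
        return ["2.7.1"]


class HortonworksPlugin(HadoopPlugin):
    name = "hdp"

    def versions(self) -> List[str]:
        return ["2.0"]


# Every plugin the installation ships with, keyed by its name.
AVAILABLE: Dict[str, Type[HadoopPlugin]] = {
    cls.name: cls for cls in (VanillaPlugin, HortonworksPlugin)
}


def load_plugins(enabled: List[str]) -> Dict[str, HadoopPlugin]:
    """Instantiate only the plugins the administrator has switched on."""
    unknown = set(enabled) - set(AVAILABLE)
    if unknown:
        raise ValueError(f"unknown plugins: {sorted(unknown)}")
    return {name: AVAILABLE[name]() for name in enabled}


active = load_plugins(["vanilla", "hdp"])
print(sorted(active))  # ['hdp', 'vanilla']
```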

Analytics as a Service

Until now, this article has been concerned with Sahara's ability to launch clusters running Hadoop. However, Sahara is not confined to that task. The tool also implements comprehensive job management for Hadoop in the background: As an external component, Sahara wants to know what is happening with Hadoop inside the VMs. In this alternative mode, the user does not launch any VMs directly.

To use this functionality, which its developers describe as "Analytics as a Service," the user instead registers the computing job directly with Sahara. To do so, the user needs to set some parameters: To which of the job categories defined in Hadoop does the task belong? Which script will run for the task, and where will Hadoop find the matching data? Where should Sahara store the results of the computations and the logs?
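The questions a user must answer when registering a job map naturally onto a small record type. In this sketch, the field names, the selection of job types, and the `swift://` URLs are assumptions for illustration; the validation only checks that every question has been answered.

```python
from dataclasses import dataclass
from typing import List

# Illustrative subset of Hadoop job categories; not an authoritative list.
JOB_TYPES = {"Pig", "Hive", "MapReduce", "Java"}


@dataclass
class JobSpec:
    job_type: str    # which Hadoop category the task belongs to
    script: str      # what runs, e.g., a Pig or Hive script
    input_url: str   # where Hadoop finds the data
    output_url: str  # where the results go
    log_url: str     # where the logs go

    def problems(self) -> List[str]:
        """Return unanswered or invalid fields (an empty list means valid)."""
        issues = []
        if self.job_type not in JOB_TYPES:
            issues.append(f"unsupported job type: {self.job_type}")
        for field_name in ("script", "input_url", "output_url", "log_url"):
            if not getattr(self, field_name):
                issues.append(f"missing {field_name}")
        return issues


job = JobSpec("Pig", "wordcount.pig", "swift://input/logs",
              "swift://results/wordcount", "swift://results/logs")
print(job.problems())  # []
```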

As soon as Sahara knows these values, it takes care of the rest automatically: It starts a Hadoop cluster, runs the corresponding tasks on it, and simply hands the results of these calculations to the administrator at the end. With elastic data processing (EDP), the administrator has the option of saving the final results in an object store compatible with OpenStack Swift.
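The automatic sequence of starting a cluster, running the job, and returning the results can be sketched with a try/finally block, assuming the cluster is deleted once the job is done (a transient cluster), so the VMs are released even if the job fails. The `launch`, `run`, and `teardown` callables are hypothetical stand-ins for calls to the provisioning engine; here they only record what would happen.

```python
from typing import Callable, List


def run_transient_job(launch: Callable[[], str],
                      run: Callable[[str], str],
                      teardown: Callable[[str], None]) -> str:
    """Start a cluster, run one job on it, and always release the cluster."""
    cluster_id = launch()
    try:
        return run(cluster_id)   # e.g., location of the results in object storage
    finally:
        teardown(cluster_id)     # executes whether the job succeeded or failed


# Hypothetical stand-ins that only record what would happen.
events: List[str] = []


def fake_launch() -> str:
    events.append("launch")
    return "cluster-42"


def fake_run(cluster_id: str) -> str:
    events.append(f"run on {cluster_id}")
    return "swift://results/out"


def fake_teardown(cluster_id: str) -> None:
    events.append(f"delete {cluster_id}")


result = run_transient_job(fake_launch, fake_run, fake_teardown)
print(result)  # swift://results/out
print(events)  # ['launch', 'run on cluster-42', 'delete cluster-42']
```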

When faced with this type of task, Sahara forms a second abstraction layer (in addition to the layer for starting VMs) between the user and the software. Administrators can thus still use Hadoop, even if they do not want to deal with the details of Hadoop.

Sahara's Long Arm in the VM

To configure the services within the Hadoop VMs so that they form a working Hadoop cluster, Sahara has to act inside the VMs themselves. Following the model of Trove and other services of this kind, the developers use an agent to implement the configuration. For the magic to work, you need a suitable base image, which you can either obtain ready-made or build yourself [4].

In the old mechanism, the cloud-init script configured an SSH server, which Sahara then addressed externally. The agent solution, implemented in 2014, is much more elegant and also closes the barn door that previously needed to be kept open for incoming SSH connections to Hadoop VMs.

Unlike in Trove, the agent in Sahara contributes genuine added value: Whereas MySQL can be installed and configured quite easily, this is not the case with Hadoop, so the agent really does pay dividends in Sahara's case.

CLI or Dashboard?

As with any OpenStack service, users have two options for using Sahara. Option one is Horizon (Figure 4), the OpenStack dashboard. However, this still involves a touch of manual work for administrators, because the required files are not yet available as a package and the Sahara components are not part of the official dashboard.

As usual, the command-line client is less complicated. Following the example of other OpenStack services, python-saharaclient allows all important operations to be performed at the command line. The corresponding Python library can also be used from scripts, so anyone who wants programmatic access to Sahara will feel right at home.

Automatic Scaling

The Sahara provisioning engine can do a lot more than just launch VMs for new Hadoop installations. Working with Hadoop, however, is CPU and memory intensive, depending on the amount of material you need to plow through. Naturally, companies want to spend as little money as possible.

On the one hand, administrators could initially equip their Hadoop clusters with so many virtual CPUs and so much virtual memory that they will certainly have enough to process upcoming tasks. On the other hand, Hadoop clusters are regularly assigned new tasks. To keep up with this growth, administrators would have to provision far more virtual resources up front than are initially necessary, which contradicts the requirement for efficiency.

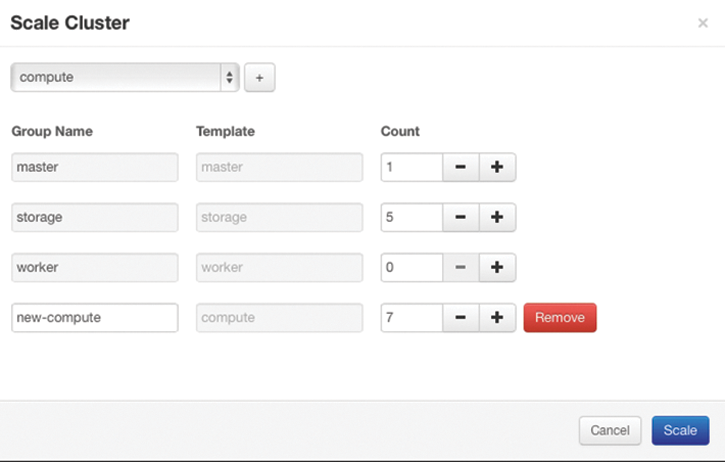

Sahara therefore can automatically adjust the number of VMs in a Hadoop cluster out of the box, based on factors set by the administrator. Administrators can tell Sahara to start more workers if the load on the existing workers exceeds a certain limit (e.g., based on the CPU usage of each instance). The cluster thus scales itself as required.

This approach also works if you use Sahara in its Analytics-as-a-Service mode: You only need to establish a limit that must not be exceeded in terms of the number of instances and their shared resources (Figure 5). Sahara and the integrated provisioning engine look after everything else themselves.
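The scaling rule described here (add workers when the load crosses a threshold, but never exceed a cap) fits in a few lines. The threshold, step size, cap, and the use of average CPU usage as the load metric are assumptions for illustration; the sketch only computes the desired worker count and leaves the actual resize to the provisioning engine.

```python
from typing import Sequence


def desired_workers(cpu_usage: Sequence[float], threshold: float = 0.8,
                    step: int = 2, max_workers: int = 10) -> int:
    """Return the new worker count based on average CPU usage (0.0 to 1.0).

    Scales out by `step` workers when the average exceeds `threshold`,
    but never beyond `max_workers`; otherwise keeps the current count.
    """
    current = len(cpu_usage)
    if current == 0:
        return min(step, max_workers)
    average = sum(cpu_usage) / current
    if average > threshold:
        return min(current + step, max_workers)
    return current


print(desired_workers([0.95, 0.90, 0.85]))  # 5  (overloaded: 3 + 2)
print(desired_workers([0.40, 0.50]))        # 2  (below the threshold)
print(desired_workers([0.99] * 9))          # 10 (capped at max_workers)
```

Keeping the cap separate from the threshold mirrors the Analytics-as-a-Service case: The user only sets the upper limit, and the rule decides everything below it.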

ISPs that already operate an OpenStack platform and plan to offer this Sahara feature need to choose their hardware wisely. One thing is clear: To operate Hadoop sensibly, customers need a great deal of available performance in terms of CPU, RAM, and network. Because a Hadoop cluster is very network intensive, even gigabit links can be saturated without much difficulty.

Hardware for Sahara

Anyone who wants to offer Hadoop as a Service needs powerful CPUs, a generous helping of RAM, and, ideally, fast 10Gb network cards. However, even this is not enough; Hadoop is only really fast when it can use fast local storage.
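A quick back-of-the-envelope calculation shows why the network matters: Moving 1TB between nodes at the full line rate of a gigabit link takes more than two hours, compared with roughly 13 minutes at 10Gbps. The sketch uses decimal units and ignores protocol overhead and contention, so real transfers take longer.

```python
def transfer_seconds(data_bytes: float, link_bits_per_second: float) -> float:
    """Idealized transfer time: payload in bits divided by the line rate."""
    return data_bytes * 8 / link_bits_per_second


TERABYTE = 10**12            # decimal terabyte, in bytes
GIGABIT = 10**9              # 1Gbps link, in bits per second
TEN_GIGABIT = 10 * GIGABIT   # 10Gbps link

print(transfer_seconds(TERABYTE, GIGABIT) / 3600)    # roughly 2.2 hours
print(transfer_seconds(TERABYTE, TEN_GIGABIT) / 60)  # roughly 13.3 minutes
```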

As a reminder, in its default configuration, OpenStack places persistent VMs on storage that is connected in the background via iSCSI. Besides not being very elegant from a technical point of view, this is also very slow. Alternatives such as Ceph offer high throughput, but most of them have one thing in common: They come with fairly high latency, because the packets always have to traverse the network.

Local storage helps: If the VM runs on a host and uses that system's local storage, the detour via the network is eliminated. Until the most recent OpenStack release (Kilo), however, OpenStack was unable to take the relationship between a VM and its Cinder storage into account. Administrators could thus choose whether to run a VM on persistent network storage or locally on the individual hypervisors, but then not persistently.

In Kilo, the developers retrofitted a long-desired function from which Sahara will also benefit: It is now possible to specify that Cinder should create a volume on the same host on which the virtual machine runs. The operator of the Cinder storage might then have to take care of high availability separately, but that should be possible with DRBD9 [5], for example.
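To my knowledge, the Kilo feature in question is Cinder's `InstanceLocalityFilter`, which is requested through the `local_to_instance` scheduler hint. The following sketch only builds the JSON body for a volume-create request against the Cinder v2 API; it assumes that the filter is enabled in the Cinder scheduler and that a volume backend runs on the compute nodes. The instance UUID and volume name are placeholders.

```python
import json
from typing import Any, Dict


def local_volume_request(size_gb: int, instance_uuid: str,
                         name: str = "hdfs-data") -> Dict[str, Any]:
    """Build a Cinder volume-create body asking for placement next to a VM.

    Assumes Cinder's InstanceLocalityFilter is enabled; the hint key
    'local_to_instance' names the instance the volume should stay close to.
    """
    if size_gb <= 0:
        raise ValueError("size must be positive")
    return {
        "volume": {"size": size_gb, "name": name},
        "OS-SCH-HNT:scheduler_hints": {"local_to_instance": instance_uuid},
    }


body = local_volume_request(100, "00000000-0000-0000-0000-000000000000")
print(json.dumps(body))
# POST this body to <cinder-endpoint>/v2/<project_id>/volumes with an X-Auth-Token header.
```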

Conclusions

Cloud and Big Data are a perfect match. After all, it was precisely the large HPC setups that first heralded the triumph of cloud computing. Making large amounts of resources available so that customers can operate Hadoop dynamically and flexibly is certainly a very coherent approach for providers. The cloud's particular advantage is that customers can tap into a production environment immediately, instead of first having to deploy a hardware zoo in their own racks.

Fortunately, the developers have solved many of the problems of earlier times. Because a VM can now be started on the very host where its Cinder volume resides, Hadoop is useful from the outset, since it only works well with fast storage.

However, one big drawback remains: Currently, only a few providers operate publicly accessible OpenStack clouds, and those that do support Hadoop only in the rarest of cases and do not offer Sahara. Short of running your own cloud, you have virtually no way to use Hadoop's functionality in everyday life, although that functionality is genuinely useful. This is a shame, because a provider that added Sahara to its portfolio could probably count on a multitude of customers rushing to sign up.