The SDFS deduplicating filesystem

Slimming System

The SDFS [1] filesystem, developed as part of the Opendedup project, first breaks each file to be stored into individual data blocks. It then stores only those blocks that do not already exist on disk. In this way, SDFS can also deduplicate files that are only partially identical. From the outside, users do not see anything of this slimming process: They still see a backup copy although only the original exists on the disk. Of course, SDFS also ensures that the backup copy is not modified when the original is edited.

Block-Based Storage

SDFS optionally stores the data blocks locally, on up to 126 computer nodes on a network, or in the cloud. A built-in load balancer ensures even distribution of the load across nodes. This means that SDFS can handle large volumes of data quickly – given a suitably fast network connection. Whatever the case, SDFS installs itself as a layer on top of the existing filesystem. SDFS will work either with fixed or variable block sizes.

In this way, both structured and unstructured data can be efficiently deduplicated. Additionally, the filesystem can handle files with a block size of 4KB. This is necessary to be able to deduplicate virtual machines efficiently. SDFS discovers identical data blocks by creating a fingerprint in the form of a hash for each block and then comparing the values.
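The fingerprinting idea can be illustrated with standard tools. The following is only a sketch and not how SDFS implements it: It cuts a file into fixed 4KB blocks, hashes each block, and reports how many distinct blocks would have to be stored. The use of SHA-256 (via sha256sum from GNU coreutils) and the function name count_blocks are assumptions made for this illustration.

```shell
# Illustrative sketch only: fixed-size block fingerprinting with coreutils.
# SHA-256 and the function name are assumptions, not taken from SDFS.

# Prints "<total blocks> <unique blocks>" for the given file.
count_blocks() {
    local file="$1" block_size="${2:-4096}" tmpdir total unique
    tmpdir=$(mktemp -d) || return 1
    # Cut the file into fixed-size pieces: chunk.aaaaaa, chunk.aaaaab, ...
    split -b "$block_size" -a 6 "$file" "$tmpdir/chunk."
    total=$(find "$tmpdir" -type f | wc -l)
    # One fingerprint per block; identical blocks yield identical hashes.
    unique=$(find "$tmpdir" -type f -exec sha256sum {} + \
        | cut -d' ' -f1 | sort -u | wc -l)
    rm -rf "$tmpdir"
    echo "$((total)) $((unique))"
}
```

Calling count_blocks on a file prints two numbers. The further the second falls below the first, the more a block-based deduplicator could save on that file.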

Because each data block exists only once on disk, the risk of data loss naturally increases. If a block is defective, so are all files that contain it. SDFS can thus redundantly store each data block on up to seven storage nodes. Finally, the filesystem lets you create snapshots of files and directories. SDFS is licensed under the GNU GPLv2 and can thus be used for free in the enterprise. You can view the source code on GitHub [2].

SDFS exclusively supports systems with 64-bit Linux on an x86 architecture. Although a Windows version [3] is under development, it was still in beta when this issue went to press and is pretty much untested. Additionally, the SDFS developers provide a ready-made appliance in the OVA exchange format [4]. This virtual machine offers a NAS that when launched deduplicates the supplied data with SDFS and stores it on its (virtual) disk. A look under the hood reveals Ubuntu underpinnings.

Installing SDFS

To install SDFS on an existing Linux system, start by using your package manager to install the Java Runtime Environment (JRE), Version 7 or newer. The free OpenJDK is fine as well. On Ubuntu, it resides in the openjdk-7-jre-headless package, whereas Red Hat and CentOS users need to install the java-1.7.0-openjdk package.

If you have Ubuntu Linux version 14.04 or newer, Red Hat 7, or CentOS 7, you can now download the package for your distribution [4]. The Red Hat package is also designed for CentOS. Then, you just need to install the package; on Ubuntu, for example, type:

sudo dpkg -i sdfs-2.0.11_amd64.deb

The Red Hat and CentOS counterpart is:

rpm -iv --force SDFS-2.0.11-2.x86_64.rpm

Users on other distributions need to go to the Linux (Intel 64bit) section on the SDFS download page [4] and look for SDFS Binaries. Download and unpack the tarball. The tools here can then be called directly, or you can copy all the files manually to a matching system directory. In any case, the files in the subdirectory etc/sdfs belong in the directory /etc/sdfs.

After completing the SDFS install, you need to increase the maximum number of simultaneously open files. To do so, open a terminal window, become root (use sudo su on Ubuntu), then run the two following commands:

echo "* hard nofile 65535" >> /etc/security/limits.conf
echo "* soft nofile 65535" >> /etc/security/limits.conf
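Entries in limits.conf apply to new login sessions, so the values only become visible after you log in again. As a quick check, the following sketch prints the limits that apply to the current shell; the function name show_nofile_limits is hypothetical.

```shell
# Print the open-file limits of the current shell session.
show_nofile_limits() {
    echo "soft: $(ulimit -Sn)"
    echo "hard: $(ulimit -Hn)"
}

show_nofile_limits
```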

On Red Hat and CentOS systems, you also need to disable the firewall:

service iptables save
service iptables stop
chkconfig iptables off

SDFS uses a modified version of FUSE, which the filesystem already includes. Any FUSE components you installed previously are not affected. FUSE lets you run filesystem drivers in user mode (i.e., like normal programs) [5].

Management via Volumes

SDFS only deduplicates files that reside on volumes. Volumes are virtual, deduplicating drives. You can create a new volume with the following command:

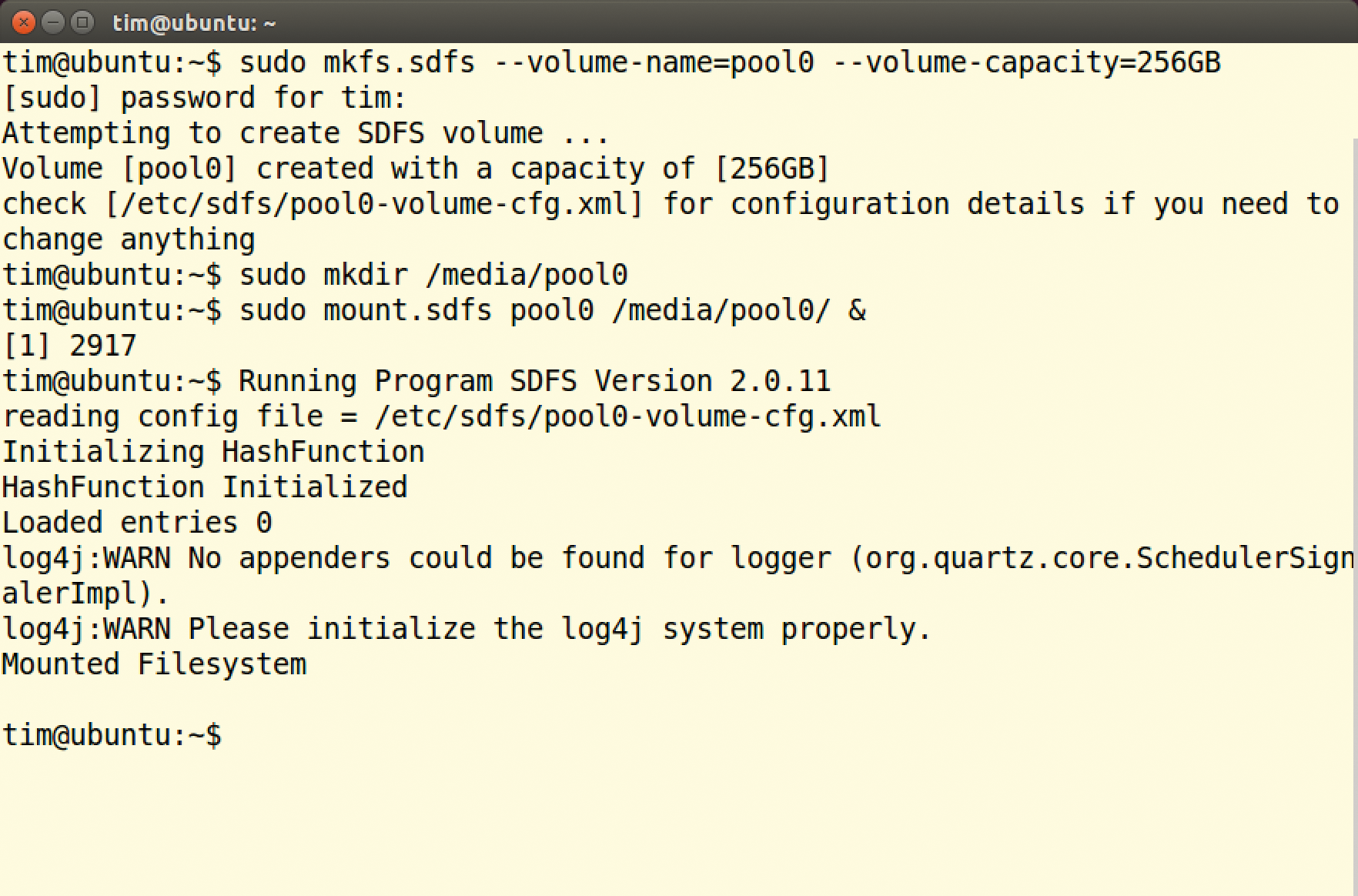

mkfs.sdfs --volume-name=pool0 --volume-capacity=256GB

In this example, it goes by the name pool0 and can store a maximum of 256GB (Figure 1). You need to be root to run this command and all the following SDFS commands; Ubuntu users thus need to prepend sudo. For the volume you just created, SDFS uses a fixed block size of 4KB.

If you want the filesystem to use a variable block size for deduplication instead, you need to add the --hash-type=VARIABLE_MURMUR3 parameter to the previous command. On the physical disk, the volume only occupies the amount of space that the deduplicated data it contains actually occupies. SDFS volumes can also be exported via iSCSI and NFSv3.

To be able to populate the volume with files, you first need to mount it. The following commands create the /media/pool0 directory and mount the volume with the name pool0:

mkdir /media/pool0
mount.sdfs pool0 /media/pool0/ &

All files copied into the /media/pool0 directory from this point on are automatically deduplicated by SDFS in the background. When done, you can unmount the volume like any other:

umount /media/pool0

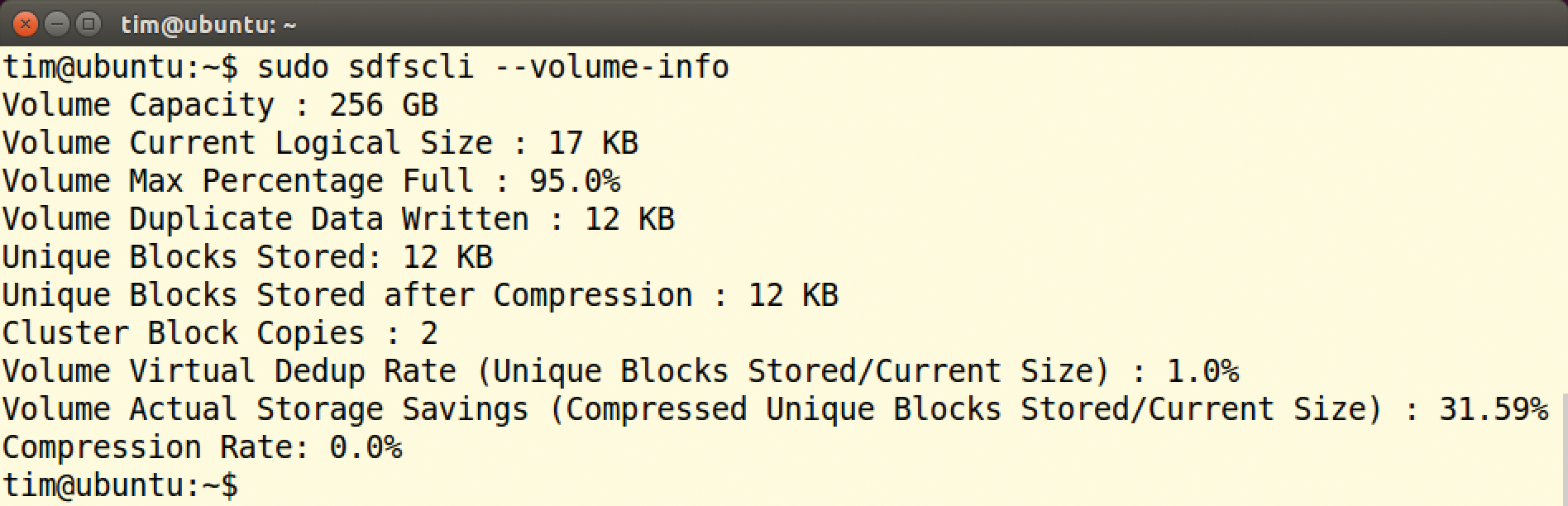

The & that follows mount.sdfs really is necessary, by the way: The mount.sdfs script launches a Java program that monitors the directory and handles the actual dedup. When this program terminates, the volume is automatically unmounted. The & sends the Java program to the background. It continues running there until the administrator unmounts the volume again. In our lab, the Java program did not always launch reliably. If you add an &, you will thus want to check that the volume mounted correctly to be on the safe side. For information on a mounted volume, enter

sdfscli --volume-info

(Figure 2). The sdfscli command lets you retroactively grow a volume. The following command expands the volume to 512GB:

sdfscli --expandvolume 512GB
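Because the Java program behind mount.sdfs does not always start reliably, it can help to verify the mount from a script before copying data or calling sdfscli. The following is a minimal sketch, assuming a Linux system that exposes /proc/mounts; the function name is_mounted is hypothetical and not part of SDFS.

```shell
# Returns 0 if the given directory is listed as a mount point.
# The optional second argument (default: /proc/mounts) names the
# mounts table to read, which makes the function easy to test.
is_mounted() {
    local dir="${1%/}" table="${2:-/proc/mounts}"
    # Field 2 of each line in the mounts table is the mount point.
    awk -v d="$dir" '$2 == d { found = 1 } END { exit !found }' "$table"
}

# Possible use after "mount.sdfs pool0 /media/pool0/ &":
#   is_mounted /media/pool0 || echo "pool0 is not mounted" >&2
```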

SDFS saves the data stored on pool0 in the /opt/sdfs/volumes/pool0/ subdirectory. The subdirectories at this point contain the actual data blocks and the metadata that SDFS relies on to reconstruct the original files.

Do not make any changes here unless you want to lose all your stored files. The storage location for the data blocks can only be changed to another directory on creating the volume. You need to pass in the complete new path to mkfs.sdfs with the --base-path= parameter. For example, the command

mkfs.sdfs --volume-name=pool0 --volume-capacity=256GB \
  --base-path=/var/pool0

would store the data blocks in /var/pool0.

Snapshot as Backup

After editing a file, most users like to create a safe copy. To do this, SDFS offers a practical snapshot feature, which you can enable with the sdfscli tool. The following command backs up the current status of the /media/pool0/letter.txt file in /media/pool0/backup/oldletter.txt:

cd /media/pool0
sdfscli --snapshot --file-path=letter.txt \
  --snapshot-path=backup/oldletter.txt

Even though it looks like SDFS copies the file, this does not actually happen. The oldletter.txt snapshot does not occupy any additional disk space. A snapshot is thus very useful for larger directories in which only a few files change retroactively. The details following --snapshot-path are always relative to the current directory. Seen from the outside, snapshots are separate files and directories, which you can edit or delete in the normal way – just as if you copied the files.

Distributing Data Across Multiple Servers

SDFS can distribute a volume's data across multiple servers. The computers in the SDFS cluster that this creates need a static IP address and as fast a network connection as you can offer. The individual nodes use multicast over UDP for communication. Any existing firewalls need to let the packets through. In addition, some hypervisors such as KVM block multicast. In that case, you either cannot create a cluster at all, have to accept limitations, or must use a specific configuration of the virtual machines.

Start by installing the SDFS package on all servers that will be providing storage space for a volume. Then, launch the Dedup Storage Engine (DSE). This service fields the deduplicated data blocks and stores them. Before you can launch a DSE, you must first configure it with the following command:

mkdse --dse-name=sdfs --dse-capacity=100GB \
  --cluster-node-id=1

The first parameter names the DSE, which is sdfs in this example. The second parameter defines the maximum storage capacity the DSE will provide in the cluster. The default block size is 4KB. Then, --cluster-node-id gives the node a unique ID, which must be between 1 and 200. In the simplest case, just number the nodes consecutively starting at 1. A DSE refuses to launch if it finds a DSE with the same ID already running on the network.

Next, mkdse writes the DSE's configuration to /etc/sdfs/. You need to add the following line below <UDP to the jgroups.cfg.xml file:

bind_addr="192.168.1.101"

Replace the IP address with your server's IP address. As the filename jgroups.cfg.xml suggests, the SDFS components use the JGroups toolkit to communicate [6]. You can then start the DSE service:

startDSEService.sh -c /etc/sdfs/sdfs-dse-cfg.xml &

Follow the same steps on the other nodes, taking care to increment the number following --cluster-node-id.
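Because the per-node steps differ only in the node ID and the bind address, they lend themselves to a small helper. The following sketch prints the steps for each node rather than running them, so you can review the output first. The function name print_dse_setup and the addresses ending in .102 and .103 are hypothetical; the commands and parameters are the ones shown above.

```shell
# Prints (does not run) the setup steps for one DSE node.
# Arguments: node ID, node IP address, optional DSE capacity.
print_dse_setup() {
    local id="$1" ip="$2" capacity="${3:-100GB}"
    # Node IDs must be unique and lie between 1 and 200.
    if [ "$id" -lt 1 ] || [ "$id" -gt 200 ]; then
        echo "node ID must be between 1 and 200" >&2
        return 1
    fi
    echo "mkdse --dse-name=sdfs --dse-capacity=$capacity --cluster-node-id=$id"
    echo "# add below <UDP in /etc/sdfs/jgroups.cfg.xml: bind_addr=\"$ip\""
    echo "startDSEService.sh -c /etc/sdfs/sdfs-dse-cfg.xml &"
}

# Example: three nodes numbered consecutively from 1.
id=1
for ip in 192.168.1.101 192.168.1.102 192.168.1.103; do
    print_dse_setup "$id" "$ip"
    id=$((id + 1))
done
```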

By default, DSEs store the delivered data blocks on each node's hard disk. However, they can also be configured to send the data to the cloud. At the time of writing, SDFS supported Amazon's AWS and Microsoft Azure. To push the data into one of these two clouds, you only need to add a few parameters to mkdse that mainly provide the access credentials but depend on the type of cloud. Calling mkdse --help will help you find the required parameters, and the Opendedup Quick Start page [7] has more tips.

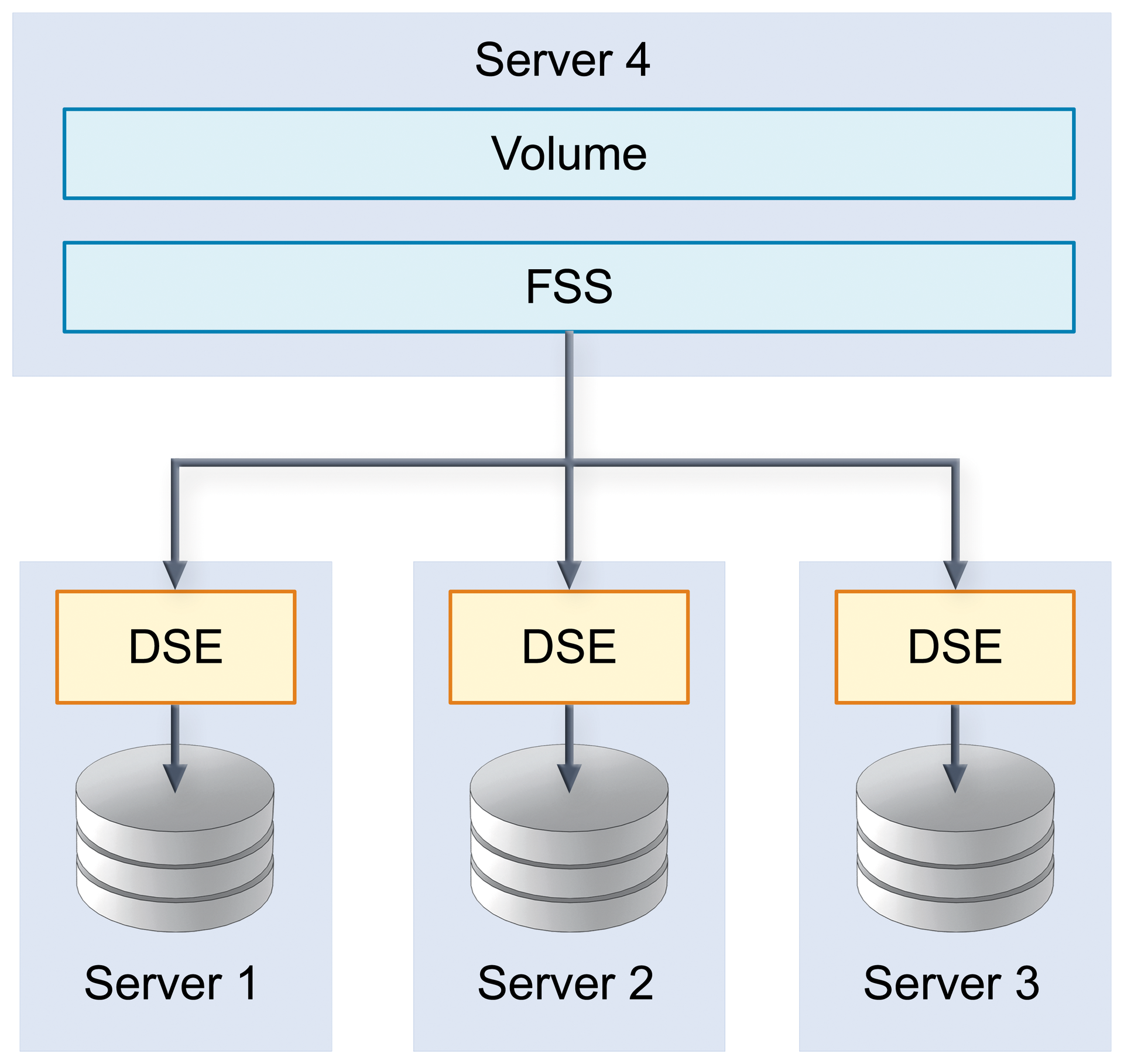

Once the nodes are ready, you can create and mount the volume on another server. These tasks are handled by the File System Service (FSS). To create a new volume named pool1 with a capacity of 256GB, type the following command:

mkfs.sdfs --volume-name=pool1 --volume-capacity=256GB \
  --chunk-store-local false

This is the same command as for creating a local volume: For operations on a single computer, DSE and FSS share a process. The final parameter, --chunk-store-local false, makes sure that FSS uses the DSEs to create the volume (Figure 3). The block size must also match that of the DSEs, which is the default 4KB in this example. Then, you can type

mount.sdfs pool1 /media/pool1

to mount the volume in the normal way.

High Availability and Redundancy

SDFS can store data blocks redundantly in the cluster. To do this, use the --cluster-block-replicas parameter to tell mkfs.sdfs on how many DSE nodes you want to store a data block. In the following example, each data block stored in the volume ends up on three independent nodes:

mkfs.sdfs --volume-name=pool3 --volume-capacity=400GB \
  --chunk-store-local false --cluster-block-replicas=3

If a DSE fails, the others step in to take its place. However, this replication has a small drawback: SDFS only guarantees high availability of the data blocks, not of the volume metadata – in fact, administrators have to ensure this themselves by creating a backup.

Obstacles to Practical Deployment

The amount of storage space you can save by deduplication depends to a great extent on the data you store. Although the developers promise savings of up to 95 percent, this also assumes that SDFS finds 95 percent identical data blocks. Additionally, the risk of data loss increases with the percentage; if a data block fails, you cannot restore any of the files belonging to it.

In contrast to other deduplicating filesystems, SDFS does offer the option of storing data blocks redundantly in a cluster. However, you need to enable this mirroring explicitly. Doing so means that the data again exists in multiple instances and achieves precisely the opposite of what deduplication promises.
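How much replication eats into the savings is simple arithmetic: The unique blocks that remain after deduplication are multiplied by the number of replicas. The following sketch works through this with hypothetical figures (1,000GB of logical data, 80 percent duplicate blocks); the function name net_usage_gb is likewise an assumption for illustration.

```shell
# Raw cluster space (in GB) occupied after deduplication and replication.
# Arguments: logical data in GB, share of duplicate blocks in percent,
# number of replicas per block. Uses integer arithmetic only.
net_usage_gb() {
    local logical_gb="$1" duplicate_pct="$2" replicas="$3"
    local unique_gb=$(( logical_gb * (100 - duplicate_pct) / 100 ))
    echo $(( unique_gb * replicas ))
}

net_usage_gb 1000 80 1   # prints 200: one copy of each unique block
net_usage_gb 1000 80 3   # prints 600: three replicas of each unique block
```

With these example figures, three replicas still occupy less space than the undeduplicated data, but the saving shrinks from 80 to 40 percent.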

Additionally, you need to back up the metadata of the individual volumes yourself. The SDFS developers suggest using DRBD [8] for this. Multicast packets not only increase the network load, they also force administrators to open holes in their firewalls. Finally, the communication between the individual components in the cluster is unencrypted by default – although you can at least enable SSL encryption.

The developers have not done the best job of documenting their tools. On the project website, you will find a Quick Start Guide and a description of the architecture, which the developers refer to as an "Administration Guide" [9]. The tools do output a long reference of all parameters when started with the --help option.

Conclusions

SDFS sets up quickly; volumes can also be created easily across multiple computer nodes, and there is support for virtual machines. However, administrators will do well to bear in mind the risks of a deduplicating filesystem. The use of SDFS thus mainly makes sense if you have many similar or identical files that are not particularly important – such as temporary files or logfiles.