LXC 1.0

Lean and Quick

Linux containers have been fully functional since kernel 2.6.29. Linux has had containers in the form of Virtuozzo [1] and OpenVZ [2] for some time, however. The difference is that the mainline Linux kernel now has all the necessary components for operating containers and no longer requires patches. Kernel namespaces isolate containers from each other, and CGroups limit resources and take care of priorities.

Solid Foundation

A first stable version of the LXC [3] userspace tool has been used to manage containers since February 2014. Ubuntu 14.04 has LXC 1.0 on board, which the developers recommend and will provide support for until April 2019 (Figure 1). If you want to install LXC in Ubuntu Trusty Tahr, you are best off using the v14.04.1 [4] server images. Kernel 3.13 (used here) has five years of LTS support; this doesn't apply to the kernels of newer LTS updates. You can get the LXC tools after installation by entering:

apt-get install lxc

The packages (among other things) listed in Table 1 will land on your computer.

![Figure 1: The container is being managed here with the help of LXC Web Panel [5].](images/F01-lxc-webpanel.png)

Table 1: LXC Packages

| Package Name | Purpose |
|---|---|
| | Generates the virtual network device ( |
| | Enables the management of CGroups via a D-Bus interface. |
| | Helps install Debian-based containers. |
| | Enables a minimum out-of-the-box NAT network for containers ( |
| | The new LXC API. |
| | Templates that create simple containers. |

First Container

After a successful installation, you can create the first container with a simple command:

lxc-create -t ubuntu -n ubuntu_test

Don't forget to obtain root privileges first, which you will need to execute most of the commands featured in this article. If you then call lxc-create for the first time for a particular distribution, the host system needs to download the required packages. It caches them in /var/cache/lxc/. If you enter the lxc-create command again, the system will create a container within a couple of seconds. If you type

lxc-create -t ubuntu -h

LXC shows you template-specific options. You can, for example, select the Debian or Ubuntu release or a special mirror using these options. The following call creates a container with Debian Wheezy as the basic framework:

lxc-create -t debian -n debian_test -- -r wheezy

By default, Ubuntu as the host system stores containers directly in the existing filesystem under /var/lib/lxc/<Container-Name>. At least one file called config and a directory named rootfs are waiting in the container subdirectory. Many distributions will also have the fstab file, which manages container mountpoints.

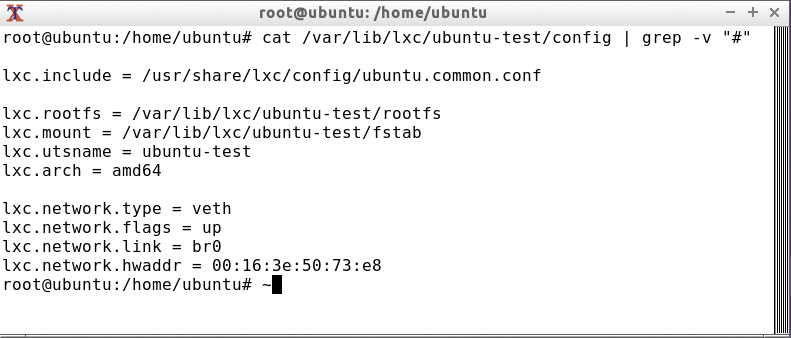

LXC 1.0 configures the container with the help of the config file. Container management also allows the use of includes in the configuration (via lxc.include), which makes it possible to have a very minimalist default configuration for containers (Figure 2).

Once the container has been created on disk, the host system tells you the default login for Ubuntu (user: ubuntu, password: ubuntu) or Debian (user: root, password: root).

You can then activate the container using the following command, where the -d option ensures that it starts in the background:

lxc-start -n debian_test -d

Similarly, the lxc-stop command stops the container again. LXC usually sends a SIGPWR signal to the init process, which shuts down the container cleanly. You can force the shutdown using the -k option.

Containers usually start within a few seconds because they don't need a custom kernel. Using the first command below takes you to the login prompt, whereas the second command takes you directly to the console without a password prompt:

lxc-console -n debian_test
lxc-attach -n debian_test

The command shown in Listing 1 provides a decent overview of the available containers.

Listing 1: Display existing containers

root@ubuntu:/var/lib/lxc# lxc-ls --fancy
NAME          STATE    IPV4        IPV6  AUTOSTART
--------------------------------------------------
debian_test   RUNNING  10.0.3.190  -     NO
debian_test2  STOPPED  -           -     NO
ubuntu_test   STOPPED  -           -     NO
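If you want to process this overview in a script, the column layout is easy to filter. The following is only a minimal sketch: the function name running_containers is made up for this example, and the sample text is copied from Listing 1 rather than read from a live system.

```shell
# Print the names of all containers whose STATE column reads RUNNING.
# Input: the text produced by `lxc-ls --fancy` (header, separator, rows).
# running_containers is a hypothetical helper name, not part of LXC.
running_containers() {
    awk 'NR > 2 && $2 == "RUNNING" { print $1 }'
}

# Sample input copied from Listing 1.
sample_output='NAME          STATE    IPV4        IPV6  AUTOSTART
--------------------------------------------------
debian_test   RUNNING  10.0.3.190  -     NO
debian_test2  STOPPED  -           -     NO
ubuntu_test   STOPPED  -           -     NO'

printf '%s\n' "$sample_output" | running_containers
```

On a real host, you would pipe the output of lxc-ls --fancy into the function instead of the sample text.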

If a specific container should automatically start up when the base system is started, you can activate this in the container configuration (Figure 2):

lxc.start.auto = 1
lxc.start.delay = 0

As you can see, you could also include a start delay here.

Building Bridges

Perhaps you're wondering about the lxcbr0 device in the container configuration or about the container's IP address from the 10.0.3.0/24 network. This Ubuntu feature lets containers connect to the outside world automatically via Layer 3. It is implemented by a dedicated network bridge called lxcbr0, together with a matching dnsmasq daemon and an iptables NAT rule. The bridge itself is not attached to any of the host's interfaces. Listing 2 provides details.

Listing 2: The lxcbr0 LXC Bridge

root@ubuntu:~$ brctl show
bridge name     bridge id               STP enabled     interfaces
lxcbr0          8000.000000000000       no
root@ubuntu:~# cat /etc/default/lxc-net | grep -v -e "#"
USE_LXC_BRIDGE="true"
LXC_BRIDGE="lxcbr0"
LXC_ADDR="10.0.3.1"
LXC_NETMASK="255.255.255.0"
LXC_NETWORK="10.0.3.0/24"
LXC_DHCP_RANGE="10.0.3.2,10.0.3.254"
LXC_DHCP_MAX="253"
root@ubuntu:~# iptables -t nat -L POSTROUTING
Chain POSTROUTING (policy ACCEPT)
target      prot opt source        destination
MASQUERADE  all  --  10.0.3.0/24   !10.0.3.0/24
root@ubuntu:~# ps -eaf | grep dnsmas
lxc-dns+ 1047 1 0 18:24 ? 00:00:00 dnsmasq -u lxc-dnsmasq --strict-order --bind-interfaces --pid-file=/run/lxc/dnsmasq.pid --conf-file= --listen-address 10.0.3.1 --dhcp-range 10.0.3.2,10.0.3.254 --dhcp-lease-max=253 --dhcp-no-override --except-interface=lo --interface=lxcbr0 --dhcp-leasefile=/var/lib/misc/dnsmasq.lxcbr0.leases --dhcp-authoritative

If a container uses the lxcbr0 bridge, the dnsmasq daemon on the host system assigns it an IP address via DHCP at boot time. The container then reaches the outside world using this address. The container itself, however, can only be reached from the host system.
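The size of the DHCP pool follows directly from the range in Listing 2. As a worked example, the sketch below counts the addresses between 10.0.3.2 and 10.0.3.254; the helper names ip_to_int and dhcp_pool_size are invented for illustration and are not part of LXC or dnsmasq.

```shell
# Convert a dotted-quad IPv4 address into a single integer.
# ip_to_int and dhcp_pool_size are hypothetical helper names.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the address at the dots into $1..$4
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Number of addresses in an inclusive range: last - first + 1.
dhcp_pool_size() {
    echo $(( $(ip_to_int "$2") - $(ip_to_int "$1") + 1 ))
}

# Range taken from LXC_DHCP_RANGE in Listing 2.
dhcp_pool_size 10.0.3.2 10.0.3.254
```

The result, 253, matches the LXC_DHCP_MAX value shown in Listing 2, so the default bridge can hand out at most 253 leases.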

Thanks to iptables and some NAT rules, however, you can pass on individual ports from outside to the container if necessary. The following example shows how the host system forwards port 443 to the container:

sudo iptables -t nat -A PREROUTING -p tcp --dport 443 -j DNAT --to-destination 10.0.3.190:443
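If you need to forward several ports, it can help to generate the rules rather than type them. The following sketch only prints the rule in the form used above and does not change the firewall; dnat_rule is a hypothetical helper name, and forwarding to the same port number inside the container is an assumption of this example.

```shell
# Print (not execute) a DNAT rule that forwards a TCP port on the host
# to the same port in a container. dnat_rule is a hypothetical helper.
dnat_rule() {
    local port="$1"
    local container_ip="$2"
    printf 'iptables -t nat -A PREROUTING -p tcp --dport %s -j DNAT --to-destination %s:%s\n' \
        "$port" "$container_ip" "$port"
}

# Container address taken from Listing 1.
dnat_rule 443 10.0.3.190
dnat_rule 80 10.0.3.190
```

Review the printed rules first; once they look right, you can run them with root privileges.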

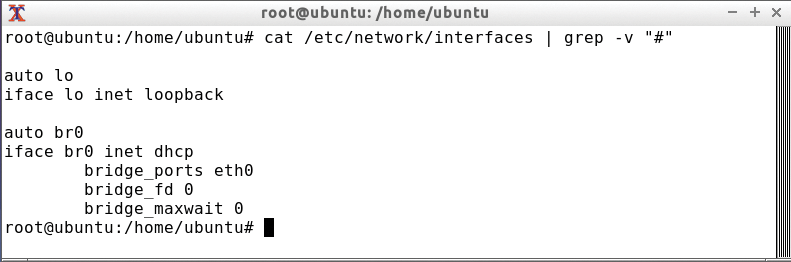

The lxcbr0 interface is great for testing. If, however, you are running multiple containers on a server, it can become complex and confusing. In such circumstances, it is a good idea to use a separate bridge device without NAT. In this way, you can connect the veth devices in the containers directly to the network at Layer 2. To do so, first set up a bridge in /etc/network/interfaces on the host system (see Figure 3).

Don't forget to comment out the existing eth0 entries. Then, enter the new bridge in the container configuration, which is /var/lib/lxc/debian_test/config in the example:

lxc.network.link = br0

Containers either still get their IP via DHCP, or you can set up a fixed IP address. Although you can sort out the fixed IP address in the container configuration (lxc.network.ipv4), the better option would be to do it directly in the Debian or Ubuntu container itself in the /etc/network/interfaces file. In addition to the commonly used veth interface, you have other network options: none, empty, vlan, macvlan, and phys.
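A fixed address inside a Debian or Ubuntu container could look like the stanza generated below. This is a sketch only: static_iface_stanza is a hypothetical helper, and the addresses are placeholders that you need to replace with values from your own network.

```shell
# Print a static eth0 stanza for /etc/network/interfaces inside the
# container. static_iface_stanza is a hypothetical helper name.
static_iface_stanza() {
    local address="$1"
    local netmask="$2"
    local gateway="$3"
    cat <<EOF
auto eth0
iface eth0 inet static
    address $address
    netmask $netmask
    gateway $gateway
EOF
}

# Placeholder addresses, assumed for illustration only.
static_iface_stanza 192.168.1.50 255.255.255.0 192.168.1.1
```

Because the function only prints text, you can inspect the result before writing it to the interfaces file below the container's rootfs directory.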

Limiting Resources

CGroups take care of limiting and accounting for a container's resources; Ubuntu has used cgmanager [6] for this since version 14.04. Before then, you could access these values via /sys/fs/cgroup/.

The lxc-info command provides a good overview of the current usage (Figure 4). lxc-cgroup requests the CGroup values:

root@ubuntu:~# lxc-cgroup -n debian_test memory.usage_in_bytes
3985408
root@ubuntu:~# lxc-cgroup -n debian_test memory.failcnt
0

Figure 4: The lxc-info command provides an overview of the resources used by the container.

To enable swap accounting, a boot option has to be passed to the kernel (Listing 3). The memory.failcnt and memory.memsw.failcnt counters are incremented whenever the container hits its memory limits. In addition to the RAM, you can also limit the used swap space per container.

Listing 3: Including Swap Space

root@ubuntu:~# cat /etc/default/grub | grep LINUX_DEFAULT
GRUB_CMDLINE_LINUX_DEFAULT=""
root@ubuntu:~# vi /etc/default/grub
root@ubuntu:~# cat /etc/default/grub | grep LINUX_DEFAULT
GRUB_CMDLINE_LINUX_DEFAULT="swapaccount=1"
root@ubuntu:~# update-grub2
[...]
root@ubuntu:~# reboot

The memory.memsw.limit_in_bytes CGroup control file contains the total limit for RAM, including swap. You can set the desired limits in the container configuration. The following example allows 100MB of RAM and 100MB of swap space:

lxc.cgroup.memory.limit_in_bytes = 100M
lxc.cgroup.memory.memsw.limit_in_bytes = 200M
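Because the memsw value is the sum of RAM and swap, not the swap space alone, it is easy to get wrong. The sketch below does the arithmetic and prints the two configuration lines; memory_limits is a hypothetical helper name, and it assumes both sizes are given in whole megabytes.

```shell
# Print the two LXC memory settings for a given RAM and swap allowance.
# memory_limits is a hypothetical helper; sizes are whole megabytes.
memory_limits() {
    local ram_mb="$1"
    local swap_mb="$2"
    # memsw limits RAM and swap together, so the two values are added.
    local total_mb=$(( ram_mb + swap_mb ))
    printf 'lxc.cgroup.memory.limit_in_bytes = %sM\n' "$ram_mb"
    printf 'lxc.cgroup.memory.memsw.limit_in_bytes = %sM\n' "$total_mb"
}

# 100MB of RAM plus 100MB of swap, as in the example above.
memory_limits 100 100
```

Called with 100 and 100, the function reproduces the two configuration lines shown above.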

Table 2 [7] provides an overview of the available CGroup subsystems. The online documentation [8] is comprehensive and up to date. In addition to RAM, the parameters in Table 3 also prove to be relevant.

Table 2: CGroup Subsystems

| Subsystem | Function |
|---|---|
| | Limits CPU and memory placement for a group of tasks. |
| | Limits for I/O access to and from block devices. |
| | Generates automatic reports on CPU resources used by tasks in a CGroup. |
| | Allows or disallows access to devices by tasks in a CGroup. |
| | Suspends or resumes tasks in a CGroup. |
| | |
| | Limits memory use by tasks in a CGroup. |
| | Tags network packets with a class identifier. |
| | Schedules CPU access to cgroups. |
| | Monitors threads of a task group on a CPU. |

Table 3: Relevant CGroup Parameters

| Parameter | Function |
|---|---|
| | CPU core pinning. |
| | The higher the value, the more CPU time the container receives. |
| | Access to the base system's devices. |
| | Limits write and read throughput. |
| | Controls network throughput using |

However, the CGroup feature doesn't limit a container's disk space. Either a unique logical volume per container, a separate image file, or an XFS directory tree quota can implement such a limitation. The recommended route is a unique logical volume because the LXC tools already provide good support for the logical volume manager.

Command Bridge

Until now you've probably gotten by with just a few lxc-* commands. For example, you can create containers with lxc-create, start and stop them with lxc-start and lxc-stop, or delete them with lxc-destroy. For all of these commands, you can specify the container name with the -n option. To get a full list of lxc-* commands, enter:

ls /usr/bin/lxc-*

The commands shown in Table 4 are among the lesser known.

Table 4: LXC Exotics

| Command | Function |
|---|---|
| | Controls containers that are configured for an autostart. |
| | Checks the kernel's requirements. |
| | Passes on a device to the container. |
| | Executes an individual command in a container. |
| | Freezes the processes in a container and wakes them up again. |
| | Monitors status changes. |
| | Starts a temporary container clone that LXC then automatically destroys when stopping a container. |

New with LXC

The first stable version of LXC offers some important new features:

- Container nesting

- Hooks

- Unprivileged containers

- Prebuilt containers

- Liblxc-API

If you want to use nested containers, first assign the AppArmor profile lxc-container-default-with-nesting to the parent container. If you also share the host system's /var/cache/lxc/ folder with the container, creating nested containers is fast, too. Then, install the LXC package in the container and create the nested container as usual. The base system lists the nested containers (Listing 4).

Listing 4: Container in a Container

root:/# echo "/var/cache/lxc var/cache/lxc none bind,create=dir" >> /var/lib/lxc/ubuntu_test/fstab
root:/# echo "lxc.aa_profile = lxc-container-default-with-nesting" >> /var/lib/lxc/ubuntu_test/config
root:/# echo "lxc.mount.auto = cgroup" >> /var/lib/lxc/ubuntu_test/config
root@ubuntu:/# lxc-ls --fancy --nesting
NAME               STATE    IPV4                  IPV6  AUTOSTART
-----------------------------------------------------------------
debian_test        RUNNING  10.0.3.190            -     NO
debian_test2       STOPPED  -                     -     NO
ubuntu_test        RUNNING  10.0.3.191, 10.0.4.1  -     NO
 \_ ubuntu_nested  RUNNING  10.0.4.197            -     NO

With the use of hooks, you can automate container use. The following hooks, prefixed by lxc.hook, are available: .pre-start, .pre-mount, .mount, .autodev, .start, .post-stop and .clone.
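A hook is simply an executable that LXC calls at the corresponding point in the container's life cycle. The sketch below shows what the body of a logging hook might look like. LXC sets the LXC_NAME environment variable for hooks; the log_hook function, the HOOK_LOG variable, and the default logfile path are assumptions made for this example.

```shell
# Hypothetical hook body: append one line per invocation to a logfile.
# LXC_NAME is set by LXC when it runs a hook; HOOK_LOG and the default
# path below are assumptions of this sketch.
log_hook() {
    local hook_type="${1:-unknown}"
    local log_file="${HOOK_LOG:-/tmp/lxc-hooks.log}"
    printf '%s container=%s hook=%s\n' \
        "$(date '+%Y-%m-%d %H:%M:%S')" "${LXC_NAME:-unknown}" "$hook_type" >> "$log_file"
}

# Dry run outside of LXC with simulated values.
HOOK_LOG="$(mktemp)"
LXC_NAME=debian_test log_hook pre-start
cat "$HOOK_LOG"
```

To use something like this in practice, you would put the function into an executable script and reference it in the container configuration, for example with lxc.hook.pre-start followed by the script's path. The lxc.container.conf man page describes the arguments LXC passes to hook scripts; check it on your system before relying on them.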

The developers worked on unprivileged containers for a long time. Since version 1.0, it has been possible to run containers on the base system without root access. This is implemented with user namespaces and separate UID and GID ranges per container. Prebuilt containers make the installation easier because unprivileged containers do not allow some operations. Matching templates are on a Jenkins server [9], although the technology is still in its infancy. The LXC C API is declared in lxccontainer.h, and the Python bindings are similar [10].

Security

A few horror stories are in circulation about containers. They tell of how administrators can compromise their entire host system with a container. Some time ago, these problems existed because containers shared the kernel with the host. However, today, user namespaces are pretty well protected thanks to capabilities, CGroups, AppArmor/SELinux, and Seccomp. Linux containers currently have no known security issues.

The /etc/apparmor.d/abstractions/lxc/ directory gives details about the AppArmor policy in Ubuntu 14.04. The Seccomp policy for LXC is hidden in /usr/share/lxc/config/common.seccomp. Some Allow and Deny rules for CGroups (lxc.cgroup.devices.allow/deny) and capabilities limits (lxc.cap.drop) are in the configuration template in the /usr/share/lxc/config/ folder.

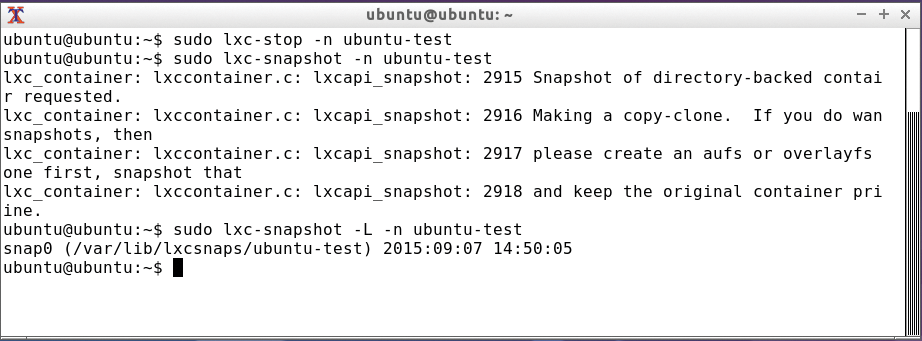

Snapshots and Clones

The usual location for containers is on the host system in /var/lib/lxc/<Container-Name>/rootfs/. LXC also copes with filesystems such as Btrfs and ZFS, as well as with LVM and OverlayFS.

Based on OverlayFS, you can create a master container (Listing 5) and then create several clones with lxc-clone, for which LXC stores only the deltas. This proves to be practical and space-saving, especially for test environments. However, you shouldn't start the master container itself in this scenario.

Listing 5: Creating a Master Container

root@ubuntu:/var/lib/lxc# lxc-create -t ubuntu -n ubuntu_master
root@ubuntu:/var/lib/lxc# lxc-clone -s -B overlayfs ubuntu_master ubuntu_overlay1
root@ubuntu:/var/lib/lxc# cat ubuntu_overlay1/delta0/etc/hostname
ubuntu_overlay1
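For a test environment with several clones, you could generate the lxc-clone calls in a loop. The following sketch only prints the commands in the form used in Listing 5; overlay_clone_commands is a hypothetical helper, and the naming scheme with a numbered _overlay suffix is an assumption carried over from the listing.

```shell
# Print (not execute) lxc-clone calls for a number of OverlayFS clones.
# overlay_clone_commands is a hypothetical helper name.
overlay_clone_commands() {
    local master="$1"
    local count="$2"
    # Strip a trailing "_master" so ubuntu_master yields ubuntu_overlayN.
    local prefix="${master%_master}"
    local i=1
    while [ "$i" -le "$count" ]; do
        printf 'lxc-clone -s -B overlayfs %s %s_overlay%d\n' "$master" "$prefix" "$i"
        i=$(( i + 1 ))
    done
}

overlay_clone_commands ubuntu_master 3
```

You can pipe the printed commands to a root shell once you are happy with the names.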

The lxc-snapshot command, unsurprisingly, creates snapshots of a container (Figure 5). For this, you first need to stop the current container. If a logical volume manager is being used, LXC creates an LVM snapshot via lxc-clone -s. You can reset a container to a snapshot using the -r option for lxc-snapshot.

Troubleshooting

If a container is causing problems when starting up, start it in the foreground. To do this, simply leave out the -d option with lxc-start. Once you have identified the problem, you can mount the stopped container's filesystem directly and fix the problem in the mounted system. Alternatively, set a different default runlevel.

Additionally, the lxc-* commands support a logfile parameter (-o) and a corresponding log priority (-l). You might also be interested in the kernel ring buffer and the base system's AppArmor messages. To avoid multiple logging, you should disable rsyslogd kernel logging within the container.
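Putting the two options together, a debugging start in the foreground might be assembled as in the sketch below. The function debug_start_command and the logfile location under /tmp are assumptions of this example; the accepted priority names are the ones listed in the lxc-start man page.

```shell
# Print (not execute) an lxc-start call with a logfile and log priority.
# debug_start_command and the /tmp logfile path are hypothetical.
debug_start_command() {
    local name="$1"
    local level="${2:-DEBUG}"
    case "$level" in
        FATAL|CRIT|WARN|ERROR|NOTICE|INFO|DEBUG) ;;
        *) echo "unknown log priority: $level" >&2; return 1 ;;
    esac
    # No -d option, so the container starts in the foreground.
    printf 'lxc-start -n %s -o /tmp/%s.log -l %s\n' "$name" "$name" "$level"
}

debug_start_command debian_test
```

The function refuses unknown priority names, which guards against typos before the command reaches LXC.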

Conclusions

LXC 1.0 is a stable version that comes with five years of official support. It can therefore be used for production systems without concerns. However, compared with Docker, no hype has evolved around LXC so far.

Maybe LXD [11], which is based on LXC, will change this in the future. Linux containers are certainly the fastest way to achieve virtualization and isolation in Linux. That is probably one reason Google has been using containers intensively in projects such as lmctfy [12] and Kubernetes [13] for years.