Speed up your MySQL database

Fast Track

Admins are often faced with the task of solving performance problems of which they only have a rough idea or a vague description. Thus, the first task is to discover precisely what the difficulty is. This step alone helps to restore order, and it involves asking questions like: When exactly does the problem occur? What exactly happens before the user notices that the application is slowing down? What did the user click before this?

Along with such questions to discover the root cause of problems, MySQL logfiles help complete the picture. In any case, it is always useful to investigate the problem immediately after it occurs. Trying to understand what happened three weeks later is going to involve far more work. Finally, you need metrics, metrics, metrics. Ideally, you will have a monitoring infrastructure in place that already acquires all the necessary performance data.

Identify the Objective

Once you have described and documented the performance problem, you can then define the objectives behind your tuning actions. Are you looking for a shorter response time or more throughput? One objective could be, say: "Action X takes 3.5 seconds to complete after clicking – in future, we want to be done in less than one second." If you are facing more of a throughput problem, the objective could be: "Given 30 percent more load next year, we still want the system to respond to an order within 350ms on average."

It is essential to select realistic objectives – that means objectives that at least respect natural limits that you cannot influence. Depending on the network, you will have an upper limit for the transmission speed, or you might have technical limits (searching through 100 million rows will take a dozen seconds or so), or you might even encounter financial limits to tuning. (Yes, an SSD could give you 10,000 IOPS, but the customer doesn't want to pay for this.) Performance tuning always involves compromise – whether this be related to financial or security concerns or expressed in terms of the expense of corrections and code changes.

Finding the Bottleneck

The first task is to identify the bottleneck on the technical side. This can be the web browser, the network, the web or application server, the database, or even the hardware. On database machines, the limiting factors are typically the I/O, RAM, and CPU resources – in that order.

Which of these resources is currently imposing restraints on database performance can be discovered with tools such as iostat for the I/O system, free and vmstat for memory use and swapping, as well as top or mpstat for the CPU load. To discover more about memory use, you can deploy the free command, as shown in Listing 1.

Here's what the most important values in Listing 1 mean:

Listing 1: Using free

# free -m

total used free shared buffers cached

Mem: 117080 113060 4020 2420 1980 62260

-/+ buffers/cache: 48810 68270

Swap: 119730 0 119730

- Mem total: Total amount of available RAM: 117,080MB in this case.

- Mem used: Amount of RAM used by the applications: 48,810MB in this case.

- Mem free: Potentially free RAM: 68,270MB in this case.

- Mem cached: RAM used by the operating system for the filesystem cache: 62,260MB in this case.

- Swap used: There is no swap memory used in this example.

If RAM is the limiting resource, Mem used will be moving in the direction of Mem total, while Mem free tends toward zero. At the same time, the operating system gets rid of the filesystem cache (Mem cached) (it approaches zero), and Swap used moves away from zero in the direction of Swap total.

You can determine the memory use of the most important process (mysqld) as shown in Listing 2. The memory-specific values have the following meanings:

Listing 2: Memory Use of mysqld

# ps aux | egrep 'mysqld|VSZ' USER PID %CPU %MEM VSZ RSS START TIME COMMAND mysql 1568 0.0 0.0 4440 744 Sep08 0:00 mysqld_safe mysql 2337 0.1 5.9 29163040 7115280 Sep08 13:00 mysqld

- VSZ: The memory requested from the operating system by the process (virtual size).

-

RSS: The memory kept in RAM by the operating system (resident set size). This memory is part of the Mem used area shown by the

freecommand. The sum of the RSS values for processes should be approximately equal to Mem used. If the sum of the VSZ values for all processes is well above the available memory (Mem total), then your RAM is overcommitted, which can lead to swapping at full load, thereby slowing down the system.

To discover the presence and extent of system swapping – and what effect this has on the I/O system, check out Listing 3.

Listing 3: Using vmstat

# vmstat 1

procs -------------memory---------- ----swap---- -----io----- -system-- ------cpu-----

r b swpd free buff cache si so bi bo in cs us sy id wa st

0 0 10848 139772 3612 793656 0 0 0 0 788 2583 7 5 88 0 0

0 0 10924 152000 3584 689528 0 76 4 124 802 2759 7 6 88 0 0 <- < Start of swapping >

0 1 103260 117124 3528 636156 0 92336 0 92556 834 2607 7 5 83 5 0

2 1 126168 138116 3552 553200 0 22908 348 23076 1143 2880 6 3 57 35 0 <- < I/O System loaded (wa) >

0 3 265376 149720 1136 378148 0 105300 252 105348 993 3002 6 4 62 29 0

0 14 495028 117264 560 329792 0 137352 1252 137488 880 3506 5 2 22 71 0 <- < Severe swapping >

0 10 597776 117244 320 317840 0 29400 588 29400 664 2522 2 2 0 96 0 <- < Process killed >

0 13 1667548 8263784 784 304768 296 0 920 0 298 675 0 0 25 75 0 <- < Swapped out >

< RAM back >

1 7 1659568 8247460 1200 314888 6900 0 16056 1132 905 1995 6 1 24 70 0

1 2 1654428 8148296 2516 373604 13004 0 49036 1100 2444 7135 8 6 68 19 0

1 0 1649624 8128492 3088 396844 328 0 2604 28 497 1634 4 1 94 1 0

Determining the load on the I/O system is somewhat more complex. You need to identify four different I/O patterns: sequential reading and writing and random reading and writing, which occurs frequently in databases. Normal hard disks do a good job of sequential read/write, whereas SSDs are far better at random read/write.

You can query the load on the I/O system, as shown in Listing 4 for sequential writing to an SSD (single-threaded, 16KB blocks, O_DIRECT). Here, r/s and w/s stand for the number of read or write operations per second (IOPS), await is the average service time for all I/O requests, and rKB/s and wKB/s indicate the volume of data read or written (throughput).

Listing 4: I/O System Load

# iostat -xk 1 Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util sda 2.00 8.00 2.00 9500.00 16.00 151948.00 31.99 1.07 0.11 4.00 0.11 0.09 88.40

If your read or write throughput is high (as in Listing 4), you are looking at sequential operations. Random read or write operations result in far lower throughput.

That just leaves the CPU load. You'll need to take a closer look here. In the example in Listing 5, the system seems to have 71 percent load while idle, but core 1 (e.g., one thread in a MySQL connection) is fully loaded for extended periods.

Listing 5: Using mpstat

# mpstat -P ALL 1 CPU %usr %nice %sys %iowait %irq %soft %steal %guest %gnice %idle all 26.94 0.00 0.59 1.05 0.00 0.02 0.00 0.00 0.00 71.40 <- < 71% idle > 0 1.00 0.00 0.37 0.00 0.00 0.06 0.00 0.00 0.00 98.57 1 100.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 2 3.77 0.00 1.32 1.32 0.00 0.00 0.00 0.00 0.00 93.58 3 2.82 0.00 0.69 2.89 0.00 0.00 0.00 0.00 0.00 93.60

Once the limiting factor – or the guilty process – has been identified, you can start thinking about how to tackle the performance problem. In the case of MySQL, you can identify five levels at which to tackle the difficulties and hopefully resolve them.

Level 1: The Hardware

Speed problems can often be resolved by throwing hardware at them. And, this approach can even be cheaper than investing person-months in improving the application code or the architecture. Investments in better – that is, potentially more expensive – hardware will not scale well. Poor application design or an ill-considered architecture will quickly see the application reach its limits again. New hardware mainly buys you time to fix the actual problem.

If you want to deploy faster hardware, you will typically want to invest in more RAM and better I/O systems (RAID 10, battery-buffered I/O controllers, SSDs) for a database machine. These actions can seriously improve database performance.

Level 2: The Operating System

Typically, there's not much the user can do about the modern operating system. Operating systems are usually perfectly preconfigured for most use cases. A performance gain of more than 10 to 20 percent cannot be expected. Typical tuning actions involving the operating system for databases include: increasing the number of file handles per process, using a scalable modern filesystem (XFS or ext4), setting I/O schedulers to noop, and setting the swappiness to 1.

One noteworthy item is the trend toward consolidation that has made inroads into data centers. This trend started with the spread of the SAN and is now continuing with virtualization solutions of all kinds. Basically, there's nothing wrong with consolidating many (typically idle) systems on a large-scale host, but for a high-performance database working at full load, virtualization solutions are not the answer.

For one thing, consolidation solutions such as SAN and virtualization always come at the price of some overhead. For another, SAN systems are fairly distant from the database server itself, viewed geographically, which can lead to higher latencies. The biggest problem in consolidation, however, is overcommitting resources (you have too many consumers for not enough resources) and what is known as the noisy neighbor effect: Another virtual guest system causes so much load that it brings the database to its knees.

The noisy neighbor effect is particularly insidious because it is extremely difficult to identify from the perspective of the guest system. You only notice that your own processes are somehow slowing down. Another phenomenon is guest systems running more slowly with more virtual cores than with fewer. In this light, administrators should always avoid virtualization from a performance point of view for critical and heavily loaded database systems.

Level 3: The Database Configuration

The predefined configuration values for MySQL were poor for many years, but the defaults have improved greatly since MySQL 5.6. That said, many older MySQL configuration files still drag the ballast of unfavorable settings along with them.

The current MySQL 5.6 versions can be configured with some 435 variables. For overworked database administrators, the question is: Where do I start? (Figure 1). Fortunately, it is sufficient in most cases to configure seven MySQL variables correctly to boost performance. After doing so, most MySQL databases will probably run at a reasonable speed.

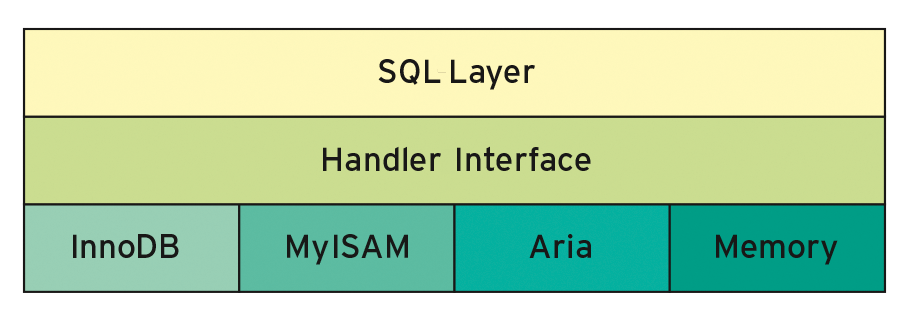

MySQL is highly flexible, thanks to its storage engine architecture. However, this architecture also requires some understanding about which parameters are responsible for which area and which storage engine. MyISAM was the default storage engine for MySQL before version 5.5. From MySQL version 5.5 on, InnoDB has been the storage engine.

Three variables are mainly responsible for the SQL layer – table_open_cache, table_definition_cache, and query_cache_size/query_cache_type – whereas four variables tune storage engine behavior.

Table Open Cache

Table Open Cache controls the number of file descriptors that MySQL requests from the operating system. This value should be approximately the same size as the number of tables in your joins times the number of open connections. The commands shown in Listing 6 tell you if the value is big enough. The math is: (maximum number of concurrent open connections) x (number of tables per join)=151 x 5 (assumption)=755. This means that table_open_cache, with a default value of 400, is likely to be insufficiently dimensioned given maximum system load. However, some old configurations still overtax the new defaults. (Note that MySQL 5.1 renamed table_cache to table_open_cache.)

Listing 6: Table Open Cache

mysql> SHOW GLOBAL STATUS LIKE 'Open%tables'; +---------------+-------+ | Variable_name | Value | +---------------+-------+ | Open_tables | 400 | | Opened_tables | 70123 | +---------------+-------+ mysql> SHOW GLOBAL VARIABLES WHERE variable_name = 'max_connections' OR variable_name = 'table_open_cache'; +------------------+-------+ | Variable_name | Value | +------------------+-------+ | max_connections | 151 | | table_open_cache | 400 | +------------------+-------+

Table Definition Cache

The Table Definition Cache buffers the parsed and interpreted table definitions. For a normal application, you will probably want to set this value to the number of tables in your MySQL instance. You can determine the number of tables with the command:

mysql> SELECT COUNT(*) FROM information_schema.tables; +----------+ | count(*) | +----------+ | 153 | +----------+

The popular defaults for Table Definition Cache, from 256 to 400, would be sufficiently dimensioned for this example.

Query Cache

The next relevant MySQL parameter from the SQL area is the Query Cache. This should actually be called Result Cache because it caches the query results. Opinions differ on this cache – some people think it is useless and would prefer to disable it completely if possible. Others benefit from performance gains during SELECT queries.

Most MySQL users probably have to deal with a relatively small number of concurrent connections to the database and a high percentage of read queries. In this case, it makes more sense to enable the Query Cache.

Caution: MySQL 5.6 disables the Query Cache by default! Be careful when you upgrade. You should not choose too high a value for the Query Cache; otherwise, you might experience complete system freezes in certain scenarios. As a rule of thumb, it should not be greater than 128MB.

MyISAM key_buffer_size

In the case of the MyISAM Storage Engine, one critical parameter above all must be tuned: the MyISAM key_buffer_size. It defines how much RAM the MyISAM storage engine requests from the operating system for caching MyISAM index blocks. But, because almost no one uses MyISAM tables today, this parameter is unlikely to be particularly relevant in the future. As a rule of thumb, figure on 25 to 33 percent of RAM for a dedicated, exclusively MyISAM-reliant database system. The aria_pagecache_buffer_size is similar to this if you use the Aria storage engine provided with MariaDB.

InnoDB Parameters

Now I'll move on to the most critical parameters for the InnoDB storage engine.

- You can set the InnoDB Buffer Pool using the

innodb_buffer_pool_sizeparameter. This defines how much RAM the InnoDB requests from the operating system to cache InnoDB data and index blocks. RAM is around 100,000 times faster than a disk; thus, you can significantly accelerate your queries if the data resides in RAM instead of on your hard disk. The tendency here is that more is better. As a rule of thumb, you will want to assign around 80 percent of your free RAM for the InnoDB Buffer Pool. - The

innodb_log_file_sizeparameter defines the size of the InnoDB transaction logs, of which there are normally two. The size of these two files influences the speed of write access to InnoDB. This value was far too small for many years (5MB). The new default values in MySQL 5.6 take this into account, changing it to 48MB. The tendency is to assume that bigger means better write performance, but it also means a longer recovery time in the case of a system crash. Caution: If you have MySQL older than v5.5, check the documentation to discover how to change the size of this file. If you get things wrong, your database might not start at all. - The

innodb_flush_log_at_trx_commitparameter defines how data is written to disk in the case of aCOMMIT. The default value1stands for the cleanest behavior, which is also ACID-compliant. That means MySQL writes the data from the log buffer to the transaction logs on aCOMMITand then performs a flush (fsync). In the case of a system crash, this ensures that you do not lose any data. This safe setting also has some drawbacks: Flushing is slow, and it affects writes throughput of the system. If write performance is more important than minor data loss from a crash (ca. one second), then you can set the value ofinnodb_flush_log_at_trx_committo0(empty and flush the log buffer once a second) or2(empty the log buffer on eachCOMMITand write out to disk once a second). This will give you a significant increase in the write throughput.

A general overview of MySQL server and MySQL status variables can be found online [1].

Level 4: Application Tuning

The fourth tuning level relates to the application, which primarily means query tuning in the case of databases. This is where you can achieve the greatest performance gains. Unfortunately, doing so typically means changing the design, or at least the code, of the application, which is not always possible and not always well received by developers.

Even without intervening in the code, however, you can often achieve performance gains through intelligent indexing. Indexes mainly serve to accelerate read access (SELECT) to data. In many cases, indexes are also suitable for finding rows more quickly in UPDATE or DELETE operations. You can best imagine an index as being something like a phone book (city, family name, first name) or register in the library (order by title, author, etc.).

If you are looking for a person based on city, family name, and first name, this will be a fairly quick process in the phone book. However, if you do not know the city, your search will be extremely time consuming – you would need to search through every single phone book from the start to the finish. The MySQL database has a similar problem: If an index is missing, it triggers a computationally expensive full-table scan; if the first attribute in the index is unknown, it leads to a full-index scan.

If you have an index, the query can access the correct row in the table directly via the index entry or a range of index entries. By creating the right indexes, users can help the database avoid a huge amount of work, and this often leads to 10 or 50 times faster query performance.

Which Indexes Do You Need?

Each table should have a unique row identifier (ID). MySQL often uses an AUTO_INCREMENT field as the primary key for this. If the table does not have a primary key, it is not very elegant from an academic point of view, but it also affects performance (e.g., during replication).

In the case of InnoDB tables, you need to pay particular attention to the selection of the primary key. The length is relevant: The primary key is also stored as a reference in all secondary keys (all indexes of a table that are not the primary key). If you have a long primary key, you are thus increasing the size of all secondary keys. At the same time, the primary key often sees very intensive use in the context of joins. A long primary key plus a join leads to a correspondingly large number of CPU cycles.

Another consideration when selecting the primary key is the locality (the physical location) of the data. InnoDB always creates what is known as an index-organized table, which means the data in the blocks are always physically sorted in the way that the primary key is structured. This causes a corresponding sorting pattern of the data, which can be advantageous or disadvantageous depending on the use case; consider time series, for example.

The example in Listing 7 generates two tables: One uses the AUTO_INCREMENT attribute for the primary key, id, which instructs MySQL to assign sequence numbers to rows automatically. The second assigns a random primary key, which is equivalent to randomized rows. The first version is useful for typical access patterns in MySQL, because by sorting with newest first, you can find the most recently generated rows. The second primary key does not work well in this case.

Listing 7: Two Primary Keys

01 mysql> CREATE TABLE data_time (id INT UNSIGNED NOT NULL AUTO_INCREMENT PRIMARY KEY, data VARCHAR(64), ts TIMESTAMP); 02 03 mysql> CREATE TABLE data_random (id CHAR(32) NOT NULL PRIMARY KEY, data VARCHAR(64), ts TIMESTAMP); 04 05 mysql> INSERT INTO data_time VALUES ( NULL, 'Row 1', NULL); SELECT SLEEP(1); 06 mysql> INSERT INTO data_time VALUES ( NULL, 'Row 2', NULL); SELECT SLEEP(1); 07 mysql> INSERT INTO data_time VALUES ( NULL, 'Row 3', NULL); SELECT SLEEP(1); 08 09 mysql> INSERT INTO data_random VALUES ( MD5(CURRENT_TIMESTAMP()), 'Row 1', NULL); SELECT SLEEP(1); 10 mysql> INSERT INTO data_random VALUES ( MD5(CURRENT_TIMESTAMP()), 'Row 2', NULL); SELECT SLEEP(1); 11 mysql> INSERT INTO data_random VALUES ( MD5(CURRENT_TIMESTAMP()), 'Row 3', NULL); SELECT SLEEP(1); 12 13 mysql> CREATE TABLE `data_time` ( 14 `id` INT(10) UNSIGNED NOT NULL AUTO_INCREMENT, 15 `data` VARCHAR(64) DEFAULT NULL, 16 `ts` TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP, 17 PRIMARY KEY (`id`) 18 ) ENGINE=InnoDB AUTO_INCREMENT=4 19 20 mysql> CREATE TABLE `data_random` ( 21 `id` CHAR(32) NOT NULL, 22 `data` VARCHAR(64) DEFAULT NULL, 23 `ts` TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP, 24 PRIMARY KEY (`id`) 25 ) ENGINE=InnoDB DEFAULT 26 27 mysql> SELECT * FROM data_time; 28 +----+---------+---------------------+ 29 | id | data | ts | 30 +----+---------+---------------------+ 31 | 1 | Row 1 | 2015-09-13 15:24:05 | 32 | 2 | Row 2 | 2015-09-13 15:24:06 | 33 | 3 | Row 3 | 2015-09-13 15:24:07 | 34 +----+---------+---------------------+ 35 36 mysql> SELECT * FROM data_random; 37 +----------------------------------+---------+---------------------+ 38 | id | data | ts | 39 +----------------------------------+---------+---------------------+ 40 | 47e0142a3638fdc24fe40d4e4fbce3f1 | Row 1 | 2015-09-13 15:24:12 | 41 | b833c1e4c5bfc47d0dbe31c2e3f30837 | Row 3 | 2015-09-13 15:24:14 | 42 | c7d46523a316de4e1496c65c3cbdf358 | Row 2 | 2015-09-13 15:24:13 | 43 +----------------------------------+---------+---------------------+

Another problem relating to primary keys occurs with time series (Listing 8). The query does not typically reference the time alone, but the time per device. A primary key based only on id would be a poor choice here and is equivalent to random distribution in terms of the query logic. A composite index (device, ts) would re-sort the data (by devices and by time within the devices), substantially accelerating the queries.

Listing 8: Primary Keys and Time Series

01 mysql> CREATE TABLE `data_timeseries` ( 02 `id` INT(10) UNSIGNED NOT NULL AUTO_INCREMENT, 03 `device` varchar(32) NOT NULL, 04 `data` VARCHAR(64) DEFAULT NULL, 05 `ts` TIMESTAMP NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP, 06 PRIMARY KEY (`id`) 07 ) ENGINE=InnoDB AUTO_INCREMENT=4 08 ; 09 10 mysql> INSERT INTO data_timeseries VALUES (NULL, 'Refrigerator, '5.8 C', NULL); 11 mysql> INSERT INTO data_timeseries VALUES (NULL, 'Washing machine, '41.2 C', NULL); 12 ... 13 14 mysql> SELECT * FROM data_timeseries WHERE device = 'Refrigerator AND ts 15 BETWEEN '2015-09-13 00:00:00' AND '2015-09-13 23:59:59' 16 17 +----+--------------------------+-----------+---------------------+ 18 | id | device | data | ts | 19 +----+--------------------------+-----------+---------------------+ 20 | 4 | Refrigerator | 5.8 C | 2015-09-13 15:34:42 | 21 | 5 | Washing machine | 41.2 C | 2015-09-13 15:34:42 | 22 | 6 | Pot plant moisture | 75% rel. | 2015-09-13 15:34:42 | 23 | 7 | Refrigerator | 6.0 C | 2015-09-13 15:35:42 | 24 | 8 | Washing machine | 41.1 C | 2015-09-13 15:35:42 | 25 | 9 | Pot plant moisture | 74% rel. | 2015-09-13 15:35:42 | 26 | 10 | Refrigerator | 6.2 C | 2015-09-13 15:36:42 | 27 | 11 | Washing machine | 41.2 C | 2015-09-13 15:36:42 | 28 | 12 | Pot plant moisture | 73% rel. | 2015-09-13 15:36:42 | 29 +----+--------------------------+-----------+---------------------+

Other Indexes

Besides choosing a primary key, it is also important to create the required, and correct, secondary indexes. Most database developers use the attributes that are components of joins, or on which a query filters information (using a WHERE clause), for this purpose. In the example

mysql> SELECT * FROM employee WHERE last = 'Mueller';

it would make sense to index on the field named last.

Whether or not the MySQL optimizer actually uses an index can be determined with the EXPLAIN command (Listing 9). Besides checking whether or not an index is used, you will also want to measure the run time of the command multiple times to make sure the action really does have a positive effect. It can happen that the selected index causes poorer performance.

Listing 9: Using the EXPLAIN Command

mysql> EXPLAIN SELECT * FROM employee WHERE last = 'Mueller'; +----+-------------+----------+------+---------------+------+---------+------+------+-------------+ | id | select_type | table | type | possible_keys | key | key_len | ref | rows | Extra | +----+-------------+----------+------+---------------+------+---------+------+------+-------------+ | 1 | SIMPLE | employee | ALL | NULL | NULL | NULL | NULL | 987 | Using where | +----+-------------+----------+------+---------------+------+---------+------+------+-------------+ mysql> ALTER TABLE employee ADD INDEX (last); mysql> EXPLAIN SELECT * FROM employee WHERE last = 'Mueller'; +----+-------------+----------+------+---------------+------+---------+-------+------+-----------------------+ | id | select_type | table | type | possible_keys | key | key_len | ref | rows | Extra | +----+-------------+----------+------+---------------+------+---------+-------+------+-----------------------+ | 1 | SIMPLE | employee | ref | last | last | 67 | const | 1 | Using index condition | +----+-------------+----------+------+---------------+------+---------+-------+------+-----------------------+

Slow Query Log

To record slow queries systematically, MySQL has the Slow Query Log. You can enable and configure the log dynamically as of MySQL 5.1:

mysql> SET GLOBAL slow_query_log = 1; mysql> SET GLOBAL long_query_time = 0.200;

You can also query where the Slow Query Log resides on disk:

mysql> SHOW GLOBAL VARIABLES LIKE 'slow%file'; +---------------------+----------+ | Variable_name | Value | +---------------------+----------+ | slow_query_log_file | slow.log | +---------------------+----------+

If the path is not specified, the file will reside below the $datadir in /var/lib/mysql.

Level 5: The Architecture

The fifth level at which you have tuning options is the architecture. It typically provides the greatest leverage for improvements, but you need to apply it right from the outset of the development process. If you make the wrong decision here, it is difficult to correct and will typically involve substantial overhead.

Important values are the number of users and the data volume you will have in the final expansion stage. The larger the anticipated values, the more you need to think about the architecture. Some points that are continually debated include the following:

- Database clusters give better performance. This assumption tends to be false. Database clusters are typically a high-availability solution and not a solution for tackling performance problems. Response times for read access remain the same or could even be longer. The read performance will typically improve with most MySQL cluster solutions – as long as you are prepared to accept certain restrictions. Writing will scale badly or not at all.

- Parallelization can drastically improve an application's throughput. MySQL can easily handle dozens of queries at the same time, but this means having the right kind of application. MySQL cannot autonomously parallelize queries.

- For applications that need to scale massively and will have a large percentage of read accesses, it makes sense to open separate read and write connections to the database in the application. This approach will allow you to redirect read queries to a MySQL read cluster later on. Caution is advisable with systems that boast of automatic read-write splitting. Developing reliable intelligence that automatically makes the right decision is difficult. How is an external developer going to do this if your own developers find it impossible? Additionally, these automatic systems typically only work for single-query transactions.

- The biggest problem in scaling is writing. Difficulties in reading can be tackled with a large amount of RAM and a large enough number of read slaves. If write throughput brings the machine to its knees, however, the only thing that helps is a fast I/O system (e.g., an SSD). If this does not help, your final resort is sharding – that is, distributing the data across multiple database back ends. Each back end has its own I/O system, which means that write access can scale infinitely – in theory. This also has a significant influence on the application, however. The application needs to know where the data resides to be able to write it to the correct back end, and this requirement often means low-level changes to the application code.

Low-Hanging Fruit

When it comes to database performance tuning, people often expect fast results – preferably yesterday. Changes in the application code that would offer genuine benefits are time consuming, however. For this reason, you have to determine where you can quickly eke out a little more performance. The faster solutions are:

- Hardware with more RAM and a fast I/O system: a large RAID 10 with hard disks, SSDs, or both.

- Correctly configuring the database: using RAM correctly – with

innodb_buffer_pool_sizefor read access andinnodb_log_file_sizefor high write throughput. If you can live with minor data loss in the case of a database crash, you can additionally setinnodb_flush_log_at_trx_committo0or2. If you have a larger number of tables, it can also help to choose a larger value fortable_open_cacheandtable_definition_cache. - Finally, creating the right indexes will always help to accelerate queries significantly.

If you need to speed up your MySQL database, understanding the important parameters and applying these practical tips can make the task much easier.