Enterprise job scheduling with schedulix

Computing with a Plan

When IT people hear "enterprise job scheduling," they think of software tools for planning, controlling, monitoring, and automating the execution of mutually independent programs or processes. Although job scheduling has always been indispensable on mainframes and midrange systems, automatically controlled workflows are also quite popular on servers.

A job scheduling system can do far more than the Cron service, which simply acts as a timer to start processes. More than half of all mission-critical operations – starting with archiving, through backups and reports, to managing inventories – in companies throughout all branches of industry are designed to run as batch processes. According to a study by BMC, the vendor of an "agentless" scheduling solution, every single web transaction generates on average more than 10 batch processes [1].

To ensure stable operation of independent jobs, however, you need more functionality – for example, the ability to pass control information, to choose a monitoring option, or to request operator intervention. On top of this, resource control, parallel task processing, and distributed execution are all desirable. Little wonder, then, that products optimized in this way exist on the market, including IBM Tivoli Workload Scheduler [2], Entire Operations [3] by Software AG, or BMC's Control-M Suite [4]. All told, the number of available solutions with and without enterprise resource planning (ERP) support is not exactly small [5]. The programs cited here typically work across operating systems and can monitor and control the execution of programs on Windows, Unix, and Linux.

The product discussed in this article, schedulix [6], is a free enterprise resource scheduling system targeting small to medium-sized enterprises, designed for Linux environments, and available under an open source license.

Widespread Scripting

Many small to medium-sized enterprises use scripts (Bash, Perl, Python, etc.) to solve problems in workflow control. Workflow controls implemented in this way ensure that the system coordinates and synchronizes each process so that it completes the processes in the right order. If you want two processes (e.g., A and B) to run consecutively, you could simply bundle them into a shell script, but to make sure process B is working with valid data, you would need to take care that B does not launch if A returns an error. Fielding these errors obviously makes the script more complex in terms of testing and more difficult to read and maintain.
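The A-then-B case described above might look like the following minimal sketch in Python. The function name `run_chain` and the two placeholder commands are assumptions made for illustration; they do not come from schedulix or from any particular production script.

```python
import subprocess
import sys


def run_chain(commands):
    """Run commands in order and stop at the first non-zero exit code.

    Returns the index of the failing command, or None if all succeeded.
    """
    for index, command in enumerate(commands):
        result = subprocess.run(command)
        if result.returncode != 0:
            # Do not start later steps on top of possibly invalid data.
            return index
    return None


if __name__ == "__main__":
    # Hypothetical stand-ins for programs A and B.
    program_a = [sys.executable, "-c", "print('A: preparing data')"]
    program_b = [sys.executable, "-c", "print('B: processing data')"]
    failed_at = run_chain([program_a, program_b])
    print("all steps succeeded" if failed_at is None else f"step {failed_at} failed")
```

Even this small version already needs a convention for reporting which step failed, which hints at how quickly such scripts grow.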

At the same time, you would need to extend the script so that it remembers which parts have been processed. Without this kind of history, the person responsible for job management would need to restart the script manually if, for example, program B terminates with an error after program A has been running for four hours. The manager could then decide whether to live with hours of lost work or to comment out the parts of the scripts that have already been processed, although this process is extremely prone to error.

You could implement a script history with a little help from a step file, which would need to be initialized when called the first time and after each termination. Additionally, you would need error handling when reading and writing progress reports.
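A step file of this kind could be sketched as follows. The JSON file format and the names `load_done` and `run_with_history` are assumptions chosen for the example, not an established convention.

```python
import json
import subprocess
from pathlib import Path


def load_done(step_file):
    """Return the set of completed step names; empty if no usable file exists."""
    try:
        return set(json.loads(Path(step_file).read_text()))
    except (FileNotFoundError, ValueError, TypeError):
        # A missing or damaged step file is treated as "nothing processed yet".
        return set()


def run_with_history(steps, step_file):
    """Run (name, command) pairs, skipping steps recorded in the step file.

    Stops at the first failing step and returns False; the step file then
    still lists the completed steps. Returns True when every step is done.
    """
    done = load_done(step_file)
    for name, command in steps:
        if name in done:
            continue  # finished in an earlier run
        if subprocess.run(command).returncode != 0:
            return False
        done.add(name)
        Path(step_file).write_text(json.dumps(sorted(done)))
    # Full success: reset the history so that the next call starts from scratch.
    Path(step_file).unlink(missing_ok=True)
    return True
```

If program B fails after a long-running program A, a second call with the same step file skips A and resumes at B. The sketch still ignores concurrent runs and write errors, both of which a production script would have to handle.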

This example clarifies how script-based process control can turn into a genuine programming project, even for very simple tasks, and it would be difficult to maintain, as well.

Practical applications show that script-based process control is manageable for a handful of jobs, but it becomes a very complex development task if you need to manage thousands of processes. This scenario is precisely the occasion for enterprise job scheduling.

schedulix and BICsuite

The open source schedulix has a commercial counterpart named BICsuite [7]. Both were created from the job specifications of many IndependIT Integrative Technologies GmbH [8] customers. IndependIT was founded in 1997 as a service provider for consulting projects in the database landscape. Since 2001, it has looked after BICsuite and schedulix exclusively.

According to the vendor, the current software is not just a byproduct of the business, but a totally new development created exclusively on Linux. Here, "new" is obviously relative given a development period of a decade. The company's new major customers include an international telecommunications group and a social network provider in Germany.

The schedulix software is designed for Linux environments and is exclusively delivered as source code. However, if you want to run a schedulix server and agents on a Windows system, you can register for a three- to four-day workshop with IndependIT. The company then installs a version of the Basic edition of BICsuite free of charge in the environment of your choice, be it Windows or Solaris.

Approach

In contrast to legacy job scheduling systems, schedulix takes a dynamic approach and computes the processes to execute on the basis of boundary conditions such as priorities or availability of resources. More specifically, schedulix works with a user-defined exit status model. In addition to freely defined exit states, defined jobs consider dependencies on other jobs, so that execution order can be controlled in stages.

When modeling workflows, users can draw on variables and parameters, sequences, branches to alternative partial sequences, or loops. The loop or branch condition can be an exit status or configurable trigger. Batches or jobs can be statically or dynamically parameterized when submitted. The tool also supports users in handling synchronization and exceptions. Two special features of the software are worthy of note: hierarchic workflow modeling and the ability to break down programs or scripts into smaller units with clearly segregated functionality (see the box "Process Decomposition").

When it comes to parallel processes, schedulix looks to be pretty well equipped. The dynamic submit feature submits (partial) job workflows at run time and parallelizes them. It is also possible to automate the submission of batches and jobs via triggers that depend on the exit status. For example, you can implement messaging or similar automated responses to sequence events in this way. Beyond this, users can break down their resources into units with the help of system resources and assign them to match the execution environment. A Resource Requirement lets you define the load level for a resource for each job (load control).

Jobs can be assigned priorities relative to other jobs for cases in which resources are low. The documentation describes an option for automatically distributing jobs to multiple execution environments, depending on current resource availability, through the interaction of static and system resources; in other words, it is possible to set up job load balancing.
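The interplay of priorities and resource availability can be illustrated with a deliberately simplified sketch. It is not the schedulix algorithm: the class names, the rule that a smaller number means a more urgent job, and the "most free units wins" placement are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class Environment:
    name: str
    free_units: int  # remaining capacity of a hypothetical load resource


@dataclass
class PendingJob:
    name: str
    priority: int  # assumption: smaller number = more urgent
    units: int     # load the job places on the environment


def dispatch(jobs, environments):
    """Assign jobs to environments in priority order.

    Each job goes to the environment with the most free units that can
    still hold it; jobs that fit nowhere stay pending. Returns a list of
    (job name, environment name) pairs.
    """
    assignments = []
    for job in sorted(jobs, key=lambda j: j.priority):
        candidates = [e for e in environments if e.free_units >= job.units]
        if not candidates:
            continue  # not enough capacity anywhere right now
        target = max(candidates, key=lambda e: e.free_units)
        target.free_units -= job.units
        assignments.append((job.name, target.name))
    return assignments
```

Calling `dispatch` again whenever a job finishes and capacity is released gives a crude form of the load balancing described above.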

Additionally, schedulix supports synchronizing resources, which are requested in different lock modes (No Lock, Shared, Exclusive) and can thus retroactively synchronize what were originally independent workflows. Administrators can also assign a state model to a synchronizing resource, thus defining the resource requirement in a status-dependent manner. This means that automatic state changes can be set up depending on the exit status of a job.
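The three lock modes named above can be illustrated with the conventional shared/exclusive rules. The compatibility logic below is an assumption based on how such locks usually behave and has not been checked against the schedulix implementation; the names `LockMode` and `can_grant` are hypothetical.

```python
from enum import Enum


class LockMode(Enum):
    NO_LOCK = "No Lock"
    SHARED = "Shared"
    EXCLUSIVE = "Exclusive"


def can_grant(requested, held_modes):
    """Decide whether a request is compatible with the locks already held.

    Assumed rules: No Lock never blocks and is never blocked, Shared
    coexists only with other Shared locks, and Exclusive requires that
    no other lock is held.
    """
    if requested is LockMode.NO_LOCK:
        return True
    active = [mode for mode in held_modes if mode is not LockMode.NO_LOCK]
    if requested is LockMode.SHARED:
        return all(mode is LockMode.SHARED for mode in active)
    return not active  # EXCLUSIVE
```

Under these rules, two reporting jobs holding Shared locks on a database resource could run side by side, whereas a backup job requesting Exclusive would wait until both had finished.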

API and Architecture

Schedulix has an open and well-documented API that all components use to communicate with the job scheduling server. In principle, then, it can be controlled from any user program. Jobs can use the API to, for example, set arbitrary result variables, which can then be evaluated by a monitoring program – thus improving the results and providing a clear overview. Thanks to the relational repository, the API, and the use of open standards such as Java, JDBC, and SQL, schedulix is well equipped for integration into many system environments.

Schedulix mainly consists of the schedulix Job Scheduling Server, which is written in Java, as the focal point of the architecture for handling all of the job scheduling logic. The server continuously and exclusively stores its configuration, its modeling and logging data, and its process states in a relational database. Schedulix and the matching JDBC driver support PostgreSQL, MySQL or MariaDB, and Ingres. The commercial variant, BICsuite, also supports Oracle, IBM Informix, IBM DB2, and Microsoft SQL Server. Schedulix requires at least the Oracle (Sun) Java SE JRE version 1.7.

Install It Yourself

Setting up the schedulix environment (i.e., compiling and installing all the required components) is not a point-and-click task; however, aided by the complete documentation, it is well within the capabilities of any admin. The system does not require root privileges for the install or for operations on any of the systems involved. After installing the job server and database, setting up the environment to suit your needs, and launching the database, you can then launch the Job Scheduling Server by typing server-start.

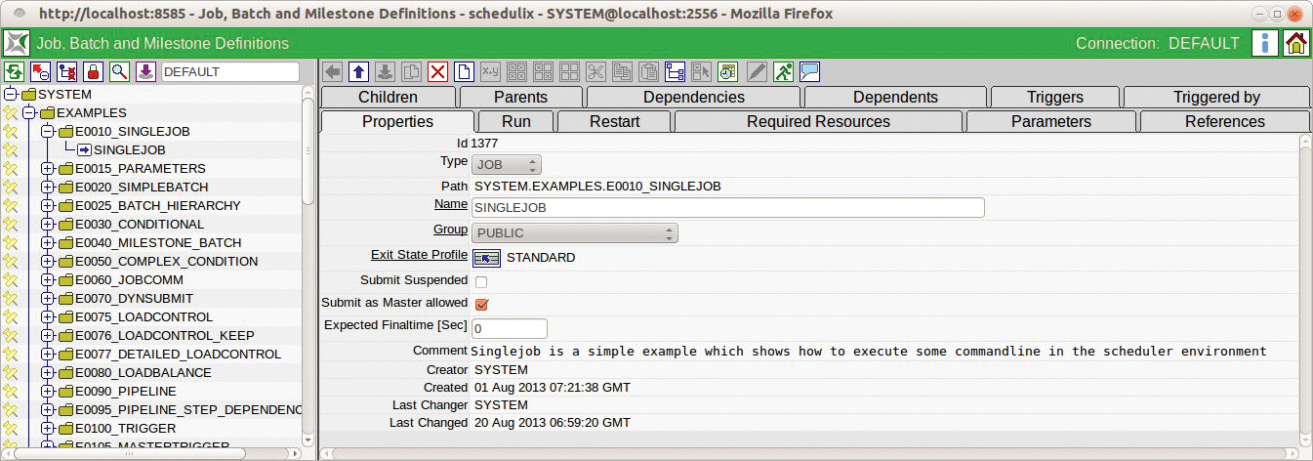

If you also install the Zope-based web interface, first launch the application server to access the web interface on http://localhost:8080/SDMS (alternatively, use SSL). For your first steps, it makes sense to install the large collection of examples as described in the installation guide. Examples are then selectable in the navigator (Figure 1).

The schedulix Job Server can also be installed as a client/server architecture that distributes the client installations across multiple hosts. The clients need the Java Native Access (JNA) program library. This supports access to platform-specific dynamic program libraries (Shared Libraries, DLLs for Windows) without needing to write platform-specific code as is the case with JNI.

GUI

Schedulix can be managed fully via the web interface, but it also comes with a command-line interface, including its own command language. Use of authentication throughout for all users and components guarantees security. The graphical management interface, which is accessible using HTTP or HTTPS, is implemented on the base of the Zope application server.

The Python source code for the web front end is completely open, as it is for BICsuite. The installation guide states that a C/C++ compiler is required for building the (still) current schedulix version 2.6.1 from March 2015 [9] because of the Java job executor (jobexecutor.c), a small job server component.

After successfully logging in, the system comes up with the main desktop – the main window in the web interface. Its design is typical of the Zope-Python architecture and, correspondingly, not exactly modern. You will not see any sign of active Ajax functions that imitate the native look and feel of a desktop application, and having to press the refresh button to retrieve the object list from the server is certainly not state of the art.

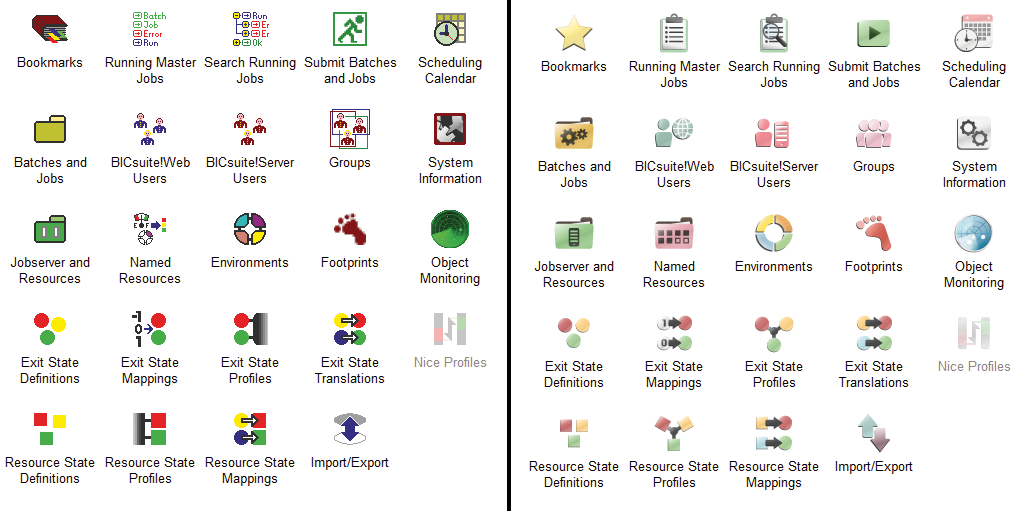

Although more than 10 years of history have certainly benefited the functional diversity and quality of the software across the board, when it comes to interface design, this history turns out to be a burden, according to the developers. IndependIT is obviously aware of this issue and has recently relaunched the interface (shortly after the test for this article). Figure 2 shows a before/after comparison of the main desktop window.

On top of the slightly outdated design, which is attributable to the Zope-Python architecture, a collection of 20 icons with similarly dated designs organized in a matrix does not exactly contribute to a clear-cut solution. The way these elements are ordered does not seem to reflect the workflow intuitively at first glance. However, if you take a closer look, the arrangement does follow some kind of pattern. In the bottom part, you find modules for defining templates and definitions, such as exit state definitions, exit state profiles, resource state definitions, resource state mapping, and so on.

In the middle, the GUI displays the modules for creating resources, environments, users and groups; at the top are the starting points for submitting or executing batches and jobs. For production use, it is still essential to get to know the schedulix control concept and work approach by poring over the documentation and the examples provided. Experienced admins are unlikely to be fazed by this, but a four- or five-day introductory workshop could be a good investment, especially considering that you are then given BICsuite Basic, including installation, free of charge.

A Practical Example

Many companies generate management reports and back up their databases on a daily basis. The actions this involves, such as aggregating the data required for each report, are always related – at least in terms of time, and often in terms of content. You can imagine creating a PDF from the aggregated data that the management can then access via a link published on the intranet. At the same time, you would want to avoid database activity while you are backing up the database.

To model report aggregation, for example, an analysis of the task yields three independent steps. In the hierarchical modeling concept used by schedulix, these steps are children of the REPORT master job. To map this workflow in schedulix, you need to map the numeric exit codes of your programs to logical states. If you do not do this yourself, you will get the classical Unix mapping, which is the default in schedulix (i.e., an exit code of 0 for success and all other codes evaluated as failures).
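The idea of translating numeric exit codes into logical states can be sketched in a few lines of Python. The state names SUCCESS, WARNING, and FAILURE and the helper `make_mapping` are assumptions for illustration; in schedulix itself, such mappings are defined in the GUI or the command language rather than in code like this.

```python
def unix_default(exit_code):
    """Classical Unix mapping: 0 is success, everything else is a failure."""
    return "SUCCESS" if exit_code == 0 else "FAILURE"


def make_mapping(ranges, fallback):
    """Build a mapping function from (low, high, state) ranges.

    The bounds are inclusive; codes outside every range map to the fallback.
    """
    def to_state(exit_code):
        for low, high, state in ranges:
            if low <= exit_code <= high:
                return state
        return fallback
    return to_state


# Hypothetical mapping for a reporting job: codes 1 to 9 are only warnings.
report_mapping = make_mapping(
    [(0, 0, "SUCCESS"), (1, 9, "WARNING")],
    fallback="FAILURE",
)
```

With such a mapping, an exit code of 3 no longer halts the workflow as a failure but can trigger a separate branch for the WARNING state.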

Working with logical states helps you document the workflow. The modules that help you do this in the GUI are Exit State Definition and Exit State Mappings. Additionally, you need to define the environment in which the processes need to be executed in the Environments module or menu. For example, Server_1 could be responsible for reporting, while the database system runs on Server_2.

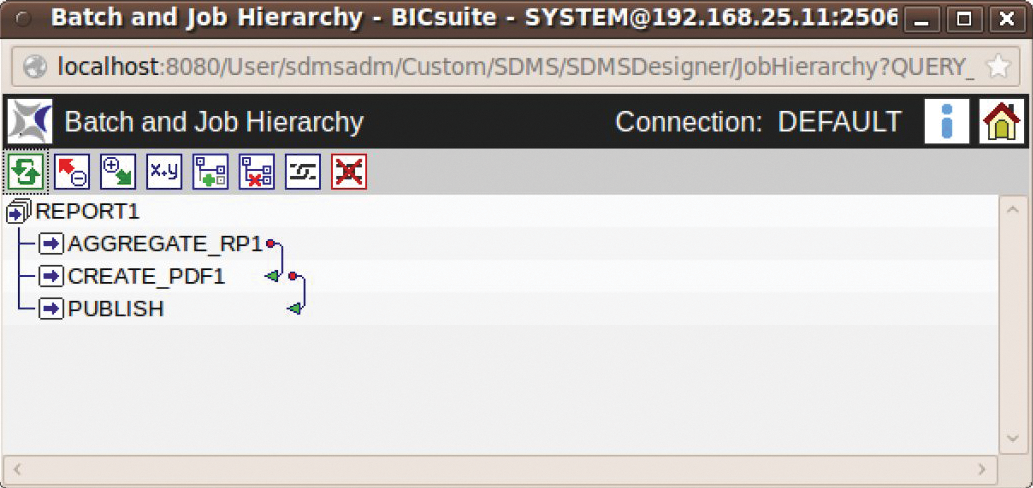

Where you can benefit from the GUI is in visualization of dependencies with arrows, and the option to change dependencies or branches in the job hierarchy with point and click (Figure 3). Another thing in favor of the GUI is that it visualizes the workflow's progress and clearly shows you which step is currently being performed, how long it has been running, and the extent to which the previous steps were successful.

Meta-Levels

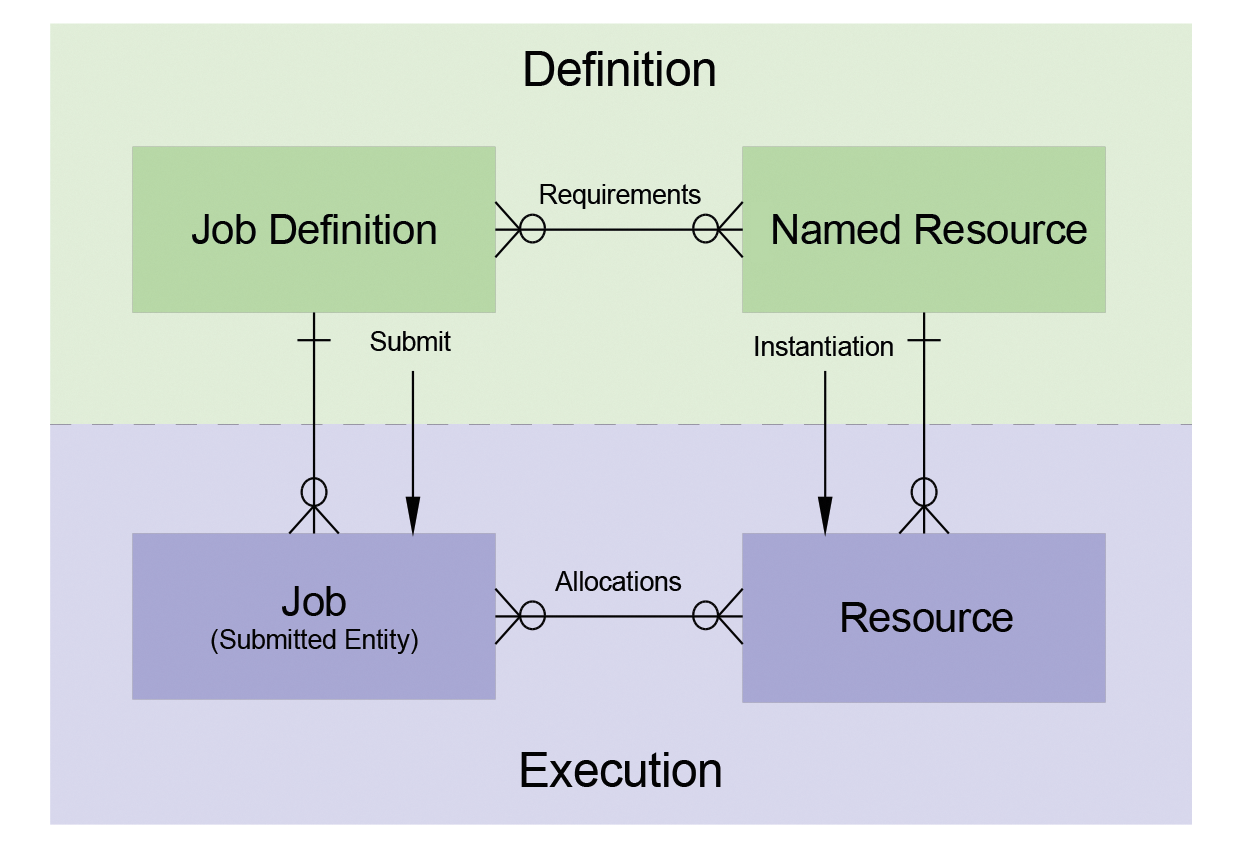

To understand how schedulix works, it is important to distinguish definitions from the execution layer (Figure 4). The definition layer comprises job definitions, which in turn have or pose resource requirements. In schedulix, these requirements are not tied to specific resources but to named resources, which users then need to define in the resource definitions.

A submit operation turns a job definition into a job (i.e., an instance of the associated job definition). By instantiating a named resource in an execution environment (job server), you create resources. When a job (a submitted job definition) is assigned by the scheduling system for execution in one or multiple execution environments, this creates a link (resource allocation) between jobs and resources (instances of named resources).
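The relationship between the two layers can be made concrete with a small data model. All class and function names here are assumptions chosen to mirror the terms in the text, and the rule that a job takes all of its resources from a single server is a simplification for this sketch.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass(frozen=True)
class NamedResource:       # definition layer
    name: str


@dataclass(frozen=True)
class JobDefinition:       # definition layer
    name: str
    requires: tuple        # NamedResource objects the job needs


@dataclass(frozen=True)
class Resource:            # execution layer: instance of a named resource
    named: NamedResource
    server: str


@dataclass
class Job:                 # execution layer: instance of a job definition
    job_id: int
    definition: JobDefinition
    allocations: list = field(default_factory=list)


_job_ids = count(1)


def submit(definition):
    """A submit turns a job definition into a job with its own ID."""
    return Job(next(_job_ids), definition)


def allocate(job, resources):
    """Link the job to resources on one server that offers everything it needs.

    Returns the chosen server name, or None if no server qualifies.
    """
    for server in sorted({r.server for r in resources}):
        local = {r.named: r for r in resources if r.server == server}
        if all(named in local for named in job.definition.requires):
            job.allocations = [local[named] for named in job.definition.requires]
            return server
    return None
```

Submitting the same definition twice yields two distinct jobs, each of which receives its own resource allocations.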

Conclusions

Traditional scheduling systems are typically oriented toward a defined daily workflow. Because the job scheduling system needs to recompute this daily workflow after every interruption, the schedulix vendor considers this mode of working ineffective. Instead, schedulix relies on a dynamic architecture that continuously recomputes the consequences of the current boundary conditions.

As a result, schedulix offers more options for adapting the system behavior to changing requirements and for using existing resources efficiently. The vendor, IndependIT, is quite obviously working on a solution for the somewhat outmoded design of the web GUI.

Schedulix and its commercial sibling BICsuite require thorough and thus time-consuming familiarization with the approach and modes of operation – something they have in common with all other enterprise scheduling solutions. Small to medium-sized enterprises who have put themselves under pressure with scripts and DIY process controls will need to evaluate for themselves whether the achievable benefits offset the overhead of introducing schedulix (open source and only for pure Linux environments) or BICsuite (multiplatform, but at a cost). The economic logic will depend on whether or not your company has budgeted for the expense of job scheduling and monitoring.